DSP on .NET under Windows: A Jedi Power Post

Hello!

In the first article, we talked about our infrastructure as a whole. Now it's time to focus on specific products. This article is about DSP. As many know, DSP (Demand Side Platform) is an automated advertising purchase system. The system requirements are tough: it must hold a high load (thousands of requests per second), respond quickly (up to 50 ms, or even less) and, most importantly, choose the most appropriate ads. Most often, such projects are developed for Linux, we were able to create a truly high-performance service for Windows Server. How to achieve this, and how did we manage it? I'll tell you about it.

We have DSP consists of two applications: the actual bidder is a Windows service for interacting with the SSP, and DspDelivery is an ASP.NET application for the ultimate delivery and recording of interactive user actions. Delivery is more or less simple, but we'll take a closer look at the bidder.

')

The platform uses .NET, the main language is C #. Since we are writing a bidder, we need a web server and a binding. At first, we chose a simple path: we screwed up IIS, created an ASP.NET application with the ASP.NET Web API framework, and began to cut the business logic. It quickly became clear that the whole structure does not hold more than 500-700 requests per second. No matter how much we called in IIS or tweaked 100,500 parameters, the problem was not solved. And it didn’t seem to be possible to get inside the IIS, which means we won’t achieve complete control over the situation. IIS - the notorious black box, in which it is difficult to drastically change something.

Then we tried Katana project server (implementation of Microsoft's OWIN infrastructure). Katana is an open source project, so you could see the insides. In addition, the Web API has support for OWIN, which means it won't be necessary to change the code much. Katana provides an opportunity to work with IIS, as well as with their simple server, written on the basis of the .NET HttpListener. That is what we took. I was pleased with the result: now the server held about 2000 requests per second, and the ASP.NET application was transformed into a Windows service.

However, the server load increased, new features were sawed. It became clear that this option does not suit us either. Then we took drastic measures: from the whole Katana only HttpListener remained with a small binding for asynchrony, nothing was left of the Web API, that is, the application was completely sharpened by HTTP requests for bidder. As a result, the server was able to handle up to 9000 requests per second. The conclusion is simple: the whole OWIN- and Web API binding has a critical impact on high-performance applications. Want faster - write easier and non-universally. (This does not mean that there should be a nuclear govnod within the application. We have everything modular, quite expandable: DI, patterns and all that). Sample request processing code:

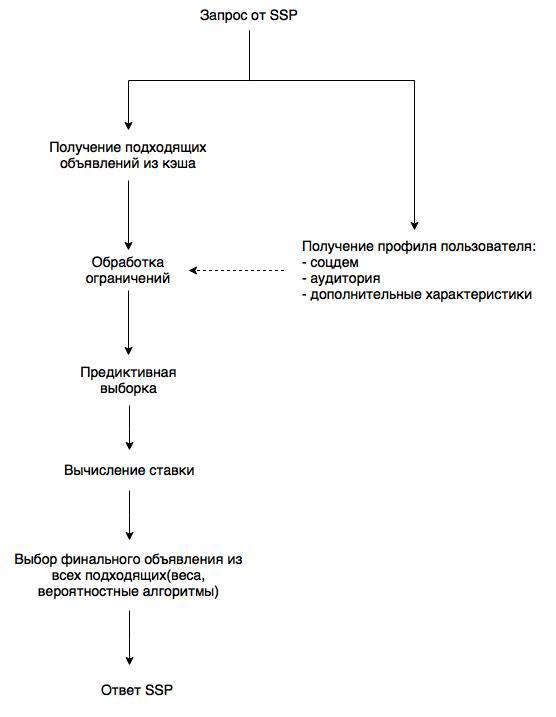

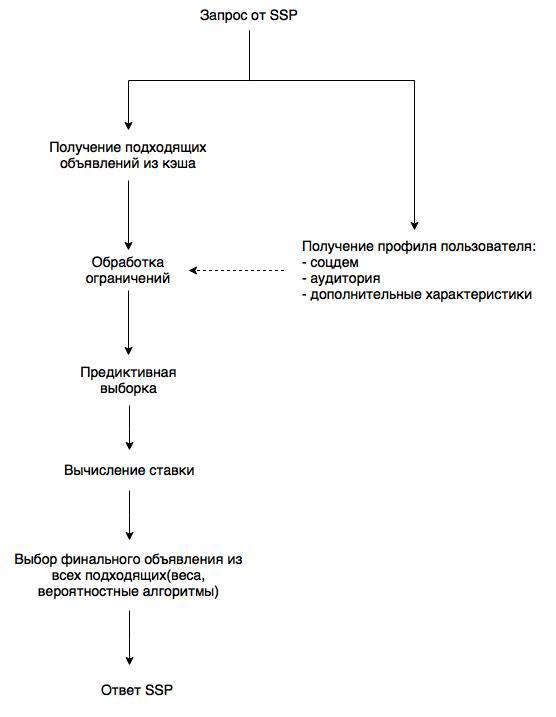

Let us return to the subject area and look at the bidder work scheme:

The scheme is simplified, but it’s clear what you should pay attention to. First, inventory (that is, a list of ads with restrictions). The list is stored in memory and updated once a minute. But for the user profile you need to climb into the database every time. In the same database, DMP updates profiles, which allows not to waste resources on remote interaction with data sources. Restrictions are set according to a flexible scheme: any atomic filters (belonging to an audience, geo, black and white lists of domains, sotsdem, etc.) are combined into any sequences and / or containers. Predictor allows you to optimize the purchase. And here we come to the question of choosing a database. Without a fast and scalable solution, it’s impossible to maximize performance.

Here we also did not manage to get there the first time and we started with beautiful well-promoted solutions (no, not with MS SQL). First, we took MongoDB (2.4): attracted good performance, JSON-scheme, replication, sharding out of the box, supposedly simple support for expanding the cluster. In fact, everything was not so rosy. The locks on the write operations strongly hampered the system, sharding turned out to be difficult to configure (I still remember how we argued which shard key to choose for the collection) - the data did not want to be distributed evenly, and the data dump to disk (not so frequent, however) added additional locks.

The next attempt was Couchbase. There were no locks here and the data structure was simpler - the usual key-value. But there were still flaws: the load on the disk subsystem was excessive, as well as insufficient configurability and disgusting extensibility (or rather lack thereof). Technical support also left much to be desired.

But it was then that the harsh and expensive Aerospike database got a free license. This turned out to be the right solution. The speed has increased, the load on the disk has fallen, the configuration of the cluster has been simplified. Aerospike also had bugs. But after describing a problem on the forum (for example, a problem with sampling by range ), it was promptly fixed in the update. As a result, we have an Aerospike cluster of 7 servers that easily handles the entire load.

The main DSP integration is, of course, third-party SSPs. And they are clearly divided into two well-known groups: Russian and Western. Everything is simple with the Russians: they work using the OpenRTB 2 protocol. * And differ only in the presence or absence of additional features (such as support for a fullscreen banner). Each Western SSP operates on its own protocol and integration takes a not so short time. That is, you have to implement support for their protocol. The most famous example is Google.

It should be said about the purely technical interaction. First, information about the actions of visitors is sent to the DMP through Apache Flume. Another direction: uploading statistics to the Trading Desk. In this case, the corresponding Trading Desk service itself requests statistics for a certain minimum period and receives it in a packed form (zMQ + MessagePack). after which it groups and writes to its database (this is how Hybrid works).

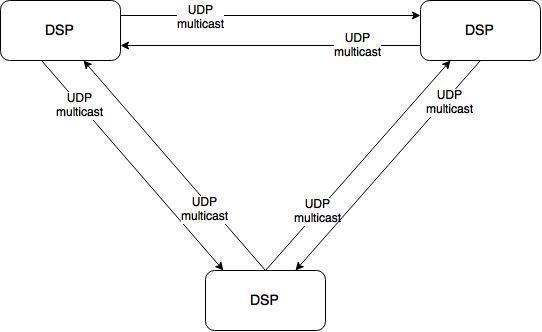

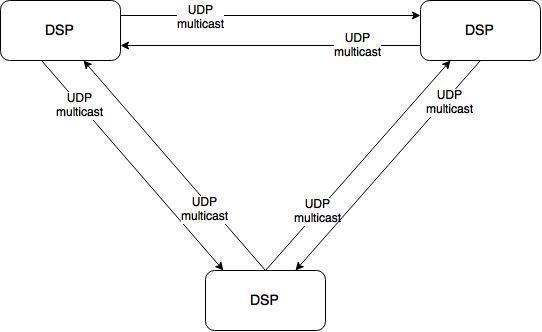

Now we will rise on level above and we will look at system entirely. These are 10 servers, each with one DSP instance. For correct operation instances need to exchange data. For example, about the degree of the campaign rollback (to avoid twisting) and the correct work of restricting specific campaigns to a specific user (frequency capping). To do this, every time a visitor acts, a notification is sent to other DSP instances over a UDP multicast. Thus synchronization is performed. No harsh frameworks are used here, only pure and uncomplicated hardcore.

As a result, we received a high-performance and fault-tolerant system, where each server processes up to 9000 requests per second with a response of up to 10 ms. We have passed a difficult path and now we understand exactly what a modern DSP should be and know in practice how to build it. We have plans for integration with new systems, improvement of procurement optimization and budget allocation. And much more interesting))

For those interested, the system configuration:

In the first article, we talked about our infrastructure as a whole. Now it's time to focus on specific products, and this article is about our DSP. As many of you know, a DSP (Demand Side Platform) is an automated ad-buying system. The requirements are tough: it must sustain a high load (thousands of requests per second), respond quickly (within 50 ms, or even less) and, most importantly, pick the most relevant ads. Such projects are usually built on Linux, but we managed to create a truly high-performance service on Windows Server. How is that possible, and how did we do it? Read on.

Our DSP consists of two applications: the bidder itself, a Windows service that interacts with SSPs, and DspDelivery, an ASP.NET application that handles final ad delivery and records user interactions. Delivery is fairly straightforward, so we will take a closer look at the bidder.

Platform

The platform uses .NET, and the main language is C#. Since we are writing a bidder, we need a web server and some plumbing around it. At first we took the easy path: we plugged in IIS, created an ASP.NET application with the ASP.NET Web API framework, and started building the business logic. It quickly became clear that the whole stack could not handle more than 500-700 requests per second. No matter how much we tuned IIS or how many of its countless parameters we tweaked, the problem remained. There also seemed to be no way to get inside IIS, which meant we could never have full control over the situation: IIS is a notorious black box in which it is hard to change anything fundamentally.

Then we tried the server from the Katana project (Microsoft's implementation of OWIN). Katana is open source, so we could look at its internals. In addition, Web API supports OWIN, so we would not have to change much code. Katana can run on top of IIS or on its own simple server built on the .NET HttpListener, and the latter is what we chose. The result was encouraging: the server now handled about 2000 requests per second, and the ASP.NET application became a Windows service.

However, the load kept growing and new features kept being added, and it became clear that this option did not suit us either. So we took drastic measures: of all of Katana, only HttpListener remained, with a thin wrapper for asynchrony; nothing was left of Web API, and the application became purpose-built for handling bidder HTTP requests. As a result, the server could handle up to 9000 requests per second. The conclusion is simple: the OWIN and Web API plumbing has a critical impact on high-performance applications. If you want speed, write simpler, less general-purpose code. (This does not mean the application has to be a pile of spaghetti code: everything is modular and easily extensible, with DI, design patterns and so on.) Sample request-processing code:

```csharp
using System;
using System.Net;
using System.Threading;

var listener = new HttpListener();
listener.IgnoreWriteExceptions = true;
// ...
listener.Start();

// Accept loop on a dedicated thread: BeginGetContext waits asynchronously for the next
// request, WaitOne blocks until one has been accepted, and the callback processes it
// while the loop moves on to accept the next request.
var listenThread = new Thread(() =>
{
    while (listener.IsListening)
    {
        try
        {
            var result = listener.BeginGetContext(ar =>
            {
                try
                {
                    var context = listener.EndGetContext(ar);
                    byte[] buffer = null;
                    object requestObj = "";

                    if (context.Request.HttpMethod == "POST")
                    {
                        try
                        {
                            // Route by URL to the handler for the SSP's protocol.
                            if (context.Request.RawUrl.Contains("/openrtb"))
                            {
                                buffer = HandleOpenRtbRequestAndGetResponseBuffer(context.Request, out requestObj);
                            }
                            else if (context.Request.RawUrl.Contains("/doubleclick"))
                            {
                                buffer = HandleDoubleClickRtbRequestAndGetResponseBuffer(context.Request, out requestObj);
                            }
                            // ...
                        }
                        catch (Exception ex)
                        {
                            // ...
                        }

                        // A non-null buffer holds the serialized response; otherwise reply with an empty one.
                        if (buffer != null)
                        {
                            WriteNotEmptyResponse(context.Response, buffer, "application/json");
                        }
                        else
                        {
                            WriteEmptyResponse(context.Response);
                        }
                    }
                    else
                    {
                        // Only POST requests are served.
                        WriteNotFoundResponse(context.Response);
                    }

                    context.Response.Close();
                }
                catch (Exception ex)
                {
                    // ...
                }
            }, listener);

            result.AsyncWaitHandle.WaitOne();
        }
        catch (Exception ex)
        {
            // ...
        }
    }
});
listenThread.Start();
```

Workflow

Let's return to the subject domain and look at the bidder's workflow:

The diagram is simplified, but it shows what deserves attention. First, the inventory (the list of ads together with their restrictions): it is kept in memory and refreshed once a minute. The user profile, by contrast, has to be fetched from the database on every request. The DMP updates profiles in that same database, so no resources are wasted on remote calls to data sources. Restrictions are defined flexibly: any atomic filters (audience membership, geo, domain blacklists and whitelists, socio-demographics, etc.) can be combined into arbitrary sequences and/or containers. The predictor optimizes purchasing. This brings us to the choice of database: without a fast and scalable solution, maximum performance is out of reach.
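A minimal sketch of how atomic filters might be composed into AND/OR containers, assuming each filter is simply a predicate over the bid context. The context fields (geo, domain, audience segments), the region codes and the names `All`, `Any` and `Not` are hypothetical illustrations, not the bidder's actual types.

```csharp
using System;
using System.Linq;

// Containers (hypothetical names): generic combinators that build a filter tree.
static Func<T, bool> All<T>(params Func<T, bool>[] filters) => x => filters.All(f => f(x)); // AND container
static Func<T, bool> Any<T>(params Func<T, bool>[] filters) => x => filters.Any(f => f(x)); // OR container
static Func<T, bool> Not<T>(Func<T, bool> filter) => x => !filter(x);

// Atomic filters over an assumed bid context: (region code, site domain, audience segments).
Func<(string Geo, string Domain, string[] Segments), bool> inMoscow = c => c.Geo == "RU-MOW";
Func<(string Geo, string Domain, string[] Segments), bool> inStPetersburg = c => c.Geo == "RU-SPE";
Func<(string Geo, string Domain, string[] Segments), bool> blacklisted = c => new[] { "bad.example" }.Contains(c.Domain);
Func<(string Geo, string Domain, string[] Segments), bool> autoAudience = c => c.Segments.Contains("auto");

// Example restriction: (Moscow OR St. Petersburg) AND NOT blacklisted AND in the "auto" audience.
var campaignFilter = All(Any(inMoscow, inStPetersburg), Not(blacklisted), autoAudience);

Console.WriteLine(campaignFilter(("RU-MOW", "news.example", new[] { "auto" }))); // True
Console.WriteLine(campaignFilter(("RU-SPE", "bad.example", new[] { "auto" })));  // False
```

Because containers accept other containers, any nesting of sequences and alternatives can be expressed, and the whole tree is evaluated as a single delegate call per candidate ad.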

Database

Here, too, we did not get it right the first time: we started with attractive, heavily promoted solutions (no, not MS SQL). First we took MongoDB (2.4), drawn by its good performance, JSON schema, out-of-the-box replication and sharding, and supposedly easy cluster expansion. In reality, things were not so rosy. Write locks severely slowed the system down; sharding turned out to be hard to configure (I still remember our arguments over which shard key to choose for the collection), and the data refused to distribute evenly; and flushing data to disk (not very frequent, admittedly) added even more locking.

The next attempt was Couchbase. There were no locks, and the data model was simpler: plain key-value. But it still had drawbacks: excessive load on the disk subsystem, insufficient configurability and poor extensibility (or rather none at all). Technical support also left much to be desired.

Just then, the serious and expensive Aerospike database became available under a free license, and it turned out to be the right choice. Throughput went up, disk load went down, and cluster configuration became simpler. Aerospike had bugs too, but when we described a problem on the forum (for example, one with range queries), it was promptly fixed in an update. Today we run a 7-server Aerospike cluster that easily handles the entire load.

Integration

The DSP's main integrations are, of course, with third-party SSPs, which fall into two well-known groups: Russian and Western. The Russian ones are simple: they use the OpenRTB 2.* protocol and differ only in whether they support additional features (such as fullscreen banners). Each Western SSP uses its own protocol, so integration takes considerably longer: you have to implement support for that protocol. The best-known example is Google.
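For the OpenRTB 2.x SSPs, a bid handler essentially parses a JSON `BidRequest` and returns a `BidResponse`, or no bid. The sketch below uses `System.Text.Json` and only a handful of spec fields (`id`, `imp[].id`, `imp[].bidfloor`, and `seatbid[].bid[]` with `id`, `impid`, `price`, `adm`). The flat bid price, the placeholder markup and the function name are hypothetical; a real bidder would price each impression individually. Returning `null` stands for "no bid", which OpenRTB 2.x recommends signalling with HTTP 204 No Content.

```csharp
using System;
using System.Linq;
using System.Text.Json;

// Hypothetical handler: bid a flat CPM on every impression whose floor we clear.
// Returns the BidResponse JSON, or null for "no bid".
static string? BuildBidResponse(string requestJson, double price)
{
    using var doc = JsonDocument.Parse(requestJson);
    var request = doc.RootElement;

    // bidfloor is optional in OpenRTB and defaults to 0.
    var bids = request.GetProperty("imp").EnumerateArray()
        .Where(imp => !imp.TryGetProperty("bidfloor", out var floor) || floor.GetDouble() <= price)
        .Select(imp => new
        {
            id = Guid.NewGuid().ToString("N"),          // bidder-generated bid ID
            impid = imp.GetProperty("id").GetString(), // impression this bid is for
            price,                                     // bid price (CPM)
            adm = "creative markup goes here"          // placeholder ad markup
        })
        .ToArray(); // materialize while the JsonDocument is still alive

    if (bids.Length == 0)
        return null;

    return JsonSerializer.Serialize(new
    {
        id = request.GetProperty("id").GetString(), // echoes BidRequest.id
        seatbid = new[] { new { bid = bids } }
    });
}

var sampleRequest = "{\"id\":\"req-1\",\"imp\":[{\"id\":\"1\",\"banner\":{\"w\":300,\"h\":250},\"bidfloor\":0.5}]}";
Console.WriteLine(BuildBidResponse(sampleRequest, 0.8) ?? "no bid (HTTP 204)");
Console.WriteLine(BuildBidResponse(sampleRequest, 0.3) ?? "no bid (HTTP 204)"); // no bid (HTTP 204)
```

Each Western SSP would need its own counterpart of this function for its proprietary format, which is where the extra integration time goes.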

A few words about the purely technical side of the interaction. First, data on visitor actions is sent to the DMP via Apache Flume. Second, statistics are exported to the Trading Desk: the corresponding Trading Desk service itself requests statistics for a short interval and receives them in packed form (ZeroMQ + MessagePack), after which it aggregates them and writes them to its database (this is how Hybrid works).
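The grouping step on the Trading Desk side can be pictured as a simple aggregation by campaign and minute. This is a sketch under assumptions: the record shape `(CampaignId, At, Kind)`, the event kinds, the per-minute granularity and the `GroupByMinute` name are illustrative, and the ZeroMQ transport and MessagePack decoding are left out entirely.

```csharp
using System;
using System.Linq;

// Truncate a timestamp to the start of its minute, keeping its DateTimeKind.
static DateTime ToMinute(DateTime t) => new DateTime(t.Ticks - t.Ticks % TimeSpan.TicksPerMinute, t.Kind);

// Group unpacked statistics records (hypothetical shape) into per-campaign, per-minute
// rows that could then be written to the Trading Desk database.
static (int CampaignId, DateTime Minute, int Impressions, int Clicks)[] GroupByMinute(
    (int CampaignId, DateTime At, string Kind)[] records) =>
    records
        .GroupBy(r => (r.CampaignId, Minute: ToMinute(r.At)))
        .Select(g => (g.Key.CampaignId, g.Key.Minute,
                      Impressions: g.Count(r => r.Kind == "impression"),
                      Clicks: g.Count(r => r.Kind == "click")))
        .OrderBy(row => row.CampaignId).ThenBy(row => row.Minute)
        .ToArray();

var t0 = new DateTime(2015, 7, 1, 12, 0, 0, DateTimeKind.Utc);
var rows = GroupByMinute(new[]
{
    (7, t0.AddSeconds(5), "impression"),
    (7, t0.AddSeconds(40), "click"),
    (7, t0.AddSeconds(70), "impression"),
    (9, t0.AddSeconds(20), "impression"),
});
foreach (var row in rows)
    Console.WriteLine($"{row.CampaignId} {row.Minute.Hour}:{row.Minute.Minute:D2} imp={row.Impressions} clk={row.Clicks}");
// 7 12:00 imp=1 clk=1
// 7 12:01 imp=1 clk=0
// 9 12:00 imp=1 clk=0
```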

Synchronization

Now let's go up a level and look at the system as a whole. It consists of 10 servers, each running one DSP instance. To work correctly, the instances need to exchange data: for example, on each campaign's delivery progress (to avoid over-delivery) and for correctly limiting how often a specific campaign is shown to a specific user (frequency capping). To achieve this, every visitor action triggers a notification to the other DSP instances over UDP multicast, and that is how they stay synchronized. No heavyweight frameworks here, just plain, simple hardcore.
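Here is a minimal sketch of such a notification, assuming a fixed 13-byte binary layout (event kind, campaign ID, user ID), and of how each instance might apply it to a local frequency-capping counter. The layout, the multicast group mentioned in the comments and the `Encode`/`Decode`/`Broadcast`/`Apply`/`UnderCap` names are hypothetical; the code only relies on standard .NET types (`UdpClient`, `BinaryPrimitives`, `ConcurrentDictionary`).

```csharp
using System;
using System.Buffers.Binary;
using System.Collections.Concurrent;
using System.Net;
using System.Net.Sockets;

// Hypothetical wire format (13 bytes): [kind:1][campaignId:4 LE][userId:8 LE].
static byte[] Encode(byte kind, int campaignId, long userId)
{
    var buf = new byte[13];
    buf[0] = kind;
    BinaryPrimitives.WriteInt32LittleEndian(buf.AsSpan(1, 4), campaignId);
    BinaryPrimitives.WriteInt64LittleEndian(buf.AsSpan(5, 8), userId);
    return buf;
}

static (byte Kind, int CampaignId, long UserId) Decode(byte[] buf) =>
    (buf[0],
     BinaryPrimitives.ReadInt32LittleEndian(buf.AsSpan(1, 4)),
     BinaryPrimitives.ReadInt64LittleEndian(buf.AsSpan(5, 8)));

// Send one datagram to the multicast group (e.g. 239.x.x.x). Peers receive it after
// calling udp.JoinMulticastGroup(group.Address) and reading with udp.Receive(ref remote).
static void Broadcast(UdpClient udp, IPEndPoint group, byte[] message) =>
    udp.Send(message, message.Length, group);

const byte ImpressionKind = 1;

// Impressions per (user, campaign), fed by local events and by peers' notifications alike.
var shown = new ConcurrentDictionary<(long UserId, int CampaignId), int>();

void Apply((byte Kind, int CampaignId, long UserId) e)
{
    if (e.Kind == ImpressionKind)
        shown.AddOrUpdate((e.UserId, e.CampaignId), 1, (_, count) => count + 1);
}

// Frequency capping: may this campaign still be shown to this user?
bool UnderCap(long userId, int campaignId, int cap) =>
    (shown.TryGetValue((userId, campaignId), out var count) ? count : 0) < cap;

// Locally: record an impression; in production the same bytes would also go to Broadcast(...).
var note = Encode(ImpressionKind, campaignId: 42, userId: 1001);
Apply(Decode(note));
Console.WriteLine(UnderCap(1001, 42, cap: 3)); // True
```

UDP multicast keeps each notification to a single small datagram with no connection state, at the cost of no delivery guarantee; an occasional lost packet only makes a counter slightly stale.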

Result

The result is a high-performance, fault-tolerant system in which each server handles up to 9000 requests per second with response times of up to 10 ms. It was a hard road, but we now know exactly what a modern DSP should be and have hands-on experience building one. Next, we plan to integrate with new systems and to improve purchase optimization and budget allocation. And plenty of other interesting things :)
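As a rough sanity check on these figures, Little's law (average number of requests in flight = arrival rate × time in system) bounds the concurrency each server sustains at peak, assuming the stated 10 ms is an upper bound on response time and the load is spread evenly across the 10 bidder servers:

$$
L = \lambda W \le 9000\ \text{req/s} \times 0.010\ \text{s} = 90\ \text{requests in flight per server}
$$

$$
\lambda_{\text{cluster}} \le 10 \times 9000\ \text{req/s} = 90\,000\ \text{req/s}
$$

On average, then, each server has at most about 90 requests in progress at any moment.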

For those interested, here is the system configuration:

- 10 application servers (bidder + delivery), Windows Server 2008 R2: 2x Xeon E5-2620 (6 cores each), 64 GB of RAM;

- 7 Aerospike servers, CentOS 6.6: 1x Xeon E5-2620, 200 GB of RAM.

Source: https://habr.com/ru/post/261745/
