Web experiments #1. Adaptability lvl. 80: Web Applications, Brain Waves and Attention Levels

The introductory part, in which I try to tell you that current approaches to adaptability are the kindergarten stage (in the sense that this is only the beginning) in the development of interfaces and interaction experience

Today, when people talk about adaptability on the web, they usually mean something as simple and trivial as adjusting how a site (web application) is displayed to the screen width of a given device. Adaptive design, responsive design, fluid design: you hear it all. Some argue that all of this was invented and put into everyday practice ten years ago; others present it as a breakthrough trend that owns the future; a third group is still drawing boundaries between the terms; a fourth is thinking about which browser capabilities one can adapt to (browsers are already getting responsive images, and checking for feature support in CSS is not far off!); and a fifth is trying to look at the problem more broadly, including accessibility, adapting to different input methods, and much more. Of course, everyone is right to some degree.

Personally, I like the fifth camp best (see, for example, Aaron Gustafson's article on the potential of adaptability, "Where Do We Go From Here?"), which encourages thinking about adaptability in a broader sense and treats grids, breakpoints and pretty animations between states as just a special case. Of course, it is an important case, curious and interesting to explore, attractive and convenient for showing, demonstrating and persuading clients. (So that you don't get the impression that I feel lukewarm about this topic, I'll say frankly that I don't: I'm delighted by the prospects for adaptive interfaces, but grids and other trinkets are only the first step. I would be happy if designers explored and developed this subject with the same enthusiasm instead of repeating the same well-worn solutions time after time.)


But imagine for a moment that a system (application) adapts not only to screen size and resolution, as is customary today, but also to input methods (an extra point of emphasis in Windows 10); to the person's context: light, time, location, events and so on (what has long been discussed as "context-aware" systems; Cortana, by the way, could well serve as an example); to the person's state: emotions and mood, memory, health, attention (shown well in the feature film "Her"); to the social context, including working relationships (for example, based on an office or social graph); and, of course, to the user's limitations, temporary or permanent. This is a whole space within interface design and engineering, a kind of "rocket science" that the industry is only beginning to dive into and that calls for corresponding research and engineering solutions.

Step by step, we (I mean the industry as a whole) are getting there.

The theoretical part, in which I arrive at a specific example of going beyond the usual pattern

I have had a funny device for some time now, a MindWave Mobile, for which I couldn't find any practical use for a long time, apart from "trying to watch my brain waves in demo apps".

For those hearing about it for the first time, here is briefly what it does. You put the thing on your head, clip it to your earlobe and press another contact against your forehead (I wouldn't say you can exercise actively in this state, or put up with a clothespin on your ear for very long). The circuit then closes, and the device starts reading the alpha, beta and other rhythms of your brain while computing two integral parameters: the level of attention and the level of meditation. All of this is sent over Bluetooth (BLE) to a connected receiver (smartphone, tablet, laptop, etc.), where the corresponding application does something with it.

Such is the entertainment. There is an SDK, so for fun you can write an application in which you crush evil bugs or trolls with the magical energy of your thoughts. You can simply try to collect statistics, though I suspect you need to be something of a neuro-specialist to interpret the sensor readings sensibly. Or you can just train your concentration or, conversely, practice switching your conscious mind off.

In short, the device sat in its box for almost a year until three things happened:

- a good WinRT wrapper appeared that I could more or less work with from JavaScript (that is where I feel comfortable, although I couldn't do without some C# code),

- Windows 10 introduced so-called Hosted Web Apps (a topic for a separate story, but see the brief notes below),

- it occurred to me: why not try to adapt some web experience to brain activity?

Well, I tried.

The idea for the experiment: what if a video on a site changed its playback speed depending on the viewer's level of attention? This is exactly the kind of scenario that breaks the usual mental pattern and is not about grids and responsive images.

The practical part, in which I open Visual Studio and start writing code

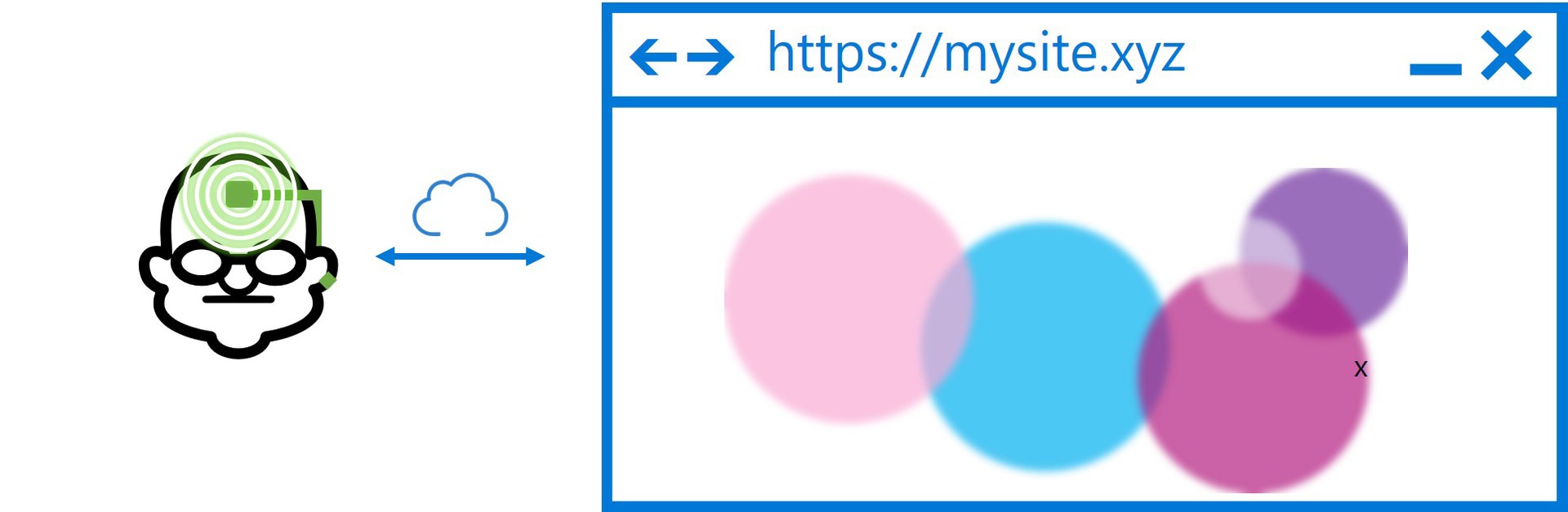

The whole solution consists, roughly, of three parts:

- A Windows 10 application in HTML/JS whose manifest registers an external site as the start page and declares access rights to system APIs; this is "Project Westminster" (UWP Bridge for Windows 10).

- A WinRT component for working with the MindWave device: a wrapper over the corresponding library, marked as available to the web context (this is important!).

- The site itself, in HTML/JS, hosted anywhere, where all the logic and presentation are concentrated.

Let's go through them in order.

Application. Since this article is not meant to dive into the history of web applications in Windows 10, I will mention just a few things.

First: every application has a start page (entry point) from which it begins. Previously, in HTML/JS applications for Windows 8, 8.1 and Windows Phone 8.1, this could only be a page inside the package. In Windows 10, the application's content can be hosted almost entirely on the server side:

    <Application Id="App" StartPage="http://codepen.io/kichinsky/pen/rVMBOm">

Here I simply put the code on CodePen, which is quite sufficient for the experiment.

Second: in the application you must explicitly state which domains are allowed to serve content and which of them get access to particular functionality (WinRT, components).

    <uap:ApplicationContentUriRules>
        <uap:Rule Type="include" WindowsRuntimeAccess="allowForWebOnly" Match="http://codepen.io/kichinsky/"/>
        <uap:Rule Type="include" WindowsRuntimeAccess="allowForWebOnly" Match="http://s.codepen.io/"/>
    </uap:ApplicationContentUriRules>

WinRT component. As a basis, I took a ready-made library available through NuGet: the Neurosky SDK by Sebastiano Galazzo. To install it, you can use this command in the package manager:

    PM> Install-Package Neurosky

For use in an HTML/JS application the library alone is not enough; you need a WinRT component with the corresponding metadata. So, without much thought, I wrapped the methods I needed in such a component (in effect, a C# class).

    [Windows.Foundation.Metadata.AllowForWeb]
    public sealed class MindWaveDevice
    {
        private IMindwaveSensor mindDevice;

        private MindWaveDevice(IMindwaveSensor device)
        {
            this.mindDevice = device;
            this.mindDevice.StateChanged += MindDevice_StateChanged;
            this.mindDevice.CurrentValueChanged += MindDevice_CurrentValueChanged;
        }
        ….
    }

As I said above, the class must be marked with metadata as accessible to the web context. In outline: we obtain a device object and subscribe to its state-change and data events. Then, as soon as the device is connected and the user has allowed access to it, a stream of data in roughly the following format starts arriving:

    public struct MindReading
    {
        public int AlphaHigh;
        public int AlphaLow;
        public int BetaHigh;
        public int BetaLow;
        public int Delta;
        public int Attention;
        public int Meditation;
        public int GammaLow;
        public int GammaMid;
        public int Quality;
        public int Theta;
    }

In JavaScript, all of this is projected as an object with the corresponding numeric (Number) fields.

Site. In principle, for the experiment it makes absolutely no difference where the site is hosted (even localhost will do). Inside the site you work the way you always have, with one small addition. Imagine that the target browser has suddenly learned to read the user's brain activity and exposes a corresponding API to you.

Following the tradition of "feature detection", you check that the API is available and, if everything is in order, extend the site's functionality:

    if (window.MindWaveController != undefined) {
        MindWaveController.MindWaveDevice.getFirstConnectedMindDeviceAsync().then(function (foundDevice) {
            mindDevice = foundDevice;
            mindDevice.onstatechangedevent = stateChangedHandler;
            mindDevice.onvaluechangedevent = valueChangedHandler;
        });
    }

At the right moment, data starts coming in:

    function valueChangedHandler(reading) {
        if (mindDevice.currentState == "ConnectedWithData") {
            attentionBar.value = reading.attention;
            meditationBar.value = reading.meditation;
            ….
        }
    }

And that's all! The magic is in the simplicity of this solution: everything just plugs in and starts working.
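A minimal sketch of how this detection could be wrapped so that the page degrades gracefully in an ordinary browser. The function name `connectMindDevice` and the `onUnavailable` callback are hypothetical; `MindWaveController`, `getFirstConnectedMindDeviceAsync` and the event property names are taken from the snippets above. The host object is passed in as a parameter, so the function can be tried out without the real device.

```javascript
// Hypothetical helper (not part of the original project): attaches handlers
// if the host exposes the MindWave API, otherwise calls onUnavailable so the
// page can stay in its plain, non-adaptive mode.
function connectMindDevice(host, handlers, onUnavailable) {
    var api = host && host.MindWaveController;
    if (!api || !api.MindWaveDevice) {
        onUnavailable();
        return null;
    }
    // The promise-like result of the WinRT call is assumed to support then().
    return api.MindWaveDevice.getFirstConnectedMindDeviceAsync().then(function (device) {
        device.onstatechangedevent = handlers.stateChanged;
        device.onvaluechangedevent = handlers.valueChanged;
        return device;
    });
}
```

On the page this might be called as `connectMindDevice(window, { stateChanged: stateChangedHandler, valueChanged: valueChangedHandler }, hideAttentionUi)`, where `hideAttentionUi` is again a hypothetical function of your own.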

From there, you can do whatever your heart desires. I started with a simple experiment and tried to visualize the data:

The experimental part, in which I start adapting video to the signals from my cerebral cortex

When it comes to brain waves, I must admit, I am a complete layman. Of course, I know that there are different kinds and that they are activated differently at different moments of life. For example, one clever book said that theta waves are suppressed while you observe another person's activity. I'll have to check that sometime.

Fortunately, the device has a small controller that produces magic values for the wearer's attention and meditation. For mere mortals, that is exactly what's needed.

Then I wondered what practical use this could have. I started imagining sites that respond to the visitor's brain activity...

At first I thought about various complex scenarios, from collecting statistics on visitors' brain activity on different pages to automated scenarios such as speed-scrolling the text of a book. Eventually I got to video playback and remembered that HTML5 video even has a playbackRate property that can be controlled.

The rest is simple: add a video to the page and see what happens.

Converting attention to speed. The first task was to work out how to map attention (0-100) to speed (1.0 is normal playback speed). Experimentally, I decided to limit the speed range to 0.5 (slow playback) through 2.0 (fast) and made a simple mapping:

    var playRates = [0.5, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.4, 1.6, 1.8, 2.0];

    function attention2speed(attention) {
        var speed = 0;
        if (attention > 30) {
            // every 7 points of attention above 30 move one step up the table
            speed = playRates[Math.floor((attention - 30) / 7)];
        } else {
            speed = 0.5;
        }
        return speed;
    }

All the parameters are experimental, with no explicit science behind them, though with a certain logic. The main idea: at low attention we set a low speed, and above that we use a discrete mapping.
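To see what this mapping does in practice, here is a self-contained variant with a few sample values worked out. The table and thresholds are those from the snippet above; the name `attentionToSpeed` is hypothetical, and clamping the input to 0-100 is my own addition (an assumption that out-of-range readings might occur).

```javascript
var playRates = [0.5, 0.7, 0.8, 0.9, 1.0, 1.1, 1.2, 1.4, 1.6, 1.8, 2.0];

function attentionToSpeed(attention) {
    // Assumption: guard against readings outside the documented 0-100 range.
    var a = Math.min(100, Math.max(0, attention));
    if (a <= 30) {
        return 0.5;
    }
    // Each step of 7 attention points moves one slot up the table.
    return playRates[Math.floor((a - 30) / 7)];
}

// Sample values:
// attentionToSpeed(20)  -> 0.5
// attentionToSpeed(37)  -> 0.7
// attentionToSpeed(58)  -> 1.0
// attentionToSpeed(100) -> 2.0
```

With these numbers, normal speed (1.0) corresponds to attention values from 58 to 64, and the maximum of 2.0 is reached only at 100.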

Next step: changing the playback speed. Unfortunately, the head-on approach, that is, changing the playback speed every time new data arrives, failed miserably. First, the attention values jump around, so they need to be averaged. Second, when the speed is updated too often (for example, on every chunk of data or every frame), playback starts to "lag", and the video becomes frankly impossible to listen to.

So, again experimentally and by personal preference, I settled on a simple scheme:

- collect the values from the brain over the last 5 seconds,

- average them and update the playback speed accordingly.

Like this:

    function getMidSpeed() {
        var speed = 0;
        var count = speedBuffer.length;
        if (count == 0) {
            // no new readings: keep the current rate
            speed = video.playbackRate;
        } else {
            for (var i = 0; i < count; i++) {
                speed += speedBuffer[i];
            }
            speed = speed / count;
        }
        return speed;
    }

    function updatePlaybackRate() {
        var speed = getMidSpeed();
        speedBar.value = speed;
        video.playbackRate = speed;
        if (video.paused) video.play();
        // start a fresh averaging window
        speedBuffer = [];
    }

Here's what it looks like:
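The code above does not show how `speedBuffer` gets filled or when `updatePlaybackRate` is called. One possible wiring, as a sketch under assumptions: each reading is converted to a speed and pushed into a buffer, and a timer flushes the buffer every 5 seconds. The factory `createSpeedAverager` and its method names are hypothetical; the `video` argument is anything with a `playbackRate` property (an HTML5 video element in the browser).

```javascript
// Hypothetical helper (not from the original project): collects per-reading
// speeds and applies their mean to the video whenever flush() is called.
function createSpeedAverager(video, toSpeed) {
    var buffer = [];
    return {
        // Call from the value-changed handler with reading.attention.
        push: function (attention) {
            buffer.push(toSpeed(attention));
        },
        // Call on a timer; keeps the current rate if no readings arrived.
        flush: function () {
            if (buffer.length > 0) {
                var sum = buffer.reduce(function (acc, s) { return acc + s; }, 0);
                video.playbackRate = sum / buffer.length;
                buffer = [];
            }
            return video.playbackRate;
        }
    };
}
```

On the page this could be used as `var averager = createSpeedAverager(video, attention2speed);`, with `averager.push(reading.attention)` inside `valueChangedHandler` and `setInterval(averager.flush, 5000)` for the 5-second window.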

The final part, with fantasies

Of course, this is just an experiment with a practical flavor, and in the near future we can hardly expect a mass arrival of devices capable of passing a person's emotional state, or some integral indicators of their brain activity, to a site or application.

Then again, remember the "girl" from the film "Her". In theory the film is about voice control and interaction, but since the hero wears an earpiece, why not assume that it can also read his pulse and pick up a few other parameters along the way?

If the object of interaction "at the other end of the wire" can "adapt" to a person's actions and state, then that is precisely adaptability in the broad sense of the word.

Or imagine a Google Analytics or Yandex.Metrica report a few years from now:

- Chief, according to fresh statistics, on a test group of users, adding images of spotted kittens increased emotional attachment to our site and the level of involvement by 10 points!

- Great job! Have you tried to show striped kittens?

- In an hour we will try on the second group, I will return with statistics.

Technologically, the beauty of it is that you can do this on the same site you are building now, on the fly, just as you collect visitor statistics today. All we need is users with a "special" user agent that can read the necessary indicators.

Wrapping up my fantasies, one more observation. Judging by the "average temperature across the hospital", it has become fashionable today to build adaptive sites that adjust to the form factor of the user's device. I hope that someday it will be just as fashionable to build sites that adapt to the users themselves.

P.S. I'm waiting for my eye tracker to be delivered.

Source: https://habr.com/ru/post/261727/
