Pitfalls of A/B testing, or why 99% of your split tests are conducted incorrectly

The hot and much-discussed topic of conversion optimization has made A/B testing unconditionally popular as the only objective way to learn the truth about how well various technologies and solutions improve the economic efficiency of an online business.

Behind this popularity lies an almost complete lack of culture in how experiments are organized, conducted, and analyzed. At Retail Rocket, we have accumulated a great deal of expertise in assessing the cost-effectiveness of personalization systems in e-commerce. Over two years we have built up a near-ideal process for conducting A/B tests, which we want to share in this article.

A few words about the principles of A/B testing

In theory, everything is incredibly simple:

- We hypothesize that some change (for example, personalization of the home page) will increase the conversion of the online store.

- We create an alternative version of the site, "B": a copy of the original version "A" with the changes that we expect to improve the site's effectiveness.

- We randomly divide all site visitors into two equal groups: one group sees the original version, the other sees the alternative.

- We measure conversion for both versions of the site at the same time.

- We determine the statistically significant winner.

The beauty of this approach is that any hypothesis can be tested with numbers. There is no need to argue or rely on the opinion of pseudo-experts. We launch the test, measure the result, and move on to the next test.


Example in numbers

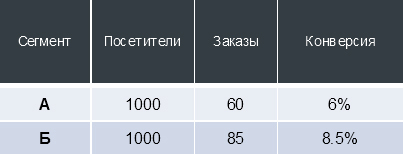

For example, imagine that we made a change to the site, launched an A/B test, and obtained the following data:

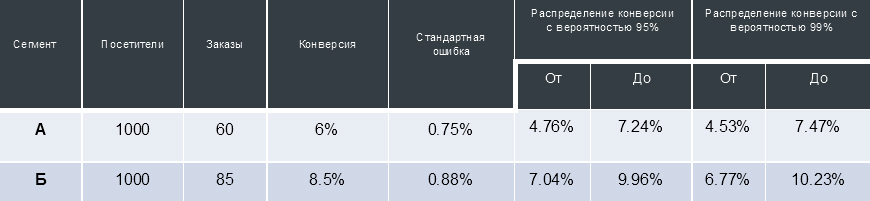

Conversion is not a static value: depending on the number of “trials” and “successes” (for an online store, site visits and completed orders, respectively), it is distributed over a certain interval with a calculable probability.

For the table above, this means that if we bring another 1,000 users to version “A” of the site under unchanged external conditions, then with a probability of 99% these users will place between 45 and 75 orders (that is, convert into buyers at a rate of 4.53% to 7.47%).
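The kind of interval described above can be sketched with the normal approximation for a proportion. This is only an illustration, not necessarily the method behind the table: the input of 60 orders from 1,000 visits is an assumption (the midpoint of the quoted range), and `conversion_interval` is a hypothetical helper name. With these assumed inputs the quoted bounds are reproduced at the 95% level of this particular approximation; other confidence levels or interval methods give different bounds.

```python
from math import sqrt
from statistics import NormalDist


def conversion_interval(orders, visits, confidence=0.95):
    """Normal-approximation (Wald) interval for a conversion rate."""
    p = orders / visits
    # Two-sided critical value, e.g. about 1.96 for 95%.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * sqrt(p * (1 - p) / visits)
    return p - margin, p + margin


# Assumed inputs: 60 orders from 1,000 visits (not taken from the table).
low, high = conversion_interval(orders=60, visits=1000, confidence=0.95)
print(f"{low:.2%} to {high:.2%}")  # prints "4.53% to 7.47%"
```

The approximation is reasonable when both orders and non-orders number in the dozens or more; for very small samples an exact or Wilson interval is usually preferred.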

By itself, this information is not very valuable; however, an A/B test gives us two such conversion intervals. Comparing how these so-called “confidence intervals”, obtained from the two user segments that interacted with different versions of the site, overlap lets us decide and argue that one of the tested variants is statistically significantly better than the other. Graphically, this can be represented as:

Why are 99% of your A/B tests conducted incorrectly?

So, most people are already familiar with the concept of experiments described above: it is discussed at industry events and covered in articles. Retail Rocket runs 10-20 A/B tests at any one time, and over the past 3 years we have run into a huge number of nuances that are often ignored.

There is a huge risk in this: if an A/B test is conducted with an error, the business is guaranteed to make the wrong decision and suffer hidden losses. Moreover, if you have run A/B tests before, they were most likely conducted incorrectly.

Why? Let us examine the most frequent mistakes we have encountered in numerous post-test analyses of experiments conducted when integrating Retail Rocket into our customers' online stores.

Audience share in the test

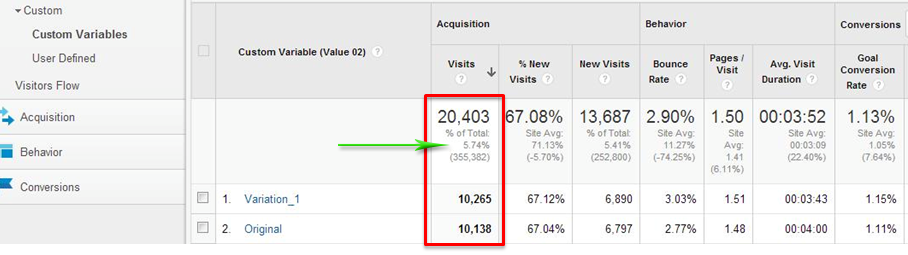

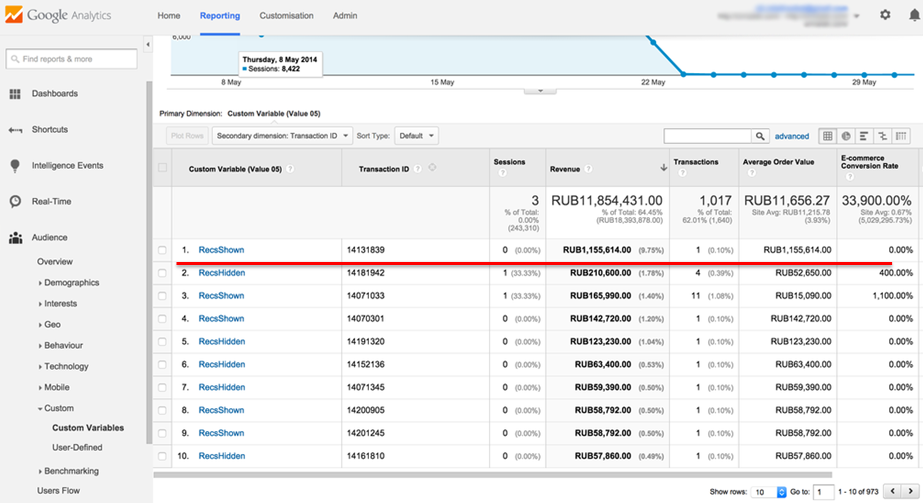

Perhaps the most common mistake: when a test starts, nobody checks that the entire audience of the site participates in it. A fairly common real-life example (screenshot from Google Analytics):

The screenshot shows that, in total, slightly less than 6% of the audience took part in the test. It is extremely important that the entire audience of the site belongs to one of the test segments; otherwise it is impossible to assess the impact of the change on the business as a whole.

Uniform distribution of the audience between the tested variations

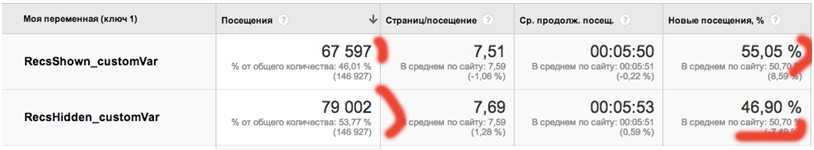

It is not enough to distribute the entire audience of the site into test segments. It is also important to do this evenly across all slices. Consider the example of one of our clients:

Here the site audience is divided unevenly between the test segments, even though a 50/50 traffic split was set in the testing tool. This picture is a clear sign that the traffic distribution tool is not working as expected.

In addition, pay attention to the last column: the second segment clearly gets more returning, and therefore more loyal, visitors. Such people will place more orders and distort the test results. This is another sign that the testing tool is working incorrectly.

To rule out such errors, always check, a few days after the test starts, that traffic is divided evenly across all available slices (at least by city, browser, and platform).
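One way to make this uniformity check less subjective is a chi-square goodness-of-fit test on the session counts of each slice. The sketch below is an assumption about how such a check could look, not a description of any particular tool: the function name, the slice counts, and the 0.001 alert threshold are all hypothetical.

```python
from math import erfc, sqrt


def split_p_value(sessions_a, sessions_b, expected_share_a=0.5):
    """P-value of a chi-square test (1 degree of freedom) that the observed
    split matches the configured one. A small value suggests a broken split."""
    total = sessions_a + sessions_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((sessions_a - expected_a) ** 2 / expected_a
            + (sessions_b - expected_b) ** 2 / expected_b)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    return erfc(sqrt(chi2 / 2))


# Hypothetical session counts per slice: (segment A, segment B).
slices = {
    "desktop": (50_210, 49_790),
    "mobile": (30_900, 29_100),
}

for name, (a, b) in slices.items():
    p = split_p_value(a, b)
    status = "CHECK THE SPLIT" if p < 0.001 else "ok"
    print(f"{name}: A={a}, B={b}, p={p:.4f} -> {status}")
```

Running the same check on every available slice (city, browser, platform, new versus returning visitors) helps catch a splitter that is balanced overall but skewed inside one slice.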

Filtering out online store staff

The next common problem is related to online store employees who, having landed in one of the test segments, place orders received by telephone. Employees thus generate additional sales in one segment, while the callers themselves are spread across all segments. Of course, such anomalous behavior ultimately distorts the final results.

Call center operators can be identified using the report on networks in Google Analytics:

In the screenshot, an example from our experience: a visitor came to the site 14 times from a network named “Electronics Shopping Center on Presnya” and placed 35 orders. This is clearly the behavior of a store employee who, for some reason, placed orders through the shopping cart on the site rather than through the store's admin panel.

In any case, you can always export orders from Google Analytics and tag each one as “placed by an operator” or “not placed by an operator”. Then build a pivot table like the one in the screenshot, which reflects another situation we face quite often: if you compare the revenue of the RR and Not RR segments (“site with Retail Rocket” and “without”, respectively), the “site with Retail Rocket” brings in less money than the one “without”. But if you separate out the orders placed by call-center operators, it turns out that Retail Rocket gives a revenue increase of 10%.
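The tagging and pivoting step can be sketched in a few lines. Everything in this example is illustrative: the field names (`segment`, `revenue`, `by_operator`) and the order amounts are invented, and a real export from your analytics system will have its own format.

```python
from collections import defaultdict

# Hypothetical exported orders, already tagged with who placed them.
orders = [
    {"segment": "RR", "revenue": 1200.0, "by_operator": False},
    {"segment": "RR", "revenue": 800.0, "by_operator": False},
    {"segment": "Not RR", "revenue": 900.0, "by_operator": False},
    {"segment": "Not RR", "revenue": 700.0, "by_operator": False},
    {"segment": "Not RR", "revenue": 1500.0, "by_operator": True},
]


def revenue_by_segment(orders, include_operator_orders):
    """Sum revenue per test segment, optionally dropping operator orders."""
    totals = defaultdict(float)
    for order in orders:
        if order["by_operator"] and not include_operator_orders:
            continue
        totals[order["segment"]] += order["revenue"]
    return dict(totals)


all_orders = revenue_by_segment(orders, include_operator_orders=True)
visitors_only = revenue_by_segment(orders, include_operator_orders=False)
print("all orders:   ", all_orders)
print("visitors only:", visitors_only)
```

In this made-up data the "Not RR" segment leads on raw revenue, and the picture reverses once the single operator order is excluded, which is the kind of flip described above.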

Which indicators should you look at in the final assessment of the results?

Last year we ran an A/B test with the following results:

- +8% conversion in the “site with Retail Rocket” segment.

- The average check was practically unchanged (+0.4%, within the margin of error).

- +9% revenue in the “site with Retail Rocket” segment.

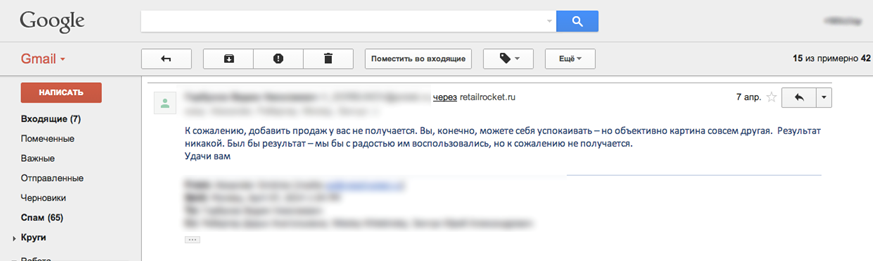

After reporting the results, we received a letter from the client:

The manager of the online store insisted that if the average check did not change, the service had no effect. This completely ignores the overall increase in revenue due to the recommendation system.

So which indicator should you focus on? Of course, the most important thing for a business is money. If, within an A/B test, traffic is divided evenly between the visitor segments, then the indicator to compare is the revenue of each segment.

In practice, no tool for randomly dividing traffic produces perfectly equal segments; there is always a difference of a fraction of a percent, so you need to normalize revenue by the number of sessions and use the “revenue per visit” metric.

This is a KPI recognized worldwide, and it is the one we recommend focusing on when conducting A/B tests.
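A small worked example shows why the normalization matters. The revenue and session figures are invented for illustration, and the helper names are hypothetical.

```python
def revenue_per_visit(revenue, sessions):
    """Revenue normalized by the number of sessions in the segment."""
    return revenue / sessions


def relative_uplift(control, variant):
    """Relative change of the variant against the control."""
    return (variant - control) / control


# Hypothetical test data: the split is close to 50/50 but not exact.
revenue_a, sessions_a = 1_000_000.0, 50_200
revenue_b, sessions_b = 1_080_000.0, 49_800

raw_uplift = relative_uplift(revenue_a, revenue_b)
rpv_uplift = relative_uplift(
    revenue_per_visit(revenue_a, sessions_a),
    revenue_per_visit(revenue_b, sessions_b),
)
print(f"raw revenue uplift:       {raw_uplift:.2%}")
print(f"revenue per visit uplift: {rpv_uplift:.2%}")
```

Here segment B received slightly fewer sessions, so comparing raw revenue understates its result; revenue per visit removes that bias.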

It is important to remember that revenue from orders placed on the site and “fulfilled” revenue (revenue from orders that were actually paid for) are completely different things.

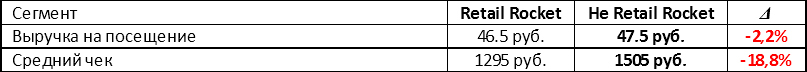

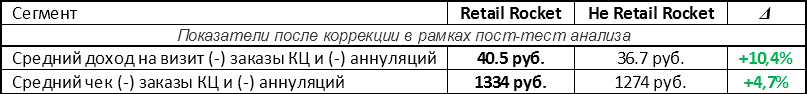

Here is an example of an A/B test in which Retail Rocket was compared with another recommender system:

The “non Retail Rocket” segment wins on all indicators. However, at the next stage of the post-test analysis, call-center orders and canceled orders were excluded. Results:

Post-test analysis of the results is a mandatory part of A/B testing!

Data slices

Working with different data slices is an extremely important component in the post-test analysis.

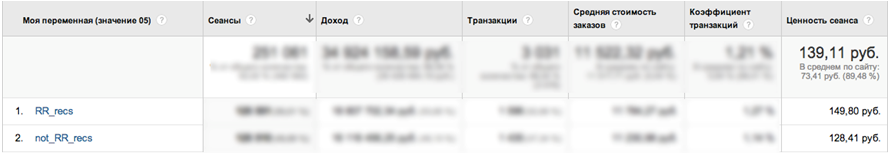

Here is another Retail Rocket test case from one of the largest online stores in Russia:

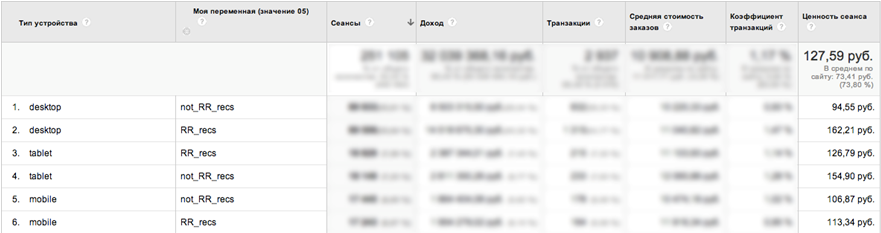

At first glance, we got an excellent result: revenue growth of +16.7%. But if you add the additional data slice “Device Type” to the report, you can see the following picture:

- Desktop traffic: revenue growth of almost 72%!

- Tablets: a drop in the Retail Rocket segment.

As it turned out after testing, Retail Rocket recommendation blocks were displayed incorrectly on tablets.

In post-test analysis it is very important to build reports at least by city, browser, and user platform, so as not to miss such problems and to maximize the results.
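A per-slice report of this kind can be sketched as follows. The rows are invented and do not reproduce the figures from the test above; the tuple layout and the function name are assumptions made for the example.

```python
# Hypothetical rows: (slice, segment, revenue, sessions).
rows = [
    ("desktop", "A", 500_000.0, 20_000),
    ("desktop", "B", 600_000.0, 20_000),
    ("tablet", "A", 100_000.0, 5_000),
    ("tablet", "B", 80_000.0, 5_000),
]


def uplift_by_slice(rows, control="A", variant="B"):
    """Relative uplift in revenue per visit for every slice."""
    rpv = {}
    for slice_name, segment, revenue, sessions in rows:
        rpv[(slice_name, segment)] = revenue / sessions
    slice_names = sorted({slice_name for slice_name, _ in rpv})
    return {
        name: rpv[(name, variant)] / rpv[(name, control)] - 1
        for name in slice_names
    }


report = uplift_by_slice(rows)
for name, uplift in report.items():
    note = "  <- investigate" if uplift < 0 else ""
    print(f"{name}: {uplift:+.1%}{note}")
```

A slice that moves in the opposite direction from the overall result, as the tablet slice does here, is a prompt to check how the tested change renders or behaves for that audience.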

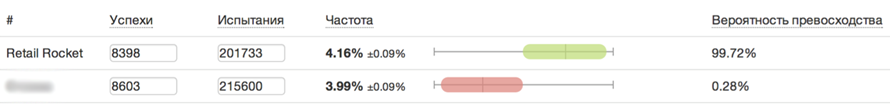

Statistical confidence

The next topic that needs to be addressed is statistical confidence. A decision to roll out changes to the site can be made only after the superiority of one variant has been established with statistical confidence.
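For conversion, such a check is commonly done with a two-proportion z-test. The sketch below is one standard way to compute it, not necessarily what any given online calculator does; the order and visit counts are invented, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(orders_a, visits_a, orders_b, visits_b):
    """Two-sided z-test for the difference between two conversion rates."""
    p_a = orders_a / visits_a
    p_b = orders_b / visits_b
    # Pooled conversion under the hypothesis that both versions are equal.
    pooled = (orders_a + orders_b) / (visits_a + visits_b)
    se = sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 5.0% versus 5.6% conversion on 10,000 visits each.
z, p_value = two_proportion_z_test(500, 10_000, 560, 10_000)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
```

With these made-up numbers a 12% relative lift in conversion still falls short of the conventional 5% significance threshold, which illustrates why the winner should not be declared by eye.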

There are many online tools for calculating the statistical confidence of a conversion difference, for example htraffic.ru/calc/ :

But conversion is not the only indicator that determines the economic efficiency of a site. The problem with most A/B tests today is that only the statistical confidence of conversion is checked, which is not enough.

Average check

The revenue of an online store is built from conversion (the share of visitors who buy) and the average check (purchase size). The statistical confidence of a change in the average check is harder to calculate, but there is no way around it; otherwise incorrect conclusions are inevitable.

The screenshot shows another example of a Retail Rocket A/B test, in which an order worth more than a million rubles fell into one of the segments:

This order accounts for almost 10% of total revenue for the test period. In this case, once statistical confidence in conversion has been reached, can the revenue results be considered reliable? Of course not.

Such huge orders significantly distort the results. We have two approaches to post-test analysis of the average check:

- Complex. Bayesian statistics, which we will discuss in future articles. At Retail Rocket, we use it to assess the reliability of the average check in internal tests for optimizing recommendation algorithms.

- Simple. Cutting off a few percentiles of orders (usually 3-5%) at the top and bottom of the list of orders sorted by amount in descending order.
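The simple approach can be sketched like this. The order amounts are invented, `trim_orders` is a hypothetical helper, and the 5% cut is just one value from the 3-5% range mentioned above.

```python
from statistics import mean


def trim_orders(amounts, cut_share=0.05):
    """Drop the top and bottom `cut_share` of orders by amount."""
    ordered = sorted(amounts, reverse=True)
    k = int(len(ordered) * cut_share)  # number of orders cut from each end
    return ordered[k:len(ordered) - k]


# Hypothetical segment: 18 typical orders, one tiny order, one huge outlier.
amounts = [100.0] * 18 + [50.0, 1_000_000.0]
trimmed = trim_orders(amounts, cut_share=0.05)

print(f"average check before trimming: {mean(amounts):,.2f}")
print(f"average check after trimming:  {mean(trimmed):,.2f}")
```

The same cut should be applied to every test segment, so that the comparison stays symmetrical.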

Test time

And finally, always pay attention to when you run the test and how long it lasts. Try not to start a test a few days before major gender-specific holidays or on holidays and weekends. Seasonality is also visible around salary payments: as a rule, they stimulate sales of expensive goods, electronics in particular.

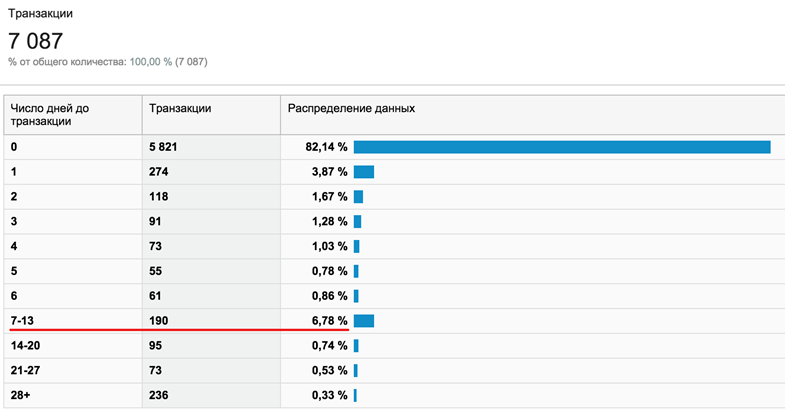

In addition, there is a proven relationship between a store's average check and the time it takes to make a purchase decision. Simply put, the more expensive the goods, the longer people take to choose them. The screenshot shows an example of a store in which 7% of users think for 1 to 2 weeks before buying:

If an A/B test on such a store runs for less than a week, about 10% of the audience will not be captured by it, and the impact of the site change on the business cannot be assessed unambiguously.

Instead of a conclusion. How to conduct a perfect A/B test?

So, to eliminate all the problems described above and conduct a proper A/B test, you need to perform 3 steps:

1. Split traffic 50/50

The hard way: use a traffic balancer.

The simple way: use the open source library Retail Rocket Segmentator, which is maintained by the Retail Rocket team. Over several years of testing, we were unable to solve the problems described above in tools such as Optimizely or Visual Website Optimizer.

The goal of the first step:

- Get a uniform distribution of the audience across all available slices (browsers, cities, traffic sources, etc.).

- 100% of the audience should be included in the test.
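For illustration only, here is one common way to get a stable, near-even split: hash a persistent visitor identifier and take the result modulo the number of segments. This is a generic technique, not a description of how Retail Rocket Segmentator or any other tool works; the function name, the experiment label, and the identifier format are assumptions.

```python
import hashlib


def assign_segment(visitor_id, experiment="homepage-test", segments=("A", "B")):
    """Deterministically map a visitor to a segment.

    The same visitor always gets the same segment, and every visitor
    gets some segment, so 100% of the audience is in the test.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(segments)
    return segments[bucket]


# Quick balance check on hypothetical visitor identifiers.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_segment(f"visitor-{i}")] += 1
print(counts)
```

Including the experiment name in the hashed key keeps assignments in different experiments independent of each other. The balance still has to be verified on real traffic, slice by slice, as described earlier.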

2. Conduct an A/A test

Without changing anything on the site, pass different user segment identifiers to Google Analytics (or another web analytics system of your choice); in Google Analytics, use a Custom var / Custom dimension.

The goal of the second step: no winner, i.e. two segments with identical versions of the site should show no difference in key indicators.
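When reading A/A results, it helps to remember that even a perfectly working splitter will occasionally show a "significant" difference by pure chance, roughly as often as the chosen significance level. The simulation below illustrates this under assumed parameters (5% true conversion, 1,000 visits per segment, a 5% threshold, a pooled two-proportion z-test); all names and numbers are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist


def aa_false_positive_rate(runs=500, visits=1000, conversion=0.05,
                           alpha=0.05, seed=42):
    """Share of simulated A/A tests in which a z-test declares a winner."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    false_wins = 0
    for _ in range(runs):
        # Both segments see the same site, so the true conversion is equal.
        orders_a = sum(rng.random() < conversion for _ in range(visits))
        orders_b = sum(rng.random() < conversion for _ in range(visits))
        pooled = (orders_a + orders_b) / (2 * visits)
        if pooled in (0.0, 1.0):
            continue  # no variation at all, so no winner
        se = sqrt(pooled * (1 - pooled) * 2 / visits)
        z = (orders_b - orders_a) / visits / se
        if abs(z) > z_crit:
            false_wins += 1
    return false_wins / runs


rate = aa_false_positive_rate()
print(f"A/A tests that 'found' a winner: {rate:.1%}")
```

So a single borderline A/A "winner" is not proof of a broken tool, while a large or persistent difference between identical segments is a reason to check the splitter before running the real test.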

3. Conduct post-test analysis

- Exclude company employees.

- Cut out extreme values.

- Check the significance of the conversion difference and use data on fulfilled and canceled orders, i.e. take into account all the cases mentioned above.

The goal in the last step: make the right decision.

Share your A/B test case studies in the comments!

Source: https://habr.com/ru/post/261593/
