How we move data centers (the challenges of data center migration in central Russia)

The result of moving and merging two server rooms and a telecommunications room from the office

Sometimes you need to pick up a data center and move it to a new location. The reasons vary. For example, a large office with a data center inside is relocating. Or a large Russian company is consolidating its regional server rooms in Moscow. Or, a fun case, two banks are merging and two data centers have to be combined into one.

I have personally taken part in 7 moves, and our team has relocated exactly 30 large sites. So we know a lot about the contortions involved.

Relocating IT equipment differs from a classic move in that you cannot simply pick everything up and take it somewhere else on a Saturday evening. The problem is that IT services are needed around the clock and without downtime. On top of that, there are plenty of nuances: the temporary and the new network infrastructure, transporting hard drives inside old servers, and lifting two-ton storage systems by crane out of the windows of an office where the doors and carpeting were installed after the equipment was brought in.

1. The big preparation

First the customer comes and says: "I need to pick up and move." The customer is usually a large business that has already moved an office or a small server room, so in general they have an idea of the scale and the rough outline of possible problems. The main requirement at this stage is to do everything smoothly, calmly, and without incidents that people will later tell stories about. In other words, as boringly and predictably as possible.

The first stage is agreeing on the technical requirements. This goes differently each time: sometimes the customer asks us to draw up a relocation plan, sometimes they bring a finished one and ask us to check it. The best experience was with a European bank: they came with finished documents, and we still made edits and comments. For example, we suggested moving fewer racks per iteration, so that everything could be installed at the receiving site without an "anthill" of 10 engineers. From experience, 6 people on an installation is fine, while 10 already start getting in each other's way.

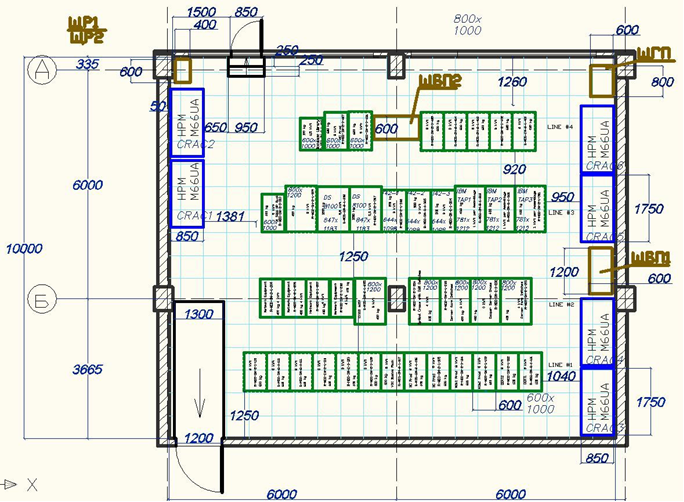

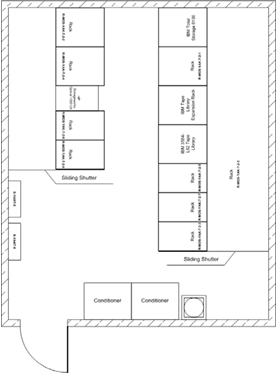

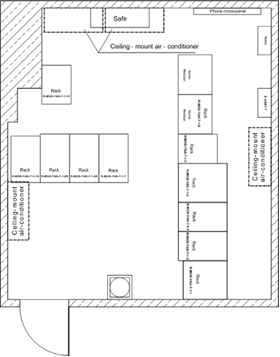

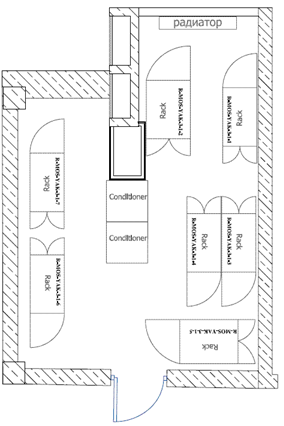

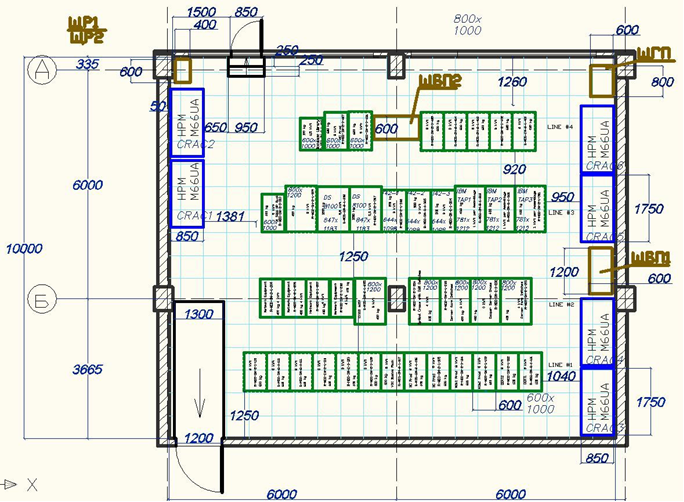

The result of the first stage is a set of diagrams of both data centers before, after, and in the temporary configurations during the iterations of the move; a precise understanding of which equipment will be delivered and how; the exact cabling for each stage; and a list of hardware by serial number showing what travels when, how, and who is responsible for it. Here is an example of merging three server rooms into one.

Before:

Main server room on the 7th floor of the office

Telecommunication room on the 7th floor of the office

Server room on the 3rd floor of the office

After (you have already seen this diagram above):

If there is downtime (sometimes it is acceptable), it is agreed in advance, which is why moves are planned for weekends. That said, the January holidays are rarely very busy for us in terms of work.
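
The hardware list that comes out of this stage can be pictured as a small manifest keyed by serial number. The sketch below is only an illustration under assumed field names (`source_room`, `iteration`, `owner`) and made-up serial numbers; the article does not describe the actual document format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    serial: str        # serial number from the inventory (made up here)
    model: str
    source_room: str   # where the device stands today
    iteration: int     # which iteration of the move carries it
    owner: str         # engineer responsible for the device


def manifest_by_iteration(items):
    """Group items by iteration and reject duplicate serial numbers."""
    seen = set()
    waves = defaultdict(list)
    for item in items:
        if item.serial in seen:
            raise ValueError(f"duplicate serial number: {item.serial}")
        seen.add(item.serial)
        waves[item.iteration].append(item)
    return {n: waves[n] for n in sorted(waves)}


inventory = [
    Item("SN-0001", "rack server", "7th floor, main server room", 1, "engineer A"),
    Item("SN-0002", "core switch", "7th floor, telecom room", 2, "engineer B"),
    Item("SN-0003", "storage shelf", "3rd floor, server room", 1, "engineer A"),
]

manifest = manifest_by_iteration(inventory)
for number, wave in manifest.items():
    print(f"Iteration {number}: " + ", ".join(i.serial for i in wave))
```

Keeping the serial number as the key makes it easy to check at the receiving site that everything loaded in an iteration actually arrived.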

2. Technical preparation

Then we start preparing technically. By your third move you understand that the main parts of the work usually go without failures, and that the greatest attention should go to the small things. For example, a forgotten screwdriver of the right type means at least 15 minutes of idle time, which is highly undesirable. We have a huge checklist of supplies such as adhesive tape, markers, screwdrivers, and so on.

Then we set aside spare parts in the warehouse for the most critical hardware. I should mention here that we have very large service warehouses, so we can find equivalents for almost any system. If a hard disk, a power supply, or a motherboard fails after transportation (these components fail most often), a replacement arrives from the warehouse within an hour. This is especially important for customers moving equipment that is out of warranty.

The result of this stage: everything on our side is ready for the move.

3. Work at the sites

The first iteration begins the evening before the move, at the customer's original site. Using a label printer, we mark every cable and every server so that the correct cabling can be assembled straight away on the "other" side. The labels do not follow the "as is" layout but the new cabling plan, so that the receiving engineers can immediately assemble everything as it should be. This matters because the equipment from one rack at the current site may well be spread across 5 racks at the new site. The most important label is the rack number and the unit position at the new site, so that equipment is not left standing in the corridor but is installed right after unpacking: TIER III data centers often do not allow equipment to sit in the technical corridor for more than 15 minutes. Security staff sometimes do not like to see equipment outside the fenced area either. So each device simply comes out of the box, the rails go in, and it is mounted immediately.

Labeling cannot be done far in advance: on the last day the list of what goes and what stays may well change because of the previous iteration and the work of the people handling the software side. Doing it two or three hours before the move works quite well. Labeled in the evening, dismantled in the morning, picked up by the movers.
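
As an illustration of labeling according to the new plan rather than the current layout, here is a minimal sketch. The plan structure (serial number mapped to new rack, lowest unit, and height in units), the rack names, and the label format are all assumptions made for the example, not the format actually used in these projects.

```python
from collections import defaultdict

# Hypothetical placement plan: serial number -> (new rack, lowest unit, height in U)
new_plan = {
    "SN-0001": ("R03", 10, 2),
    "SN-0002": ("R07", 40, 1),
    "SN-0003": ("R03", 12, 4),
}


def label_for(serial, plan):
    """Build the label text: target rack, target unit range, serial number."""
    rack, unit, height = plan[serial]
    top = unit + height - 1
    position = f"U{unit}" if height == 1 else f"U{unit}-U{top}"
    return f"{rack} {position} | {serial}"


def find_overlaps(plan):
    """Return (rack, unit) slots claimed by more than one device."""
    claimed = defaultdict(list)
    for serial, (rack, unit, height) in plan.items():
        for u in range(unit, unit + height):
            claimed[(rack, u)].append(serial)
    return {slot: serials for slot, serials in claimed.items() if len(serials) > 1}


labels = [label_for(s, new_plan) for s in sorted(new_plan)]
conflicts = find_overlaps(new_plan)

for text in labels:
    print(text)
print("conflicts:", conflicts)
```

Checking the plan for overlapping units before printing is cheap, and it avoids discovering at the receiving rack that two devices were assigned the same slot.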

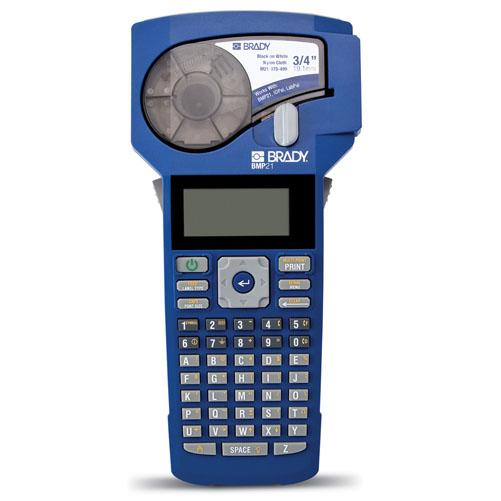

This is the good printer we use to label cables. It takes self-adhesive tape, which is cut by pressing the gray buttons on the sides; there are blades inside, and they need replacing from time to time. The printer is programmable and prints labels in batches. Each cable was labeled at both ends with the name of the port that end plugs into.

Everything is packed first in a large antistatic bag, then in a thick layer of bubble wrap, then in corrugated cardboard. We work with a proven logistics company that has done many moves with us. They know the specifics well: the right number of straps for different racks, and the most even distribution of servers across the truck bed. They know that you cannot stack one server on top of another. They know that servers (oh, the horror!) must not be turned over in transit. They do not know why, but they understand very well that turning one over will get their hands torn off.

The equipment is almost always insured (except for very old hardware that is about to be written off). The insurance even covers the truck rolling over, a road accident, flooding, and the movers dropping the equipment. Knock on wood, I have not had any serious insurance claims so far, although of course it has happened that old HDDs did not survive the trip.
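
Labeling both ends of a cable can be sketched as generating a pair of label texts per link. The label format below (cable number, near port, far port) and the port names are assumptions for illustration; the article only says that each end carries its port name.

```python
from typing import NamedTuple


class Link(NamedTuple):
    cable_id: int
    end_a: str   # port name at one end (hypothetical naming: rack/device/port)
    end_b: str   # port name at the other end


def cable_labels(link):
    """Return the two label texts for a cable, one per end.

    Each label starts with the port that end plugs into, then names the far end.
    """
    label_a = f"#{link.cable_id:03d} {link.end_a} -> {link.end_b}"
    label_b = f"#{link.cable_id:03d} {link.end_b} -> {link.end_a}"
    return label_a, label_b


links = [
    Link(17, "R03/srv-01/eth0", "R07/sw-01/p12"),
    Link(18, "R03/srv-01/eth1", "R07/sw-02/p12"),
]

batch = [text for link in links for text in cable_labels(link)]
for text in batch:
    print(text)
```

Generating the whole batch from the cabling plan matches how a programmable printer is fed, and putting the far end on each label lets an engineer trace a cable without following it by hand.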

4. Next iteration

At the new site, the cabling according to the new scheme is in most cases done by us, while starting the servers and verifying that they work is left to the customer and their engineers, sometimes with our help. We leave the site only once the customer has brought up all the services whose equipment was moved in the current iteration.

Then the next iteration of the move is carried out. If the current one was the last, everything is checked and the move is over.

Special features

One of the longest preparation processes is the new network infrastructure. As a rule, services do not stop during the move, because we set up two Active-Active instances, then disconnect one, transport it, and connect it at the new location. That is, during transportation and installation the system runs without a hot standby, with at most a backup to fall back on. Sometimes only 5 servers need to be moved, but we do it in 3 stages, because they back each other up and fault tolerance must not be lost.

Often the network topology has to be preserved even during the iterations of the move, so as not to reconfigure, for example, everything in the regions that connects to the head data center. Or a new scheme has to be developed and the equipment connected into it straight away, but in such a way that nothing changes for the end user.
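
The "5 servers in 3 stages" situation can be modeled as a small graph problem: servers that back each other up must not travel in the same stage. The sketch below uses greedy graph coloring, which is my choice for the illustration and not necessarily how the team plans stages; the server names and backup pairs are invented. Greedy coloring does not always find the minimum number of stages, but it never puts two conflicting servers together.

```python
def plan_waves(servers, backup_pairs):
    """Assign each server to a wave so that no two servers that back
    each other up travel in the same wave (greedy graph coloring)."""
    conflicts = {s: set() for s in servers}
    for a, b in backup_pairs:
        conflicts[a].add(b)
        conflicts[b].add(a)

    wave_of = {}
    # Place the most constrained servers first; ties are broken by name.
    for server in sorted(servers, key=lambda s: (-len(conflicts[s]), s)):
        used = {wave_of[n] for n in conflicts[server] if n in wave_of}
        wave = 1
        while wave in used:
            wave += 1
        wave_of[server] = wave

    waves = {}
    for server, wave in wave_of.items():
        waves.setdefault(wave, []).append(server)
    return {w: sorted(waves[w]) for w in sorted(waves)}


# Hypothetical example: a three-node cluster plus a redundant pair.
servers = ["app1", "app2", "db1", "db2", "db3"]
backup_pairs = [("db1", "db2"), ("db2", "db3"), ("db1", "db3"), ("app1", "app2")]

waves = plan_waves(servers, backup_pairs)
for number, members in waves.items():
    print(f"Stage {number}: {', '.join(members)}")
```

With three mutually redundant nodes, no plan can use fewer than three stages, which is why a handful of servers can still take several iterations.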

For example, in 2011 two large banks went through a merger: they had to combine databases and processing and harmonize their systems. The office and data center of one bank had to be moved to the premises of the other. This was done within Moscow. The project consisted of 6 stages. There was a lot of equipment physically, and the schedule for shutting systems down had to be agreed. The bank put systems into operation at the new site, we brought more servers, the customer integrated them with the previous ones, and then we delivered the next batch. We moved a batch every week or two, depending on readiness. One notable feature was the new cabling: the bank's sysadmins did something completely magical, taking our diagrams and supplying patch cords of exactly the length each one required. Each patch cord was 20-30 cm longer than the previous one, so no wires were left dangling and nothing had to be coiled in three loops. For this installation, vendor engineers came along to supervise the disconnection of the heavy hardware, because it was under warranty.

There are difficult moves too. For example, I once moved a data center that the customer had decided to relocate from their office to our TIER III colocation facility. The office was moving, and the new one was not designed for equipment. When they had first moved into the old office they had only three servers, so they simply put them in a small room. Then, as usually happens with temporary solutions, over almost 10 years the small room accumulated a lot of equipment: more racks, floor-standing air conditioning, blades... One of the cabinets did not fit into the elevator at all and had to be taken out through a window by crane.

The cases have varied. Once we collected hardware from all over the country, because the customer was consolidating the regions in the central office while rolling out VDI. It turned out cheaper in terms of hardware and support, and it is convenient to administer. There is no need to keep specialists on site for the sake of a single piece of hardware.

From Vladivostok we shipped by air, and the equipment had to be strapped to pallets because of the shaking. Such situations often call for factory packaging: we try to find the "native" box, because it comes with foam inserts and straps. Bubble wrap and corrugated cardboard alone are not enough, since there can be strong jolts on a plane. Usually the customer keeps the packaging, and we also keep a collection of boxes in our warehouse, 2-3 samples of everything that has ever been delivered to us. This is very useful: if a customer has thrown out the boxes from large RISC servers, we are sure to have a couple of the same ones.

Once a customer insisted that we pull out the hard drives and transport them separately from the servers. First we removed the disks and numbered them in slot order, then packed each disk individually and put them in cartons in sets of 8. During this move, 5 disks failed out of 5 half-filled racks. Not all of them survived being removed and reinserted: for disks this is sometimes more stressful than traveling in their own slots. After that we stopped pulling them out, and over the remaining 5 iterations only two disks died. In general there is some shamanism involved, of course: a server can run for 2 years without a restart, and then someone simply powers it off and on, and oops, the HDD no longer works.

Once we had to dismantle a huge metal door frame because the doorway to the data center was too small. We unscrewed it and drilled it out to remove the lower threshold. The tape library would not fit through. When it had been brought in, the door had not been there yet. Tricked us, the devils!

At the first stage you also need to sort out access passes for everyone. We recently had a truly spectacular case: one high-security facility would not admit foreign movers. At one o'clock in the morning the contractor had to swap the crew.

On large moves, the engineering teams work in shifts. For example, many racks move at once. On Friday at 21:00 the backup nodes are shut down, and on Saturday at 9:00 they have to be started again so that they can synchronize with the primary nodes and take over as primary. Working 12 hours straight is hard, so one group dismantles and another installs. The project manager stays and watches everything from start to finish.

Prices

Our hourly rate for an engineer is slightly above the market average (not by an order of magnitude). An experienced customer is usually perfectly happy with this price, because they know what they are paying for. We do not add a markup on the transportation itself: whatever the carrier invoices, we pass on as is. In addition, there is the spare-parts infrastructure for replacements if something goes wrong. We also show very clearly and with justification how much time everything takes: 2 hours for disassembly, 3 hours for assembly, plus transport and idle time. The cost is quoted at the first stage of preparation and does not change, even if there are emergencies. If disks fail, the actual hours will be higher, but we will not charge extra for them.

Sometimes the customer increases the number of hours by 6-12 on their own initiative: "Let's budget for the next day and one specialist in case of unforeseen circumstances; we may need something, for example re-cabling after launch."

Tenders are harder: it is difficult to state the duration of the work before the cabling scheme is fully understood, so we have to quote a range.

That's about it. If you have a question that is not for the comments, write to IShklyaev@croc.ru. At the same address I can give a preliminary estimate for a move (free of charge), so that you have a reference point if you are taking on something critical.

Source: https://habr.com/ru/post/261441/