Environment for Dell VDI Virtual Machines

Today we will talk about VDI (Virtual Desktop Infrastructure), an environment for deploying virtual desktops. It is intended primarily for people who work with large amounts of data. To demonstrate the capabilities of the infrastructure, we deployed a test VDI installation: it runs VMware Horizon View and includes 800 workstations. For maximum data transfer rates, we used Dell Fluid Cache for SAN technology and a Dell Compellent disk array.

Part 1: Defining the task and the system configuration

Why use virtual machines? Simply put, it pays off. To begin with, virtual machines save money on purchasing and maintaining equipment, and they are secure and easy to manage. Even an outdated computer or a mobile device that can no longer handle its original workload can serve as a terminal in a virtual environment. However, this applies to "ordinary" workplaces. Developers, researchers, analysts and other professionals who work with large amounts of data usually use powerful workstations. The Dell VDI platform is designed to virtualize exactly such machines.


Here is what we had to do:

• Design a complete vSphere-based infrastructure for Horizon View VDI that can handle demanding workloads. We planned to use Fluid Cache technology, Compellent disk arrays, PowerEdge servers and Dell Networking switches.

• Measure the performance of the setup at each layer of a VDI stack with heavy disk I/O requirements, which we defined as 80-90 IOPS per VM throughout the working day.

• Measure the performance drop under peak loads (so-called boot and login storms).

The hardware and software platform consisted of:

• VMware Horizon View 6.0,

• VMware vSphere 5.5 hypervisor,

• Dell PowerEdge R720 servers with Dell Express PCIe SSDs installed,

• Dell Networking S4810 switches,

• Dell Compellent SC8000 disk array.

The rest of this article is aimed at system architects who plan to deploy virtualization for high-load user workstations.

System components

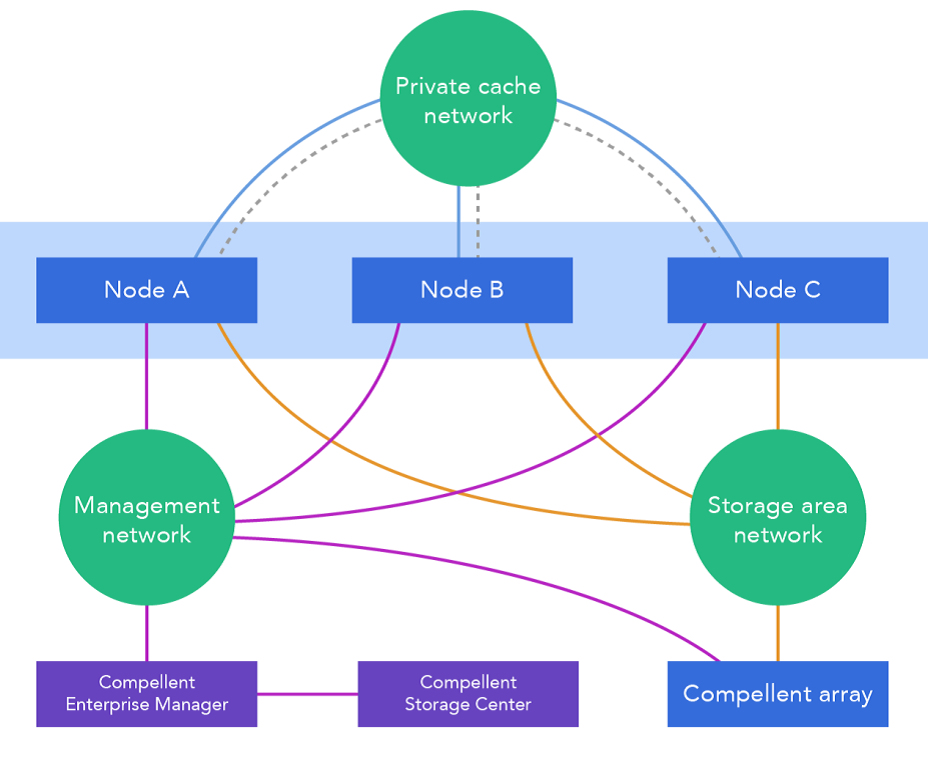

1. Dell Fluid Cache for SAN and VDI

This technology uses Express Flash NVMe PCIe SSDs installed in the servers together with remote direct memory access (RDMA). The resulting additional cache layer acts as a fast intermediary between the disk array and the servers. The architecture is parallel by design, fault-tolerant and ready for load balancing. Combined with a high-speed SAN, it lets you install caching SSDs either in all servers of the cluster or only in some of them; even servers without an Express Flash NVMe PCIe SSD get faster data access. Cached data is transferred over the network via RDMA, whose main advantage is low latency. When the Dell Compellent Enterprise software detects the cache components, it creates and configures a Fluid Cache cluster automatically. Fluid Cache gives the biggest gain when writing data to the external disk array: it increases the number of IOPS (I/O operations per second).
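To illustrate what an extra cache layer between servers and an array does, here is a toy write-back cache in Python. It is our own simplified model, not how Fluid Cache is implemented: the `WriteBackCache` class, its LRU eviction and the dict standing in for the disk array are hypothetical, and networking, RDMA and redundancy are left out entirely. It shows the basic idea: writes are acknowledged from the fast layer and destaged to the array later, while repeated reads are served from cache.

```python
from collections import OrderedDict


class WriteBackCache:
    """Toy write-back cache in front of a slower backing store (hypothetical model)."""

    def __init__(self, backing: dict, capacity: int):
        self.backing = backing          # stands in for the external disk array
        self.capacity = capacity        # maximum number of cached blocks
        self.blocks = OrderedDict()     # block id -> data, least recently used first
        self.dirty = set()              # blocks written but not yet destaged
        self.hits = 0
        self.misses = 0

    def _evict_if_needed(self):
        while len(self.blocks) > self.capacity:
            block, data = self.blocks.popitem(last=False)  # drop least recently used
            if block in self.dirty:
                self.backing[block] = data                  # destage before dropping
                self.dirty.discard(block)

    def write(self, block, data):
        # The write is acknowledged once it lands in the cache (write-back).
        self.blocks[block] = data
        self.blocks.move_to_end(block)
        self.dirty.add(block)
        self._evict_if_needed()

    def read(self, block):
        if block in self.blocks:
            self.hits += 1
            self.blocks.move_to_end(block)
            return self.blocks[block]
        self.misses += 1
        data = self.backing[block]      # slow path: fetch from the array
        self.blocks[block] = data
        self._evict_if_needed()
        return data

    def flush(self):
        # Destage every dirty block to the backing store.
        for block in list(self.dirty):
            self.backing[block] = self.blocks[block]
        self.dirty.clear()
```

In this model a write never waits for the backing store, which is the intuition behind absorbing writes in a fast caching layer.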

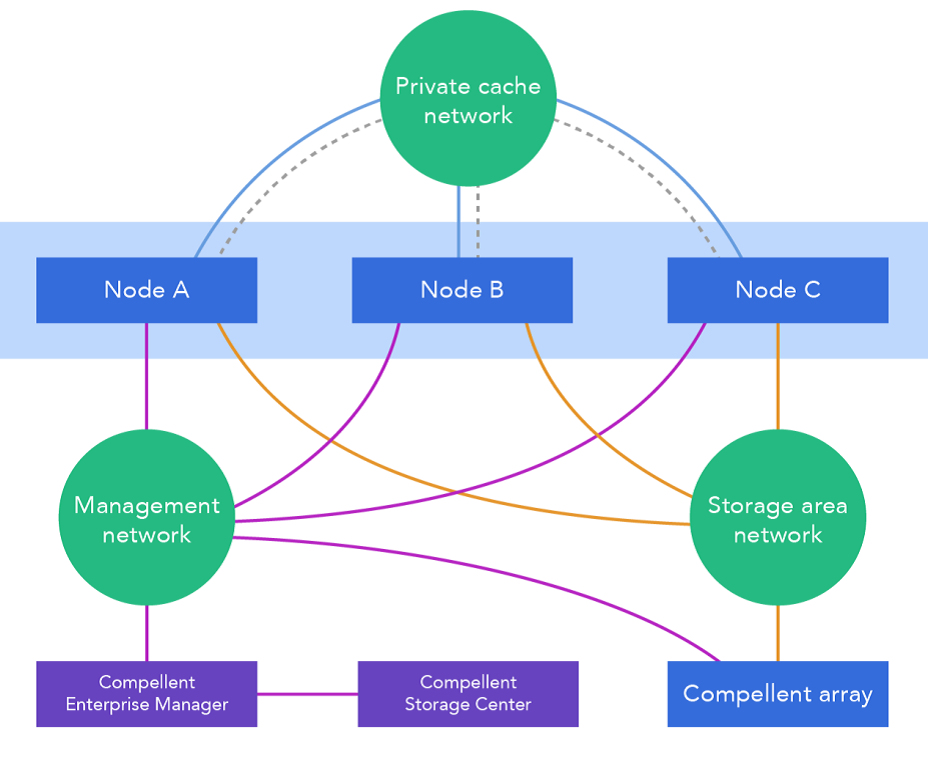

The diagram shows the network architecture of the distributed cache.

2. Solution architecture

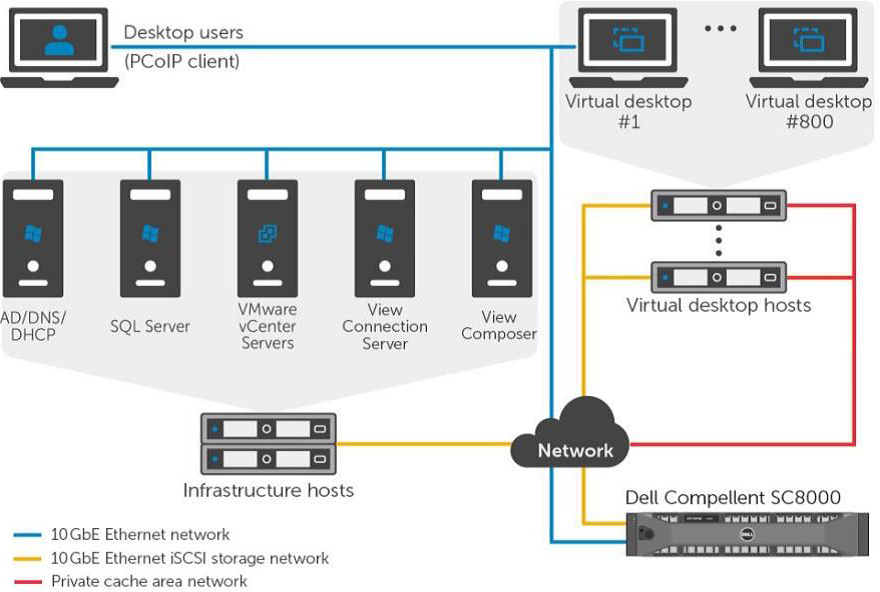

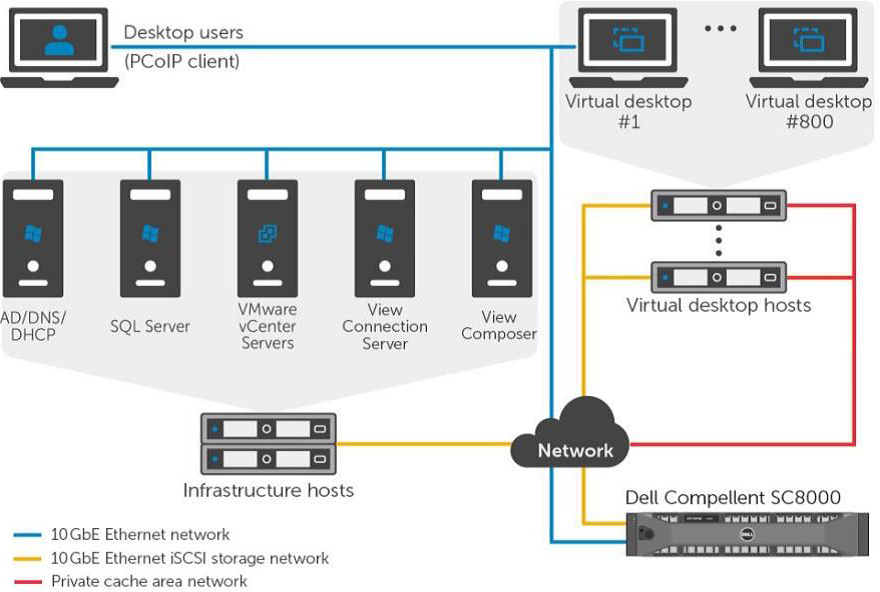

This is what high-speed data exchange between the virtual machines and the external disk array looks like.

3. Software

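Horizon View

Horizon View is a complete VDI solution that makes virtual desktops secure and manageable. Its components operate at the software, network and hardware levels.

| Component | Description |
|---|---|
| Client devices | Devices that give users access to virtual desktops: dedicated terminals (for example, Dell Wyse endpoints), mobile phones, tablets, PCs, etc. |
| Horizon View Connection Server | Software that authenticates the user and then connects them to a terminal server, virtual machine or physical machine. |
| Horizon View Client | The client-side software used to access workstations running Horizon View. |
| Horizon View Agent | A service that handles communication between clients and Horizon View servers. |
| Horizon View Administrator | A web interface for managing the Horizon View infrastructure and its components. |
| vCenter Server | The primary platform for administering, configuring and managing VMware virtual machines. |
| Horizon View Composer | An application that creates pools of virtual machines from a base OS image, which simplifies administration and saves resources. |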

Virtual workplaces

Different kinds of virtual machines call for different approaches. A persistent virtual machine belongs to a particular user and has its own space on disk, holding the OS files, applications and personal data. A non-persistent virtual environment is created from the master OS image when the user connects to the system and is destroyed when the user logs off. This approach saves system resources and makes it possible to create a standardized workplace that is secure and meets all company requirements. It suits routine tasks, but not all employees perform routine tasks.

VMware View Persona Management is useful because it combines the best of both approaches. Windows has long been able to work not only with local user profiles but also with profiles stored on a server. As a result, the user gets a non-persistent virtual machine created from the OS image, while personal data from the user's server-side profile is loaded into it. Such machines, VMware linked clones, are the best choice when the load on disk storage is high.

Horizon View desktop pools

Horizon View groups different types of virtual machines into different pools, which makes them easier to administer. For example, persistent physical and virtual machines go into a Manual pool, while an Automated pool contains disposable machines created from a template. The management interface lets you manage clones with an assigned user profile. Here you can read more about pool setup, configuration and maintenance.

Hypervisor: VMware vSphere 5.5

The VMware vSphere 5.5 platform serves as the foundation for VDI and cloud solutions. It consists of three main layers: virtualization, management and interface. The virtualization layer comprises the infrastructure and system software. The management layer is used to build the virtual environment. The interface layer consists of web-based utilities and vSphere client programs.

Following VMware and Microsoft recommendations, core network services such as NTP, DNS and Active Directory also run inside the virtualized environment.

4. Dell hardware

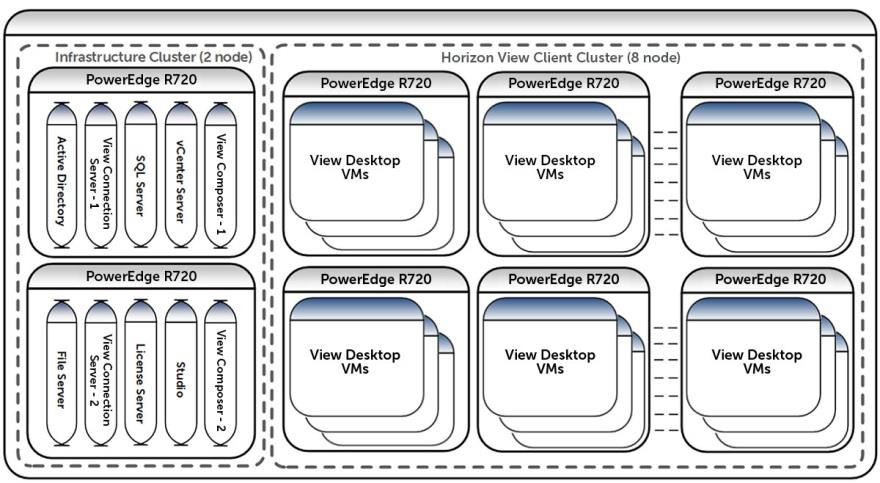

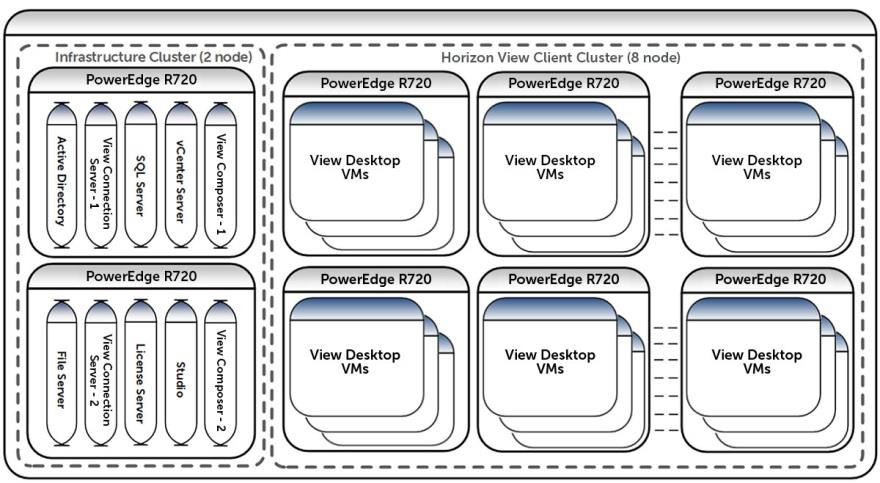

The virtual machines run on an eight-node cluster managed by vSphere. Each server has a PCIe SSD and acts as a Fluid Cache provider. All nodes are connected to three networks: the 10 Gb Ethernet iSCSI SAN, the VDI management network and the Fluid Cache network.

A second, two-node vSphere cluster hosts the virtual machines that run the VDI infrastructure itself: vCenter Server, View Connection Server, View Composer Server, SQL Server, Active Directory, etc. These nodes are also connected to the VDI management network and the iSCSI SAN.

The Dell Compellent SC8000 disk array has a pair of controllers and three types of drives: write-intensive (WI) SSDs, 15K SAS HDDs and 7.2K NL-SAS HDDs. This makes the disk subsystem both fast and capacious.

Switches are stacked in pairs, which provides fault tolerance at the network level. They serve all three networks: the client network for the virtual machines, the management network for the VDI environment and the iSCSI SAN. Bandwidth is partitioned by VLAN, and traffic is prioritized. The optimal bandwidth for these networks is 40 Gb/s, but 10 Gb/s is sufficient.

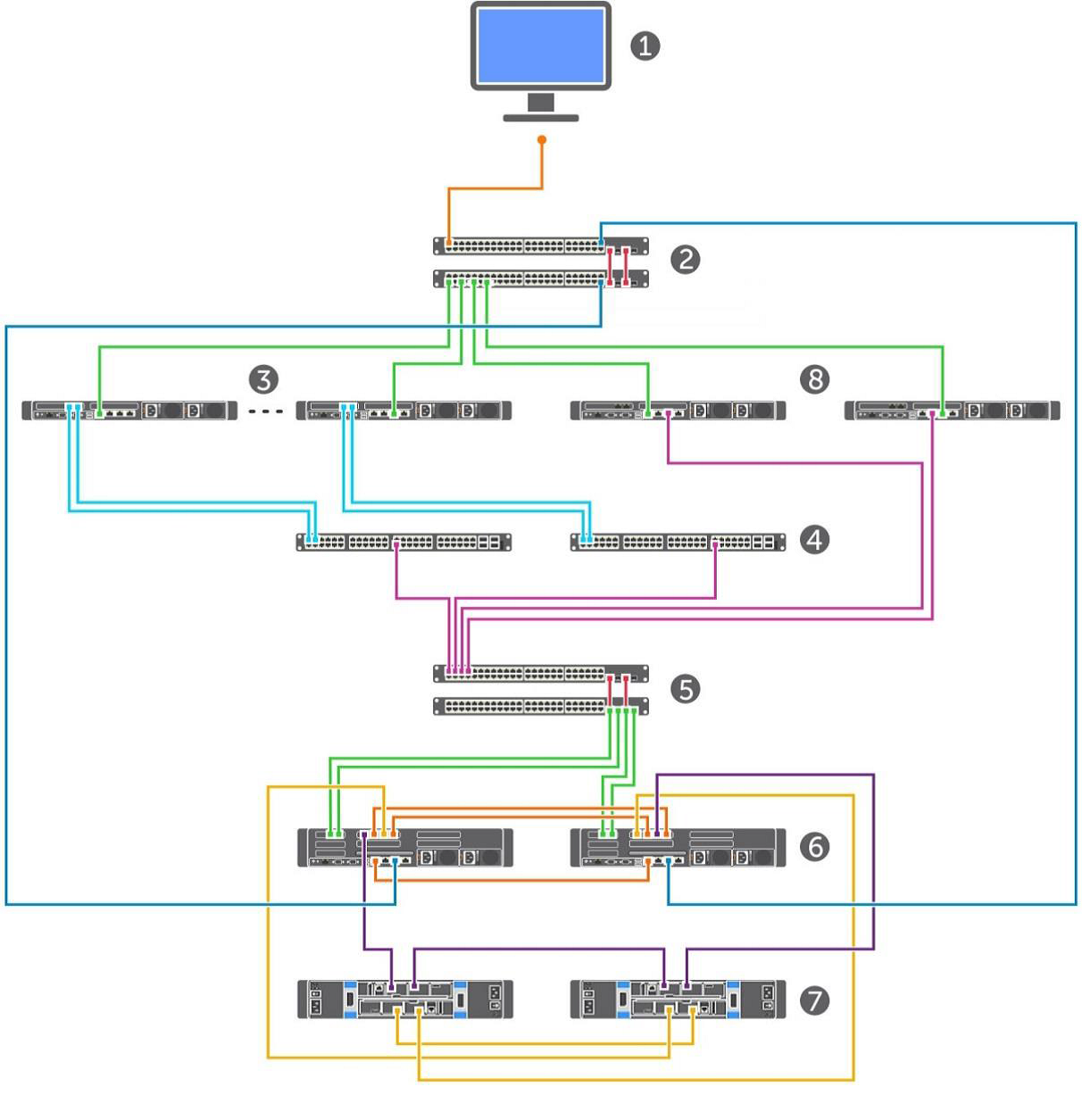

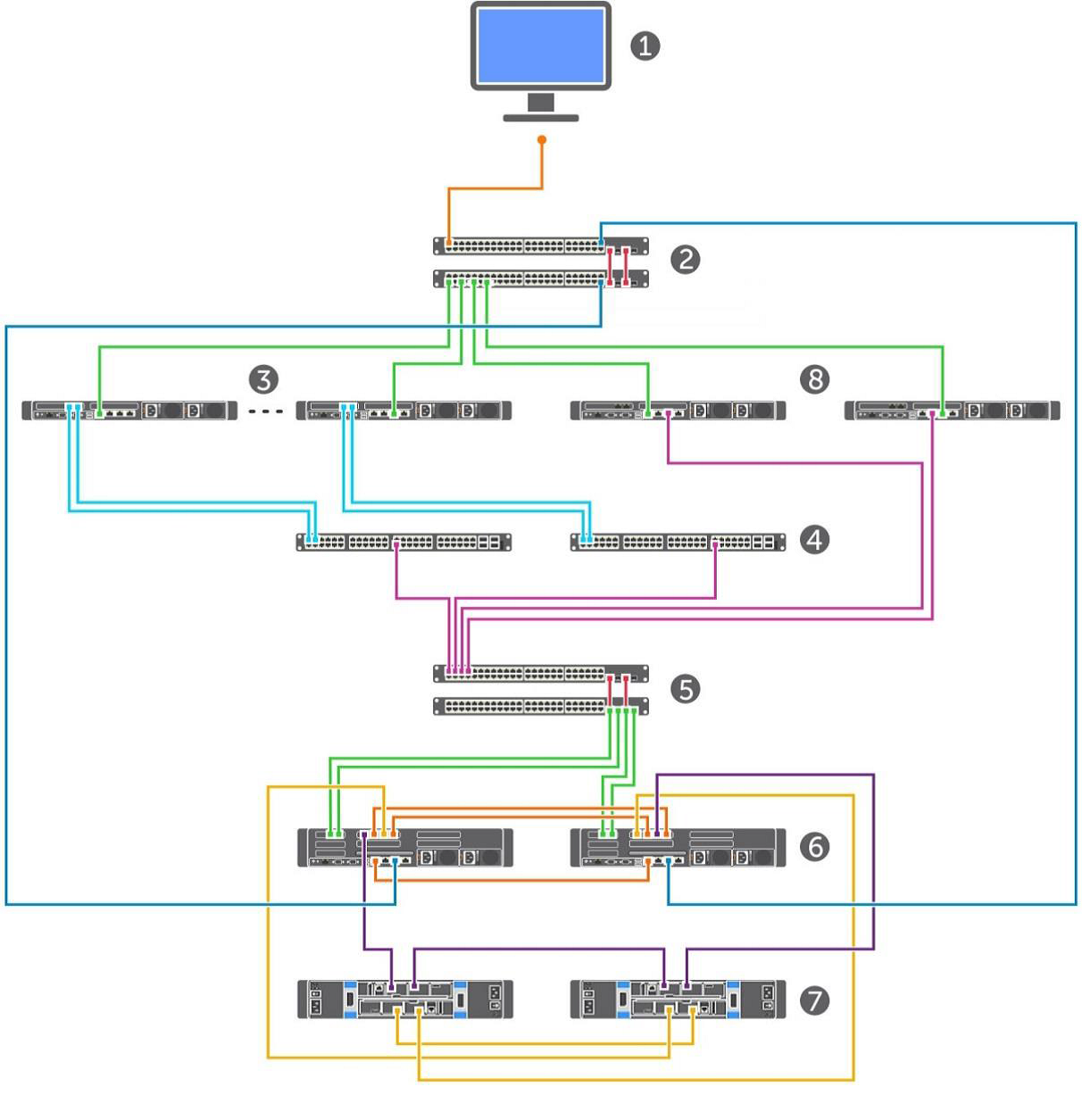

Here is a general installation plan:

1. Management console.

2. VDI and client management networks.

3. vSphere servers for virtual machines.

4. Fluid Cache controllers (2 units).

5. SAN switches (2 units).

6. Disk array controllers (2 units).

7. Disk array shelves (2 units).

8. Servers providing VDI infrastructure.

5. Component configuration

Servers

Each of the eight Dell PowerEdge R720 servers in the VM cluster has:

• two ten-core Intel Xeon E5-2690 v2 CPUs @ 3.00 GHz,

• 256 GB of RAM,

• a 350 GB Dell Express Flash PCIe SSD,

• Mellanox ConnectX-3 network card,

• Broadcom NetXtreme II BCM57810 10 Gigabit Network Controller,

• Quad-port Broadcom NetXtreme BCM5720 gigabit network controller.

On a separate cluster of two Dell PowerEdge R720 servers, we deployed virtual machines for Active Directory, VMware vCenter Server 5.5, Horizon View Connection Server (primary and backup), View Composer server, a file server based on Microsoft Windows Server 2012 R2, and MS SQL Server 2012 R2. Forty Login VSI launcher virtual machines generate the test load.

Network

We used three pairs of switches in the installation: one pair of Force10 S55 (1 Gb) and two pairs of Force10 S4810 (10 Gb). The first pair handled the VDI environment and the management of servers, the disk array, switches, vSphere, etc., with a separate VLAN for each of these components. The second pair, Dell Force10 S4810 10 Gb, carried the Fluid Cache network. The third, identical pair carried the iSCSI network between the clusters and the Compellent SC8000 disk array.

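Compellent SC8000 disk array

| Role | Type | Quantity | Notes |
|---|---|---|---|
| Controller | SC8000 | 2 | Storage Center Operating System (SCOS) 6.5 |
| Shelf | External | 2 | 24 bays for 2.5" disks |
| Ports | 10 Gbps Ethernet | 2 | Front-end host connectivity |
| Ports | SAS 6 Gbps | 4 | Back-end drive connectivity |
| Disks | 400 GB WI SSD | 12 | 11 active, 1 hot spare |
| Disks | 300 GB 15K SAS | 21 | 20 active, 1 hot spare |
| Disks | 4 TB 7.2K NL-SAS | 12 | 11 active, 1 hot spare |

For the infrastructure server VMs, we created a 0.5 TB volume holding Active Directory, SQL Server, vCenter Server, View Connection Server, View Composer and the file server. User profiles got a 2 TB volume (about 2.5 GB per machine). The master OS images reside on a 100 GB mirrored volume. Finally, for the desktop virtual machines, we created eight volumes of 0.5 TB each.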
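The volume sizes above can be checked with simple arithmetic. The sketch below recomputes the profile space per desktop and the raw capacity of each drive tier in the disk table. It assumes decimal units (1 TB = 1000 GB), counts only active drives (hot spares excluded) and ignores RAID overhead, which the article does not specify; the even spread of the 800 desktops over the eight VM volumes is our assumption.

```python
DESKTOPS = 800
PROFILE_VOLUME_GB = 2000          # 2 TB profile volume (decimal units assumed)
VM_VOLUMES = 8
VM_VOLUME_GB = 500                # eight 0.5 TB volumes for desktop VMs

# Active drives per tier from the SC8000 table: (count, size in GB), spares excluded.
TIERS = {
    "400 GB WI SSD": (11, 400),
    "300 GB 15K SAS": (20, 300),
    "4 TB 7.2K NL-SAS": (11, 4000),
}


def profile_space_per_desktop_gb() -> float:
    return PROFILE_VOLUME_GB / DESKTOPS


def vm_space_per_desktop_gb() -> float:
    # Assumes desktops are spread evenly over the VM volumes.
    return VM_VOLUMES * VM_VOLUME_GB / DESKTOPS


def raw_capacity_gb() -> dict:
    # Raw capacity before RAID overhead, which is not given in the article.
    return {tier: count * size for tier, (count, size) in TIERS.items()}


if __name__ == "__main__":
    print(f"profile space per desktop: {profile_space_per_desktop_gb():.1f} GB")
    print(f"VM volume space per desktop: {vm_space_per_desktop_gb():.1f} GB")
    for tier, gb in raw_capacity_gb().items():
        print(f"{tier}: {gb / 1000:.1f} TB raw")
```

The 2.5 GB per-machine figure for profiles falls straight out of 2 TB divided by 800 desktops.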

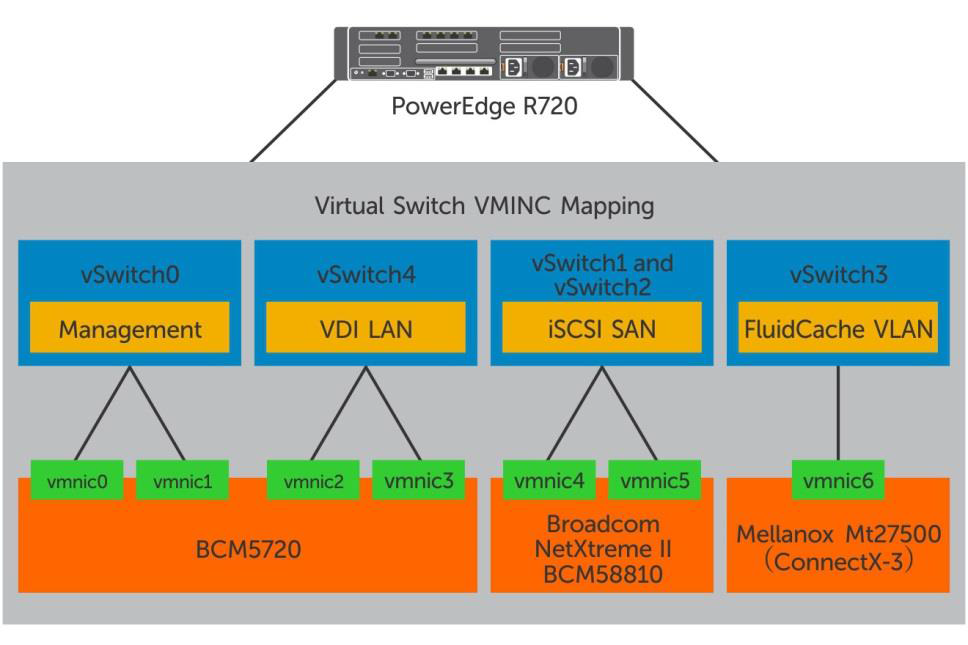

Virtual network

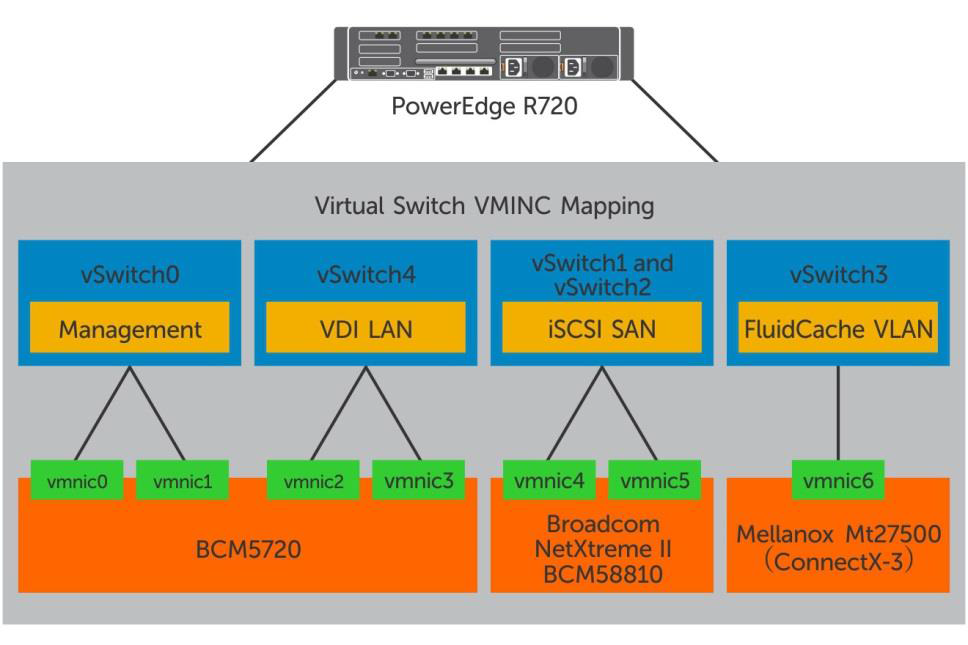

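Each host running the VMware vSphere 5.5 hypervisor has five virtual switches:

| vSwitch | Purpose |
|---|---|
| vSwitch0 | Management network |
| vSwitch1 and vSwitch2 | iSCSI SAN |
| vSwitch3 | Fluid Cache VLAN |
| vSwitch4 | VDI LAN |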

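Horizon View

We configured it following this guide.

| Role | Quantity | Type | Memory | vCPUs |
|---|---|---|---|---|
| Horizon View Connection Server | 2 | VM | 16 GB | 8 |
| View Composer Server | 1 | VM | 8 GB | 8 |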

Virtual machines

We prepared the master Windows 7 images following the VMware and Login VSI guides. Here is the resulting configuration:

• VMware Virtual Hardware v8,

• 2 virtual CPUs,

• 3 GB RAM, 1.5 GB reserved,

• 25 GB virtual disk,

• one virtual network adapter connected to a VDI network,

• Windows 7 64-bit.
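With 100 desktops per host (the placement used in the tests) and the VM settings above, per-host memory and CPU commitment can be estimated as below. This is a back-of-the-envelope sketch under our own assumptions: it ignores hypervisor and per-VM memory overhead, and it counts only the 20 physical cores of the two ten-core CPUs, without Hyper-Threading.

```python
VMS_PER_HOST = 100
VM_RAM_GB = 3.0
VM_RAM_RESERVED_GB = 1.5
VM_VCPUS = 2

HOST_RAM_GB = 256
HOST_CORES = 2 * 10               # two ten-core Xeon E5-2690 v2 CPUs


def host_commitment() -> dict:
    configured_ram = VMS_PER_HOST * VM_RAM_GB
    reserved_ram = VMS_PER_HOST * VM_RAM_RESERVED_GB
    vcpus = VMS_PER_HOST * VM_VCPUS
    return {
        "configured_ram_gb": configured_ram,
        "reserved_ram_gb": reserved_ram,
        # Above 1.0 means more RAM is configured than physically installed.
        "ram_overcommit": configured_ram / HOST_RAM_GB,
        # Reservations must always fit in physical RAM.
        "reservation_fits": reserved_ram <= HOST_RAM_GB,
        "vcpu_per_core": vcpus / HOST_CORES,
    }


if __name__ == "__main__":
    for key, value in host_commitment().items():
        print(f"{key}: {value}")
```

Configured RAM (300 GB) slightly exceeds the 256 GB installed per host, an overcommit of about 1.17, which is one reason memory ballooning is among the monitored metrics.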

The VMware Horizon with View Optimization Guide for Windows 7 and Windows 8 can be downloaded here.

Part 2: Testing, results and conclusions

Test methodology and component configuration

Next, we checked how the system behaves under different virtual machine usage scenarios. We wanted to make sure that all 800 virtual machines ran without noticeable delays while exchanging data with the disk subsystem at around 80-90 IOPS throughout the day. We also tested the installation under a typical office load: during the working day, while virtual machines were being created, and while users logged on to the system, for example at the start of the working day and after the lunch break. We call the latter conditions boot and login storms.

For testing, we used two utilities: Login VSI 4.0, a comprehensive tool that loads the virtual machines and analyzes the results, and Iometer, which generates and measures load on the disk subsystem.

In order to create a “standard” load for an average office machine, we:

• Launched up to five applications simultaneously.

• Used Microsoft Internet Explorer, Microsoft Word, Microsoft Excel, Microsoft PowerPoint, a PDF reader, 7-Zip, a movie player and FreeMind.

• Repeated the workload loop approximately every 48 minutes, with a 2-minute pause.

• Measured the response time to user actions every 3-4 minutes.

• Set the typing speed to 160 ms per character.
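The workload parameters translate into a few derived figures. The sketch assumes the 2-minute pause follows each 48-minute loop and that response times are sampled only while a loop is running; the constant and function names are ours.

```python
LOOP_MINUTES = 48              # length of one workload loop
PAUSE_MINUTES = 2              # pause between loops
MEASURE_EVERY_MINUTES = (3, 4) # response time sampled every 3-4 minutes
TYPING_MS_PER_CHAR = 160


def cycle_minutes() -> int:
    return LOOP_MINUTES + PAUSE_MINUTES


def measurements_per_loop() -> tuple[int, int]:
    fastest, slowest = MEASURE_EVERY_MINUTES
    # Sampling every 4 minutes gives the fewest measurements, every 3 the most.
    return LOOP_MINUTES // slowest, LOOP_MINUTES // fastest


def typing_chars_per_second() -> float:
    return 1000 / TYPING_MS_PER_CHAR


if __name__ == "__main__":
    print("cycle length, min:", cycle_minutes())
    print("measurements per loop:", measurements_per_loop())
    print("typing speed, chars/s:", typing_chars_per_second())
```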

We simulated the high load with the same test, additionally launching Iometer in each virtual machine to increase disk I/O. The standard test generated 8-10 IOPS per machine; under high load, this figure rose to 80-90 IOPS. We created the base OS images in the Horizon View pool with standard settings and the Login VSI software set, plus Iometer, which was launched from the logon script.
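Multiplying the per-machine targets by the 800 desktops gives the aggregate load the platform has to absorb. A minimal sketch; the profile names are our own labels.

```python
DESKTOPS = 800

# Per-desktop IOPS ranges stated for the two workload profiles.
PROFILES = {
    "standard": (8, 10),
    "high": (80, 90),
}


def aggregate_range(profile: str, desktops: int = DESKTOPS) -> tuple[int, int]:
    low, high = PROFILES[profile]
    return desktops * low, desktops * high


if __name__ == "__main__":
    for name in PROFILES:
        low, high = aggregate_range(name)
        print(f"{name}: {low:,}-{high:,} IOPS for {DESKTOPS} desktops")
```

The high-load range tops out at 72,000 IOPS, the same peak reported for the heavy-load test.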

To monitor work and record performance under load, we used the following tools:

• Dell Compellent Enterprise Manager for evaluating the performance of Fluid Cache and Compellent SC8000 storage array,

• VMware vCenter statistics for monitoring vSphere,

• Login VSI Analyzer for user environment assessment.

The performance criterion for the disk subsystem is its response time: if it does not exceed 10 ms, everything is in order.

Hypervisor-level load criteria:

• The load on any CPU in the cluster must not exceed 85%.

• Memory ballooning (the hypervisor reclaiming guest memory through the balloon driver) must be minimal.

• The load on each network interface must not exceed 90%.

• TCP/IP packet retransmissions must not exceed 0.5%.
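These acceptance criteria can be expressed as a simple threshold check. This is a hypothetical helper, not part of Login VSI or vCenter: the `Sample` fields are our own names for the metrics, and memory ballooning is left out because the text gives no numeric limit for it.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    storage_latency_ms: float
    max_cpu_pct: float
    max_nic_pct: float
    tcp_retransmit_pct: float


# Upper limits taken from the criteria above; a value above the limit fails.
LIMITS = {
    "storage_latency_ms": 10.0,
    "max_cpu_pct": 85.0,
    "max_nic_pct": 90.0,
    "tcp_retransmit_pct": 0.5,
}


def violations(sample: Sample) -> list[str]:
    """Return the names of all metrics that exceed their limit."""
    return [name for name, limit in LIMITS.items() if getattr(sample, name) > limit]
```

Feeding it the measured values for a test run returns an empty list when every criterion is met.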

The maximum number of virtual machine sessions the system can sustain was determined with the VSImax method.

A single set of virtual machines was created from the base Windows 7 image using the VMware Horizon View Administrator interface.

The settings were as follows:

• Automated desktop pool, with machines provisioned on demand.

• Each user is assigned a random machine.

• View Storage Accelerator is enabled for all machines, with a 7-day regeneration interval.

• The 800 virtual machines are distributed across 8 physical hosts (100 per host).

• The master OS images reside on separate volumes.

Test results and analysis

Scenarios were as follows:

1. Boot storm: the maximum load on the storage system, when many users "power on" at once, that is, many virtual machines start at the same time.

2. Login storm: another heavy load on the disk subsystem, when users log on at the start of the working day or after a break. For this test, we powered on the virtual machines and logged users in over a 20-minute window without any further activity.

3. Standard load: after users logged on, the standard office workload script was launched. The system's response to user actions was tracked with the VSImax (Dynamic) metric from Login VSI.

4. High load: similar to the standard load, but with 10 times more IOPS.

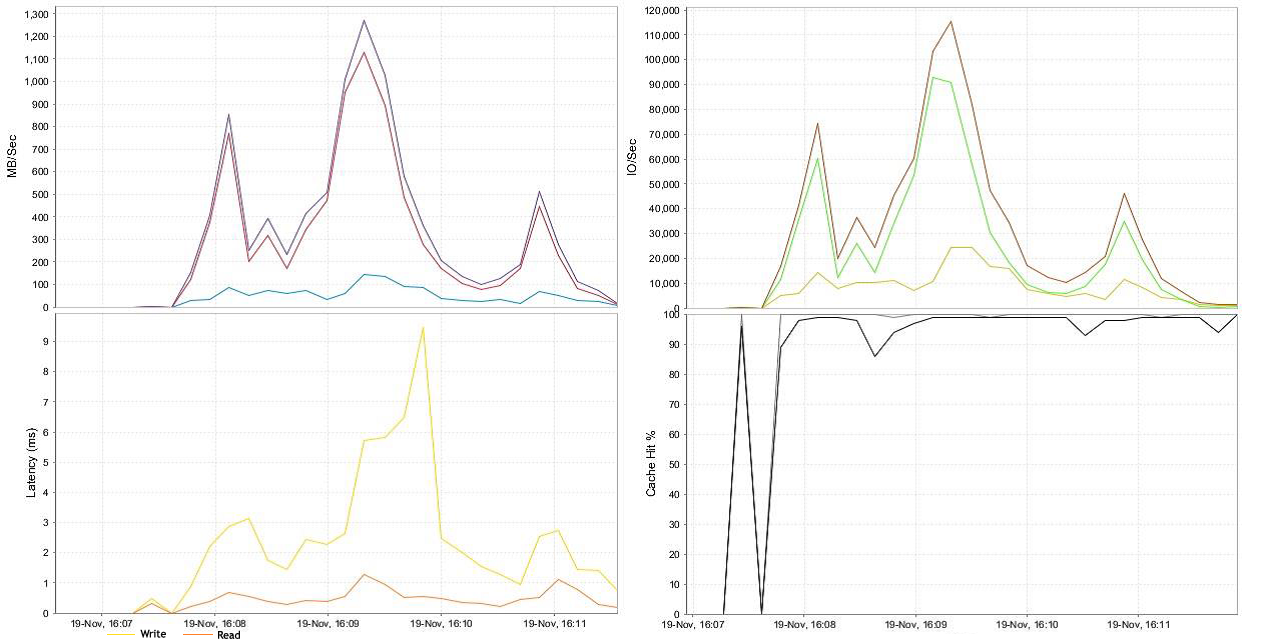

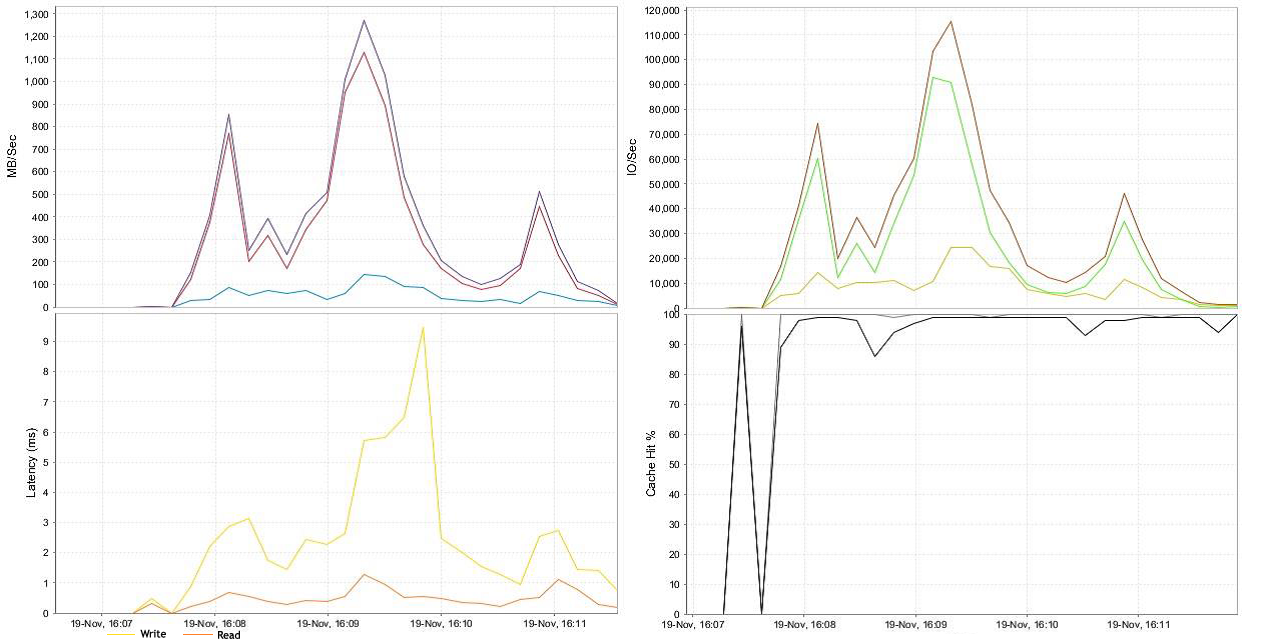

Boot storm

Note: here and below, latency values are as reported by the storage system.

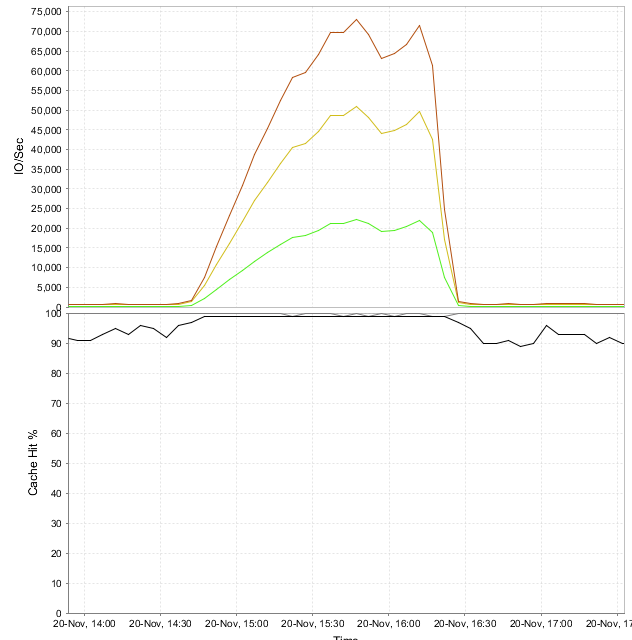

During the test, the 800 machines generated an average of 115,000 IOPS, mostly writes. Four minutes later, all machines were available for work.

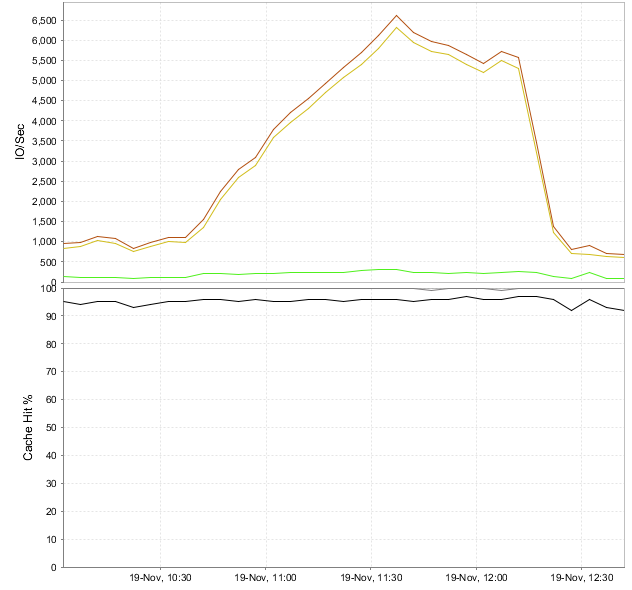

Login Storm and Standard Load

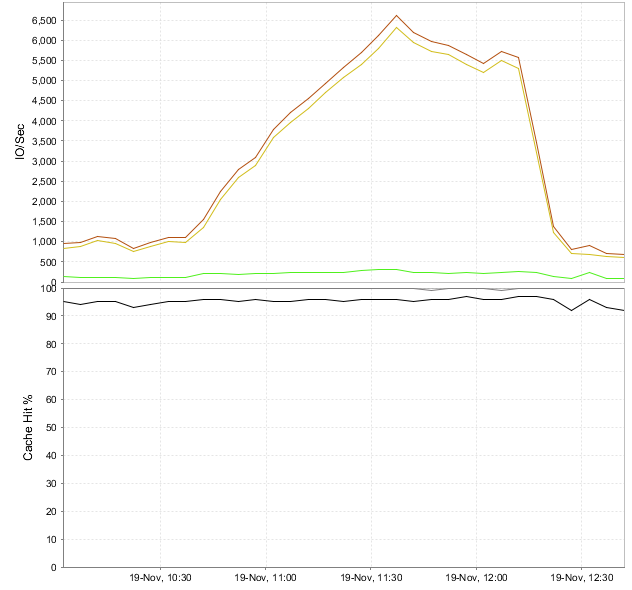

We configured Login VSI to log on to the 800 virtual machines about an hour after they had booted. Peak IOPS did not exceed 6,500 for the entire platform (8-10 per machine). The share of writes was higher than during machine startup and later work, because user profiles were being created and OS services and applications were starting. After a stabilization period, the OS caches the main user and application data in RAM, and the load on the disk subsystem drops. At the Fluid Cache level, at the 6,500 IOPS peak, 100% of writes and about 95% of reads were served from cache. A 98 Mbps data stream resulted in a disk access latency of only 2 ms.

Performance at the physical level

We collected performance statistics for the physical components of the vSphere system with VMware vCenter Server. Throughout the test, the load on processors, memory and all layers of the storage system stayed roughly constant. The results matched what we expected when setting the task:

• CPU load no more than 85%.

• Active disk space usage no more than 80% while virtual machines were being created and no more than 60% during the tests.

• Memory ballooning minimal (at times not used at all).

• Network load about 45%, including the iSCSI SAN, VDI LAN, management LAN and vMotion LAN.

• Average read/write latency on the storage adapters: 2 ms.

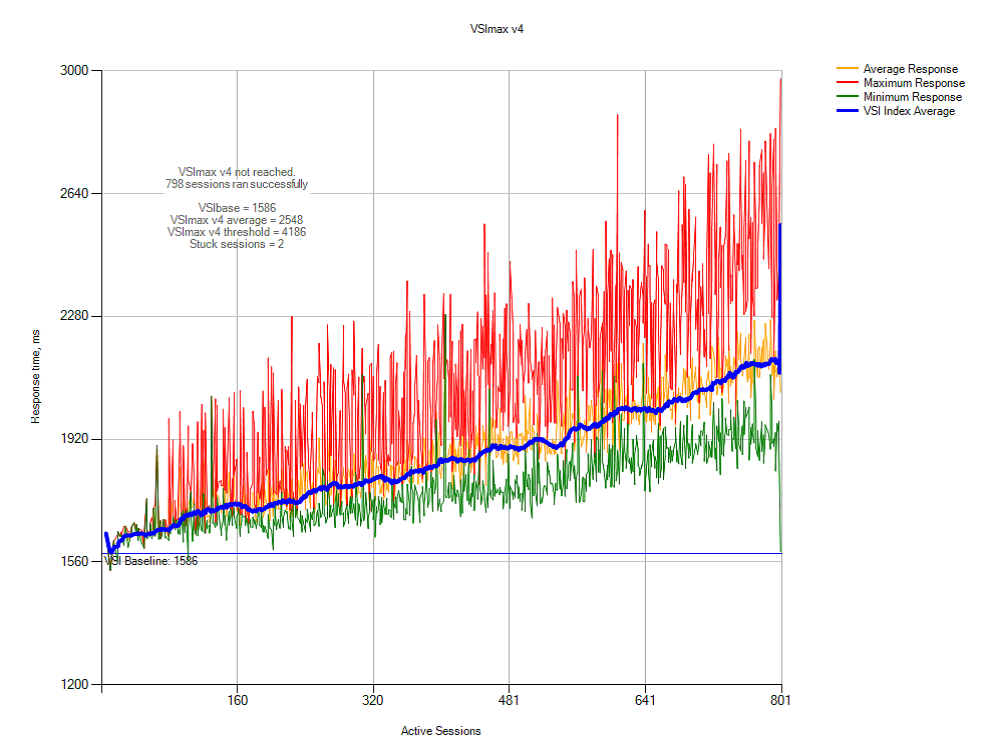

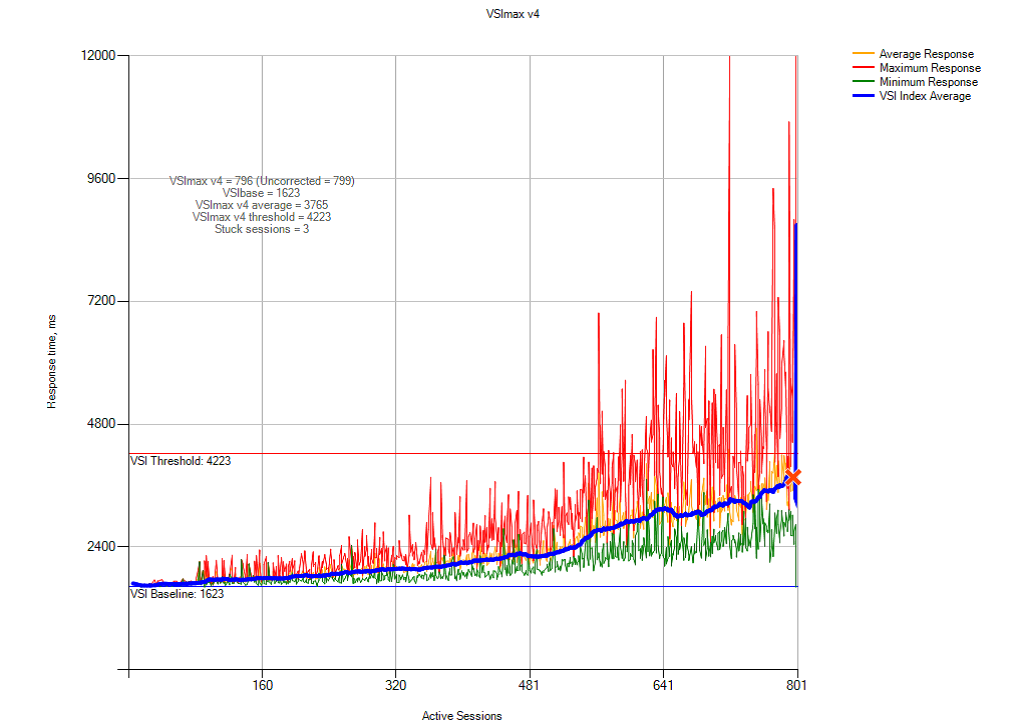

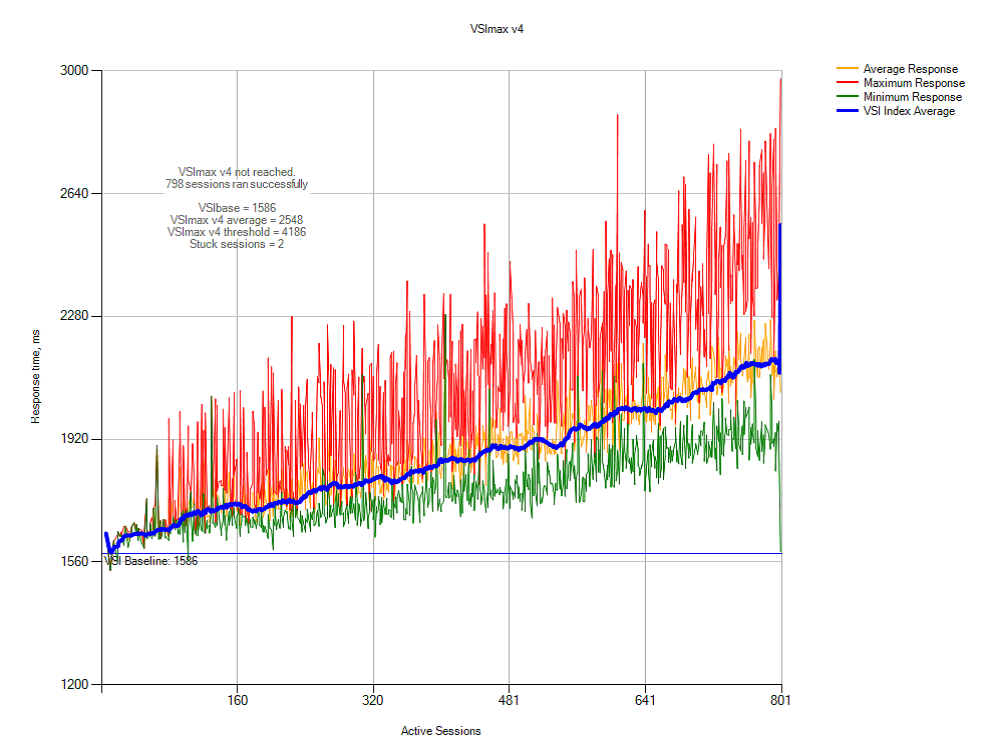

This graph shows system performance from the user's point of view as a function of the number of machines running simultaneously.

In practice, this means that our system can safely host workplaces for thousands of users, with no OS delays even when everyone is actively working at the same time.

Conclusions from the three tests of the typical user environment:

• The VDI test system based on Dell Fluid Cache for SAN handled the task perfectly: 800 users can work simultaneously.

• Writes account for 98% of operations and reads for only 2%.

• None of the system resources was loaded beyond our expectations.

• The login storm, spread over 20 minutes, did not exceed 8-10 IOPS per machine and did not cause disk access latency above 2 ms.

Heavy load

Iometer settings for a heavy I/O load: 20 KB block size, 80% writes, 75% of which bypass the cache, and a 25 ms burst delay.
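For a desktop running this heavy Iometer profile, the 80/20 write/read mix splits the per-desktop target as follows. The sketch uses integer percentages to keep the arithmetic exact; it does not model the cache-bypass share or the burst delay, and the names are ours.

```python
DESKTOPS = 800
IOPS_PER_DESKTOP = 90      # upper end of the heavy-load target
WRITE_PCT = 80             # Iometer access spec: 80% writes


def split_iops(iops: int, write_pct: int = WRITE_PCT) -> tuple[int, int]:
    """Split an IOPS figure into (writes, reads) using an integer write percentage."""
    writes = iops * write_pct // 100
    return writes, iops - writes


if __name__ == "__main__":
    per_desktop = split_iops(IOPS_PER_DESKTOP)
    total = split_iops(IOPS_PER_DESKTOP * DESKTOPS)
    print(f"per desktop: {per_desktop[0]} writes/s, {per_desktop[1]} reads/s")
    print(f"all desktops: {total[0]:,} writes/s, {total[1]:,} reads/s")
```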

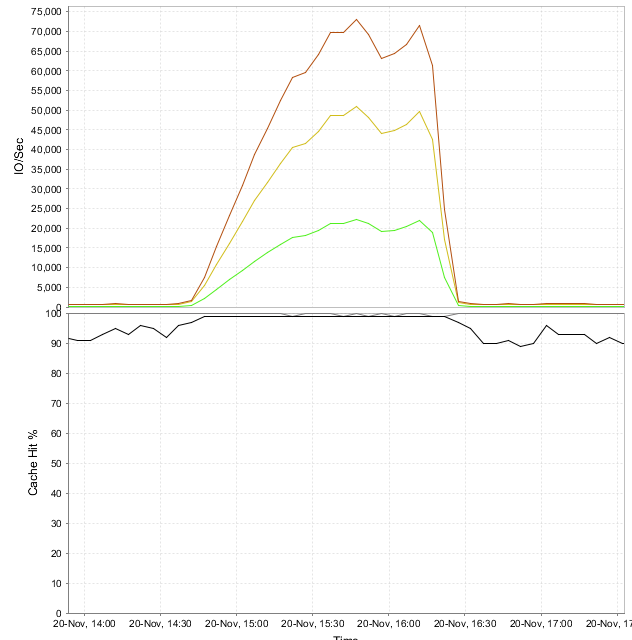

At the Fluid Cache level, peak I/O intensity reached 72,000 IOPS (90 IOPS per virtual machine). 100% of writes and 95% of reads were cached. At a peak storage throughput of 900 Mbps, the maximum latency was 7.5 ms.
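Dividing the aggregate figures from the three storage tests by the number of desktops puts them on a per-machine scale. A minimal sketch; the scenario labels are ours.

```python
DESKTOPS = 800

# Aggregate IOPS reported in the boot storm, login storm and heavy-load tests.
MEASURED = {
    "boot storm (average)": 115_000,
    "login storm (peak)": 6_500,
    "heavy load (peak)": 72_000,
}


def per_desktop(iops: int, desktops: int = DESKTOPS) -> float:
    return iops / desktops


if __name__ == "__main__":
    for name, iops in MEASURED.items():
        print(f"{name}: {per_desktop(iops):.2f} IOPS per desktop")
```

The boot storm works out to roughly 144 IOPS per desktop, well above even the 80-90 IOPS heavy-load target, which fits the later recommendation to keep some desktops powered on in advance.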

The load on the physical layer of the installation was the same as in the previous test. Storage, CPUs and network adapters performed as planned.

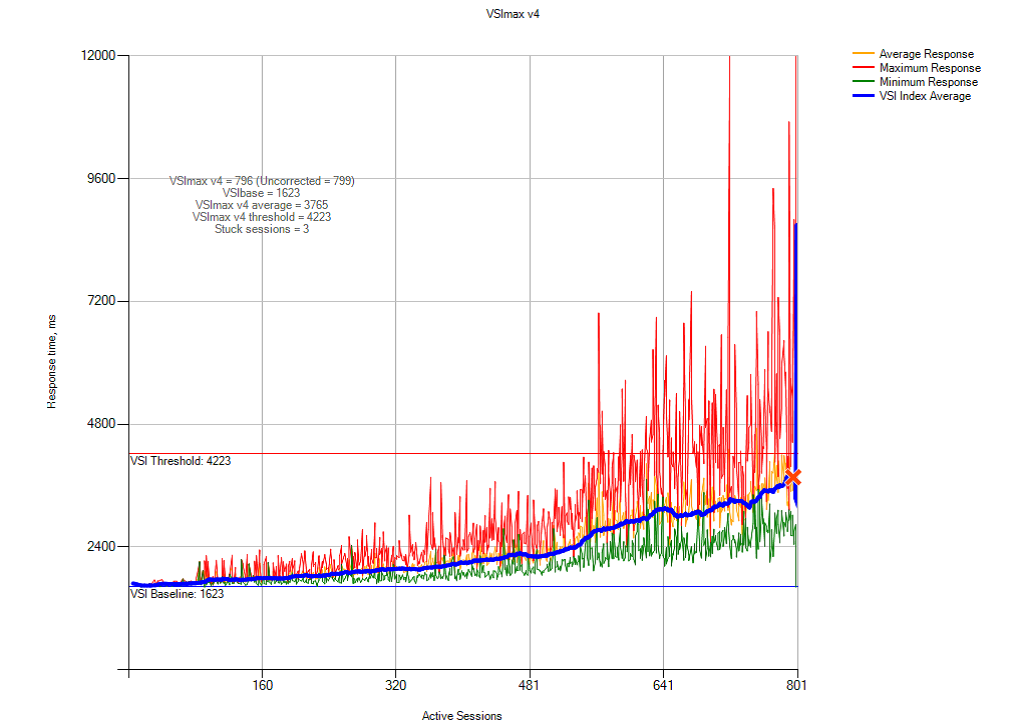

This graph shows the system's response to user actions as a function of the number of machines running simultaneously.

Our findings from testing under high load:

• Dell VDI based on Dell Fluid Cache for SAN can run 800 virtual machines simultaneously, all with intensive disk I/O.

• Writes account for 98% of operations and reads for 2%.

• None of the system resources of the vSphere host servers was maxed out; the specified limits were not exceeded.

• The high load on the virtual machines produced a latency of 7.5 ms at a total rate of 72,000 IOPS (90 IOPS for each of the 800 machines). This is still within an acceptable OS response time to user actions.

Recommendations

VDI environment with Dell Fluid Cache for SAN:

• If you use roaming user profiles and keep user data in redirected folders, the I/O load will be lower. This matters at peak times, when many virtual machines are created and many users log on at once. The same applies to network share folders. This approach saves system resources and simplifies administration. We recommend allocating a separate high-speed disk array for user data.

• Given the high system load while virtual machines are being created, we suggest keeping several virtual machines ready in advance. In VMware Horizon View, you can configure the number of desktops that are always available to users.

• We recommend configuring the master Windows 7 and 8 images according to this guide. Disabling unnecessary system services speeds up logon and screen rendering and improves overall performance.

Installation and configuration:

• Follow the recommendations of VMware and Dell when installing and configuring vSphere.

• Create separate virtual switches to separate iSCSI SAN, VDI, vMotion, and control network traffic.

• For fault tolerance, assign each network path to at least two physical adapters.

• Reserve at least 10% of the physical drives to improve service availability.

Network level:

• Use at least two physical network adapters under the VDI network on each of the vSphere servers.

• When you use VLANs to separate traffic on the same physical network, place the VDI, vMotion and management logical networks on different virtual networks.

• At the physical layer, separate the iSCSI SAN and Fluid Cache networks. This improves overall system performance.

• By default, vSphere virtual switches are limited to 120 ports. If the machines need more network connections, you can raise the limit, but the host must be rebooted after the change.

• On the iSCSI SAN switches, disable the Spanning Tree Protocol. Enable PortFast on ports connected to servers and storage adapters. If the switches and adapters support jumbo frames and flow control, enable them on all components of the iSCSI network. Here you can read more about this.
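The network recommendations can be turned into a quick per-host sanity check. Everything here is hypothetical: `HostNetwork` and `check` are our own names, the input data would have to be collected by hand or from your own inventory tooling, and the 9000-byte MTU is the commonly used jumbo-frame size rather than a value given in the text. With 100 desktops per host and one virtual NIC each, the VDI vSwitch needs about 100 ports, which fits within the 120-port default.

```python
from dataclasses import dataclass, field

DEFAULT_VSWITCH_PORTS = 120   # default vSwitch port limit cited above
JUMBO_MTU = 9000              # common jumbo-frame MTU (assumption)


@dataclass
class HostNetwork:
    vnics_on_vdi_switch: int                 # VM network adapters on the VDI vSwitch
    uplinks: dict[str, int]                  # network name -> physical NICs assigned
    iscsi_path_mtus: list[int] = field(default_factory=list)  # MTU of every hop


def check(host: HostNetwork) -> list[str]:
    """Return a list of problems found; an empty list means the host passes."""
    problems = []
    if host.vnics_on_vdi_switch > DEFAULT_VSWITCH_PORTS:
        problems.append("vSwitch port limit exceeded: raise it and reboot the host")
    for net, count in host.uplinks.items():
        if count < 2:
            problems.append(f"{net}: fewer than two physical adapters")
    # Jumbo frames only help if every hop on the iSCSI path supports them.
    if any(mtu < JUMBO_MTU for mtu in host.iscsi_path_mtus):
        problems.append("jumbo frames not enabled end to end on the iSCSI path")
    return problems
```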

Storage System:

• Dell Compellent SC storage arrays work very well with Fluid Cache technology. They are a high-performance and relatively inexpensive choice for a Dell VDI environment.

• Fluid Cache improves storage performance for data exchange between server and disk array. With Storage Center 6.5 software, the compute cluster, network and storage system work to their full potential, something a standalone server cannot match.

• We recommend using separate disk arrays for master OS images and user virtual machines. This simplifies disk administration, performance measurement and bottleneck tracking, and provides a good basis for further scaling of the system.

• We also recommend allocating separate disk arrays for roaming user profiles and shared network folders, as well as for infrastructure servers and shared services.

Part 1: Formulate the task and describe the system configuration

Why use virtual machines? Simply, it is profitable. Let's start with the fact that "virtuals" allow you to save on the purchase and maintenance of equipment, they are safe and easy to manage. Even an outdated computer and a mobile device that is no longer able to perform its basic functions can become a terminal in a virtual environment - sheer advantages! However, this applies to "normal" jobs. Developers, researchers, analysts and other professionals working with large amounts of data, usually use powerful workstations. The Dell VDI platform is designed to virtualize just such machines.

')

Here is what we had to do:

• Develop a holistic infrastructure for Horizon View and VDI based on vSphere for “hard” tasks. We were going to use Fluid Cache technologies, Compellent disk arrays, PowerEdge servers and Dell Networking switches.

• Determine the performance of the assembly at each level of the implementation of the VDI stack with high requirements for the exchange with the disk subsystem. We considered it as 80-90 IOPS per 1 VM during the whole working process.

• Determine the drop in performance at peak loads (the so-called Boot and Login Storms).

The hardware and software platform consisted of:

• VMware Horizon View 6.0,

• VMware vSphere 5.5 hypervisor,

• Dell PowerEdge R720 servers with Dell Express PCIe SSDs installed,

• Dell Networking S4810 switches,

• Dell Compellent SC8000 disk array.

What is written below will be interesting for system architects who plan to implement virtualization technologies for high-load user workstations.

System components

1. Dell Fluid Cache for SAN and VDI

This technology uses the Express Flash NVMe PCIe SSD installed in servers and provides remote memory access (RDMA). An additional cache level is a fast intermediary between the disk array and servers. Features of the architecture is that it is initially parallelized, resistant to failures and ready for load distribution, and paired with a high-speed SAN allows you to install disks for caching at least in all servers of the assembly, at least in some of them. Faster data sharing will be even those machines that do not contain Flash NVMe PCIe SSD. Cached data is transmitted to the network using the RDMA protocol, the advantage of which, as is well known, is in minimal delays. As Dell Compellent Enterprise software detects cache components, a Fluid Cache cluster is created and configured automatically in it. The fastest way when using Fluid Cache is to write data to an external disk array - this increases the number of IOPS (I / O operations per second).

The diagram shows the network architecture of the distributed cache.

2. Solution architecture

This is how high-speed data exchange between virtual machines and an external disk array looks like:

3. Programs

Horizon view

Horizon view is a complete VDI solution that makes virtual desktops safe and manageable. Its components operate at the level of software, network environment, and hardware.

| Component | Explanation |

| Client devices | Personal devices that give the user access to virtual desktops: specialized terminals (for example, Dell Wyse end points), mobile phones, tablets, PCs, etc. |

| Horizon View Connection Server | A software product that authorizes a user in the system and then redirects him to the terminal, virtual or physical machine. |

| Horizon View Client | Client part of the program. It is used to access workstations running Horizon View. |

| Horizon view agent | A service that provides communication between clients and Horizon View servers. |

| Horizon View Administrator | A web interface to manage Horizon View’s infrastructure and components. |

| vCenter Server | The primary platform for administering, configuring, and managing arrays of VMware virtual machines. |

| Horizon View Composer | Application for creating a pool of virtual machines based on the base OS image. With it, it is easier to administer the system and you can save resources. |

Virtual Jobs

It is necessary to work differently with different virtual machines. A permanent “virtualka” of a particular user has its place on the disk, where the OS files, programs, and personal data are located. A temporary virtual environment is created on the basis of the original OS image when the user connects to the system (respectively, it is destroyed upon exit). This scheme of work saves system resources and allows you to create a unified workplace - safe and meets all the requirements of the company. This is suitable for typical tasks, but not all employees perform typical tasks.

VMware View Personal profile management is good because it combines the best of both approaches. Windows has long been able to work not only with local user profiles, but also those stored on the server. As a result, the user receives a temporary virtual machine from the OS image, but personal data from his profile on the server is loaded into it. Such machines — VMware-linked clones — are best used with a high load on disk storage.

Horizon view desktop pools

Horizon view creates different pools for different types of virtual machines - so they are easier to administer. For example, permanent physical and virtual machines fall into the Manual pool, and the Automated Pool contains “disposable”, template ones. Through the program interface you can manage clones with the assigned user profile. Here you can read about the features of the configuration, configuration and maintenance.

Hypervisor: VMware vSphere 5.5

The VMware vSphere 5.5 platform is needed to create VDI and cloud solutions. It consists of three main levels: virtualization, management and interface. The level of virtualization is infrastructure and system software. The management level helps to create a virtual environment. Interface is web-based utilities and vSphere client programs.

According to the recommendations of VMware and Microsoft, the network core services are also used in the virtualization environment - NTP, DNS, Active Directory and others.

4. Dell hardware

Virtual machines reside on a cluster of eight nodes that are managed by the vSphere. Each server has a PCIe SSD and Fluid Cache provider functions. All of them are connected to three networks: 10 GB Ethernet iSCSI SAN, VDI control network and the one that provides the cache.

There is another cluster of two nodes running vSphere. There are virtual machines that make the VDI infrastructure work: vCenter Server, View Connection Server, View Composer Server, SQL Server, Active Directory, etc. These nodes are also connected to the VDI and iSCSI SAN control networks.

The Dell Compellent SC8000 disk array has two types of paired controllers and drives: Write Intensive (WI) SSD, 15K SAS HDD and 7.2K NL-SAS HDD. As you can see, the disk subsystem is both productive and capacious.

Switches are stacked in pairs - this provides fault tolerance at the network level. They serve all three networks — the client for virtual machines, the control for the VDI environment, and the iSCSI SAN. Bandwidth is divided based on VLAN, and traffic prioritization is also performed. The optimal bandwidth for these networks is 40 Gb / s, but 10 Gb / s is enough.

Here is a general installation plan:

1. Management console.

2. VDI and client management networks.

3. vSphere servers for virtual machines.

4. Fluid Cache controllers (2 pcs.).

5. SAN switches (2 pcs.).

6. Disk array controllers (2 pcs.).

7. Shelves disk array (2 pcs.).

8. Servers providing VDI infrastructure.

5. Configuration of components.

Servers

Each of the eight Dell PowerEdge R720 servers that make up the cluster for a VM has:

• two ten-core Intel Xeon CPU E5-2690 v2 @ 3.00GHz,

• 256 GB of RAM,

• Dell Express Flash PCIe SSD drive with a capacity of 350 GB,

• Mellanox ConnectX-3 network card,

• Broadcom NetXtreme II BCM57810 10 Gigabit Network Controller,

• Quad-port Broadcom NetXtreme BCM5720 gigabit network controller.

On a cluster of two Dell PowerEdge R720, we deployed virtual machines for Active Directory, VMware vCenter 5.5 server, Horizon View Connection server (primary and backup), View Composer server, file server based on Microsoft Windows Server 2012 R2 and MS SQL Server 2012 R2 . Forty LoginVSI launcher virtual machines are used to test the installation - they create a load.

Network

We used three pairs of switches in the installation: Force10 S55 1 GB and Force10 S4810 10 GB. The first was responsible for creating the VDI environment and managing servers, disk array, switches, vSphere, etc. VLAN for all these components are different. The second pair, the Dell Force10 S4810 10 GB, helped organize the Fluid Cache caching network. Another similar pair was responsible for the operation of the iSCSI network between the clusters and the Compellent SC8000 disk array.

Disk array Compellent SC8000

| Role | Type of | amount | Explanations |

| Controller | SC8000 | 2 | System Center Operating System (SCOS) 6.5 |

| Shelf | External | 2 | 24 compartments for 2.5 "disks |

| Ports | 10 Gbps Ethernet | 2 | Front end host connectivity |

| SAS 6 Gbps | four | Back end drive connectivity | |

| Discs | 400 GB WI SSD | 12 | 11 active and 1 hot standby |

| 300 GB 15K SAS | 21 | 20 active and 1 hot standby | |

| 4 TB 7.2K NL-SAS | 12 | 11 active and 1 hot standby |

For the virtual servers of the service servers, we created a 0.5 TB disk where we placed Active Directory, SQL Server, vCenter Server, View Connection Server, View Composer and file server. For user profiles, a 2 TB disk was allocated (approximately 2.5 GB per machine). The master OS images are located on a 100 GB mirror disk. Finally, for virtual machines, we decided to make 8 disks of 0.5 TB each.

Virtual network

Each host running the VMware vSphere 5.5 hypervisor has five virtual switches:

| vSwitch | Purpose |

| vSwitch0 | Control network |

| vSwitch1 and vSwitch2 | iSCSI SAN |

| vSwitch3 | Fluid Cache VLAN |

| vSwitch4 | VDI LAN |

Horizon view

We set up this instruction .

| Role | amount | Type of | Memory | CPU |

| Horizon View Connection Servers | 2 | VM | 16 GB | 8nos |

| View Composer Server | one | VM | 8 GB | 8nos |

Virtual machines

We started setting up the original Windows 7 images using the VMware and Login VSI manuals. Here's what we got:

• VMware Virtual Hardware v8,

• 2 virtual CPUs,

• 3 GB RAM, 1.5 GB reserved,

• 25 GB of disk memory

• one virtual network adapter connected to a VDI network,

• Windows 7 64-bit.

VMware Horizon Guide with View Optimization Guide for Windows 7 and Windows 8 can be downloaded here .

Part 2: We test, we describe results, we draw conclusions.

Methods for testing and configuring components

We then checked how the system behaves under different virtual machine usage scenarios. We wanted to make sure that all 800 virtual machines ran without noticeable delays while each exchanged 80-90 IOPS with the disk subsystem throughout the day. We also tested the installation under a normal “office” load, both during the working day and at peak moments: when the virtual machines were being started and when users logged on, for example at the start of the working day and after the lunch break. We call these peaks Boot Storm and Login Storm.

For testing, we used two tools: Login VSI 4.0, a comprehensive suite that loads the virtual machines and analyzes the results, and Iometer, which we used to load and monitor the disk subsystem.

To simulate a “standard” load for a typical office machine, we:

• Launched up to five applications simultaneously.

• Used Microsoft Internet Explorer, Microsoft Word, Microsoft Excel, Microsoft PowerPoint, a PDF reader, 7-Zip, a movie player and FreeMind.

• Repeated the workload loop roughly every 48 minutes, with a 2-minute pause.

• Measured the response time to user actions every 3-4 minutes.

• Set the typing speed to 160 ms per character.

We simulated the high load with the same test, additionally launching Iometer in each virtual machine to drive disk I/O. The standard test generated 8-10 IOPS per machine; under high load this rose to 80-90 IOPS. The base OS images in the Horizon View pool used standard settings and the Login VSI software set, plus Iometer, which was started from the logon script.
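Scaling the per-machine rates to the full pool shows the aggregate load the storage has to sustain. A minimal sketch using only the per-machine figures in this paragraph and the 800-desktop pool size; the function name is illustrative.

```python
DESKTOPS = 800

def aggregate_iops(per_vm_low: int, per_vm_high: int, desktops: int = DESKTOPS) -> tuple[int, int]:
    """Total IOPS range for the pool, given a per-VM IOPS range."""
    return per_vm_low * desktops, per_vm_high * desktops

standard = aggregate_iops(8, 10)   # Login VSI workload alone
heavy = aggregate_iops(80, 90)     # Login VSI plus Iometer

print(f"standard load: {standard[0]:,}-{standard[1]:,} IOPS")
print(f"heavy load:    {heavy[0]:,}-{heavy[1]:,} IOPS")
```

These ranges (6,400-8,000 and 64,000-72,000 IOPS) bracket the peaks reported later in this section: about 6,500 IOPS during the Login Storm and 72,000 IOPS under heavy load.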

To monitor work and record performance under load, we used the following tools:

• Dell Compellent Enterprise Manager for evaluating the performance of Fluid Cache and the Compellent SC8000 storage array,

• VMware vCenter statistics for monitoring vSphere,

• Login VSI Analyzer for assessing the user experience.

The criterion for the disk subsystem is its response time (latency): if it does not exceed 10 ms, performance is acceptable.

Criteria for system load at the hypervisor level:

• The load on any CPU in the cluster should not exceed 85%.

• Memory ballooning should be minimal (ballooning is how the hypervisor reclaims memory from guests through a driver inside the VM when the host runs short of RAM).

• The load on each network interface should not exceed 90%.

• TCP/IP retransmissions should not exceed 0.5%.
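The acceptance criteria above amount to a set of upper limits, so a monitoring sample can be checked mechanically. A minimal sketch; the field names and sample values are hypothetical, and ballooning is left out because the text gives no numeric limit for it.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Sample:
    """One set of hypothetical peak readings taken during a test run."""
    storage_latency_ms: float   # storage response time
    cpu_pct: float              # busiest CPU in the cluster
    nic_pct: float              # busiest network interface
    tcp_retransmit_pct: float   # share of retransmitted TCP/IP packets

# Upper limits from the acceptance criteria; a value equal to its limit passes.
LIMITS = {
    "storage_latency_ms": 10.0,
    "cpu_pct": 85.0,
    "nic_pct": 90.0,
    "tcp_retransmit_pct": 0.5,
}

def violations(sample: Sample) -> list[str]:
    """Names of all metrics that exceed their limit."""
    return [name for name, limit in LIMITS.items() if getattr(sample, name) > limit]

ok_run = Sample(storage_latency_ms=7.5, cpu_pct=80.0, nic_pct=45.0, tcp_retransmit_pct=0.1)
bad_run = Sample(storage_latency_ms=12.0, cpu_pct=91.0, nic_pct=45.0, tcp_retransmit_pct=0.1)
print(violations(ok_run))   # []
print(violations(bad_run))  # ['storage_latency_ms', 'cpu_pct']
```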

The maximum number of virtual machines the system can serve concurrently was determined with the VSImax method.
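VSImax itself is computed by Login VSI. To show the underlying idea, here is a simplified sketch, not Login VSI's exact algorithm: take a baseline from the fastest response times, then report the session count at which a rolling average of response times first rises above the baseline plus a fixed margin. The 1,000 ms margin, the five-sample baseline and the five-sample window are assumptions chosen for illustration.

```python
from statistics import mean
from typing import Optional

def saturation_point(
    response_ms: list[float],
    baseline_samples: int = 5,
    margin_ms: float = 1000.0,
    window: int = 5,
) -> Optional[int]:
    """Simplified VSImax-style estimate (illustrative, not Login VSI's formula).

    response_ms[i] is the response time measured with i + 1 active sessions.
    Returns the session count at which the rolling average first exceeds
    baseline + margin_ms, or None if the threshold is never crossed.
    """
    baseline = mean(sorted(response_ms)[:baseline_samples])
    threshold = baseline + margin_ms
    for i in range(window - 1, len(response_ms)):
        if mean(response_ms[i - window + 1 : i + 1]) > threshold:
            return i + 1
    return None

# Hypothetical curve: flat at 800 ms for 10 sessions, then a jump to 3,000 ms.
print(saturation_point([800.0] * 10 + [3000.0] * 10))  # 13
print(saturation_point([900.0] * 800))                 # None
```

The rolling window keeps a single slow measurement from being mistaken for saturation; real VSImax analysis uses Login VSI's own baseline definition, weighting and thresholds.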

A single desktop pool was created from the base Windows 7 image using the VMware Horizon View Administrator interface.

The settings were as follows:

• Automated desktop pool: machines are provisioned on demand.

• Floating assignment: each user is assigned a random machine.

• View Storage Accelerator is enabled for all machines, with a 7-day regeneration interval.

• The 800 virtual machines run on 8 physical hosts (100 per host).

• The master OS images are on separate volumes.

Test results and analysis

Scenarios were as follows:

1. Boot Storm: peak load on the storage system when many virtual machines are started at the same time.

2. Login Storm: another heavy load on the disk subsystem, when users log on at the start of the working day or after a break. For this test, we powered on the virtual machines and logged users on over 20 minutes without any further activity.

3. Standard load: after users logged on, the standard office workload script was launched. System responsiveness to user actions was tracked with the VSImax (Dynamic) metric from Login VSI.

4. High load: the same as the standard load, but with about 10 times the IOPS.

Boot Storm

Note: here and below, latency figures are those reported by the storage system.

During the test, the 800 machines generated an average of 115,000 IOPS, mostly writes. After four minutes, all machines were available for work.
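Normalizing the platform-wide numbers per desktop makes them comparable with the 80-90 IOPS design target. A minimal sketch using the Boot Storm figures above, alongside the Login Storm and heavy-load peaks reported later in this section; the total I/O count assumes the 115,000 IOPS average held for the full four minutes.

```python
DESKTOPS = 800

def per_desktop(total_iops: float, desktops: int = DESKTOPS) -> float:
    """Platform-wide IOPS divided evenly across the desktops."""
    return total_iops / desktops

boot_storm = per_desktop(115_000)  # average during boot
login_storm = per_desktop(6_500)   # peak during logon
heavy_load = per_desktop(72_000)   # peak with Iometer running

# Assumption: the average rate was sustained for the whole four-minute boot.
boot_seconds = 4 * 60
total_boot_ios = 115_000 * boot_seconds

print(f"boot storm:  {boot_storm} IOPS per desktop")
print(f"login storm: {login_storm} IOPS per desktop")
print(f"heavy load:  {heavy_load} IOPS per desktop")
print(f"I/Os during boot: {total_boot_ios:,} ({total_boot_ios // DESKTOPS:,} per desktop)")
```

The Boot Storm works out to about 144 IOPS per desktop, well above even the heavy-load target, which is consistent with the recommendation to keep spare machines powered on ahead of peak times.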

Login Storm and Standard Load

We configured Login VSI to log on to the 800 virtual machines about an hour after they had booted. Peak load did not exceed 6,500 IOPS for the entire platform (8-10 IOPS per machine). The share of write requests was higher than during machine startup and subsequent work, because user profiles were being created and OS services and applications were being launched. Once the system stabilizes, the OS caches the main user and application data in RAM, and the load on the disk subsystem drops. At the Fluid Cache level, at the 6,500 IOPS peak, 100% of writes and about 95% of reads were served from cache. The 98 Mbps data stream produced a disk access latency of only 2 ms.

Performance at the physical level

We collected performance statistics for the physical components of the vSphere system with VMware vCenter Server. Throughout the test, the load on the processors, memory and all levels of the storage system stayed roughly constant. The results matched the expectations we set when defining the task:

• CPU load no higher than 85%.

• Active disk space usage no higher than 80% while the virtual machines were being created, and no higher than 60% during the tests.

• Memory ballooning minimal (at times not used at all).

• Network load of about 45% across the iSCSI SAN, VDI LAN, Management LAN and vMotion LAN.

• Average read/write latency on the storage adapters: 2 ms.

The following graph shows system performance from the user's point of view as a function of the number of simultaneously active machines:

In practice, this means the system can comfortably host workplaces for all 800 users, with no OS delays even when everyone is actively working at the same time.

Here are the conclusions from the three tests of the “usual” user environment:

• The VDI test system based on Dell Fluid Cache for SAN handled the task perfectly: 800 users can work simultaneously.

• Writes account for 98% of operations and reads for only 2%.

• No system resource was loaded beyond what we expected.

• During the 20-minute Login Storm, load did not exceed 8-10 IOPS per machine and disk access latency stayed at or below 2 ms.

Heavy load

We used the following Iometer settings for heavy I/O load: 20 KB block size, 80% writes, 75% of which are not from the cache, and a 25 ms burst delay.

At the Fluid Cache level, peak I/O intensity reached 72,000 IOPS (90 IOPS per virtual machine). Writes were 100% cached and reads 95%. At the peak storage throughput of 900 Mbps, the maximum latency was 7.5 ms.
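Applying the Iometer mix to the measured peak gives a rough read/write breakdown. A minimal sketch, assuming (hypothetically) that all 72,000 IOPS follow the Iometer 80/20 write/read mix; in reality the Login VSI workload adds its own I/O and the findings report a 98/2 split at the cache level, so treat this as an illustration of the Iometer component only. The class name is illustrative, not an Iometer API.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class IometerProfile:
    """Hypothetical container for the access-specification values in the text."""
    block_kb: int
    write_pct: float
    burst_delay_ms: int

def split_iops(total_iops: float, profile: IometerProfile) -> tuple[float, float]:
    """Return (write IOPS, read IOPS) for a given total under the profile's mix."""
    writes = total_iops * profile.write_pct / 100
    return writes, total_iops - writes

heavy = IometerProfile(block_kb=20, write_pct=80, burst_delay_ms=25)
writes, reads = split_iops(72_000, heavy)
print(f"writes: {writes:,.0f} IOPS, reads: {reads:,.0f} IOPS")
print(f"per desktop: {72_000 / 800:.0f} IOPS")
```

Under this assumption the peak splits into 57,600 write and 14,400 read IOPS, or 90 IOPS per desktop.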

The load at the physical level of the installation was the same as in the previous test. The storage, CPUs and network adapters performed as planned.

The following graph shows the system's response time to user actions as a function of the number of simultaneously active machines:

Our findings from testing under high load:

• Dell VDI based on Dell Fluid Cache for SAN can simultaneously run 800 virtual machines that exchange data intensively with the disk subsystem.

• Writes account for 98% of operations and reads for 2%.

• None of the resources of the vSphere host servers was maxed out; the specified limits were not exceeded.

• The high load produced a latency of 7.5 ms at a total rate of 72,000 IOPS (90 IOPS for each of the 800 machines). This is still within the acceptable range for OS responsiveness to user actions.

Recommendations

VDI environment with Dell Fluid Cache for SAN:

• If you use roaming user profiles and store data in redirected folders, the load on the I/O system will be lower. This matters most at peak times, when many virtual machines are being started and many users log on at once. The same applies to shared network folders. This approach saves system resources and simplifies administration. We recommend allocating a separate high-speed disk array for user data.

• Given the high system load while virtual machines are being started, we suggest keeping several virtual machines powered on and ready. In VMware Horizon View, you can configure the number of desktops that are always available to users.

• We recommend configuring the master Windows 7 and 8 images according to this guide. Disabling unnecessary system services speeds up logon and screen rendering on the client and improves overall performance.
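The spare-desktop recommendation can be expressed as a small provisioning rule. A minimal sketch of the idea only; the function and parameter names are hypothetical, and this is not how Horizon View computes it internally: keep a target number of idle, powered-on desktops without exceeding the pool's maximum size.

```python
def desktops_to_power_on(max_size: int, powered_on: int, in_use: int, spares: int) -> int:
    """How many more desktops to start so that `spares` idle ones are ready.

    max_size:   maximum number of desktops in the pool
    powered_on: desktops currently running
    in_use:     running desktops with a user logged on
    spares:     target number of idle, ready desktops
    """
    idle = powered_on - in_use
    shortfall = max(spares - idle, 0)
    headroom = max(max_size - powered_on, 0)
    return min(shortfall, headroom)

# Hypothetical morning snapshots of an 800-desktop pool with 50 spares requested.
print(desktops_to_power_on(800, powered_on=600, in_use=590, spares=50))  # 40
print(desktops_to_power_on(800, powered_on=790, in_use=780, spares=50))  # 10
```

Starting machines ahead of demand spreads the boot I/O over time instead of concentrating it at the moment users arrive.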

Installation and configuration:

• Follow the recommendations of VMware and Dell when installing and configuring vSphere.

• Create separate virtual switches to isolate iSCSI SAN, VDI, vMotion and management network traffic.

• For fault tolerance, assign each network path to at least two physical adapters.

• Keep at least 10% of the physical drives in reserve to improve service availability.
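The two switch-layout rules above (one traffic type per vSwitch, at least two physical adapters per network) can be checked against a host's configuration. A minimal sketch: the vSwitch-to-traffic mapping follows the vSwitch table earlier in this section, with the management (control) network on vSwitch0, while the physical uplink names are a hypothetical assignment.

```python
from collections import defaultdict

# Traffic mapping follows the vSwitch table; uplink (vmnic) names are hypothetical.
VSWITCHES = {
    "vSwitch0": {"traffic": ["management"], "uplinks": ["vmnic0", "vmnic1"]},
    "vSwitch1": {"traffic": ["iscsi"], "uplinks": ["vmnic2"]},
    "vSwitch2": {"traffic": ["iscsi"], "uplinks": ["vmnic3"]},
    "vSwitch3": {"traffic": ["fluid_cache"], "uplinks": ["vmnic4", "vmnic5"]},
    "vSwitch4": {"traffic": ["vdi"], "uplinks": ["vmnic6", "vmnic7"]},
}

def check_layout(vswitches: dict, min_uplinks: int = 2) -> list[str]:
    """Flag vSwitches that mix traffic types and traffic types with too few uplinks."""
    problems = []
    uplinks_by_traffic = defaultdict(set)
    for name, cfg in vswitches.items():
        if len(cfg["traffic"]) > 1:
            problems.append(f"{name} mixes traffic: {', '.join(cfg['traffic'])}")
        for traffic in cfg["traffic"]:
            uplinks_by_traffic[traffic].update(cfg["uplinks"])
    for traffic, uplinks in sorted(uplinks_by_traffic.items()):
        if len(uplinks) < min_uplinks:
            problems.append(f"{traffic}: only {len(uplinks)} physical uplink(s)")
    return problems

print(check_layout(VSWITCHES))  # []
print(check_layout({"vSwitch0": {"traffic": ["management", "vdi"], "uplinks": ["vmnic0"]}}))
```

Uplinks are counted per traffic type rather than per vSwitch because the iSCSI SAN here is split across two vSwitches; two single-uplink iSCSI vSwitches still give that traffic two physical adapters.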

Network level:

• Use at least two physical network adapters for the VDI network on each vSphere server.

• When you use VLANs to separate traffic within the same physical network, place the VDI, vMotion and management logical networks on different virtual networks.

• At the physical layer, separate the iSCSI SAN and Fluid Cache networks. This improves overall system performance.

• vSphere virtual switches are limited to 120 ports by default. If the machines need more network connections, the host must be rebooted after the setting is changed.

• On the iSCSI SAN switches, disable the Spanning Tree Protocol. On ports connected to servers and storage adapters, enable PortFast. If the switches and adapters support jumbo frames and flow control, enable them on all components of the iSCSI network. You can read more about this here.
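Two of the network recommendations above reduce to simple checks. A minimal sketch: the first function compares the ports a host needs with the 120-port default quoted above, assuming one virtual NIC per desktop and the 100 desktops per host used in this installation; the second flags components on the iSCSI path whose MTU is below a jumbo-frame target. The 9000-byte target and the per-component MTU values are hypothetical examples. The check uses "at least" rather than "equal" because switch ports are often configured with a somewhat larger MTU than hosts.

```python
DEFAULT_VSWITCH_PORTS = 120  # default quoted in the text for vSphere virtual switches
JUMBO_MTU = 9000             # assumed jumbo-frame target for hosts and storage

def needs_more_ports(desktops_per_host: int, vnics_per_desktop: int = 1,
                     other_ports: int = 0, available: int = DEFAULT_VSWITCH_PORTS) -> bool:
    """True if the desktops' virtual NICs would not fit on the switch."""
    return desktops_per_host * vnics_per_desktop + other_ports > available

def mtu_gaps(path_mtus: dict[str, int], target: int = JUMBO_MTU) -> list[str]:
    """Components on the path whose MTU is below the jumbo-frame target."""
    return [component for component, mtu in path_mtus.items() if mtu < target]

# 100 desktops per host fit within the default; 130 would need a resize and a reboot.
print(needs_more_ports(100))  # False
print(needs_more_ports(130))  # True

# Hypothetical iSCSI path with one component left at the standard 1500-byte MTU.
iscsi_path = {
    "VMkernel port": 9000,
    "vSwitch1": 9000,
    "S4810 switch port": 9216,
    "SC8000 iSCSI port": 1500,
}
print(mtu_gaps(iscsi_path))  # ['SC8000 iSCSI port']
```

A single component left at 1500 bytes is enough to break or fragment jumbo-frame traffic, which is why the text insists on enabling jumbo frames on every component of the iSCSI network.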

Storage system:

• Dell Compellent SC storage arrays work very well with Fluid Cache technology. This is a high-performance and relatively inexpensive solution for a Dell VDI environment.

• Fluid Cache accelerates data exchange between the servers and the disk array. With Storage Center 6.5 software, the compute cluster, network and storage system work to their full potential, something a single server cannot match.

• We recommend using separate disk arrays for master OS images and user virtual machines. This makes disk administration, performance measurement and bottleneck tracking easier, and provides a good basis for further scaling.

• We also recommend allocating disk arrays for roaming user profiles and shared network folders, as well as for system servers and shared services.

Source: https://habr.com/ru/post/261385/
