NetBackup 7.6 Implementation Experience

In this article we briefly describe our experience implementing the Symantec NetBackup backup system.

To better protect its internal corporate systems against data loss and to reduce the cost of recovery after failures, the customer decided to implement a backup system based on NetBackup 7.6. How the customer chose this particular solution is outside the scope of this article.

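As a result, the main parameters of the solution were identified:

- the list of information systems to be backed up;

- backup windows;

- available server resources;

- storage system and data network resources.

The project objectives that had to be achieved were also formulated.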


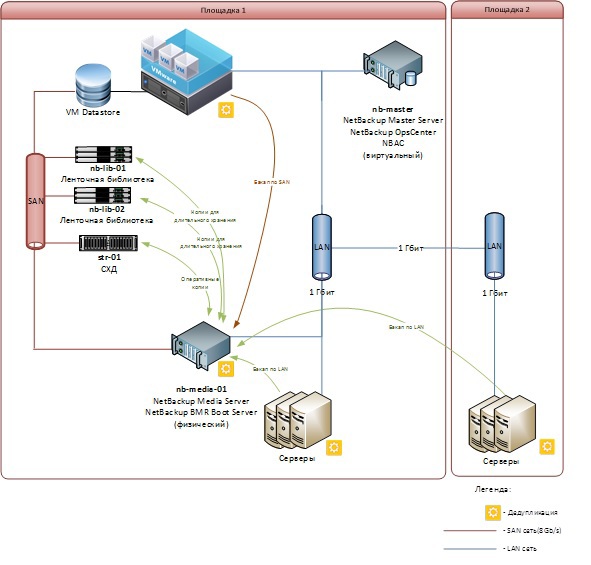

In preparation for the project, we surveyed the existing infrastructure. This gave us a picture of all the systems to be backed up, let us determine the required backup storage capacity and data transfer bandwidth, and produced the necessary diagrams.

Since the system was deployed mostly remotely, the diagrams and descriptions prepared during the survey made it much easier to resolve the many issues that came up during the work.

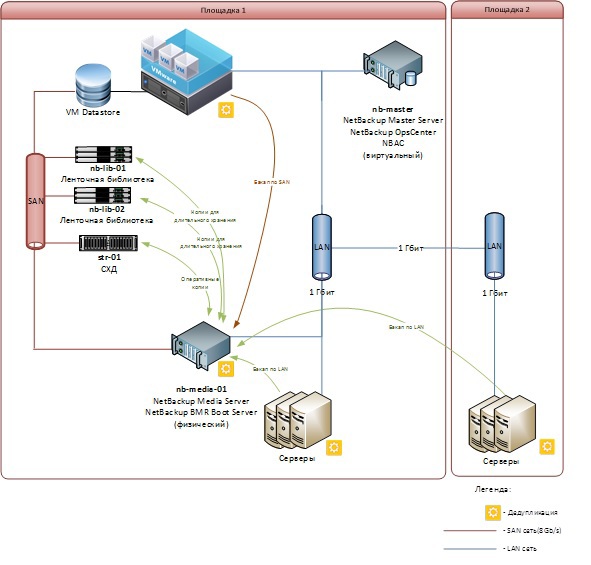

Customer infrastructure description

On the one hand, the customer's infrastructure is relatively simple, since functions are clearly separated between servers. On the other hand, its heterogeneity and the wide variety of systems required developing many different backup policies. The task was to back up all systems using only one master server and one media server.

The systems to be backed up include about 40 servers (both physical and virtual) holding about 60 TB of data in total.

Most of the servers, the virtualization infrastructure, the disk arrays and the tape libraries are located at the customer's first site; some standalone physical servers are at the second site (in the same city). The sites are interconnected by a GbE network. The first site has two SAN fabrics to which the storage resources and various servers are connected.

Of course, to keep data safe in serious incidents (fire, flooding, etc.), production data and backup copies are better placed at different sites. In this case, however, the customer's available resources did not allow such a scheme, so this remained a task for the future.

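Solution architecture description

The solution includes:

- one NetBackup master server (which also hosts the NBAC access control module and the OpsCenter monitoring tool);

- one NetBackup media server;

- several LUNs on disk arrays for storing backup copies;

- two tape libraries (three tape drives in total).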

Both tape libraries, the disk arrays and the media server are connected to two isolated SAN fabrics built on Brocade switches with a bandwidth of up to 8 Gb/s. The virtualization hosts running VMware ESXi are also connected to the SAN. This later allowed us to back up virtual machines over the SAN and significantly offload the GbE network.

Correct SAN configuration is one of the key factors in the stability of the solution as a whole. Errors in zoning or in the physical layer (ports, links to servers and storage, inter-switch links, etc.), congestion at peak traffic, a shortage of buffer-to-buffer credits and other not always obvious problems can make resources unavailable for no apparent reason or make them disappear suddenly after the slightest change. Besides configuring the storage network itself, this stage also covers presenting resources on the storage systems, both on the disk arrays and on the tape libraries.

It sometimes happens that the customer's specialists pay little attention to the SAN, since it is not part of their daily work: set it up once and forget about it; if it works, don't touch it. Yet preparing the network for the backup system involves many changes to its configuration and to the storage system settings. Therefore, a detailed review of the SAN configuration before making any changes is a very important step.

LUNs for storing backup copies were allocated on the disk arrays: a 6 TB LUN for deduplicated data and a LUN of just under 2 TB for data that compresses better than it deduplicates, such as database transaction logs. Both volumes are presented to the media server, where they were partitioned as GPT basic volumes using Windows Server tools.

For backup storage it makes sense to partition disks as GPT from the start, since the 2 TB MBR limit is easily reached in operation once the storage has to grow beyond that size. At the same time, the PDDO component of NetBackup, described later, has a limitation: on a media server it can use only one logical drive to store deduplicated data. So rather than creating several 2 TB LUNs later and combining them into a spanned volume with dynamic disk management tools, it is much simpler to partition the required LUN as GPT right away.
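The 2 TB ceiling follows from the MBR format itself: partition entries store the starting sector and the sector count as 32-bit values. A quick Python check of the arithmetic, assuming classic 512-byte logical sectors (the helper names are ours, for illustration only):

```python
# MBR partition entries hold the start LBA and the sector count as 32-bit
# unsigned integers, so roughly 2**32 sectors is the addressable ceiling.
MBR_MAX_SECTORS = 2**32
SECTOR_BYTES = 512  # assumption: classic 512-byte logical sectors

TB = 10**12   # decimal terabyte, as storage vendors quote capacity
TIB = 2**40   # binary tebibyte


def mbr_max_bytes(sector_bytes: int = SECTOR_BYTES) -> int:
    """Largest capacity an MBR partition table can address."""
    return MBR_MAX_SECTORS * sector_bytes


def needs_gpt(lun_bytes: int, sector_bytes: int = SECTOR_BYTES) -> bool:
    """True if a LUN of this size cannot be fully used with MBR."""
    return lun_bytes > mbr_max_bytes(sector_bytes)


print(mbr_max_bytes() / TIB)     # 2.0 (TiB), i.e. about 2.2 decimal TB
print(needs_gpt(6 * TB))         # the 6 TB deduplication LUN: True
print(needs_gpt(19 * TB // 10))  # a LUN just under 2 TB: False
```

With 4 KB logical sectors the same formula gives 16 TiB.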

Of course, you need to check whether the required backup capacity can be provided as a single LUN from one array. If the disk space is “sliced” into pieces from several arrays, managing it on the media server can become complicated, especially since, with virtual machine backup over the SAN, the media server must also have access to all VMFS volumes.

Implementation features

Because of the limited bandwidth of the media server's network interfaces and the high density of backup jobs run at night, the system was designed from the outset to use the following techniques, which significantly speed up backups:

- SAN backup;

- client-side deduplication;

- client-side compression;

- backup acceleration (Accelerator).

SAN backup is used in this solution to back up virtual machines hosted on VMware vSphere. In short, during a backup the virtual machine data reaches the media server over the SAN rather than over Ethernet, which greatly offloads a GbE network (with 10GbE this is obviously less relevant). The media server accesses the disk storage holding the virtual machine LUNs directly via SCSI, encapsulated in FC traffic. For this to work, the SAN must have zones from the media server to the disk arrays containing the virtual machine LUNs, and the arrays must present these LUNs to the media server.
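The two prerequisites above, zoning plus LUN presentation, can be captured in a small, heavily simplified model that flags datastore LUNs the media server will not be able to read over the SAN. Everything here (port names, classes, the function) is hypothetical: real zoning is configured on the Brocade switches, LUN presentation on the arrays, and this setup actually has two fabrics rather than the single one modeled.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Fabric:
    """One SAN fabric; each zone is a set of port names that may talk to each other."""
    zones: list[set[str]] = field(default_factory=list)

    def can_see(self, initiator: str, target: str) -> bool:
        # Two ports can communicate only if at least one zone contains both.
        return any(initiator in zone and target in zone for zone in self.zones)


@dataclass
class Lun:
    name: str
    array_port: str         # array port presenting the LUN
    presented_to: set[str]  # initiators allowed by LUN masking on the array


def san_transport_gaps(media_hba: str, fabric: Fabric, datastore_luns: list[Lun]) -> list[str]:
    """Names of VMFS datastore LUNs the media server cannot read over the SAN."""
    gaps = []
    for lun in datastore_luns:
        zoned = fabric.can_see(media_hba, lun.array_port)
        presented = media_hba in lun.presented_to
        if not (zoned and presented):
            gaps.append(lun.name)
    return gaps


# Hypothetical port names; real WWPNs are 64-bit identifiers.
fabric = Fabric(zones=[{"media-hba0", "array-a-p1"}, {"esx01-hba0", "array-a-p1"}])
luns = [
    Lun("vmfs01", "array-a-p1", {"esx01-hba0", "media-hba0"}),
    Lun("vmfs02", "array-a-p1", {"esx01-hba0"}),  # not presented to the media server
]
print(san_transport_gaps("media-hba0", fabric, luns))  # ['vmfs02']
```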

Deduplication in NetBackup is implemented by Media Server Deduplication Pool (MSDP), PureDisk Deduplication Option (PDDO) or OpenStorage Technology (OST). All three require separate licensing and are not included in the base NetBackup package. The first two let you create special storage types on a media server (a Media Server Deduplication Pool and a PureDisk Deduplication Pool, respectively); the third lets you connect external, third-party OST-compatible disk storage.

Deduplication in NetBackup can be performed either on the media server or on the client. Client-side deduplication significantly reduces network traffic, since only data not already held in storage is sent. The flip side is a higher CPU load on the client, which may be unacceptable for heavily loaded systems. In theory, deduplication efficiency can approach 100% (if the data has not changed at all between backups); in practice it can be anywhere from 0 to 100%, depending on how much the data has changed. The illustration below shows, in the Deduplication Rate column, the high deduplication rate achieved for SAP database backups to the PDDO pool.

With client-side deduplication, backup times drop steadily after the first run (provided the client CPU keeps up). For example, the first full backup of 1 TB might take, say, 5 hours, while the second full backup of the same amount of data could finish in 1 hour or less if the files changed little. This should be taken into account when planning backup windows.
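Why the second full backup is so much faster can be shown with a toy model of client-side deduplication: the client fingerprints each segment and sends only segments the pool has not seen. This is a sketch under stated assumptions (fixed 4 KB segments, SHA-256 fingerprints, an in-memory set standing in for the pool); NetBackup's actual segmentation and fingerprinting are not described in this article, and the class and function names are hypothetical.

```python
import hashlib

SEGMENT = 4096  # assumption: fixed 4 KB segments; NetBackup's own segmentation differs


def segments(data: bytes):
    """Split a backup stream into fixed-size segments."""
    for i in range(0, len(data), SEGMENT):
        yield data[i:i + SEGMENT]


class DedupStore:
    """Toy fingerprint index standing in for a deduplication pool."""

    def __init__(self) -> None:
        self.fingerprints = set()

    def backup(self, data: bytes) -> int:
        """Client-side deduplication: return the bytes actually sent to the media server."""
        sent = 0
        for seg in segments(data):
            fp = hashlib.sha256(seg).digest()
            if fp not in self.fingerprints:  # only segments unknown to the pool travel
                self.fingerprints.add(fp)
                sent += len(seg)
        return sent


def dedup_rate(scanned: int, sent: int) -> float:
    """Percentage of scanned data that did not have to be transferred."""
    return 100.0 * (1 - sent / scanned)


# Exactly 1 MiB of distinct records, then the same data with one record changed.
v1 = b"".join(b"record %06d status=OK\n" % i for i in range(50_000))[: 256 * SEGMENT]
v2 = v1.replace(b"record 000123 status=OK", b"record 000123 status=KO")

store = DedupStore()
first = store.backup(v1)   # first full backup: every segment is new
second = store.backup(v2)  # second full backup: only the changed segment is sent
print(f"first:  {first} bytes sent, dedup rate {dedup_rate(len(v1), first):.1f}%")
print(f"second: {second} bytes sent, dedup rate {dedup_rate(len(v2), second):.1f}%")
```

The second run scans the same amount of data but transfers a single segment, which is what shortens the backup window.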

Client-side compression also reduces network traffic and likewise loads the client CPU. However, compression (like encryption) drastically changes the content of the data sent to the media server, which sharply reduces deduplication efficiency. Therefore, when deduplication is used, the compression and encryption options in the backup policy should be disabled.

To keep the benefits of both deduplication and compression, NetBackup by default compresses (but does not encrypt) the deduplicated data itself on the media server, i.e. the data is deduplicated first and compressed afterwards. This behavior is controlled by the pd.conf configuration file, whose parameters are described in detail in the “MSDP pd.conf file parameters” section of the “Symantec NetBackup Deduplication Guide”.
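The effect of the order can be shown with a toy experiment. Assumptions: fixed 4 KB segments with SHA-256 fingerprints act as a stand-in deduplication engine, and Python's zlib stands in for client compression (the article does not say which algorithms NetBackup uses). We compare how many segments of a slightly modified copy are already known when deduplicating raw data versus compressed data.

```python
from __future__ import annotations

import hashlib
import zlib

SEGMENT = 4096  # assumption: fixed-size segments, as in a simple dedup model


def fingerprints(data: bytes) -> list[bytes]:
    """SHA-256 fingerprint of every fixed-size segment."""
    return [hashlib.sha256(data[i:i + SEGMENT]).digest()
            for i in range(0, len(data), SEGMENT)]


def reuse_ratio(old: bytes, new: bytes) -> float:
    """Fraction of the new copy's segments already present in the old copy."""
    known = set(fingerprints(old))
    fps = fingerprints(new)
    return sum(fp in known for fp in fps) / len(fps)


v1 = b"".join(b"record %06d status=OK\n" % i for i in range(50_000))[: 256 * SEGMENT]
v2 = v1.replace(b"record 000123 status=OK", b"record 000123 status=KO")

# Deduplicate first (the default order described above): only the segment
# holding the changed record is new; compression can still be applied later.
print("dedup raw data:       ", reuse_ratio(v1, v2))

# Compress first (client compression enabled in the policy): the change
# typically alters the compressed stream from that point on, so later
# segments stop matching.
print("dedup compressed data:", reuse_ratio(zlib.compress(v1), zlib.compress(v2)))
```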

If the data changes frequently (for example, database transaction logs), compressing it may be more effective than deduplicating it. In that case, the compressed data should be written to BasicDisk or AdvancedDisk storage, which does not support deduplication.

NetBackup Accelerator, as the name implies, speeds up the creation of backups. It does so by detecting data blocks on the client that have already been backed up, have not changed since the previous backup, and are still available in NetBackup storage.

Unlike the well-known Windows “Archive” file attribute (or selecting files by modification date), this method transfers not entire changed files but only the modified blocks within them, which saves bandwidth between the client and the media server and significantly reduces backup time. A new full backup copy is synthesized on the fly from the old (unchanged) and new (changed) data blocks.
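The idea of sending changed blocks plus references to unchanged ones, and synthesizing a full copy on the media server, can be sketched in a few lines. This is a simplified illustration, not Accelerator's implementation: per-position block hashes from the previous backup stand in for Accelerator's change tracking, the 4-byte block size is chosen only for readability, and the "ref"/"data" stream format is hypothetical.

```python
from __future__ import annotations

import hashlib

BLOCK = 4  # tiny block size so the example stays readable; real blocks are far larger


def split(data: bytes) -> list[bytes]:
    """Cut a file into fixed-size blocks."""
    return [data[i:i + BLOCK] for i in range(0, len(data), BLOCK)]


def block_hashes(data: bytes) -> list[bytes]:
    """Per-block fingerprints kept after a backup (stand-in for change tracking)."""
    return [hashlib.sha256(b).digest() for b in split(data)]


def client_stream(prev_hashes: list[bytes], data: bytes) -> list[tuple]:
    """Client side: send changed blocks, refer to unchanged ones by position."""
    stream = []
    for i, block in enumerate(split(data)):
        if i < len(prev_hashes) and prev_hashes[i] == hashlib.sha256(block).digest():
            stream.append(("ref", i))       # unchanged since the previous backup
        else:
            stream.append(("data", block))  # new or modified block
    return stream


def synthesize(prev_blocks: list[bytes], stream: list[tuple]) -> bytes:
    """Media server side: build a new full image from old blocks plus changes."""
    return b"".join(prev_blocks[v] if kind == "ref" else v for kind, v in stream)


old = b"AAAABBBBCCCCDDDD"    # content of the previous backup
new = b"AAAAXXXXCCCCDDDDEE"  # current content on the client
stream = client_stream(block_hashes(old), new)
full = synthesize(split(old), stream)  # split(old) plays the stored previous image
print(stream)       # two "data" entries, three "ref" entries
print(full == new)  # True
```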

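NetBackup Accelerator is compatible only with the following backup policy types:

- Standard (file resources on any servers);

- MS-Windows (file resources on MS Windows systems);

- VMware (VMware virtual machines).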

Accelerator can be used only with MSDP, PureDisk and OST disk storage; BasicDisk and AdvancedDisk are not supported. Accelerator also requires the Data Protection Optimization Option for NetBackup.
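These compatibility rules can be written down as a simple pre-flight check, for instance when reviewing a list of planned policies. This is a hypothetical helper, not a NetBackup tool; it encodes only the constraints stated in this article.

```python
from __future__ import annotations

# Constraints as listed in the text; the names below are illustrative only.
ACCELERATOR_POLICY_TYPES = {"Standard", "MS-Windows", "VMware"}
ACCELERATOR_STORAGE_TYPES = {"MSDP", "PureDisk", "OST"}


def accelerator_problems(policy_type: str, storage_type: str, has_dpoo: bool) -> list[str]:
    """Reasons Accelerator cannot be enabled; an empty list means it can."""
    problems = []
    if policy_type not in ACCELERATOR_POLICY_TYPES:
        problems.append(f"policy type {policy_type!r} is not supported")
    if storage_type not in ACCELERATOR_STORAGE_TYPES:
        problems.append(f"storage type {storage_type!r} is not supported")
    if not has_dpoo:
        problems.append("Data Protection Optimization Option license is missing")
    return problems


print(accelerator_problems("VMware", "MSDP", True))          # []
print(accelerator_problems("Oracle", "AdvancedDisk", True))  # two problems
```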

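With the Standard policy, Accelerator works independently of the OS by maintaining its own log of file changes on the client. With the MS-Windows policy, the NTFS or ReFS change journal must be used instead, enabled by the Use Change Journal option. For these two policy types, Accelerator works as follows:

- if no resources have yet been backed up from this client, NetBackup makes a regular full backup and creates a change log;

- on the next backup, NetBackup determines which blocks in the files have changed and sends the media server a data stream containing the modified blocks and references to the unchanged blocks from the previous backup;

- the media server reconstructs a full backup from the information received from the client.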

Accelerator works in much the same way with the VMware policy, but it relies on VMware Changed Block Tracking (CBT) for change tracking, which may need to be configured separately on the virtualization platform. In addition, certain events (a cold restart of the virtual machine, a host power failure, etc.) can reset the VMware change log; the next backup is then a full one that does not take previous changes into account, which naturally increases its duration. Also, when a full backup of a virtual machine is made with Accelerator enabled, granular recovery of MS Exchange, MS SQL and MS SharePoint data from the VM's VMDK file is supported.

Besides Accelerator, NetBackup has another option for backing up only changed data blocks: Block-level Incremental Backup (BLIB), designed for Oracle databases. BLIB transfers to the media server only those blocks of Oracle database files that are marked as modified. In NetBackup, it can be used only when the databases reside on VxFS file systems created with Symantec Storage Foundation.

In this project, although the SAP systems included Oracle databases, BLIB was not used because the customer does not have Storage Foundation. All the other techniques for speeding up backups were applied successfully and made it possible to fit into the very short backup windows set by the customer.

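Backup techniques

The resource classes to be backed up regularly included the following:

- file resources on Windows and Linux servers;

- Active Directory directory services;

- VMware virtual machines;

- physical machines;

- SAP/Oracle databases;

- MS SQL databases;

- SharePoint portal.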

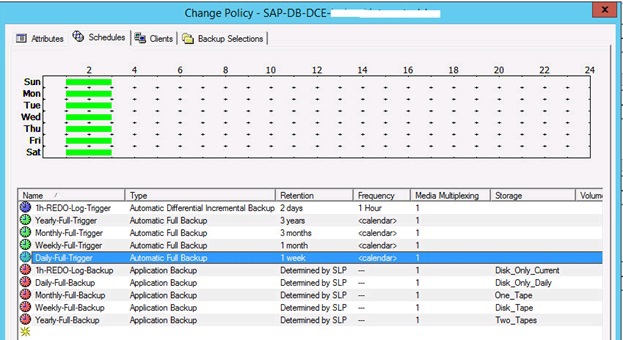

For each resource class, one or more backup policies are created; from them, NetBackup generates backup jobs and the schedule for launching them.

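A NetBackup backup job is defined by the intersection of four groups of parameters:

- how to back up (policy attributes, Attributes);

- when to back up (schedules, Schedules);

- where to back up from (backup clients, Clients);

- what to back up (the list of resources, Backup Selections).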

The backup destination (storage unit, volume pool) can be set in the policy attributes, but in practice this is rarely done, since the destination usually depends on the schedule.

While a policy has exactly one set of attributes, its lists of schedules, clients and resources can each contain multiple entries.
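A simplified data model makes this structure concrete: one set of attributes, several schedules (each with its own SLP), several clients and one selection list, with jobs arising from the schedule × client combinations. This is a hypothetical sketch, not NetBackup's internal model; it ignores timing (a real job starts only when a schedule is due and its window is open), and all policy, schedule, SLP and client names are invented.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import product


@dataclass
class Schedule:
    name: str         # e.g. "Weekly-Full" (hypothetical)
    backup_type: str  # e.g. "Full", "Differential Incremental"
    slp: str          # storage lifecycle policy chosen per schedule


@dataclass
class Policy:
    name: str
    policy_type: str  # stands in for the single set of policy attributes
    schedules: list[Schedule]
    clients: list[str]
    selections: list[str]

    def jobs(self) -> list[dict]:
        """One job per (schedule, client) pair; every job gets the same selections."""
        return [
            {
                "policy": self.name,
                "policy_type": self.policy_type,
                "schedule": sched.name,
                "backup_type": sched.backup_type,
                "slp": sched.slp,
                "client": client,
                "selections": list(self.selections),
            }
            for sched, client in product(self.schedules, self.clients)
        ]


fs_policy = Policy(
    name="FS-Windows",
    policy_type="MS-Windows",
    schedules=[
        Schedule("Daily-Incr", "Differential Incremental", "SLP-Disk-2W"),
        Schedule("Weekly-Full", "Full", "SLP-Disk-Tape"),
    ],
    clients=["fs01", "fs02"],
    selections=["D:\\Shares", "E:\\Profiles"],
)
for job in fs_policy.jobs():
    print(job["schedule"], job["client"], job["slp"])
```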

Depending on the policy type set on the Attributes tab, the other parameters may change their meaning.

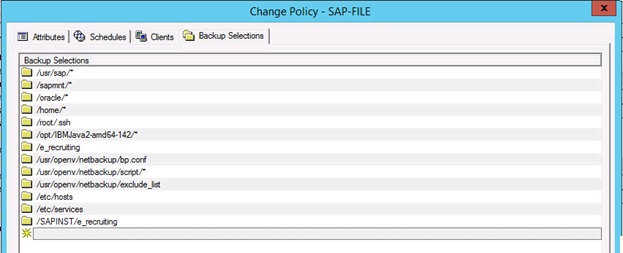

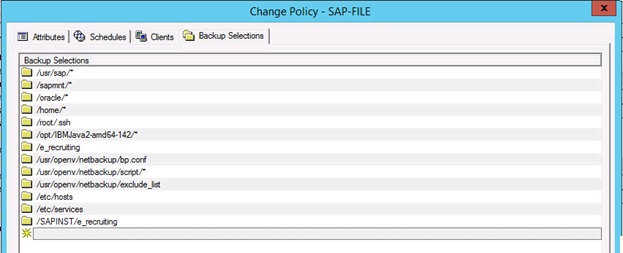

For example, in a Standard policy the Backup Selections tab lists the resources to back up as absolute paths in the client's file system, whereas for SAP or Oracle backups this list contains scripts that the NetBackup client runs and that then control the backup process. This approach gives maximum flexibility in organizing backups, which is especially important for large databases.

Depending on the policy type, various additional options can also be selected, both to optimize the backup process (such as Accelerator) and to enable special capabilities during restore. For example, Granular Recovery lets you restore individual e-mail messages from an MS Exchange backup or individual files from a SharePoint database.

On the Attributes tab, you can also specify where backups are stored (Policy Storage), although experience shows that setting the storage location at the policy level is not always convenient. Defining storage locations at the Storage Lifecycle Policy (SLP) level offers more possibilities. SLPs are special objects that combine the storage location, the retention period, the order in which backups are duplicated, and the distribution of media (disk files or tapes) among pools.

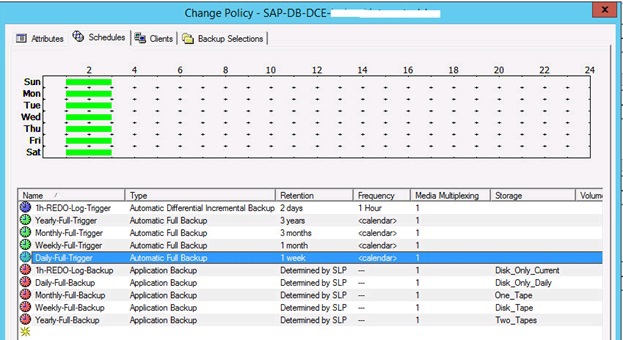

Using SLPs lets you set flexible retention rules within a single NetBackup policy, since an SLP can be specified separately for each schedule.
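As an illustration of what an SLP bundles together, here is a hypothetical sketch of an SLP for a weekly full schedule: the first copy goes to a deduplication pool for two weeks, then a duplicate goes to tape for a year. It assumes each copy's retention is counted from the backup time; the class, storage names and retention values are invented for the example and do not reproduce NetBackup's SLP configuration format.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class SlpOperation:
    operation: str        # "Backup" for the first copy, "Duplication" for later ones
    storage: str          # storage unit or tape pool name (hypothetical)
    retention: timedelta  # how long this copy is kept


def copy_expirations(slp: list[SlpOperation], backup_time: datetime) -> dict[str, datetime]:
    """Expiry time of each copy the SLP creates, counted from the backup time."""
    return {op.storage: backup_time + op.retention for op in slp}


# Hypothetical SLP for a weekly full schedule: two weeks on disk, a year on tape.
weekly_slp = [
    SlpOperation("Backup", "msdp-pool", timedelta(weeks=2)),
    SlpOperation("Duplication", "tape-offsite", timedelta(days=365)),
]
expirations = copy_expirations(weekly_slp, datetime(2015, 6, 1, 22, 0))
for storage, expires in expirations.items():
    print(storage, "expires", expires)
```

A daily schedule in the same policy could point to a different SLP, for example one with a single short-lived disk copy.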

Schedules are defined on the separate Schedules tab and let you set, within one policy, the order in which jobs are launched according to the organization's overall backup policy. Usually, separate schedules are created for yearly, monthly, weekly and daily jobs, as well as for jobs run many times a day (for example, database transaction log backups).

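A special feature of database backups is that each type of backup requires a pair of schedules:

- a regular one (Full, Differential, etc.), which is in fact the trigger that launches the backup script;

- an application backup schedule, which receives the data from the client-side backup tools started by the script. For database backups, the SLP is specified in this schedule.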

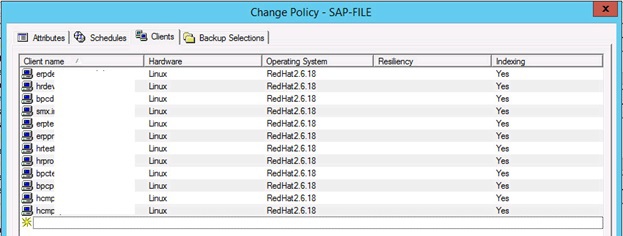

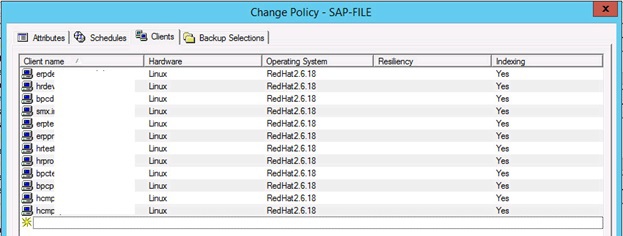

The Clients tab lists the clients backed up by this policy. There can be many clients, but the same rules apply to all of them.

The same applies to the list of resources. On each listed client, NetBackup tries to find every resource listed on the Backup Selections tab, and each resource it finds is backed up according to the policy.
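The behavior just described, one selection list applied to every client with each client contributing only the resources it actually has, can be modeled as a simple filter. This is a hypothetical sketch with invented paths and client names; it does not model how NetBackup reports a listed path that is missing on a client.

```python
from __future__ import annotations


def resolve_selections(selections: list[str], client_paths: dict[str, set[str]]) -> dict[str, list[str]]:
    """For each client, keep only the listed resources that exist on that client."""
    return {
        client: [path for path in selections if path in existing]
        for client, existing in client_paths.items()
    }


# Hypothetical policy selections and per-client file system contents.
policy_selections = ["/etc", "/home", "/var/lib/pgsql"]
clients = {
    "app01": {"/etc", "/home", "/opt"},
    "db01": {"/etc", "/home", "/var/lib/pgsql"},
}
for client, paths in resolve_selections(policy_selections, clients).items():
    print(client, paths)
```

Note that /opt on app01 is not backed up: only resources named in the policy are considered.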

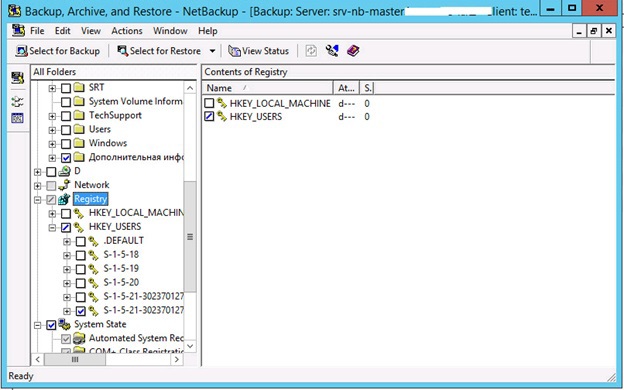

Data recovery from backup

In most cases, data is restored with the Backup, Archive and Restore program included in the NetBackup installation. It lets you specify the date to restore from, the list of resources to restore, the destination (you can redirect the data to a different path or to another server), and how to handle exceptional situations (for example, whether to overwrite files that already exist at the restore location).

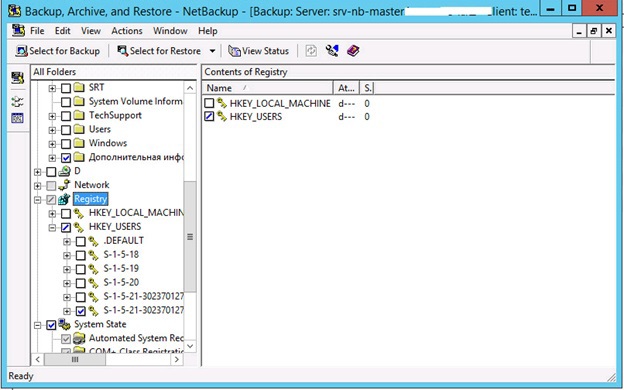

For Windows, the restore tree lets you select files, directories, the system state as a whole, registry keys, etc.
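Conceptually, restoring “as of a date” means finding the backup images that together reconstruct that point in time. Below is a hypothetical sketch of that selection, assuming a chain of one full backup followed by differential incrementals (with cumulative incrementals only the last one would be needed). It illustrates the logic only and is not how NetBackup's catalog is implemented; the dates are invented.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime


@dataclass
class Image:
    taken: datetime
    kind: str  # "Full" or "Differential" (differential incremental)


def images_for_restore(images: list[Image], point_in_time: datetime) -> list[Image]:
    """Last full backup before the restore point plus every later differential."""
    usable = sorted((i for i in images if i.taken <= point_in_time), key=lambda i: i.taken)
    fulls = [i for i in usable if i.kind == "Full"]
    if not fulls:
        raise ValueError("no full backup exists before the requested point in time")
    base = fulls[-1]
    return [base] + [i for i in usable if i.kind == "Differential" and i.taken > base.taken]


catalog = [
    Image(datetime(2015, 6, 7, 22), "Full"),
    Image(datetime(2015, 6, 8, 22), "Differential"),
    Image(datetime(2015, 6, 9, 22), "Differential"),
    Image(datetime(2015, 6, 14, 22), "Full"),
]
chain = images_for_restore(catalog, datetime(2015, 6, 10, 9))
print([(i.kind, i.taken.day) for i in chain])  # [('Full', 7), ('Differential', 8), ('Differential', 9)]
```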

NetBackup offers even more ways to restore data than to back it up, and this article cannot cover them in detail. All of them are thoroughly documented in Symantec's official guides, and it is always worth consulting them when working with NetBackup, since it is hard for one person to master the product's full potential. But we can say with confidence that if the backup system has been designed and configured correctly, restoring data after real damage or loss becomes a routine technical task.

Author: Andrey Khlebnikov, Softline

Source: https://habr.com/ru/post/261355/
