Software-defined storage

This article was prepared by Artem Demarin, an expert at the Storage Systems Department of Jet Data Systems.

Today, many prefer VoD (Video on Demand) services instead of going to the movies. Or, instead of visiting a cafe, they order food at home. Consumption of resources in the form of services is already something mundane and self-evident.

Gradually, this approach began to be applied to IT infrastructure / IT services. Creation of resources on demand was made possible through the active use of server virtualization systems, which allowed us to abstract from fixed configurations. At the initial stage, this made it possible to consider the IT infrastructure and IT services as services, but still did not provide the necessary flexibility and dynamism to satisfy user requests. To solve this problem, a software-defined data center concept (Software Defined Datacenter, SDDC) was developed, which provides for the abstraction from the hardware of absolutely all data center components.

This approach did not arise yesterday, but several factors make it difficult to implement. The most important is the storage infrastructure. Unlike applications, servers and networks, storage resources, and with them data of productive systems, often turn out to be tied to the unique equipment of various manufacturers.

')

Historically, each vendor created his product based on the available hardware platforms and operating systems, or developed a completely new complex with its own software interfaces (API) and a unique set of functions. As a result, among the platforms and products reigns such diversity that even the arrays of the same manufacturer may be incompatible with each other (there is no possibility for full or partial interaction, for example, for replication).

A significant increase in the computing power of x86 servers, and the active use of cloud technologies became the basis for the development of software-oriented storage (Software-Defined Storage, SDS). The functions of storing and managing data in these solutions are answered by software based on standard components of computing environments (servers, expansion disk shelves, switches, controllers, etc.). Their main feature is the absence of specialized hardware that provides for the implementation of individual functions. Today, there are several classes of software-oriented storage systems:

This classification is very conditional - often products implement functions belonging to several classes.

The concept of SDDC implies simplifying the IT infrastructure by using more homogeneous computing components and assigning them roles at the software level. In this case, both the allocation of components for a role and the combination of several roles on one set of equipment are allowed.

Fig. 1. High-level comparison of traditional and software-oriented storage architectures

In the past 7–10 years, manufacturers of classic arrays have begun to add SDS solutions to their portfolios. For the most part, they repeat classic storage systems: they provide access to data using standard protocols (FC, iSCSI, IB, FCoE, CIFS, NFS) and similar functionality (dynamic resource management, replication, snapshots, clones, etc.).

Obviously, SDS systems that repeat the architecture of classical arrays have the same technical limitations: a fixed number of controllers (nodes), interface ports for interaction, and drives (hard drives, SSD). However, the transition to the concept of software definition made it possible to use technologies that were previously available only in complex Hi-End (for example, virtualization of external disk resources). Due to the inclusion of SDS products in the stack of basic and mid-level solutions, vendors have significantly increased functionality in these segments.

The most popular today are SDS solutions with the object-based principle of data storage. Their main consumers are large and medium-sized cloud storage service providers: Dropbox, Apple, Amazon, Google, etc. In the case of private clouds, the object approach ensures the unification of computing components and the dynamic expansion of the infrastructure by adding universal new nodes (servers) that can provide service storage simultaneously with other roles.

The object principle provides for the storage of multiple copies of the parts of the data set - the so-called objects (the classical solutions are responsible for ensuring that the entire data set is stored within one resource). Data security is ensured when at least one instance of the set is available. The use of object storage made it possible to back up data depending on the location of the SDS components. For example, you can duplicate objects on resources of different nodes (servers - participants of SDS), located in different racks of the data center. In this case, the loss of part of the components is acceptable, provided that one or more copies of each of the objects is saved.

Object principle gives much greater scalability and flexibility in resource management. The first is provided by the use of multiple peer nodes. As a result, this characteristic is less dependent on the limitations of the hardware components, which cannot be said about classic arrays. Resource management flexibility is achieved through the use of dynamic objects distribution and storage algorithms: you can quickly change the principles of storage depending on business requirements.

The main disadvantage of object storages is the need to have an N-fold amount of resources (where N is the number of stored copies of objects and, accordingly, the level of data protection) and to access data using object protocols (S3, Rest API). However, the cost of excess volume is reimbursed through the use of components of mass production (servers, hard drives, switching equipment), which are significantly cheaper than specialized hardware solutions produced in small batches. The problem with data access protocols is sharper: most business applications and application software currently do not have support for object protocols. In this case, the revision or replacement of the software used for an acceptable time and sane money is very often impossible. In this regard, the main SDS-products currently have a separate component (role) for the provision of resources under classical protocols (iSCSI, NFS, CIFS).

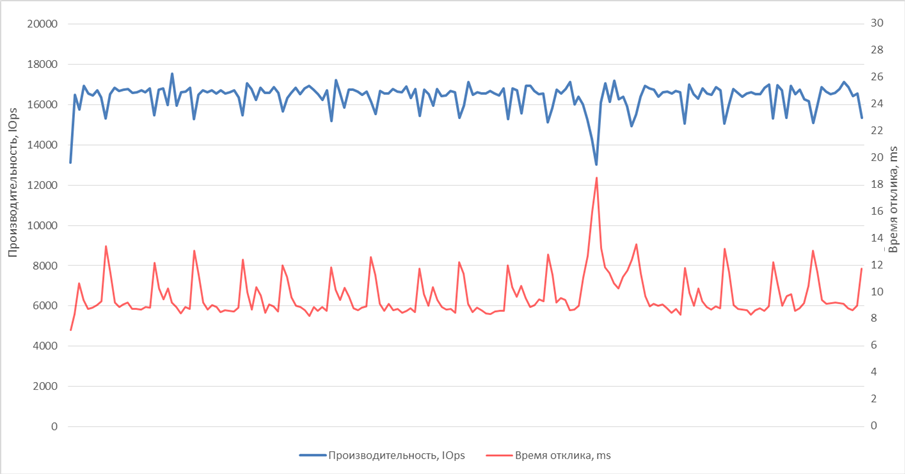

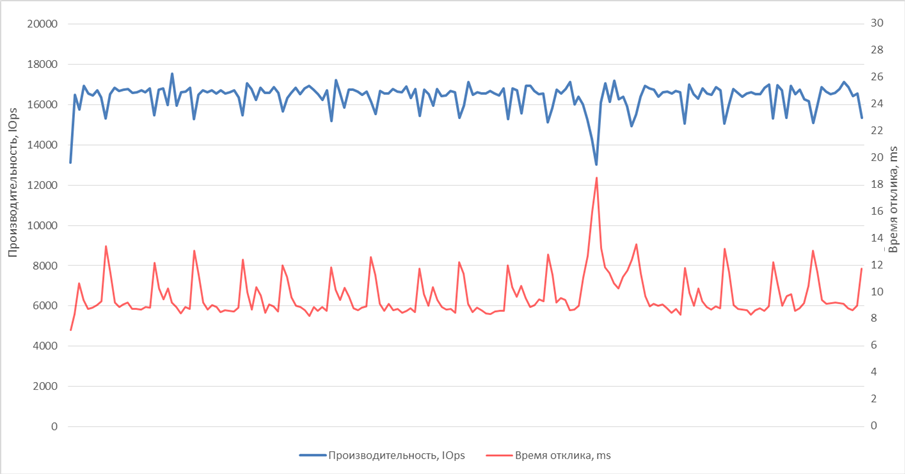

In most cases, the use of object or data storage systems based on them, in contrast to classical or similar arrays, requires more detailed study and evaluation of solution possibilities. This is due to a completely different approach to the organization of infrastructure, data backup (RC) and the complex as a whole (Disaster Recovery, DR). Mistakes made in this place can be costly. One of the possible consequences is the lack of constant performance. Depending on the data distribution and caching algorithms used, there may be a situation when not other local resources are accessed, but other nodes (members of a single data set storage group) are accessed via the internal network - Ethernet, InfiniBand, WAN. In fig. 2 shows an example of such performance fluctuations.

Fig. 2. Graph of possible multi-mode SDS performance

The red line represents the response time when performing I / O operations. Peaks occur in operations at the remote site. An increase in response time directly affects the performance of operations (more than this time, there are fewer operations that can be performed in a certain period). The example shows that the delay in the execution of remote operations is not constant and can cause a significant decrease in overall performance. The longer the execution of operations at the remote site, the greater the impact on the overall performance.

To minimize the impact of this feature of multi-node SDS on productive environments, it is necessary to carry out a preliminary in-depth study of the configuration and an assessment of the fundamental possibility of its application.

The main areas and solutions in which the active use of SDS technologies began:

Software-defined storage systems are the most flexible and dynamically developing technologies in the group of data storage solutions. The algorithms used in them make it possible to reduce the components used and the total cost of the solutions. As an example - the task of building a storage environment. The company has 3 data centers within the same city, connected by IP channels of sufficient bandwidth. Storage requirements are as follows:

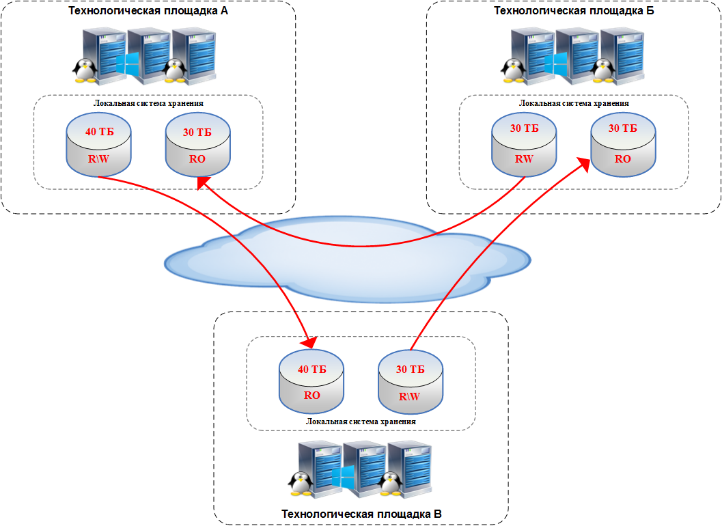

Solving this problem with the help of classic disk arrays does not fully work. Today, there are systems that provide simultaneous access (read / write) from only 2 technology sites (for example, HDS G1000 GAD) or from all data centers to a single storage located in one of the data centers and reserved in others. The conditional solution is the organization of storage resources with division into segments by sites and reservation of these segments between data centers. An example of this implementation is presented in Fig. 3

Fig. 3. A generalized solution scheme using classical arrays

The implementation of this approach requires at least 200 TB of total usable capacity for organizing storage and backup, and limits local full (read / write) access to the volume of the local segment. Access to write to remote segments should be carried out through communication channels between sites. Thus, it is required to ensure the operation of the application software with 3 independent data segments.

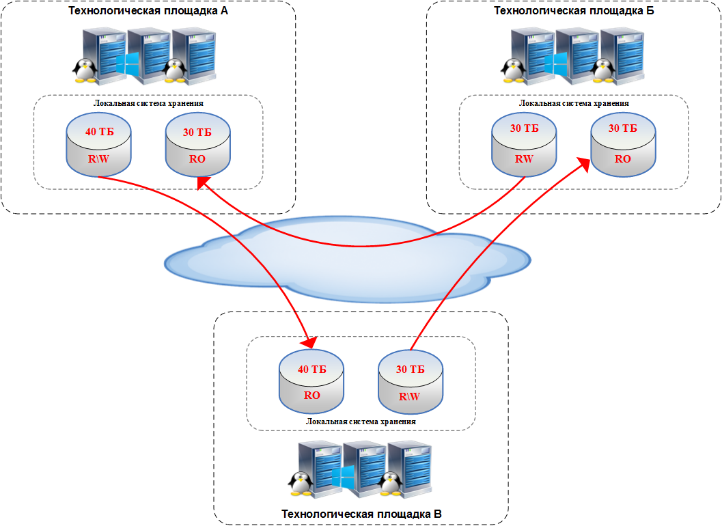

Now consider the use of SDS-systems with object access. In this case, it is possible to build a single data warehouse with the location of components (nodes) on 3 sites. Dynamic storage algorithms make it possible to ensure storage of at least 1 copy on each site, which guarantees data safety in case of loss of up to 2 of 3 data centers. This approach, as is the case with the classical array, requires storage of 3-fold volume, but the use of low-cost components reduces the hardware TCO.

Sometimes there is no possibility to place the full amount of the required equipment in each data center (due to lack of space, lack of power supply resources, cooling, etc.). Output - SDS solutions with unique (more complex) data distribution algorithms, for example, Quantum Lattus: data is stored here in conjunction with the storage of service information for their recovery. A similar solution is RAID 5 or 6 using parity data to recover the array. The main difference between Quantum Lattus and classic RAID is the ability to control the level of service information. Due to this, you can set the required level of data integrity in case of failure of a certain number of components. For example, you can set the loss of all components of one of the technological sites, in this case, the required storage volume to provide 100 TB of usable capacity is ~ 164 TB.

Fig. 4. Generalized solution design using SDS

The solution minimizes the capacity to provide data storage, but at the same time imposes more stringent requirements on availability, bandwidth of communication channels between sites and a set of RMS software and data archiving (you must have the ability to work with object storage systems).

The development and use of software-oriented storage systems should be considered as the most promising direction in the storage industry. The actual use of SDS today is limited by a number of factors, but increasing their functionality and gradually increasing the stability and predictability of component operation will allow, over time, SDS to be considered as the main storage systems for most areas.

The main limitation in using object SDS is software unavailability. In most cases, the implementation of new functions is delayed in large-scale software products, and often it is impossible architecturally.

It remains to hope that there will be no head-on collision of new software and classic products. The best option can be a smooth change of decisions, or a model in which both species will occupy their niches and will not compete strongly. We can take a place in the circle of defenders of this or that technology or watch from the side, applying all the best from both of them to our tasks.

We welcome your constructive comments.

Today, many people prefer VoD (Video on Demand) services to going to the movies, or have food delivered instead of visiting a cafe. Consuming resources as services has become mundane and self-evident.

Gradually, this approach has been applied to IT infrastructure and IT services. On-demand creation of resources became possible thanks to the widespread use of server virtualization, which let us abstract away from fixed configurations. At first, this made it possible to treat IT infrastructure and IT services as services, but it still did not provide the flexibility and dynamism needed to meet user demands. To solve this problem, the concept of the software-defined data center (SDDC) was developed. It abstracts absolutely all data center components from the hardware.

This approach is not new, but several factors make it difficult to implement. The most important is the storage infrastructure. Unlike applications, servers and networks, storage resources are often tied to unique equipment from various manufacturers, and so is the data of production systems.

Historically, each vendor built its product on the available hardware platforms and operating systems, or developed an entirely new system with its own application programming interfaces (APIs) and a unique set of functions. As a result, platforms and products are so diverse that even arrays from the same manufacturer may be incompatible with each other (full or partial interoperability, for example for replication, is impossible).

The significant growth in the computing power of x86 servers and the active use of cloud technologies became the basis for software-defined storage (SDS). In these solutions, data storage and management functions are performed by software running on standard components of computing environments (servers, expansion disk shelves, switches, controllers, etc.). Their main distinguishing feature is the absence of specialized hardware dedicated to individual functions. Today, there are several classes of software-defined storage systems:

- software and hardware solutions that repeat the architecture of classical arrays (EMC XtremIO, IBM FlashSystems, etc.);

- independent software for building solutions based on x86 servers (EMC ScaleIO, pNFS, RAIDIX, EuroNAS, Open-E, etc.);

- object data storage (Gluster, OpenStack Swift, Ceph, EMC Atmos, HDS HCP, Quantum Lattus, EMC ViPR, etc.);

- Hadoop-compatible storage (Apache Hadoop HDFS-compatible solutions);

- disk resource virtualization systems (EMC ViPR, IBM SVC, Huawei VIS, etc.).

This classification is rather arbitrary: products often implement functions belonging to several classes.

The SDDC concept implies simplifying the IT infrastructure by using more homogeneous computing components and assigning roles to them at the software level. Components may be dedicated to a single role, or several roles may be combined on one set of hardware.

Fig. 1. High-level comparison of traditional and software-oriented storage architectures

Object storage

Over the past 7–10 years, manufacturers of classic arrays have begun adding SDS solutions to their portfolios. For the most part, these solutions repeat classic storage systems. They provide access to data over standard protocols (FC, iSCSI, IB, FCoE, CIFS, NFS) and offer similar functionality (dynamic resource management, replication, snapshots, clones, etc.).

Naturally, SDS systems that repeat the architecture of classic arrays share their technical limitations: a fixed number of controllers (nodes), interface ports and drives (HDDs, SSDs). However, the move to the software-defined concept made it possible to use technologies that were previously available only in complex high-end systems (for example, virtualization of external disk resources). By adding SDS products to their entry-level and mid-range stacks, vendors have significantly expanded the functionality available in these segments.

The most popular SDS solutions today are based on the object storage principle. Their main consumers are large and medium-sized cloud storage providers: Dropbox, Apple, Amazon, Google, etc. In private clouds, the object approach unifies computing components. It also allows the infrastructure to grow dynamically by adding new universal nodes (servers) that can provide storage services alongside other roles.

The object principle stores multiple copies of parts of a data set, the so-called objects (whereas classic solutions keep the entire data set within a single resource). Data remains safe as long as at least one instance of each object is available. Object storage made it possible to protect data according to the physical location of SDS components. For example, you can replicate objects across different nodes (servers participating in the SDS) located in different racks of the data center. In this case, losing some components is acceptable, provided that at least one copy of each object survives.
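The article does not say how a given product decides where the copies go. The sketch below assumes a simple policy: each replica must land in a different rack. It ranks nodes with rendezvous (highest-random-weight) hashing and picks the top-ranked node from each distinct rack. The node and rack names and the `place_replicas` / `survives_rack_loss` helpers are hypothetical, chosen for illustration only.

```python
import hashlib


def _score(object_id: str, node: str) -> int:
    """Deterministic pseudo-random weight of a node for a given object."""
    digest = hashlib.sha256(f"{object_id}:{node}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place_replicas(object_id: str, nodes: dict[str, str], copies: int) -> list[str]:
    """Choose `copies` nodes for one object, each in a different rack.

    `nodes` maps a (hypothetical) node name to the rack that houses it.
    Nodes are ranked by rendezvous hashing; the first node seen in each
    rack wins, so no two replicas share a failure domain.
    """
    racks = set(nodes.values())
    if not 1 <= copies <= len(racks):
        raise ValueError("need 1 <= copies <= number of racks")
    chosen: list[str] = []
    used_racks: set[str] = set()
    for node in sorted(nodes, key=lambda n: _score(object_id, n), reverse=True):
        if nodes[node] not in used_racks:
            chosen.append(node)
            used_racks.add(nodes[node])
            if len(chosen) == copies:
                break
    return chosen


def survives_rack_loss(placement: list[str], nodes: dict[str, str], failed_rack: str) -> bool:
    """True if at least one replica lives outside the failed rack."""
    return any(nodes[n] != failed_rack for n in placement)


if __name__ == "__main__":
    cluster = {
        "node-1": "rack-A", "node-2": "rack-A",
        "node-3": "rack-B", "node-4": "rack-B",
        "node-5": "rack-C", "node-6": "rack-C",
    }
    for obj in ("obj-1", "obj-2", "obj-3"):
        placement = place_replicas(obj, cluster, copies=2)
        ok = all(survives_rack_loss(placement, cluster, r) for r in ("rack-A", "rack-B", "rack-C"))
        print(obj, placement, "survives any single rack loss:", ok)
```

Because two copies never share a rack, losing any single rack leaves at least one copy of every object. This matches the condition described in the paragraph above.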

The object principle offers much greater scalability and flexibility in resource management. Scalability comes from using many peer nodes, so it depends far less on the limitations of individual hardware components, which cannot be said of classic arrays. Flexibility comes from dynamic object distribution and placement algorithms: storage policies can be changed quickly to follow business requirements.
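One widely used family of such distribution algorithms is consistent hashing. The products listed above use their own variants; Ceph, for instance, uses CRUSH. The sketch below is a minimal consistent-hash ring with virtual nodes. It is not any specific product's algorithm. It illustrates why adding a peer node is cheap: only the objects that now map to the new node move, and every other object stays where it was. The class and node names are hypothetical.

```python
import bisect
import hashlib


def _hash(key: str) -> int:
    """Map a string to a point on a 64-bit ring."""
    return int.from_bytes(hashlib.md5(key.encode()).digest()[:8], "big")


class HashRing:
    """Consistent-hash ring with virtual nodes (an illustrative sketch)."""

    def __init__(self, nodes: list[str], vnodes: int = 100) -> None:
        self.vnodes = vnodes
        self._points: list[int] = []      # sorted ring positions
        self._owner: dict[int, str] = {}  # ring position -> node name
        for node in nodes:
            self.add_node(node)

    def add_node(self, node: str) -> None:
        # Each physical node owns many small arcs of the ring, which evens out load.
        for i in range(self.vnodes):
            point = _hash(f"{node}#{i}")
            self._owner[point] = node
            bisect.insort(self._points, point)

    def lookup(self, key: str) -> str:
        # The owner is the first ring position clockwise from the key's hash.
        idx = bisect.bisect_right(self._points, _hash(key)) % len(self._points)
        return self._owner[self._points[idx]]


if __name__ == "__main__":
    keys = [f"object-{i}" for i in range(10_000)]
    ring = HashRing(["node-1", "node-2", "node-3", "node-4"])
    before = {k: ring.lookup(k) for k in keys}
    ring.add_node("node-5")
    after = {k: ring.lookup(k) for k in keys}
    moved = [k for k in keys if before[k] != after[k]]
    print(f"{len(moved) / len(keys):.1%} of objects moved, all to node-5:",
          all(after[k] == "node-5" for k in moved))
```

With five equal peers, roughly a fifth of the objects should move to the new node, instead of the whole data set being reshuffled.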

Object storage has two main drawbacks. It needs N times the raw capacity, where N is the number of stored object copies and, accordingly, the level of data protection. It also requires access to data through object protocols (S3, REST API). The cost of the extra capacity is offset by mass-produced components (servers, hard drives, switching equipment), which are significantly cheaper than specialized hardware made in small batches. The protocol problem is more acute: most business applications currently do not support object protocols, and reworking or replacing them within an acceptable time and budget is very often impossible. For this reason, the major SDS products now include a separate component (role) that provides resources over classic protocols (iSCSI, NFS, CIFS).

In most cases, object storage systems, and storage systems built on them, require a more detailed study of the solution's capabilities than classic arrays or similar systems do. This is because they take a completely different approach to organizing the infrastructure, data backup and the system as a whole (Disaster Recovery, DR). Mistakes made at this stage can be costly. One possible consequence is inconsistent performance. Depending on the data distribution and caching algorithms used, a request may be served not by local resources but by other nodes (members of a single storage group for the data set) over the interconnect: Ethernet, InfiniBand or WAN. Fig. 2 shows an example of such performance fluctuations.

Fig. 2. Possible performance behavior of a multi-node SDS

The red line shows the response time of I/O operations. The peaks correspond to operations served at the remote site. Higher response time directly reduces throughput: the longer each operation takes, the fewer operations can complete in a given period. The example shows that the latency of remote operations is not constant and can significantly reduce overall performance. The longer operations take at the remote site, the greater the impact on overall performance.
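The link between response time and throughput can be made concrete with Little's law. It says that the number of outstanding requests equals throughput multiplied by mean response time. The sketch below assumes a fixed number of outstanding I/Os and a mix of local and remote operations. The latency figures (0.5 ms local, 5 ms remote) and queue depth of 8 are hypothetical, not taken from Fig. 2.

```python
def mean_latency_ms(remote_fraction: float, local_ms: float, remote_ms: float) -> float:
    """Average response time when a fraction of I/Os is served by a remote node."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be in [0, 1]")
    return (1.0 - remote_fraction) * local_ms + remote_fraction * remote_ms


def iops(queue_depth: int, remote_fraction: float, local_ms: float, remote_ms: float) -> float:
    """Little's law: throughput = outstanding requests / mean response time."""
    latency_s = mean_latency_ms(remote_fraction, local_ms, remote_ms) / 1000.0
    return queue_depth / latency_s


if __name__ == "__main__":
    # Hypothetical figures: 0.5 ms local, 5 ms remote, 8 outstanding I/Os.
    for frac in (0.0, 0.1, 0.2, 0.5):
        print(f"remote share {frac:>4.0%}: "
              f"{mean_latency_ms(frac, 0.5, 5.0):.2f} ms, "
              f"{iops(8, frac, 0.5, 5.0):,.0f} IOPS")
```

Under these assumptions, sending even a fifth of the I/Os to a remote node nearly triples the mean latency (0.5 ms to 1.4 ms). At a fixed queue depth, throughput drops by the same factor.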

To minimize the impact of this multi-node SDS behavior on production environments, study the configuration in depth beforehand and assess whether it is fundamentally suitable for the intended use.

The main areas and solutions where SDS technologies first came into active use:

- test and development environments;

- storage of backup and archive data;

- public and corporate cloud storage systems (Dropbox, etc.);

- public and corporate data hosting;

- general purpose file storage (file shares);

- unstructured data processing systems (social networks, statistical data processing, etc.);

- virtual machine storage;

- BLOB storage for secondary data of information systems.

Software-defined storage systems are the most flexible and fastest-evolving technologies among data storage solutions. Their algorithms make it possible to reduce the number of components and the total cost of a solution. Consider, as an example, the task of building a storage environment. A company has 3 data centers within the same city, connected by IP links of sufficient bandwidth. The storage requirements are as follows:

- total data: 100 TB;

- simultaneous read/write access from all 3 data centers;

- fault tolerance up to the loss of one of the data centers.

Classic disk arrays cannot fully solve this problem. Some existing systems provide simultaneous read/write access from only 2 sites (for example, HDS G1000 GAD). Others give all data centers access to a single storage system located in one data center and replicated to the others. A workaround is to divide storage resources into per-site segments and replicate these segments between data centers. An example of this implementation is shown in Fig. 3.

Fig. 3. A generalized solution scheme using classical arrays

This approach requires at least 200 TB of total usable capacity for storage and replication, and it limits full local (read/write) access to the volume of the local segment. Writes to remote segments must go through the inter-site links. As a result, the application software has to work with 3 independent data segments.
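The 200 TB figure follows from a simple layout. The sketch below assumes the 100 TB is split evenly into three per-site segments, and that each segment has exactly one replica at a neighboring site. This replica ring (DC1 to DC2 to DC3 to DC1) is a hypothetical choice; Fig. 3 may pair sites differently. Every byte is stored twice, and losing any single site still leaves a copy of every segment.

```python
USABLE_TB = 100.0
SITES = ["DC1", "DC2", "DC3"]


def build_segments(usable_tb: float, sites: list[str]) -> dict[str, dict]:
    """Split data evenly into per-site segments.

    Assumption: each segment has its primary copy at its home site and a
    single replica at the next site in a simple ring (DC1->DC2->DC3->DC1).
    """
    size = usable_tb / len(sites)
    return {
        site: {"size_tb": size, "copies_at": {site, sites[(i + 1) % len(sites)]}}
        for i, site in enumerate(sites)
    }


def total_capacity_tb(segments: dict[str, dict]) -> float:
    """Capacity to provision: every copy of every segment needs its own space."""
    return sum(seg["size_tb"] * len(seg["copies_at"]) for seg in segments.values())


def survives_site_loss(segments: dict[str, dict], lost: set[str]) -> bool:
    """Data survives if every segment still has a copy outside the lost sites."""
    return all(seg["copies_at"] - lost for seg in segments.values())


if __name__ == "__main__":
    segs = build_segments(USABLE_TB, SITES)
    print(f"provisioned: {total_capacity_tb(segs):.0f} TB")
    print(f"locally writable per site: {segs['DC1']['size_tb']:.1f} TB")
    for site in SITES:
        print(f"lose {site}: data intact = {survives_site_loss(segs, {site})}")
```

The same model also shows the limitation mentioned above: each site can write locally only to its own segment, about a third of the data.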

Now consider SDS systems with object access. In this case, it is possible to build a single data store with components (nodes) located at all 3 sites. Dynamic placement algorithms can ensure that at least 1 copy is stored at each site. This guarantees data safety even if up to 2 of the 3 data centers are lost. Like the classic array solution, this approach stores the data several times over (3-fold here, i.e. 300 TB of raw capacity for 100 TB of data). However, the use of low-cost components reduces hardware TCO.
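The trade-off in this paragraph can be checked by enumerating every combination of failed sites. The sketch assumes a placement policy of one full copy of each object per site. Raw capacity is N times the usable capacity, and data is lost only when all three sites fail. The helper names are hypothetical.

```python
from itertools import combinations

SITES = ("DC1", "DC2", "DC3")


def replicated_capacity_tb(usable_tb: float, copies: int) -> float:
    """N-fold replication: raw capacity = usable capacity x number of copies."""
    return usable_tb * copies


def data_survives(copy_sites: set[str], lost_sites: set[str]) -> bool:
    """An object is readable while at least one of its copies sits at a surviving site."""
    return bool(copy_sites - lost_sites)


if __name__ == "__main__":
    placement = set(SITES)  # policy: one full copy per site
    print(f"raw capacity: {replicated_capacity_tb(100, len(placement)):.0f} TB")
    for k in range(len(SITES) + 1):
        for lost in combinations(SITES, k):
            print(f"lost {sorted(lost) or 'nothing'}: {data_survives(placement, set(lost))}")
```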

Sometimes it is impossible to place the full amount of required equipment in each data center (due to lack of space, power, cooling, etc.). The way out is SDS solutions with more sophisticated data distribution algorithms, for example Quantum Lattus. It stores data together with service information used to recover it. RAID 5 and RAID 6 work in a similar way, using parity data to rebuild the array. The main difference between Quantum Lattus and classic RAID is that the amount of service information can be controlled. This lets you set the required level of data integrity in case a given number of components fail. For example, you can require the system to survive the loss of all components at one of the sites. In this case, about 164 TB of raw capacity is needed to provide 100 TB of usable capacity.
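The article does not give the fragment layout Quantum Lattus uses to reach ~164 TB. The sketch below is generic erasure-coding arithmetic under stated assumptions. Each object is split into k data fragments plus m parity fragments, so raw capacity is usable × (k + m) / k. The fragments are spread as evenly as possible over 3 sites, and a site may be lost only if it holds no more than m fragments. The 18-fragment policies below, including 11+7, are hypothetical. The 11+7 policy happens to land at the quoted figure.

```python
def raw_capacity_tb(usable_tb: float, data_frags: int, parity_frags: int) -> float:
    """Erasure coding: each object becomes k data + m parity fragments,
    so raw capacity = usable x (k + m) / k."""
    return usable_tb * (data_frags + parity_frags) / data_frags


def tolerates_site_loss(data_frags: int, parity_frags: int, sites: int) -> bool:
    """Assuming fragments are spread as evenly as possible over the sites,
    losing the fullest site must not remove more fragments than there are parity fragments."""
    total = data_frags + parity_frags
    worst_site = -(-total // sites)  # ceiling division
    return parity_frags >= worst_site


if __name__ == "__main__":
    # Hypothetical policies, each with 18 fragments spread 6 per site.
    for k, m in ((13, 5), (12, 6), (11, 7)):
        print(f"{k}+{m}: {raw_capacity_tb(100, k, m):.1f} TB raw, "
              f"survives loss of one site: {tolerates_site_loss(k, m, 3)}")
```

Under these assumptions, 12+6 (150 TB) is the minimum that survives a site loss. An 11+7 policy costs about 163.6 TB and leaves one extra fragment of margin. Either is well below the 300 TB needed for one full copy per site.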

Fig. 4. Generalized solution design using SDS

This solution minimizes the capacity needed for data storage. At the same time, it imposes stricter requirements on availability, on the bandwidth of inter-site links, and on the backup and data archiving software (which must be able to work with object storage systems).

The development and use of software-defined storage systems should be considered the most promising direction in the storage industry. Today, the practical use of SDS is limited by a number of factors. Over time, growing functionality and steadily improving stability and predictability will allow SDS to become the main type of storage in most areas.

The main limitation on using object SDS is the lack of application support. Large-scale software products usually adopt new functions with a delay, and their architecture often makes adoption impossible.

We can only hope that there will be no head-on collision between new software-defined products and classic ones. The best outcome may be a gradual replacement of one by the other, or a model in which both occupy their own niches and do not compete strongly. We can join the defenders of one technology or the other, or watch from the sidelines and apply the best of both to our tasks.

We welcome your constructive comments.

Source: https://habr.com/ru/post/261223/
