Organizing a Clean Shutdown of Go Applications

Hello, this post discusses how to organize a “clean” exit for applications written in Go.

By a clean exit I mean a guarantee that when the process terminates (by a signal or for any reason other than a system failure), certain procedures will be run and the exit will be delayed until they complete. Below I give a few typical examples, describe the standard approach, and demonstrate my package, which simplifies applying this approach in your programs and services.

TL;DR: github.com/xlab/closer

1. Introduction

You have surely noticed at least once how some server or utility catches your Ctrl+C and, apologizing profusely, asks you to wait while it finishes business that cannot be postponed. Well-written programs finish their work and exit, while badly written ones deadlock and give up only at the sight of SIGKILL. More precisely, the program never gets a chance to learn about SIGKILL; the process is described in detail in “SIGTERM vs. SIGKILL” and “Unix Signal”.

After I switched to Go as my main development language and had used it for a long time to write various services, it became clear to me that signal handling has to be added to literally every service. This is mainly because concurrency in Go is a language primitive. Within one process, the following may be running concurrently, for example:

- Database client connection pool;
- Consumer for a pub/sub queue;
- Publisher for the pub/sub queue;
- N worker goroutines;
- In-memory cache;
- Open log files.

There is nothing supernatural here (sorry if that offends anyone); in practice these are just a few entities that do their work in the background (goroutines) and communicate with each other through channels (typed queues). This is an ordinary service in a microservice architecture.
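To make the idea of background entities talking over channels concrete, here is a minimal sketch. The function name sumOfSquares and the squaring workload are made up for illustration; they are not part of the service described in this post.

```go
package main

import (
	"fmt"
	"sync"
)

// sumOfSquares is a hypothetical example: it starts a few worker goroutines
// that read numbers from one channel, square them, and send the results to
// another channel. The caller sums up everything the workers produce.
func sumOfSquares(inputs []int, workers int) int {
	jobs := make(chan int)
	results := make(chan int)

	var wg sync.WaitGroup
	for i := 0; i < workers; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for n := range jobs { // ends when jobs is closed
				results <- n * n
			}
		}()
	}

	// Producer: feeds the jobs channel and closes it when done.
	go func() {
		for _, n := range inputs {
			jobs <- n
		}
		close(jobs)
	}()

	// Close results once every worker has returned.
	go func() {
		wg.Wait()
		close(results)
	}()

	sum := 0
	for r := range results {
		sum += r
	}
	return sum
}

func main() {
	fmt.Println(sumOfSquares([]int{1, 2, 3, 4}, 3)) // prints 30
}
```

Even in this toy version, the result is only correct because every channel is closed and every goroutine is waited for, which is exactly the concern of the rest of this post.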

Startup is extremely simple: first we start the database client pool; if it fails to start, we exit with an error. Then we initialize the in-memory cache. Then we start the publisher; if it fails to start, we exit with an error. Then we open files, for example the logs. Then we start the workers, which will consume data through the consumer, write to the database, keep something in the cache, and hand the results to the publisher. Oh yes, processing events will also be written to the logs, not necessarily from the same goroutines. And finally, we activate all of this by opening the consumer's data stream; if it does not open, we exit.
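The startup sequence above could be sketched roughly as follows. The step type, the startAll helper, and the errBroker error are hypothetical names invented for this illustration; real components would take the place of the stub functions.

```go
package main

import (
	"errors"
	"fmt"
)

// errBroker is a made-up failure used to simulate a publisher that cannot start.
var errBroker = errors.New("broker unreachable")

// step is one named initialization stage (hypothetical; it stands in for
// starting the DB pool, the cache, the publisher, and so on).
type step struct {
	name  string
	start func() error
}

// startAll runs the steps in order and stops at the first failure.
// It returns the names of the steps that started successfully.
func startAll(steps []step) ([]string, error) {
	var started []string
	for _, s := range steps {
		if err := s.start(); err != nil {
			return started, fmt.Errorf("start %s: %w", s.name, err)
		}
		started = append(started, s.name)
	}
	return started, nil
}

func main() {
	ok := func() error { return nil }
	steps := []step{
		{"db pool", ok},
		{"cache", ok},
		{"publisher", func() error { return errBroker }},
		{"workers", ok},
	}
	started, err := startAll(steps)
	// A real service would log the error and exit with a non-zero code here.
	fmt.Println("started:", started, "error:", err)
}
```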

Initialization happens sequentially, in a single goroutine. If one stage fails, there is no need to roll back the stages already completed, since the system stays in its zero state the whole time until we open the data stream. So we have opened the data stream, and five minutes later we urgently need to exit, finishing everything up nicely and cleanly.

Why? Because not all results in the buffered channel may have been received by the goroutine that writes to the database, and even those that were read from the channel may not have reached the database over the network yet. Not all objects may have been published to the pub/sub queue. Not all workers may have submitted their results to the appropriate channels. Consumption of the queue by the workers may also be buffered, which means that a small number of objects may have been read from the pub/sub server but not yet processed by the workers. The in-memory cache, for example, must be dumped to disk when the program exits, and all log buffers must be flushed to their files. All of this is listed here to show that any simple service with several background tasks inevitably needs a reliable way to track the application's exit. And not for the sake of a pretty “Bye bye...” message in the console, but as a vital synchronization mechanism for a concurrent machine.
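As a small illustration of the first point, here is one way to make sure a buffered channel is fully drained before exiting. This is only a sketch: the store type stands in for a real database, and the names runWriter, shutdown, and process are invented for this example.

```go
package main

import (
	"fmt"
	"sync"
)

// store is a stand-in for the database: it just records what it was given.
type store struct {
	mu    sync.Mutex
	saved []string
}

func (s *store) save(v string) {
	s.mu.Lock()
	defer s.mu.Unlock()
	s.saved = append(s.saved, v)
}

// runWriter drains results into the store until the channel is closed.
func runWriter(results <-chan string, db *store, wg *sync.WaitGroup) {
	defer wg.Done()
	for r := range results {
		db.save(r)
	}
}

// shutdown closes the channel and blocks until the writer has drained it.
func shutdown(results chan string, wg *sync.WaitGroup) {
	close(results)
	wg.Wait()
}

// process pushes items through a buffered channel, shuts down cleanly,
// and returns how many items reached the store.
func process(items []string) int {
	results := make(chan string, 8)
	db := &store{}

	var wg sync.WaitGroup
	wg.Add(1)
	go runWriter(results, db, &wg)

	for _, it := range items {
		results <- it
	}
	shutdown(results, &wg)
	return len(db.saved)
}

func main() {
	fmt.Println(process([]string{"a", "b", "c"})) // prints 3
}
```

Without the wg.Wait() call in shutdown, the program could exit while some items are still sitting in the channel buffer.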

2. A bit of practice

Go has a good tool, defer: applied to a function call, this statement adds the call to a special list. Calls from this list are executed in reverse order just before the current function returns. This mechanism often simplifies working with mutexes and other resources that must be released on return. defer works even when a panic (an exception) occurs; that is, code in a deferred function is guaranteed to run, and the panic itself can be caught and handled there.

    func Checked() {
        defer func() {
            // catch a panic, if there was one
            if x := recover(); x != nil {
                // handle the recovered value here
            }
        }()
        // code that may panic goes here
    }

But there is one harmful antipattern: for some reason, defer is often used in the main function. For example:

    func main() {
        defer doCleanup()
        // the main work of the program
        fmt.Println("10 seconds to go...")
        time.Sleep(10 * time.Second)
    }

The code works fine on a normal return and even on a panic, but people forget that defer will not run if the process receives a termination signal (an exit syscall is performed; from the Go documentation: "The program terminates immediately; deferred functions are not run."). To handle this situation correctly, the signals must be caught manually by “subscribing” to the desired signal types. A common practice (judging by answers on StackOverflow) is to use signal.Notify; the pattern looks like this:

    sigChan := make(chan os.Signal, 1)
    signal.Notify(sigChan, syscall.SIGHUP, syscall.SIGINT, syscall.SIGTERM, syscall.SIGQUIT)
    go func() {
        s := <-sigChan
        fmt.Println("received signal:", s)
        // run the cleanup here, then exit
    }()

To hide these implementation details, the xlab/closer package was created; it is discussed next.
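For reference, here is a self-contained sketch of the signal.Notify pattern combined with cleanup functions that run in reverse order. It uses only the standard library and is not how xlab/closer is implemented; the cleanupStack type and its bind and close methods are names made up for this illustration.

```go
package main

import (
	"fmt"
	"os"
	"os/signal"
	"sync"
	"syscall"
	"time"
)

// cleanupStack (hypothetical) collects cleanup functions and runs them
// exactly once, in reverse order of registration.
type cleanupStack struct {
	mu    sync.Mutex
	once  sync.Once
	funcs []func()
}

// bind registers a cleanup function.
func (c *cleanupStack) bind(f func()) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.funcs = append(c.funcs, f)
}

// close runs the registered functions last-in, first-out.
// Repeated or concurrent calls run them only once.
func (c *cleanupStack) close() {
	c.once.Do(func() {
		c.mu.Lock()
		funcs := c.funcs
		c.mu.Unlock()
		for i := len(funcs) - 1; i >= 0; i-- {
			funcs[i]()
		}
	})
}

func main() {
	var stack cleanupStack
	stack.bind(func() { fmt.Println("closing DB pool") })
	stack.bind(func() { fmt.Println("stopping consumer") })

	sigChan := make(chan os.Signal, 1)
	signal.Notify(sigChan, syscall.SIGHUP, syscall.SIGINT, syscall.SIGTERM, syscall.SIGQUIT)

	// Simulated work that finishes on its own after a short while.
	done := make(chan struct{})
	go func() {
		time.Sleep(200 * time.Millisecond)
		close(done)
	}()

	// Whichever happens first, the same cleanup path is taken.
	select {
	case s := <-sigChan:
		fmt.Println("received signal:", s)
	case <-done:
		fmt.Println("work finished")
	}
	stack.close() // runs "stopping consumer", then "closing DB pool"
}
```

The point of the sketch is that the signal path and the normal-completion path converge on a single cleanup call, which a defer in main alone cannot guarantee.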

3. Closer

The closer package takes on the responsibility of tracking signals, lets you bind functions, and automatically executes them in reverse order on exit. The package is thread-safe, so the user does not need to think about possible race conditions when closer.Close is called from several goroutines at the same time. The API currently consists of 5 functions: Init, Bind, Checked, Hold and Close. Init lets the user redefine the list of signals and other options; the use of the remaining functions is shown in the examples below.

Standard list of signals: syscall.SIGINT, syscall.SIGHUP, syscall.SIGTERM, syscall.SIGABRT.

Typical example

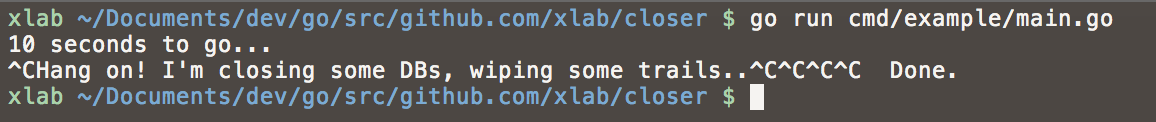

    func main() {
        closer.Bind(cleanup)

        go func() {
            // do some work in the background
            fmt.Println("10 seconds to go...")
            time.Sleep(10 * time.Second)
            // the work is done, exit
            closer.Close()
        }()

        // block until a signal arrives or closer.Close is called
        closer.Hold()
    }

    func cleanup() {
        fmt.Print("Hang on! I'm closing some DBs, wiping some trails..")
        time.Sleep(3 * time.Second)
        fmt.Println("  Done.")
    }

Error example

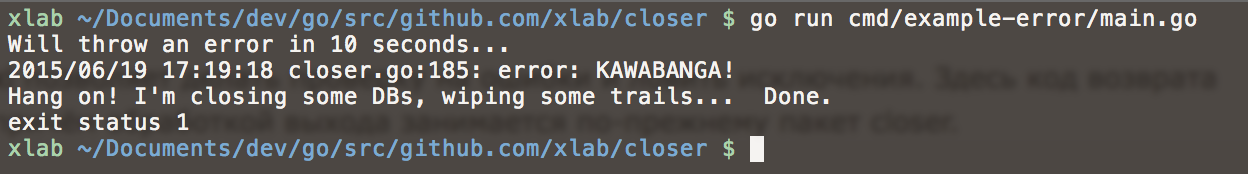

The closer.Checked function lets you check for errors and catch panics. Here the exit code will be non-zero, and the exit itself is still handled by the closer package.

    func main() {
        closer.Bind(cleanup)
        closer.Checked(run, true)
    }

    func run() error {
        fmt.Println("Will throw an error in 10 seconds...")
        time.Sleep(10 * time.Second)
        return errors.New("KAWABANGA!")
    }

    func cleanup() {
        fmt.Print("Hang on! I'm closing some DBs, wiping some trails...")
        time.Sleep(3 * time.Second)
        fmt.Println("  Done.")
    }

Panic example (exception)

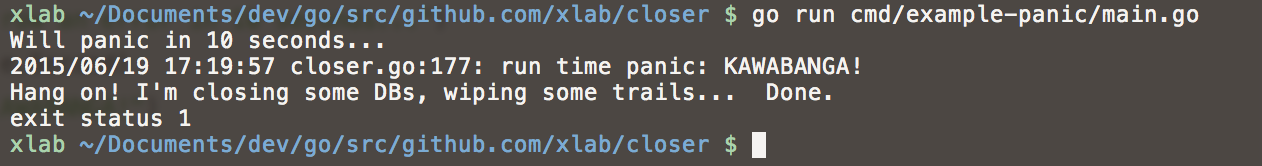

    func main() {
        closer.Bind(cleanup)
        closer.Checked(run, true)
    }

    func run() error {
        fmt.Println("Will panic in 10 seconds...")
        time.Sleep(10 * time.Second)
        panic("KAWABANGA!")
        return nil
    }

    func cleanup() {
        fmt.Print("Hang on! I'm closing some DBs, wiping some trails...")
        time.Sleep(3 * time.Second)
        fmt.Println("  Done.")
    }

Exit code table:

| Outcome | Exit code |
| ------------- | ------------- |
| error = nil | 0 |
| error != nil | 1 |
| panic | 1 |

Conclusion

Thus, regardless of the root cause of the process's termination, your Go application will go through the required “clean” exit procedure. In Go, it is customary for each entity that needs such a procedure to have a Close method that finalizes all of that entity's internal processes. This means that shutting down the service described earlier in this article comes down to calling the Close() method of every created entity, in reverse order.

First, the consumer's data stream from the pub/sub queue is closed, so the system receives no new tasks. Then the system waits until all the workers have finished. Only after that is the cache synchronized to disk, the writer channel closed, the publisher channel closed, and the log files synced and closed. Finally, the connections to the database are closed. Put into words, this sounds rather serious, but in fact it is enough to write the Close method of each entity correctly and to use closer.Bind in main during initialization. A sketch of main for clarity:

    func main() {
        defer closer.Close()

        pool, _ := xxx.NewPool()
        closer.Bind(pool.Close)

        pub, _ := yyy.NewPublisher()
        closer.Bind(func() {
            pub.Stop()
            <-pub.StopChan
        })

        wChan := make(chan string, BUFFER_SIZE)
        workers, _ := zzz.NewWorkgroup(pool, pub, wChan)
        closer.Bind(workers.Close)

        sub, _ := yyy.NewConsumer()
        closer.Bind(sub.Stop)

        // blocks here, taking the place of closer.Hold
        sub.Consume(wChan)
    }

Good luck with your synchronization!

Source: https://habr.com/ru/post/260661/
