Inside NGINX: designed for performance and scale

NGINX is rightly regarded as one of the best-performing web servers, and it owes this to its internal design. While many web and application servers rely on a simple multithreaded model, NGINX stands out with a sophisticated event-driven architecture that lets it scale easily to hundreds of thousands of concurrent connections.

The Inside NGINX infographic drills down from the high-level process architecture to illustrate how NGINX handles many connections within a single process. This article explains how it all works in more detail.

Setting the scene: the NGINX process model

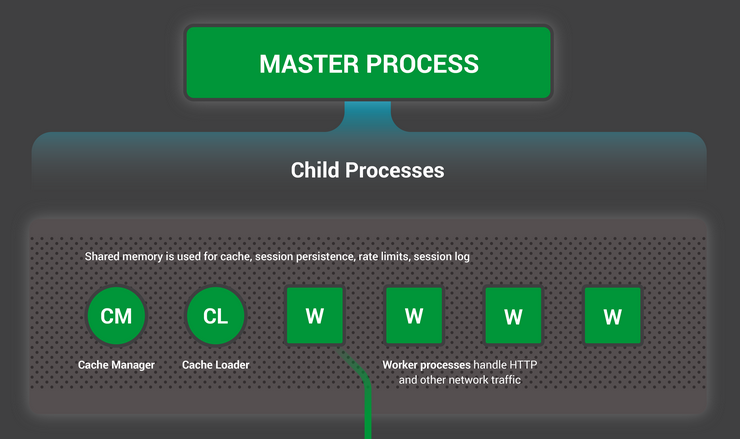

To understand this design better, you first need to understand how NGINX runs. NGINX has one master process, which runs as root and performs privileged operations such as reading the configuration and binding to ports, plus a number of worker and helper processes.

```
# service nginx restart
* Restarting nginx
# ps -ef --forest | grep nginx
root     32475     1  0 13:36 ?        00:00:00 nginx: master process /usr/sbin/nginx \
                                                -c /etc/nginx/nginx.conf
nginx    32476 32475  0 13:36 ?        00:00:00  \_ nginx: worker process
nginx    32477 32475  0 13:36 ?        00:00:00  \_ nginx: worker process
nginx    32479 32475  0 13:36 ?        00:00:00  \_ nginx: worker process
nginx    32480 32475  0 13:36 ?        00:00:00  \_ nginx: worker process
nginx    32481 32475  0 13:36 ?        00:00:00  \_ nginx: cache manager process
nginx    32482 32475  0 13:36 ?        00:00:00  \_ nginx: cache loader process
```

On this 4-core server, the NGINX master process creates 4 worker processes and a couple of cache helper processes that manage the on-disk content cache.


Why is architecture important?

The fundamental building block of any Unix application is the process or the thread. (From the Linux kernel's point of view, processes and threads are almost identical; the main difference is that threads share an address space and processes do not.) A process or thread is a self-contained set of instructions that the operating system can schedule to run on a CPU core. Most complex applications run multiple processes or threads in parallel for two reasons:

- To simultaneously use more cores;

- Processes and threads make it easier to perform parallel operations (for example, to process multiple connections at the same time).

Processes and threads consume resources. Each one uses a certain amount of memory, and they are constantly swapped on and off the CPU cores (an operation called a context switch). Modern servers can handle hundreds of active processes and threads, but performance degrades as soon as memory runs out or heavy I/O load causes too many context switches.

The most typical approach to building a network application is to allocate a separate process or thread for each connection. This architecture is easy to understand and easy to implement, but it doesn’t scale well when an application has to work with thousands of connections at the same time.

How does NGINX work?

NGINX uses a model with a fixed number of processes that most effectively utilizes the available system resources:

- A single master process performs operations that require elevated permissions, such as reading the configuration and opening ports, and then spawns a small number of child processes (the following three types).

- The cache loader runs at startup to load the disk-based cache into memory, and then exits. It is scheduled conservatively, so its resource demands stay low.

- The cache manager wakes up periodically and deletes cache objects from the hard disk in order to maintain its size within the specified limit.

- The worker processes do all the work. They handle network connections, read content from and write it to disk, and communicate with upstream (backend) servers.
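
The cache manager's job can be pictured as a size-bounded eviction pass. The sketch below is a simplified in-memory model, assuming least-recently-used eviction (the NGINX documentation for proxy_cache_path describes the cache manager removing the least recently used data once the cache exceeds max_size). The function name evict_to_limit and the (size, last_access) entry layout are hypothetical; real NGINX works on cache files on disk, not on a Python dict.

```python
from __future__ import annotations


def evict_to_limit(entries: dict[str, tuple[int, float]], max_size: int) -> list[str]:
    """Hypothetical cache-manager pass.

    entries maps a cache key to (size_in_bytes, last_access_time).
    Least recently used entries are removed until the total size fits
    within max_size. Returns the evicted keys, oldest access first.
    """
    total = sum(size for size, _ in entries.values())
    evicted: list[str] = []
    # Visit keys from least recently used to most recently used.
    for key in sorted(entries, key=lambda k: entries[k][1]):
        if total <= max_size:
            break
        total -= entries[key][0]
        evicted.append(key)
    for key in evicted:
        del entries[key]
    return evicted


cache = {"/a.css": (400, 1.0), "/b.js": (300, 3.0), "/c.png": (500, 2.0)}
print(evict_to_limit(cache, max_size=800))  # ['/a.css']
print(sorted(cache))                        # ['/b.js', '/c.png']
```

Because the pass only runs periodically, the cache can briefly exceed its limit between wake-ups; that trade-off keeps the helper process cheap.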

The NGINX documentation recommends that in most cases you run one worker process per CPU core, which makes the most efficient use of hardware resources. You can enable this with the worker_processes auto directive in the configuration file:

```nginx
worker_processes auto;
```

When NGINX is under load, it is the worker processes that are busy. Each worker handles many connections in a non-blocking fashion, which keeps the number of context switches low.

Each worker process is single-threaded and runs independently, accepting new connections and processing them. The processes communicate through shared memory for shared cache data, session data, and other shared resources.
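
To make the master/worker split concrete, here is a minimal pre-fork sketch in Python. It is POSIX-only because it relies on os.fork, and it assumes that "worker_processes auto" maps to one worker per CPU reported by os.cpu_count(). The names start_prefork, worker_loop, and stop_workers are hypothetical, and each worker here answers one connection at a time instead of running an event loop. What it does show is the structural point: the master opens the listening socket once, and every worker inherits it across fork().

```python
import os
import signal
import socket


def start_prefork(n_workers: int = 0, host: str = "127.0.0.1", port: int = 0):
    """Master: bind the listening socket once, then fork workers that inherit it.

    In this sketch n_workers=0 stands in for "worker_processes auto":
    one worker per CPU reported by os.cpu_count().
    """
    if n_workers <= 0:
        n_workers = os.cpu_count() or 1
    listener = socket.create_server((host, port))  # done once, in the master
    pids = []
    for _ in range(n_workers):
        pid = os.fork()
        if pid == 0:              # child process: become a worker
            try:
                worker_loop(listener)
            finally:
                os._exit(0)       # never fall back into the master's code
        pids.append(pid)
    return listener, pids


def worker_loop(listener: socket.socket) -> None:
    """Worker: accept connections on the inherited socket and answer them."""
    while True:
        conn, _ = listener.accept()
        with conn:
            conn.sendall(f"hello from worker {os.getpid()}\n".encode())


def stop_workers(pids: list[int]) -> None:
    """Master: terminate the workers and reap them."""
    for pid in pids:
        os.kill(pid, signal.SIGTERM)
    for pid in pids:
        os.waitpid(pid, 0)
```

Connecting to listener.getsockname() returns a line naming whichever worker the kernel handed the connection to.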

Inside the worker process

Each NGINX worker process is initialized with the NGINX configuration and is given a set of listening sockets inherited from the master process.

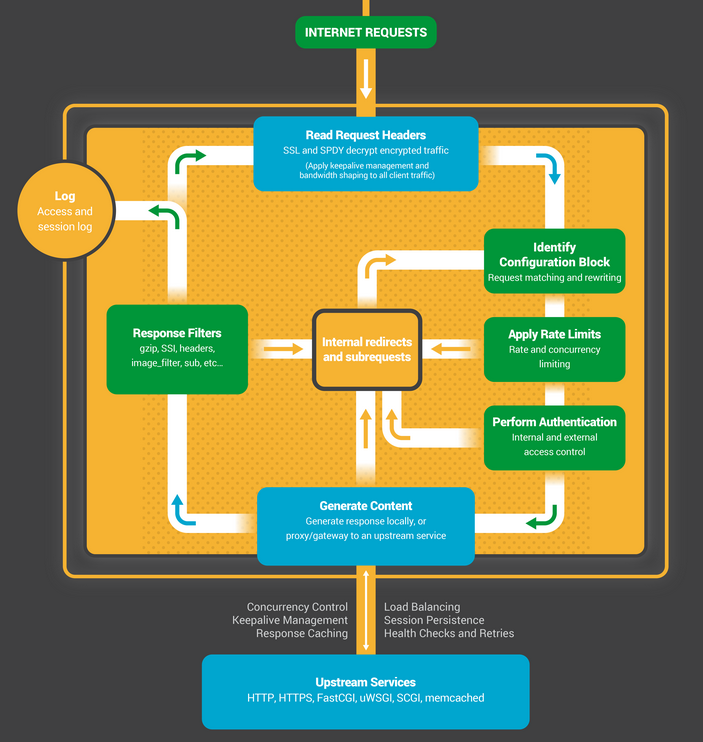

Worker processes begin by waiting for events on the listening sockets (see also accept_mutex and shared sockets). Events are triggered by new incoming connections. These connections are assigned to a state machine: the HTTP state machine is the most commonly used, but NGINX also implements state machines for stream (raw TCP) traffic and for a number of mail protocols (SMTP, IMAP, and POP3).

The state machine is essentially the set of instructions that tells NGINX how to process a request. Other web servers perform the same function; the difference lies in how it is implemented.
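
A state machine lets a single worker set a request aside whenever the next bytes have not arrived yet and resume later exactly where it stopped. The class below is a hypothetical, heavily simplified parser for just the HTTP request line (for example GET /index.html HTTP/1.1). It is not NGINX's parser, which is written in C and handles far more cases, but it illustrates the same idea of keeping the current state between calls.

```python
SP, CR, LF = 0x20, 0x0D, 0x0A  # byte values of space, carriage return, line feed


class RequestLineParser:
    """Hypothetical incremental parser for an HTTP request line."""

    def __init__(self) -> None:
        self.state = "method"  # where to resume when more bytes arrive
        self.method = bytearray()
        self.target = bytearray()
        self.version = bytearray()

    def feed(self, data: bytes) -> bool:
        """Consume the bytes received so far; return True once the line is complete.

        Simplification: bytes after the final LF (the headers) are ignored here.
        """
        for b in data:
            if self.state == "done":
                break
            if self.state == "method":
                if b == SP:
                    self.state = "target"
                else:
                    self.method.append(b)
            elif self.state == "target":
                if b == SP:
                    self.state = "version"
                else:
                    self.target.append(b)
            elif self.state == "version":
                if b == CR:
                    self.state = "expect_lf"
                else:
                    self.version.append(b)
            elif self.state == "expect_lf":
                if b != LF:
                    raise ValueError("malformed request line: CR not followed by LF")
                self.state = "done"
        return self.state == "done"


parser = RequestLineParser()
print(parser.feed(b"GET /ind"))            # False: wait for more data
print(parser.feed(b"ex.html HTTP/1.1\r"))  # False: still waiting for LF
print(parser.feed(b"\n"))                  # True
print(bytes(parser.target))                # b'/index.html'
```

Between the three feed() calls the worker is free to serve other connections; nothing blocks while the rest of the request is in flight.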

How the state machine works

Think of the state machine as the rules of chess. Each HTTP transaction is a chess game. On one side of the board sits the web server, a grandmaster who makes decisions very quickly. On the other side sits the remote client, a web browser accessing a site or application over a relatively slow network.

The rules of the game can be very complicated, though. For example, the web server may need to communicate with other parties (proxying requests to a backend) or to contact an authentication server. Third-party modules can complicate the processing even further.

Blocking state machine

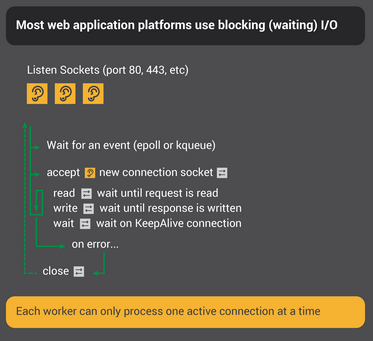

Recall our description of a process or thread as a self-contained set of instructions that the operating system can schedule to run on a CPU core. Most web servers and web applications use a process-per-connection or thread-per-connection model to play the chess game. Each process or thread contains the instructions to play one game through to the end. While it runs on the server, the process spends most of its time blocked, waiting for the client to make its next move.

- The web server process listens for new connections (new games initiated by clients) on the listening sockets.

- When it gets a new game, it plays that game, blocking after each move to wait for the client's response.

- Once the game is over, the web server process may wait to see whether the client wants to start another game (this corresponds to a long-lived keepalive connection). If the connection is closed (the client goes away or a timeout occurs), the process returns to listening for new games.

The important point is that every active HTTP connection (every game) requires a dedicated process or thread (a grandmaster). This architecture is simple and easy to extend with third-party modules (new "rules"). However, there is a huge imbalance: a fairly lightweight HTTP connection, represented by a file descriptor and a small amount of memory, is mapped to a separate process or thread, which is a rather heavy operating-system object. It is a programming convenience, but it is very wasteful.
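
For comparison, the blocking model looks like this in a minimal Python sketch built on the standard socketserver module: ThreadingTCPServer starts one thread per accepted connection, and that thread spends most of its life blocked in recv() waiting for the client's next move. The echo behavior and the start_blocking_server helper are illustrative assumptions, not code from any particular web server.

```python
import socket
import socketserver
import threading


class EchoHandler(socketserver.BaseRequestHandler):
    """One dedicated thread runs this handler for the whole life of a connection."""

    def handle(self) -> None:
        while True:
            data = self.request.recv(4096)  # blocks until the client "moves"
            if not data:                    # client closed the connection
                break
            self.request.sendall(data)


def start_blocking_server() -> socketserver.ThreadingTCPServer:
    """Start a thread-per-connection server on an ephemeral localhost port."""
    server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), EchoHandler)
    server.daemon_threads = True            # don't keep the process alive for handlers
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


server = start_blocking_server()
with socket.create_connection(server.server_address) as client:
    client.sendall(b"e2e4")
    print(client.recv(16))
server.shutdown()
server.server_close()
```

With a thousand idle keepalive clients, this design holds a thousand threads, each with its own stack, doing nothing but waiting.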

NGINX, like a real grandmaster

You have probably heard of simultaneous exhibitions, where one chess grandmaster plays dozens of opponents on many boards at once.

Kiril Georgiev played 360 games in parallel at a tournament in Bulgaria. His final result was 284 wins, 70 draws and 6 losses.

That is how an NGINX worker process plays "chess". Each worker (remember, there is usually one per CPU core) is a grandmaster that can play hundreds (in fact, hundreds of thousands) of games simultaneously.

- The worker waits for events on the listening sockets and the connection sockets.

- Events occur on the sockets, and the worker handles them:

  - An event on the listening socket means that a new client has arrived to start a game. The worker creates a new connection socket.

  - An event on a connection socket means that the client has made a move. The worker responds promptly.

A worker never blocks on network traffic waiting for its "opponent" (the client) to make the next move. As soon as it has made its own move, the worker proceeds to other boards where moves are waiting, or welcomes new players at the door.
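
The same "many boards at once" idea can be sketched with Python's standard selectors module, which wraps epoll on Linux and kqueue on BSD and macOS (NGINX uses such kernel mechanisms directly from C). This is a minimal single-threaded echo loop, not NGINX code: run_event_loop is a hypothetical name, and for brevity it writes replies with sendall() instead of waiting for write readiness, as a production loop would.

```python
import selectors
import socket
import threading


def run_event_loop(listener: socket.socket, stop: threading.Event) -> None:
    """One thread serves every connection by reacting to readiness events."""
    sel = selectors.DefaultSelector()  # epoll on Linux, kqueue on BSD/macOS
    listener.setblocking(False)
    sel.register(listener, selectors.EVENT_READ, data="listen")

    while not stop.is_set():
        # Wait (at most 0.1 s, so `stop` is noticed) for sockets that are ready.
        for key, _mask in sel.select(timeout=0.1):
            if key.data == "listen":
                # Event on the listening socket: a new player sits down.
                conn, _addr = listener.accept()
                conn.setblocking(False)
                sel.register(conn, selectors.EVENT_READ, data="conn")
                continue
            # Event on a connection socket: the client has made a move.
            conn = key.fileobj
            try:
                data = conn.recv(4096)
                if data:
                    conn.sendall(data)  # simplification: assumes the reply fits the send buffer
            except ConnectionError:
                data = b""
            if not data:                # client left: free the board
                sel.unregister(conn)
                conn.close()

    # Shutting down: close any connections that are still open.
    for key in list(sel.get_map().values()):
        if key.data == "conn":
            key.fileobj.close()
    sel.close()
```

To try it, create a listener with socket.create_server(("127.0.0.1", 0)), run the loop in a background thread, and open many client connections: a single thread answers all of them, and it only wakes up when some socket actually has work.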

Why is this faster than a blocking, multithreaded architecture?

Each new connection creates another file descriptor and consumes a small amount of additional memory in the worker process, so the per-connection overhead is very low. NGINX processes can stay pinned to specific CPU cores. Context switches are relatively rare and happen mostly when there is no work left to do.

In the blocking approach, with a separate process for each connection, a relatively large amount of additional resources is required, and context switches from one process to another occur more frequently.

Additional information on the topic can also be found in the NGINX architecture article by Andrey Alekseev, vice president of development and co-founder of NGINX, Inc.

With appropriate system tuning, NGINX can scale to hundreds of thousands of concurrent HTTP connections per worker process and can absorb traffic spikes (an influx of new players) without trouble.

Updating the configuration and upgrading the NGINX binary

Because NGINX runs only a small number of worker processes, it can update its configuration, and even its own binary, on the fly and very efficiently.

Updating the NGINX configuration is a very simple, lightweight, and reliable operation: it just means sending a SIGHUP signal to the master process.

When the master process receives SIGHUP, it does two things:

- It reloads the configuration and forks a new set of worker processes. These new workers immediately begin accepting connections and processing traffic using the new settings.

- It signals the old worker processes to exit gracefully. The old workers stop accepting new connections. As soon as each current HTTP request completes, the old worker closes that connection cleanly (no keepalive connections are left lingering). Once all of its connections are closed, the old worker exits.

This reload can cause a small spike in CPU and memory usage, but it is generally imperceptible compared with the load from active connections. You can reload the configuration several times per second (and quite a few NGINX users do exactly that). Very rarely, problems arise when many generations of NGINX worker processes are waiting for connections to close, but even those are quickly resolved.
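
The reload sequence can be modeled in a few lines. The sketch below is a toy in-process simulation, not real process management: Worker, Master, reload, and reap are hypothetical names, and "signalling" an old worker is represented by flipping a flag. It shows why several generations of workers can briefly coexist: an old worker stays alive until its last connection closes.

```python
from dataclasses import dataclass


@dataclass
class Worker:
    generation: int              # which configuration this worker was started with
    active_connections: int = 0
    accepting: bool = True       # False once told to shut down gracefully


class Master:
    """Toy model of the master's bookkeeping during a configuration reload."""

    def __init__(self, n_workers: int) -> None:
        self.n_workers = n_workers
        self.generation = 0
        self.workers = [Worker(0) for _ in range(n_workers)]

    def reload(self) -> None:
        """What the master does on SIGHUP, in this model."""
        self.generation += 1
        old = self.workers
        # 1. Start a fresh set of workers that use the new configuration.
        new = [Worker(self.generation) for _ in range(self.n_workers)]
        # 2. Tell the old workers to finish gracefully: no new connections.
        for w in old:
            w.accepting = False
        self.workers = old + new
        self.reap()

    def reap(self) -> None:
        """Old workers exit as soon as their last connection has closed."""
        self.workers = [w for w in self.workers if w.accepting or w.active_connections > 0]


master = Master(n_workers=2)
master.workers[0].active_connections = 3     # an old worker is still busy
master.reload()
print([(w.generation, w.accepting) for w in master.workers])
# [(0, False), (1, True), (1, True)]
master.workers[0].active_connections = 0     # its last request finishes
master.reap()
print([w.generation for w in master.workers])  # [1, 1]
```

Reloading repeatedly while old workers still hold long-lived connections is exactly how the "many generations" situation described above builds up.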

Upgrading the NGINX binary on the fly is the holy grail of high availability: you can upgrade the software without dropping connections, without downtime, and without any interruption in service.

The binary upgrade works much like a configuration reload. A new NGINX master process runs in parallel with the old one and inherits the listening socket descriptors from it. Both master processes are active, and their respective worker processes handle traffic. You can then tell the old master process and its workers to exit gracefully.

The whole procedure is described in detail in the documentation.
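
The key trick in a binary upgrade is that the new master never re-binds the port: it inherits the already-open listening socket, so the kernel keeps queueing connections throughout the hand-over. Below is a minimal Python sketch of that hand-over, assuming a hypothetical LISTEN_FD environment variable that tells the child which descriptor number to use. NGINX has its own mechanism for this, started by sending the USR2 signal to the old master as its documentation describes; the function name start_new_master is likewise hypothetical.

```python
import os
import socket
import subprocess
import sys

# Code run by the "new master". LISTEN_FD is a hypothetical variable name.
NEW_MASTER_CODE = r"""
import os, socket
fd = int(os.environ["LISTEN_FD"])
listener = socket.socket(fileno=fd)   # family and type are detected from the fd
conn, _ = listener.accept()           # serve one connection, then exit
with conn:
    conn.sendall(b"served by the new master\n")
"""


def start_new_master(listener: socket.socket) -> subprocess.Popen:
    """Launch a new process that inherits the open listening socket by fd number."""
    fd = listener.fileno()
    env = dict(os.environ, LISTEN_FD=str(fd))
    # pass_fds keeps this descriptor open, under the same number, in the child.
    return subprocess.Popen([sys.executable, "-c", NEW_MASTER_CODE],
                            pass_fds=(fd,), env=env)
```

While the old process still holds the same socket, a client connecting to the old address is served by the new process, and no connection is refused during the switch.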

Let's sum up

We have given a brief overview of how NGINX works. Behind these simple descriptions lies more than a decade of development and optimization, which enables NGINX to deliver outstanding performance across a wide range of hardware and real-world workloads while remaining as reliable and secure as modern web applications require.

If you want to learn more about this topic, we recommend the following:

Source: https://habr.com/ru/post/260065/
