Predicting stock price using big data and machine learning

Translator's note: On our blog we have already covered tools for building trading robots, and even analyzed the relationship between a company's stock ticker name and the performance of its shares. Today we present a translation of an interesting article whose author built a system that analyzes past changes in stock prices and tries to predict future prices using machine learning.


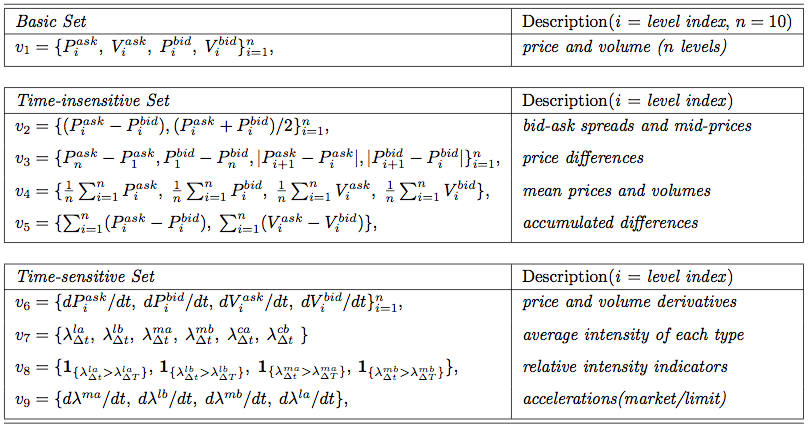

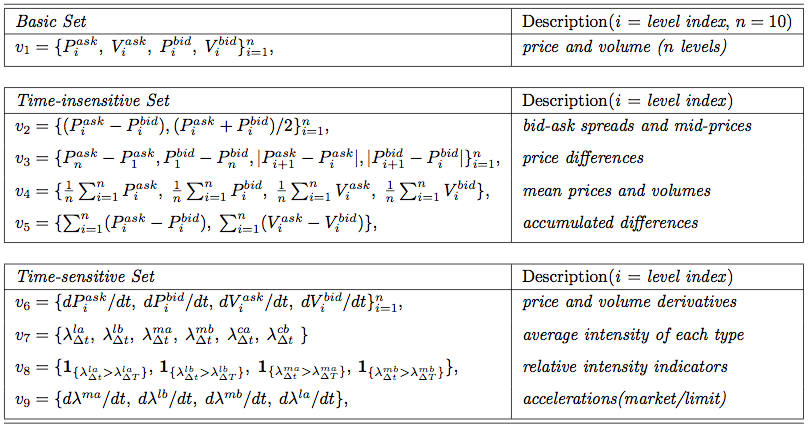


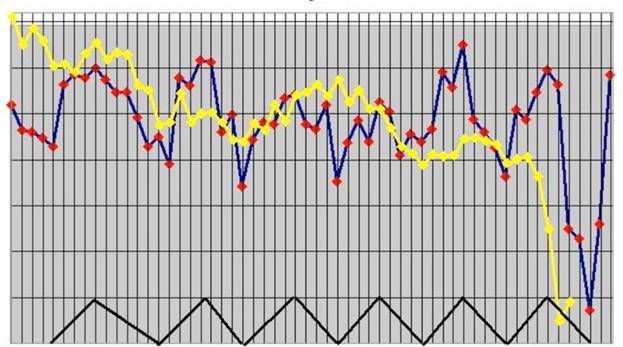

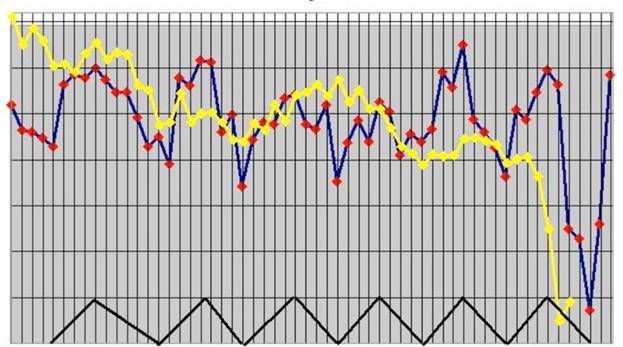


Short review

This post is based on a paper titled "Modeling high-frequency limit order book dynamics with support vector machines". Roughly speaking, I implement the ideas from that paper step by step using Spark and Spark MLlib. The authors work with sampled data, but I will use the full order book from the New York Stock Exchange (NYSE) (sample data is available on the NYSE FTP), since Spark makes this easy. Instead of support vector machines, I will use a decision tree classifier, because Spark MLlib supports multiclass classification with decision trees out of the box.

If you want to understand the problem and the proposed solution in more depth, read that paper. I will give a full overview of the problem in one or two sections, but in less academic language.

Predictive modeling is the process of choosing or creating a model that predicts a possible outcome as accurately as possible.

Model architecture

The authors propose a framework for extracting feature vectors from a raw order log. These vectors can serve as input to a classification method (for example, a support vector machine or a decision tree) that predicts the direction of the price move (up, down, or unchanged). Given a training set with assigned labels (the price move), the classification algorithm builds a model that assigns new instances to one of the predefined categories.

| Time (sec) | Price ($) | Volume | Event type | Direction |
|---|---|---|---|---|
| 34203.011926972 | 598.68 | 10 | submission | ask |
| 34203.011926973 | 594.47 | 15 | submission | bid |
| 34203.011926974 | 594.49 | 20 | submission | bid |
| 34203.011926981 | 597.68 | 30 | submission | ask |
| 34203.011926991 | 594.47 | 15 | execution | ask |
| 34203.011927072 | 597.68 | 10 | cancellation | ask |
| 34203.011927082 | 599.88 | 12 | submission | ask |
| 34203.011927097 | 598.38 | 11 | submission | ask |

Each row of the table is a trading event: the submission of an order, its cancellation, or its execution. The arrival time is measured from midnight in seconds, with nanosecond precision; the price is in US dollars and the volume is in number of shares. Ask means that I am selling and asking the specified price for my shares; Bid means that I want to buy at the specified price.

From this log it is easy to reconstruct the state of the order book after each event. You can read more about the order book and the limit order book on Investopedia; I will not go into details here. The general idea is simple.

An order book is an electronic list of buy and sell orders for a specific security or financial instrument, organized by price level.
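The reconstruction step can be sketched with the standard library alone. This is a minimal illustration, not the author's implementation: the names `Event`, `Book`, `update` and `replay` are hypothetical, prices are stored in cents, and the sketch assumes that a cancellation or execution simply reduces the resting volume on the side named in the event.

```scala
import scala.collection.immutable.TreeMap

object OrderBookSketch {
  sealed trait Side
  case object Bid extends Side
  case object Ask extends Side

  sealed trait EventType
  case object Submission extends EventType
  case object Cancellation extends EventType
  case object Execution extends EventType

  // Prices are kept in cents so that map keys are exact integers (assumption of this sketch).
  final case class Event(priceCents: Int, volume: Int, eventType: EventType, side: Side)

  // Two sorted maps: price level -> total resting volume.
  final case class Book(buy: TreeMap[Int, Int] = TreeMap.empty[Int, Int],
                        sell: TreeMap[Int, Int] = TreeMap.empty[Int, Int]) {
    def bestBid: Option[Int] = buy.lastOption.map(_._1)  // highest buy price
    def bestAsk: Option[Int] = sell.headOption.map(_._1) // lowest sell price
  }

  // Add delta to a price level and drop the level once no volume is left.
  private def adjust(levels: TreeMap[Int, Int], price: Int, delta: Int): TreeMap[Int, Int] = {
    val updated = levels.getOrElse(price, 0) + delta
    if (updated > 0) levels.updated(price, updated) else levels - price
  }

  // Assumed rule: submissions add volume, cancellations and executions remove it.
  def update(book: Book, e: Event): Book = {
    val delta = e.eventType match {
      case Submission               => e.volume
      case Cancellation | Execution => -e.volume
    }
    e.side match {
      case Bid => book.copy(buy = adjust(book.buy, e.priceCents, delta))
      case Ask => book.copy(sell = adjust(book.sell, e.priceCents, delta))
    }
  }

  // Replaying the whole log yields the book state after the last event.
  def replay(events: Seq[Event]): Book = events.foldLeft(Book())(update)
}
```

Replaying the four submissions and the cancellation from the first rows of the table (skipping the execution row, whose matching rule this sketch does not model) leaves a best bid of 594.49 and a best ask of 597.68 with 20 shares resting at that level.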

Extracting feature vectors and preparing training data

After the order books are reconstructed from the order log, we can derive attributes and form the feature vectors that will be used as input to the classification model.

Attributes fall into three categories: basic, time-insensitive, and time-sensitive. All of them can be computed directly from the data. Basic attributes are the prices and volumes on both the ask and bid sides at n = 10 different levels (the price levels of the order book at a given moment), and can be read directly from the order book. Time-insensitive attributes are easily computed at a single point in time from the basic set. They include the bid-ask spread and mid-price, price ranges, and the average price and volume across price levels, which are calculated in feature sets v2, v3 and v5 respectively. Set v5 tracks the accumulated differences in price and volume between the ask and bid sides. Then, taking data from previous periods into account, we compute the time-sensitive attributes. You can read more about the feature calculations in the original paper.
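To make the time-insensitive category concrete, here is a small sketch of a few such attributes computed from one order book snapshot. It is an illustration under assumptions, not the exact definitions from the paper: the object and function names are hypothetical, prices are in cents, and a missing side of the book yields `None`.

```scala
import scala.collection.immutable.TreeMap

object TimeInsensitiveSketch {
  // A price level is (price in cents, volume); levels are listed best-first.
  type Levels = Vector[(Int, Int)]

  def askLevels(sell: TreeMap[Int, Int], n: Int): Levels = sell.toVector.take(n)
  def bidLevels(buy: TreeMap[Int, Int], n: Int): Levels = buy.toVector.reverse.take(n)

  def bestAsk(sell: TreeMap[Int, Int]): Option[Int] = sell.headOption.map(_._1)
  def bestBid(buy: TreeMap[Int, Int]): Option[Int] = buy.lastOption.map(_._1)

  // Bid-ask spread: best ask minus best bid.
  def spread(buy: TreeMap[Int, Int], sell: TreeMap[Int, Int]): Option[Int] =
    for { a <- bestAsk(sell); b <- bestBid(buy) } yield a - b

  // Mid-price: average of best ask and best bid.
  def midPrice(buy: TreeMap[Int, Int], sell: TreeMap[Int, Int]): Option[Double] =
    for { a <- bestAsk(sell); b <- bestBid(buy) } yield (a + b) / 2.0

  private def mean(values: Seq[Double]): Option[Double] =
    if (values.isEmpty) None else Some(values.sum / values.size)

  // Average price and average volume over the given levels of one side.
  def meanPrice(levels: Levels): Option[Double] = mean(levels.map(_._1.toDouble))
  def meanVolume(levels: Levels): Option[Double] = mean(levels.map(_._2.toDouble))
}
```

Everything here depends only on a single snapshot, which is what distinguishes this category from the time-sensitive attributes that need a window of past events.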

Labeling training data

Preparing training data for machine learning requires labeling each point in time according to the price move observed over some horizon (1 second, for example). This is a simple task that needs only two order books: the current one and the one formed after that period of time.
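The pairing of a current snapshot with a future one can be sketched as follows. This is a simplified illustration with hypothetical names (`Snapshot`, `label`); it assumes snapshots are sorted by time, that the "future" book is the first snapshot at or after the horizon, and it encodes the move as +1, 0 or -1.

```scala
object LabelingSketch {
  // Hypothetical snapshot shape: time since midnight in nanoseconds and the mean price.
  final case class Snapshot(timeNanos: Long, meanPrice: Double)

  // For each snapshot, find the first snapshot at least `horizonNanos` later
  // and record the sign of the price change: +1 up, 0 unchanged, -1 down.
  // Snapshots without a late enough successor are dropped.
  // Quadratic in the number of snapshots, which is fine for a sketch.
  def label(snapshots: Vector[Snapshot], horizonNanos: Long): Vector[(Snapshot, Int)] =
    snapshots.flatMap { current =>
      snapshots
        .find(_.timeNanos >= current.timeNanos + horizonNanos)
        .map(future => current -> math.signum(future.meanPrice - current.meanPrice).toInt)
    }
}
```

With four snapshots one microsecond apart at mean prices 100.0, 100.5, 100.5 and 100.0 and a horizon of one microsecond, the first three get the labels +1, 0 and -1, and the last one is dropped because it has no future book.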

I will use the label MeanPriceMove, which can be Stationary, Up or Down (unchanged, rising, falling).

```scala
trait Label[L] extends Serializable { label =>
  def apply(current: OrderBook, future: OrderBook): Option[L]
}

sealed trait MeanPriceMove

object MeanPriceMove {
  case object Up extends MeanPriceMove
  case object Down extends MeanPriceMove
  case object Stationary extends MeanPriceMove
}

object MeanPriceMovementLabel extends Label[MeanPriceMove] {

  private[this] val basicSet = BasicSet.apply(BasicSet.Config.default)

  def apply(current: OrderBook, future: OrderBook): Option[MeanPriceMove] = {
    val currentMeanPrice = basicSet.meanPrice(current)
    val futureMeanPrice = basicSet.meanPrice(future)

    // Compare the mean price now and in the future; the result is
    // undefined if either book is missing a bid or an ask.
    val cell: Cell[MeanPriceMove] =
      currentMeanPrice.zipMap(futureMeanPrice) {
        (currentMeanValue, futureMeanValue) =>
          if (currentMeanValue == futureMeanValue)
            MeanPriceMove.Stationary
          else if (currentMeanValue > futureMeanValue)
            MeanPriceMove.Down
          else
            MeanPriceMove.Up
      }

    cell.toOption
  }
}
```

Order logs

I will use NYSE TAQ OpenBook order logs and parse them with the Scala OpenBook library. A free sample dataset covering two trading days is available for download from the NYSE FTP, so it is very easy to get.

TAQ (Trades and Quotes) historical data products provide varying levels of order book (market depth) data on a T+1 basis for the covered markets. TAQ data is used to develop and backtest trading strategies, analyze market trends on real data, and conduct market research for monitoring or audit purposes.

Preparing training data

OrderBook consists of two sorted maps, where the key is the price and the value is the number of shares.

```scala
import scala.collection.immutable.TreeMap

case class OrderBook(symbol: String,
                     buy: TreeMap[Int, Int] = TreeMap.empty,
                     sell: TreeMap[Int, Int] = TreeMap.empty)
```

Feature sets

I use the Cell type from the Framian library to represent extracted attribute values explicitly: Value, NA or NM.

As defined in the original paper, there are three feature sets. The first two are computed from OrderBook data; the last one requires OrdersTrail, which is essentially the raw order log with a sliding time window applied to it.

```scala
sealed trait BasicAttribute[T] extends Serializable { self =>
  def apply(orderBook: OrderBook): Cell[T]

  def map[T2](f: T => T2): BasicAttribute[T2] = new BasicAttribute[T2] {
    def apply(orderBook: OrderBook): Cell[T2] = self(orderBook).map(f)
  }
}

sealed trait TimeInsensitiveAttribute[T] extends Serializable { self =>
  def apply(orderBook: OrderBook): Cell[T]

  def map[T2](f: T => T2): TimeInsensitiveAttribute[T2] = new TimeInsensitiveAttribute[T2] {
    def apply(orderBook: OrderBook): Cell[T2] = self(orderBook).map(f)
  }
}

// Time-sensitive attributes are computed from a window of raw order messages.
trait TimeSensitiveAttribute[T] extends Serializable { self =>
  def apply(ordersTrail: Vector[OpenBookMsg]): Cell[T]

  def map[T2](f: T => T2): TimeSensitiveAttribute[T2] = new TimeSensitiveAttribute[T2] {
    def apply(ordersTrail: Vector[OpenBookMsg]): Cell[T2] = self(ordersTrail).map(f)
  }
}
```

And here is how the features are calculated:

```scala
class BasicSet private[attribute] (val config: BasicSet.Config) extends Serializable {

  // i-th lowest ask price (i = 1 is the best ask)
  private[attribute] def askPrice(orderBook: OrderBook)(i: Int): Cell[Int] = {
    Cell.fromOption {
      orderBook.sell.keySet.drop(i - 1).headOption
    }
  }

  // i-th highest bid price (i = 1 is the best bid)
  private[attribute] def bidPrice(orderBook: OrderBook)(i: Int): Cell[Int] = {
    Cell.fromOption {
      val bidPrices = orderBook.buy.keySet
      if (bidPrices.size >= i) {
        bidPrices.drop(bidPrices.size - i).headOption
      } else None
    }
  }

  private def attribute[T](f: OrderBook => Cell[T]): BasicAttribute[T] = new BasicAttribute[T] {
    def apply(orderBook: OrderBook): Cell[T] = f(orderBook)
  }

  def askPrice(i: Int): BasicAttribute[Int] = attribute(askPrice(_)(i))

  def bidPrice(i: Int): BasicAttribute[Int] = attribute(bidPrice(_)(i))

  // Mean of the best ask and the best bid
  val meanPrice: BasicAttribute[Double] = {
    val ask1 = askPrice(1)
    val bid1 = bidPrice(1)
    BasicAttribute.from(orderBook =>
      ask1(orderBook).zipMap(bid1(orderBook)) {
        (ask, bid) => (ask.toDouble + bid.toDouble) / 2
      })
  }
}
```

Label training data

To extract labeled data from the order log, I use LabeledPointsExtractor:

```scala
class LabeledPointsExtractor[L: LabelEncode] {

  def labeledPoints(orders: Vector[OpenBookMsg]): Vector[LabeledPoint] = {
    log.debug(s"Extract labeled points from orders log. Log size: ${orders.size}")

    // ...
  }
}
```

It can be assembled with a builder:

```scala
import scala.concurrent.duration._ // needed for 1.millisecond

val extractor = {
  import com.scalafi.dynamics.attribute.LabeledPointsExtractor._
  (LabeledPointsExtractor.newBuilder()
    += basic(_.askPrice(1))
    += basic(_.bidPrice(1))
    += basic(_.meanPrice)
    ).result(symbol, MeanPriceMovementLabel, LabeledPointsExtractor.Config(1.millisecond))
}
```

The extractor produces labeled points using MeanPriceMovementLabel with three features: the ask price, the bid price and the mean price.

Running the classification model

In the "real" application, I use 36 features from all 3 sets. The tests run on sample data downloaded from the NYSE FTP: EQY_US_NYSE_BOOK_20130403 to train the model and EQY_US_NYSE_BOOK_20130404 to validate it.

```scala
object DecisionTreeDynamics extends App with ConfiguredSparkContext with FeaturesExtractor {
  private val log = LoggerFactory.getLogger(this.getClass)

  case class Config(training: String = "",
                    validation: String = "",
                    filter: Option[String] = None,
                    symbol: Option[String] = None)

  val parser = new OptionParser[Config]("Order Book Dynamics") {
    // ....
  }

  parser.parse(args, Config()) map { implicit config =>
    val trainingFiles = openBookFiles("Training", config.training, config.filter)
    val validationFiles = openBookFiles("Validation", config.validation, config.filter)

    val trainingOrderLog = orderLog(trainingFiles)
    log.info(s"Training order log size: ${trainingOrderLog.count()}")

    // Configure DecisionTree model
    val labelEncode = implicitly[LabelEncode[MeanPriceMove]]
    val numClasses = labelEncode.numClasses
    val categoricalFeaturesInfo = Map.empty[Int, Int]
    val impurity = "gini"
    val maxDepth = 5
    val maxBins = 100

    val trainingData = trainingOrderLog.extractLabeledData(featuresExtractor(_: String))

    // Train one model per symbol
    val trainedModels = (trainingData map { case LabeledOrderLog(symbol, labeledPoints) =>
      log.info(s"$symbol: Train Decision Tree model. Training data size: ${labeledPoints.count()}")
      val model = DecisionTree.trainClassifier(labeledPoints, numClasses,
        categoricalFeaturesInfo, impurity, maxDepth, maxBins)
      val labelCounts = labeledPoints.map(_.label).countByValue().map {
        case (key, count) => (labelEncode.decode(key.toInt), count)
      }
      log.info(s"$symbol: Label counts: [${labelCounts.mkString(", ")}]")
      symbol -> model
    }).toMap

    val validationOrderLog = orderLog(validationFiles)
    log.info(s"Validation order log size: ${validationOrderLog.count()}")
    val validationData = validationOrderLog.extractLabeledData(featuresExtractor(_: String))

    // Evaluate the model on validation data. The misclassification rate is
    // logged as "Training Error", although it is measured on the validation set.
    validationData.map { case LabeledOrderLog(symbol, labeledPoints) =>
      val model = trainedModels(symbol)

      log.info(s"$symbol: Evaluate model on validation data. Validation data size: ${labeledPoints.count()}")
      log.info(s"$symbol: Learned classification tree model: $model")

      val labelAndPrediction = labeledPoints.map { point =>
        val prediction = model.predict(point.features)
        (point.label, prediction)
      }
      val trainingError = labelAndPrediction.filter(r => r._1 != r._2).count().toDouble / labeledPoints.count
      log.info(s"$symbol: Training Error = " + trainingError)
    }
  }
}
```

Training error

The results of decision tree classification for a single ticker, ORCL:

```
ORCL: Train Decision Tree model. Training data size: 64064
ORCL: Trained model in 3740 millis
ORCL: Label counts: [Stationary -> 42137, Down -> 10714, Up -> 11213]
ORCL: Evaluate model on validation data. Validation data size: 54749
ORCL: Training Error = 0.28603262160039455
```

As you can see, this fairly simple model correctly classified about 70% of the data.
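One way to put the 70% figure in context is to compare it with a trivial baseline that always predicts the most frequent label. The sketch below does that arithmetic with the label counts from the log above; the helper names are hypothetical, and because the log reports label counts only for the training day, the comparison with the validation error is indicative at best.

```scala
object BaselineSketch {
  // Share of the most frequent label: the accuracy of always predicting it.
  def majorityShare(counts: Map[String, Long]): Double =
    counts.values.max.toDouble / counts.values.sum

  // Accuracy is the complement of the misclassification rate.
  def accuracy(error: Double): Double = 1.0 - error

  // Label counts and error copied from the ORCL log output.
  val orclTrainingCounts: Map[String, Long] =
    Map("Stationary" -> 42137L, "Down" -> 10714L, "Up" -> 11213L)
  val orclError: Double = 0.28603262160039455
}
```

On the training day, Stationary accounts for roughly 66% of the labels (42137 of 64064), while the reported error corresponds to an accuracy of roughly 71% on the validation day.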

Note: Although the model performs well, this does not mean it can be used to build a profitable automated trading strategy. First, I did not test whether the result is statistically significant, that is, whether the model predicts price changes with an average accuracy of 70% at a 95% confidence level. Second, the model does not measure the magnitude of price moves, and in reality predictions must be good enough to cover transaction costs. Not to mention the other details that matter when building a real trading system.

In fact, a lot can be done to improve the system and validate the results. Unfortunately, it is hard to get enough data: two trading days are not enough to draw conclusions and start building a system that will earn all the money in the world. Still, I think this is a pretty good starting point.
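The statistical check mentioned in the note could start from something like a normal-approximation (Wald) confidence interval for the accuracy. This is only a sketch under a strong assumption: it treats the validation points as independent, which consecutive order book snapshots are not, so the real uncertainty is likely wider. The function name is hypothetical.

```scala
object AccuracyIntervalSketch {
  // Wald interval for a proportion p estimated from n observations.
  // z = 1.96 corresponds to a 95% confidence level.
  // Assumes independent observations (not true for order book snapshots).
  def waldInterval(p: Double, n: Long, z: Double = 1.96): (Double, Double) = {
    val halfWidth = z * math.sqrt(p * (1.0 - p) / n)
    (p - halfWidth, p + halfWidth)
  }
}
```

For example, `AccuracyIntervalSketch.waldInterval(1.0 - 0.28603262160039455, 54749L)` uses the error and the validation size from the ORCL log and gives an interval of roughly 0.710 to 0.718.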

Results

With relative ease, I carried out a fairly complex research project at a larger scale than the one described in the original paper.

Modern big data technologies let you build models on all available data without sampling. Using all the data helps build better models and capture all the details of the complete data set.

Application code on GitHub

Off-topic: the developer competition run by ITinvest and the StockSharp project team continues: create additions or fixes for the StockSharp system on GitHub and receive a cash reward for each commit made.

Source: https://habr.com/ru/post/259803/
