Load-balancing devices in network monitoring systems, or "What is a Network Packet Broker?"

Recently, while working on a 100GE traffic analyzer, I was asked to study a class of devices known as the Network Packet Broker (also called a Network Monitoring Switch) or, simply, a "balancer" (as it is called in Russian). These devices are used primarily in network monitoring systems. As I dug into the topic, I accumulated a fair amount of information that was scattered across the Internet and vendor documentation. That is how the idea for this article was born: to collect everything I found in one place and share it with the community.

If you are curious about what makes this type of device special, how it is used, and why it is called a "balancer", read on.

What's in a name?

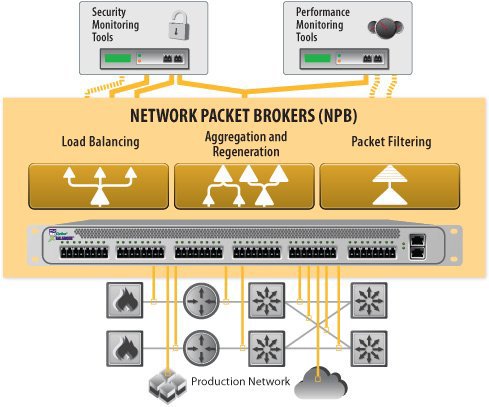

An NPB is a rack-mounted network device that receives and aggregates network traffic from SPAN ports or TAPs. The NPB then processes this traffic (or copies of it) itself.

An NPB can be either a single-piece device or a modular one (that is, consisting of several replaceable blades in one chassis). A typical set of NPB characteristics includes:

- Data ports (1GE, 10GE, 40GE and 100GE, using SFP, SFP+, QSFP+ and CFP modules, respectively).

- Management ports (most commonly RJ-45 10/100/1000). Management is usually done remotely (API, CLI, GUI, HTTP, SSH, Telnet, or SNMP).

- Functions implemented in the device (from load balancing and its types to specific traffic filtering options).

What can an NPB do, and what are its features?

The main and most important function of an NPB is load balancing (which is why it is also called a "balancer"). Load balancing is the process of splitting an input stream from one or several interfaces across several output interfaces according to certain rules or criteria. The following functions are almost always used together with balancing:

- Filtering - rules that select the streams to be balanced and reduce the amount of data in those streams.

- Aggregation - combining streams from multiple input interfaces into one before the balancing operation.

- Switching - most NPBs can also act as a switch.

The main use of an NPB is to extract the necessary data from large data streams and to split those streams into smaller ones. This task comes up quite often.

Many companies now run a large number of monitoring tools for security, surveillance, analytics, and performance management. Moving to higher speeds (from 1G to 10G, from 10G to 100G) is a problem, because much of that equipment simply cannot work at such speeds. There are two options: either buy expensive new equipment, or add an NPB layer to the existing infrastructure that adapts the data streams for the old equipment.

Take an ordinary call center as an example. Almost all of them are now digital: calls arrive as VoIP traffic over the LAN, and special devices (network traffic recorders) are used to record them. As the number of calls grows, a single recording device can reach the limit of its capacity. This is where load balancing comes in: it allows several recording devices to work in parallel, subject to the following conditions:

- For each call, the entire conversation (both directions of the traffic flow) must end up on a single recording device, so that there is no need to search for parts of a recorded conversation on several devices.

- The call setup traffic (SIP traffic) for every call handled by a recorder must be available to that recorder.

Traffic coming from multiple ports is aggregated and arrives at the NPB. Here the first condition gives rise to one of the main requirements for the NPB: if the client equipment works with flows at the session level (and this is almost always the case), the NPB must not break these sessions (a session is a group of packets transmitted between specific nodes). In other words, packets from the same session must always go to the same output interface. This property is called Flow Coherency.

With this property in mind, the following types of load balancing can be used in an NPB:

- Uniform (per-packet or round-robin) - approximately the same amount of traffic goes to every output port, and packets are assigned to output interfaces in a circle. This type of balancing does not provide Flow Coherency, since the direction of one packet is in no way connected with the direction of other packets.

- Static - balancing follows a fixed set of specified rules, for example, by source IP address or protocol type. The amount of data that has reached a specific output interface is not taken into account, i.e. there is no feedback on which output interfaces received more or less traffic. Depending on exactly which fields are used, this type of load balancing may or may not provide Flow Coherency.

- Dynamic - the NPB keeps track of the traffic sent to each output port. This is the best choice when there are stringent requirements for uniform load on the output interfaces. Which fields are used for this type of balancing depends on the algorithm. Supports Flow Coherency.

- Hash-based - the output port is selected by a hash function calculated over packet fields specified by the user. Since the same fields always produce the same hash, this type of balancing preserves Flow Coherency as long as the right fields are selected. If the distribution of hashes turns out to be skewed, balancing can become very uneven, because statistics on the real output load are not used by the algorithm, but the probability of this is low.
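To make the difference between uniform and hash-based balancing more concrete, here is a minimal Python sketch. It is only an illustration, not how any particular NPB is implemented: the field names (`src_ip`, `dst_ip`, `seq`), the use of CRC-32 as the hash, and the function names are all assumptions made for this example.

```python
import itertools
import zlib

def round_robin_balancer(num_ports):
    """Per-packet balancing: ports are assigned in a circle, ignoring packet contents."""
    counter = itertools.cycle(range(num_ports))
    return lambda packet_fields: next(counter)

def hash_balancer(num_ports, fields):
    """Hash-based balancing: the port depends only on the user-selected fields."""
    def pick(packet_fields):
        key = "|".join(str(packet_fields[name]) for name in fields).encode()
        return zlib.crc32(key) % num_ports   # CRC-32 stands in for the device's hash
    return pick

# Three packets of one flow (hypothetical field names).
flow = [{"src_ip": "10.0.0.1", "dst_ip": "10.0.0.2", "seq": n} for n in range(3)]

rr = round_robin_balancer(4)
hb = hash_balancer(4, fields=("src_ip", "dst_ip"))

rr_ports = [rr(p) for p in flow]   # [0, 1, 2]: the flow is spread over several ports
hb_ports = [hb(p) for p in flow]   # the same port three times: Flow Coherency holds
```

The round-robin balancer gives a perfectly even load but scatters one flow across ports, while the hash balancer keeps the flow together at the cost of ignoring the actual load on each port.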

Typically, the flows arriving at an NPB are defined by a 5-tuple of header fields (src/dst IP, src/dst port, protocol). Packets with the same 5-tuple, but with the source and destination IP addresses and ports swapped, must go to the same device (so that a conversation is recorded in both directions). Flows can also be defined differently. For example, if a 3-tuple is used, i.e. the IP addresses are fixed and the ports change depending on the packet path, or vice versa, the ports are fixed and the IP addresses can change, the NPB should be able to adapt to such changes in the flow structure. Modern NPBs use algorithms that do not rely on storing information about sessions and are therefore not limited by the number of flows being processed.
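One common way to send both directions of a conversation to the same output without storing any session state is to make the hash key independent of direction. The sketch below does this by sorting the two endpoints before hashing. It is an illustrative assumption, not a description of a specific vendor's algorithm; the function names and the argument order of the 5-tuple are chosen for this example only.

```python
import zlib

def symmetric_flow_key(src_ip, src_port, dst_ip, dst_port, protocol):
    """Build a direction-independent key by ordering the two endpoints."""
    a, b = sorted([(src_ip, src_port), (dst_ip, dst_port)])
    return f"{a[0]}:{a[1]}|{b[0]}:{b[1]}|{protocol}".encode()

def select_port(num_ports, *five_tuple):
    """Stateless port selection: no per-session table is kept."""
    return zlib.crc32(symmetric_flow_key(*five_tuple)) % num_ports

# The same conversation seen in both directions (documentation-range addresses).
forward = ("192.0.2.10", 40000, "198.51.100.5", 5004, "udp")
reverse = ("198.51.100.5", 5004, "192.0.2.10", 40000, "udp")

same_port = select_port(4, *forward) == select_port(4, *reverse)   # True
```

Because the key is computed from the packet alone, the number of simultaneous flows does not affect memory usage.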

There are also functions that monitor the state of the channels the NPB works with.

- Link state awareness - a function that monitors the status of the output channels. If one of them (or the client equipment behind it) fails, the traffic is automatically redistributed among the other output ports of the group. When the channel returns to an operational state, the traffic is redistributed again. Flow coherency may be briefly violated at the moments of redistribution. The health of a channel is monitored by the presence of a link and/or with the help of keep-alive packets.

- N + M redundancy - a channel redundancy function that works as follows: N active and M backup channels are selected in the balancing group, and if one of the active channels fails, its traffic is transferred to one of the backup channels. When the failed channel is restored, no redistribution takes place and the restored channel becomes a backup. This function is used (instead of link state awareness) when even short-term violations of flow coherency are not acceptable.

- Overflow mode - this feature brings additional channels into use as the amount of traffic grows. The user selects a group of channels and marks those that are active from the start. The remaining channels are activated automatically once the load on the active ones exceeds a threshold set by the user.
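The N + M redundancy behaviour described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: the class name `RedundantGroup`, the channel names, and the use of `flow_hash % N` for port selection are all hypothetical and serve only to show why flows on healthy channels are not disturbed.

```python
class RedundantGroup:
    """N active channels plus M backups; a failed channel is replaced by a backup."""

    def __init__(self, active, backup):
        self.active = list(active)   # slot index -> channel name
        self.backup = list(backup)

    def fail(self, channel):
        """Put the first available backup into the slot of the failed channel."""
        slot = self.active.index(channel)
        if not self.backup:
            raise RuntimeError("no backup channel left")
        self.active[slot] = self.backup.pop(0)

    def restore(self, channel):
        """A restored channel becomes a backup; active slots are not touched."""
        self.backup.append(channel)

    def select(self, flow_hash):
        return self.active[flow_hash % len(self.active)]

group = RedundantGroup(active=["p1", "p2", "p3"], backup=["p4"])
before = [group.select(h) for h in range(6)]
group.fail("p2")
after = [group.select(h) for h in range(6)]   # only flows from p2 moved to p4
group.restore("p2")                           # p2 is now a backup, nothing moves
```

Since the number of active slots never changes, the mapping from hash to slot stays the same, and only the flows of the failed channel are moved.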

As for the SIP traffic from our example, it can be sent to all recording devices, since it creates a small load compared to the voice traffic. SIP signaling runs over UDP or TCP, while the voice itself is carried in RTP over UDP. This is where filters come in: one filter extracts the SIP traffic from the stream and forwards it to all recording devices, while another selects the RTP packets, and the NPB balances this traffic across all recording devices.
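The call-center setup can be modelled roughly as follows. This Python sketch rests on several assumptions that are not in the text above: SIP is recognized by the default port 5060, every other UDP packet is treated as RTP, and the recorder names and the `route` function are hypothetical.

```python
import zlib

RECORDERS = ["rec0", "rec1", "rec2"]   # hypothetical output ports
SIP_PORT = 5060                        # default SIP port; an assumption about this network

def route(packet):
    """Return the list of recorders that should receive this packet."""
    if SIP_PORT in (packet["src_port"], packet["dst_port"]):
        return list(RECORDERS)                      # signaling: copy to every recorder
    if packet["protocol"] == "udp":                 # treated as RTP in this sketch
        a, b = sorted([(packet["src_ip"], packet["src_port"]),
                       (packet["dst_ip"], packet["dst_port"])])
        key = f"{a}|{b}".encode()                   # direction-independent flow key
        return [RECORDERS[zlib.crc32(key) % len(RECORDERS)]]
    return []                                       # everything else is filtered out
```

With this arrangement every recorder sees the setup of every call, but the voice packets of a given call, in both directions, reach exactly one recorder.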

Filters, many and varied

To give a clearer idea of what happens to packets inside an NPB and what eventually reaches the balancing stage, let us look at some of the basic filtering functions implemented in most devices of this type.

Packet filtering

Filters incoming packets according to specified rules. The criteria can be protocol, MAC address, IP address, VLAN tags, MPLS labels, and others. The search can cover the entire contents of the packet (including the payload). The patterns used can be either simple strings at a static, user-defined offset, or complex regular expressions with varying offsets. The main task is to pass on the packets that match the specified criteria for subsequent balancing.
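The two kinds of patterns mentioned above, a fixed string at a fixed offset and a regular expression over the whole packet, can be illustrated with a short Python sketch. The function names and the sample packets are made up for this example; a real device does this in hardware.

```python
import re

def match_at_offset(packet: bytes, pattern: bytes, offset: int) -> bool:
    """Static pattern expected at a fixed, user-defined offset."""
    return packet[offset:offset + len(pattern)] == pattern

def match_regex(packet: bytes, regex: bytes) -> bool:
    """Regular expression searched anywhere in the packet, payload included."""
    return re.search(regex, packet) is not None

packets = [
    b"\x00\x01INVITE sip:alice@example.com",
    b"\x00\x01REGISTER sip:example.com",
    b"\x00\x02random payload",
]

# Pass only packets that match at least one rule; the rest are dropped.
passed = [p for p in packets
          if match_at_offset(p, b"INVITE", 2) or match_regex(p, rb"sip:\w+@")]
```

Only the first packet passes here: it has `INVITE` at offset 2 and also contains a `sip:user@` address, while the other two match neither rule.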

Packet slicing

Some monitoring devices need only a specific part of a packet. In that case, processing the entire packet wastes resources. This feature "cuts out" the required part of the packet and sends it on to the monitoring device for further transformation, analysis, or statistics, avoiding unnecessary processing of the entire packet contents. After slicing, the CRC of the packet is recalculated.
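A minimal Python sketch of slicing with CRC recalculation is shown below. It assumes an Ethernet-style frame whose last 4 bytes are a CRC-32 checksum stored least significant byte first; the function name `slice_packet` and the sample frame are hypothetical.

```python
import struct
import zlib

def slice_packet(frame: bytes, keep: int) -> bytes:
    """Keep the first `keep` bytes of the frame and append a fresh CRC-32."""
    body = frame[:-4]                 # drop the original 4-byte CRC
    sliced = body[:keep]              # e.g. headers only, payload removed
    return sliced + struct.pack("<I", zlib.crc32(sliced))

# A made-up frame: 14 header bytes, 100 payload bytes, then the CRC.
body = bytes(14) + b"\xab" * 100
frame = body + struct.pack("<I", zlib.crc32(body))

sliced = slice_packet(frame, keep=64)   # 64 bytes of data + 4 bytes of new CRC
```

The monitoring tool receives a much shorter packet that still passes its checksum validation.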

Port stamping

Inserts into the packet a label with the number of the port on which it arrived. The value written into the label field is generated by a specific formula. On some devices the port number can be assigned manually through the CLI. The field is no longer than 2 bytes. The packet CRC is recalculated after the label is inserted.

Time stamping

Similar to port stamping, but a timestamp is inserted instead. An 8-byte timestamp block is added to the incoming packet (a 32-bit seconds counter and a 32-bit nanoseconds counter). One of the methods is to append a 14-byte trailer to the end of the packet: 2 bytes of Source ID (from port stamping), 8 bytes of timestamp, and 4 bytes of recalculated CRC. The device must support NTP (NTPv4 is described in RFC 5905).
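The 14-byte trailer layout can be sketched in Python as follows. The field sizes come from the description above; the byte order of the fields, the replacement of the original CRC by the recalculated one, and the function names are assumptions made for this illustration.

```python
import struct
import zlib

def add_timestamp_trailer(frame: bytes, source_id: int, seconds: int, nanoseconds: int) -> bytes:
    """Replace the frame CRC with a 14-byte trailer: Source ID, timestamp, new CRC."""
    body = frame[:-4]                                   # strip the original CRC
    # 2 bytes Source ID + 4 bytes seconds + 4 bytes nanoseconds (big-endian assumed)
    stamped = body + struct.pack(">HII", source_id, seconds, nanoseconds)
    return stamped + struct.pack("<I", zlib.crc32(stamped))

def read_timestamp_trailer(frame: bytes):
    """Return (source_id, seconds, nanoseconds) from the last 14 bytes."""
    return struct.unpack(">HII", frame[-14:-4])

body = bytes(60)
frame = body + struct.pack("<I", zlib.crc32(body))
stamped = add_timestamp_trailer(frame, source_id=7, seconds=1_700_000_000, nanoseconds=123_456_789)
```

The stamped frame is 10 bytes longer than the original, because the 4 CRC bytes are reused and only the Source ID and timestamp are new.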

Packet De-duplication

Marks or removes duplicate packets, which are detected within a configurable interval after the original packet (from 1 to 50,000 microseconds). Duplicates occur when SPAN and mirroring are used in switches, or when packets are collected from several points in the network.
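Window-based de-duplication can be sketched in Python like this. It is a simplified model: the class name, the use of SHA-256 as the packet fingerprint, and the absence of any cleanup of old entries are assumptions for the example, not properties of a real device.

```python
import hashlib

class Deduplicator:
    """Flags a packet as a duplicate if an identical one was seen within the window."""

    def __init__(self, window_us: int):
        self.window_us = window_us
        self.first_seen = {}   # packet digest -> arrival time (microseconds) of the original

    def is_duplicate(self, packet: bytes, now_us: int) -> bool:
        digest = hashlib.sha256(packet).digest()
        first = self.first_seen.get(digest)
        if first is not None and now_us - first <= self.window_us:
            return True
        self.first_seen[digest] = now_us   # this packet becomes the new original
        return False

dedup = Deduplicator(window_us=50_000)
results = [
    dedup.is_duplicate(b"abc", 0),        # False: first copy
    dedup.is_duplicate(b"abc", 100),      # True: same packet 100 microseconds later
    dedup.is_duplicate(b"abc", 60_000),   # False: outside the window
    dedup.is_duplicate(b"xyz", 60_010),   # False: different packet
]
```

A production implementation would also have to expire old digests to keep memory bounded, which is omitted here.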

Tagging (VLAN and MPLS)

Used to insert VLAN or MPLS tags into packets, allowing them to be tracked and managed as they move through the network.

Protocol / Header Stripping, De-encapsulation

Functions that are the inverse of tagging and share the same principle of operation: removing a certain part of a packet (for example, headers or tags).
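Tagging and stripping can be illustrated with an 802.1Q VLAN tag, which is 4 bytes inserted right after the two MAC addresses of an Ethernet frame. The Python sketch below works on a frame without its CRC (a real device would recalculate it afterwards); the function names and the sample frame are hypothetical.

```python
import struct

TPID_8021Q = 0x8100   # EtherType value that marks an 802.1Q tag

def insert_vlan_tag(frame: bytes, vlan_id: int, priority: int = 0) -> bytes:
    """Insert a 4-byte 802.1Q tag after the destination and source MAC addresses."""
    tci = (priority << 13) | (vlan_id & 0x0FFF)
    return frame[:12] + struct.pack(">HH", TPID_8021Q, tci) + frame[12:]

def strip_vlan_tag(frame: bytes) -> bytes:
    """Remove the 802.1Q tag if present; otherwise return the frame unchanged."""
    (tpid,) = struct.unpack(">H", frame[12:14])
    if tpid != TPID_8021Q:
        return frame
    return frame[:12] + frame[16:]

# Made-up frame: two 6-byte MAC addresses, IPv4 EtherType, short payload.
frame = bytes(6) + bytes(6) + b"\x08\x00" + b"payload"
tagged = insert_vlan_tag(frame, vlan_id=100)
restored = strip_vlan_tag(tagged)   # identical to the original frame
```

Stripping is the exact inverse of tagging, so a tag added by the NPB for tracking can be removed again before the packet reaches a tool that does not understand it.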

Instead of a conclusion

To sum up, Network Packet Brokers are a powerful, flexible, and customizable tool that lets you filter, aggregate, and redistribute traffic to various devices without replacing the equipment already in use.

The price paid for these benefits is the complexity of introducing such devices into existing networks: this requires many hours of re-planning (depending on the complexity of the network structure and on the desired filtering and balancing criteria).

NPB manufacturers include VSS Monitoring, Gigamon, Apcon, and Ixia (which has recently absorbed NetOptics, another NPB maker). If you are interested in what these manufacturers offer, see the links below.

Thanks for your attention!

References:

Gigamon website.

VSS Monitoring website.

Ixia website.

Apcon website.

P.S. Many thanks to Des333 and paulig for their help in preparing this article.

Source: https://habr.com/ru/post/259633/
