VMware vSphere: What's New [V5.5 to V6]. Protecting and moving virtual machines: Fault Tolerance and vMotion

Recently, we streamed a video from our training center, broadcasting part of the training course that covers all the updates in vSphere 6. This module dealt with protecting virtual machines and migrating them without disruption.

Under the cut is the transcript of the module, plus the video for those who do not want to read the long transcript.

Now let's look at the section on availability. It covers HA, and it also covers planned migration: protection from planned downtime is provided by vMotion, with its new features in the current version of vSphere, and by a feature such as Fault Tolerance. Let's go through this in more detail.

This is the most talked-about feature in vSphere 6. You may already have come across it; now let's look at it in more detail.

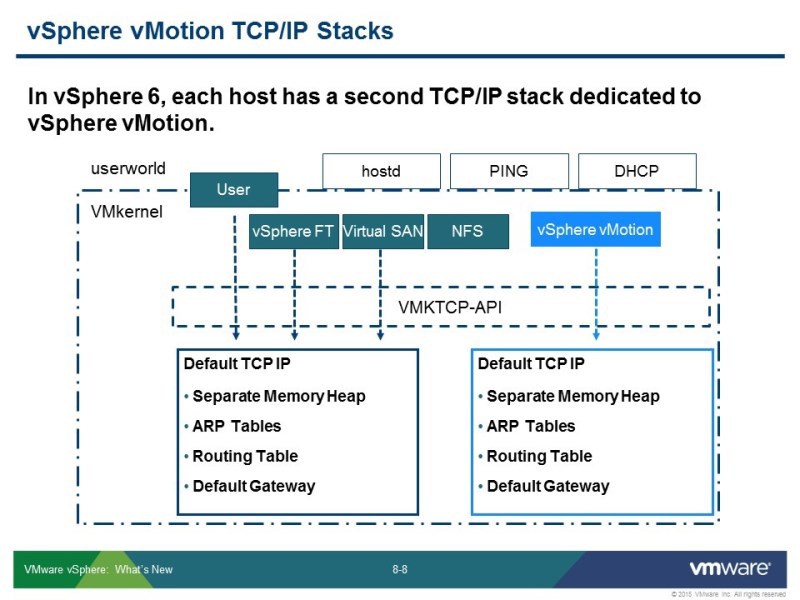

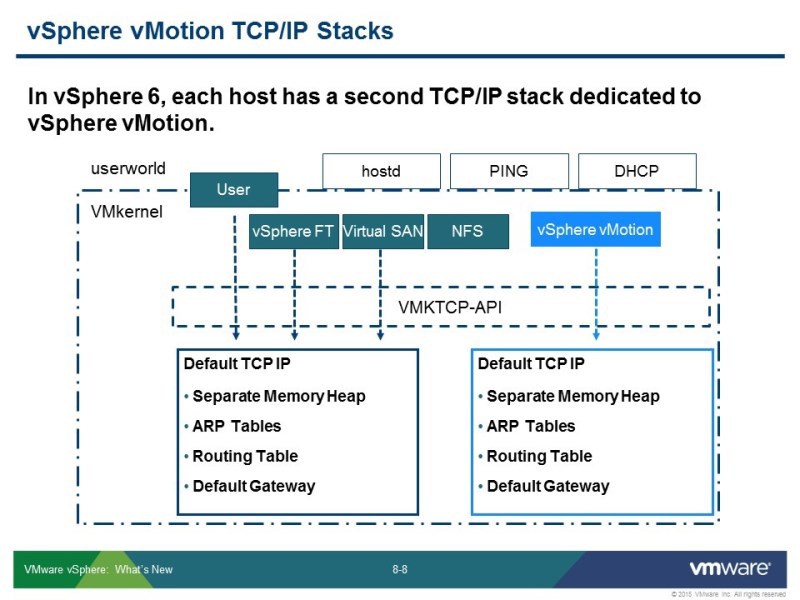

Take, for example, the ability to use vMotion to migrate virtual machines between vCenters. To make this possible, an architectural problem in the live ("hot") migration subsystem had to be solved. The vMotion network was an L2 network: for it to work, everything had to be in one broadcast domain, and routing was not supported. Moving machines over long distances also means coping with large delays; vMotion now tolerates latencies of 100-150 milliseconds. This required architectural changes to the vMotion network and to the kernel itself, plus the ability to route vMotion traffic.

For this, you can now create a separate TCP/IP stack dedicated to vMotion. Custom TCP/IP stacks did exist in previous versions, but they were not a supported option: they could be created from the command line, and finding out how required digging through blogs. Now the feature is available out of the box: the second IP stack exists in the system by default and is used for all the new vMotion features.

This additional stack does not break or change the vMotion architecture. Previously there was only the default gateway for the internal network; to go outside, another gateway was needed, and on the new stack you configure the path that vMotion traffic will take. Nothing that existed before is touched; a new capability is simply added.
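To make the two-gateway idea concrete, here is a minimal Python sketch of next-hop selection on two separate stacks, each with its own subnet and default gateway. The class, the stack names, and all addresses are illustrative assumptions, not ESXi's actual implementation or configuration.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class NetStack:
    """Illustrative model of one host TCP/IP stack with its own routing decision."""
    name: str
    subnet: ipaddress.IPv4Network           # locally attached VMkernel subnet
    default_gateway: ipaddress.IPv4Address  # used for every off-subnet destination

    def next_hop(self, destination: str) -> ipaddress.IPv4Address:
        addr = ipaddress.ip_address(destination)
        # On-link destinations are reached directly (the old L2-only vMotion case).
        if addr in self.subnet:
            return addr
        # Anything else is routed via this stack's own gateway (routed L3 vMotion).
        return self.default_gateway


# Hypothetical addressing: management on the default stack, vMotion on its own stack.
default_stack = NetStack("default", ipaddress.ip_network("10.0.0.0/24"),
                         ipaddress.ip_address("10.0.0.1"))
vmotion_stack = NetStack("vmotion", ipaddress.ip_network("172.16.10.0/24"),
                         ipaddress.ip_address("172.16.10.1"))

if __name__ == "__main__":
    # A vMotion peer at the remote site sits in another subnet, so it is routed
    # through the vMotion gateway, while management traffic keeps its own gateway.
    print(vmotion_stack.next_hop("172.16.20.5"))   # 172.16.10.1
    print(default_stack.next_hop("192.168.1.10"))  # 10.0.0.1
```

Keeping the gateway on the stack object, rather than in one host-wide table, mirrors the point above: the vMotion route is added alongside the existing one instead of replacing it.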

What does this allow? First, virtual machines can be migrated over an L3 network; second, machines can be moved across a wide range of configurations:

- between switches

- between vCenters (not necessarily within the same management domain)

Migration over long distances is now possible. It can be used:

- for permanent migration from site to site. For example, if you have 2 data centers and need to take one down for maintenance, the machines can be moved to the other site without stopping them or shutting them down.

- as a proactive measure against disasters of various kinds, when you expect such problems, i.e., as a disaster recovery solution (for example, if a storm is threatening, you can move everything to another location).

What do you need for the migration?

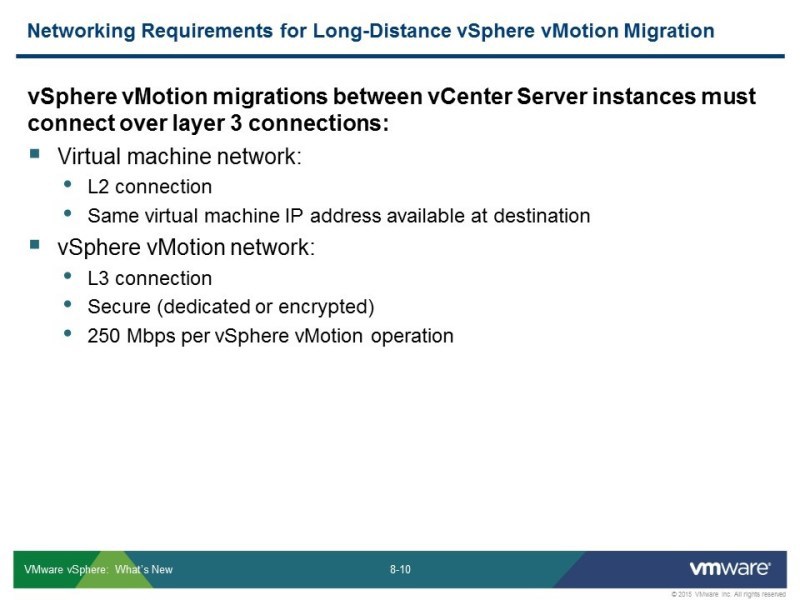

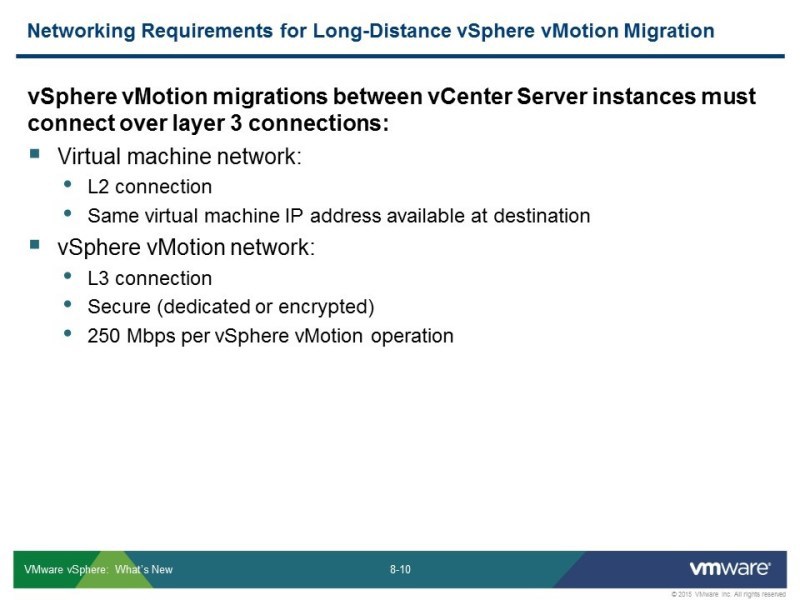

The virtual machines themselves still need the same L2 network at both ends, because vMotion does not run any scripts that reconfigure the machine's network subsystem. The IP address therefore stays the same.

The vMotion network, however, can now be an L3 network. At such latencies, bandwidth has to be planned more carefully. The vMotion bandwidth requirement is now 250 Mbps, 3 times lower than in previous versions, where 75% of a gigabit link was needed for vMotion to work properly. Even this reduced requirement must be met for the feature to work.
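The bandwidth figures above can be checked with simple arithmetic. The sketch below compares the old and new requirements and estimates a lower bound on how long one full pass over a VM's memory takes at 250 Mbps. The 16 GB VM is an arbitrary example, and the function deliberately ignores re-sent pages and protocol overhead.

```python
def single_pass_copy_seconds(memory_gb: float, link_mbps: float) -> float:
    """Time to send VM memory once over the vMotion link.

    A lower bound only: it ignores re-sending pages dirtied during the copy,
    protocol overhead, and latency effects.
    """
    bits = memory_gb * 1e9 * 8       # decimal gigabytes -> bits
    return bits / (link_mbps * 1e6)  # megabits per second -> bits per second


if __name__ == "__main__":
    old_requirement_mbps = 0.75 * 1000  # 75% of a gigabit link, as quoted for earlier versions
    new_requirement_mbps = 250          # requirement quoted above for vSphere 6
    print(old_requirement_mbps / new_requirement_mbps)         # 3.0
    print(single_pass_copy_seconds(16, new_requirement_mbps))  # 512.0
```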

There are some additional conditions for this to work:

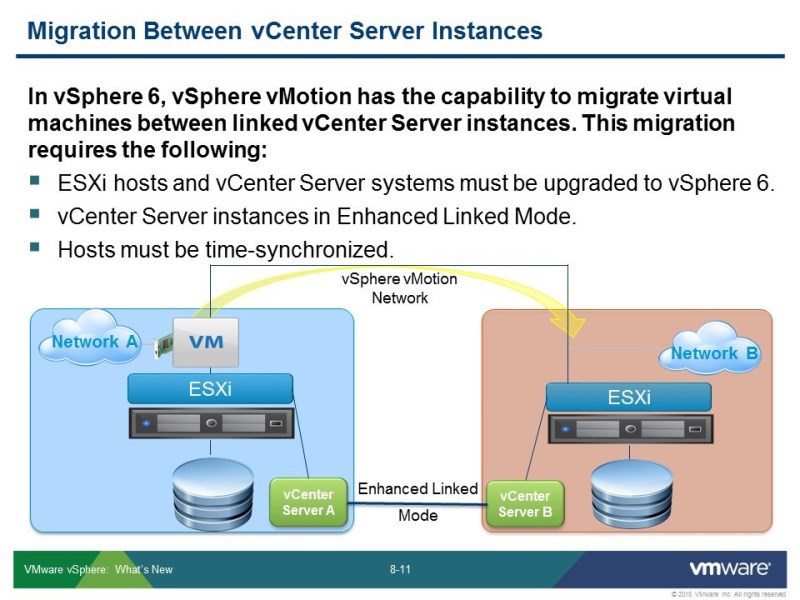

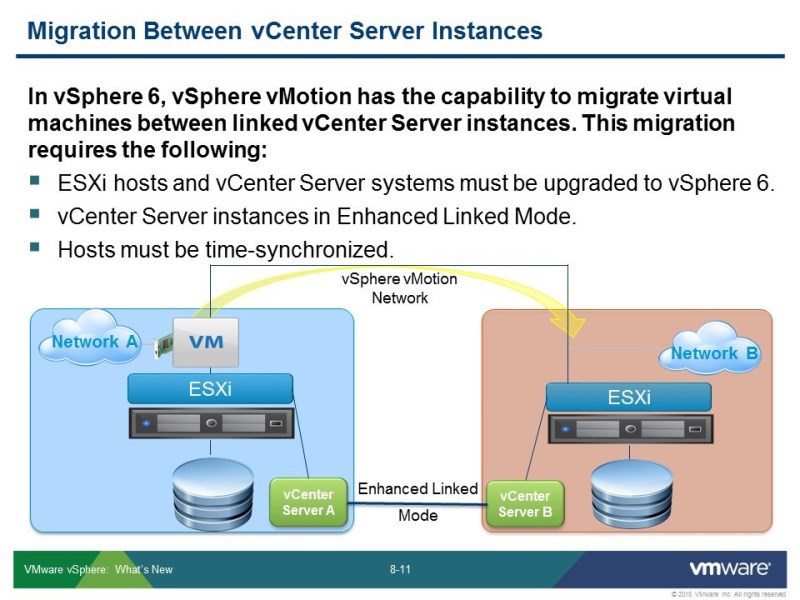

- everything must be on the latest version, 6, where this feature is available.

- the vCenters must be interconnected so that they can exchange the necessary information. The virtual machine moves from one infrastructure to another and is "traveling with a suitcase of belongings": for example, if it was in a cluster, the settings relating to it, such as any admission control rules, move with it.

- for all of this to work, including channel encryption, the clocks of all components must be synchronized.

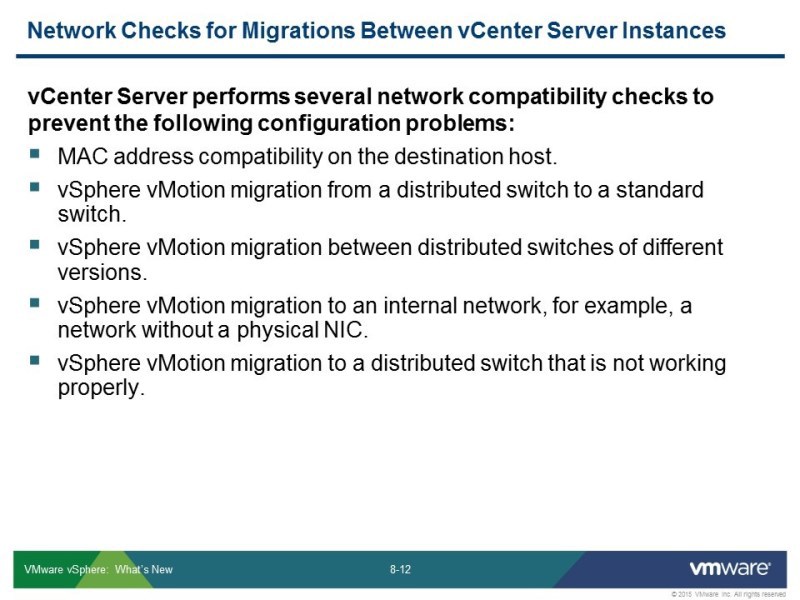

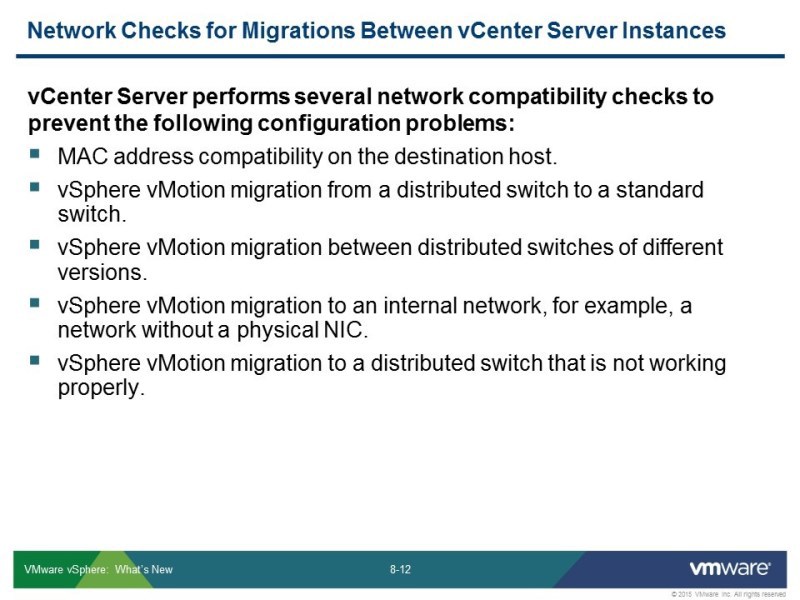

What does vCenter check before migration and what is saved?

- the virtual machine moves with its own settings, so you need to check that there is no MAC address conflict: even if the addresses are generated automatically by vSphere, they can still coincide.
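A MAC conflict check of this kind boils down to a set intersection. The sketch below is an illustration under assumptions: the function names and sample addresses are hypothetical, and it simply normalizes notation before comparing the migrating VM's addresses with those already in use at the destination.

```python
from typing import Iterable, List


def normalize_mac(mac: str) -> str:
    """Canonical lower-case, colon-separated form so '00-50-56-..' and '00:50:56:..' compare equal."""
    return mac.strip().lower().replace("-", ":")


def mac_conflicts(vm_macs: Iterable[str], destination_macs: Iterable[str]) -> List[str]:
    """Return the migrating VM's MAC addresses that are already in use at the destination."""
    in_use = {normalize_mac(m) for m in destination_macs}
    return sorted({normalize_mac(m) for m in vm_macs} & in_use)


if __name__ == "__main__":
    # 00:50:56 is VMware's OUI, so auto-generated addresses from two vCenters share a prefix.
    vm = ["00:50:56:80:12:34", "00:50:56:80:aa:01"]
    destination = ["00-50-56-80-12-34", "00:50:56:80:ff:ff"]
    print(mac_conflicts(vm, destination))  # ['00:50:56:80:12:34']
```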

Other standard vMotion requirements, which apply to migration not only between hosts but also between vCenters:

- so that the virtual machine does not lose its connection to the production network, it must not be connected to a switch without an uplink (an internal-only network), and the network it is connected to must also be available at the destination.
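The connectivity rule can be sketched as a pre-migration check. In this hypothetical model, networks are matched by name and an uplink count of zero stands for an internal-only switch; both choices are simplifying assumptions, not how vCenter represents networks.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Network:
    name: str
    uplinks: int  # physical NICs behind the switch; 0 = internal-only network


def network_problems(vm_networks: List[str],
                     source: Dict[str, Network],
                     destination: Dict[str, Network]) -> List[str]:
    """List reasons the VM would lose production connectivity after migration."""
    problems = []
    for name in vm_networks:
        if source[name].uplinks == 0:
            problems.append(f"{name}: internal-only network (no uplink)")
        if name not in destination:
            problems.append(f"{name}: not available at destination")
    return problems


if __name__ == "__main__":
    src = {"Prod": Network("Prod", 2), "Lab": Network("Lab", 0)}
    dst = {"Prod": Network("Prod", 4)}
    print(network_problems(["Prod"], src, dst))  # []
    print(network_problems(["Prod", "Lab"], src, dst))
```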

Absolutely all of the virtual machine's settings move with it. When the machine changes clusters, all these parameters have to be carried over.

Fault Tolerance function

This feature looks good in demos and sounds good, but before this version it could hardly be called useful because of technical limitations: it could only be enabled for a single-core, single-processor machine with no more than 16 GB of RAM.

As a reminder, to use HA you need to create a cluster, and within it you can turn on Fault Tolerance, which protects the machine continuously. Switching from one copy to the other happens instantly: not even a ping is lost, network interaction is not disrupted, and downtime is almost zero. The problem was that practically no real machine could be protected this way.

The requirements are now relaxed, and the feature can be used in production: up to 4 vCPUs and up to 64 GB of RAM, for any machine that can be migrated with vMotion.
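The size limits quoted in this talk translate into a simple eligibility check. The sketch below encodes only those figures (1 vCPU and 16 GB for the old implementation, 4 vCPUs and 64 GB for vSphere 6) plus the vMotion condition. The table and function names are hypothetical, and the real product checks more than this.

```python
from typing import Dict

# Limits as quoted in this talk: old FT vs. the new FT in vSphere 6.
FT_LIMITS: Dict[str, Dict[str, int]] = {
    "legacy": {"max_vcpus": 1, "max_ram_gb": 16},
    "vsphere6": {"max_vcpus": 4, "max_ram_gb": 64},
}


def ft_eligible(vcpus: int, ram_gb: int, vmotion_capable: bool,
                version: str = "vsphere6") -> bool:
    """True if a VM fits the quoted FT size limits and can be migrated with vMotion."""
    limits = FT_LIMITS[version]
    return (vmotion_capable
            and vcpus <= limits["max_vcpus"]
            and ram_gb <= limits["max_ram_gb"])


if __name__ == "__main__":
    print(ft_eligible(4, 64, True))           # True
    print(ft_eligible(2, 8, True, "legacy"))  # False: old FT was single-vCPU only
    print(ft_eligible(4, 96, True))           # False: above 64 GB
```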

Second, the algorithm itself has changed. Different protocols are used and everything works a little differently, more simply, but there are some caveats. Some requirements have become stricter, for example network requirements, because more data has to be transferred. Others have been relaxed: you no longer have to configure everything for maximum performance as before. In the previous version of vSphere, turning on Fault Tolerance inflated your thin disks into thick ones (only thick disks were supported), and memory was fully reserved. None of this is necessary now; at the very least, thin disks stay thin.

There is now a different synchronization algorithm (shown on the slide):

- no data loss

- no downtime

- no loss of network connection

That is, an almost instant switch to the backup.

The feature works within a cluster, where HA serves as the mechanism that signals a host failure. It also protects against the reverse failure. When the primary host fails, the workload is instantly transferred to the spare host.

Here is a comparison of how it worked in previous versions and how it works in this version:

- processors: up to 4 vCPUs

- a single homogeneous cluster is no longer mandatory; a set of checks is still performed, but the requirements are less strict

- some features of newer processors are used; in particular, second-generation hardware virtualization support is required

- any disk type can be used; thin disks no longer have to be inflated

VMDK redundancy: what this means. Previously, a machine protected by Fault Tolerance had to reside on shared storage (only the host memory and processor state were copied): these were two virtual machines computing on 2 hosts, which had to be as similar as possible in their parameters. Now the machine can reside entirely on one host, while the second host holds a complete copy of it, both in RAM and as files on disk. Shared storage is still required, but not for storing the virtual machine. Both disk data and RAM are synchronized. Naturally, this comes at a price: you need 10 Gbps to enable it. You cannot break the laws of physics: the more data you need to transfer, the more network bandwidth you need.

The number of FT machines per host has not changed: up to 4, no more. The high-level architecture has not changed either: there is a primary machine and its protected copy, and there can be no more than 4 of them per host.

vSphere DRS is not yet fully supported. The old Fault Tolerance supported DRS: vMotion was possible for both the primary and the secondary, and DRS could work with them. Now vMotion is preserved, but the cluster has not yet learned to work with it. Again, this is logical: the machine is no longer kept on shared storage, and what works here is something like Enhanced vMotion, which DRS does not use within clusters, so DRS is not used.

In any case, HA must be configured, because it is still used as the mechanism that reports a problem with a host. Then both mechanisms kick in: the standard HA one, which restarts the machine, and the Fault Tolerance one, which simply switches to the spare copy.

DRS is aware of Fault Tolerance settings, and when it is enabled it takes into account that the primary and secondary must be on different hosts. In any case, HA and DRS will spread the machines across different hosts. As I said before, the high-level architecture has not changed.
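The two placement rules mentioned here, "primary and secondary on different hosts" and "at most 4 FT machines per host", can be sketched as a small selection function. This is a hypothetical illustration, not DRS's algorithm. Counting primaries and secondaries alike toward the per-host limit and preferring the least-loaded hosts are both assumptions.

```python
from __future__ import annotations

MAX_FT_VMS_PER_HOST = 4  # per-host limit quoted in the talk


def place_ft_pair(ft_vms_per_host: dict[str, int]) -> tuple[str, str]:
    """Choose two *different* hosts with spare FT capacity, least loaded first."""
    candidates = sorted(
        (count, host)
        for host, count in ft_vms_per_host.items()
        if count < MAX_FT_VMS_PER_HOST
    )
    if len(candidates) < 2:
        raise RuntimeError("FT needs two distinct hosts with spare FT capacity")
    # Anti-affinity: primary and secondary never share a host.
    return candidates[0][1], candidates[1][1]


if __name__ == "__main__":
    print(place_ft_pair({"esx1": 4, "esx2": 1, "esx3": 0}))  # ('esx3', 'esx2')
```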

This slide shows that the machine no longer has to reside on shared storage. All virtual machine files are synchronized: vmdk files and configuration files. With Fault Tolerance, you can protect a virtual machine that resides on local storage. Naturally, more data has to be transferred in this case, which is another reason you need 10 Gbps; shared storage is only needed for configuration purposes.

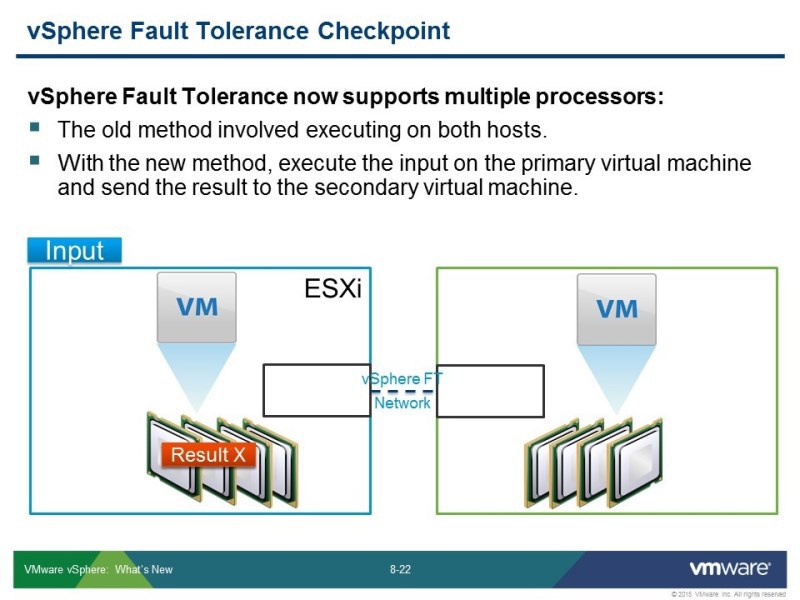

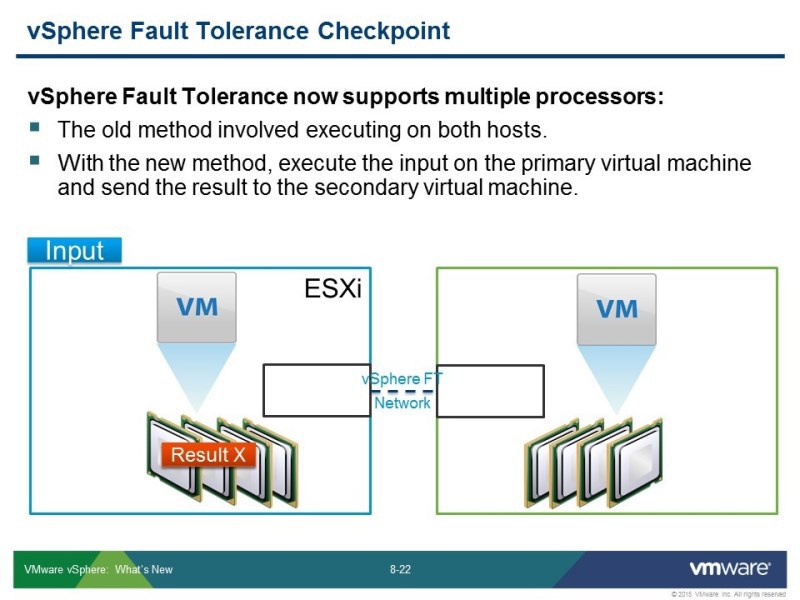

The approach taken by the algorithm has changed. In the previous version, two processors on two hosts were both executing, and their work was kept in sync. Now everything happens on the primary machine: the computation is done by one host, and at short intervals the data is transferred to the secondary machine. To a human this looks like synchronous, simultaneous transfer. Processor state does not have to be synchronized; in effect, you get an instant vMotion: you start vMotion, but the source is not deleted, and data is constantly synchronized between the first host and the second. Both the RAM state and the state of the vmdk files are synchronized.

Here is a graphical representation of how this algorithm works. The old Fault Tolerance had something similar, but it used a special protocol for synchronizing computation; now it is a specific use of vMotion. During this procedure, memory pages are copied from one host to the other at certain intervals; the pages are marked in a table as either "not currently used" or "least frequently used". If pages have changed by the time they are copied, copying starts over for them.
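The "copy, then re-copy what changed" idea can be simulated in a few lines. This is a simplified model of iterative memory pre-copy, not VMware's implementation. The set of dirtied pages stands in for the page-tracking table, and the stop threshold, the round limit, and the write pattern in the example are all hypothetical.

```python
from typing import Callable, List, Set, Tuple


def iterative_precopy(all_pages: Set[int],
                      dirtied_during_round: Callable[[int], Set[int]],
                      stop_threshold: int = 2,
                      max_rounds: int = 10) -> Tuple[List[int], Set[int]]:
    """Simulate iterative memory pre-copy.

    Round 0 sends every page; each later round re-sends only the pages the
    running VM wrote while the previous round was in flight. Returns the number
    of pages sent per round and the small remainder left for the final switchover.
    """
    to_send = set(all_pages)
    sent_per_round: List[int] = []
    for round_no in range(max_rounds):
        if len(to_send) <= stop_threshold:
            break
        sent_per_round.append(len(to_send))
        # Stand-in for the page-tracking table: pages changed during this round.
        to_send = dirtied_during_round(round_no) & all_pages
    return sent_per_round, to_send


if __name__ == "__main__":
    dirty = [set(range(20)), set(range(5)), {0}]  # hypothetical write pattern per round
    rounds, remainder = iterative_precopy(set(range(100)),
                                          lambda r: dirty[r] if r < len(dirty) else set())
    print(rounds, remainder)  # [100, 20, 5] {0}
```

Each round shrinks as long as the VM dirties memory more slowly than the link can send it, which is why bandwidth matters so much for this approach.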

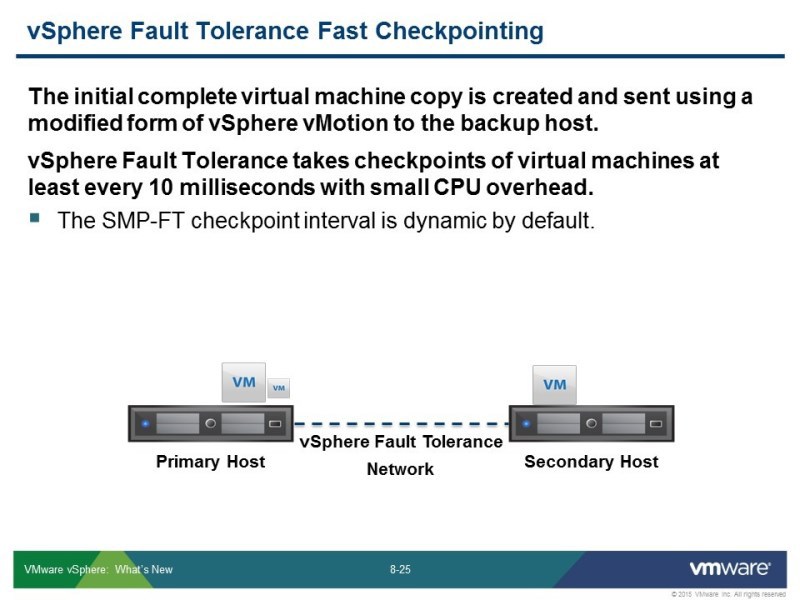

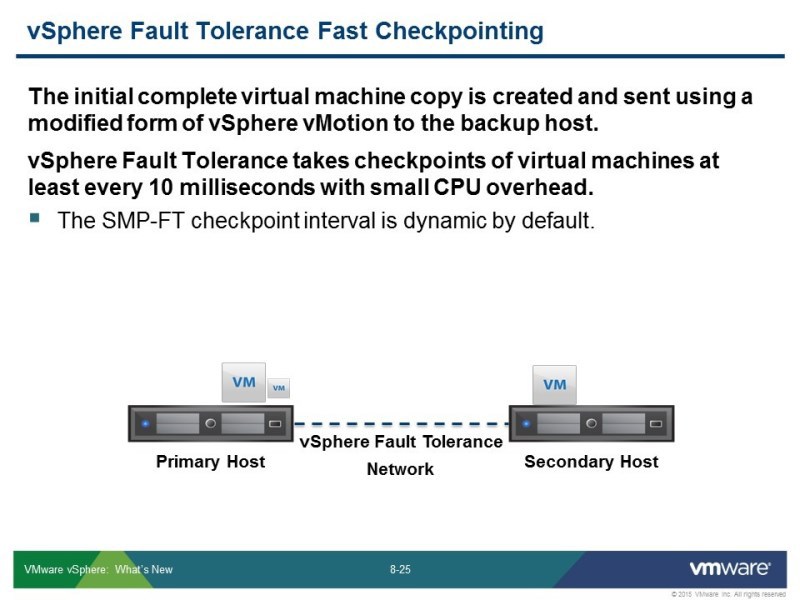

It uses a similar approach, but with a fast checkpointing mechanism. Synchronization happens at fixed intervals: every 10 milliseconds, the data that changed during that interval is transmitted to the secondary machine running on the neighboring host. A process runs constantly to keep track of these checkpoints.
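A fixed-interval checkpoint can be modeled as "collect every page written during the interval, then apply them to the secondary in one step". The sketch below uses the 10 ms interval from the talk. The write log and page contents are made up, and the real FT protocol involves far more than this.

```python
from typing import Dict, List, Sequence, Tuple

Write = Tuple[int, int, str]  # (time in ms, page number, new contents)


def fast_checkpoint(writes: Sequence[Write], interval_ms: int = 10,
                    duration_ms: int = 30) -> Tuple[List[int], Dict[int, str]]:
    """Ship the pages changed during each interval to the secondary as one checkpoint.

    Returns how many pages each checkpoint carried and the secondary's final memory.
    """
    ordered = sorted(writes, key=lambda w: w[0])
    secondary: Dict[int, str] = {}
    pages_per_checkpoint: List[int] = []
    for start in range(0, duration_ms, interval_ms):
        delta: Dict[int, str] = {}
        for t, page, data in ordered:
            if start <= t < start + interval_ms:
                delta[page] = data  # repeated writes to one page within an interval collapse
        secondary.update(delta)     # the secondary applies the whole checkpoint at once
        pages_per_checkpoint.append(len(delta))
    return pages_per_checkpoint, secondary


if __name__ == "__main__":
    log = [(1, 7, "a"), (4, 7, "b"), (9, 3, "x"), (12, 5, "y"), (25, 7, "c")]
    print(fast_checkpoint(log))  # ([2, 1, 1], {7: 'c', 3: 'x', 5: 'y'})
```

Page 7 is written twice in the first interval but shipped once, which is one reason checkpointing can be cheaper than mirroring every write.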

This happens continuously while Fault Tolerance is on. A couple of years ago there was a preview on a machine like this (4 cores, 64 GB) running a database under medium load, and Fault Tolerance traffic on the network was about 3-4 Gbps. So it can "eat" a good chunk of a 10 Gbps link. It depends on the data in the virtual machine; a database is a good example of how much bandwidth it can consume.
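To relate the 3-4 Gbps preview figure to the 10 ms checkpoint interval, here is a back-of-the-envelope conversion. It assumes a fixed interval and decimal units, and treats all FT traffic as checkpoint payload, so it gives an order of magnitude rather than a measurement.

```python
def ft_link_gbps(changed_mb_per_checkpoint: float, interval_ms: float = 10.0) -> float:
    """Sustained FT traffic if this much data changes in every checkpoint interval."""
    bits = changed_mb_per_checkpoint * 1e6 * 8
    return bits / (interval_ms / 1000.0) / 1e9


def changed_mb_per_checkpoint(link_gbps: float, interval_ms: float = 10.0) -> float:
    """Inverse: how much changed data per checkpoint a given sustained rate implies."""
    return link_gbps * 1e9 * (interval_ms / 1000.0) / 8 / 1e6


if __name__ == "__main__":
    # The 3-4 Gbps observed in the preview corresponds to roughly 3.75-5 MB changed
    # every 10 ms, i.e. 30-40% of a 10 Gbps link.
    for gbps in (3.0, 4.0):
        print(round(changed_mb_per_checkpoint(gbps), 2), f"{gbps / 10:.0%}")
```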

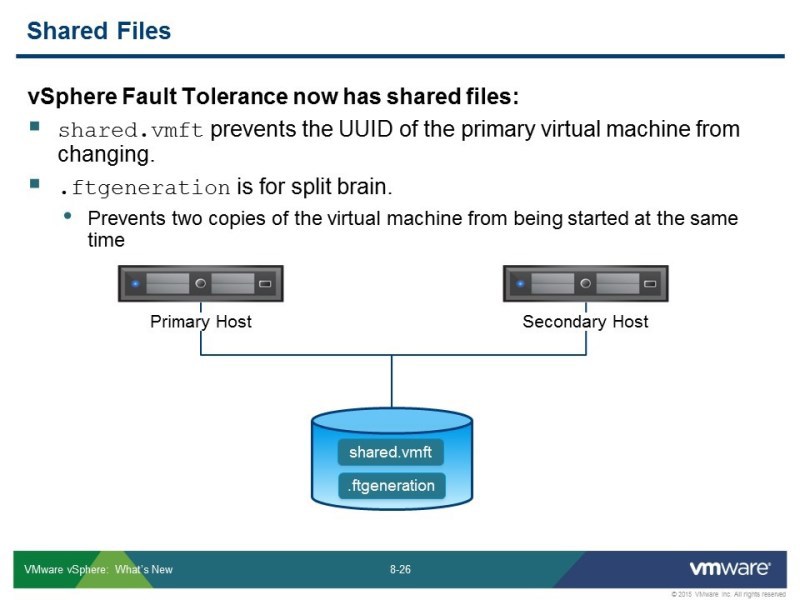

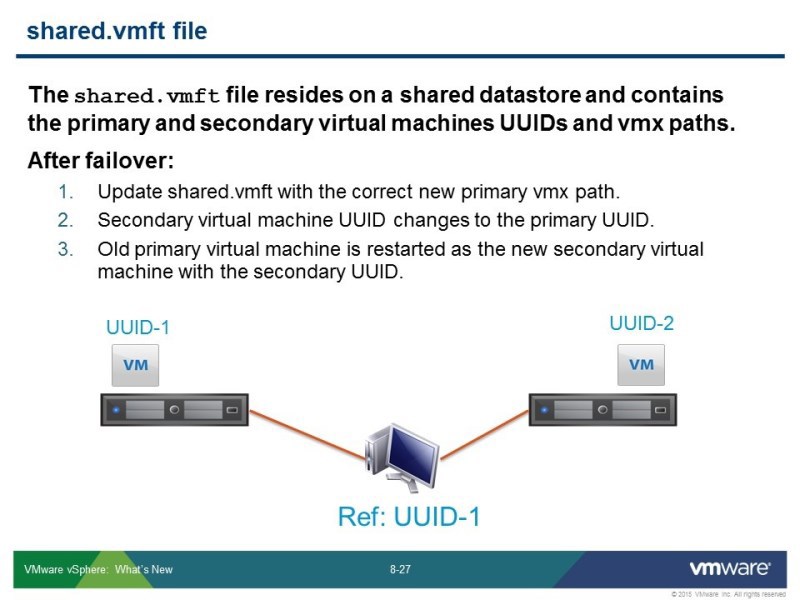

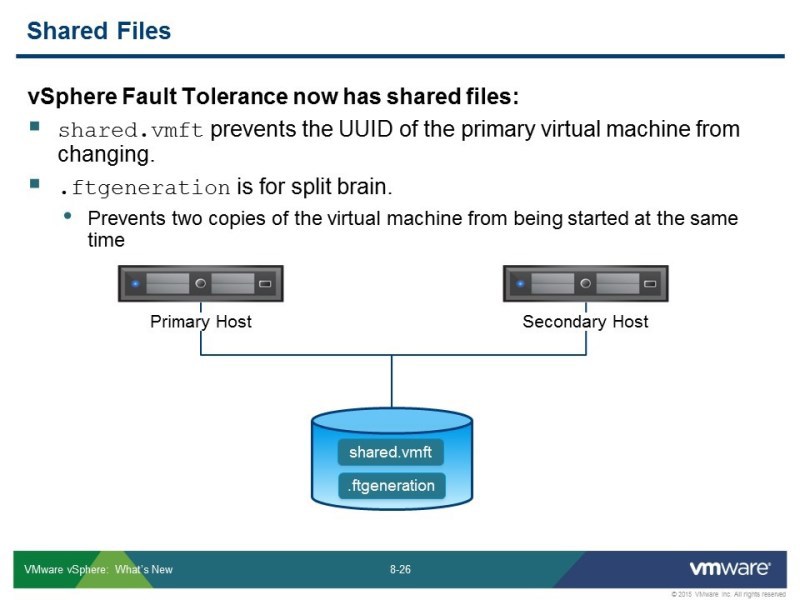

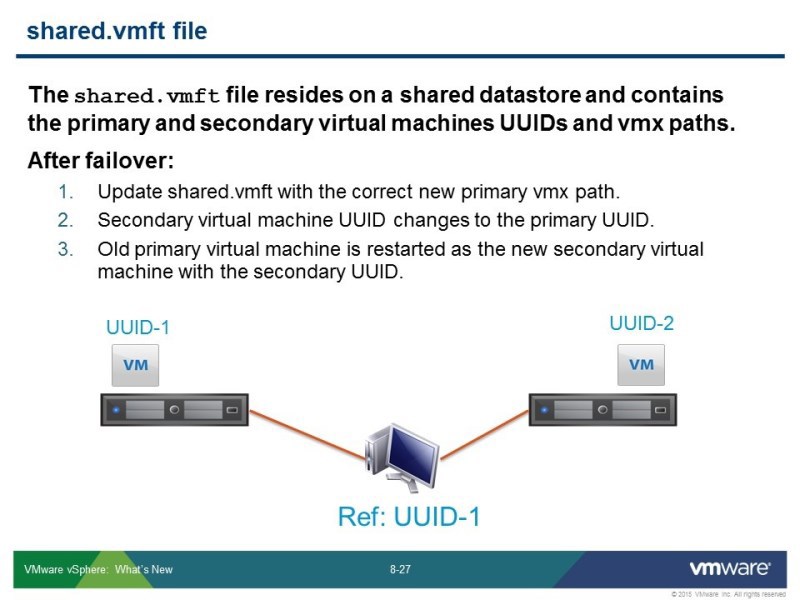

Shared storage is still needed, though. What for? To coordinate this mechanism. Two files are created, shared.vmft and .ftgeneration, each with its own task. shared.vmft carries some configuration: when HA kicks in, it tells the host which machine to power on where. .ftgeneration protects against so-called "split brain" ("schizophrenia"), a standard problem in various kinds of clusters, so that the service is not duplicated. A host must not be mistakenly judged as failed, causing 2 copies of the machine with the same IP addresses and network settings to come up at once; that would lead to nothing good. This datastore can be small and has no significant performance requirements, but you still need a shared datastore to hold these configuration files.

shared.vmft contains information about the virtual machines and their IDs, plus the paths to the vmx files showing where the machines currently are, i.e., which one to power on. As machines move from host to host, the information in these files changes.

If there are 2 hosts in a cluster and one fails, they will simply (as it were) swap roles. If you have a cluster of 10 hosts and the host running the primary machine fails, the workload switches to the secondary, which becomes the primary, and a new copy is immediately created automatically on another host that is still running. Protection continues as long as there are enough hosts, or as long as there are enough resources.

If you have 3 hosts (one with the primary, one with the secondary) and the primary host fails, the secondary becomes the primary and a new secondary is created on the 3rd host, so protection continues.
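The failover cases described here can be summarized as a small function. It is a hypothetical model: it picks the first other host as the new secondary and ignores resource checks, which the real system performs. When no spare host exists, it returns None, meaning the surviving primary runs unprotected.

```python
from typing import Optional, Sequence, Tuple


def handle_host_failure(primary: str, secondary: str, failed: str,
                        hosts: Sequence[str]) -> Tuple[str, Optional[str]]:
    """Return the new (primary, secondary) placement after a host failure.

    The surviving copy becomes (or stays) primary, and a new secondary is
    created on the first other host. With no spare host the VM runs
    unprotected, signalled by None.
    """
    if failed not in (primary, secondary):
        return primary, secondary  # the FT pair was not affected
    survivor = secondary if failed == primary else primary
    spares = [h for h in hosts if h not in (failed, survivor)]
    return survivor, (spares[0] if spares else None)


if __name__ == "__main__":
    print(handle_host_failure("esx1", "esx2", "esx1", ["esx1", "esx2", "esx3"]))  # ('esx2', 'esx3')
    print(handle_host_failure("esx1", "esx2", "esx1", ["esx1", "esx2"]))          # ('esx2', None)
```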

If you have 2 hosts and one fails, failover works, but the second host, which was secondary and is now primary, will not be protected against the next failure. When the first host reboots, it rejoins HA, Fault Tolerance turns on, and it becomes the secondary while the second host remains primary. An outside observer will think the two simply swapped places, but in fact the pair has been re-created. The job of the .ftgeneration file is to ensure that the primary machine does not start on both hosts. A host tries to rename the file; if the lock is alive, the rename simply fails. The running primary holds this file and will not allow it to be renamed: the standard file-locking approach. If the primary is gone, the file is released, either because the lock times out or because the timestamp shows it is stale, and then it is clear that the secondary must be started as the new primary.
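The "whoever renames the file wins" idea can be illustrated with an atomic rename on a local filesystem. This is a deliberate simplification: it only models the "at most one primary" property. It does not model the lock held by a live primary, lock timeouts, or timestamps. The claimed-file naming scheme is hypothetical, and real behavior on VMFS/NFS datastores differs.

```python
import os
import tempfile


def try_claim_generation(shared_dir: str, host: str) -> bool:
    """Try to become the FT primary by atomically renaming the shared generation file.

    os.rename either moves the file or fails because someone else already moved it,
    so at most one host can succeed; only that host may run the primary.
    """
    current = os.path.join(shared_dir, ".ftgeneration")
    claimed = os.path.join(shared_dir, f".ftgeneration.{host}")  # hypothetical naming
    try:
        os.rename(current, claimed)
        return True
    except FileNotFoundError:
        return False  # another host already claimed it: do NOT power on a second primary


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as shared:
        open(os.path.join(shared, ".ftgeneration"), "w").close()
        print(try_claim_generation(shared, "esx1"))  # True
        print(try_claim_generation(shared, "esx2"))  # False
```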

In our virtual lab environment, getting 10 Gbps is not a problem. But some additional keys are clearly needed, because this requires nested virtualization, plus hardware virtualization support must be second generation. Some additional checks can be disabled with keys in the advanced settings, but VMware has not said anything about them so far. For the previous version of Fault Tolerance, information that it could run in a virtual environment appeared only some time later. They had obviously tested it, and as an unsupported mode for training purposes it was convenient. Perhaps it will appear in future releases, perhaps it is impossible in principle; we will see.

As for HA, a feature called Virtual Machine Component Protection has appeared: HA has learned to understand the state of the storage. HA can now protect against problems with storage connectivity. Before version 6, if storage disappeared, HA did not treat this as a problem and did not try to restart the virtual machine on another host: the host kept working, the virtual machine on it was dead, and the host kept "supporting" it.

Now HA tracks these problems and will try to restart the machine on another host that still has a connection to the storage.

HA can now protect against more kinds of problems.
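The core decision behind this storage-aware restart can be sketched as "find another host that can still see the VM's datastore". This is an illustrative model with hypothetical host and datastore names. It picks hosts in alphabetical order, whereas the real HA placement also weighs resources and other factors.

```python
from typing import Dict, Optional, Set


def vmcp_restart_target(vm_datastore: str, affected_host: str,
                        host_datastores: Dict[str, Set[str]]) -> Optional[str]:
    """Pick a host that can still reach the VM's datastore.

    Before vSphere 6 the host that lost storage kept "running" the dead VM;
    with component protection the VM is restarted on a host that has access.
    """
    for host in sorted(host_datastores):
        if host != affected_host and vm_datastore in host_datastores[host]:
            return host
    return None  # no host can reach the datastore: a restart would not help


if __name__ == "__main__":
    access = {"esx1": set(), "esx2": {"ds1"}, "esx3": {"ds1", "ds2"}}
    print(vmcp_restart_target("ds1", "esx1", access))  # esx2
```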

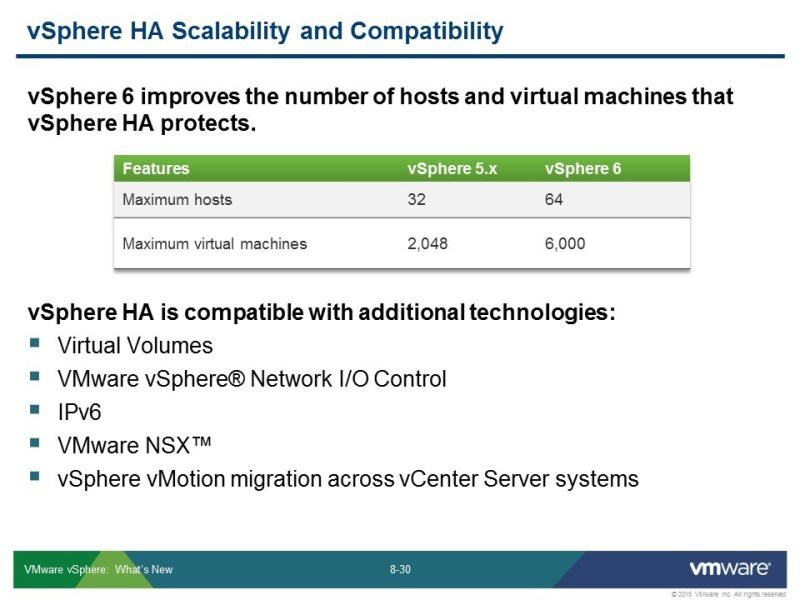

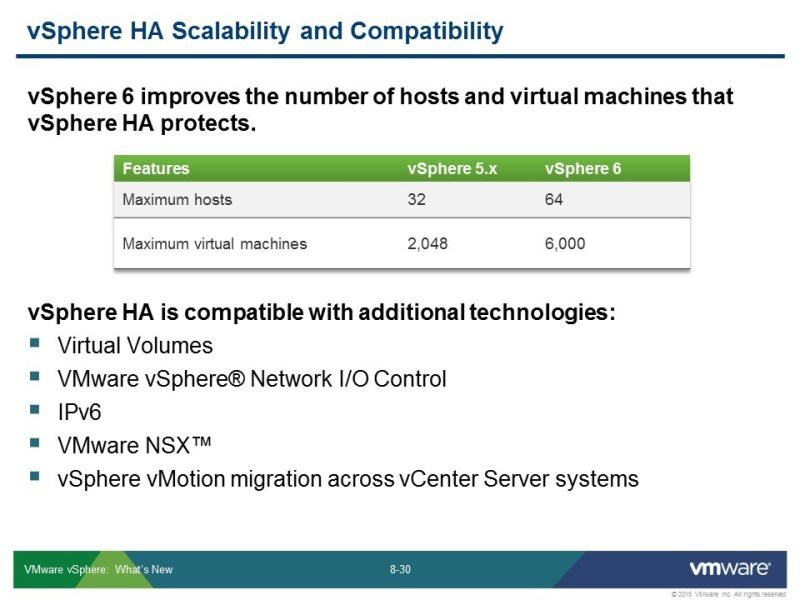

Clusters have grown, and HA has grown with them: clusters of up to 64 hosts and up to 6000 virtual machines can now be protected with HA.

HA also works with Virtual Volumes and with the other items further down the list. NSX appears here as well: HA also works with virtual networks built with it. There is one problem: if vCenter, the controlling component, is unavailable, a machine restarted on another host still has to be connected to a port group in a network built with NSX, that is, a virtual network.

There is a mechanism for this. For a distributed switch, this information is stored in a folder on the datastore; for NSX, there is also a way to get the configuration from the port group.

vCloud Air is VMware's cloud, and it has had a rather difficult history. VMware was one of the first companies to release a public cloud, about 5 years ago, when Amazon was still developing. There were a couple of unsuccessful launches that did not hold up under load, then a pause, and the cloud was renamed; now it has been rebranded again as vCloud Air. In the meantime, competitors have moved ahead. The VMware cloud occupies a specific niche, and it came to market late. Compared to Amazon, the company is relatively small: it did not build its own data centers but found suitable ones and rented facilities, so entering the international market took a long time. For us, this became relevant several months ago, when VMware announced the opening of a data center in Germany. That is not far from us, so it can now be considered a cloud option for buying resources. Points for using vCloud Air can be purchased through any channel, from any distributor: wherever you buy VMware products, you can buy vCloud Air packs and spend your points there.

Under the cut is the decryption of the module, and the video for those who do not want to read the long decoding.

Now we will look at the section on fault tolerance, this is HA, there is also related to planned migration, so protection from planned downtime is a function of vMotion and its new features in the current version of vSphere and a feature like Fault Tolerance. This is now in more detail.

')

This is the most popular thing in vSphere 6. You could already meet with this function, now in more detail about it.

In order to be able to use, for example, vMotion - the migration of virtual machines between V-centers. To do this, it was necessary to solve one problem that was architectural for the subsystem of transferring virtual machines to the “hot” one. The vMotion network - it was the L2 network, so that it worked there had to be one broadcast domain, routing was not supported. Accordingly, in order to transmit over long distances, there was nothing else but a mechanism with large delays; now this delay amounts to 100-150 milliseconds. To do this, it was necessary to make architectural changes to the vMotion network and to the core itself. Plus, you had to add the ability to route to the vMotion network.

For this, the opportunity to create a new TCP / IP stack, which serves for vMotion tasks, has appeared. In fact, custom TCPP stack - it was supported in previous versions, but it was not a supported option, it could be started from the command line, but in order to find out how - it was necessary to rummage through blogs. Now this feature is available immediately. The second IP stack is by default in the system, and it is used to use all the new vMotion news that has appeared.

It turns out that this additional stack does not violate and does not change the vMotion architecture, just before the default gateway was for the internal network, another gateway was needed to go outside, there you set up the channel through which vMotion will communicate. And you do not touch anything that happened before, just add a new function.

What does it allow to do? Migrate virtual machines, the L3 network is used for this - this is the first thing, and secondly, you can transfer machines in a large variant of settings:

- between switches

- between V-centers (not necessarily within the same management domain)

Now it is possible to transfer over long distances. What it can serve as:

- for permanent migration from site to site. For example, if there are 2 data centers, you need to turn off one for servicing, which means the machine can be transferred to another site without stopping, even without shutting down.

- the same can be used as a proactive decision from some kind of catastrophes of a different nature, if you expect this kind of problems - disaster recovery solution (for example, if there is a danger of some kind of storm, then you can transfer everything to another place).

What you need to transfer?

The virtual machines themselves are still the L2 network, because during vMotion you do not run additional scripts that reconfigure the network subsystem of the machine. Therefore, the IP will be the same.

The vMotion network is already the L3 network. To work with such delays, it is necessary to approach the strip more carefully. Now the requirements for the vMotion band are 250 Mbps, which is 3 times lower than in previous versions - 75% of gigabit was used for vMotion to work properly. The reduction in the band requirement must be met in order to run this function.

There are some additional conditions for this to work:

- everything should be the latest, 6th, version where this feature is available.

- V-centers must be interconnected so that they can transmit the necessary information to each other. The virtual machine moves from one infrastructure to another, and it is already "traveling with a suitcase of things." For example, if it was in a cluster, then there are settings relating to it. For example, any admission control rules - she moves with them.

- so that it all works, taking into account channel encryption, it is necessary that all this be synchronized in time.

What does vCenter check before migration and what is saved?

- the virtual machine moves with its own settings, and you need to check that there is no conflict of MAC addresses, even if these addresses are generated automatically by the Sphere, they can still match, so you need to check.

Other standards requirements for vMotion for exchange not only between hosts but also between vCenter:

- so that the virtual machine does not lose the connection to the production network, it should not be connected to a switch that does not have uplink - it should not use the internal network, the network to which it is connected should be available at the new point as well.

Everything, absolutely everything, the virtual machine settings are moved along with it. Changing the cluster, respectively, you need to pass all these parameters

Fault Tolerance function

This function looks good at demonstrations, and is well perceived by the ear, but it was impossible to call it useful before this version of the implementation. Due to technical limitations. This feature could only be enabled for a single-core, single-processor machine that does not use more than 16 gig of RAM.

I remind you that the NA function, in order to use it, you need to create a cluster, and within it you can turn on Fault Tolerance, which protects the machine permanently. Switching from one copy to the second happens instantly, even ping is not lost, the network interaction is not disturbed, and the idle time is almost zero. The problem was that no car could be protected in this way.

Now the requirements are simplified. This function can be used in production - up to 4 processors, up to 64 gig RAM - can be for a machine for which vMotion can be enabled.

Secondly, the algorithm itself has changed. Other protocols are used, and a little differently it all works, easier, but there are some points. Part of the requirements has tightened: for example, network requirements, because more data needs to be transferred. On the other hand, some of the requirements have been simplified: now you don’t need to, as before, adjust everything to full performance. If in the previous version of vSphere, you turned on Fault Tolerance, then your thin disks were moved apart into thick ones - only they were used, the memory was set to the maximum reserve. Now this is all not necessary, now, at least all thin disks remain as thin.

And now another synchronization algorithm (shown on the slide).

- without data loss

- no downtime

- without loss of network connection

That is almost instant switch to backup.

The function also works within a cluster, and is used here as a mechanism that signals a host failure. This feature also protects against reverse failure. In the event of a failure of the primary host, an instantaneous transfer to the spare host occurs.

Here is a comparison, as it was in previous versions, and as in this version.

- processors - up to 4

- one homogeneous cluster is not obligatory, a certain set of checks occurs, but less stringent requirements

- some functions of the new processors are used, in particular, there should be support for hardware virtualization 2 versions

- disks can be any, it is not necessary to push them

VMDK redun - what is at stake. If earlier, the machine that Fault Tolerance protects must lie on the shared storage (copying only the host memory and processor status) - these are two virtual machines that are counted on 2 hosts, which should be as close as possible in terms of parameters. Now the machine can lie entirely on one host, on the second host an entire copy of it in RAM, and files on disks. The presence of balls is required, but not for storing the virtual machine. Synchronization occurs as data on disks, and RAM. Naturally, you have to pay for it - you need 10 gigabits to enable it. Do not violate the laws of physics - the more data you need to transfer, the more network bandwidth is needed.

The number of machines per host has not changed - up to 4, only 4. The high-level architecture has not changed: there is a main machine, and one that is protected, respectively, there can be no more than 4 per host, nothing has changed here.

vSphere DRS - not yet fully supported. The old function Fault Tolerance supported DRS, it was possible to make vMotion for any machine, primary-secondary and DRS could work with it, but now vMotion is preserved, but the cluster has not yet learned how to work with it. Again, this is logical, given that now in-share storing and here something similar to the Enhanced vMotion analogue works, and it does not work for clusters, therefore DRS is not used.

In any case, you need to configure it, because it is still used as a mechanism that says that there was a problem with the host. And it will work out the standard mechanism, which restarts the machine, and will work out the mechanism Fault Tolerance, which simply switches to the spare one, to the copy.

DRS knows about such settings Fault Tolerance, and when it is turned on it will take into account that primary and secondary must be on different hosts. In any case, ON and DRS will scatter machines on different hosts. As I said before: high-level architecture has not changed.

This slide is about the fact that the machine is not required should be on the shared storage. All virtual machine files are synchronized: vmdk files and configuration files. With Fault Tolerance, you can protect a virtual machine that resides in local storage. It is clear that the amount of data in this case will be transferred more - this is another one of the reasons why you need 10 gigabits, but shared storage is only needed for configuration purposes.

Changed the approach to the work of the algorithms. In the previous version, it was the synchronization of two working, counting processors on two hosts. Now everything happens on the main (primary) machine, the calculations are made by one host, and with a short period of time the data is transferred to the additional (secondary) machine. What from the point of view of a person looks like synchronous, simultaneous transmission. That is, it is not necessary to synchronize the state of the processor, as a matter of fact, instant vMotion is obtained. You run vMotion, but the source is not deleted, and it constantly synchronizes data on one host and on the second. At the same time, it synchronizes both the state of the RAM and the state of the vmdk files.

Here is a graphical representation of how this algorithm is used. In the second Fault Tolerance there was something similar, but there was a special protocol for synchronizing calculations, now this is a specific use of the vMotion function. During this procedure, at certain intervals, memory pages are copied from one host to another, which are marked in the table as either “not currently used” or “least frequently used”. If they have changed at the time of copying, the process of copying starts.

It uses a similar approach, but uses the fast checkpoint mechanism. These synchronizations are done after a clear period of time - after 10 milliseconds, the data that has changed during this time is transmitted to the second machine, to the neighboring host, which rotates on the next machine. This feature is constantly running, which keeps track of these checkpoints.

This happens all the time while the Fault Tolerance feature is on. A couple of years ago there was a preview, on such a machine - 4 cores, 64 gigabytes, with a medium load database, there the Fault Tolerance traffic on the network was about 3-4 gigabits. Accordingly, from 10 it can "eat" a good piece. It depends on the data in the virtual machine, the database is a good example to see how much bandwidth can go on it.

Still, shared storage is needed - for what? To synchronize the work of this mechanism. 2 files are created: shared.vmft and .ftgeneration - each has its own task. shared.vmft is the transfer of some configuration. In order for the host, in the case of the ON mode to operate, say where to turn on which machine. Ftgeneration is a protection against so-called. "Schizophrenia" split of the brain, from a standard problem that occurs in different types of cluster so that there is no duplication of service. In order not to accidentally determine the host as a failed one, and 2 copies of the machine with the same IP addresses and network settings do not turn on right away - this will not lead to anything good. This datastor may not be large, there are no large performance requirements for it, but for it to work, you still need a shared datastor to put these configuration files.

shared.vmft holds information about the virtual machines: their IDs and the paths to the .vmx files where they currently reside, i.e. which one to power on. When a machine moves from host to host, the information in these files changes.
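The kind of record described above can be modeled as follows. This is a hypothetical sketch only: the real format of shared.vmft is not given in the text, and the class `FtPairRecord`, its fields, and the example paths are all illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class FtPairRecord:
    """Hypothetical model of the per-VM data the text says shared.vmft tracks."""
    vm_id: str
    primary_host: str
    primary_vmx: str
    secondary_host: str
    secondary_vmx: str

    def vmx_to_power_on(self, failed_host):
        """Which .vmx HA should power on if `failed_host` goes down."""
        if failed_host == self.primary_host:
            return self.secondary_vmx   # the secondary copy takes over
        return None                     # primary is still running

    def move_secondary(self, new_host, new_vmx):
        """Update the record when the secondary is placed on another host."""
        self.secondary_host = new_host
        self.secondary_vmx = new_vmx


# Hypothetical host names and datastore paths, for illustration only.
rec = FtPairRecord("vm-42", "esx01", "/vmfs/volumes/ds1/app/app.vmx",
                   "esx02", "/vmfs/volumes/ds2/app/app.vmx")
```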

If there are 2 hosts in a cluster and one fails, the machines will simply (so to speak) swap roles. If you have a cluster of 10 hosts and the host running the primary machine breaks down, the workload switches to the secondary, which becomes primary, and since the remaining hosts are still running, a new secondary copy is created automatically. Protection keeps working as long as there are enough hosts, or enough resources, for it.

With 3 hosts, the primary machine runs on one host and the secondary on another. If the primary host fails, the secondary becomes primary and a new secondary machine is created on the third host, so protection continues there.
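The placement logic in the scenarios above can be sketched as a small function. This assumes a simplified model in which any surviving host other than the new primary may hold the secondary. `fail_over` and the `can_host` predicate (standing in for "enough resources") are hypothetical names, not VMware APIs.

```python
def fail_over(hosts, primary, secondary, failed, can_host=lambda h: True):
    """Return (new_primary, new_secondary or None) after host `failed` goes down."""
    if failed == primary:
        new_primary = secondary        # the secondary is promoted
    elif failed == secondary:
        new_primary = primary          # primary keeps running, needs a new secondary
    else:
        return primary, secondary      # the FT pair is unaffected
    alive = [h for h in hosts if h != failed]
    candidates = [h for h in alive if h != new_primary and can_host(h)]
    new_secondary = candidates[0] if candidates else None  # None: unprotected
    return new_primary, new_secondary


three = ["h1", "h2", "h3"]
two = ["h1", "h2"]
```

With two hosts the function returns `None` for the secondary: the VM survives the failure but stays unprotected until a host returns.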

With 2 hosts, protection works when a host fails, but afterwards the former secondary, now primary, is not protected against the next failure. When the first host reboots, it rejoins HA, Fault Tolerance turns on again, and that host receives the new secondary while the other keeps the primary. An outside observer will think the machines simply swapped places, but in fact the pair was re-created. Preventing the primary machine from starting on both hosts is exactly the job of the .ftgeneration file. A host tries to rename the file; if the current primary is alive, this simply fails, because the primary holds the file and does not allow it to be renamed, the standard file-locking approach. If the primary machine is gone, the lock is released after a time-out, or the time stamp shows that it is stale, and then it is clear that the second machine must be started as the new primary.
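The lock-or-timeout arbitration described above can be modeled as a lease. This is a minimal sketch of the general technique, not VMware's implementation: `GenerationFile`, `renew`, `try_take_over` and the `lease_timeout` value are hypothetical, and the "rename" is represented by a change of owner.

```python
class GenerationFile:
    """Hypothetical lease model of .ftgeneration split-brain protection.

    The live primary keeps renewing its lock; another host may take the
    file over (the "rename") only after the lock has gone stale.
    """

    def __init__(self, owner, now, lease_timeout):
        self.owner = owner
        self.last_renewed = now
        self.lease_timeout = lease_timeout

    def renew(self, host, now):
        """Only the current owner can refresh the lock's time stamp."""
        if host == self.owner:
            self.last_renewed = now

    def try_take_over(self, host, now):
        """Return True if `host` may start the VM as primary."""
        if host == self.owner:
            return True
        if now - self.last_renewed < self.lease_timeout:
            return False               # primary still alive: rename refused
        self.owner = host              # stale lock: safe to take over
        self.last_renewed = now
        return True


gen = GenerationFile("esx01", now=0, lease_timeout=15)
gen.renew("esx01", now=10)
```

The key property is that at most one host can win at any moment, so two primaries with the same IP addresses never run side by side.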

In our virtual lab environment, getting 10 gigabits is not a problem. But additional settings are clearly needed, since nested virtualization is required, and the hardware virtualization support must be second generation. Some of the additional checks can be disabled through advanced settings, but so far VMware says nothing about them. For the previous version of Fault Tolerance, information on running it in a virtual environment appeared only after some time. VMware had obviously tested it; it was an unsupported mode, convenient for training purposes. Perhaps it will appear here in future releases, or perhaps it is impossible in principle; we will see.

Regarding HA, a new feature called Virtual Machine Component Protection has appeared: HA has learned to understand the state of the storage. HA can now protect against problems with connectivity to storage. Before version 6, if a host lost its storage, this was not treated as a problem requiring a restart of the virtual machine on another host: the host kept running, the virtual machine on it was dead, and the host would keep hosting it.

Now HA tracks these problems and will try to restart the virtual machine on another host that still has access to the storage.

HA can now protect against more kinds of problems.
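The restart decision can be sketched as picking a host that can still reach the VM's datastore. This assumes a simple map of host to reachable datastores; `pick_restart_host` is a hypothetical name, and the real feature also distinguishes between storage failure conditions and applies its own policies before restarting anything.

```python
def pick_restart_host(vm_datastore, affected_host, host_datastores):
    """Choose a host that still sees the VM's datastore.

    host_datastores: dict host -> set of datastores the host can currently access.
    Returns a host name, or None if no other host can reach the datastore.
    """
    for host, datastores in sorted(host_datastores.items()):
        if host != affected_host and vm_datastore in datastores:
            return host
    return None


# esx01 has lost its storage; esx02 and esx03 still see ds1.
hosts = {"esx01": set(), "esx02": {"ds1"}, "esx03": {"ds1", "ds2"}}
```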

Clusters have become larger, and HA scales with them: up to 64 hosts and up to 8,000 virtual machines per cluster can now be protected by HA.

HA also works with Virtual Volumes and with the other items further down the list, including NSX: HA will work with virtual networks built with it as well. There is a particular problem here: if vCenter, the controlling component, is unavailable and a machine is restarted on another host, its port still has to be connected to the right port group in a network built with NSX, i.e. a virtual network.

There is a mechanism that makes this possible. For a distributed switch, this information is stored in a folder on the datastore, and for NSX there is also a way to obtain the configuration from the port group.

vCloud Air is VMware's cloud, and it has had a rather difficult history. VMware was one of the first companies to release a public cloud, about 5 years ago, when Amazon was still developing. There were a couple of unsuccessful launches that did not take off, then a pause, and the cloud was renamed; now it has been rebranded again as vCloud Air. In the meantime, competitors have moved ahead. The VMware cloud occupies a specific niche, and it entered the market late. Compared with Amazon the company is relatively small: it did not build its own data centers but found suitable facilities and rented them, so it took a long time to enter the international market. For us it became relevant a few months ago, when VMware announced the opening of a data center in Germany, which is not far from us, so it can now be considered as a cloud option where you can buy resources. Credits for vCloud Air can be bought through any channel, from any distributor: wherever you buy VMware products, you can buy vCloud Air credit packs and then spend those credits.



Source: https://habr.com/ru/post/259417/
