Increase performance with SO_REUSEPORT in NGINX 1.9.1

NGINX version 1.9.1 introduces a new feature that allows you to use the

(In NGINX Plus, this functionality will appear in release 7, which will be released later this year.)

The

As shown in the figure, without

')

With the

This allows for multi-core systems to reduce blocking when multiple workflows simultaneously accept connections. However, this also means that when one of the workflows is blocked by a long operation, this will affect not only the connections that it already processes, but also those that are still waiting in the queue.

To enable

Specifying

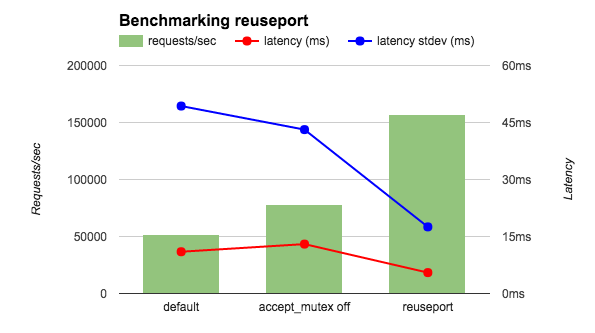

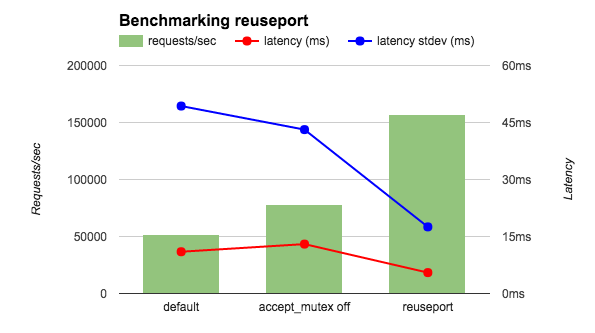

Testing performance with

Measurements were made using wrk using 4 NGINX workflows on the 36th nuclear AWS instance. To minimize network costs, the client and server worked through a loopback interface, and NGINX was configured to return the

Measurements were also taken when the client and server were running on different machines and an html file was requested. As can be seen from the table, with

In these tests, the frequency of requests was extremely high, and they did not require any complex processing. Various observations confirm that the greatest effect of using the

Thanks to Sepherosa Ziehau and Yingqi Lu, each of whom proposed their own solution for running

SO_REUSEPORT socket option, which is available in modern versions of operating systems such as DragonFly BSD and Linux (kernels 3.9 and newer). This option allows you to open several listening sockets at the same address and port at once. At the same time, the kernel will distribute incoming connections between them.(In NGINX Plus, this functionality will appear in release 7, which will be released later this year.)
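As a rough illustration of the socket option described in the introduction, the sketch below opens two listening sockets on the same address and port. It assumes Linux 3.9 or later and Python 3; the helper names `open_reuseport_listener` and `demo_shared_port` are made up for this example and have nothing to do with how NGINX implements the feature.

```python
import socket


def open_reuseport_listener(port: int, host: str = "127.0.0.1") -> socket.socket:
    """Create a TCP listening socket with SO_REUSEPORT set before bind()."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # Every socket that shares the address must set the option before bind().
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
    sock.bind((host, port))
    sock.listen(128)
    return sock


def demo_shared_port():
    """Return (both listeners share one port, a plain bind to that port fails)."""
    first = open_reuseport_listener(0)        # port 0: the kernel picks a free port
    port = first.getsockname()[1]
    second = open_reuseport_listener(port)    # second socket, same address and port
    same_port = first.getsockname() == second.getsockname()

    # A socket that does not set SO_REUSEPORT cannot join the group.
    plain = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        plain.bind(("127.0.0.1", port))
        plain_bind_failed = False
    except OSError:
        plain_bind_failed = True
    finally:
        plain.close()

    first.close()
    second.close()
    return same_port, plain_bind_failed


if __name__ == "__main__":
    print(demo_shared_port())
```

The option has to be set on every socket in the group, which is why the plain socket in the sketch is rejected.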

SO_REUSEPORT has many potential uses. For example, some applications use it to upgrade their executable code on the fly (NGINX has long supported this through a different mechanism). In NGINX, enabling this option improves performance in some cases by reducing lock contention.

As the figure shows, without SO_REUSEPORT a single listening socket is shared among multiple worker processes, and each of them tries to accept new connections from it.

With the SO_REUSEPORT option, there are multiple listening sockets, one per worker process. The kernel decides which socket receives each new connection (and therefore which worker process will eventually handle it).

On multi-core systems, this reduces lock contention when multiple worker processes accept connections simultaneously. However, it also means that when a worker process is blocked by a long-running operation, the block affects not only the connections it is already handling, but also those still waiting in its queue.
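The per-socket queues described above can be observed with a small experiment. The sketch below is a hypothetical single-process stand-in for several workers, assuming Linux 3.9 or later and Python 3: it opens a few SO_REUSEPORT listeners on one loopback port, makes a batch of client connections, and counts how many land in each listener's queue. The function name `count_accepts` and the default sizes are illustrative choices only.

```python
import select
import socket
import time
from typing import List


def count_accepts(num_listeners: int = 4, num_clients: int = 100) -> List[int]:
    """Count how many connections the kernel queues on each listener."""
    listeners = []
    port = 0  # the first bind lets the kernel choose; the rest reuse that port
    for _ in range(num_listeners):
        sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEPORT, 1)
        sock.bind(("127.0.0.1", port))
        port = sock.getsockname()[1]
        sock.listen(128)
        sock.setblocking(False)
        listeners.append(sock)

    # Each connection is placed in exactly one listener's accept queue.
    clients = [socket.create_connection(("127.0.0.1", port))
               for _ in range(num_clients)]

    counts = [0] * num_listeners
    deadline = time.monotonic() + 5.0
    while sum(counts) < num_clients and time.monotonic() < deadline:
        ready, _, _ = select.select(listeners, [], [], 0.1)
        for sock in ready:
            try:
                conn, _addr = sock.accept()
            except BlockingIOError:
                continue
            conn.close()
            counts[listeners.index(sock)] += 1

    for sock in clients + listeners:
        sock.close()
    return counts


if __name__ == "__main__":
    print(count_accepts())
```

A connection queued on one listener is never handed to another. If the code stopped calling `accept()` on one of the sockets, the connections in its queue would simply wait, which is the trade-off noted above for a blocked worker process.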

Configuration

To enable SO_REUSEPORT in the http or stream module, add the reuseport parameter to the listen directive, as in this example:

    http {
        server {
            listen 80 reuseport;
            server_name example.org;
            ...
        }
    }

    stream {
        server {
            listen 12345 reuseport;
            ...
        }
    }

Specifying reuseport automatically disables accept_mutex for that socket, since no mutex is needed in this mode.

Testing performance with reuseport

Measurements were made with wrk against 4 NGINX worker processes on a 36-core AWS instance. To minimize network overhead, the client and server communicated over the loopback interface, and NGINX was configured to return the string OK. Three configurations were compared: accept_mutex on (the default), accept_mutex off, and reuseport. As the diagram shows, enabling reuseport increases the number of requests per second by 2-3 times and reduces both latency and its variability.

Measurements were also taken with the client and server running on different machines and an HTML file being requested. As the table shows, reuseport reduces latency much as in the previous measurement, and the spread shrinks even more (by almost an order of magnitude). Other tests also show good results. With reuseport, the load was distributed evenly across worker processes. With accept_mutex enabled, the load was unbalanced at the start of the test, and with it disabled, all worker processes consumed more CPU time.

| | Latency (ms) | Latency stdev (ms) | CPU Load |
|---|---|---|---|
| Default | 15.65 | 26.59 | 0.3 |
| accept_mutex off | 15.59 | 26.48 | 10 |
| reuseport | 12.35 | 3.15 | 0.3 |
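To put the table's numbers in perspective, the short calculation below derives the relative change in mean latency and the ratio of standard deviations between the default configuration and reuseport. The figures are copied from the table; the variable and function names are illustrative.

```python
# Latency figures copied from the table above (milliseconds).
results = {
    "default": {"latency_ms": 15.65, "stdev_ms": 26.59},
    "accept_mutex off": {"latency_ms": 15.59, "stdev_ms": 26.48},
    "reuseport": {"latency_ms": 12.35, "stdev_ms": 3.15},
}


def percent_change(baseline: float, value: float) -> float:
    """Relative change from baseline to value, in percent."""
    return (value - baseline) / baseline * 100.0


base = results["default"]
reuse = results["reuseport"]

latency_change = percent_change(base["latency_ms"], reuse["latency_ms"])
stdev_ratio = base["stdev_ms"] / reuse["stdev_ms"]

if __name__ == "__main__":
    print(f"mean latency change: {latency_change:.1f}%")   # about -21.1%
    print(f"stdev reduction factor: {stdev_ratio:.2f}x")   # about 8.44x
```

Mean latency drops by roughly a fifth, while the standard deviation shrinks by a factor of about 8.4, which is the "almost an order of magnitude" mentioned in the text.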

In these tests, the request rate was extremely high and the requests required no complex processing. Various observations confirm that reuseport gives the greatest benefit when the load fits this pattern. For this reason, the reuseport parameter is not available for the mail module, since mail traffic definitely does not meet these conditions. We recommend that you run your own measurements to confirm that reuseport actually helps, rather than blindly enabling it wherever possible. Some tips on testing NGINX performance can be found in Konstantin Pavlov's talk at the nginx.conf 2014 conference.

Thanks

Thanks to Sepherosa Ziehau and Yingqi Lu, each of whom proposed their own solution for supporting SO_REUSEPORT in NGINX. The NGINX team used their ideas for the implementation, which we consider to be ideal.

Source: https://habr.com/ru/post/259403/