Cackle comment system: how we did the analytics

Hello! In our previous post about the cloud service Cackle, we talked about architecture, technology and loads in general. Today we want to share how under the conditions of such heavy loads and already accumulated information (30,000,000 comments from 2011) we did a detailed analytics for the Cackle comment system . Our method of collecting statistics is universal and I think it will be interesting, in terms of practical application, to all those who are faced with the task of developing analytics, but for the time being do not quite know where to start.

Since the Cackle comment system has been in operation since 2011, about 30 million comments have been accumulated. About 100,000 are published per day, on peaks, per second, up to 1000 simultaneous.

All this in the database is stored in the comment table of 15GB size. The table is indexed by the site_id field, which is the client's site identifier. Total registered 35,000 sites. The largest number of comments is rusvesna.su (9 million), svpressa.ru (800 thousand), 3dnews.ru (500 thousand), carambatv.ru (450 thousand).

Requirements for analytics are the collection and updating of daily statistics for each site:

All this should work quickly regardless of the site, large or very small.

')

As we wrote earlier , as a database we have PostgreSQL with replication into several data centers distributed throughout Russia and Europe. PostgreSQL copes with the load and it was chosen as the main component of the business logic for collecting comment statistics.

In short, the collection is done in two stages.

Suppose a client came into the administration panel, chose one of their sites and moved to Analytics:

1. If the selected site has comments and no statistics on them, then PostgreSQL executes an SQL request for the initial collection of statistics on all the necessary parameters;

2. Next, the same SQL query will be put in the heading and executed every 15 minutes to update the data.

So we need to collect daily statistics of comments for all the time for: the selected site (site_id), for all (total), approved (approved), pending (pending), spam (spam), deleted (deleted) comments, likes (up) , dizlaykam (down), as well as for each social provider (vk, ok, fb, tw, gp, etc.), anonymous (anonym) or SSO (sso) user from the comment table and mc_user (user table) . The comment.created field is the time the comment was created.

The distribution of the query execution time depends on the number of site comments and looks like this:

1. Comments up to 100 000 - request time up to 5 seconds;

2. Comments up to 1 000 000 - request time up to 1 min;

3. Comments up to 9 000 000 - request time up to 2 minutes;

It is clear that every time chasing this SQL is suicide and therefore we need to create additional tables to store the data received by this query.

Next, add the INSERT INTO comment_stats to the first SQL query.

Now we will get the analytics data directly from the comment_stats table:

There was a problem updating data. It is impossible to simply take and execute the SQL of the initial collection of statistics all the time, because if analytics are connected to several large sites, the database performance will disappear.

The simplest and most effective solution is to add a new comment_id field to the comment_stats table, which stores the maximum comment id for each day. When updating data, the collection of statistics will begin with this id. Given all this, we modify the initial request:

If anyone noticed, then this construction

Now everything is OK, except that in this form the data update request will not work. Since the first call for already collected statistics will result in an exception caused by an attempt to insert data with the already existing private key comment_stats_pkey. In other words, we collected the initial statistics, 15 minutes had passed, the update data was launched with the condition id> last comment_id from comment_stats and if someone published new comments during this time, our query will try to insert data with the same day and site_id.

There is a very simple solution (without any Rules on INSERT ) - before requesting data update, delete the last line in the comment_stats table:

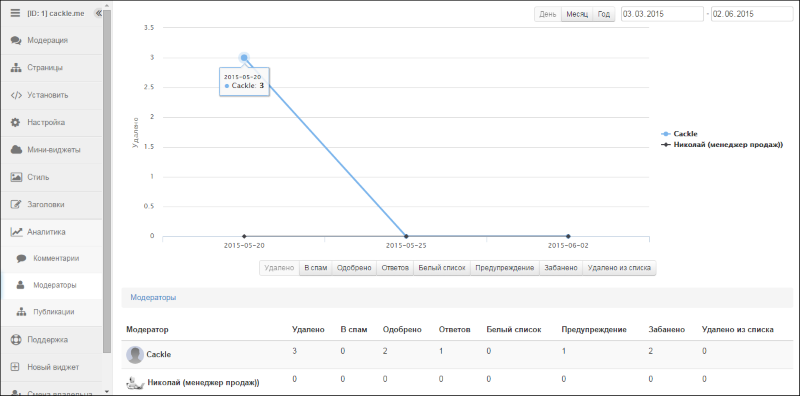

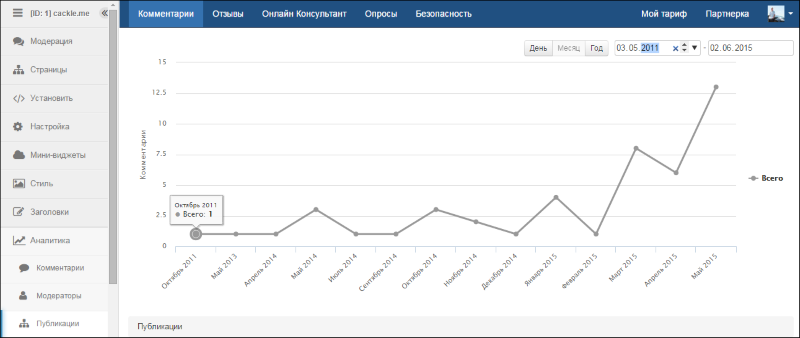

The described mechanism is very simple and effective. It has simple logic, which is suitable for collecting almost any statistics of objects in a relational database. For example, we, using all the same 2 SQL queries (deletion, collection), collect statistics of moderators, publications (posts), and very soon the same analytics will appear for the Cackle Reviews review system.

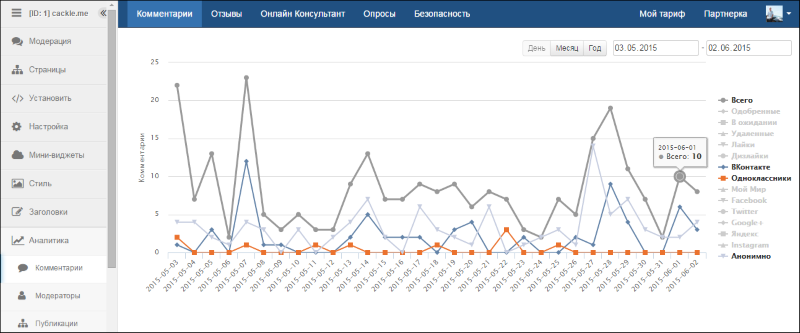

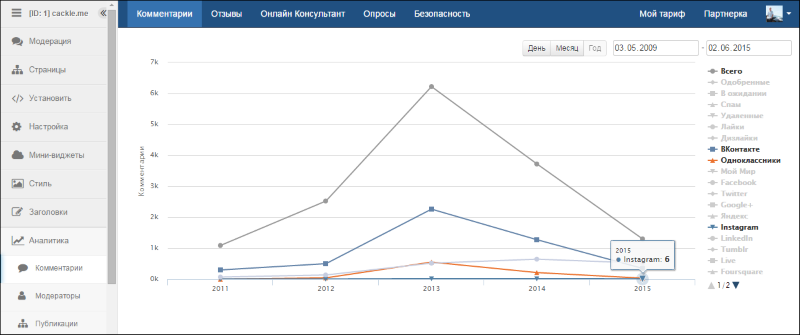

In the client browser, we use HighCharts to display charts. This is a paid library (for commercial projects) charting. I have to say that before choosing HighCharts, we looked at many similar frameworks, and none was better.

From what I liked most: the lack of lags even at 33,000 thousand points, mobile adaptability, clever time interval narrowing, easy integration, good API. By the way, for startups they have a discount, you can ask for it in a letter.

A few screenshots of how this ultimately looks.

If you have additional questions on the above technology or on our system, we will be happy to answer hi@cackle.me.

Thanks for attention!

Not a lot of source data

Since the Cackle comment system has been in operation since 2011, about 30 million comments have been accumulated. About 100,000 are published per day, on peaks, per second, up to 1000 simultaneous.

All this in the database is stored in the comment table of 15GB size. The table is indexed by the site_id field, which is the client's site identifier. Total registered 35,000 sites. The largest number of comments is rusvesna.su (9 million), svpressa.ru (800 thousand), 3dnews.ru (500 thousand), carambatv.ru (450 thousand).

Requirements for analytics are the collection and updating of daily statistics for each site:

- Comments (total, published, pending, spam, deleted);

- Likes and dislikes;

- Comments from social providers, anonymous and SSO (authorized through a single authorization mechanism).

All this should work quickly regardless of the site, large or very small.

')

Server part - PostgreSQL

As we wrote earlier , as a database we have PostgreSQL with replication into several data centers distributed throughout Russia and Europe. PostgreSQL copes with the load and it was chosen as the main component of the business logic for collecting comment statistics.

In short, the collection is done in two stages.

Suppose a client came into the administration panel, chose one of their sites and moved to Analytics:

1. If the selected site has comments and no statistics on them, then PostgreSQL executes an SQL request for the initial collection of statistics on all the necessary parameters;

2. Next, the same SQL query will be put in the heading and executed every 15 minutes to update the data.

1. Initial collection of statistics for all comments.

So we need to collect daily statistics of comments for all the time for: the selected site (site_id), for all (total), approved (approved), pending (pending), spam (spam), deleted (deleted) comments, likes (up) , dizlaykam (down), as well as for each social provider (vk, ok, fb, tw, gp, etc.), anonymous (anonym) or SSO (sso) user from the comment table and mc_user (user table) . The comment.created field is the time the comment was created.

SELECT DATE_TRUNC('day', t.created) as day, :siteId as site_id, COUNT(t) as total, SUM(CASE WHEN t.status = 1 THEN 1 ELSE 0 END) as approved, SUM(CASE WHEN t.status = 0 THEN 1 ELSE 0 END) as pending, SUM(CASE WHEN t.status = 3 THEN 1 ELSE 0 END) as spam, SUM(CASE WHEN t.status = 2 THEN 1 ELSE 0 END) as deleted, SUM(CASE WHEN t.rating > 0 THEN 1 ELSE 0 END) as up, SUM(CASE WHEN t.rating < 0 THEN 1 ELSE 0 END) as down, SUM(CASE WHEN t.provider = 'vkontakte' THEN 1 ELSE 0 END) as vk, SUM(CASE WHEN t.provider = 'odnoklassniki' THEN 1 ELSE 0 END) as ok, SUM(CASE WHEN t.provider = 'facebook' THEN 1 ELSE 0 END) as fb, SUM(CASE WHEN t.provider = 'twitter' THEN 1 ELSE 0 END) as tw, ... SUM(CASE WHEN t.provider = 'sso' THEN 1 ELSE 0 END) as sso, SUM(CASE WHEN t.anonym > 0 THEN 1 ELSE 0 END) as anonym FROM ( SELECT c.id, c.created, c.status, c.rating, c.anonym, u.provider FROM comment c LEFT JOIN mc_user u ON c.author = u.id WHERE c.site_id = :siteId ) t GROUP BY DATE_TRUNC('day', t.created) ORDER BY DATE_TRUNC('day', t.created); The distribution of the query execution time depends on the number of site comments and looks like this:

1. Comments up to 100 000 - request time up to 5 seconds;

2. Comments up to 1 000 000 - request time up to 1 min;

3. Comments up to 9 000 000 - request time up to 2 minutes;

It is clear that every time chasing this SQL is suicide and therefore we need to create additional tables to store the data received by this query.

CREATE TABLE comment_stats ( day date NOT NULL, site_id bigint NOT NULL, total integer, approved integer, pending integer, spam integer, deleted integer, up integer, down integer, vk integer, ok integer, fb integer, tw integer, ... sso integer, anonym integer, CONSTRAINT comment_stats_pkey PRIMARY KEY (day, site_id) ); Next, add the INSERT INTO comment_stats to the first SQL query.

INSERT INTO comment_stats SELECT DATE_TRUNC('day', t.created) as day, :siteId as site_id, COUNT(t) as total, SUM(CASE WHEN t.status = 1 THEN 1 ELSE 0 END) as approved, ... Now we will get the analytics data directly from the comment_stats table:

select * from comment_stats where site_id = :siteId .2. Update data

There was a problem updating data. It is impossible to simply take and execute the SQL of the initial collection of statistics all the time, because if analytics are connected to several large sites, the database performance will disappear.

The simplest and most effective solution is to add a new comment_id field to the comment_stats table, which stores the maximum comment id for each day. When updating data, the collection of statistics will begin with this id. Given all this, we modify the initial request:

INSERT INTO comment_stats SELECT DATE_TRUNC('day', t.created) as day, :siteId as site_id, -- id MAX(t.id) as comment_id, COUNT(t) as total, SUM(CASE WHEN t.status = 1 THEN 1 ELSE 0 END) as approved, ... SUM(CASE WHEN t.anonym > 0 THEN 1 ELSE 0 END) as anonym FROM ( SELECT c.id, c.created, c.status, c.rating, c.anonym, u.provider FROM comment c LEFT JOIN mc_user u ON c.author = u.id WHERE c.site_id = :siteId -- id comment_id comment_stats AND c.id > (SELECT COALESCE(MAX(comment_id), 0) FROM comment_stats WHERE site_id = :siteId) ) t GROUP BY DATE_TRUNC('day', t.created) ORDER BY DATE_TRUNC('day', t.created); If anyone noticed, then this construction

COALESCE(MAX(comment_id), 0) allows you to make one request both for the initial collection of statistics, as well as for updating data. That is, if there is nothing in comment_stats, then we return 0 and the collection goes through the entire comment table for site_id, if there is data, then the collection starts only from the last comment_id.Now everything is OK, except that in this form the data update request will not work. Since the first call for already collected statistics will result in an exception caused by an attempt to insert data with the already existing private key comment_stats_pkey. In other words, we collected the initial statistics, 15 minutes had passed, the update data was launched with the condition id> last comment_id from comment_stats and if someone published new comments during this time, our query will try to insert data with the same day and site_id.

There is a very simple solution (without any Rules on INSERT ) - before requesting data update, delete the last line in the comment_stats table:

DELETE FROM comment_stats WHERE site_id = :siteId AND day IN (SELECT day FROM comment_stats WHERE site_id = :siteId ORDER BY day DESC LIMIT 1) Summary code

The comment_stats table contains all the statistics for all sites:

Single request for the initial collection or updating of statistics:

Before updating it is necessary to start:

CREATE TABLE comment_stats ( day date NOT NULL, site_id bigint NOT NULL, total integer, approved integer, pending integer, spam integer, deleted integer, up integer, down integer, vk integer, ok integer, fb integer, tw integer, ... sso integer, anonym integer, CONSTRAINT comment_stats_pkey PRIMARY KEY (day, site_id) ); Single request for the initial collection or updating of statistics:

INSERT INTO comment_stats SELECT DATE_TRUNC('day', t.created) as day, :siteId as site_id, MAX(t.id) as comment_id, COUNT(t) as total, SUM(CASE WHEN t.status = 1 THEN 1 ELSE 0 END) as approved, SUM(CASE WHEN t.status = 0 THEN 1 ELSE 0 END) as pending, SUM(CASE WHEN t.status = 3 THEN 1 ELSE 0 END) as spam, SUM(CASE WHEN t.status = 2 THEN 1 ELSE 0 END) as deleted, SUM(CASE WHEN t.rating > 0 THEN 1 ELSE 0 END) as up, SUM(CASE WHEN t.rating < 0 THEN 1 ELSE 0 END) as down, SUM(CASE WHEN t.provider = 'vkontakte' THEN 1 ELSE 0 END) as vk, SUM(CASE WHEN t.provider = 'odnoklassniki' THEN 1 ELSE 0 END) as ok, SUM(CASE WHEN t.provider = 'facebook' THEN 1 ELSE 0 END) as fb, SUM(CASE WHEN t.provider = 'twitter' THEN 1 ELSE 0 END) as tw, ... SUM(CASE WHEN t.provider = 'sso' THEN 1 ELSE 0 END) as sso, SUM(CASE WHEN t.anonym > 0 THEN 1 ELSE 0 END) as anonym FROM ( SELECT c.id, c.created, c.status, c.rating, c.anonym, u.provider FROM comment c LEFT JOIN mc_user u ON c.author = u.id WHERE c.site_id = :siteId AND c.id > (SELECT COALESCE(MAX(comment_id), 0) FROM comment_stats WHERE site_id = :siteId) ) t GROUP BY DATE_TRUNC('day', t.created) ORDER BY DATE_TRUNC('day', t.created); Before updating it is necessary to start:

DELETE FROM comment_stats WHERE site_id = :siteId AND day IN (SELECT day FROM comment_stats WHERE site_id = :siteId ORDER BY day DESC LIMIT 1) The described mechanism is very simple and effective. It has simple logic, which is suitable for collecting almost any statistics of objects in a relational database. For example, we, using all the same 2 SQL queries (deletion, collection), collect statistics of moderators, publications (posts), and very soon the same analytics will appear for the Cackle Reviews review system.

Client part - HighCharts

In the client browser, we use HighCharts to display charts. This is a paid library (for commercial projects) charting. I have to say that before choosing HighCharts, we looked at many similar frameworks, and none was better.

From what I liked most: the lack of lags even at 33,000 thousand points, mobile adaptability, clever time interval narrowing, easy integration, good API. By the way, for startups they have a discount, you can ask for it in a letter.

Javascript integration code

new Highcharts.Chart({ chart: { type: 'line', // <div id="chart"></div> renderTo: 'chart' }, title: { text: '', style: { //title display: 'none' } }, xAxis: { // days ( comment_stats) // ['2015-05-03', '2015-05-04', ... '2015-06-03'] categories: days }, yAxis: { min:0, title: { // Y text: MESSAGES.comments } }, legend: { layout: 'vertical', align: 'right', verticalAlign: 'middle', borderWidth: 0 }, //series : // , , , , .. series: series // credits: { enabled: false } }); // series //Params - var Params = [ { name: 'total', index: 3, color: '#999' }, { name: 'approved', index: 4, color: '#9edd69' }, { name: 'pending', index: 5, color: '#ffbb3d' }, { name: 'spam', index: 6, color: '#ff95af' }, { name: 'deleted', index: 7, color: '#666' }, { name: 'up', index: 8, color: '#239600' }, { name: 'down', index: 9, color: '#ff2f2f' }, { name: 'vk', index: 10, color: '#6383a8' }, { name: 'ok', index: 11, color: '#eb722e' }, { name: 'fb', index: 13, color: '#4e69a2' }, { name: 'tw', index: 14, color: '#55acee' }, ... { name: 'sso', index: 22, color: '#17c200' }, { name: 'anonym', index: 23, color: '#c6cde0' } ]; for (var p in Params) { //stats //: stats.total, stats.spam, stats.vk, stats.anony .. var param = Params[p], stat = stats[param.name]; if (stat) { series.push({ nick: param.name, name: MESSAGES[param.name], // data: stat, color: param.color }); } } A few screenshots of how this ultimately looks.

If you have additional questions on the above technology or on our system, we will be happy to answer hi@cackle.me.

Thanks for attention!

Source: https://habr.com/ru/post/259301/

All Articles