A few tips on OpenMP

OpenMP is a standard that defines a set of compiler directives, library procedures, and environment variables for creating multi-threaded programs.

Many articles have been written about OpenMP. This one, however, collects a few tips that help avoid some common errors and that are rarely covered in lectures or books.


1. Name the critical sections.

In the queue, sons of bitches, in the queue! // M. A. Bulgakov "Heart of a Dog"

With the critical directive we can mark a piece of code that is executed by only one thread at a time. If one thread has started executing a critical section with a given name, other threads that reach a section with the same name are blocked and wait their turn. As soon as the first thread leaves the section, one of the blocked threads enters it. The order in which the waiting threads enter is not defined.

    #pragma omp critical [(name)]

Critical sections can be named or unnamed. Naming them can improve performance in some situations. According to the standard, all critical sections without a name are associated with one and the same name, so they exclude each other. Giving sections different names allows two or more critical sections to execute at the same time.

Example:

    #pragma omp critical (first)
    {
        workA();
    }
    #pragma omp critical (second)
    {
        workB();
    }
    // workA() and workB() can execute at the same time

    #pragma omp critical
    {
        workC();
    }
    #pragma omp critical
    {
        workD();
    }
    // workC() and workD() cannot execute at the same time

When choosing a name, be careful not to reuse the names of system functions or names that are already in use. If your critical sections work with the same resource (output to the same file, output to the screen), give them the same name or leave them all unnamed.
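As a minimal sketch of the idea, the function below protects two independent counters with two differently named critical sections, so a thread updating one counter does not block a thread updating the other. The names count_parity, even_counter and odd_counter are made up for this illustration, and the code also compiles and gives the same result when OpenMP is disabled.

```c
/* Counts even and odd values in an array.
 * Each counter is guarded by its own named critical section
 * (hypothetical names), so the two updates never wait on each other. */
void count_parity(const int *values, int n, long *evens, long *odds)
{
    long even_count = 0;
    long odd_count = 0;
    int i;

    #pragma omp parallel for
    for (i = 0; i < n; i++) {
        if (values[i] % 2 == 0) {
            #pragma omp critical (even_counter)
            {
                even_count++;
            }
        } else {
            #pragma omp critical (odd_counter)
            {
                odd_count++;
            }
        }
    }

    *evens = even_count;
    *odds = odd_count;
}
```

For plain counters like these a reduction clause would normally be the better tool; critical sections are used here only to show the effect of naming.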

2. Do not use != in the loop condition

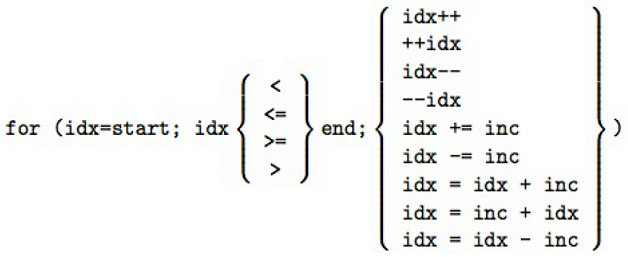

The for directive imposes restrictions on the structure of the associated loop. Specifically, the loop must be in canonical form.

Response from the developers on the OpenMP Architecture Review Board:

If we allowed !=, programmers could get loops with an indeterminate number of iterations. The problem lies in the compiler, which has to generate code that computes the number of iterations.

For a simple loop like:

    for( i = 0; i < n; ++i )

you can determine the number of iterations: n if n >= 0, and zero if n < 0.

    for( i = 0; i != n; ++i )

the number of iterations is again n if n >= 0; if n < 0, we do not know the number of iterations.

    for( i = 0; i < n; i += 2 )

the number of iterations is the integer part of ((n + 1) / 2) if n >= 0, and 0 if n < 0.

    for( i = 0; i != n; i += 2 )

we cannot determine when i will equal n. What if n is an odd number?

    for( i = 0; i < n; i += k )

the number of iterations is the integer part of ((n + k - 1) / k) if n >= 0, and 0 if n < 0; if k < 0, this is not a valid OpenMP program.

    for( i = 0; i != n; i += k )

is i increasing or decreasing? Will equality ever be reached? All of this can lead to an infinite loop.
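The counting rules above can be written down as a short sketch. It assumes a loop that starts at 0 with a positive step k and ignores integer overflow; both function names are invented for this illustration.

```c
/* Number of iterations of: for (i = 0; i < n; i += k), assuming k > 0. */
long trip_count_less_than(long n, long k)
{
    if (n <= 0)
        return 0;
    return (n + k - 1) / k;   /* integer division rounds the quotient up */
}

/* Returns 1 if: for (i = 0; i != n; i += k) ever reaches i == n,
 * assuming k > 0 and ignoring overflow; returns 0 otherwise. */
int not_equal_loop_terminates(long n, long k)
{
    return n >= 0 && n % k == 0;
}
```

With the < condition the count is always defined, for example 4 iterations for n = 7 and k = 2. With != the same n and k never produce i == n, which is exactly the case the compiler cannot rule out.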

3. Use nowait carefully

If Hachiko wants to wait, he must wait. // Hachiko: The most loyal friend

If the nowait clause is not specified, the for construct ends with an implicit barrier: the threads continue only after all of them have reached the end of the parallel loop. If this delay is not needed, the nowait clause lets threads that have already finished their share of the loop continue without synchronizing with the others.

Example:

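    #pragma omp parallel shared(n,a,b,c,d,sum) private(i)
    {
        #pragma omp for schedule(dynamic) nowait
        for (i = 0; i < n; i++)
            a[i] += b[i];

        #pragma omp for schedule(dynamic) nowait
        for (i = 0; i < n; i++)
            c[i] += d[i];

        #pragma omp for schedule(dynamic) nowait reduction(+:sum)
        for (i = 0; i < n; i++)
            sum += a[i] + c[i];
    }

This example contains an error: schedule(dynamic). (The schedule clause is written on the for directives here, because it is not accepted on a plain parallel directive.) Loops that depend on each other's data may use nowait only with schedule(static). Only with this scheduling method does the standard guarantee that nowait works correctly for data-dependent loops: each thread receives the same iterations in every loop, provided the loops have the same number of iterations and the same chunk size. In our case it is enough to remove schedule(dynamic), since in most implementations the default is schedule(static). Because the default schedule is implementation-defined, writing schedule(static) explicitly is the safer choice.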
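A possible corrected version of the example is sketched below, with schedule(static) written out on every loop rather than relying on the default. Wrapping the region in a function called sum_after_updates is an assumption made for this illustration; the loops themselves follow the example above.

```c
/* Updates a and c in place, then returns the sum of a[i] + c[i].
 * All three loops have the same iteration count and use schedule(static)
 * with no chunk size, so each thread handles the same indices in every
 * loop and nowait is safe despite the data dependence. */
double sum_after_updates(double *a, const double *b,
                         double *c, const double *d, int n)
{
    double sum = 0.0;
    int i;

    #pragma omp parallel shared(n, a, b, c, d, sum) private(i)
    {
        #pragma omp for schedule(static) nowait
        for (i = 0; i < n; i++)
            a[i] += b[i];

        #pragma omp for schedule(static) nowait
        for (i = 0; i < n; i++)
            c[i] += d[i];

        #pragma omp for schedule(static) nowait reduction(+:sum)
        for (i = 0; i < n; i++)
            sum += a[i] + c[i];
    }   /* implicit barrier at the end of the parallel region */

    return sum;
}
```

The reduction result is read only after the parallel region has ended, so the nowait on the last loop does not expose a partial sum.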

4. Carefully check the code before using task untied

    int dummy;
    #pragma omp threadprivate(dummy)

    void foo() { dummy = …; }
    void bar() { … = dummy; }

    #pragma omp task untied
    {
        foo();
        bar();
    }

task untied specifies that the task is not tied to the thread that started executing it: after the task is suspended, another thread may resume it. This example shows a misuse of task untied. The programmer assumes that both functions in the task are executed by the same thread. However, if the task is suspended between the two calls, bar() may be executed by a different thread. Because each thread has its own copy of dummy (it is declared threadprivate), the value that bar() reads from dummy will then be wrong.
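One way to sidestep the problem, sketched here under the assumption that the value can be passed explicitly, is to keep it in a variable local to the task instead of a threadprivate one. A variable declared inside the task belongs to the task's own data environment, so it stays valid whichever thread resumes the task. The functions produce(), consume() and run_untied_task() are hypothetical stand-ins, not part of the original example.

```c
/* Hypothetical stand-ins for foo() and bar(): the value is returned
 * and passed as an argument instead of going through threadprivate data. */
static int produce(void)
{
    return 42;
}

static int consume(int value)
{
    return value + 1;
}

int run_untied_task(void)
{
    int result = 0;

    #pragma omp parallel
    #pragma omp single
    {
        #pragma omp task untied shared(result)
        {
            int local = produce();     /* private to the task, not to a thread */
            result = consume(local);   /* safe even if another thread resumes here */
        }
        #pragma omp taskwait           /* wait until the task has written result */
    }

    return result;
}
```

If the threadprivate variable has to stay, leaving the task tied (the default, without the untied clause) is the other obvious option.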

I hope these tips will help beginners.

Useful links:

OpenMP 4.0 pdf examples

OpenMP 4.0 github examples

OpenMP 4.0 standard pdf

All directives on 4 sheets C ++ pdf

All directives on 4 sheets Fortran pdf

One of the best OpenMP slides in Russian from Mikhail Kurnosov pdf

Source: https://habr.com/ru/post/259153/
