When should we expect the apocalypse of network infrastructure?

Roughly 2.5 billion people around the world use the Internet regularly, and that number keeps growing every year, month and day, both in absolute terms and as a share of the population. More users, more connected devices and more online services generate ever more traffic, which travels over Internet highways that span the entire globe. The so-called “Channel Explosion”, described in one of the analytical reports of the IEEE (Institute of Electrical and Electronics Engineers), has led to a shortage of throughput capacity. If new infrastructure is not commissioned fast enough, ordinary users will inevitably see their access speeds fall.

Basic economics tells us that a shortage of a product or service creates a rush for it, and that is naturally reflected in its price. The situation is made worse by the fact that users accustomed to cheap Internet access often cannot give up their familiar services, websites and the way of life built around them. According to the IEEE report, this unfavorable trend in traffic infrastructure has existed since 2010 and may soon have global consequences, chief among them a serious redistribution of the existing IT market. Is the situation really as grim as the institute's experts predict, and is there still a way to “smooth out” the consequences of humanity's leap into a world of ever greater traffic? That is what this article discusses.

John D'Ambrosia heads the IEEE group that assesses network bandwidth requirements and previously led the IEEE unit that developed infrastructure for 40–100 Gb/s Internet channels. He is at the forefront of the push to radically increase IT infrastructure capacity, and because he has held positions in several organizations and communities directly engaged in building and operating modern IT infrastructure, he speaks on the subject with authority.

“This is the question that keeps me awake at night,” says John, who, in addition to the titles already mentioned, chairs the Ethernet Alliance industry group. “In my work I have often found myself in an absurd situation: by the time we finished a project, say a 100-gigabit channel, we already needed to expand it. Our planning could not keep up with reality. With traffic growing so sharply, the needs of backbone users are hard to predict, so we often had to pay again to upgrade facilities we had only just commissioned. That naturally cut into companies' profits and placed an additional cost burden on consumers.”

Asked what could reverse the current state of affairs, John said: “For now, our main hope is technological progress. Quite a few organizations already use next-generation Internet and network infrastructure. Continued development in this direction, programmable networks, greater investment in submarine Internet cables and, of course, Internet2: these are the areas that will help us soften the consequences of the ‘Channel Explosion’ we are witnessing.”

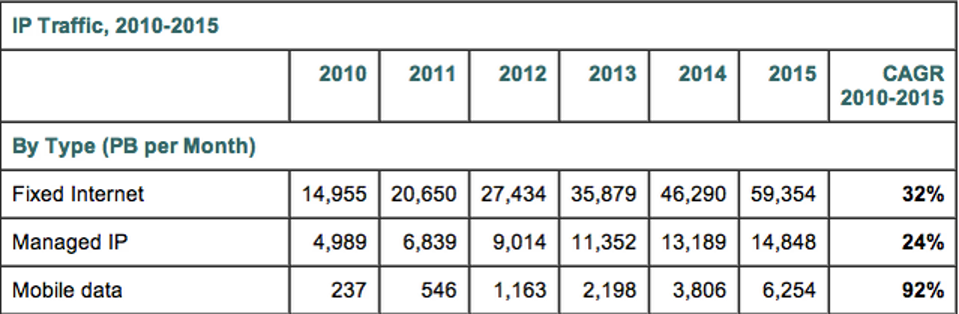

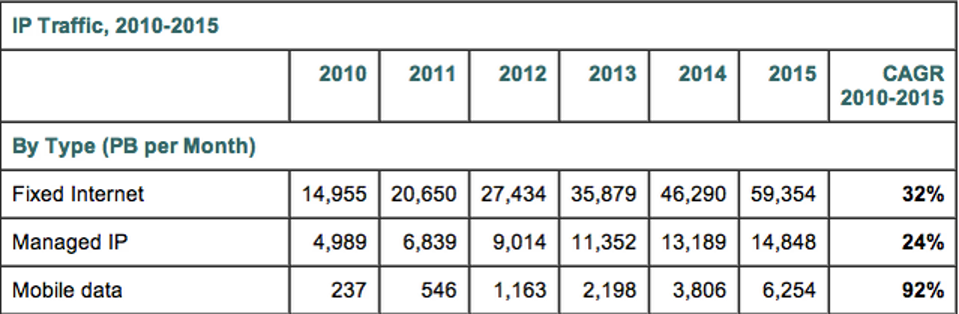

How many channels do we need?

To answer this question we need statistics, and they exist. Thanks to Cisco and its Visual Networking Index (VNI), we can look at past Internet traffic and, by comparing it with the present, make reasonably reliable predictions about the foreseeable future. Cisco's reports from 2010 to 2015 reveal an interesting pattern of growth in global traffic. At the start of the decade, researchers recorded up to 20.2 exabytes of traffic per month, or up to 242 exabytes per year; five years later, traffic had roughly quadrupled. According to the forecast based on current dynamics, annual traffic will approach 966 exabytes (almost 1 zettabyte) by the end of 2015. That volume is equivalent to streaming every film ever made over the network every four minutes.
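To make “roughly quadrupled” concrete, the short sketch below turns the Cisco figures quoted above into an implied compound annual growth rate. It assumes steady exponential growth between 2010 and 2015, which is a simplification; the 966 EB value is a forecast, not a measurement.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start <= 0 or end <= 0 or years <= 0:
        raise ValueError("start, end and years must be positive")
    return (end / start) ** (1.0 / years) - 1.0

# Figures quoted in the text (Cisco VNI), in exabytes.
MONTHLY_2010_EB = 20.2
ANNUAL_2010_EB = 242.0
ANNUAL_2015_EB = 966.0  # forecast for the end of 2015

# Sanity check: 12 months at 20.2 EB/month is about 242 EB/year.
annualized_2010 = MONTHLY_2010_EB * 12

growth_factor = ANNUAL_2015_EB / ANNUAL_2010_EB
rate = cagr(ANNUAL_2010_EB, ANNUAL_2015_EB, years=5)

print(f"12 x 20.2 EB = {annualized_2010:.1f} EB/year")
print(f"2010 -> 2015 growth factor: {growth_factor:.2f}x")
print(f"implied compound growth: {rate:.1%} per year")
```

A fourfold increase over five years works out to roughly 32% growth per year, compounding.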

Given that 1 exabyte equals 1,000 petabytes, or 1,000,000 terabytes, the enormous load placed on existing information highways becomes even more obvious.
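Another way to grasp the load is to convert the annual volume into the average bit rate the world's networks would have to sustain around the clock. The sketch below assumes decimal (SI) prefixes, as in the text, and a 365-day year; real traffic is bursty, so peak rates are well above this average.

```python
# Decimal (SI) prefixes, as used in the text: 1 EB = 1,000 PB = 1,000,000 TB.
TB = 10**12
PB = 10**15
EB = 10**18

SECONDS_PER_YEAR = 365 * 24 * 3600  # assumption: 365-day year

def average_bitrate_bps(volume_bytes: float, seconds: float) -> float:
    """Average bit rate needed to carry `volume_bytes` evenly over `seconds`."""
    return volume_bytes * 8 / seconds

annual_traffic_bytes = 966 * EB  # forecast figure quoted for 2015
avg_bps = average_bitrate_bps(annual_traffic_bytes, SECONDS_PER_YEAR)

print(f"{annual_traffic_bytes / TB:,.0f} TB per year")
print(f"average rate: {avg_bps / 1e12:.0f} Tbit/s")
```

Spread evenly over a year, 966 EB corresponds to an average of about 245 Tbit/s flowing every second of every day.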

More users mean more traffic, and this simple truth has become especially pressing in recent years. Ordinary home users turned out to be the source of some unexpected statistics: according to Cisco estimates, in 2015 households in developed countries were already consuming 1 terabyte of traffic per month. With such high consumption and so many users, households have joined the ranks of the most voracious consumers of network capacity.
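For a sense of scale, 1 TB per month can be converted into the connection speed a household would need if that volume were spread evenly over the month. The sketch assumes a decimal terabyte and a 30-day month; both are simplifying choices, not figures from the Cisco report.

```python
SECONDS_PER_30_DAY_MONTH = 30 * 24 * 3600  # assumption: 30-day month

def sustained_mbps(bytes_per_month: float,
                   seconds: int = SECONDS_PER_30_DAY_MONTH) -> float:
    """Average rate in Mbit/s if the monthly volume were spread evenly over the month."""
    return bytes_per_month * 8 / seconds / 1e6

household = sustained_mbps(1e12)  # 1 TB per month, decimal terabyte
print(f"1 TB/month is about {household:.2f} Mbit/s around the clock")
```

That is roughly 3 Mbit/s, day and night. In practice home usage is concentrated in the evening hours, so the peak rate a household actually draws is higher than this average.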

Many Internet services generate petabytes of data, but video is, of course, the clear leader. Video already topped the traffic rankings in 2010, with file sharing and torrents playing a major role. By 2015 the trend had intensified: according to authoritative estimates, video of all kinds will account for more than 50% of all global traffic. In more tangible terms, that is comparable to three billion DVDs, or to one million minutes of video crossing the network every second.
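The “one million minutes of video per second” figure can be restated as the number of viewers watching simultaneously: a stream played in real time delivers one second of video per second. The sketch also pairs the “more than 50%” share with the 966 EB annual forecast; combining those two figures is our own assumption, so the result is only a rough lower bound.

```python
def concurrent_streams(video_minutes_per_second: float) -> float:
    """Real-time streams needed to deliver this many minutes of video every second.

    A real-time stream delivers 1 second of video per second, so
    N minutes (N * 60 seconds) of video per second equals N * 60 simultaneous streams.
    """
    return video_minutes_per_second * 60

def video_share_eb(total_eb: float, share: float) -> float:
    """Video volume in exabytes for a given share of total traffic."""
    return total_eb * share

streams = concurrent_streams(1_000_000)  # "one million minutes of video per second"
video_eb = video_share_eb(966, 0.5)      # assumed pairing: >50% of the 966 EB forecast

print(f"{streams:,.0f} simultaneous real-time streams")
print(f"more than {video_eb:.0f} EB of video per year")
```

One million minutes per second is the equivalent of 60 million people watching video at the same moment, and half of 966 EB is about 483 EB of video a year.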

“Traffic is growing not so much because of the kinds of video we were already used to as because of streaming video, most of which comes from rapidly growing and increasingly popular applications such as video conferencing,” says Thomas Barnett, a Cisco manager responsible for service delivery, drawing on years of research.

Another cause of the “Channel Explosion” is the mass availability of smartphones and tablets with broadband wireless connectivity. According to Cisco's analysts, in 2016 the number of active mobile devices will exceed the world's population, reaching 10 billion.

Facing a potential technological catastrophe, namely a simple inability to keep growing traffic flows under control, IT companies and research institutes have spent recent years trying to turn the tide and prevent the collapse of IT infrastructure. Let's take a closer look at the tools we already have and at the developments that could radically change the gloomy prospect of drowning in zettabytes of data.

Submarine cables

With wireless technologies so widespread, ordinary users may get the impression that cable infrastructure is a thing of the past, but that is far from true. Wi-Fi networks in homes, parks and subways are only the tiny tip of the iceberg when it comes to real network infrastructure.

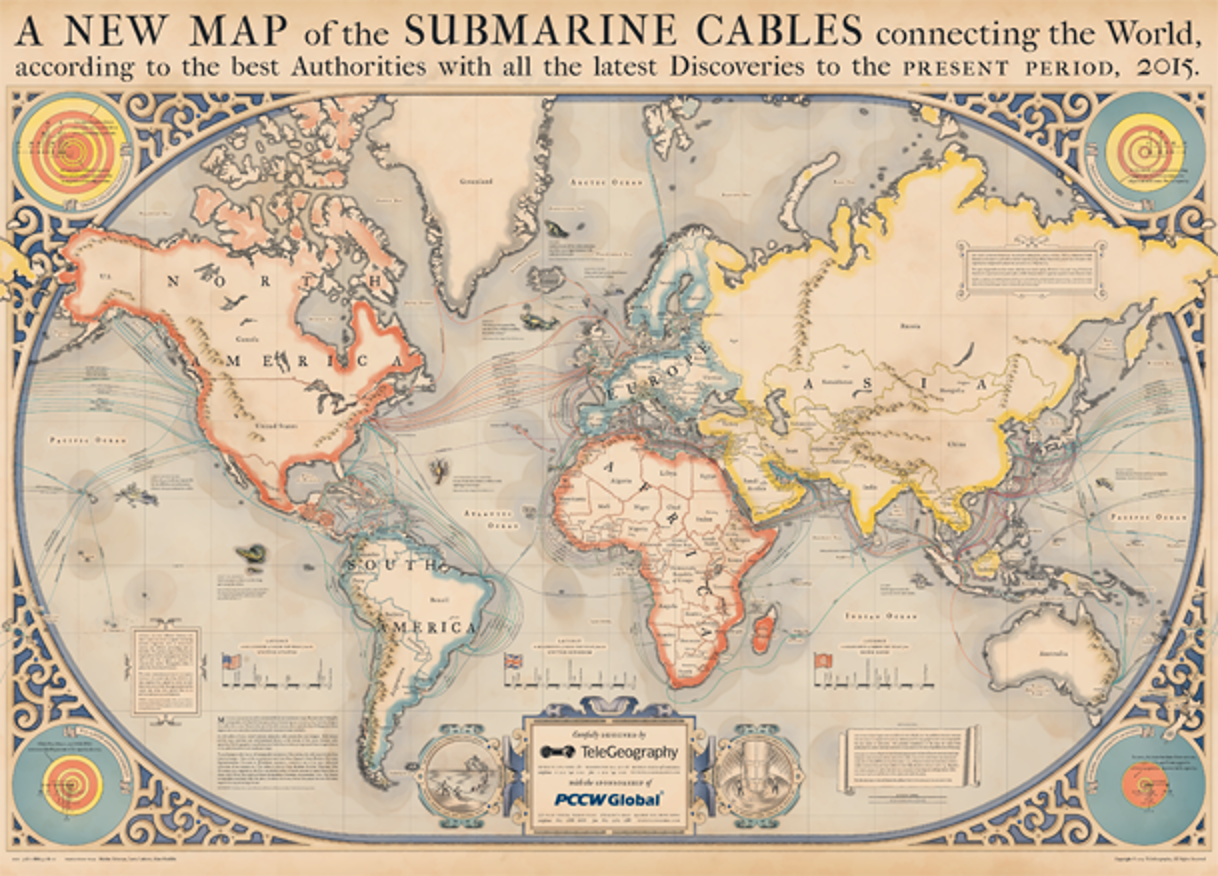

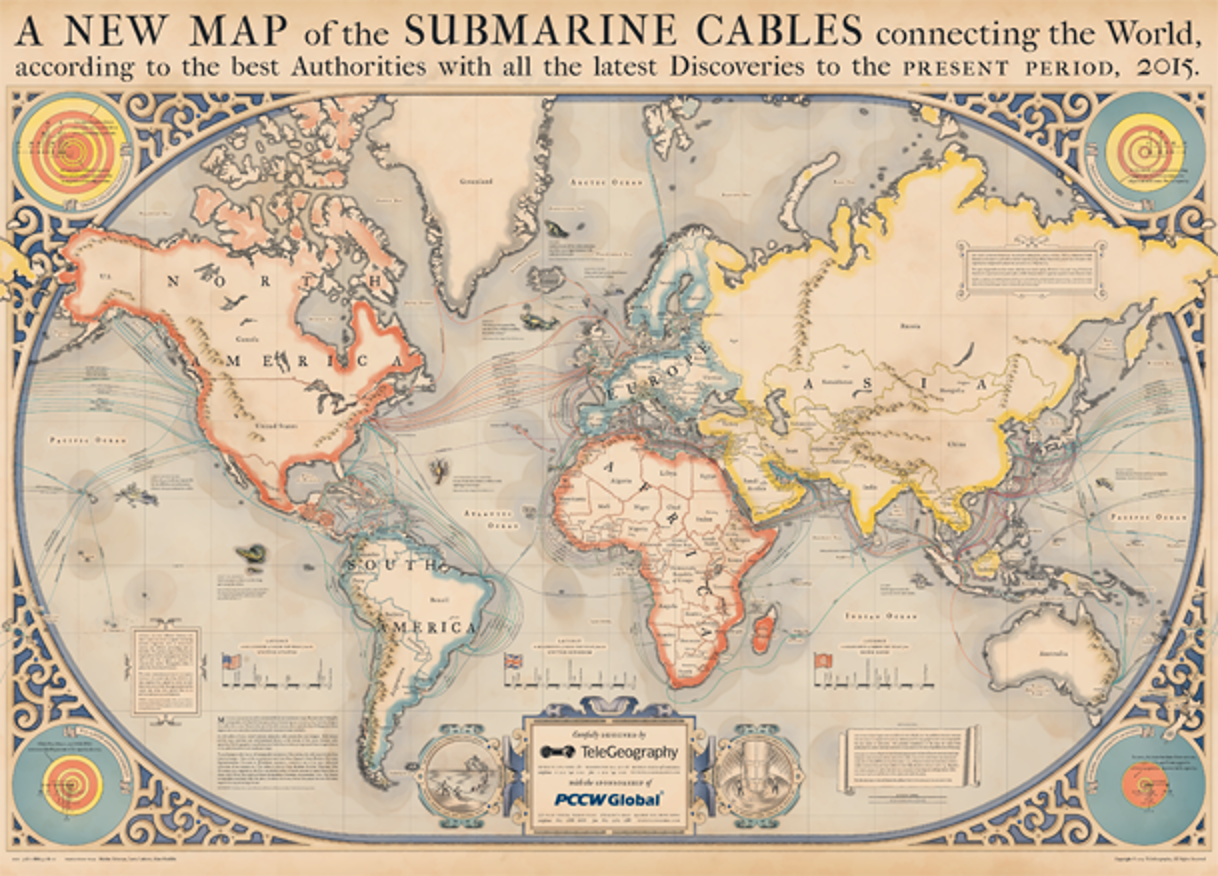

“Wireless Internet stays wireless only until the mobile device reaches the base station; from there, a completely different story begins,” says Alan Mauldin, research director at TeleGeography. “However convenient wireless connections may be, fiber-optic networks have been, are, and for the foreseeable future will remain the backbone of IT infrastructure. Cables laid underground and underwater already wrap the entire planet, and right now there is no alternative to them.”

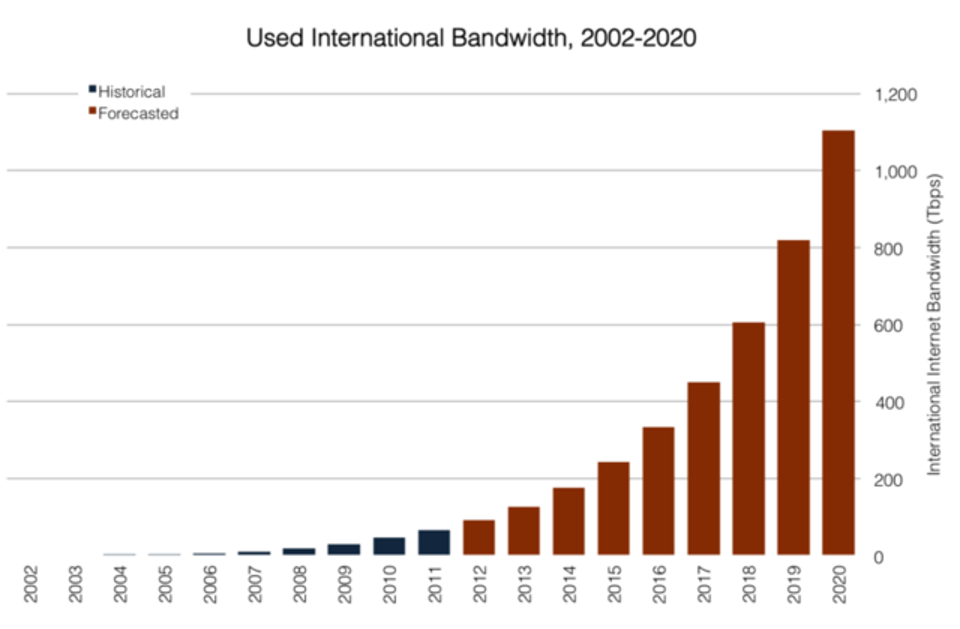

Mauldin's company studies the development of submarine cable systems, and its data on capacity growth are quite optimistic. Cables laid across the world's oceans carry traffic not so much between local sites as between regions of the world. This role largely explains their enormous capacity, the scale of investment in them, and the pace at which new links have been commissioned over the last decade.

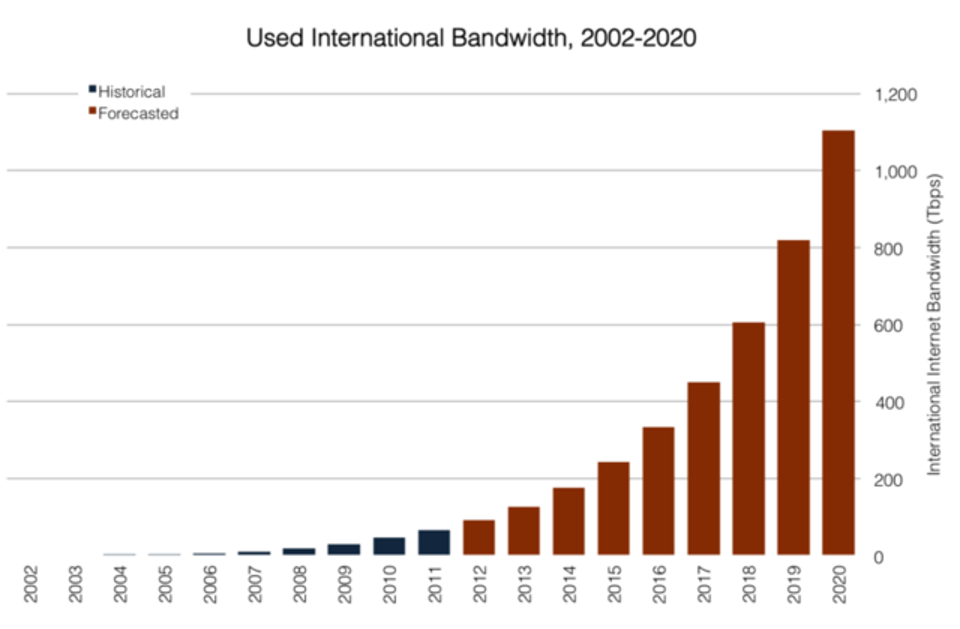

As the graph shows, global international bandwidth is growing faster every year. From 1.4 terabits per second in 2002, it rose to 6.7 Tbit/s by 2006 and now stands at about 200 Tbit/s. Current forecasts put it at no less than 1,103.3 Tbit/s by 2020.
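The capacity figures above can be compared by computing how long bandwidth takes to double in each interval, assuming smooth exponential growth between data points. The year for “now” is not stated in the text; the sketch assumes 2015, the year of the article, and the 2020 value is a forecast.

```python
import math

def doubling_time_years(start: float, end: float, years: float) -> float:
    """Years needed to double, assuming steady exponential growth between two points."""
    if not (0 < start < end) or years <= 0:
        raise ValueError("need 0 < start < end and a positive interval")
    return years * math.log(2) / math.log(end / start)

# International bandwidth from the text, in Tbit/s. "Now" is assumed to be 2015.
points = [(2002, 1.4), (2006, 6.7), (2015, 200.0), (2020, 1103.3)]

for (y0, c0), (y1, c1) in zip(points, points[1:]):
    t = doubling_time_years(c0, c1, y1 - y0)
    print(f"{y0}-{y1}: doubles every {t:.1f} years")
```

Under these assumptions, capacity has been doubling roughly every two years, and the 2020 forecast implies the same pace continuing.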

To understand the problems and prospects of IT infrastructure growth, one needs a sense of how unevenly Internet capacity is distributed across regions. According to the same TeleGeography report, Europe and the USA are, unsurprisingly, the perennial leaders in infrastructure development. Africa remains the most underserved region despite the rapid growth of its networks in recent years. As of 2011, before the surge of work to upgrade the continent's infrastructure, its submarine links provided only 700 gigabits per second, while Europe by then had links totaling just under 50 terabits per second.

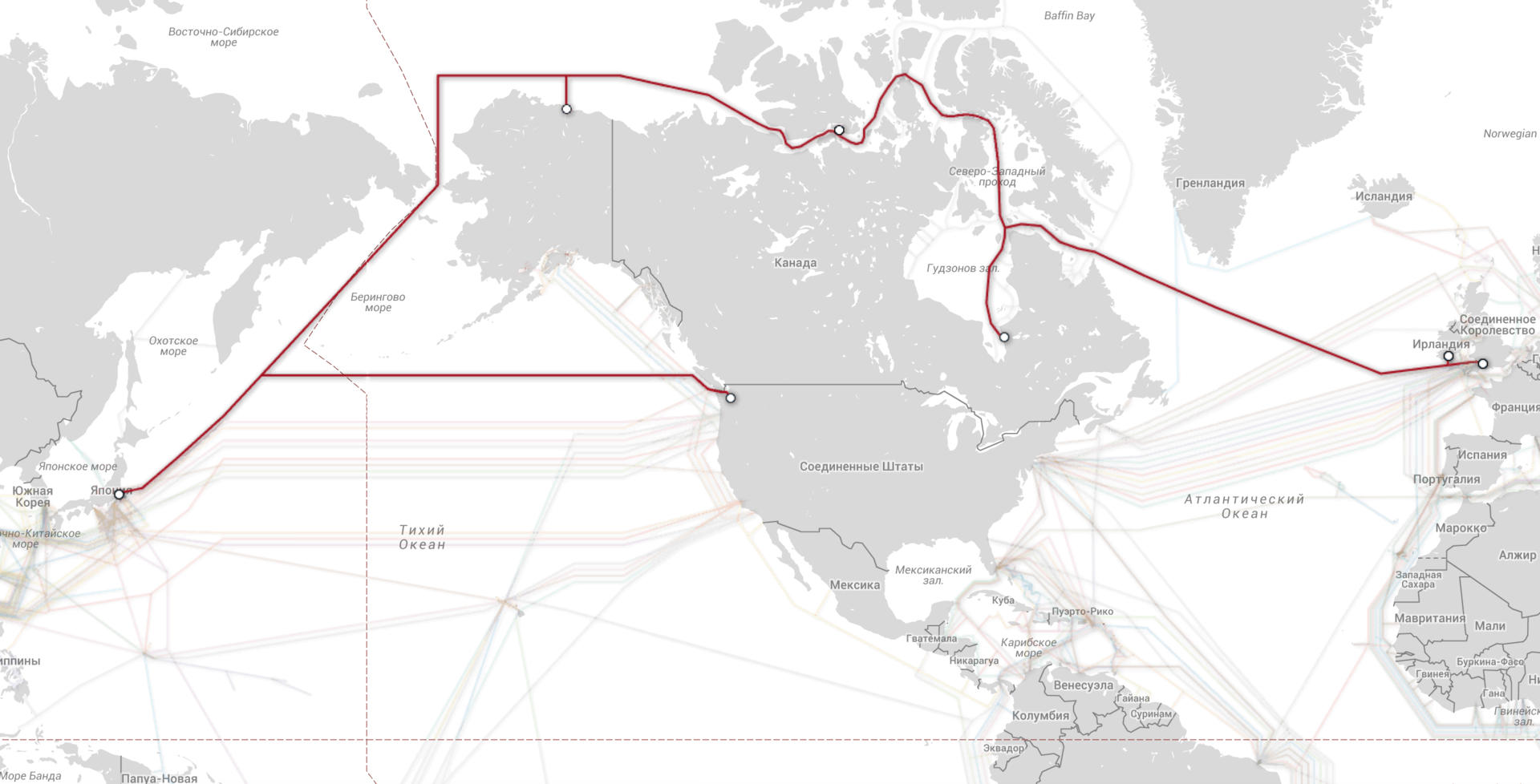

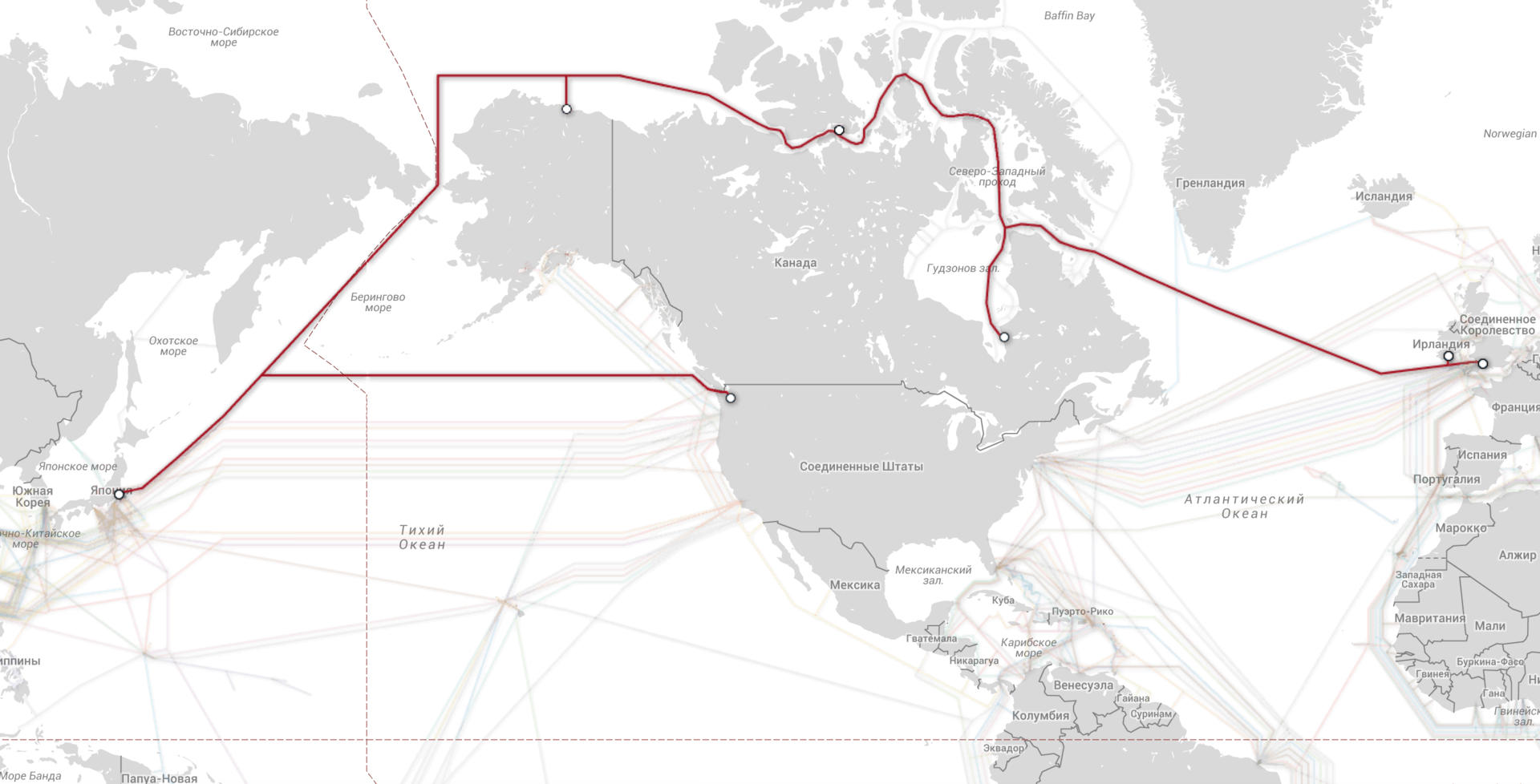

Besides bandwidth, network latency is also very important. For the average Internet user, a ping of up to, say, 200 ms makes little difference, but there are cases where every millisecond counts. A project was recently launched to lay a submarine cable through the Arctic, whose main goal is simply to reduce network latency between the London and Tokyo stock exchanges to 60 ms. The project's budget is $1.5 billion, and the main line is due to go live in 2016. South America is another telling example.
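Why route a cable through the Arctic at all? Because light in fiber has a hard speed limit, latency is dominated by path length. The sketch below estimates propagation delay only, ignoring equipment and queuing delays. The group index of 1.468 is an assumed typical value for single-mode fiber, and since the text does not say whether the 60 ms target is one-way or round-trip, the code works in one-way terms.

```python
C_VACUUM_KM_PER_S = 299_792.458
FIBER_GROUP_INDEX = 1.468  # assumption: typical value for single-mode fiber

def one_way_delay_ms(fiber_km: float, n: float = FIBER_GROUP_INDEX) -> float:
    """Propagation delay only; ignores amplifiers, switching and queuing."""
    return fiber_km * n / C_VACUUM_KM_PER_S * 1000

def max_fiber_km(delay_budget_ms: float, n: float = FIBER_GROUP_INDEX) -> float:
    """Longest fiber path whose one-way propagation delay fits within the budget."""
    return delay_budget_ms / 1000 * C_VACUUM_KM_PER_S / n

print(f"1,000 km of fiber: {one_way_delay_ms(1000):.2f} ms one way")
print(f"60 ms one-way budget: at most {max_fiber_km(60):,.0f} km of fiber")
```

At about 4.9 ms per 1,000 km, every 1,000 km shaved off a route saves roughly 10 ms of round-trip time, which is why a shorter path can justify a very large budget.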

Although South America has ample international links overall, until recently all of them ran through North America, which was very bad for latency. Even though South America lies only about 3,000 kilometers from Africa, its traffic to Africa had to travel via two other continents. Now, with new links across the South Atlantic and the Pacific, the situation is improving.

“Still, the connectivity problem is not that acute. The integration of the former socialist-bloc countries, especially Russia, into the global network makes it possible to connect Europe and Asia not only through the Arctic but also overland, so new terrestrial routes complement the submarine ones. Like the Asian region, Europe and the United States are short of Internet bandwidth,” Alan Mauldin observed.

The need to build new networks is compounded by the fact that existing cables do not always meet today's requirements, and many older ones are hopelessly outdated. According to IEEE experts, only lines laid within the last 25 years are still worth operating for maximum efficiency. “These links are fully functional, but they are losing their commercial value,” Mauldin continues. “The future of networks is closely tied to the development of coherent signal processing. By using both polarizations of the light signal and encoding data in its phase, we can significantly increase the capacity of existing routes; this technology can fundamentally resolve the current capacity shortage in the near future. Lines originally designed to carry 10 Gb/s can be upgraded to 40 and even 100 Gb/s. All it takes is replacing the equipment that encodes, decodes and amplifies the signal; the hundreds of thousands of kilometers of optical fiber already laid around the world stay the same.”
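The jump from 10 to 40 or 100 Gb/s on the same fiber follows from simple arithmetic: line rate equals symbol rate times bits per symbol times the number of polarizations used. The formats and round-number symbol rates below are illustrative assumptions that ignore error-correction overhead (real 100G coherent systems run somewhat faster than 25 GBaud to carry it). They are not taken from any particular cable system.

```python
def line_rate_gbps(baud_gbd: float, bits_per_symbol: int, polarizations: int) -> float:
    """Raw line rate = symbol rate x bits per symbol x number of polarizations."""
    return baud_gbd * bits_per_symbol * polarizations

# Illustrative formats (assumed round numbers, FEC overhead ignored):
legacy_ook = line_rate_gbps(10, bits_per_symbol=1, polarizations=1)  # on-off keying, 1 bit/symbol
dp_qpsk_10 = line_rate_gbps(10, bits_per_symbol=2, polarizations=2)  # QPSK phase coding on 2 polarizations
dp_qpsk_25 = line_rate_gbps(25, bits_per_symbol=2, polarizations=2)  # same format at a higher symbol rate

print(f"10 GBaud on-off keying: {legacy_ook} Gb/s")
print(f"10 GBaud DP-QPSK:       {dp_qpsk_10} Gb/s")
print(f"25 GBaud DP-QPSK:       {dp_qpsk_25} Gb/s")
```

Keeping the symbol rate fixed but coding two bits in the phase and using both polarizations quadruples the rate, which is why upgrading the terminal equipment alone can multiply a cable's capacity.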

Software-based network configuration

Beyond increasing network capacity, we should not forget the quality of how that capacity is used. One of the most talked-about technologies today is OpenFlow. This approach to optimizing packet forwarding has already been used successfully both in research centers, such as the CERN network, and by commercially successful IT companies; the most striking example is Google, which uses OpenFlow in the network linking its data centers.
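At the heart of OpenFlow is a flow table that a central controller programs into switches: each entry has a priority, a set of header fields to match, and actions to apply, and a packet that matches nothing is typically handed to the controller. The toy model below illustrates that match-action idea only. It is not the OpenFlow wire protocol, and the field names (`ip_dst`, `tcp_dst`) and action strings (`output:2`, `controller`) are hypothetical labels chosen for readability.

```python
from dataclasses import dataclass

@dataclass
class FlowEntry:
    priority: int
    match: dict      # header field -> required value; absent fields act as wildcards
    actions: list    # hypothetical action labels, e.g. ["output:2"]
    packet_count: int = 0

class FlowTable:
    """Toy match-action table in the spirit of OpenFlow (illustrative only)."""

    def __init__(self):
        self.entries = []

    def add_flow(self, priority: int, match: dict, actions: list) -> None:
        self.entries.append(FlowEntry(priority, dict(match), list(actions)))
        # Keep entries sorted so the highest-priority match wins.
        self.entries.sort(key=lambda e: e.priority, reverse=True)

    def lookup(self, packet: dict) -> list:
        for entry in self.entries:
            if all(packet.get(k) == v for k, v in entry.match.items()):
                entry.packet_count += 1
                return list(entry.actions)
        # Table miss: in this sketch the packet is sent to the controller.
        return ["controller"]

table = FlowTable()
table.add_flow(10, {"ip_dst": "10.0.0.2"}, ["output:2"])
table.add_flow(100, {"ip_dst": "10.0.0.2", "tcp_dst": 22}, ["drop"])
print(table.lookup({"ip_dst": "10.0.0.2", "tcp_dst": 80}))  # general rule
print(table.lookup({"ip_dst": "10.0.0.2", "tcp_dst": 22}))  # more specific, higher priority
print(table.lookup({"ip_dst": "10.0.0.9"}))                 # no match
```

The point of the design is that forwarding behavior becomes data the controller can rewrite at any time, rather than logic fixed in each switch.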

“We are able to run a programmable network on a small scale,” says Phil DeMar, a network engineer at Fermilab (Fermi National Accelerator Laboratory) in Illinois, USA. The laboratory is one of 11 institutions that receive data directly from CERN. It then redistributes that data over the open network to more than 160 second-tier sites and to many times more lower-tier sites. “Without OpenFlow, our IT infrastructure would simply be paralyzed by the huge volume of data we receive from CERN. Still, the technology should not be idealized: it is not the summit, only the first step toward it.” When the conversation turned to the mass adoption of network programming, however, Phil was somewhat skeptical: “Our laboratory has two dedicated 10 Gb/s lines to CERN, and that is enough for us. But given all the difficulties of deploying and maintaining OpenFlow, it is cheaper to upgrade the channels to 20–30 Gb/s. The technology is not yet ready for widespread use.”
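DeMar's trade-off between smarter software and simply buying bigger pipes is easy to quantify for bulk transfers. The sketch below estimates how long a dataset takes to move at 10, 20 and 30 Gb/s. The 1 PB dataset size is a hypothetical example, and the optional efficiency factor (default 1.0, i.e. a perfectly utilized link) is our own simplification for protocol overhead.

```python
def transfer_hours(volume_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move `volume_bytes` over a link at `link_gbps`, scaled by an efficiency factor."""
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link rate must be positive and efficiency in (0, 1]")
    seconds = volume_bytes * 8 / (link_gbps * 1e9 * efficiency)
    return seconds / 3600

PETABYTE = 10**15  # hypothetical dataset size for illustration

for gbps in (10, 20, 30):
    print(f"{gbps} Gb/s: {transfer_hours(PETABYTE, gbps):.0f} h")
```

At 10 Gb/s a petabyte takes over nine days; at 30 Gb/s it takes about three, a gain that requires no change to how the network is managed.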

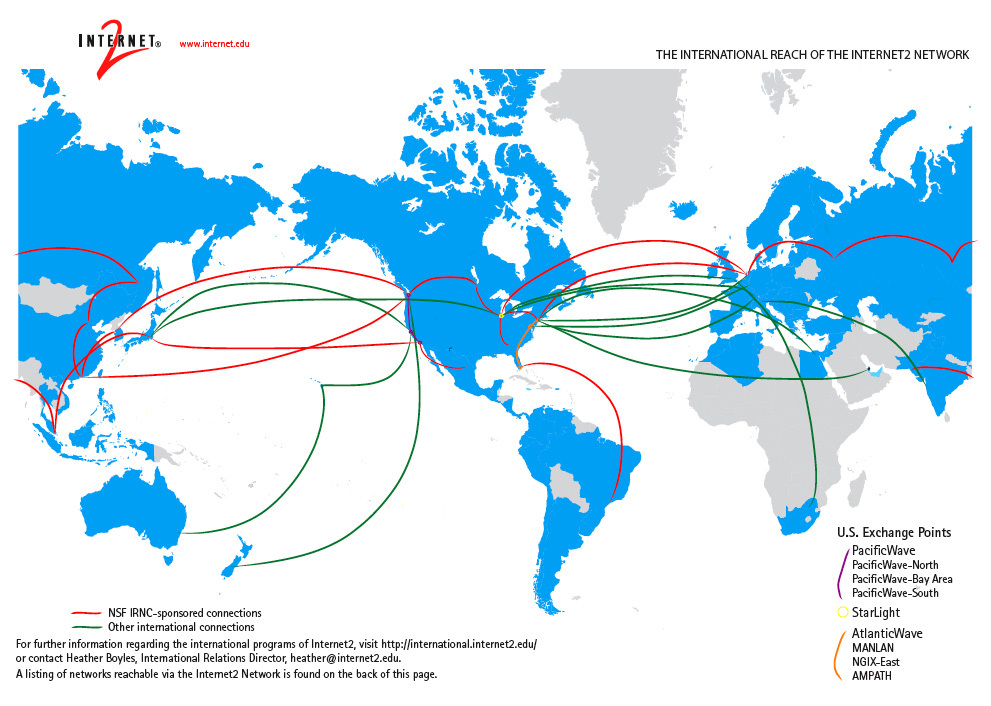

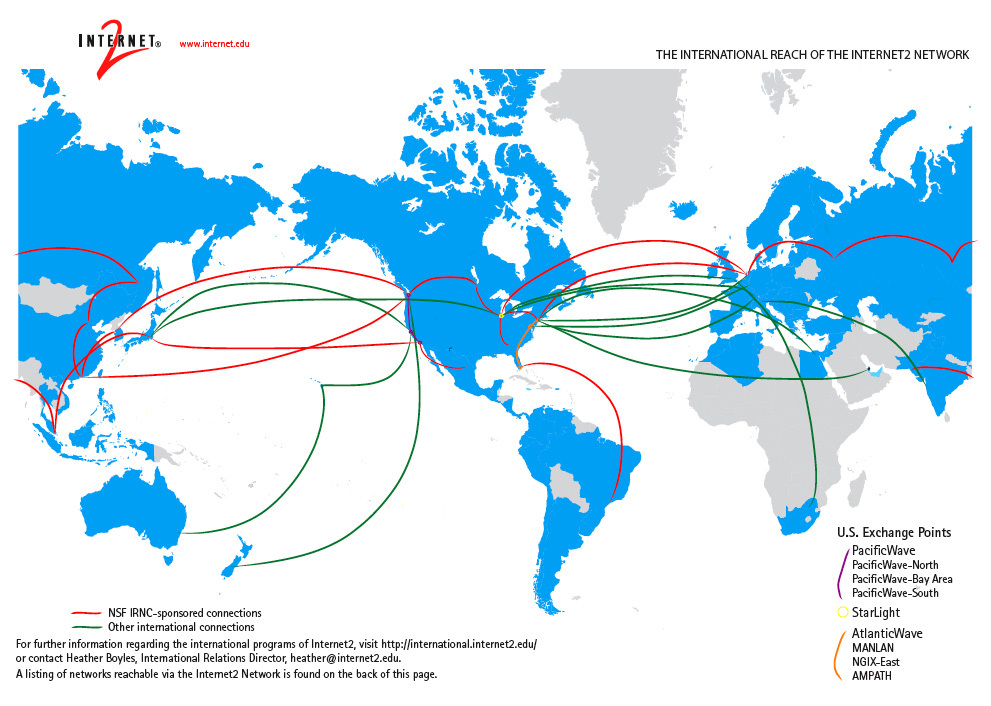

Internet2

For network engineers it is no secret that the principles underlying the familiar Internet are not the only possible ones: programmable packet forwarding on more efficient principles is already implemented in OpenFlow, mentioned above. Internet2, a non-profit consortium of research and industry institutions, aims to modernize the very principles of the network. Its distinguishing features are that it already runs entirely on IPv6, supports multicast, and offers prioritized traffic delivery. Although the network exists only in the United States, the innovations and experimental technologies it uses gradually migrate to the familiar Internet once they have been proven.
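The article does not say how Internet2 implements priority delivery. One common textbook mechanism is strict-priority queueing, sketched below purely as an illustration: the scheduler always sends the highest-priority packet waiting, and packets of equal priority leave in arrival order. The class name and priority values are hypothetical.

```python
import heapq
import itertools

class StrictPriorityScheduler:
    """Always transmits the highest-priority queued packet; FIFO within a priority level."""

    def __init__(self):
        self._heap = []
        self._seq = itertools.count()  # tie-breaker preserving arrival order

    def enqueue(self, packet, priority: int) -> None:
        # heapq is a min-heap, so store the negated priority to pop the highest first.
        heapq.heappush(self._heap, (-priority, next(self._seq), packet))

    def dequeue(self):
        """Return the next packet to send, or None if all queues are empty."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)

sched = StrictPriorityScheduler()
sched.enqueue("bulk-1", priority=0)
sched.enqueue("video-1", priority=5)
sched.enqueue("bulk-2", priority=0)
sched.enqueue("video-2", priority=5)
order = [sched.dequeue() for _ in range(len(sched))]
print(order)
```

Strict priority is simple and gives latency-sensitive traffic the best treatment, but a heavy high-priority flow can starve everything else, which is why real networks often pair it with rate limits or weighted schemes.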

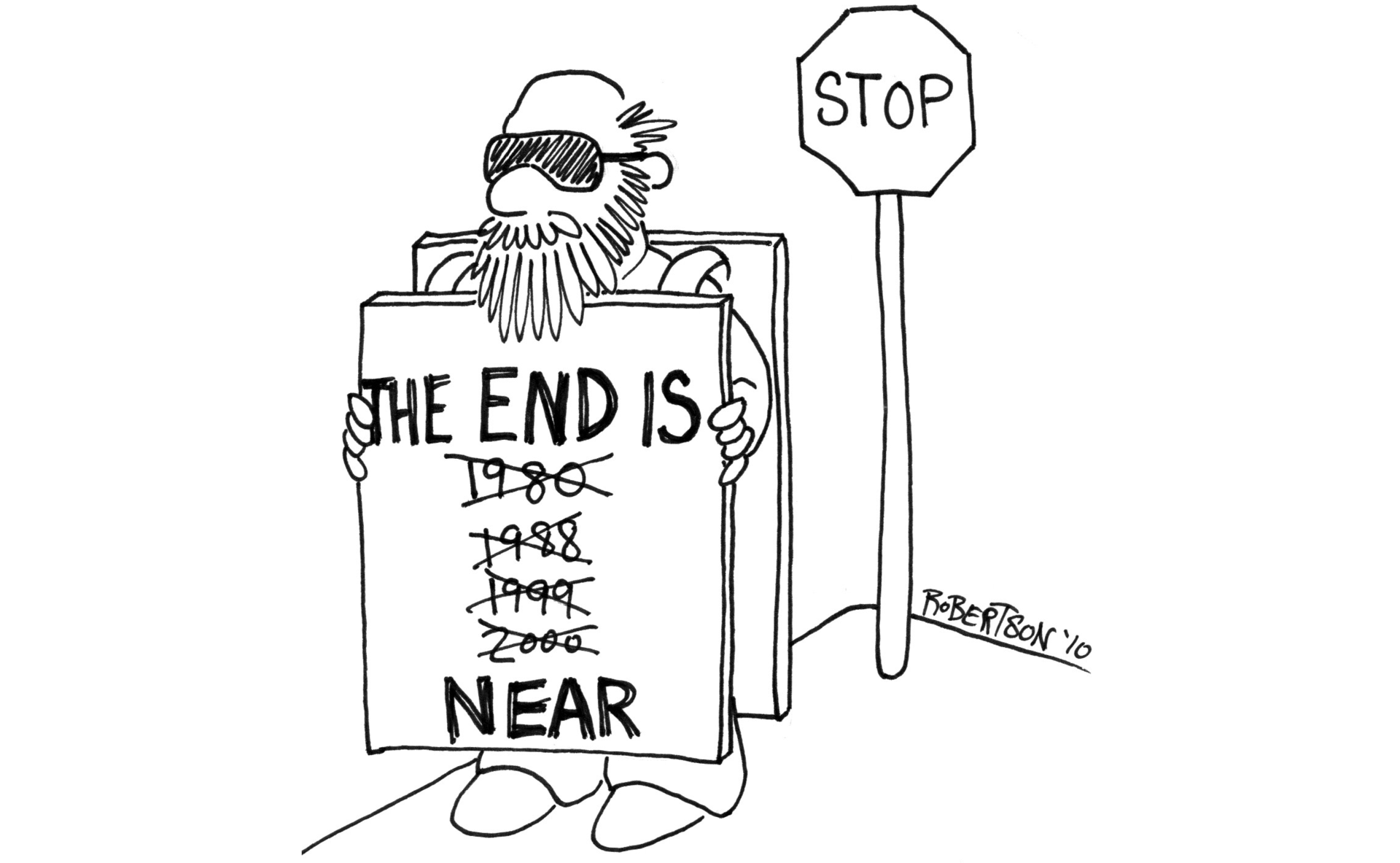

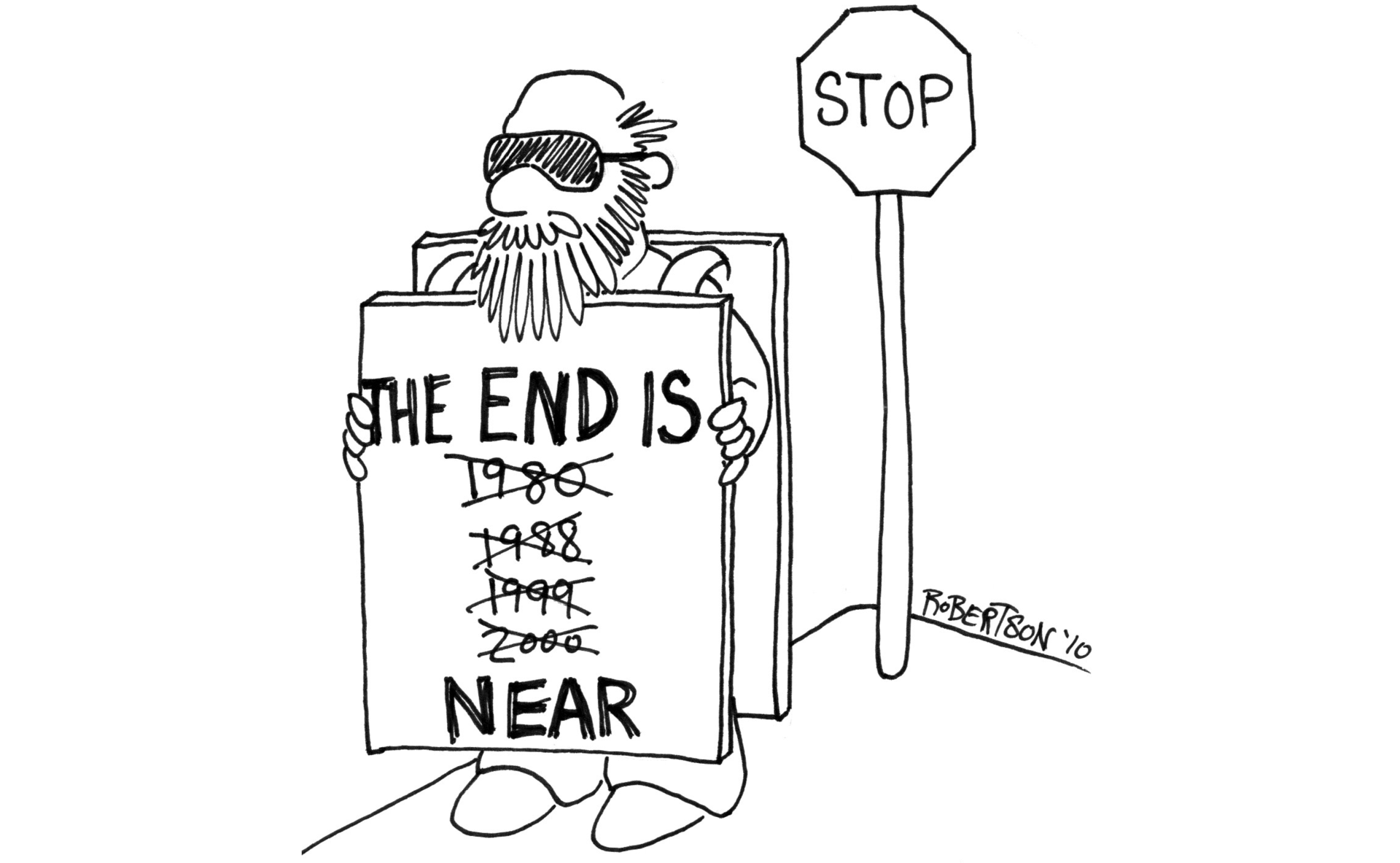

The key question remains: will technical progress be able to digest the growth in data volumes that it generates? Andrew Odlyzko, a professor at the University of Minnesota and a former head of the network department at AT&T, tried to answer it: “I wouldn’t panic about the uncontrolled growth of traffic, although I don’t dispute the fact itself. I already got burned on this slippery topic back in 1997, while working at AT&T. Almost two decades have passed since then, yet the state of affairs has barely changed. Back then, just as now, we predicted a ‘network collapse’ within five years and noted that new network capacity was lagging behind the growth of digital information worldwide,” Odlyzko recalled with a wry smile. “But as you can see, no collapse happened. Additional investment in research and infrastructure, together with general technical progress, including new materials and cheaper versions of existing ones, postponed the ‘network apocalypse’ that many very competent people considered a certainty. New times will surely bring new solutions, even if we don’t yet know anything about them,” Odlyzko concluded ironically.

Source: https://habr.com/ru/post/258395/
