Commercial site security research

Our colleagues at SiteSecure conducted a study of the security of commercial sites in the .ru zone in the first quarter of 2015. Its results were very interesting, so we decided to publish them on our blog. The purpose of the study was not only to assess the security of sites in the .ru domain zone, but also to determine the role search engines play in it.

Google and Yandex are still slow to identify dangerous sites

This is both bad and good news. The bad news for users is that they can land on dangerous or virus-infected sites without receiving the usual "This site is not safe" warning from a search engine. The good news for site owners is that they may have time to react and fix the problem before the search engine blacklists the site, on one condition: they must find the problem before the search engine does.


The share of sites where a security problem was detected but which were not yet on search engine blacklists:

Our observations show that Google and Yandex are still slow, and incomplete, in identifying security problems and threats to users on sites. (In one of our upcoming studies we will check who is faster: traditional antivirus software or Google and Yandex.) Compared to last year, Yandex has improved its capabilities: it used to detect problems twice as often as Google, and now it does so 2.5 times as often. However, site owners cannot rely solely on the diagnostics provided by the Yandex and Google webmaster tools. By the time a search engine sends an alert about a problem, the site is already blacklisted, and for every hour or day until the problem is fixed, the site loses much of its traffic, which means lost leads, sales, and reputation.

It should be noted that the statistics shown in the graph were obtained during a one-time scan, so we cannot yet reliably say how much time site owners had to fix the problem before their sites were blacklisted by the search engine. We plan to find this out in the course of further research.

In summary, at the time of our scan:

- Yandex had not yet detected 30 to 65 percent of the problems on sites

- Google had not yet detected 50 to 85 percent of the problems on sites

Key differences in the 2015 study

Having conducted our first site security study in early 2014, we decided to carry out such research on a regular basis and deployed permanent infrastructure for it: a cluster of machines in the Amazon cloud that can scale to hundreds of servers, connected through the RabbitMQ message broker and capable of monitoring several hundred thousand sites. During the first quarter of 2015, we collected and analyzed 2.5 million records of incidents detected during continuous monitoring of more than 80,000 sites.
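The monitoring setup described above is essentially a work queue: a producer publishes one check task per site, and a pool of workers consumes the tasks and records the results. The sketch below is a minimal, single-process illustration of that pattern only. It uses Python's standard `queue.Queue` and threads as a stand-in for RabbitMQ and a cluster of servers, and the `check_site` function and `CheckResult` type are hypothetical placeholders, not SiteSecure's actual code.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class CheckResult:
    site: str
    incidents: list[str]  # hypothetical incident labels found during the scan


def check_site(site: str) -> CheckResult:
    """Hypothetical placeholder for a real scanner (blacklist lookups,
    redirect and link checks). Here it simply reports no incidents."""
    return CheckResult(site=site, incidents=[])


def worker(tasks: "queue.Queue[str | None]", results: list[CheckResult],
           lock: threading.Lock) -> None:
    # Each worker plays the role of one consumer attached to the broker.
    while True:
        site = tasks.get()
        try:
            if site is None:  # sentinel: no more work for this worker
                return
            result = check_site(site)
            with lock:
                results.append(result)
        finally:
            tasks.task_done()


def run_scan(sites: list[str], n_workers: int = 4) -> list[CheckResult]:
    tasks: "queue.Queue[str | None]" = queue.Queue()
    results: list[CheckResult] = []
    lock = threading.Lock()
    threads = [threading.Thread(target=worker, args=(tasks, results, lock))
               for _ in range(n_workers)]
    for t in threads:
        t.start()
    for site in sites:  # producer side: publish one task per site
        tasks.put(site)
    for _ in threads:  # one sentinel per worker so every thread exits
        tasks.put(None)
    for t in threads:
        t.join()
    return results
```

For example, `run_scan(["example.ru", "shop.example.ru"])` returns one `CheckResult` per site. In a distributed deployment, a broker such as RabbitMQ would replace the in-memory queue so that producers and workers can run on separate machines and scale independently.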

In addition, specifically for this study, we worked with the iTrack analytics service to run a one-time scan of 240,000 sites with domains in the .ru zone registered to legal entities (presumably for commercial purposes).

Thus, compared with the previous study, we increased the number of sites checked from 30,000 to more than 300,000 and also monitored some of them continuously for four months. The focus on business sites is another difference from last year's study, in which the sample was drawn at random from .ru domains and could include personal home pages and abandoned sites.

Summary of the 2014 study

According to the study conducted from October 2013 to January 2014, one in seven RuNet sites is at risk of financial loss due to security problems. While major market players address the problem by hiring dedicated specialists and using enterprise-grade monitoring and protection systems, most of the SMB sector simply does not think about such problems in advance and has to react after losses have already been incurred. The main results of the previous study:

- Sites on free CMSs get infected 4 times more often than sites on paid ones

- Upgrading the CMS version halves the risk of problems

- Yandex blacklists sites twice as often as Google

- The overlap between the Google and Yandex blacklists is only 10%

- More than half of site owners were not aware of the problems

- A third continued to spend money on promoting their sites

- 15% of businesses ceased to exist, and the owners of 10% of sites had to rebuild their sites as a result of infection

After these data were published, we received many follow-up questions, which we tried to take into account when refining the methodology and setting the goals for the next study.

Key findings of the 2015 study

To begin with, we briefly present the main results of the study that we consider especially important to bring to the audience's attention.

Fixing the detected problems takes considerable time: on average, a site stays on a blacklist for a week, which for an online business is equivalent to losing a quarter of its monthly revenue.

On average, it takes a site owner or webmaster one week to respond to a problem found by search engines and get the site removed from the blacklist. In other words, the online business suffers a week of downtime, and an online store loses a quarter of its monthly revenue. And this does not include the loss of money invested in advertising: according to the results of the previous study, confirmed this time, about half of online business owners continued to invest in promoting sites whose security problems had led to the sites being blocked by search engines.
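The "quarter of monthly revenue" figure follows from simple arithmetic: seven days out of a roughly 30-day month is about 23%, which is close to one quarter. A minimal sketch, assuming revenue is spread evenly over the month and ignoring the advertising spend mentioned above; the function name and the example revenue figure are hypothetical.

```python
def downtime_loss(monthly_revenue: float, days_blacklisted: float,
                  days_in_month: float = 30.0) -> float:
    """Estimate revenue lost while a site is blacklisted, assuming revenue
    is spread evenly over the month (a simplifying assumption)."""
    if days_in_month <= 0:
        raise ValueError("days_in_month must be positive")
    # A site cannot lose more than the whole month's revenue.
    share = min(days_blacklisted / days_in_month, 1.0)
    return monthly_revenue * share


# One week on a blacklist for a hypothetical store earning 1,000,000 rubles a month
loss = downtime_loss(1_000_000, 7)
print(round(loss))  # 233333
```

Real losses are usually uneven (seasonality, weekday peaks), so this is a rough lower-bound style estimate rather than a forecast.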

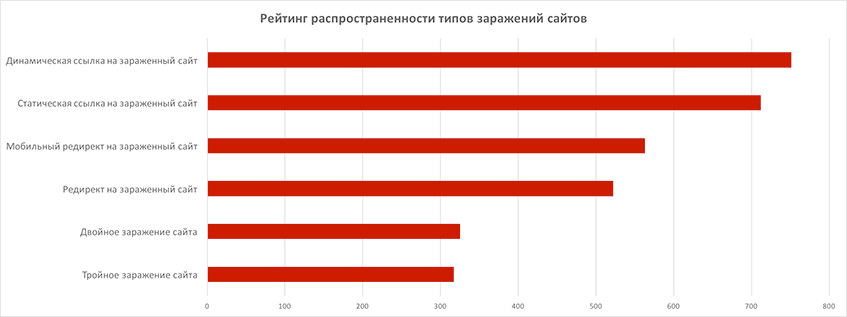

Threat ranking and repeat infections

Based on the results of checking sites for infections, we ranked infection types by prevalence. Repeat infections (double and triple) are quite common, occurring in more than 40% of cases. By repeat infection we mean here the injection of a malicious object onto a site by a different method (for example, a static link and a mobile redirect, or a dynamic link and a static link, count as different methods). It is worth noting that repeat blacklisting of a site is rare, especially for online stores. This is probably because owners and administrators of commercial sites pay more attention to protection after their site has been blacklisted.

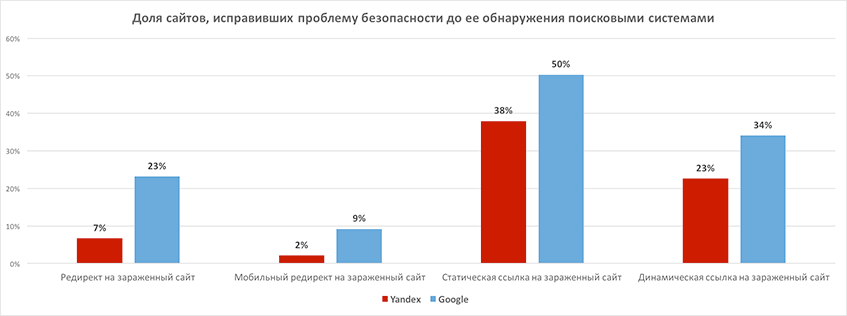

Treatment alone is not enough: monitoring and prevention

Most of the sites that fixed the detected security problems had already been added to search engine blacklists and were removed from them only afterwards. Site owners and administrators are most successful at dealing with links to infected sites: nearly half of all such incidents were fixed before search engines detected them. We attribute this to the fact that site promotion often involves tools that monitor static external links, while dynamic external links are reliably detected by desktop antivirus software, and this information reaches site owners. Redirects are the hardest to identify: fewer than 10% of site owners managed to detect a mobile redirect on their own. This is because malicious redirects can conceal their presence and are much harder to detect with ad hoc tools, whereas search engines, which care about protecting users, have learned to detect complex mobile and cloaked redirects quite effectively.

Share of sites that fixed the problem before it was detected by search engines:

In general, this means that businesses that lacked their own means of monitoring site security suffered significant losses: on average, a week of downtime, as noted above, not counting the traffic lost to redirects from the moment they appeared on the site until a search engine detected them. If site owners and webmasters had tools for proactive diagnosis and prevention, these losses could have been avoided by taking advantage of the search engine slowness we observed: the problem can be detected and resolved before the search robot visits the site and marks it as dangerous.

The type of CMS had less impact on security for business sites

In the previous study, which used randomly selected sites, we found a clear dependency: sites on free CMSs were infected four times more often than sites on commercial systems. With only business sites in the sample, we found that the share of sites on commercial CMSs in blacklists is only half the share of sites on free CMSs. Thus, commercial sites on free CMSs can be called safer "on average" than sites on free CMSs in general.

In our opinion, many cases of site hacking and infection arise from common security problems: weak passwords, uncontrolled access to the site's code, hiring unvetted freelancers, and other web development issues related to the general state of web security. We see this as the reason there are no clear leaders among paid and free CMSs in terms of the number of problems. In general, the share of problem sites on a given CMS corresponds to that CMS's market share.

A clarification regarding the CMS graphs: for technical reasons, we could not determine the CMS of some sites in the sample, so the data were obtained under the assumption that errors in CMS identification are randomly distributed. The list does not include many well-known CMSs, such as NetCat and Umi, because we show only the most common CMSs found in the study; this top list generally matches the CMS popularity statistics published by iTrack.

Shares of various CMSs among infected sites:

Online business owners still lack awareness

Even after narrowing the sample to commercial sites, we found sites that remained on search engine blacklists for a long time. For example, among online stores we found more than 300 incidents that were not fixed during the entire observation period (3 months). Most of them are mobile redirects, which can cost 25% to 40% of traffic, depending on how prevalent mobile access is in a particular region of Russia. A similar pattern was observed among .ru sites in the Alexa top 1 million. However, there were practically no such sites in the LiveInternet Top 10,000 group we studied, which we tend to attribute to site owners participating in that ranking paying closer attention to their traffic.

Main facts of the 2015 study: top 3 problems

- The most common and, in our opinion, most dangerous problem is redirects to external sites, both mobile and regular. In 90% of all redirect cases, we determined that the redirect led to an infected site. Thus, it is not only the online business that suffers by losing traffic to other sites, but also the users who end up on infected sites. And if the antivirus installed on the user's computer detects the transition to an infected site, it is the reputation of the site the user originally wanted to visit that suffers. Both mobile and regular redirects are hard to detect because they use cloaking techniques and, in addition, do not trigger for all IP addresses and types of mobile devices.

In our sample of more than 300,000 sites, Google identified fewer than 20% of these problems, and Yandex identified only 33% of the redirects present on sites at the time of our scan. At the same time, if Yandex detects a malicious mobile redirect, the site is blacklisted in 100% of cases.

As noted above, only about 10% of owners and administrators removed redirects on their own before Google and Yandex detected them.

- The second most common problem is dynamic links to infected sites. Note that in 100% of cases, once such a link is discovered, Google blacklists the site (which means the site will be blocked in web browsers that use the Google Safe Browsing API to identify unsafe websites).

Only 20-30% of all sites in the study identified and removed dynamic links to infected sites on their own.

- In third place by prevalence is a static external link to an infected site. This type of problem turned out to be the easiest to identify and fix: 40% to 50% of all sites managed to deal with it without waiting for search engines to detect it.
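Because cloaked redirects fire only for some visitors, one way to spot them (assumed here for illustration, not necessarily the method used in the study) is to request the same page as a desktop browser and as a mobile device and compare where each request ends up. The sketch below only classifies results that were already collected: fetching the pages with different User-Agent headers, and ideally from different IP addresses, is left out, and the `blacklist` set is a hypothetical stand-in for a real lookup such as the Google Safe Browsing API.

```python
from urllib.parse import urlsplit


def host_of(url: str) -> str:
    """Lower-cased host name of a URL, without a leading 'www.'."""
    host = (urlsplit(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def is_external(host: str, site: str) -> bool:
    """True if host is neither the site itself nor one of its subdomains
    (so a legitimate mobile version such as m.example.ru is not flagged)."""
    return host != site and not host.endswith("." + site)


def classify_redirects(start_url: str, desktop_final: str, mobile_final: str,
                       blacklist: set[str]) -> list[str]:
    """Label redirects following the study's problem definitions.

    desktop_final / mobile_final are the URLs where a desktop-browser request
    and a mobile-device request for start_url finally landed.
    """
    site = host_of(start_url)
    desktop_host = host_of(desktop_final)
    mobile_host = host_of(mobile_final)
    labels = []
    if is_external(desktop_host, site) and desktop_host in blacklist:
        labels.append("redirect to infected site")
    if is_external(mobile_host, site) and mobile_host != desktop_host:
        # The redirect fires only for mobile visitors.
        if mobile_host in blacklist:
            labels.append("mobile redirect to infected site")
        else:
            labels.append("mobile redirect to external site")
    return labels
```

For instance, a store whose desktop version stays on `shop.ru` but whose mobile request ends up on a blacklisted host would be labeled `"mobile redirect to infected site"`. A single comparison can miss redirects that trigger only for particular IP ranges or device models, which is exactly why such redirects are hard to catch.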

Statistics for the "online stores" segment

A total of 2,247 incidents were found, affecting 5% of sites.

- 247 online stores were on blacklists, 68 of them throughout the entire study

- The average time a site spent on a blacklist was 1 week

- Repeat infections are rare but do occur (3 cases out of 247)

- 243 sites had a regular redirect to an infected site (more than half of these sites were not considered dangerous by Google or Yandex, although in reality they are)

- 372 sites had dynamic links to infected sites (at the time of our crawl, Google had not yet flagged 75% of these sites, and Yandex only 60%)

- More than 50% do not have an SSL certificate

Top problems not resolved during the entire observation period

- Mobile redirect to another site (to an infected one in 72% of cases)

- Dynamic links to an infected site

- Static redirect to an infected site

Top active problems over the entire observation period

- Dynamic link to an infected site

- Mobile redirect to an external site

- Regular redirect to an infected site

Statistics for the "LiveInternet Top 10,000" segment

A total of 1,166 incidents were found, affecting 10% of sites.

Top active problems over the entire observation period:

- Dynamic link to an infected site

- Mobile redirect to an external site

- Static link to an infected site

Statistics for the ".ru sites in the Alexa top 1 million" segment

A total of 3,292 incidents were found, affecting about 8% of all sites.

These sites appear on blacklists twice as often as online stores.

Top active problems over the entire observation period

- Mobile redirect to an external site

- Static link to an infected site

- Dynamic link to an infected site

Summary statistics for all segments

Thus, during the study, problems were found on 10% of sites from the LiveInternet list, 8% of sites from the Alexa list, and 5% of online stores. The share of sites with problems decreased compared to the previous study because we excluded problems related to being listed on spam blacklists and focused only on the most dangerous problems, which directly lead to loss of traffic, infection of users, or damage to a business's online reputation. However, we plan to include problems related to spam mailings in future studies, since in some cases they can also lead to site downtime when the hosting provider disconnects the site, as well as to lost leads and lower conversion when order confirmation emails are not delivered by online stores or sites actively used for lead generation.

Conclusions and recommendations

Increasing the depth and duration of observation, as well as narrowing the sample to sites that can be considered commercial, allowed us to refine and slightly adjust the results of the previous study. In general, however, we see the same trends, including disturbing ones, regarding site security problems as a source of downtime and losses for online businesses.

Based on the results of our previous study of web development security at web studios, as well as the data from this study, we recommend, regardless of the CMS used, paying special attention to basic security measures, such as changing passwords regularly, abandoning insecure FTP in favor of SSH, protecting access to the administration panel with an SSL certificate, and others. We also recommend training dedicated specialists in security incident response: removing sites from blacklists, hardening the CMS environment and server configuration, and other measures to counter the hacking and infection of sites that cause losses for online businesses.

Research methodology

For the study, a sample of about 320,000 sites was created:

More than 80,000 sites for continuous monitoring during the first quarter of 2015, including:

- 36,750 online stores

- 37,233 Russian-language sites from the Alexa top 1 million

- The LiveInternet Top 10,000 ranking

About 240,000 sites of commercial companies, randomly selected from all active (delegated) .ru domain names registered to legal entities, for a one-time scan, similar to the 2014 study.

During the study, we monitored the following parameters:

- Type of CMS the site runs on

- Presence of the site on the Google and Yandex blacklists

- Presence of redirects on the site, server-side and client-side (mobile redirect to an external or infected site, search redirect)

- External links to infected sites (static or dynamic)

- SSL certificate

- Configuration errors

The 80,000 sites in the first part of the sample were monitored continuously throughout the quarter: all parameters were polled, and their values were recorded in a database that accumulated the full history of parameter changes from every scan. We applied various approaches to analyzing the historical data for this part of the sample to determine correlations between the events we detected. The second part of the sample was scanned once, and only statistical analysis methods were applied to it.
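Because every scan was stored with its timestamp, figures such as the average time a site spends on a blacklist can be derived from the history. A minimal sketch, assuming a hypothetical record format of (site, scan time, blacklisted flag): a listing is taken to last from the first scan that saw the site blacklisted until the first later scan that saw it clean. Listings still open at the last scan are ignored here, although the study reports such long-lived incidents separately.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Iterable, NamedTuple, Optional


class Scan(NamedTuple):
    # Hypothetical record format for one scan of one site.
    site: str
    time: datetime
    blacklisted: bool


def blacklist_periods(scans: Iterable[Scan]) -> dict[str, list[timedelta]]:
    """Durations of completed blacklist listings, per site."""
    by_site: dict[str, list[Scan]] = defaultdict(list)
    for scan in scans:
        by_site[scan.site].append(scan)

    periods: dict[str, list[timedelta]] = {}
    for site, history in by_site.items():
        history.sort(key=lambda s: s.time)
        started: Optional[datetime] = None
        durations: list[timedelta] = []
        for scan in history:
            if scan.blacklisted and started is None:
                started = scan.time  # listing begins
            elif not scan.blacklisted and started is not None:
                durations.append(scan.time - started)  # listing ends
                started = None
        periods[site] = durations  # an open listing is not counted
    return periods


def average_period(periods: dict[str, list[timedelta]]) -> Optional[timedelta]:
    """Mean duration over all completed listings, or None if there are none."""
    all_durations = [d for ds in periods.values() for d in ds]
    if not all_durations:
        return None
    return sum(all_durations, timedelta()) / len(all_durations)
```

The measured duration depends on the scan interval: with daily scans, each listing's length is only known to within about a day.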

Descriptions of the problems we analyzed during the study:

- We defined a redirect to an infected site as automatic redirection of a regular browser user to a third-party site that is already blacklisted by search engines.

- We defined a mobile redirect as automatic redirection of a mobile device user to a third-party site that is already blacklisted by search engines.

- A static link to an infected site is a link written directly in the page code stored on the web server.

- A dynamic link to an infected site is a link that appears while a web page is being displayed in the user's browser, for example as a result of executing JavaScript code.
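The distinction between static and dynamic links can be made operational by comparing two versions of a page: the HTML as served by the web server, and the HTML after the browser has executed the page's scripts. A minimal sketch using only Python's standard `html.parser`; obtaining the rendered HTML (for example with a headless browser) is assumed and not shown, and `blacklist` is a hypothetical set of blacklisted host names. The comparison is done on host names rather than individual links, which is a simplification.

```python
from html.parser import HTMLParser
from urllib.parse import urlsplit


class LinkHosts(HTMLParser):
    """Collect the host names of absolute <a href="..."> links in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.hosts: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                host = urlsplit(value).hostname
                if host:  # relative links have no host and are skipped
                    self.hosts.add(host.lower())


def link_hosts(html: str) -> set[str]:
    parser = LinkHosts()
    parser.feed(html)
    parser.close()
    return parser.hosts


def classify_links(served_html: str, rendered_html: str,
                   blacklist: set[str]) -> dict[str, set[str]]:
    """Split links to blacklisted hosts into static and dynamic ones.

    static:  present in the HTML served by the web server.
    dynamic: present only after the page was rendered (scripts executed).
    """
    served = link_hosts(served_html)
    rendered = link_hosts(rendered_html)
    return {
        "static": served & blacklist,
        "dynamic": (rendered - served) & blacklist,
    }
```

This also suggests why static links are the easiest problem to catch: they are visible in the files on the server, whereas a dynamic link exists only in the browser after scripts run.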

A website is an important company asset: the success of the product or service being promoted, and the perception of the company as a whole, depend on it. If a site is not properly monitored, it can be hacked at any time, whether for the attackers' profit or for many other purposes, and the reputational risks can be considerable, which is completely unacceptable for a business. What is required is constant, professional monitoring of the integrity of the site and its components and of their proper functioning, together with timely response to emerging security threats.

Research page and expert comments: https://sitesecure.ru/securityreport1q2015

Source: https://habr.com/ru/post/258297/
