Part 2: Synesis. Why do video analytics demos in the office differ so much from real-world operation?

After the first article was published, a comment in the discussion raised a question, with a link, that I would like to answer with another detailed article. Its author, posting as "psazhin", hit the mark by citing an example of classic "rigid" video analytics: the approach that, as we remember, Intel developed and then abandoned, now reincarnated with high-tech advertising. Then again, it is hard to miss here, since 90% of the entire video analytics market is as alike as two drops of water to Intel's OpenCV library. Since the choice fell on Synesis, we will analyze its "intelligent" algorithms using the methodology developed in the first part.

Let us simply read a little more carefully what is written on this company's promotional website. We start with the first and most important setting.

The user is asked to specify the dimensions of a person so that the system can tell a person's detection box apart from other objects and classify objects on that basis. But, as we said in the previous article:

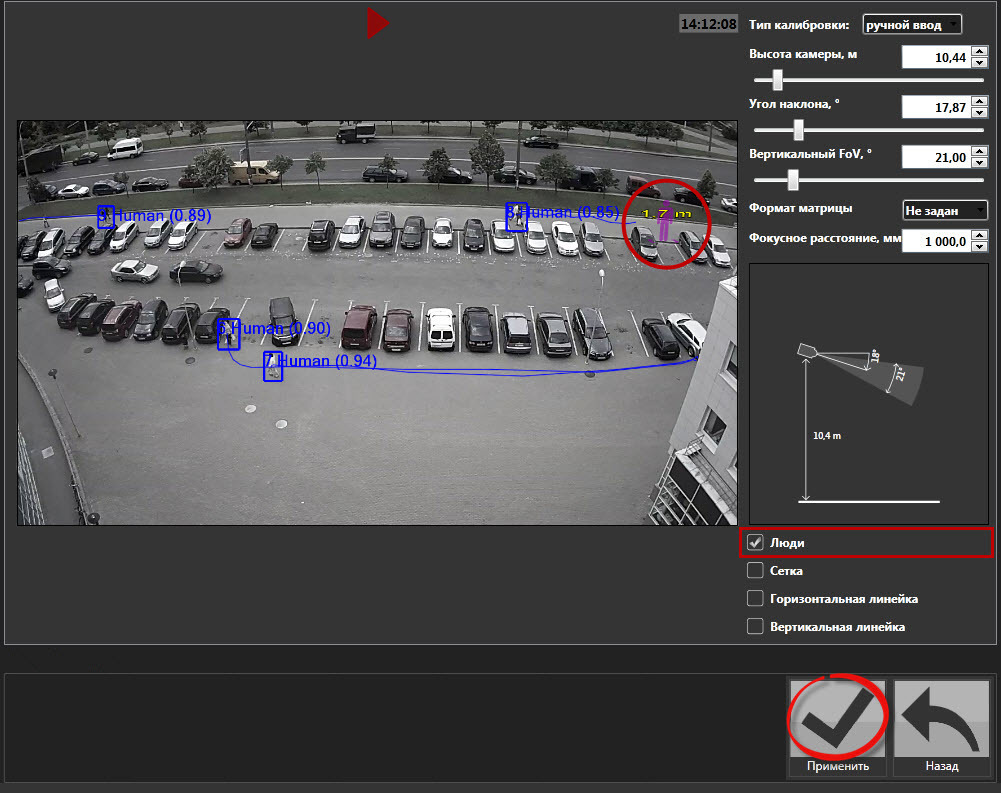

1. A two-dimensional camera cannot, even in theory, determine the size of objects, because it has no depth perception. Instead, a pseudo-perspective is proposed here, based on the difference between reference heights set at known positions in the frame. It assumes that all movement happens along the ground, and in theory such logic can be built for "earth-bound" objects. If only there were no birds flying past, or insects crawling over the camera housing, whose sizes are not calibrated at all.
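
To make the objection concrete, here is a minimal sketch of such a ground-plane size check. It is our own assumption of how this kind of calibration could work, not the Synesis implementation: the expected height of a person is interpolated linearly between two hypothetical reference boxes (`NEAR`, `FAR`), and a box counts as a person if its height is within a hypothetical relative `tolerance` of that expectation. The function names and all numbers are illustrative.

```python
# Minimal sketch of a ground-plane "pseudo-perspective" size check.
# All names, calibration values and the tolerance are hypothetical.

def expected_person_height(y_bottom, ref_near, ref_far):
    """Linearly interpolate the expected person height in pixels for an object
    whose box bottom (its "feet") is at image row y_bottom.

    ref_near and ref_far are (row, height_px) pairs that an operator would set
    by drawing a person-sized box at two places in the frame. Rows grow downward.
    """
    (y1, h1), (y2, h2) = ref_near, ref_far
    t = (y_bottom - y2) / (y1 - y2)
    return h2 + t * (h1 - h2)


def looks_like_person(box, ref_near, ref_far, tolerance=0.3):
    """box = (x, y_top, width, height). Accept the box as a person if its height
    is within `tolerance` (relative) of the height expected at its bottom row."""
    _, y_top, _, h = box
    expected = expected_person_height(y_top + h, ref_near, ref_far)
    return abs(h - expected) <= tolerance * expected


NEAR = (460, 200)  # a person whose feet are at row 460 is 200 px tall
FAR = (160, 50)    # a person whose feet are at row 160 is 50 px tall

person = (300, 230, 60, 160)  # feet at row 390, expected height 165 px
bird = (100, 120, 70, 55)     # a bird just in front of the lens, "feet" at row 175

print(looks_like_person(person, NEAR, FAR))  # True
print(looks_like_person(bird, NEAR, FAR))    # True: read as a distant person
```

The bird passes because the check only knows where the bottom of the box lies in the image, not how far away the object really is.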

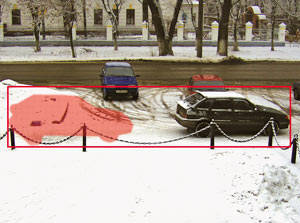

2. A contrast detector sees only what differs from the background. Look at the person on the left (the one marked by the red line): his torso merges completely with the background, at least as far as the camera is concerned. That is, the computer will see only white trousers and a black head moving along.

Of course, you could turn the sensitivity up to the maximum and try to catch at least some differences, but then noise will fill the entire archive. Even digitization artifacts will trigger false alarms.
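
A minimal sketch of the trade-off, assuming a plain background-difference detector with one fixed threshold, applied to a single vertical scanline through the person. The function name and all brightness values are made up for illustration.

```python
# Minimal sketch of a contrast (background-difference) detector on one
# vertical scanline through the person. Brightness values are made up.

def changed_pixels(frame, background, threshold):
    """Indices where the frame differs from the background by more than threshold."""
    return [i for i, (p, b) in enumerate(zip(frame, background)) if abs(p - b) > threshold]


# Light wall with a little sensor noise.
background = [200, 201, 199, 200, 202, 198, 200, 200, 200, 201]
# Pixels 0-1: dark head, 2-5: light shirt, 6-7: white trousers, 8-9: bare wall (noise only).
frame = [40, 42, 204, 205, 203, 206, 250, 252, 207, 195]

print(changed_pixels(frame, background, threshold=25))  # [0, 1, 6, 7]
print(changed_pixels(frame, background, threshold=4))   # [0, 1, 2, 3, 5, 6, 7, 8, 9]
```

At the normal threshold only the head and trousers are found. At maximum sensitivity most of the torso appears, one torso pixel is still missing, and the bare-wall pixels 8 and 9 now fire on noise alone.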

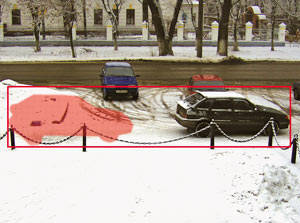

3. Even for the highest-contrast target, the size of a detection is determined by the entire connected region of motion, i.e. several overlapping people will form a larger figure than a single person.

4. When any object moves, the detection zone covers not only where it is now, but also the area it occupied one frame earlier. Detection works by comparing each frame with the previous one, so both the place the person has moved to and the place he has vacated register as changed. The length of this zone depends on the speed of movement: the farther the object travels between two frames, the larger the zone. For a car, this may be the entire frame.
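
Items 3 and 4 can both be reproduced with a one-dimensional sketch: frame differencing on a single scanline, followed by grouping the changed pixels into contiguous runs, a 1-D stand-in for the connected-component labeling a real detector would do in 2-D. This is our own simplified model, not vendor code; the helper names and object patterns are hypothetical.

```python
# Minimal 1-D sketch: frame differencing on a single scanline, then grouping the
# changed pixels into contiguous runs (1-D connected components). Each run is
# what would become a detection box. Object patterns are made up.

def render(width, objects):
    """Draw objects given as (x, pixel_values) on a black scanline.
    Later objects overwrite earlier ones where they overlap."""
    line = [0] * width
    for x, pixels in objects:
        for k, v in enumerate(pixels):
            if 0 <= x + k < width:
                line[x + k] = v
    return line


def changed(prev, curr, threshold=0):
    """Indices whose brightness differs between two consecutive frames."""
    return [i for i, (a, b) in enumerate(zip(prev, curr)) if abs(a - b) > threshold]


def runs(indices):
    """Group sorted indices into contiguous runs, returned as (start, length)."""
    result = []
    for i in indices:
        if result and i == result[-1][0] + result[-1][1]:
            start, length = result[-1]
            result[-1] = (start, length + 1)
        else:
            result.append((i, 1))
    return result


PERSON = [5, 9, 6, 8, 4]        # 5 px wide
CAR = [8, 3, 8, 3, 8, 3, 8, 3]  # 8 px wide

# Item 4: one person moves 2 px between frames -> one run of 5 + 2 = 7 px.
print(runs(changed(render(40, [(10, PERSON)]), render(40, [(12, PERSON)]))))
# [(10, 7)]

# Item 3: two overlapping people moving together -> a single, wider run.
print(runs(changed(render(40, [(10, PERSON), (14, PERSON)]),
                   render(40, [(12, PERSON), (16, PERSON)]))))
# [(10, 11)]

# Item 4 again: a fast car moves 20 px -> the vacated spot is detected too.
print(runs(changed(render(40, [(5, CAR)]), render(40, [(25, CAR)]))))
# [(5, 8), (25, 8)]
```

In the car case the changed pixels span 28 px from first to last (car width plus distance moved); if a system merges nearby blobs into one box, that whole span becomes the "object".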

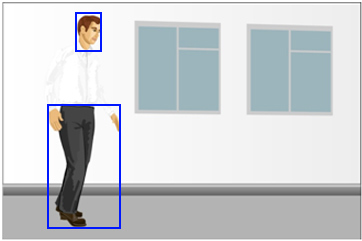

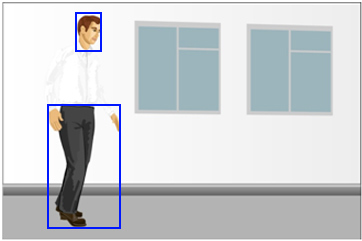

5. In addition, it is worth mentioning a blatant blunder in this high-tech advertising: the following picture from the settings of the same Synesis product:

As you can see, the detection zone differs greatly from the human figure both vertically and, oddly enough, horizontally. Apparently, the developers themselves do not really believe what they write.

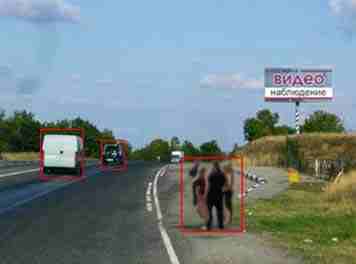

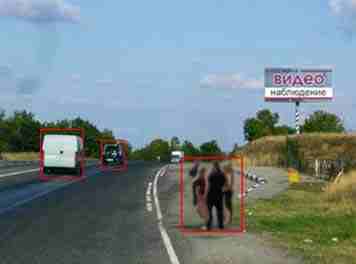

And here, where a person has to be distinguished from a car, the authors of the Synesis advertisement show clearly vertically elongated figures:

Logically, yes, cars will be stretched horizontally, provided, of course, that they only ever drive horizontally across the frame. But in the upper right corner we again notice a blunder: a single box frames two people. Accordingly, if 3-4 people walk together, they will already be recognized as a car. Well, what can you do, there is no fooling the object detector!
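
A minimal sketch of the kind of shape rule the screenshots suggest, assuming (our assumption, not the documented Synesis logic) that a box taller than it is wide is a person and anything else is a car. `classify`, `group_box` and the pixel sizes are hypothetical; overlapping people are modeled as merging into one wider blob, as in item 3.

```python
# Hypothetical aspect-ratio rule of the kind the screenshots suggest:
# taller-than-wide boxes are people, everything else is a car.

def classify(box):
    """box = (x, y, width, height) in pixels."""
    _, _, w, h = box
    return "person" if h > w else "car"


def group_box(n, person_w=40, person_h=100, step=30):
    """Bounding box of n people walking abreast. Each next person is offset by
    `step` px, so neighbouring silhouettes overlap and merge into one blob."""
    return (0, 0, person_w + step * (n - 1), person_h)


for n in range(1, 5):
    print(n, classify(group_box(n)))
# 1 person
# 2 person
# 3 car
# 4 car

# A car driving toward the camera is taller than it is wide in the frame.
print(classify((0, 0, 60, 90)))  # person
```

With these made-up sizes, three people walking abreast already produce a square box and are reported as a car, while a car approaching head-on is reported as a person.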

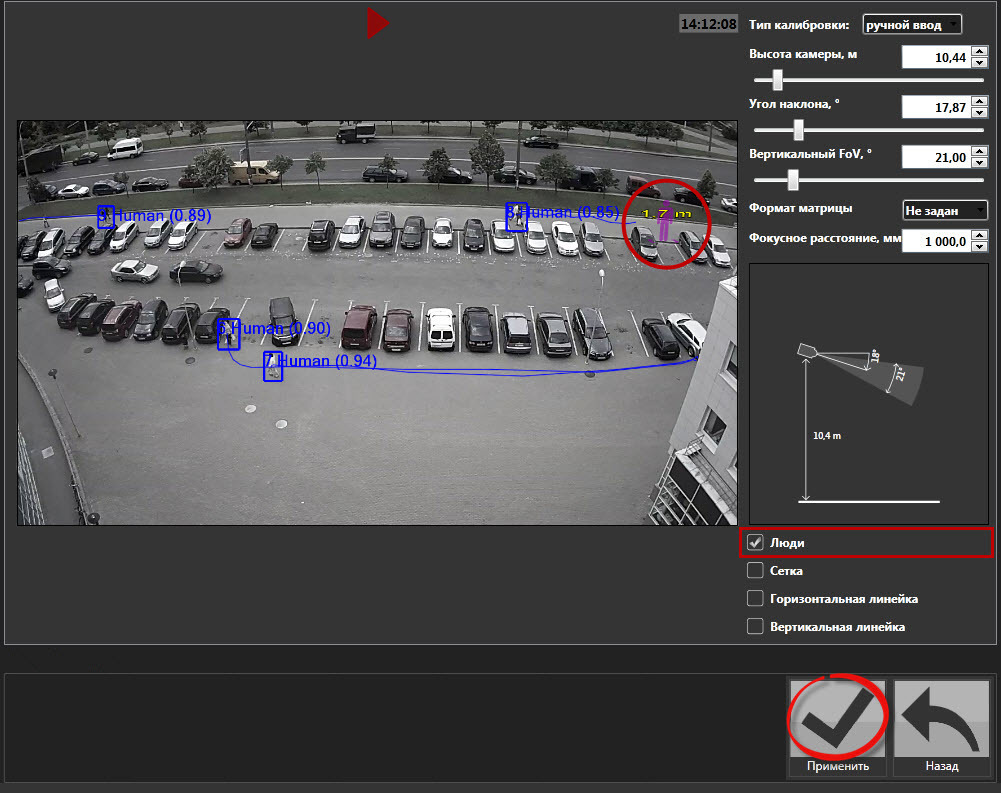

In fact, all the remaining settings of the Kipod video analytics modules are based on an attempt to calculate object sizes: wiki.allprojects.info/pages/viewpage.action?pageId=31785131

That is, almost the entire video analytics technology is built on a principle that obviously does not work in real conditions.

Among the many companies that have tied their business to video analytics, Synesis stands out as especially impractical. One gets the feeling that whoever invented its clever algorithms never seriously tested them. Let us take the next setting:

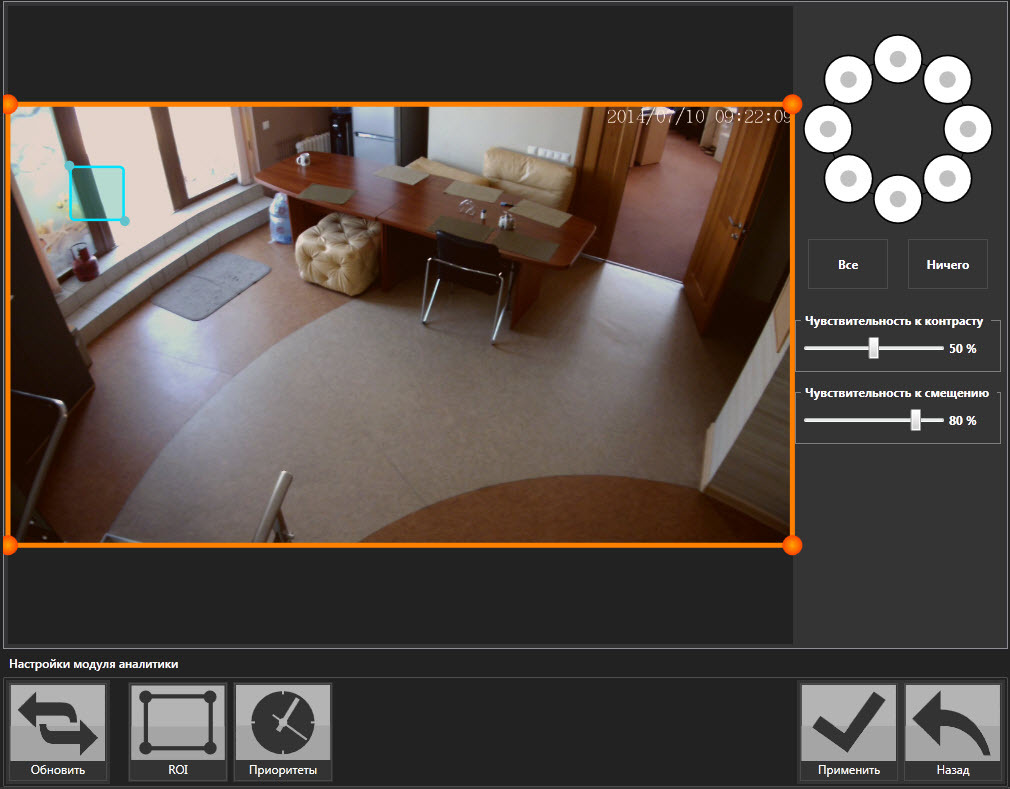

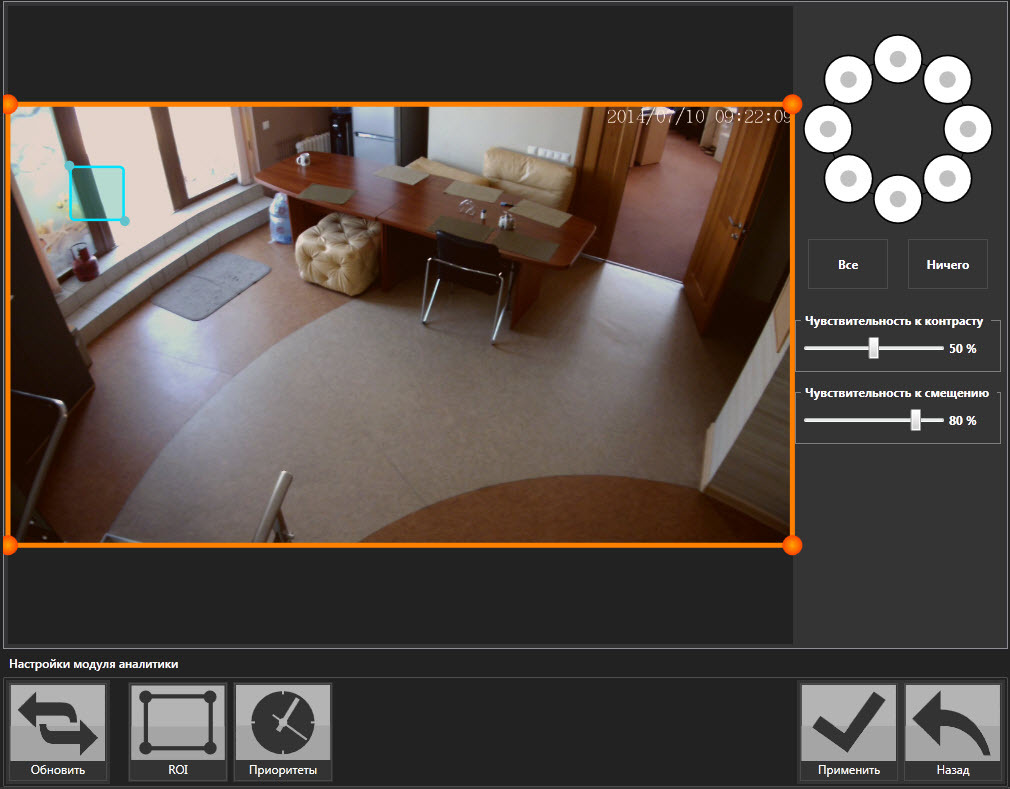

These are the so-called zones of interest, for each of which you can set the contrast level required to trigger, i.e. the sensitivity. A great feature, and it works 100% as long as the lighting does not change. But here we are shown a room where a higher sensitivity must be set in the evening and a lower one in the morning. The 2nd (blue) zone needs exactly the opposite values: in daylight the window is bright, under artificial light it is dark. So think about it!

Obviously, the lighting will not change dramatically during a demonstration, which means the seller has every chance to convince the buyer of the system's intelligence.

A little more about the 2nd (blue) zone. One must be very far removed from practice to place a detection zone on a window. The sun is unpredictable and its mood changes constantly, so it is better not to hold a demonstration on a sunny day: the 2nd zone will trigger all the time.

But Synesis offers the opportunity to test it, and it would be a sin not to try. Here is what triggered over a single day.

Many repeated triggers were cut out, since the spiders kept thrashing about constantly, so the video shows only a sample.
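
Why one sensitivity value cannot fit both morning and evening can be shown with a minimal sketch. It assumes a zone compared against a fixed background reference with one fixed threshold and a hypothetical minimum fraction of changed pixels; real products may update the background over time, which this sketch deliberately ignores. `zone_triggered` and all brightness values are illustrative.

```python
# Minimal sketch of a zone of interest with a fixed sensitivity threshold and a
# fixed background reference (an assumption: real products may update the
# background over time). Brightness values are made up.

def zone_triggered(frame, background, threshold, min_fraction=0.2):
    """Fire if at least min_fraction of the zone's pixels differ from the
    background by more than threshold."""
    changed = sum(abs(p - b) > threshold for p, b in zip(frame, background))
    return changed / len(frame) >= min_fraction


room_day = [120] * 50                 # background captured in daylight
room_evening = [85] * 50              # same empty room under weaker lamps
person_day = [90] * 15 + [120] * 35   # a person with contrast 30 enters in daylight

print(zone_triggered(room_evening, room_day, threshold=25))  # True: false alarm
print(zone_triggered(person_day, room_day, threshold=25))    # True: correct
print(zone_triggered(room_evening, room_day, threshold=40))  # False
print(zone_triggered(person_day, room_day, threshold=40))    # False: person missed

# Window zone: a passing cloud is enough.
window_sun = [230] * 50
window_cloud = [180] * 50
print(zone_triggered(window_cloud, window_sun, threshold=25))  # True
```

Any threshold low enough to catch the person also fires on the lighting change, and any threshold high enough to ignore the lighting change misses the person.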

To be continued…

Source: https://habr.com/ru/post/258025/
