Artificial Intelligence in the Wolfram Language: The Image Identification Project

Translation of Stephen Wolfram's post "Wolfram Language Artificial Intelligence: The Image Identification Project".

I express my deep gratitude to Kirill Guzenko for his help in translating.

“What is this a picture of?” People can answer this question almost instantly, yet for a long time it seemed to be an impossible task for computers. For the past 40 years I have known that computers would eventually learn to solve such problems, but I did not know when that would happen.

I have built systems that give computers various components of intelligence, and these components are often far beyond human capabilities. For a long time now we have been integrating artificial intelligence into the Wolfram Language.

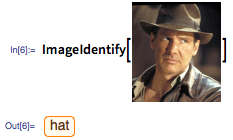

And now I am very pleased to report that we have crossed a new frontier: we have released a new Wolfram Language function, ImageIdentify, which you can ask “What is this a picture of?” and get an answer.


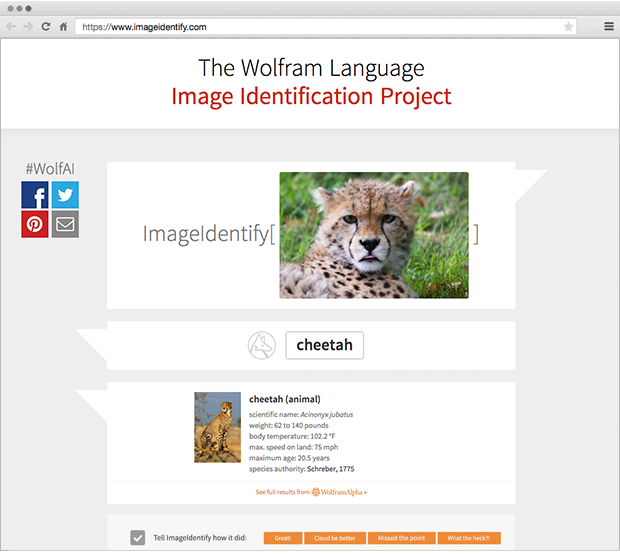

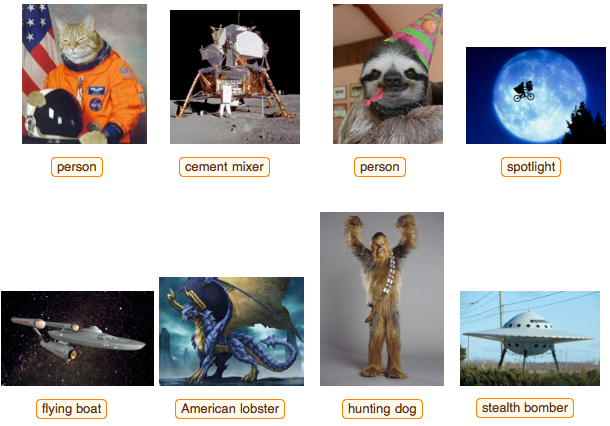

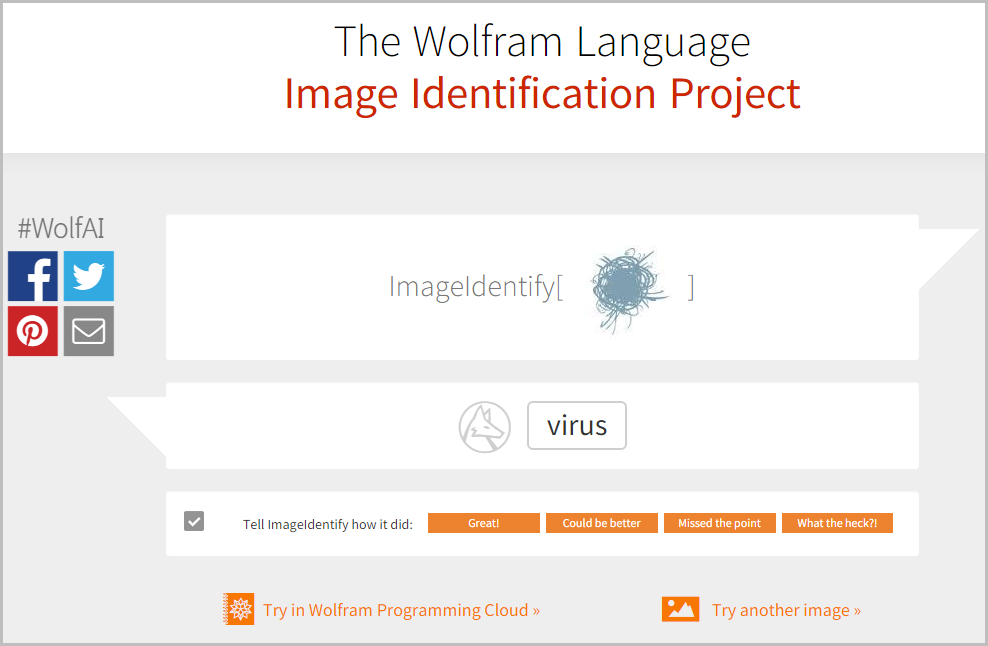

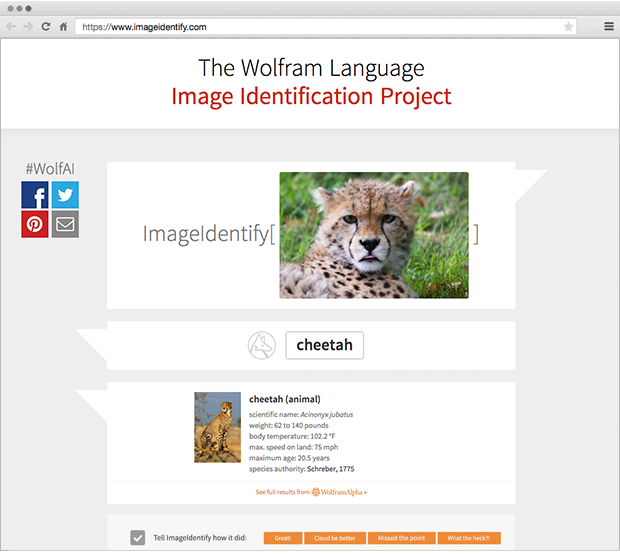

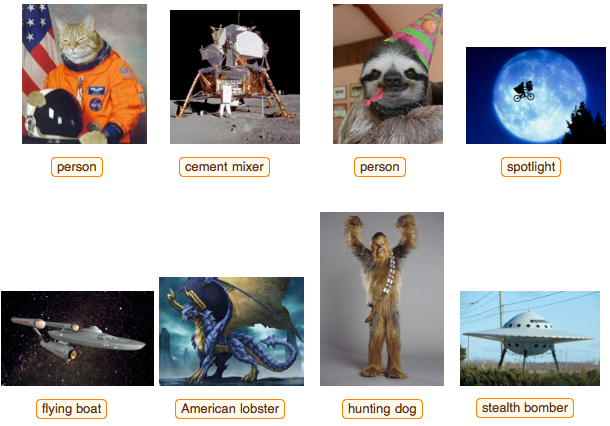

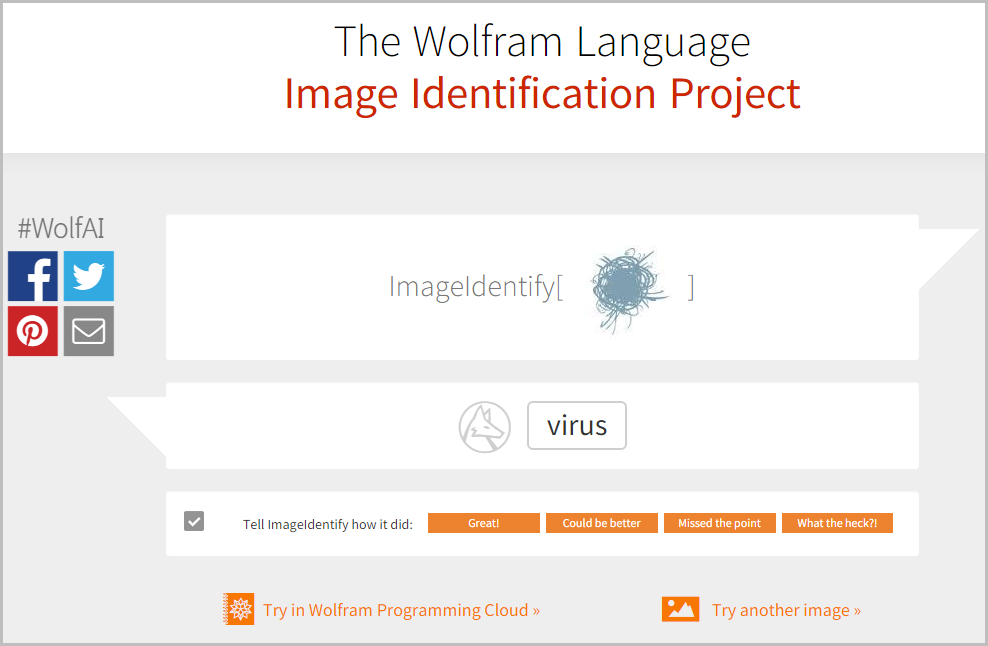

Today we are launching the Wolfram Language Image Identification Project, an image identification service that runs on the web. You can send an image from your phone’s camera or from your browser, drag and drop it into the form, or simply upload a file. ImageIdentify will then return its result.

Contents

Now in Wolfram Language

Personal background

Machine learning

All this is connected with attractors.

Automatically created programs

Why now?

I see only a hat

We lost anteaters!

Back to nature

Of course, it will not always get the answer right, but most of the time it does remarkably well. And what I find remarkable is that when it does make mistakes, they are the kind of mistakes a person might make.

This is a good practical example of artificial intelligence. But for me, the more important point is that we have integrated this kind of AI directly into the Wolfram Language, as another powerful building block of the knowledge-based programming paradigm.

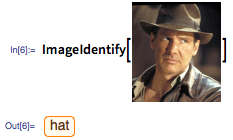

Now in Wolfram Language

To identify an image in the Wolfram Language, you just apply the ImageIdentify function to it:

![In[1]:= ImageIdentify[image: giant anteater]](https://habrastorage.org/getpro/habr/post_images/a15/ec9/a68/a15ec9a689f0db2f132ff7d962dd601c.png)

What you get back is a symbolic entity that you can keep working with in the Wolfram Language. In this example, you can find out that it is an animal, a mammal, and so on. Or you can simply ask for its definition:

![In[2]:= giant anteater["Definition"]](https://habrastorage.org/getpro/habr/post_images/374/b9b/fd6/374b9bfd6d298d9fbf8c1693519e21f3.png)

Or, for example, you can generate a word cloud from the Wikipedia article about the object in the picture:

![In[3]:= WordCloud[DeleteStopwords[WikipediaData[giant anteater]]]](https://habrastorage.org/getpro/habr/post_images/723/9ff/c2a/7239ffc2a8002c8cbe51e9fe46876097.png)

If you have a collection of photos, you can almost instantly write a Wolfram Language program that, for example, reports how often different kinds of animals, aircraft, devices, or anything else appear in it.
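The counting step of such a program can be sketched as follows. This is Python rather than the Wolfram Language, so it runs without a Wolfram kernel, and `identify` is a hypothetical stand-in for an image identifier that simply looks file names up in a made-up table; it is not ImageIdentify.

```python
from collections import Counter

# Hypothetical stand-in for an image identifier: maps a file name to a label.
# A real identifier would analyze the pixels; this stub just looks the name up.
FAKE_LABELS = {
    "img1.jpg": "giant anteater",
    "img2.jpg": "beagle",
    "img3.jpg": "giant anteater",
    "img4.jpg": "moped",
}

def identify(path):
    """Return a label for the image at `path` (stub: unknown files give 'unknown')."""
    return FAKE_LABELS.get(path, "unknown")

def label_statistics(paths):
    """Count how often each identified label occurs across a collection of images."""
    return Counter(identify(p) for p in paths)

stats = label_statistics(["img1.jpg", "img2.jpg", "img3.jpg", "img4.jpg", "img5.jpg"])
for label, count in stats.most_common():
    print(label, count)
```

Swapping the stub for a real identifier leaves the tallying logic unchanged, which is the point: once identification is a single function call, the statistics are a one-liner.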

Because ImageIdentify is built directly into the Wolfram Language, it is easy to create APIs and applications that use it. And with the Wolfram Cloud it is easy to create websites, such as the Wolfram Language Image Identification Project website itself.

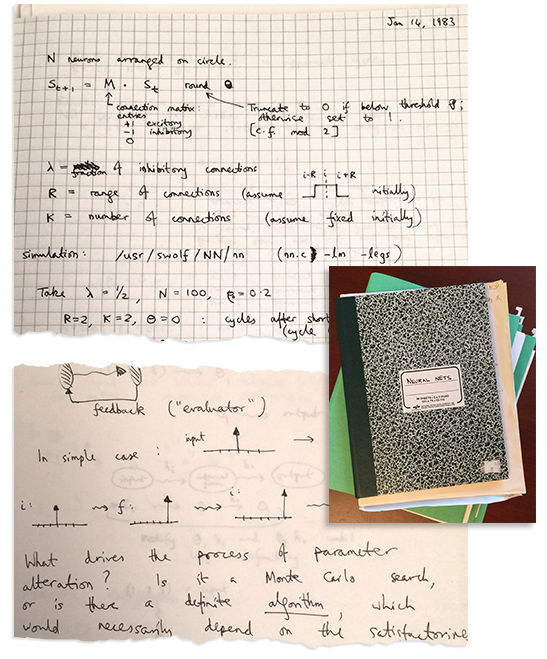

Personal background

Personally, I have been waiting a long time for ImageIdentify. About 40 years ago I read a book called The Computer and the Brain, which left me with the idea that sooner or later we would create artificial intelligence, most likely by emulating the electrical connections in the brain. And in 1980, after some success with my first computer language, I began thinking about what it would take to build full-fledged artificial intelligence.

I was inspired by ideas that were later implemented in the Wolfram Language, in particular symbolic pattern matching, which I thought could capture some aspects of human thinking. But I knew that if image recognition was to be based on pattern matching, something more was needed: fuzzy matching.

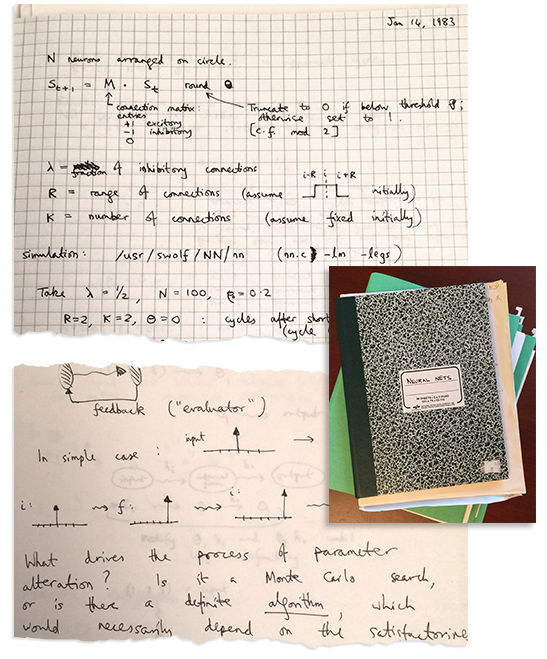

I tried to invent fuzzy hashing algorithms. And I kept thinking about how the brain manages all this, and whether we could borrow its principles of operation. This led me to start studying idealized neural networks and their behavior.
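The post does not describe those fuzzy hashing algorithms. To illustrate the general idea only, the sketch below uses a different, well-known technique, an "average hash": shrink a grayscale image to a tiny grid, record which cells are brighter than the mean, and compare hashes by Hamming distance, so that similar images get nearby hashes. Images are plain lists of brightness values, and the grid size and toy images are assumptions made for illustration.

```python
def block_average(img, n):
    """Downsample a 2D list of brightness values to n x n by averaging blocks.
    Assumes the image height and width are multiples of n."""
    h, w = len(img), len(img[0])
    bh, bw = h // n, w // n
    small = []
    for i in range(n):
        row = []
        for j in range(n):
            block = [img[y][x]
                     for y in range(i * bh, (i + 1) * bh)
                     for x in range(j * bw, (j + 1) * bw)]
            row.append(sum(block) / len(block))
        small.append(row)
    return small

def average_hash(img, n=4):
    """Return a tuple of bits: 1 where a downsampled cell is brighter than the mean."""
    cells = [v for row in block_average(img, n) for v in row]
    mean = sum(cells) / len(cells)
    return tuple(1 if v > mean else 0 for v in cells)

def hamming(a, b):
    """Number of positions where two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))

# 8x8 toy images: bright left half, the same with slight noise, and its mirror image.
left_bright = [[200] * 4 + [20] * 4 for _ in range(8)]
noisy = [[v + (5 if (x + y) % 3 == 0 else 0) for x, v in enumerate(row)]
         for y, row in enumerate(left_bright)]
right_bright = [row[::-1] for row in left_bright]

print(hamming(average_hash(left_bright), average_hash(noisy)))
print(hamming(average_hash(left_bright), average_hash(right_bright)))
```

Unlike an ordinary hash, small perturbations of the input leave the result unchanged or nearly so, which is exactly the "fuzzy" property needed for matching.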

Meanwhile, I was also thinking about some fundamental questions in the natural sciences: cosmology, how large-scale structure arises in the universe, and how particles self-organize.

At some point I realized that both neural networks and self-gravitating clusters of particles are examples of systems that, despite having simple underlying components, somehow achieve complex collective behavior. Digging deeper, I arrived at cellular automata, which led me to the ideas and discoveries that became the book A New Kind of Science.

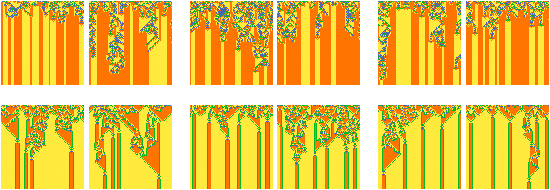

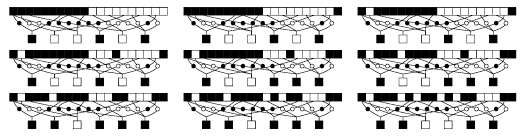

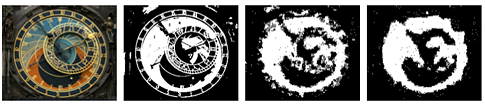

So what about neural networks? They were never my favorite kind of system: they seemed too arbitrary and complicated in their structure compared to other systems I studied in the computational universe. Still, I kept coming back to them, running simulations to understand their basic behavior better and trying to use them for specific tasks, such as fuzzy pattern matching.

The history of neural networks has been one of ups and downs. They first appeared in the 1940s. But by the 1960s interest had waned, and there was a perception that they were fairly useless and could not do much.

However, that was true only of single-layer perceptrons (one of the earliest neural network models). Interest revived in the early 1980s, when neural network models with hidden layers appeared. And although I knew many of the leaders in the field, I remained somewhat skeptical. I could not shake the impression that the problems being solved with neural networks could be solved much more easily in many other ways.
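The standard illustration of that single-layer limitation is XOR (exclusive or). In the Python sketch below, our own example rather than anything from the post, a single threshold unit trained with the classic perceptron learning rule can never get all four XOR cases right, because no single straight line separates them; a network with one hidden layer of two threshold units, with hand-set weights, computes XOR exactly.

```python
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def step(z):
    """Threshold unit: fire (1) if the weighted input is positive."""
    return 1 if z > 0 else 0

def train_perceptron(data, epochs=100, lr=1.0):
    """Classic perceptron learning rule for a single threshold unit."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in data:
            error = target - step(w1 * x1 + w2 * x2 + b)
            w1 += lr * error * x1
            w2 += lr * error * x2
            b += lr * error
    return w1, w2, b

def perceptron_errors(weights, data):
    """Count misclassified examples for a trained single unit."""
    w1, w2, b = weights
    return sum(step(w1 * x1 + w2 * x2 + b) != t for (x1, x2), t in data)

def two_layer_xor(x1, x2):
    """Hidden layer: an OR unit and an AND unit; output fires for OR-but-not-AND."""
    h_or = step(x1 + x2 - 0.5)
    h_and = step(x1 + x2 - 1.5)
    return step(h_or - h_and - 0.5)

print("perceptron errors:", perceptron_errors(train_perceptron(XOR), XOR))
print("two-layer outputs:", [two_layer_xor(*x) for x, _ in XOR])
```

The hidden layer is what makes the difference: it re-describes the input in terms of new features (OR and AND) in which the problem becomes linearly separable.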

It also seemed to me that neural networks were overly complex formal systems; at one point I even tried to develop my own alternative. Still, I supported people at the neural network research center and published their papers in my journal, Complex Systems.

There were indeed some practical applications of neural networks, for example optical character recognition, but they were few and scattered. Years passed, and little new seemed to happen in the field.

Machine learning

Meanwhile, we were building a powerful set of applied data-analysis algorithms in Mathematica and what became the Wolfram Language. A few years ago we decided it was time to go further and integrate highly automated machine learning into the system. The idea was to create very powerful, general functions, such as Classify, which categorizes things of any kind: say, which photos were taken during the day and which at night, the sounds of different musical instruments, the importance of email messages, and so on.
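Classify chooses among many methods automatically, and its internals are not described here. As a deliberately tiny sketch of what "learning from examples" means in the day-versus-night case, the Python below assumes each photo has already been reduced to one number, its average brightness on a 0 to 255 scale, and learns a threshold halfway between the two class means. The brightness values are invented for illustration, and `train_threshold` is a hypothetical helper, not part of any Wolfram API.

```python
def train_threshold(examples):
    """Learn a 1-D decision rule from (brightness, label) pairs.

    The threshold is placed halfway between the mean brightness of the
    'day' examples and the mean brightness of the 'night' examples.
    """
    day = [b for b, label in examples if label == "day"]
    night = [b for b, label in examples if label == "night"]
    threshold = (sum(day) / len(day) + sum(night) / len(night)) / 2
    return lambda brightness: "day" if brightness > threshold else "night"

# Hypothetical training data: average brightness of each photo and its label.
training = [(180, "day"), (210, "day"), (165, "day"),
            (30, "night"), (55, "night"), (40, "night")]
day_or_night = train_threshold(training)
print(day_or_night(150), day_or_night(60))
```

A real system would use many features per photo and choose the method automatically; the sketch only shows the shape of the idea: examples in, a decision rule out.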

We use many modern methods. But, more importantly, we aimed for complete automation, so that users need not know anything about machine learning at all: they just call Classify.

At first I was not sure it would work. But it does, and very well.

You can use almost anything as training data, and the Wolfram Language builds the classifiers automatically. We are also building in more and more ready-made classifiers, for example for languages and for country flags:

![In[4]:= Classify["Language", {"欢迎光临", "Welcome", "Bienvenue", " ", "Bienvenidos"}]](https://habrastorage.org/getpro/habr/post_images/d19/8e2/cfc/d198e2cfc0ed5b3636293646831d5fd2.png)

![In[5]:= Classify["CountryFlag", {images: flags}]](https://habrastorage.org/getpro/habr/post_images/6bb/57a/fdd/6bb57afddf560c433f94bd162cf161b9.png)

Some time ago we decided it was time to tackle a large-scale classification problem: image identification. ImageIdentify is the result.

All this is connected with attractors.

What is image identification? The world contains a vast number of different things that people have given names to. Identification means determining which of these things an image shows. Put more formally, it means mapping every possible image to the symbolic name of an object drawn from some fixed set.

We have no precise way to define, say, a chair. But we can give many examples of what a chair looks like, in effect saying: “anything that looks like this should be identified as a chair.” We want every image containing something chair-like to map to the word chair, and other images not to.
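One simple way to turn "anything that looks like this is a chair" into a program is nearest-neighbour matching: store labeled examples, give a new input the label of the closest example, and give no label at all if nothing is close enough. The Python sketch below assumes each image has already been reduced to a short feature vector; the vectors, labels and distance cutoff are invented for illustration, and this is not how ImageIdentify works internally.

```python
import math

# Hypothetical labeled examples: (feature vector, name).
EXAMPLES = [
    ((0.9, 0.1, 0.2), "chair"),
    ((0.8, 0.2, 0.1), "chair"),
    ((0.1, 0.9, 0.3), "anteater"),
    ((0.2, 0.8, 0.4), "anteater"),
]

def nearest_label(features, examples=EXAMPLES, max_distance=0.5):
    """Return the name of the closest example, or None if none is within max_distance."""
    best_dist, best_name = min(
        (math.dist(features, vec), name) for vec, name in examples
    )
    return best_name if best_dist <= max_distance else None

print(nearest_label((0.85, 0.15, 0.15)))  # close to the chair examples
print(nearest_label((0.5, 0.5, 5.0)))     # far from every example, so no label
```

The cutoff implements the second half of the requirement: inputs that resemble none of the examples are left unmatched rather than forced onto the nearest name.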

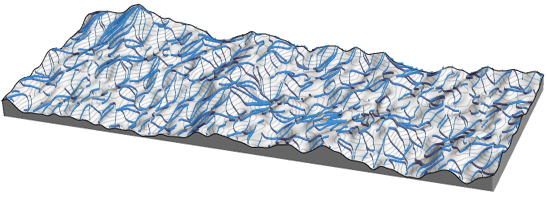

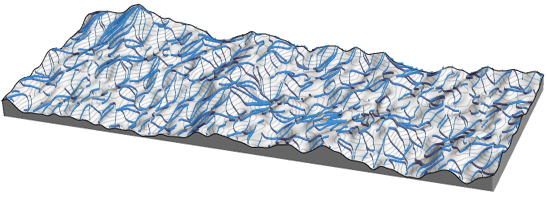

Many systems show this kind of “attractor” behavior. A physical example is a mountainside. Raindrops may fall anywhere on the mountain, but (at least in an idealized model) they flow down to one of a limited number of lowest points. Drops that land near each other tend to reach the same point, while drops that land far apart may end up at different points.
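The mountainside picture can be made concrete with a toy one-dimensional landscape with two valleys. In the Python sketch below, an illustration of the analogy and not anything from ImageIdentify, the height is h(x) = (x^2 - 1)^2, with valley floors at x = -1 and x = +1 and a ridge at x = 0. Each "raindrop" repeatedly takes a small step downhill; the step size and number of steps are arbitrary choices.

```python
def slope(x):
    """Derivative of the height h(x) = (x**2 - 1)**2."""
    return 4 * x * (x**2 - 1)

def flow_downhill(x, step=0.01, iterations=2000):
    """Let a raindrop starting at x move repeatedly in the downhill direction."""
    for _ in range(iterations):
        x -= step * slope(x)
    return x

for start in [-1.8, -0.4, 0.3, 0.6, 1.7]:
    print(f"drop at {start:+.1f} ends near {flow_downhill(start):+.3f}")
```

Every drop that starts on the same side of the ridge ends at the same valley floor: each valley is an attractor, and the set of starting points that flow into it is its basin.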

Raindrops are like images, and the points at the foot of the mountain are like kinds of objects. Of course, raindrops are physical objects moving under gravity, whereas images consist of pixels. So instead of physical motion, we have to think about how those digital values are transformed by a program.

Exactly the same kind of attractor behavior occurs there. For example, there are many cellular automata in which each step may change the colors of a few neighboring cells, but which always end up in some fixed, steady state. (Most cellular automata actually show more interesting behavior that never settles down, but then they could not serve as an analogy for recognition.)

So what happens if we take an image and apply a cellular automaton rule to it? In fact, many standard image-processing operations (whether performed by computers or, apparently, in human vision) are essentially two-dimensional cellular automaton rules.

It is easy to make a cellular automaton pick out certain features of an image, such as dark spots. But image identification requires much more. Going back to the mountain analogy, we need to sculpt the whole mountainside so that drops falling on each part of it flow down to the right place at its base.
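Here is a small example of a two-dimensional cellular automaton used as an image operation, our own illustration rather than code from the post. Each cell of a black-and-white image (1 = dark, 0 = light) becomes dark exactly when at least 5 of the 9 cells in its 3x3 neighbourhood, itself included, are dark. This removes isolated specks and fills small holes, and the loop stops once the grid reaches a steady state. The grid wraps around at its edges, an assumption made to keep boundary handling simple.

```python
def majority_step(grid):
    """One synchronous update: a cell is dark (1) if >= 5 of its 3x3 block are dark.
    Edges wrap around (the grid is treated as a torus)."""
    h, w = len(grid), len(grid[0])
    return [
        [
            1 if sum(grid[(y + dy) % h][(x + dx) % w]
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1)) >= 5 else 0
            for x in range(w)
        ]
        for y in range(h)
    ]

def run_to_steady_state(grid, max_steps=100):
    """Apply majority_step until the grid stops changing (or max_steps is hit).
    Returns the final grid and the number of updates that changed it."""
    for steps in range(max_steps):
        new = majority_step(grid)
        if new == grid:
            return grid, steps
        grid = new
    return grid, max_steps

# Left half dark, right half light, plus one stray dark speck and one light hole.
clean = [[1] * 4 + [0] * 4 for _ in range(8)]
noisy = [row[:] for row in clean]
noisy[2][6] = 1   # speck in the light region
noisy[5][1] = 0   # hole in the dark region

result, steps = run_to_steady_state(noisy)
print("cleaned:", result == clean, "after", steps, "update(s)")
```

In the language of the mountain analogy, the clean two-region image is an attractor, and the slightly noisy image lies in its basin.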

Automatically created programs

So how do we do this? For digital data such as images, nobody knows how to do it in one go. It is an iterative process: we start from some initial shape and keep changing it, gradually molding it into what we need.

Much of this process is opaque to us. I have thought hard about how it relates to discrete programs such as cellular automata and Turing machines, and I am sure there are very interesting results to be had there. But I never figured out exactly how.

For systems with a finite number of real-valued parameters, there is an excellent method called back propagation, which is based on calculus. It is essentially a version of a very simple method, gradient descent, in which derivatives are computed and then used to decide how to change the parameters so that the system exhibits the desired behavior.
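As a minimal sketch of the idea (not the training code behind ImageIdentify), the Python below fits a single sigmoid neuron to the logical AND function by gradient descent. For one neuron, back propagation reduces to a single application of the chain rule: with a cross-entropy loss, the derivative of the loss with respect to the neuron's weighted input is simply the prediction minus the target. The learning rate and number of epochs are arbitrary choices.

```python
import math

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

def sigmoid(z):
    return 1 / (1 + math.exp(-z))

def train(data, lr=1.0, epochs=5000):
    """Gradient descent on the mean cross-entropy loss of one sigmoid neuron."""
    w1 = w2 = b = 0.0
    n = len(data)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), y in data:
            p = sigmoid(w1 * x1 + w2 * x2 + b)
            delta = p - y          # dLoss/dz for sigmoid + cross-entropy
            g1 += delta * x1       # chain rule: dz/dw1 = x1
            g2 += delta * x2       # chain rule: dz/dw2 = x2
            gb += delta            # chain rule: dz/db = 1
        # Move each parameter a small step against its averaged gradient.
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b

w1, w2, b = train(AND)
predictions = [round(sigmoid(w1 * x1 + w2 * x2 + b)) for (x1, x2), _ in AND]
print(predictions)
```

In a multi-layer network the same chain rule is applied layer by layer, passing the error signal backwards; that backward pass is what gives back propagation its name.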

So what kind of system should we use? Somewhat surprisingly, the main choice is neural networks. The name evokes the brain and something biological. But for our purposes, neural networks are formal computational systems built from compositions of functions of many arguments, with continuous parameters and discrete thresholds.

How easy is it to get such a neural network to do something interesting? It is hard to say in general. For at least 20 years I believed that neural networks could only do things that could also be done by other, simpler methods.

But a few years ago, everything began to change. Now one often hears about yet another successful application of neural networks to practical problems such as image identification.

How did this happen? Computers (and especially linear algebra on GPUs) became fast enough that, with a variety of algorithmic tricks (some of them actually involving cellular automata), it became practical to train neural networks with millions of neurons (and yes, these are now deep neural networks with many layers). This gave rise to many new applications.

I don’t think it is a coincidence that this happened just as the number of artificial neurons became comparable to the number of neurons in the relevant parts of our brains.

The point is not that the number itself matters. Rather, if we are tackling tasks that the human brain performs, such as image identification, it is not surprising that we need a system of comparable scale.

People can easily recognize thousands of different kinds of things, roughly as many as there are picturable nouns in a language. Other animals distinguish far fewer. But if we want to identify images the way people do, effectively mapping them to words in human languages, we confront the full scale of the problem, and the key to solving it is a neural network on the scale of the human brain.

There are certainly differences between computational and biological neural networks, although once a network is trained, the process of getting a result from an image seems quite similar. But the methods used to train computational neural networks are significantly different from those that biological networks are likely to use.

Still, while developing ImageIdentify, I was struck by how much its behavior resembled that of a biological neural network. For one thing, the number of training images (several tens of millions) is comparable to the number of objects people encounter in the first couple of years of life.
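A rough back-of-the-envelope check of that order of magnitude, under our own assumptions rather than figures from the post: suppose a young child takes in about one distinct view of an object per second during roughly 12 waking hours a day. Over two years that gives

$$
2 \times 365 \times 12 \times 3600 = 31\,536\,000 \approx 3 \times 10^{7}
$$

views, which is indeed in the range of tens of millions. Different assumptions shift the number, but not easily by more than an order of magnitude.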

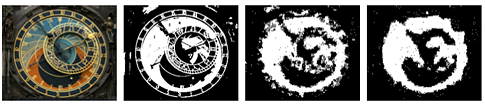

There were also features of training that closely resemble what happens in biological neural networks. For example, at one point we mistakenly left images of human faces out of the training set. When we showed the system a picture of Indiana Jones, it did not register that there was a face at all and reported that the picture showed a hat. Perhaps this is not surprising, but it is strikingly reminiscent of the classic experiment in which kittens that had only ever seen vertical stripes could not then perceive horizontal ones.

Presumably, like the brain, the ImageIdentify neural network has many layers containing different kinds of neurons (the overall structure, of course, is nicely described by a symbolic Wolfram Language expression).

It is hard to say anything meaningful about what is happening inside the network. But if you look at the first few layers, you can pick out some of the features the system detects, and they may well be quite similar to the features detected by real neurons in the primary visual cortex.

I have long been interested in things like visual texture recognition (and in identifying texture primitives, analogous to primary colors), and I think we can now learn a lot about this. I also think it would be very interesting to look into the deeper layers of the network and see what is happening there. We might find concepts that describe classes of objects, including ones for which human languages do not yet have words.

As with many other Wolfram Language projects, building ImageIdentify required doing many different things: working with a huge number of training images; developing an ontology of picturable objects and mapping it into the Wolfram Language; analyzing the dynamics of neural networks with methods from physics; painstakingly optimizing parallel code; even some A New Kind of Science-style exploration of programs in the computational universe; and making many judgment calls about how to expose functionality that would be useful in practice.

At the very beginning, I was not sure ImageIdentify would work at all. Early on, the fraction of images it got completely wrong was very high. But step by step we got closer to the point where ImageIdentify could give genuinely useful results.

But there were still many unsolved problems. The system handled some things well but stumbled badly on others. We would change something, tune something, and then there would be new failures and a flurry of messages along the lines of “We lost the anteaters again!” (meaning that images ImageIdentify used to identify correctly as anteaters were now being identified as something completely different).

Debugging ImageIdentify was a fascinating process. What counts as reasonable input? What counts as reasonable output? How should one choose between a more general, more reliable answer and a more specific, less reliable one (just a dog, a hunting dog, or a beagle)?
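One simple way to make that trade-off explicit is sketched below in Python, as an illustration only and not ImageIdentify's actual logic: sum a classifier's probabilities up a small taxonomy, so that a broad category is always at least as likely as any of its subcategories, then report the most specific label whose total probability reaches a chosen threshold. The taxonomy, the probabilities and the thresholds are all made up.

```python
# Hypothetical taxonomy: child -> parent (None marks the root).
PARENT = {
    "beagle": "hunting dog",
    "basset hound": "hunting dog",
    "hunting dog": "dog",
    "poodle": "dog",
    "dog": None,
}

def depth(label):
    """Number of ancestors above a label in the taxonomy."""
    d = 0
    while PARENT[label] is not None:
        label = PARENT[label]
        d += 1
    return d

def aggregate(leaf_probs):
    """Give every node the summed probability of all leaves beneath it."""
    totals = {label: 0.0 for label in PARENT}
    for leaf, p in leaf_probs.items():
        node = leaf
        while node is not None:
            totals[node] += p
            node = PARENT[node]
    return totals

def most_specific(leaf_probs, threshold=0.6):
    """Deepest label whose aggregated probability reaches the threshold."""
    totals = aggregate(leaf_probs)
    confident = [label for label, p in totals.items() if p >= threshold]
    return max(confident, key=depth) if confident else None

# Made-up classifier output over the leaf labels.
probs = {"beagle": 0.45, "basset hound": 0.30, "poodle": 0.25}
print(most_specific(probs))                 # beagle alone is too uncertain here
print(most_specific(probs, threshold=0.4))  # a lower bar allows a more specific answer
```

Raising the threshold buys reliability at the cost of specificity, which is exactly the dog, hunting dog, or beagle choice described above.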

Some things at first seemed utterly crazy: a pig identified as a harness, a piece of stonework identified as a moped. But the good news was that we always found a reason, typically some irrelevant object that kept appearing in the training images (the only masonry ImageIdentify had seen was Asian masonry, with mopeds constantly in the background).

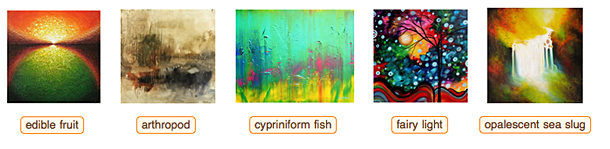

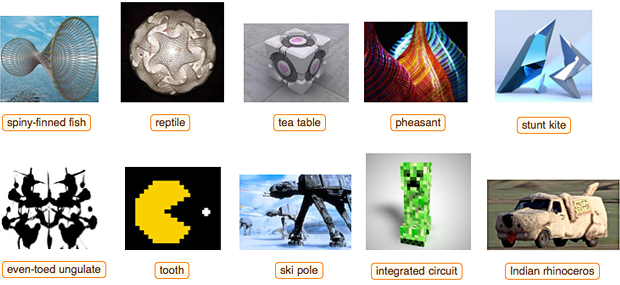

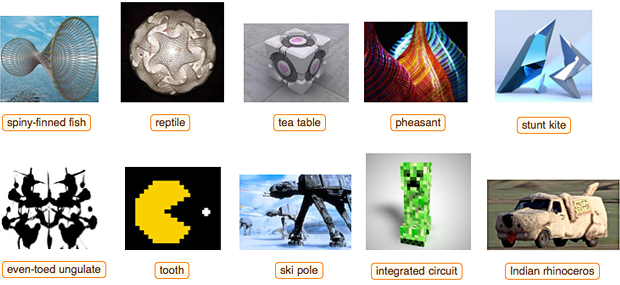

To test the system, I often tried it on unusual images.

And I was struck by what I found. Yes, ImageIdentify can get things wrong. But its mistakes seemed very understandable and, in a sense, human. ImageIdentify seemed to capture remarkably well some aspects of how people themselves recognize images.

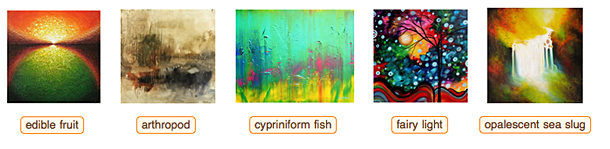

What about abstract art? It is a kind of Rorschach test for machines and people alike, and it offers an interesting glimpse into how ImageIdentify works.

Projects like ImageIdentify are never finished. But a couple of months ago (see the Habrahabr article " Stephen Wolfram: Frontiers of computational thinking (report from the SXSW festival) ") we released a preliminary version in the Wolfram Language. Today we have released an updated version and used it as the basis for the Wolfram Language Image Identification Project.

We will continue training and developing ImageIdentify, paying particular attention to feedback and statistics from the site. As with Wolfram|Alpha for natural language understanding, without actual usage by people there is no realistic way to assess progress, or even to define what the goals for identifying real-world images should be.

I must say I find it fun just to play with the Wolfram Language Image Identification Project. It is exciting to see, after so many years, artificial intelligence that actually works. And when ImageIdentify responds to an unusual or tricky image, it often feels as if a person were making a guess, or cracking a joke.

Under the hood, of course, it is just code, with some very simple loops. In fact, it is quite similar to the code I wrote for my own neural networks in the 1980s (except that now it is Wolfram Language code rather than low-level C).

This is a very unusual episode in the history of ideas: neural networks have been studied for more than seventy years, yet interest in them has repeatedly faded. Now they are what has brought success on such a quintessential artificial intelligence problem as image identification. I suspect pioneers of neural networks such as Warren McCulloch and Walter Pitts would find little surprising about what lies at the core of the Wolfram Language Image Identification Project, though they might well be amazed that it took 70 years to get here.

But for me, much more important is how things like ImageIdentify can be integrated into the symbolic structure of the Wolfram Language. What ImageIdentify does is something people have done for generations. But symbolic language gives us a way to represent the whole accumulated body of human intellectual achievement. And making all of that computational is, I believe, something so ambitious that I am only now beginning to grasp its significance.

For now, I hope you enjoy the Wolfram Language Image Identification Project. Think of it as a celebration of reaching a new milestone in artificial intelligence. Think of it as a break for the mind that prompts thoughts about the future of AI. But do not forget what is, in my view, most important: it is also practical technology that you can use right now in the Wolfram Language and deploy wherever you want.


I express my deep gratitude to Kirill Guzenko for his help in translating.

“What is depicted in this picture?” People almost immediately can answer this question, and earlier it seemed that this was an impossible task for computers. For the past 40 years I have known that computers will learn how to solve such problems, but did not know when this would happen.

I created systems that give computers different components of the intellect, and these components are often far beyond human capabilities. From a long time ago we integrate the development of artificial intelligence in Wolfram Language .

And now I am very pleased to report that we have crossed the new frontier: a new Wolfram Language function - ImageIdentify , which you can ask - “what is shown in the picture?”, Was released and get an answer.

')

Today we are launching the Wolfram Language Image Identification Project , an image identification project that works over the Internet. You can send an image from the phone’s camera, from the browser, or drag-and-drop it into the appropriate form, or simply upload the file. After that, ImageIdentify will return its result:

Content

Now in Wolfram Language

Personal background

Machine learning

All this is connected with attractors.

Automatically created programs

Why now?

I see only a hat

We lost anteaters!

Back to nature

Of course, the answer will not always be correct, but in most cases the function works very well. And the fact that if the function makes any mistakes is remarkable, they are similar to the ones that a person would make.

This is a good practical example of artificial intelligence. However, for me, the more important point is that we integrate such interaction with artificial intelligence directly into Wolfram Language - another powerful stone of the foundation of the knowledge-based programming paradigm.

Now in Wolfram Language

In order to recognize an image when working with the Wolfram Language, you just need to apply the ImageIdentify function to this image:

![In [1]: = ImageIdentify [image: giant anteater] In[1]:= ImageIdentify[image:giant anteater]](https://habrastorage.org/getpro/habr/post_images/a15/ec9/a68/a15ec9a689f0db2f132ff7d962dd601c.png)

At the exit, you get some character object with which you can continue to work in the Wolfram Language. As in this example, find out that this is an animal, a mammal, and so on. Or just ask the definition:

![In [2]: = giant anteater [& quot; Definition & quot;] In[2]:= giant anteater ["Definition"]](https://habrastorage.org/getpro/habr/post_images/374/b9b/fd6/374b9bfd6d298d9fbf8c1693519e21f3.png)

Or, for example, generate a cloud from the words found in the Wikipedia article devoted to the object in the picture:

![In [3]: = WordCloud [DeleteStopwords [WikipediaData [giant anteater]]] In[3]:= WordCloud[DeleteStopwords[WikipediaData[giant anteater]]]](https://habrastorage.org/getpro/habr/post_images/723/9ff/c2a/7239ffc2a8002c8cbe51e9fe46876097.png)

If you have an array of photos, then you can almost instantly write a program on the Wolfram Language, which, for example, would give out statistics about which animals, devices, boards - no matter what - how often they are found in this array .

With the help of the ImageIdentify function, built directly into the Wolfram Language, it is very easy to create some kind of API, applications in which it is used. And using the Wolfram Cloud is very easy to create websites - like, for example, the Wolfram Language Image Identification Project website.

Personal background

Personally, I waited a long time for ImageIdentify . Somewhere 40 years ago I was reading a book called The Computer and the Brain , which was penetrated by a thought - sooner or later we will create artificial intelligence, most likely by emulating electrical connections in the brain. And in 1980, after I had achieved some success with my first computer language , I began to reflect on what needs to be done in order to create a full-fledged artificial intelligence.

I was inspired by the ideas that were later implemented in the Wolfram Language - an idea in symbolic comparison with samples , which, I thought, could reflect some aspects of human thinking. But I knew that if image recognition is based on pattern matching, then something else is needed - a fuzzy juxtaposition.

I tried to create fuzzy caching algorithms. And he never stopped thinking about how the brain realizes all this. We must borrow its principles of operation. And this led me to begin the study of idealized neural networks and their behavior.

In the meantime, I also thought about some fundamental issues in the natural sciences - about cosmology , about how large-scale structures arise in our universe, how particles self-organize.

And at some point I realized that both neural networks and self-attracting particle clusters are examples of systems that, although they had simple basic components, for some reason achieved complex collective behavior. Digging deeper, I got to cellular automata , which led me to all the ideas and discoveries that resulted in the book A New Kind of Science .

So what about neural networks? They were not my favorite systems — they seemed too arbitrary and complex in their structure compared to other systems that I researched in the computing world. However, I continued to think about them again and again, conducted simulations in order to better understand the basics of their behavior, tried to use them for some specific tasks, like, for example, a fuzzy comparison with a sample:

The history of neural networks was associated with a series of ups and downs. Networks suddenly appear in the 1940s. However, by the 60s, interest in them had decreased, and there was a perception that they were rather useless and there was little that could be done with their help.

However, this was true only for a single-layer perceptron (one of the first models of a neural network). The revival of interest came at the beginning of the eighties - models of neural networks with a hidden layer appeared. And despite the fact that I know many of the foremost in this direction, I still remained somewhat skeptical. I did not leave the impression that those problems that are solved using neural networks can be solved much more easily in many other ways.

And it seemed to me that neural networks were too complex formal systems; somehow I even tried to develop my own alternative . However, I still supported people at the neural network research center and included their articles in my journal, Complex Systems .

Indeed, there were some practical applications for neural networks — for example, visual character recognition — however, there were few of them and they were scattered. Years passed and, it seemed, almost nothing new appeared in this area.

Machine learning

In the meantime, we were developing a set of powerful applied data analysis algorithms in Mathematica and then turned into the Wolfram Language . And a few years ago we came to the conclusion that it was time to move on and try to integrate highly automated machine learning into the system. The idea was to create very powerful and common functions; for example, the Classify function, which will categorize things of any kind: say, which photo is the day, and at what night , the sounds of various musical instruments, the importance of email messages, and so on.

We use a large number of modern methods. But, more importantly, we tried to achieve complete automation, so that users could not know anything about machine learning at all: just call Classify .

At first I was not sure that it would work. But it works, and very well.

You can use almost anything as training data, and the Wolfram Language will work with classifiers automatically. We also introduce more and more various classifiers already built in: for example, for languages, flags of countries:

![In [4]: = Classify [& quot; Language & quot ;, {& quot; 欢迎 光临 & quot ;, & quot; Welcome & quot ;, & quot; Bienvenue & quot ;, & quot; Welcome & quot ;, & quot; Bienvenidos & quot;}] In[4]:= Classify["Language", {"欢迎光临", "Welcome", "Bienvenue", " ", "Bienvenidos"}]](https://habrastorage.org/getpro/habr/post_images/d19/8e2/cfc/d198e2cfc0ed5b3636293646831d5fd2.png)

![In [5]: = Classify [& quot; CountryFlag & quot ;, {images: flags}] In[5]:= Classify["CountryFlag", {images:flags}]](https://habrastorage.org/getpro/habr/post_images/6bb/57a/fdd/6bb57afddf560c433f94bd162cf161b9.png)

And some time ago we came to the conclusion that it was time to tackle the large-scale problem of classification - image recognition. And our result is ImageIdentify .

All this is connected with attractors.

What is image recognition? In the world there are a large number of the most diverse things that people gave the name. The essence of recognition is to determine which of these things are represented in this image. If it is more formal - to display all possible images in a certain set of object names in symbolic form.

That is, we have no way to describe, for example, a chair. But we can give many examples of how a chair looks, as if to say: “everything that looks like this here, let it be defined by the system as a chair.” That is, we want all images that contain something similar to a chair to have a match with the word chair, while other images would not have such a match.

There are many different systems with similar " attractor " behavior. As an example from the physical world, one can cite the mountainside. Raindrops can fall on any part of the mountain, however (at least in the ideal model) they will flow down to the lowest possible points. Drops that are nearby, will tend to the same points. Far away drops will flow to different points.

Raindrops are like images, and the low points at the foot of the mountain are like the kinds of objects. But raindrops are physical objects moving under gravity, whereas images are made of digital pixels. So instead of physical motion, we have to think about how a program processes those digital values.

Exactly the same kind of attractor behavior can occur in programs. For example, there are many cellular automata in which one can change the colors of several cells in the initial condition and still end up in the same fixed final state. (Most cellular automata actually show more interesting behavior that never settles into a final state, but it is less clear how to use that as an analogy for identification.)
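A minimal Python sketch of such an attractor in a cellular automaton. The majority rule used here is my own choice for illustration, not a rule taken from the article: each cell becomes whatever value the majority of itself and its two neighbors has, with cells beyond the edges treated as 0. The two initial conditions below differ in five cells yet settle into the same fixed final state.

```python
def majority_step(cells):
    """One update: each cell takes the majority value of itself and its two
    neighbors; cells beyond the edges count as 0."""
    padded = [0] + cells + [0]
    return [1 if padded[i - 1] + padded[i] + padded[i + 1] >= 2 else 0
            for i in range(1, len(padded) - 1)]

def run_to_steady_state(cells, max_steps=100):
    """Apply majority_step until the state stops changing; return (state, steps)."""
    for step in range(max_steps):
        nxt = majority_step(cells)
        if nxt == cells:
            return cells, step
        cells = nxt
    raise RuntimeError("state did not settle within max_steps")

a = [1, 1, 0, 1, 1, 0, 0, 0, 1, 0, 0]
b = [1, 1, 1, 1, 0, 1, 0, 0, 0, 0, 1]
print(run_to_steady_state(a))
print(run_to_steady_state(b))
```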

So what happens if we take an image and apply cellular automaton rules to it? In fact, some common image-processing operations (whether performed by computers or by human vision) are essentially just two-dimensional cellular automata.

It is easy to get cellular automata to pick out simple features of an image, such as dark spots. Real image identification requires much more. In terms of the mountain analogy, we need to sculpt the whole mountainside so that drops landing on the appropriate region of the mountain flow down to the appropriate point at its base.
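A sketch of a two-dimensional cellular automaton that keeps a dark spot and erases isolated noise. The threshold rule (a cell is dark at the next step if at least 4 of the 9 cells in its 3x3 block are dark now) is an assumption chosen for illustration; 1 means a dark pixel, 0 a light one, and off-grid cells count as light.

```python
def dark_count(grid, r, c):
    """Number of dark cells (value 1) in the 3x3 block centred on (r, c)."""
    rows, cols = len(grid), len(grid[0])
    return sum(grid[i][j]
               for i in range(max(r - 1, 0), min(r + 2, rows))
               for j in range(max(c - 1, 0), min(c + 2, cols)))

def threshold_step(grid, threshold=4):
    """A cell is dark next step if at least `threshold` cells of its 3x3 block are dark."""
    return [[1 if dark_count(grid, r, c) >= threshold else 0
             for c in range(len(grid[0]))]
            for r in range(len(grid))]

def find_dark_spots(grid, max_steps=50):
    """Iterate the rule until the grid stops changing (or max_steps is reached)."""
    for _ in range(max_steps):
        nxt = threshold_step(grid)
        if nxt == grid:
            return grid
        grid = nxt
    return grid

# A 7x7 image with a 3x3 dark spot plus one isolated dark pixel of "noise".
image = [[0] * 7 for _ in range(7)]
for r in range(1, 4):
    for c in range(1, 4):
        image[r][c] = 1
image[5][5] = 1

for row in find_dark_spots(image):
    print("".join("#" if v else "." for v in row))
```

The isolated pixel disappears after one step, while the 3x3 spot is a fixed point of the rule.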

Automatically created programs

So how do we do this? For digital data such as images, nobody knows how to do it in one go. It has to be an iterative process: we start with a rough initial shape and then gradually reshape it until it does what we need.

Much about this process is still unclear. I have thought a lot about how it applies to discrete programs such as cellular automata and Turing machines, and I am sure very interesting results can be obtained there. But I have never worked out exactly how.

For systems with continuous, real-valued parameters, there is an excellent method called backpropagation, which is based on calculus. It is essentially a version of a very simple method, gradient descent, in which one computes derivatives and then uses them to decide how to change the parameters so that the system moves closer to the desired behavior.
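A minimal sketch of gradient descent with derivatives obtained by the chain rule, the step that backpropagation repeats layer by layer. To keep it small there is only a single sigmoid neuron, trained on the logical OR of two inputs; the data, learning rate, and epoch count are illustrative assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Tiny training set: the logical OR of two inputs.
DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]

def train(data, learning_rate=1.0, epochs=5000):
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), y in data:
            p = sigmoid(w1 * x1 + w2 * x2 + b)
            # Chain rule: for cross-entropy loss with a sigmoid output,
            # dLoss/dz simplifies to (p - y); dz/dw_i = x_i and dz/db = 1.
            err = p - y
            g1 += err * x1
            g2 += err * x2
            gb += err
        n = len(data)
        # Gradient descent: move each parameter against its average derivative.
        w1 -= learning_rate * g1 / n
        w2 -= learning_rate * g2 / n
        b -= learning_rate * gb / n
    return w1, w2, b

w1, w2, b = train(DATA)
for (x1, x2), y in DATA:
    p = sigmoid(w1 * x1 + w2 * x2 + b)
    print((x1, x2), "target", y, "prediction", round(p, 3))
```

In a deep network the same chain-rule bookkeeping is carried backward through every layer, which is where the name "backpropagation" comes from.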

So what kind of system should we use? Perhaps surprisingly, the main choice is neural networks. The name evokes the brain and biology, but for our purposes neural networks are formal computational systems built by composing multi-argument functions that have continuous parameters and discrete thresholds.
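To make "a composition of multi-argument functions with continuous parameters and discrete thresholds" concrete, here is a tiny two-layer threshold network computing XOR. The weights are hand-picked for illustration rather than trained, and the helper names are hypothetical.

```python
def threshold_unit(weights, bias):
    """Return a 'neuron': a function of many arguments that outputs 1 if the
    weighted sum plus bias is positive, else 0 (a discrete threshold)."""
    def neuron(*inputs):
        total = sum(w * x for w, x in zip(weights, inputs)) + bias
        return 1 if total > 0 else 0
    return neuron

# Hand-picked (not trained) continuous parameters.
h_or = threshold_unit([1.0, 1.0], -0.5)   # fires if at least one input is 1
h_and = threshold_unit([1.0, 1.0], -1.5)  # fires only if both inputs are 1
out = threshold_unit([1.0, -1.0], -0.5)   # fires if OR holds but AND does not

def xor_network(x1, x2):
    # The whole network is just a composition of neuron functions.
    return out(h_or(x1, x2), h_and(x1, x2))

for x1, x2 in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x1, x2, "->", xor_network(x1, x2))
```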

How easy is it to train such a neural network to do interesting things? In the abstract, it is hard to say. For at least 20 years, my impression was that in practice neural networks could mostly do only things that were also fairly easy to do by other, simpler methods.

But a few years ago that began to change, and now one regularly hears about new successes in applying neural networks to practical problems such as image identification.

How did this happen? Computers (and especially linear algebra on GPUs) became fast enough that, with a variety of algorithmic tricks (some of them actually involving cellular automata), it became practical to train neural networks with millions of neurons (and yes, these are now "deep" networks with many layers). That has led to many new applications.

Why now?

I don’t think it’s a coincidence that this happened just when the number of artificial neurons became comparable to the number of neurons in the corresponding parts of our brains.

It is not that the number matters in itself. Rather, if we are trying to do tasks like image identification, which human brains do, it is not surprising that we need a system of comparable scale.

People can easily recognize thousands of different kinds of objects, roughly as many as there are picturable nouns in a language. Other animals distinguish far fewer. But if we want to identify images the way humans do, effectively mapping them to words that exist in human languages, we face the full scale of the problem, and the key to solving it seems to be a neural network on the scale of the human brain.

There are of course differences between computational and biological neural networks, although once a network is trained, the process of getting a result from an image seems rather similar. But the methods used to train computational neural networks are significantly different from whatever must be at work in biology.

Still, while developing ImageIdentify, I was amazed at how much its behavior resembled that of a biological neural network. For a start, the number of training images, a few tens of millions, is comparable to the number of objects people see in the first couple of years of their lives.

I see only a hat

There were also quirks of training that look very much like what happens with biological neural networks. For example, at one point we mistakenly left images of human faces out of the training set. When we then showed the system a picture of Indiana Jones, it did not register that there was a face at all and reported that the picture showed a hat. That is perhaps not surprising, but it is strikingly reminiscent of the classic experiment with kittens that were raised seeing only vertical stripes and afterwards could not see horizontal ones.

Presumably like the brain, the ImageIdentify neural network has many layers containing different kinds of neurons (the overall structure, of course, is neatly described by a symbolic Wolfram Language expression).

It is hard to say much about what is going on inside the network. But if one looks at the first few layers, one can recognize some of the features the system is picking out. And they seem remarkably similar to the features picked out by real neurons in the primary visual cortex.

I have long been interested in questions such as visual texture recognition (are there "texture primitives", analogous to primary colors?), and I suspect we will now be able to figure out a lot about this. I also think it will be very interesting to look at the deeper layers of the network and see what happens there. We may find concepts that actually describe classes of objects, including classes for which human languages do not yet have words.

We lost anteaters!

As with many other Wolfram Language projects, building ImageIdentify required many different things: curating a huge number of training images; developing an ontology of picturable objects and mapping it into the Wolfram Language; analyzing the dynamics of neural networks with methods from physics; painstakingly optimizing parallel code; even some exploration of programs in the computational universe in the style of A New Kind of Science; and a great many judgment calls about how to present the functionality so that it is actually useful in practice.

At the outset, I was not sure ImageIdentify would work at all, and early on the fraction of completely wrong identifications was very high. But step by step we got closer, until ImageIdentify started producing genuinely useful results.

There were still many problems to solve. The system handled some things well but failed badly on others. We would adjust something, new failures would appear, and there would be a flurry of messages along the lines of "We lost the anteaters again!" (meaning that images ImageIdentify used to identify correctly as anteaters were now being identified as something completely different).

Debugging ImageIdentify was a fascinating process. What counts as a reasonable input? What counts as a reasonable output? How should one trade off a more general but more reliable answer against a more specific but less reliable one (just "dog", or "hunting dog", or "beagle")?
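One simple way to frame the general-versus-specific trade-off, sketched under assumptions: add up the probabilities of all the specific labels under each more general label, then report the most specific label whose total clears a confidence threshold. The label hierarchy, the probabilities, and the threshold rule are all hypothetical; this is not a description of how ImageIdentify actually makes the choice.

```python
# Hypothetical label hierarchy: child -> parent (None marks a top-level label).
PARENT = {
    "beagle": "hunting dog",
    "foxhound": "hunting dog",
    "hunting dog": "dog",
    "poodle": "dog",
    "dog": None,
    "cat": None,
}

# Made-up classifier output over the most specific labels.
PROBS = {"beagle": 0.4, "foxhound": 0.25, "poodle": 0.15, "cat": 0.2}

def ancestors(label):
    """Yield the label itself followed by each of its more general ancestors."""
    while label is not None:
        yield label
        label = PARENT[label]

def choose_label(leaf_probs, threshold):
    """Return the most specific label whose total probability is at least threshold."""
    totals = {}
    for leaf, p in leaf_probs.items():
        for label in ancestors(leaf):
            totals[label] = totals.get(label, 0.0) + p
    candidates = [label for label, p in totals.items() if p >= threshold]
    if not candidates:
        return None
    # More ancestors means more specific; ties are broken by probability.
    return max(candidates, key=lambda lab: (len(list(ancestors(lab))), totals[lab]))

for t in (0.35, 0.6, 0.75, 0.9):
    print(t, choose_label(PROBS, t))
```

Raising the threshold trades specificity for reliability: with these made-up numbers, 0.35 gives "beagle", 0.6 gives "hunting dog", 0.75 gives just "dog", and 0.9 gives no answer at all.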

Some results seemed completely crazy at first: a pig identified as a "harness", a piece of stonework identified as a "moped". But the good news was that we always found the cause, typically some irrelevant object that kept appearing in the training images (for example, the only stonework ImageIdentify had seen was in Asian street scenes, which consistently had mopeds in them).

To test the system, I often tried it on unusual images.

I was struck by what I found. Yes, ImageIdentify can be wrong. But its mistakes seemed very understandable and, in a sense, human. ImageIdentify seemed to capture some essential aspects of how people themselves identify images.

What about abstract art? It is something like a Rorschach test for machines as well as people, and it gives a very interesting glimpse into the workings of ImageIdentify.

Back to nature

Projects like ImageIdentify are never finished. But a couple of months ago (see the article on Habrahabr "Stephen Wolfram: Frontiers of computational thinking (report from the SXSW festival)") we released a preliminary version in the Wolfram Language. Today we have released a new version and used it as the basis for the Wolfram Language Image Identification Project.

We will continue to train and develop ImageIdentify, paying particular attention to feedback and statistics from the site. As with Wolfram|Alpha in natural language understanding, without real use by people there is no way to realistically measure progress, or even to define what the goals for identifying real-world images should be.

I must say that I find it fun just to play with the Wolfram Language Image Identification Project. It is exciting to see, after so many years, an artificial intelligence that really works. And when ImageIdentify responds to an unusual or difficult image, it often feels almost as if a person were making a guess, or cracking a joke.

Underneath, of course, it is just code, with very simple loops that are much the same as those in my own neural network programs from the 1980s (except that now they are Wolfram Language functions rather than low-level C code).

This is a very unusual example in the history of ideas. Neural networks have been studied for some 70 years, and interest in them has repeatedly faded. Yet they are what has brought success on such a quintessential artificial intelligence problem as image identification. I expect that pioneers of neural networks such as Warren McCulloch and Walter Pitts would find little surprising about the core of what the Wolfram Language Image Identification Project does, though they might well be amazed that it took 70 years to get here.

To me, though, the bigger story is how things like ImageIdentify can be integrated into the symbolic structure of the Wolfram Language. What ImageIdentify does is something humans have always done. But a symbolic language gives us a way to represent the whole intellectual edifice that humanity has built up. Making all of that computational is, I believe, something so significant that I am only now beginning to grasp its implications.

For now, though, I hope you will enjoy the Wolfram Language Image Identification Project. Think of it as a celebration of the new frontier artificial intelligence has reached. Think of it as an intellectual diversion that prompts thoughts about the future of artificial intelligence. But do not forget what I consider most important: it is also practical technology that you can use here and now in the Wolfram Language, and deploy wherever you want.

Finally, from the Wolfram Blog team...

Once you land on Habrahabr, you catch the bug (in the good sense of the word), and ImageIdentify seems to know why.

Source: https://habr.com/ru/post/258003/
