Microsoft SQL Server Data Tools

In this article I would like to tell about a set of useful additions to Visual Studio, which can greatly facilitate the development of databases based on MS SQL Server.

The main advantages of using SSDT I would highlight the following:

Of course, the advantages of using SSDT do not end there, but the rest is not as impressive as the one mentioned above. If you are interested in how to take advantage of these and other benefits - I ask for cat.

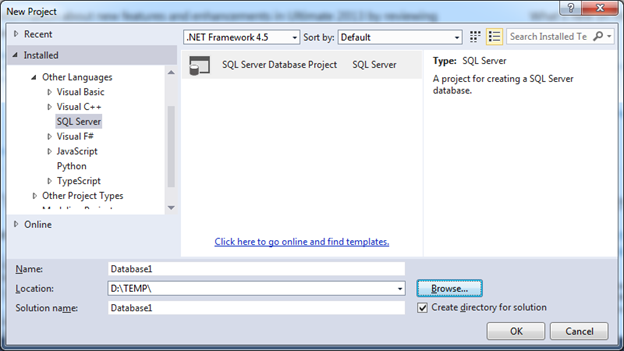

Everything you need to install can be found on the download page in the Data Developer Center. Having chosen the necessary version, you can easily install the tools on your computer and describe it does not see the point. After installation, a new project type will appear in the create new project window:

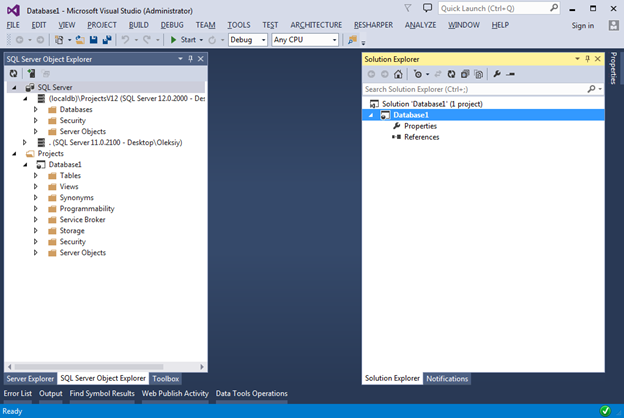

Creating a new project you will see the following:

')

In the SQL Server Object Explorer panel (menu View -> SQL Server Object Explorer) we see something very similar to Object Explorer in SQL Server Management Studio, from which everything that does not make much sense at the database development stage is removed.

By connecting to an existing database, you can develop a database in the so-called Connected mode. This is not much different from the classical approach used in SQL Server Management Studio and will not be discussed in this article.

This development mode is most interesting to us, since It is this one that allows you to get the main benefits of using SSDT

The work is based on a very simple idea - to allow developers to store all the scripts for creating database objects (tables, views, store procedures, etc.) in a project of a special type as part of an existing or new solution (solution). Based on scripts, Visual Studio can generate a DACPAC file, which is essentially a zip archive with all t-sql scripts. Having a DACPAC file, it will be possible to publish (publish) the required database instance by comparing the schema described in DACPAC and the schema in the target database. During the publication, special mechanisms make comparisons, as a result of which migration scripts are automatically created to apply changes without losing data.

In order to see this in action, I suggest to look at the following examples.

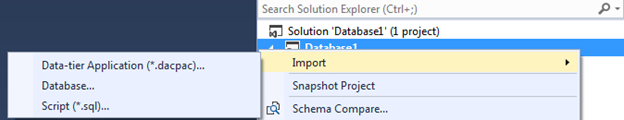

Let's start with the possibility of import. Call the project context menu and see 3 possible options:

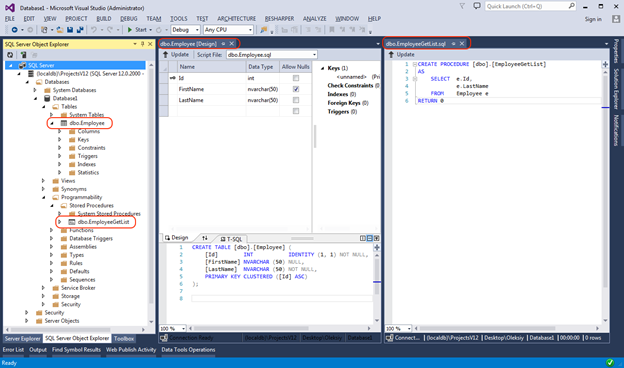

We will select the “Database ...” option and import the local database. It contains one table and one stored procedure. In SQL Server Object Explorer, the source database looks like this:

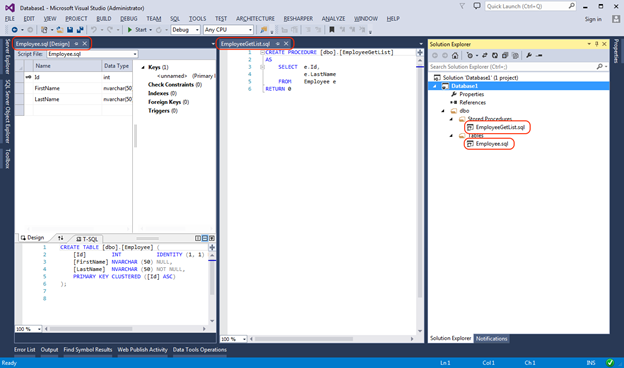

After the import is complete, we will see an extremely similar picture, with the only difference that the database structure will be presented in Solution Explorer as * .sql files.

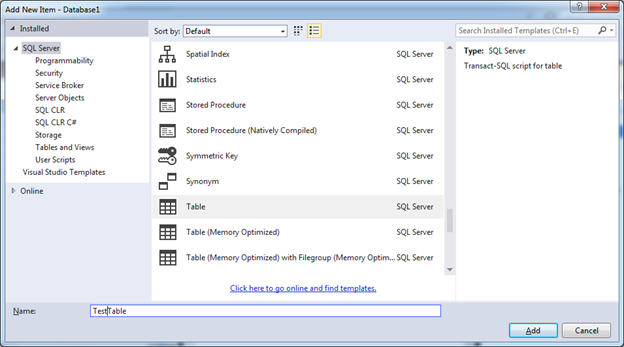

Also, we can always add new items using the Add New Item dialog box, which lists all possible database objects:

Add the TestTable table. A new script file TestTable.sql will be added to the project root and for convenience we will transfer it to the Tables folder.

To create a table schema, we can use both the designer panel and the T-SQL panel. All changes made on one panel will be immediately displayed in another.

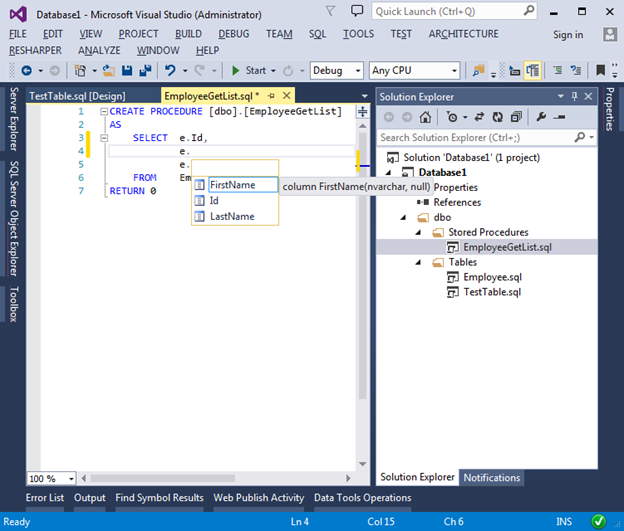

We can also modify existing scripts. Visual Studio for this provides a convenient and beloved by all IntelliSense. Since we are not connected to the physical database, Visual Studio for IntelliSence to work correctly parses all the scripts in the project, which allows it to instantly reflect the latest changes made to the database schema.

I want to draw attention to the fact that we do not have to worry about incremental changes to our base. Instead, we always create scripts as if objects were created anew. When publishing a DACPAC package, migration scripts will be generated automatically by comparing the DACPAC file and the schema in the target database.

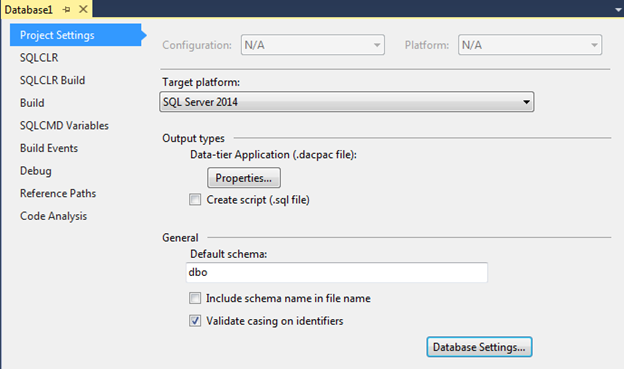

As already mentioned, DACPAC contains not only the schema and data, but also a number of useful settings, for viewing / editing of which we can use the properties window of our project.

The Target platform property allows you to set the version of the database for which scripts in the project will be validated. The minimum supported version of MS SQL Server 2005. For example, if you specify the version of the database 2005 and try to create a column of the Geography type, then we will receive the following message when compiling:

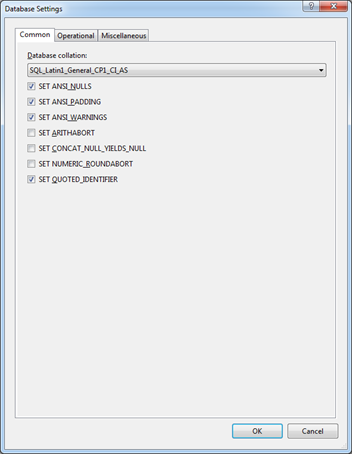

On the Project Settings tab, we can set the database settings by clicking on the Database Settings button. Clicking on it we will see a dialog with settings similar to those we used to see in SQL Server Management Studio:

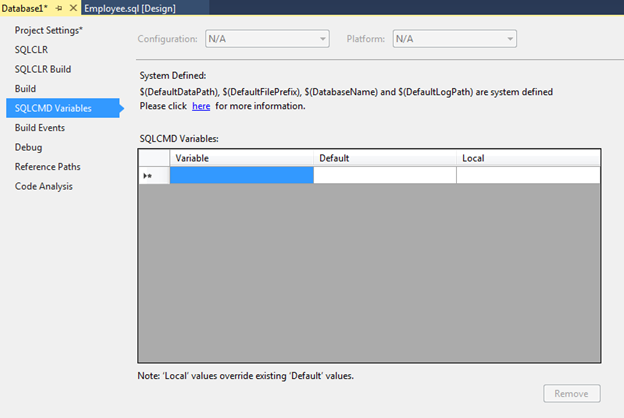

I would also like to mention the SQLCMD Variables tab, on which we can set various variables for further use in our scripts.

After all the settings are specified and the * .sql scripts are added / updated, we can apply the changes to the target database. To do this, go to the menu Build-> Publish, or choose a similar item in the context menu of the project.

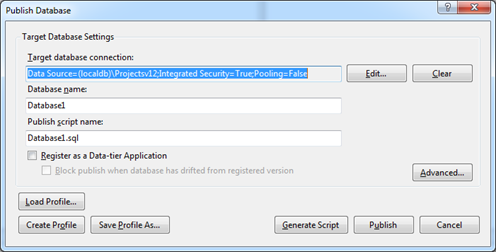

In the dialog box that appears, we set the connection string to the target database (target database) and, if necessary, additional settings by clicking the Advanced button:

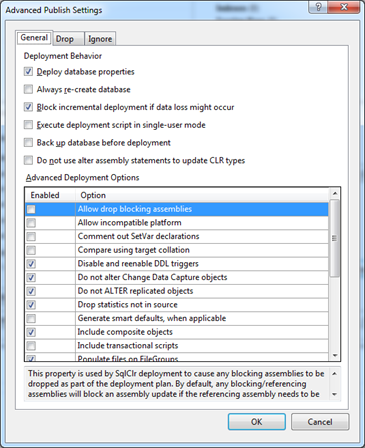

Most of the settings are understandable without additional description, so we will not dwell on them in detail, but I recommend you to familiarize yourself with them, so that if it is impossible to successfully “fill in” the project, you know what the problem may be.

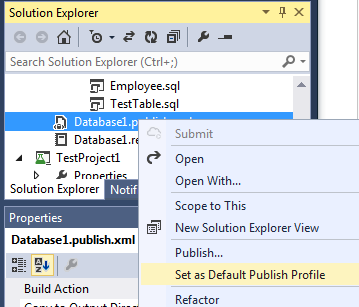

If you want to publish to the target database more than once, then the settings can be saved to the publish profile by clicking the Create Profile button. This will add a file with the extension * .publish.xml to our project and in the future we will be able to publish without the need to enter the settings again. If any of the publication profiles should be used by default, then you can select Set As Default Publish Profile in the context menu of the publication file. This profile will automatically load into the Publish dialog.

All necessary changes can be applied immediately by clicking on the Publish button. And you can postpone until later by generating the corresponding migration script (button Generate Script) - it will contain all the necessary instructions to bring the destination database to the desired state.

If we do not have access to the database, then we can transfer the results of our work as a DACPAC file, which is created by compiling the project and located in ../bin/Debug/Database1.dacpac. Having given a file, for example, to a database administrator, he, in turn, will be able to use any convenient way to apply changes to the target database.

Methods of publishing DACPAC (publishing):

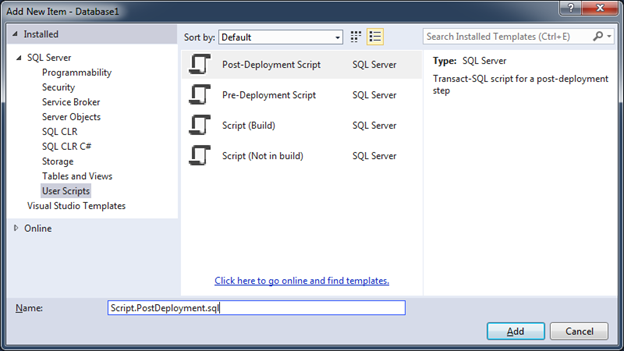

In our project we will create a DataSeeding folder (the name does not matter) and add a new script to it.

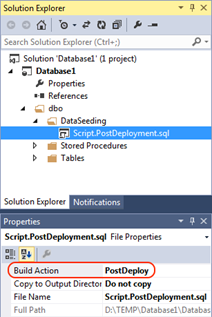

In fact, all types in the User Script section are normal * .sql scripts and differ only in the value of the “Build Action” property of the newly created file.

The logic from the PostDeployment.sql file will be executed after applying all changes to the database schema. In the case of creating PreDeployment.sql, the logic will be executed before applying the schema changes.

The value of the Build Action property for files created through the Script template (Not in Build) will be set to “None”. They are useful for conveniently structuring commands in separate files that are called from Pre or Post Deployment scripts.

Files created through the Script template have a Build Action value of “Build”, and their contents are added to the resulting script, which is executed when the DACPAC file is published at the time the database is changed.

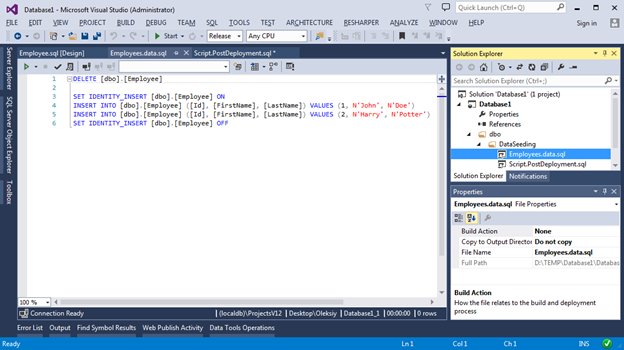

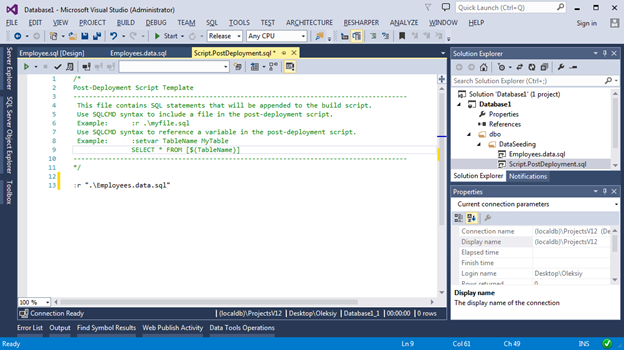

Since there can be only one Post Deployment script in a project and its size can quickly grow, it is recommended that the data insertion logic be placed into separate scripts. That is why we will add a Script file (Not in Build), and in the Post Deployment script we will add a link to it. Here is what it will look like:

Now when publishing our project, 2 records will always be inserted into the Employees table in the database.

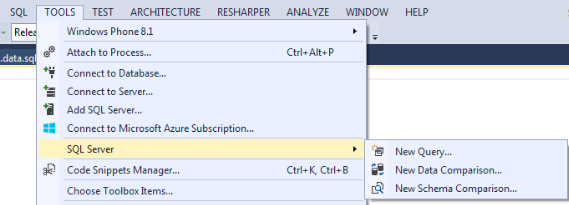

Along with the ability to create a Database project, installing SSDT adds a number of useful tools available from the Tools menu.

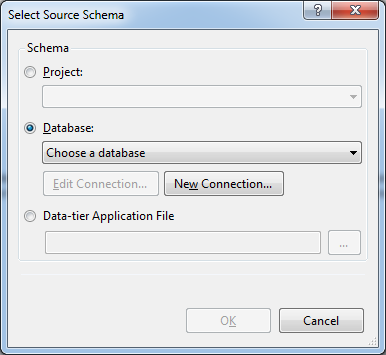

I think that it is clear from the title that each of the points does. As an example, I will show a convenient graphical schema comparison tool. As a source and target object, you can choose one of three options:

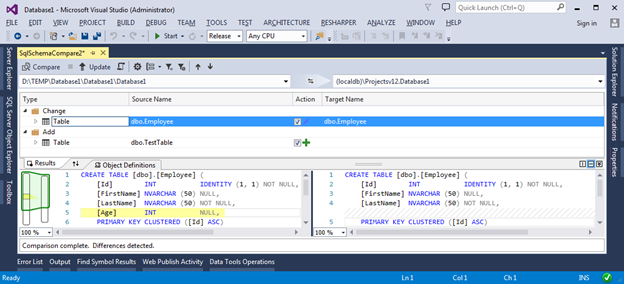

We will compare our project with a local database. The result of the comparison will be as follows:

In the resulting window, we can apply various grouping methods (according to the scheme, according to the type of objects and according to the required action) to more conveniently view the proposed changes and select the objects that need to be updated. In order to apply the migration script, you must click the Update button - this will lead the Target DB to the state of our project.

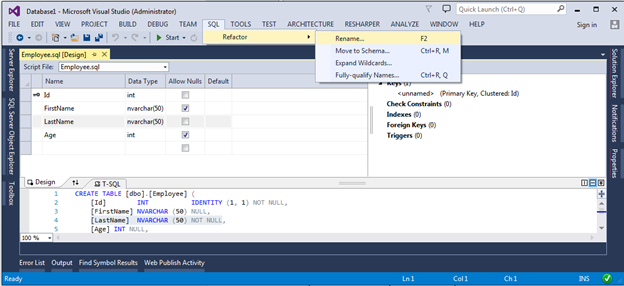

This is my favorite feature. For example, we will show how to rename the LastName column in the Employees table. To do this, open the table creation script, select the LastName column in the table editor and select the Rename item in the SQL menu -> Refactor:

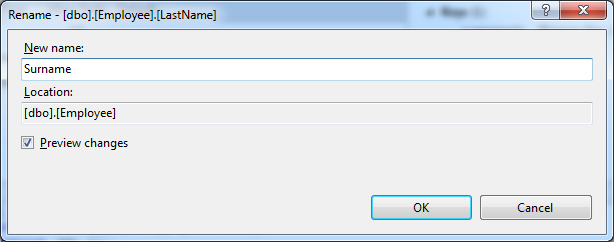

Set a new name:

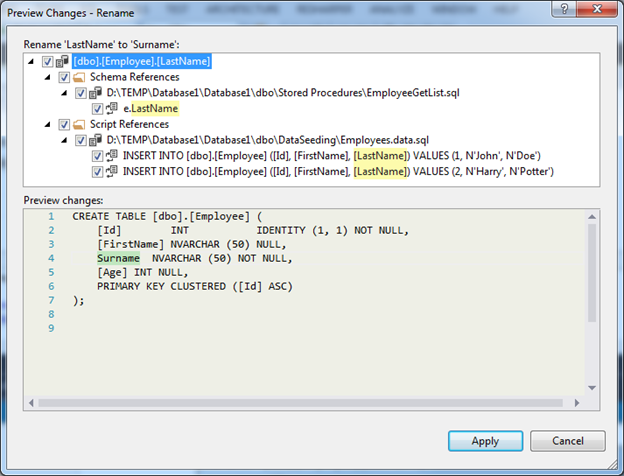

Review the effects of renaming and apply the proposed changes:

As a result, all scripts will be changed and after the first refactoring a special * .refactoring file will be added to the project. It will save all schema changes in historical order in an XML document format. This data will be useful in generating a migration script and will allow you to migrate the schema and data more correctly.

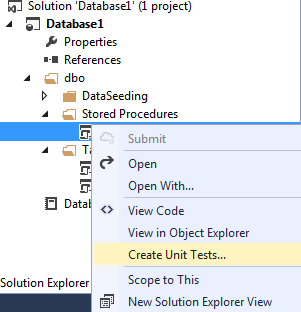

Let's create our first unit test. To do this, call the context menu for the stored procedure that we want to test:

In the dialog box that appears, we will have the opportunity to select additional objects (if any) and set the type and name of the test project and the name of the class containing the unit test code:

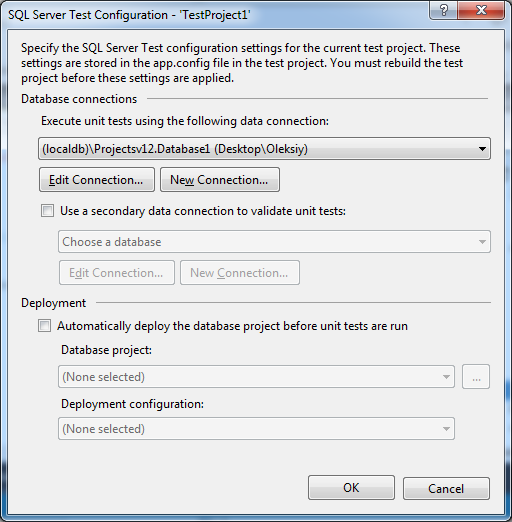

Having created a project, we will be asked to select a database on which tests will be run, as well as some project settings:

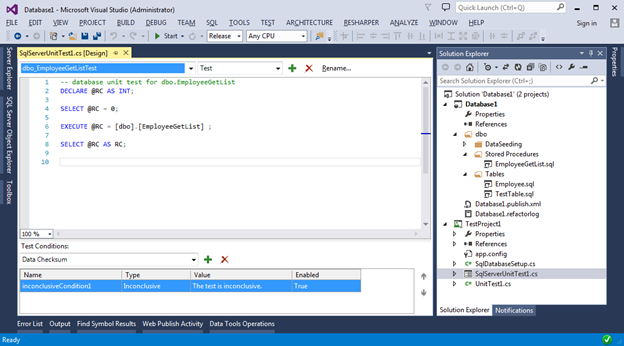

After successful creation, we will open the graphical editor of the unit test, at the bottom of which various checks for the tested object will be presented. In our case, this is the EmployeeGetList stored procedure.

Our task is to write the necessary Sql script and set the required verification conditions, which will be performed after the script code execution. Checks can be different: execution time, number of rows returned, checksum returned data, etc. A complete list of checks can be found in the drop-down menu under the text of the script and above the table checks. For each check, you can set a number of settings through the standard Properties panel. To call it, select the Properties item in the context menu of a specific scan.

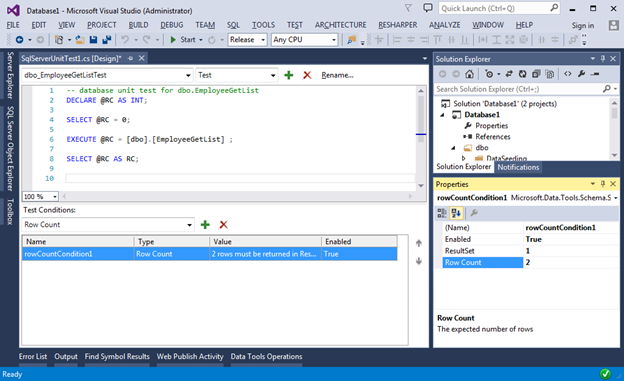

For example, this is how the verification of the returned number of lines will look like:

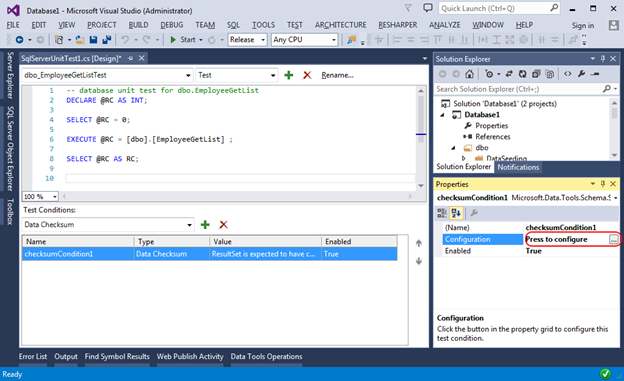

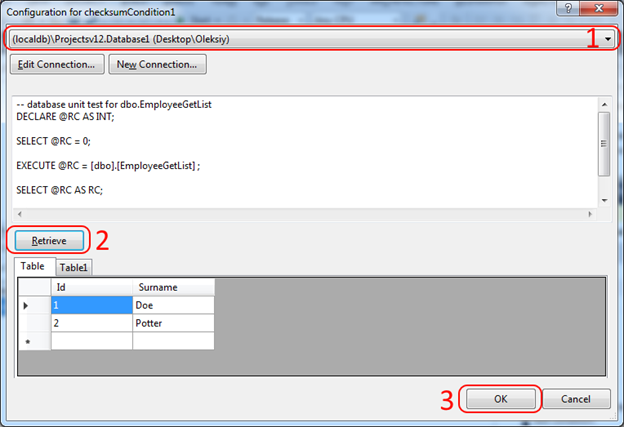

And this is how you can check Checksum:

In fact, this check is performed by our script (it gets 2 rows from the Employees table) and finds the Checksum on the received data. Our task at the stage of test creation is to find reference data, calculate Checksum for them, and in the future this result will be verified with this value. In other words, this is a convenient way to ensure that the result of the stored procedure does not change. To get the Checksum control value, you need to use the button in the Properties window, which will allow you to select a reference database and get the Cheksum reference value:

I hope this brief review has provided an overview of what SSDT is and how they can be useful in your project. Of course there were not considered all the details. But you, as a developer, don't need this. You should just have a general idea of the list of opportunities, and I hope that their further use will be intuitive because The SSDT developers did a great job and supplied the tools with a huge number of wizards and contextual clues.

The main advantages of using SSDT I would highlight the following:

- the possibility of a simple change (refactoring) of the base scheme (you can rename the table column and all Views, Functions and Stored Procedures that refer to it will automatically be corrected to reflect the changes)

- creating unit tests for database

- storing database structure in source control

- comparison of the schema / data with the ability to generate a script to bring the schema / data to the desired state

Of course, the advantages of using SSDT do not end there, but the rest is not as impressive as the one mentioned above. If you are interested in how to take advantage of these and other benefits - I ask for cat.

Installation and first acquaintance

Everything you need to install can be found on the download page in the Data Developer Center. Having chosen the necessary version, you can easily install the tools on your computer and describe it does not see the point. After installation, a new project type will appear in the create new project window:

Creating a new project you will see the following:

')

In the SQL Server Object Explorer panel (menu View -> SQL Server Object Explorer) we see something very similar to Object Explorer in SQL Server Management Studio, from which everything that does not make much sense at the database development stage is removed.

By connecting to an existing database, you can develop a database in the so-called Connected mode. This is not much different from the classical approach used in SQL Server Management Studio and will not be discussed in this article.

Disconnected mode

This development mode is most interesting to us, since It is this one that allows you to get the main benefits of using SSDT

The work is based on a very simple idea - to allow developers to store all the scripts for creating database objects (tables, views, store procedures, etc.) in a project of a special type as part of an existing or new solution (solution). Based on scripts, Visual Studio can generate a DACPAC file, which is essentially a zip archive with all t-sql scripts. Having a DACPAC file, it will be possible to publish (publish) the required database instance by comparing the schema described in DACPAC and the schema in the target database. During the publication, special mechanisms make comparisons, as a result of which migration scripts are automatically created to apply changes without losing data.

In order to see this in action, I suggest to look at the following examples.

Let's start with the possibility of import. Call the project context menu and see 3 possible options:

- Script (* .sql) - adds one or several * .sql files from the specified location to the project structure;

- Data-tier Application (* .dacpac) - adds * .sql files, as well as various database settings from the special DACPAC file described above; may contain not only the base scheme, but also data and various base settings;

- Database ... - is similar to the previous version, but the data source is the existing database

We will select the “Database ...” option and import the local database. It contains one table and one stored procedure. In SQL Server Object Explorer, the source database looks like this:

After the import is complete, we will see an extremely similar picture, with the only difference that the database structure will be presented in Solution Explorer as * .sql files.

Also, we can always add new items using the Add New Item dialog box, which lists all possible database objects:

Add the TestTable table. A new script file TestTable.sql will be added to the project root and for convenience we will transfer it to the Tables folder.

To create a table schema, we can use both the designer panel and the T-SQL panel. All changes made on one panel will be immediately displayed in another.

We can also modify existing scripts. Visual Studio for this provides a convenient and beloved by all IntelliSense. Since we are not connected to the physical database, Visual Studio for IntelliSence to work correctly parses all the scripts in the project, which allows it to instantly reflect the latest changes made to the database schema.

I want to draw attention to the fact that we do not have to worry about incremental changes to our base. Instead, we always create scripts as if objects were created anew. When publishing a DACPAC package, migration scripts will be generated automatically by comparing the DACPAC file and the schema in the target database.

As already mentioned, DACPAC contains not only the schema and data, but also a number of useful settings, for viewing / editing of which we can use the properties window of our project.

The Target platform property allows you to set the version of the database for which scripts in the project will be validated. The minimum supported version of MS SQL Server 2005. For example, if you specify the version of the database 2005 and try to create a column of the Geography type, then we will receive the following message when compiling:

On the Project Settings tab, we can set the database settings by clicking on the Database Settings button. Clicking on it we will see a dialog with settings similar to those we used to see in SQL Server Management Studio:

I would also like to mention the SQLCMD Variables tab, on which we can set various variables for further use in our scripts.

Publishing the DACPAC file (publishing)

After all the settings are specified and the * .sql scripts are added / updated, we can apply the changes to the target database. To do this, go to the menu Build-> Publish, or choose a similar item in the context menu of the project.

In the dialog box that appears, we set the connection string to the target database (target database) and, if necessary, additional settings by clicking the Advanced button:

Most of the settings are understandable without additional description, so we will not dwell on them in detail, but I recommend you to familiarize yourself with them, so that if it is impossible to successfully “fill in” the project, you know what the problem may be.

If you want to publish to the target database more than once, then the settings can be saved to the publish profile by clicking the Create Profile button. This will add a file with the extension * .publish.xml to our project and in the future we will be able to publish without the need to enter the settings again. If any of the publication profiles should be used by default, then you can select Set As Default Publish Profile in the context menu of the publication file. This profile will automatically load into the Publish dialog.

All necessary changes can be applied immediately by clicking on the Publish button. And you can postpone until later by generating the corresponding migration script (button Generate Script) - it will contain all the necessary instructions to bring the destination database to the desired state.

If we do not have access to the database, then we can transfer the results of our work as a DACPAC file, which is created by compiling the project and located in ../bin/Debug/Database1.dacpac. Having given a file, for example, to a database administrator, he, in turn, will be able to use any convenient way to apply changes to the target database.

Methods of publishing DACPAC (publishing):

- Free edition of Visual Studio with installed SSDT (in particular, for publish, the client tools included in the DAC Framework that are installed with SSDT are used)

- MS SQL Server Management Studio + DAC Framework

- Console utility SqlPackage.exe

- Windows PowerShell ( example )

- The Data-tier Application Framework (DACFx) allows you to put a DACPAC file by calling methods from a C # program ( documentation and example )

Data seeding

In our project we will create a DataSeeding folder (the name does not matter) and add a new script to it.

In fact, all types in the User Script section are normal * .sql scripts and differ only in the value of the “Build Action” property of the newly created file.

The logic from the PostDeployment.sql file will be executed after applying all changes to the database schema. In the case of creating PreDeployment.sql, the logic will be executed before applying the schema changes.

The value of the Build Action property for files created through the Script template (Not in Build) will be set to “None”. They are useful for conveniently structuring commands in separate files that are called from Pre or Post Deployment scripts.

Files created through the Script template have a Build Action value of “Build”, and their contents are added to the resulting script, which is executed when the DACPAC file is published at the time the database is changed.

Since there can be only one Post Deployment script in a project and its size can quickly grow, it is recommended that the data insertion logic be placed into separate scripts. That is why we will add a Script file (Not in Build), and in the Post Deployment script we will add a link to it. Here is what it will look like:

Now when publishing our project, 2 records will always be inserted into the Employees table in the database.

Tools -> SQL Server

Along with the ability to create a Database project, installing SSDT adds a number of useful tools available from the Tools menu.

I think that it is clear from the title that each of the points does. As an example, I will show a convenient graphical schema comparison tool. As a source and target object, you can choose one of three options:

We will compare our project with a local database. The result of the comparison will be as follows:

In the resulting window, we can apply various grouping methods (according to the scheme, according to the type of objects and according to the required action) to more conveniently view the proposed changes and select the objects that need to be updated. In order to apply the migration script, you must click the Update button - this will lead the Target DB to the state of our project.

Refactoring

This is my favorite feature. For example, we will show how to rename the LastName column in the Employees table. To do this, open the table creation script, select the LastName column in the table editor and select the Rename item in the SQL menu -> Refactor:

Set a new name:

Review the effects of renaming and apply the proposed changes:

As a result, all scripts will be changed and after the first refactoring a special * .refactoring file will be added to the project. It will save all schema changes in historical order in an XML document format. This data will be useful in generating a migration script and will allow you to migrate the schema and data more correctly.

Unit testing

Let's create our first unit test. To do this, call the context menu for the stored procedure that we want to test:

In the dialog box that appears, we will have the opportunity to select additional objects (if any) and set the type and name of the test project and the name of the class containing the unit test code:

Having created a project, we will be asked to select a database on which tests will be run, as well as some project settings:

After successful creation, we will open the graphical editor of the unit test, at the bottom of which various checks for the tested object will be presented. In our case, this is the EmployeeGetList stored procedure.

Our task is to write the necessary Sql script and set the required verification conditions, which will be performed after the script code execution. Checks can be different: execution time, number of rows returned, checksum returned data, etc. A complete list of checks can be found in the drop-down menu under the text of the script and above the table checks. For each check, you can set a number of settings through the standard Properties panel. To call it, select the Properties item in the context menu of a specific scan.

For example, this is how the verification of the returned number of lines will look like:

And this is how you can check Checksum:

In fact, this check is performed by our script (it gets 2 rows from the Employees table) and finds the Checksum on the received data. Our task at the stage of test creation is to find reference data, calculate Checksum for them, and in the future this result will be verified with this value. In other words, this is a convenient way to ensure that the result of the stored procedure does not change. To get the Checksum control value, you need to use the button in the Properties window, which will allow you to select a reference database and get the Cheksum reference value:

Conclusion

I hope this brief review has provided an overview of what SSDT is and how they can be useful in your project. Of course there were not considered all the details. But you, as a developer, don't need this. You should just have a general idea of the list of opportunities, and I hope that their further use will be intuitive because The SSDT developers did a great job and supplied the tools with a huge number of wizards and contextual clues.

Source: https://habr.com/ru/post/256653/

All Articles