Wormy apples [WITHOUT jailbreak]

Stories about malware for Android no longer surprise anyone, unless they involve rootkit techniques or new concepts tailored to the new ART runtime. With malware for iOS the opposite is true: if anyone has heard of it, it is usually only in the context of jailbreak. In 2014 there was a real boom in such programs (AdThief, Unflod, Mekie, AppBuyer, Xsser). In this article, however, we will talk about malware and its capabilities on iOS without jailbreak...

Let's agree right away on the initial conditions for our Apple device:

- The device runs iOS;

- The iOS version is the latest one;

- The device is not jailbroken.

The conditions are deliberately this strict (so that life does not seem too sweet). If the device is already jailbroken, or the attacker can easily jailbreak it using public tools, the case is uninteresting and it is immediately game over: the user is his own worst enemy. The operating system's security model is then completely broken, and writing malicious code for such an Apple device is, by and large, no different from writing malicious code for Android, Windows, or Mac.

The main protective measures that keep malicious code from reaching Apple devices in large numbers:

- Strict review of applications submitted to the App Store;

- The inability to dynamically load code from external sources;

- Only the code signed by Apple can be executed on the device.

But let's take things in order. First, let's look at what code can run on non-jailbroken devices at all.

First, you can install applications from the App Store. They are signed with a distribution certificate and can run on an unlimited number of devices, but they can only be distributed through the App Store after a thorough review.

Second, you can use the TestFlight application (from the App Store) to test beta versions of applications. Such a build is signed with a distribution certificate carrying a beta entitlement, is available to 1000 users (they are bound by Apple ID, so the number of devices may be higher), and passes Beta App Review (it takes less time, so whether it is as thorough as the App Store review is unclear...).

Third, devices can of course run code signed with a developer certificate issued to an Apple developer for specific devices (no more than 100 devices registered in advance, so their UDIDs must be known) for testing on real hardware (Ad Hoc distribution). Services such as HockeyApp, Ubertesters, and Fabric (Crashlytics) work under the same rules. And, as you understand, there is no code review by Apple here ;)

Fourth, there are applications signed with an enterprise certificate. Such applications can be distributed from anywhere (in-house distribution) and run on an unlimited number of devices (ProvisionsAllDevices = true). Apple's code review is absent here as well!
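The distribution types above leave traces in the provisioning profile that ships inside a non-App Store build. Below is a minimal sketch of how one might classify a profile when auditing an application. It assumes the usual layout of a `.mobileprovision` file (an XML plist wrapped in a signed container) and the well-known keys `ProvisionsAllDevices`, `ProvisionedDevices`, and the `get-task-allow` entitlement. The function names are mine, and the signature itself is not verified here.

```python
import plistlib


def extract_profile_plist(raw: bytes) -> dict:
    """Cut the XML plist out of a signed .mobileprovision blob (no signature check)."""
    start = raw.find(b"<?xml")
    end = raw.find(b"</plist>")
    if start == -1 or end == -1:
        raise ValueError("no embedded plist found")
    return plistlib.loads(raw[start:end + len(b"</plist>")])


def classify_profile(profile: dict) -> str:
    """Map profile keys to the distribution types discussed in the text."""
    if profile.get("ProvisionsAllDevices"):
        # Enterprise profile: runs on any device, no Apple review.
        return "enterprise (in-house)"
    if profile.get("ProvisionedDevices"):
        # An explicit UDID list means a development or Ad Hoc build.
        debuggable = profile.get("Entitlements", {}).get("get-task-allow", False)
        return "development" if debuggable else "ad hoc"
    # No device list and no all-devices flag: App Store style profile.
    return "app store"
```

An "enterprise (in-house)" result for an application that claims to be a well-known App Store program is exactly the situation worth a closer look.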


What does it mean to distribute an application from anywhere? It means you can host the application on a web portal (internal or on the Internet), and anyone who visits it can simply install the application on the device. For the user, the installation looks like this:

Such applications can be found on the Internet by searching for the keyword:

itms-services://?action=download-manifest

As you have probably guessed, the essence of the problem is the complete trust placed in applications signed with a developer or enterprise certificate. In general, the entire Apple security model rests on trust in certificates...
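When you come across an `itms-services` link, it is useful to see what it actually points to before anything gets installed. The sketch below extracts the manifest URL from such a link and lists what a manifest plist offers. It assumes the commonly used manifest layout (`items`, `assets` with `kind` equal to `software-package`, and `metadata` with `bundle-identifier` and `title`); the function names and the example.com addresses in the usage note are purely illustrative.

```python
import plistlib
from typing import List, Optional
from urllib.parse import parse_qs, urlsplit


def manifest_url(link: str) -> Optional[str]:
    """Return the manifest URL from an itms-services install link, or None."""
    parts = urlsplit(link)
    if parts.scheme != "itms-services":
        return None
    query = parse_qs(parts.query)
    if query.get("action") != ["download-manifest"]:
        return None
    urls = query.get("url")
    return urls[0] if urls else None


def describe_manifest(manifest_bytes: bytes) -> List[dict]:
    """Summarize each item of a manifest: bundle ID, title, and package URLs."""
    manifest = plistlib.loads(manifest_bytes)
    summary = []
    for item in manifest.get("items", []):
        meta = item.get("metadata", {})
        packages = [
            asset.get("url")
            for asset in item.get("assets", [])
            if asset.get("kind") == "software-package"
        ]
        summary.append({
            "bundle_id": meta.get("bundle-identifier"),
            "title": meta.get("title"),
            "packages": packages,
        })
    return summary
```

For example, `manifest_url("itms-services://?action=download-manifest&url=https://example.com/app/manifest.plist")` gives back the `https://example.com/app/manifest.plist` part, which can then be fetched and passed to `describe_manifest`.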

Clearly, an attacker can obtain these certificates one way or another: by setting up a fake or fly-by-night company, or simply by stealing them. This is a matter of technique and of preparing the attack. Apple can revoke enterprise and developer certificates (it uses OCSP), but there are a number of tricks that counteract this process. And in the case of developer certificates, revocation is generally not an easy task for Apple.

Let's consider which attack vectors this opens up to an attacker, and which vectors exist for non-jailbroken devices in general (forewarned is forearmed).

1) A malicious gift. The victim is simply given a device with a preinstalled set of “useful” programs, from secure messengers to image editors (nobody looks a gift horse in the mouth). Typically, a well-known program from the App Store is taken, unpacked and decrypted, extended with additional functionality, signed with a suitable certificate, and installed on the device. The application, as you understand, continues to work normally and perform its original functions. However ridiculous and primitive this method may seem, we already know of cases where such devices were given to a certain circle of people in Russia.

2) Through an infected computer. The device connects to an infected computer that it trusts (one it is paired with), and the malicious code on that computer installs a malicious application on the mobile device (for example, using the libimobiledevice library).

3) A couple of seconds in the wrong hands. Lending the phone to an attacker, an ill-wisher, or a jealous girlfriend for a few seconds is enough to get a malicious program installed on it. The attacker hosts the application somewhere on the Internet in advance and, while the phone is in his hands, opens the link in Safari and installs it. No passwords are needed!

4) Your own worst enemy. You can install an application from a third-party source yourself, for example to avoid paying for a game in the App Store. As far as I know, in China (Tongbu), for example, people like to buy games, crack them, and then distribute them for free (or cheaper), re-signed with their own certificate.

5) A hacked developer. Malicious code can get into the project and quietly spread to devices through the same HockeyApp, Ubertesters, or Fabric (Crashlytics), if they are in use, of course. Or the attacker can simply steal the certificates he needs from the developer.

6) An insider. This item is relevant only to companies that develop and distribute corporate applications internally. The problem is that a developer in this situation is free to use more than just the features Apple permits... but more on that later.

7) Through an application vulnerability. The most elegant and at the same time the most complex attack vector, and the one that raises the least suspicion. The application can even be distributed through the App Store. A similar concept has already been described in “Jekyll on iOS: When Benign Apps Become Evil”.

8) Through a vulnerability in iOS. This has been found before, for example by researcher Charlie Miller, and it allowed him to dynamically load and execute unsigned code on the device.

A reader familiar with iOS security mechanisms may point out that once an application lands on an iOS device, it runs in its own restricted sandbox, isolated from everything else...

In principle this is true, BUT keep in mind that the malicious file installed by the attacker has not been checked against Apple's policy in any way and can in fact use more functionality than any application from the App Store. And all thanks to private APIs! Apple would not let programs with such functionality through review, but here nobody asks.

So, from a security point of view, an application installed from outside the App Store can differ in the following ways:

- It can use private APIs, which gives it additional functionality;

- It can communicate with surrounding services (for example, over Mach ports);

- It has a larger attack surface for jailbreaking the device.

So how can private APIs be useful to an attacker? Off the top of my head:

| Call | Description |
|---|---|
| | Get the text of the incoming SMS message |
| | Register handler for incoming SMS messages and calls |
| | Get plist information about installed applications |
| | Pop-up dialog on top of all screens |
| | Get user input from dialog box |
| | Get a list of bundle IDs of installed applications |
| | Get program name by bundle ID |

For a general idea of how much is hidden from and off-limits to ordinary iOS developers, see the iOS-Runtime-Headers project, a collection of iOS header files generated at runtime, or the RuntimeBrowser project, which is what generates such header files and also lets you work with the calls directly. Note that private APIs exist in both private and public frameworks. Besides private Objective-C classes and methods, there are also very interesting C functions that RuntimeBrowser does not find.

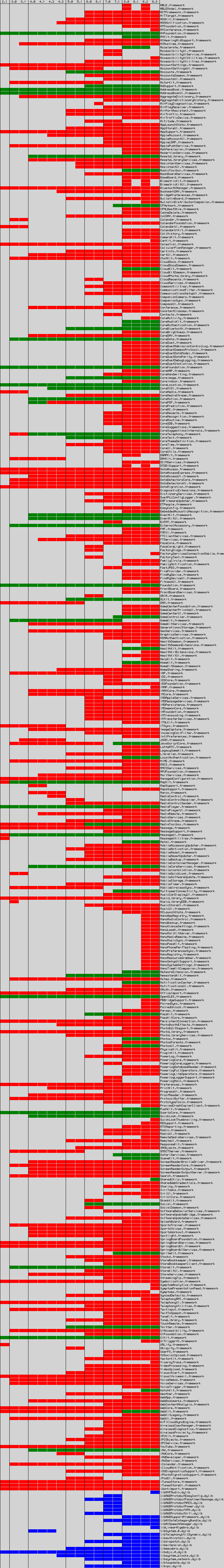

Very indicative picture:

An attacker can use all of this one way or another. His hands are also free to apply various dynamic code loading techniques via NSBundle and dlopen().
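From the defender's side, the same facts suggest a simple first check of an application obtained outside the App Store. The sketch below is a rough heuristic rather than a real analyzer: it scans the raw bytes of an (already decrypted) binary for references to `/System/Library/PrivateFrameworks/` and for the names of common dynamic-loading calls. The function name and the list of indicators are my own choice. Legitimate applications also use `NSClassFromString`, and strings can be obfuscated, so a hit is only a reason to look closer and a clean result proves nothing.

```python
import re

# Path prefix under which iOS keeps its private frameworks.
PRIVATE_FRAMEWORK = re.compile(
    rb"/System/Library/PrivateFrameworks/([A-Za-z0-9_]+)\.framework"
)

# Names that hint at dynamic loading or runtime lookup (an illustrative list).
DYNAMIC_LOADING = (b"dlopen", b"dlsym", b"NSClassFromString", b"NSSelectorFromString")


def audit_binary(data: bytes) -> dict:
    """Report private-framework paths and dynamic-loading names found in the bytes."""
    frameworks = sorted(
        {match.group(1).decode("ascii") for match in PRIVATE_FRAMEWORK.finditer(data)}
    )
    loaders = [name.decode("ascii") for name in DYNAMIC_LOADING if name in data]
    return {"private_frameworks": frameworks, "dynamic_loading": loaders}
```

In practice you would read the main executable from the unpacked application bundle and pass its contents to `audit_binary`.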

If you think that all of the above is just ideas and concepts, I have to disappoint you... This has already happened in the wild, for example with the WireLurker malware, which among other things carried out the so-called Masque Attack. The point of the Masque Attack (CVE-2014-4493 and CVE-2014-4494) was that a malicious application was installed over the original one (a messenger, mail client, banking client, and so on, but not the preinstalled iOS applications) and gained access to its data.
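The Masque Attack relied on the replacement application carrying the same bundle identifier as the original while being signed by someone else. That gives a simple idea for a check, sketched below under loud assumptions: there is an inventory of installed applications (for example, an export from an MDM system) with hypothetical `bundle_id` and `team_id` fields, and a trusted baseline mapping bundle IDs to the signing team you expect. Neither the data format nor the function name comes from any real tool.

```python
from typing import Dict, List


def find_masquerades(trusted: Dict[str, str], installed: List[dict]) -> List[str]:
    """Return bundle IDs whose signer differs from the trusted baseline."""
    suspicious = []
    for app in installed:
        expected_team = trusted.get(app["bundle_id"])
        # Only known bundle IDs are compared; unknown apps are out of scope here.
        if expected_team is not None and app["team_id"] != expected_team:
            suspicious.append(app["bundle_id"])
    return suspicious
```

An application that reports a familiar bundle ID but an unfamiliar signing team is the pattern to investigate first.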

Safety recommendations:

- Do not install applications from third-party sources;

- Update the OS;

- Keep an eye on the profiles installed on the device (Settings -> General -> Profiles);

- Keep your certificates safe (for developers);

- Review the code that is written for in-house distribution (for customers).

P.S. If you are interested in iOS malware samples, I recommend this resource: contagiominidump.blogspot.ru/search?q=iOS


Source: https://habr.com/ru/post/256407/
