How we taught an iPhone to recognize football clubs

Hi, Habr!

My name is Igor Litvinenko. I have been developing for mobile devices, mainly iOS, for more than three years. At DataArt I work on various computer vision tasks: image processing, augmented reality applications, neural networks, and so on, all with a focus on mobile devices. Today I want to tell you about our research/fun project related to football.


Instead of an introduction and a long speech about the development of modern technologies and pattern recognition, let's go straight to the problem statement.

Problem statement

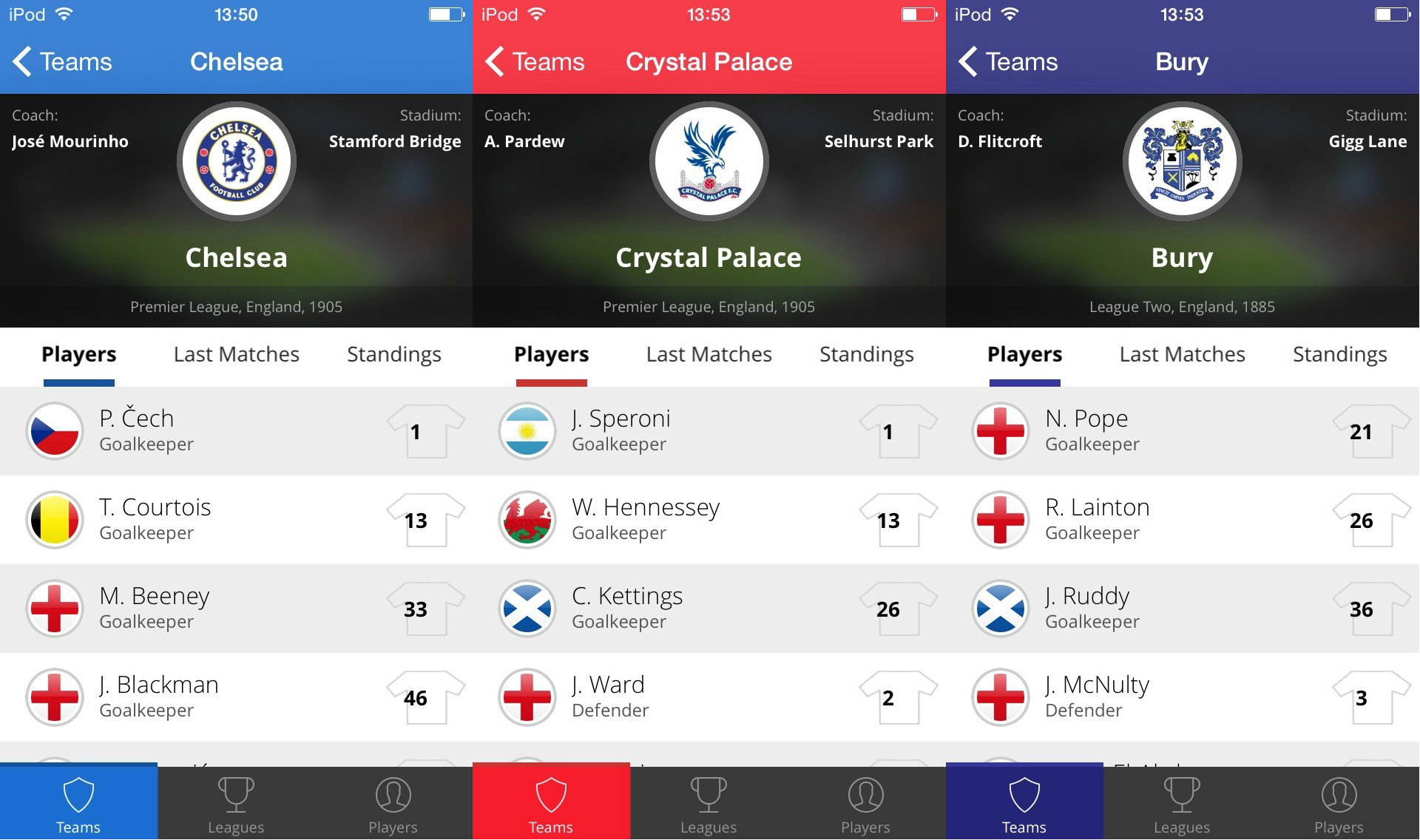

We need to develop a mobile application that shows current information about football clubs, teams, and the latest matches. The application's killer feature, added for ease of use, is the ability to display information about a team when you point the phone's camera at its logo. The application should also work without an internet connection. The estimated number of recognizable teams is about a hundred (we did not pick and choose here; we simply wanted to cover all the clubs in the main football leagues in England). Another feature is the ability to "repaint" the user interface based on the last recognized logo.

Requirements analysis and search for ready-made solutions

It is clear that we will have to recognize a particular image in a video stream. However, the requirement to work offline seriously narrows the set of solutions: we can no longer use ready-made cloud services or perform complex recognition on the server side. In other words, all computation must be done on the client.

The second problem is the large number and complexity of the objects to recognize. It's no secret that the logos of football teams rarely resemble each other. Moreover, few logos have a simple shape, and a logo can appear on any surface (in a glossy magazine with glare, on an arbitrary background, in slightly altered colors, or even as a monochrome tattoo).

An additional complicating factor is that a logo on a flag will inevitably be deformed, because a flag is not a rigid surface.

One last thing: the logo is always rotated by some angle relative to the reference view. In the simple case it is a rotation about one axis; in the worst case, a rotation about all three axes.
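To make the three-axis case concrete, here is a minimal C++ sketch that composes rotations about X, Y, and Z into a single matrix. It is not code from the project: the names (`rotX`, `rotXYZ`, `apply`, and so on) are illustrative, and it assumes the common convention R = Rz * Ry * Rx with angles in radians.

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Elementary rotations about each axis, angle in radians.
Mat3 rotX(double a) {
    return {{{1.0, 0.0, 0.0}, {0.0, std::cos(a), -std::sin(a)}, {0.0, std::sin(a), std::cos(a)}}};
}
Mat3 rotY(double a) {
    return {{{std::cos(a), 0.0, std::sin(a)}, {0.0, 1.0, 0.0}, {-std::sin(a), 0.0, std::cos(a)}}};
}
Mat3 rotZ(double a) {
    return {{{std::cos(a), -std::sin(a), 0.0}, {std::sin(a), std::cos(a), 0.0}, {0.0, 0.0, 1.0}}};
}

// Plain 3x3 matrix product.
Mat3 mul(const Mat3& a, const Mat3& b) {
    Mat3 r{};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j)
            for (int k = 0; k < 3; ++k)
                r[i][j] += a[i][k] * b[k][j];
    return r;
}

// Matrix-vector product.
Vec3 apply(const Mat3& m, const Vec3& v) {
    Vec3 r{};
    for (int i = 0; i < 3; ++i)
        for (int k = 0; k < 3; ++k)
            r[i] += m[i][k] * v[k];
    return r;
}

// Worst case from the text: rotate about X first, then Y, then Z (assumed order).
Mat3 rotXYZ(double ax, double ay, double az) {
    return mul(rotZ(az), mul(rotY(ay), rotX(ax)));
}

// Sanity check: a quarter turn about Z sends the X axis to the Y axis.
void checkQuarterTurn() {
    const double kPi = std::acos(-1.0);
    Vec3 v = apply(rotZ(kPi / 2), {1.0, 0.0, 0.0});
    assert(std::fabs(v[0]) < 1e-9 && std::fabs(v[1] - 1.0) < 1e-9 && std::fabs(v[2]) < 1e-9);
}
```

The "simple case" corresponds to calling just one of `rotX`, `rotY`, or `rotZ`; a recognizer has to cope with arbitrary `rotXYZ` combinations, plus perspective, which this sketch does not model.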

At some point we realized that the task in our hands was very similar to building marker-based augmented reality, with the logos of football clubs acting as markers. Let me explain.

By and large, the big task of augmented reality can be divided into two subtasks: first, finding the marker and determining its rotation in 3D; second, displaying content on top of the marker and applying the necessary transformations to it. We only need the result of the first subtask; the rest we handle ourselves.

We decided not to reinvent the wheel and to take a ready-made engine. But, as usual, the devil is in the details. As a rule, ready-made commercial engines limit the number of markers that can be searched for simultaneously in one session. I'd rather not talk about the non-commercial ones: both the quality and the stability of their recognition are clearly not good enough. The most they can handle well is finding simple markers surrounded by a black frame, which is clearly not our case.

We could still dig in the direction of OpenCV, but that would take a lot of time, and the result would be only slightly better than what most non-commercial engines deliver. After some brief digging, it turned out that the ideal solution for our problem is Vuforia from Qualcomm. How, what, and why: read on.

Why we chose Vuforia

Vuforia is one of the few engines that provide exactly what we need: marker recognition, tracking (following the marker from frame to frame in the video), and calculation of rotation angles. The SDK contains no code to simplify rendering your own objects and uses plain OpenGL ES in its examples. In our project that did not matter, since we only show an information screen. If we were solving a classic augmented reality problem, however, it would be a serious disadvantage. Vuforia does have a Unity plugin for that, but I still find an all-in-one solution more convenient (Metaio, for example, is much more convenient in this respect). We identified the following Vuforia benefits:
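To illustrate what "calculation of rotation angles" can mean in practice, here is a hedged C++ sketch that recovers three angles from a 3x4 pose matrix. This is not Vuforia code: `Pose34` is a hypothetical stand-in for a row-major pose (rotation in the left 3x3 block, translation in the last column), and the sketch assumes the rotation was composed as R = Rz * Ry * Rx.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for a row-major 3x4 pose matrix:
// data[row * 4 + col], rotation in columns 0..2, translation in column 3.
struct Pose34 {
    float data[12];
};

// Rotation angles about X, Y and Z, in radians.
struct EulerAngles {
    double x, y, z;
};

// Assumes R = Rz(z) * Ry(y) * Rx(x) and |y| < 90 degrees (away from gimbal lock).
EulerAngles eulerFromPose(const Pose34& p) {
    auto r = [&p](int row, int col) {
        return static_cast<double>(p.data[row * 4 + col]);
    };
    // For this convention R[2][0] = -sin(y); |R[2][0]| == 1 would be gimbal lock.
    assert(std::fabs(r(2, 0)) < 1.0);
    EulerAngles e;
    e.y = std::asin(-r(2, 0));
    e.x = std::atan2(r(2, 1), r(2, 2));  // R[2][1] = cos(y) sin(x), R[2][2] = cos(y) cos(x)
    e.z = std::atan2(r(1, 0), r(0, 0));  // R[1][0] = sin(z) cos(y), R[0][0] = cos(z) cos(y)
    return e;
}
```

For an information screen like ours the angles are not needed at all; they matter only when content has to be drawn aligned with the marker.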

- First, the product has been around for quite some time and is developed by a manufacturer of mobile processors. This suggests that it is well optimized for mobile phones.

- Second, it is absolutely free, without restrictions. Of course, there are plenty of paid services, such as recognition in the cloud, but it is quite possible to get by with the free version.

- Third, the documentation, the developer forum, and the community. You can often find answers to your questions there, and, of course, examples for every feature of the engine.

- The cherry on the cake: there is a version for most of the popular mobile platforms, in case we need to port our solution.

The technical part of the integration

Vuforia itself is a library that we need to add to the project. However, for it to work, some compilation flags have to be changed. The most painless way to hook up Vuforia is to take an example from the official site and extract the interaction code from it. This code is common to all projects, so it makes sense to bring it into a more reusable form.
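One piece of that shared code is easier to follow in isolation: sizing the camera video so that it fills a portrait view while keeping its aspect ratio. The C++ sketch below restates the portrait branch of `configureVideoBackgroundWithViewWidth:andHeight:` from the listing that follows; `Size` and `fillPortraitView` are illustrative names that do not exist in the project or in the SDK.

```cpp
#include <cassert>

struct Size {
    int width;
    int height;
};

// Camera frames are always landscape, so in a portrait view the video is
// rotated by 90 degrees: its width runs along the view's height.
// Returns the size the rotated video must be scaled to so that it covers
// the whole view; whatever sticks out is cropped.
Size fillPortraitView(Size video, float viewWidth, float viewHeight) {
    assert(video.width > 0 && video.height > 0 && viewWidth > 0 && viewHeight > 0);
    float aspectVideo = static_cast<float>(video.width) / static_cast<float>(video.height);
    float aspectView = viewHeight / viewWidth;
    Size out;
    if (aspectVideo < aspectView) {
        // Rotated video is wider than the view: match heights, crop left and right.
        out.width = static_cast<int>(video.height * (viewHeight / video.width));
        out.height = static_cast<int>(viewHeight);
    } else {
        // Rotated video is narrower (or equal): match widths, crop top and bottom.
        out.width = static_cast<int>(viewWidth);
        out.height = static_cast<int>(video.width * (viewWidth / video.height));
    }
    return out;
}
```

For example, a 640x480 camera frame shown in a 640x1280 portrait view is scaled to 960x1280, so 160 pixels are cropped on each side. The landscape branch is the same computation with the view's width and height swapped.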

Let's start with the code for EAGLView.

A lot of code under the spoiler

MSImageRecognitionEAGLView.mm

    - (void)dealloc {
        [self deleteFramebuffer];
        if ([EAGLContext currentContext] == _context) {
            [EAGLContext setCurrentContext:nil];
        }
    }

    - (id)initWithCoder:(NSCoder *)aDecoder {
        self = [super initWithCoder:aDecoder];
        if (self) {
            vapp = [MSImageRecognitionSession sharedSession];
            [self setup];
        }
        return self;
    }

    - (id)initWithFrame:(CGRect)frame {
        self = [super initWithFrame:frame];
        if (self) {
            vapp = [MSImageRecognitionSession sharedSession];
            [self setup];
        }
        return self;
    }

    - (id)initWithFrame:(CGRect)frame appSession:(MSImageRecognitionSession *) app {
        self = [super initWithFrame:frame];
        if (self) {
            vapp = app;
            [self setup];
        }
        return self;
    }

    - (void)setup {
        if (YES == [vapp isRetinaDisplay]) {
            [self setContentScaleFactor:2.0f];
        }
        _context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES2];
        if (_context != [EAGLContext currentContext]) {
            [EAGLContext setCurrentContext:_context];
        }
        offTargetTrackingEnabled = NO;
    }

    - (void)finishOpenGLESCommands {
        if (_context) {
            [EAGLContext setCurrentContext:_context];
            glFinish();
        }
    }

    - (void)freeOpenGLESResources {
        [self deleteFramebuffer];
        glFinish();
    }

    - (void) setOffTargetTrackingMode:(BOOL) enabled {
        offTargetTrackingEnabled = enabled;
    }

    - (void)renderFrameQCAR {
        [self setFramebuffer];
        glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);
        QCAR::State state = QCAR::Renderer::getInstance().begin();
        QCAR::Renderer::getInstance().drawVideoBackground();
        glEnable(GL_DEPTH_TEST);
        if (offTargetTrackingEnabled) {
            glDisable(GL_CULL_FACE);
        } else {
            glEnable(GL_CULL_FACE);
        }
        glCullFace(GL_BACK);
        if(QCAR::Renderer::getInstance().getVideoBackgroundConfig().mReflection == QCAR::VIDEO_BACKGROUND_REFLECTION_ON)
            glFrontFace(GL_CW);
        else
            glFrontFace(GL_CCW);
        glDisable(GL_DEPTH_TEST);
        glDisable(GL_CULL_FACE);
        glDisableVertexAttribArray(vertexHandle);
        glDisableVertexAttribArray(normalHandle);
        glDisableVertexAttribArray(textureCoordHandle);
        QCAR::Renderer::getInstance().end();
        [self presentFramebuffer];
    }

    - (void)createFramebuffer {
        if (_context) {
            glGenFramebuffers(1, &_defaultFramebuffer);
            glBindFramebuffer(GL_FRAMEBUFFER, _defaultFramebuffer);
            glGenRenderbuffers(1, &_colorRenderbuffer);
            glBindRenderbuffer(GL_RENDERBUFFER, _colorRenderbuffer);
            [_context renderbufferStorage:GL_RENDERBUFFER fromDrawable:(CAEAGLLayer*)self.layer];
            GLint framebufferWidth;
            GLint framebufferHeight;
            glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_WIDTH, &framebufferWidth);
            glGetRenderbufferParameteriv(GL_RENDERBUFFER, GL_RENDERBUFFER_HEIGHT, &framebufferHeight);
            glGenRenderbuffers(1, &depthRenderbuffer);
            glBindRenderbuffer(GL_RENDERBUFFER, depthRenderbuffer);
            glRenderbufferStorage(GL_RENDERBUFFER, GL_DEPTH_COMPONENT16, framebufferWidth, framebufferHeight);
            glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_RENDERBUFFER, _colorRenderbuffer);
            glFramebufferRenderbuffer(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_RENDERBUFFER, depthRenderbuffer);
            glBindRenderbuffer(GL_RENDERBUFFER, _colorRenderbuffer);
        }
    }

    - (void)deleteFramebuffer {
        if (_context) {
            [EAGLContext setCurrentContext:_context];
            if (_defaultFramebuffer) {
                glDeleteFramebuffers(1, &_defaultFramebuffer);
                _defaultFramebuffer = 0;
            }
            if (_colorRenderbuffer) {
                glDeleteRenderbuffers(1, &_colorRenderbuffer);
                _colorRenderbuffer = 0;
            }
            if (depthRenderbuffer) {
                glDeleteRenderbuffers(1, &depthRenderbuffer);
                depthRenderbuffer = 0;
            }
        }
    }

    - (void)setFramebuffer {
        if (_context != [EAGLContext currentContext]) {
            [EAGLContext setCurrentContext:_context];
        }
        if (!_defaultFramebuffer) {
            [self performSelectorOnMainThread:@selector(createFramebuffer) withObject:self waitUntilDone:YES];
        }
        glBindFramebuffer(GL_FRAMEBUFFER, _defaultFramebuffer);
    }

    - (BOOL)presentFramebuffer {
        glBindRenderbuffer(GL_RENDERBUFFER, _colorRenderbuffer);
        return [_context presentRenderbuffer:GL_RENDERBUFFER];
    }

MSImageRecognitionSession.mm

    #import "MSImageRecognitionSession.h"
    #import <QCAR/QCAR.h>
    #import <QCAR/QCAR_iOS.h>
    #import <QCAR/Tool.h>
    #import <QCAR/Renderer.h>
    #import <QCAR/CameraDevice.h>
    #import <QCAR/VideoBackgroundConfig.h>
    #import <QCAR/UpdateCallback.h>
    #import <QCAR/TrackerManager.h>
    #import <QCAR/ImageTracker.h>
    #import <QCAR/Trackable.h>
    #import <QCAR/DataSet.h>
    #import <QCAR/TrackableResult.h>
    #import <QCAR/TargetFinder.h>
    #import <QCAR/Trackable.h>
    #import <QCAR/ImageTarget.h>
    #import <AVFoundation/AVFoundation.h>

    namespace {
        // --- Data private to this unit ---

        // NSerror domain for errors coming from the Sample application template classes
        static NSString * const MSImageRecognitionSessionErrorDomain = @"ImageRecognitionSessionErrorDomain";
        static const int MS_QCARInitFlags = QCAR::GL_20;

        // instance of the seesion used to support the QCAR callback
        // there should be only one instance of a session at any given point of time
        static MSImageRecognitionSession* __sharedInstance = nil;
        static BOOL __initialized = NO;

        // camera to use for the session
        QCAR::CameraDevice::CAMERA mCamera = QCAR::CameraDevice::CAMERA_DEFAULT;

        // class used to support the QCAR callback mechanism
        class VuforiaApplication_UpdateCallback : public QCAR::UpdateCallback {
            virtual void QCAR_onUpdate(QCAR::State& state);
        } qcarUpdate;
    }

    static inline void MSDispatchMain(void (^block)(void)) {
        dispatch_async(dispatch_get_main_queue(), block);
    }

    NSString * const MSImageRecognitionCloudAccessKey = @"MSImageRecognitionCloudAccessKey";
    NSString * const MSImageRecognitionCloudSecretKey = @"MSImageRecognitionCloudSecretKey";

    @interface MSImageRecognitionSession () {
        CGSize _boundsSize;
        UIInterfaceOrientation _interfaceOrientation;
        dispatch_queue_t _dispatchQueue;
        QCAR::DataSet **_dataSets;
        NSInteger _dataSetsCount;
        BOOL _isCloudRecognition;
    }

    @property (atomic, readwrite) BOOL cameraIsActive;
    @property (nonatomic, copy) MSImageRecognitionBlock recognitionBlock;

    @end

    @implementation MSImageRecognitionSession

    // Determine whether the device has a retina display
    + (BOOL)isRetinaDisplay {
        // If UIScreen mainScreen responds to selector displayLinkWithTarget:selector:
        // and the scale property is 2.0, then this is a retina display
        return ([[UIScreen mainScreen] respondsToSelector:@selector(displayLinkWithTarget:selector:)] && 2.0 == [UIScreen mainScreen].scale);
    }

    - (BOOL) isRetinaDisplay {
        return [[self class] isRetinaDisplay];
    }

    #pragma mark - Cleanup

    - (void)cleanupSession:(MSImageRecognitionCompletionBlock)completion; {
        MSImageRecognitionCompletionBlock blockCopy = completion ? [completion copy] : nil;
        dispatch_async(_dispatchQueue, ^{
            self.recognitionBlock = nil;
            if(_dataSets) {
                delete[] _dataSets;
                _dataSets = NULL;
            }
            if(blockCopy) {
                MSDispatchMain(^{
                    blockCopy(YES, nil);
                });
            }
        });
    }

    #pragma mark - Init

    + (instancetype)sharedSession {
        static dispatch_once_t onceToken;
        dispatch_once(&onceToken, ^{
            __sharedInstance = [MSImageRecognitionSession new];
        });
        return __sharedInstance;
    }

    - (id)init {
        self = [super init];
        if(self) {
            QCAR::registerCallback(&qcarUpdate);
            _interfaceOrientation = UIInterfaceOrientationPortrait;
            _dispatchQueue = dispatch_queue_create("imageRecognitionSession.queue.FlagRecognition", DISPATCH_QUEUE_SERIAL);
        }
        return self;
    }

    - (void)initializeSessionOnCloud:(BOOL)isCloudRecognition withCompletion:(MSImageRecognitionCompletionBlock)completion {
        dispatch_async(_dispatchQueue, ^{
            _isCloudRecognition = isCloudRecognition;
            NSError *error = nil;
            MSImageRecognitionCompletionBlock blockCopy = completion ? [completion copy] : nil;
            if(!__initialized && ![self initialize:&error]) {
                NSLog(@"Failed to initialize image recognition session: %@", error);
            }
            MSDispatchMain(^{
                if(blockCopy) blockCopy(error == nil, error);
            });
        });
    }

    // Initialize the Vuforia SDK
    - (BOOL)initialize:(NSError **)error {
        DLog(@"");
        NSParameterAssert(error);
        self.cameraIsActive = NO;
        self.cameraIsStarted = NO;

        // If this device has a retina display, we expect the view bounds to have been
        // scaled up by a factor of 2; this allows it to calculate the size and position of
        // the viewport correctly when rendering the video background. The ARViewBoundsSize
        // is the dimension of the AR view as seen in portrait, even if the orientation is landscape
        CGSize screenSize = [[UIScreen mainScreen] bounds].size;
        if ([MSImageRecognitionSession isRetinaDisplay]) {
            screenSize.width *= 2.0;
            screenSize.height *= 2.0;
        }
        _boundsSize = screenSize;

        // Initialising QCAR is a potentially lengthy operation, so perform it on a background thread
        QCAR::setInitParameters(MS_QCARInitFlags);

        // QCAR::init() will return positive numbers up to 100 as it progresses towards success.
        // Negative numbers indicate error conditions
        NSInteger initSuccess = 0;
        do {
            initSuccess = QCAR::init();
        } while (0 <= initSuccess && 100 > initSuccess);

        if(initSuccess != 100) {
            *error = [self errorWithCode:E_INITIALIZING_QCAR];
            return NO;
        }
        [self prepareAR];
        __initialized = YES;
        return YES;
    }

    - (void)prepareAR {
        // Tell QCAR we've created a drawing surface
        QCAR::onSurfaceCreated();

        // Frames from the camera are always landscape, no matter what the orientation of the device.
        // Tell QCAR to rotate the video background (and
        // the projection matrix it provides to us for rendering our augmentation)
        // by the proper angle in order to match the EAGLView orientation
        switch (_interfaceOrientation) {
            case UIInterfaceOrientationPortrait:
                QCAR::setRotation(QCAR::ROTATE_IOS_90);
                break;
            case UIInterfaceOrientationPortraitUpsideDown:
                QCAR::setRotation(QCAR::ROTATE_IOS_270);
                break;
            case UIInterfaceOrientationLandscapeLeft:
                QCAR::setRotation(QCAR::ROTATE_IOS_180);
                break;
            case UIInterfaceOrientationLandscapeRight:
                QCAR::setRotation(1);
                break;
        }

        if(UIInterfaceOrientationIsPortrait(_interfaceOrientation)) {
            QCAR::onSurfaceChanged(_boundsSize.width, _boundsSize.height);
        } else {
            QCAR::onSurfaceChanged(_boundsSize.height, _boundsSize.width);
        }
    }

    #pragma mark - AR control

    - (void)resumeAR:(MSImageRecognitionCompletionBlock)block {
        dispatch_async(_dispatchQueue, ^{
            NSError *error = nil;
            MSImageRecognitionCompletionBlock blockCopy = block ? [block copy] : nil;
            QCAR::onResume();

            // if the camera was previously started, but not currently active, then we restart it
            if ((self.cameraIsStarted) && (! self.cameraIsActive)) {
                // initialize the camera
                if (! QCAR::CameraDevice::getInstance().init(mCamera)) {
                    [self errorWithCode:E_INITIALIZING_CAMERA error:&error];
                    MSDispatchMain(^{
                        if(blockCopy) blockCopy(NO, error);
                    });
                    return;
                }
                // start the camera
                if (!QCAR::CameraDevice::getInstance().start()) {
                    [self errorWithCode:E_STARTING_CAMERA error:&error];
                    MSDispatchMain(^{
                        if(blockCopy) blockCopy(NO, error);
                    });
                    return;
                }
                self.cameraIsActive = YES;
            }
            MSDispatchMain(^{
                if(blockCopy) blockCopy(YES, nil);
            });
        });
    }

    - (void)pauseAR:(MSImageRecognitionCompletionBlock)block {
        dispatch_async(_dispatchQueue, ^{
            MSImageRecognitionCompletionBlock blockCopy = block ? [block copy] : nil;
            if (self.cameraIsActive) {
                NSError *error = nil;
                // Stop and deinit the camera
                if(! QCAR::CameraDevice::getInstance().stop()) {
                    [self errorWithCode:E_STOPPING_CAMERA error:&error];
                    MSDispatchMain(^{
                        if(blockCopy) blockCopy(NO, error);
                    });
                    return;
                }
                if(! QCAR::CameraDevice::getInstance().deinit()) {
                    [self errorWithCode:E_DEINIT_CAMERA error:&error];
                    MSDispatchMain(^{
                        if(blockCopy) blockCopy(NO, error);
                    });
                    return;
                }
                self.cameraIsActive = NO;
            }
            QCAR::onPause();
            MSDispatchMain(^{
                if(blockCopy) blockCopy(YES, nil);
            });
        });
    }

    - (void)startAR:(MSImageRecognitionCompletionBlock)block recognitionBlock:(MSImageRecognitionBlock)recognitionBlock; {
        __block NSError * error_ = nil;
        __block BOOL isSuccess = YES;
        MSImageRecognitionCompletionBlock blockCopy = block ? [block copy] : nil;
        self.recognitionBlock = recognitionBlock;

        if ([AVCaptureDevice respondsToSelector:@selector(requestAccessForMediaType:completionHandler:)]) {
            // Completion handler will be dispatched on a separate thread
            [AVCaptureDevice requestAccessForMediaType:AVMediaTypeVideo completionHandler:^(BOOL granted) {
                if (YES == granted) {
                    isSuccess = [self startCamera:QCAR::CameraDevice::CAMERA_BACK
                                        viewWidth:_boundsSize.width
                                        andHeight:_boundsSize.height
                                            error:&error_];
                    if (isSuccess) {
                        self.cameraIsActive = YES;
                        self.cameraIsStarted = YES;
                    }
                } else {
                    UIAlertView * alert = [[UIAlertView alloc] initWithTitle:@"Permissions Error"
                                                                     message:@"Please allow to use camera in Settings > Privacy > Camera"
                                                                    delegate:nil
                                                           cancelButtonTitle:@"Okay"
                                                           otherButtonTitles: nil];
                    [alert show];
                }
                self.cameraIsActive = NO;
                self.cameraIsStarted = NO;
                MSDispatchMain(^{
                    if(blockCopy) blockCopy(isSuccess, error_);
                });
            }];
        } else {
            isSuccess = [self startCamera:QCAR::CameraDevice::CAMERA_BACK
                                viewWidth:_boundsSize.width
                                andHeight:_boundsSize.height
                                    error:&error_];
            if (isSuccess) {
                self.cameraIsActive = YES;
                self.cameraIsStarted = YES;
            }
            if(blockCopy) blockCopy(isSuccess, error_);
        }
    }

    // Stop QCAR camera
    - (void)stopAR:(MSImageRecognitionCompletionBlock)block {
        dispatch_async(_dispatchQueue, ^{
            NSError *error = nil;
            MSImageRecognitionCompletionBlock blockCopy = block ? [block copy] : nil;

            // Stop the camera
            if (self.cameraIsActive) {
                // Stop and deinit the camera
                QCAR::CameraDevice::getInstance().stop();
                QCAR::CameraDevice::getInstance().deinit();
                self.cameraIsActive = NO;
            }
            self.cameraIsStarted = NO;

            // ask the application to stop the trackers
            if(![self stopTrackers]) {
                [self errorWithCode:E_STOPPING_TRACKERS error:&error];
                MSDispatchMain(^{
                    if(blockCopy) blockCopy(NO, error);
                });
                return;
            }

            // ask the application to unload the data associated to the trackers
            if(![self deactivateDataSets]) {
                [self errorWithCode:E_UNLOADING_TRACKERS_DATA error:&error];
                MSDispatchMain(^{
                    if(blockCopy) blockCopy(NO, error);
                });
                return;
            }

            // ask the application to deinit the trackers
            [self deinitTrackers];

            // Pause and deinitialise QCAR
            QCAR::onPause();
            // QCAR::deinit();
            MSDispatchMain(^{
                if(blockCopy) blockCopy(YES, nil);
            });
        });
    }

    // stop the camera
    - (void)stopCamera:(MSImageRecognitionCompletionBlock)block {
        dispatch_async(_dispatchQueue, ^{
            NSError *error = nil;
            MSImageRecognitionCompletionBlock blockCopy = block ? [block copy] : nil;
            if (self.cameraIsActive) {
                // Stop and deinit the camera
                QCAR::CameraDevice::getInstance().stop();
                QCAR::CameraDevice::getInstance().deinit();
                self.cameraIsActive = NO;
            } else {
                [self errorWithCode:E_CAMERA_NOT_STARTED error:&error];
                MSDispatchMain(^{
                    if(blockCopy) blockCopy(NO, error);
                });
                return;
            }
            self.cameraIsStarted = NO;

            // Stop the trackers
            if(![self stopTrackers]) {
                [self errorWithCode:E_STOPPING_TRACKERS error:&error];
                MSDispatchMain(^{
                    if(blockCopy) blockCopy(NO, error);
                });
                return;
            }
            MSDispatchMain(^{
                if(blockCopy) blockCopy(YES, nil);
            });
        });
    }

    // Start QCAR camera with the specified view size
    - (BOOL)startCamera:(QCAR::CameraDevice::CAMERA)camera viewWidth:(float)viewWidth andHeight:(float)viewHeight error:(NSError **)error {
        // initialize the camera
        if (! QCAR::CameraDevice::getInstance().init(camera)) {
            [self errorWithCode:-1 error:error];
            return NO;
        }

        // start the camera
        if (!QCAR::CameraDevice::getInstance().start()) {
            [self errorWithCode:-1 error:error];
            return NO;
        }

        // we keep track of the current camera to restart this
        // camera when the application comes back to the foreground
        mCamera = camera;

        // ask the application to start the tracker(s)
        if(![self startTrackers]) {
            [self errorWithCode:-1 error:error];
            return NO;
        }

        // configure QCAR video background
        [self configureVideoBackgroundWithViewWidth:viewWidth andHeight:viewHeight];

        // Cache the projection matrix
        const QCAR::CameraCalibration& cameraCalibration = QCAR::CameraDevice::getInstance().getCameraCalibration();
        _projectionMatrix = QCAR::Tool::getProjectionGL(cameraCalibration, 2.0f, 5000.0f);
        return YES;
    }

    #pragma mark - Trackers management

    /*!
     * @brief Trying to connect to cloud database
     * @param keys Dictionary that should contains access keys for the cloud DB. Must contain values for MSImageRecognitionCloudAccessKey and MSImageRecognitionCloudSecretKey keys. Cannot be nil.
     * @param completion Completion block.
     */
    - (void)loadCloudTrackerForKeys:(NSDictionary *)keys withCompletion:(MSImageRecognitionCompletionBlock)completion {
        NSParameterAssert(keys[MSImageRecognitionCloudAccessKey] && keys[MSImageRecognitionCloudSecretKey]);
        MSImageRecognitionCompletionBlock blockCopy = completion ? [completion copy] : nil;
        NSError *error = nil;
        if([self initTracker:&error]) {
            [self loadCloudTrackerWithAccessKey:keys[MSImageRecognitionCloudAccessKey]
                                  andPrivateKey:keys[MSImageRecognitionCloudSecretKey]
                                          error:&error];
        }
        MSDispatchMain(^{
            if(blockCopy) blockCopy(error == nil, error);
        });
    }

    - (void)loadBundledDataSets:(NSArray *)dataSetFilesNames withCompletion:(MSImageRecognitionCompletionBlock)completion {
        dispatch_async(_dispatchQueue, ^{
            NSError *error = nil;
            MSImageRecognitionCompletionBlock blockCopy = completion ? [completion copy] : nil;
            if([self initTracker:&error]) {
                _dataSetsCount = dataSetFilesNames.count;
                _dataSets = new QCAR::DataSet*[_dataSetsCount];
                NSInteger idx = 0;
                for(NSString *fileName in dataSetFilesNames) {
                    QCAR::DataSet *dataSet = [self loadBundleDataSetWithName:fileName];
                    if (dataSet == NULL) {
                        NSLog(@"Failed to load datasets");
                        error = [self errorWithCode:E_LOADING_TRACKERS_DATA];
                        break;
                    }
                    if (![self activateDataSet:dataSet]) {
                        NSLog(@"Failed to activate dataset");
                        error = [self errorWithCode:E_LOADING_TRACKERS_DATA];
                        break;
                    }
                    _dataSets[idx++] = dataSet;
                }
                if(error) {
                    if(_dataSets) {
                        delete[] _dataSets;
                    }
                }
            }
            MSDispatchMain(^{
                if(blockCopy) blockCopy(error == nil, error);
            });
        });
    }

    - (BOOL)initTracker:(NSError **)error {
        NSParameterAssert(error);
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        QCAR::Tracker* trackerBase = trackerManager.initTracker(QCAR::ImageTracker::getClassType());
        if (!trackerBase) {
            trackerBase = trackerManager.getTracker(QCAR::ImageTracker::getClassType());
        }
        if (!trackerBase) {
            NSLog(@"Failed to initialize ImageTracker.");
            *error = [self errorWithCode:E_INIT_TRACKERS];
            return NO;
        }
        if(_isCloudRecognition) {
            QCAR::TargetFinder* targetFinder = static_cast<QCAR::ImageTracker*>(trackerBase)->getTargetFinder();
            if (!targetFinder) {
                NSLog(@"Failed to get target finder.");
                *error = [self errorWithCode:E_INIT_TRACKERS];
                return NO;
            }
        }
        NSLog(@"Successfully initialized ImageTracker.");
        return YES;
    }

    - (void)deinitTrackers {
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        trackerManager.deinitTracker(QCAR::ImageTracker::getClassType());
    }

    - (BOOL)startTrackers {
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        QCAR::Tracker* tracker = trackerManager.getTracker(QCAR::ImageTracker::getClassType());
        if(tracker == 0) {
            return NO;
        }
        tracker->start();
        if(_isCloudRecognition) {
            QCAR::ImageTracker* imageTracker = static_cast<QCAR::ImageTracker*>(tracker);
            QCAR::TargetFinder* targetFinder = imageTracker->getTargetFinder();
            assert (targetFinder != 0);
            targetFinder->startRecognition();
        }
        return YES;
    }

    - (BOOL)stopTrackers {
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        QCAR::ImageTracker* imageTracker = static_cast<QCAR::ImageTracker*>(trackerManager.getTracker(QCAR::ImageTracker::getClassType()));
        if (!imageTracker) {
            NSLog(@"ERROR: failed to get the tracker from the tracker manager");
            return NO;
        }
        imageTracker->stop();
        DLog(@"INFO: successfully stopped tracker");
        if(_isCloudRecognition) {
            // Stop cloud based recognition:
            QCAR::TargetFinder* targetFinder = imageTracker->getTargetFinder();
            assert(targetFinder != 0);
            targetFinder->stop();
            DLog(@"INFO: successfully stopped cloud tracker");
        }
        return YES;
    }

    #pragma mark - DataSet loading

    - (BOOL)loadCloudTrackerWithAccessKey:(NSString *)accessKey andPrivateKey:(NSString *)privateKey error:(NSError **)error {
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        QCAR::ImageTracker* imageTracker = static_cast<QCAR::ImageTracker*>(trackerManager.getTracker(QCAR::ImageTracker::getClassType()));
        if (imageTracker == NULL) {
            NSLog(@">doLoadTrackersData>Failed to load tracking data set because the ImageTracker has not been initialized.");
            *error = [self errorWithCode:E_LOADING_TRACKERS_DATA];
            return NO;
        }

        // Initialize visual search:
        QCAR::TargetFinder* targetFinder = imageTracker->getTargetFinder();
        if (targetFinder == NULL) {
            NSLog(@">doLoadTrackersData>Failed to get target finder.");
            *error = [self errorWithCode:E_LOADING_TRACKERS_DATA];
            return NO;
        }

        NSDate *start = [NSDate date];
        // Start initialization:
        if (targetFinder->startInit([accessKey cStringUsingEncoding:NSUTF8StringEncoding], [privateKey cStringUsingEncoding:NSUTF8StringEncoding])) {
            targetFinder->waitUntilInitFinished();
            NSDate *methodFinish = [NSDate date];
            NSTimeInterval executionTime = [methodFinish timeIntervalSinceDate:start];
            NSLog(@"waitUntilInitFinished Execution Time: %f", executionTime);
        }

        int resultCode = targetFinder->getInitState();
        if ( resultCode != QCAR::TargetFinder::INIT_SUCCESS) {
            if (resultCode == QCAR::TargetFinder::INIT_ERROR_NO_NETWORK_CONNECTION) {
                NSLog(@"CloudReco error:QCAR::TargetFinder::INIT_ERROR_NO_NETWORK_CONNECTION");
            } else if (resultCode == QCAR::TargetFinder::INIT_ERROR_SERVICE_NOT_AVAILABLE) {
                NSLog(@"CloudReco error:QCAR::TargetFinder::INIT_ERROR_SERVICE_NOT_AVAILABLE");
            } else {
                NSLog(@"CloudReco error:%d", resultCode);
            }
            int initErrorCode = (resultCode == QCAR::TargetFinder::INIT_ERROR_NO_NETWORK_CONNECTION
                                 ? QCAR::TargetFinder::UPDATE_ERROR_NO_NETWORK_CONNECTION
                                 : QCAR::TargetFinder::UPDATE_ERROR_SERVICE_NOT_AVAILABLE);
            *error = [self cloudRecognitionErrorForCode:initErrorCode];
            return NO;
        } else {
            NSLog(@"cloud target finder initialized");
            return YES;
        }
    }

    - (QCAR::DataSet *)loadBundleDataSetWithName:(NSString *)dataFile {
        DLog(@"loadImageTrackerDataSet (%@)", dataFile);
        QCAR::DataSet * dataSet = NULL;
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        QCAR::ImageTracker* imageTracker = static_cast<QCAR::ImageTracker*>(trackerManager.getTracker(QCAR::ImageTracker::getClassType()));
        if (NULL == imageTracker) {
            NSLog(@"ERROR: failed to get the ImageTracker from the tracker manager");
            return NULL;
        } else {
            dataSet = imageTracker->createDataSet();
            if (NULL != dataSet) {
                DLog(@"INFO: successfully loaded data set");
                // Load the data set from the app's resources location
                if (!dataSet->load([dataFile cStringUsingEncoding:NSASCIIStringEncoding], QCAR::DataSet::STORAGE_APPRESOURCE)) {
                    NSLog(@"ERROR: failed to load data set");
                    imageTracker->destroyDataSet(dataSet);
                    dataSet = NULL;
                }
            } else {
                NSLog(@"ERROR: failed to create data set");
            }
        }
        return dataSet;
    }

    - (BOOL)activateDataSet:(QCAR::DataSet *)theDataSet {
        BOOL success = NO;
        // Get the image tracker:
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        QCAR::ImageTracker* imageTracker = static_cast<QCAR::ImageTracker*>(trackerManager.getTracker(QCAR::ImageTracker::getClassType()));
        if (imageTracker == NULL) {
            NSLog(@"Failed to load tracking data set because the ImageTracker has not been initialized.");
        } else {
            // Activate the data set:
            if (!imageTracker->activateDataSet(theDataSet)) {
                NSLog(@"Failed to activate data set.");
            } else {
                NSLog(@"Successfully activated data set.");
                success = YES;
            }
        }
        return success;
    }

    - (BOOL)deactivateDataSets {
        // Get the image tracker:
        QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
        QCAR::ImageTracker* imageTracker = static_cast<QCAR::ImageTracker*>(trackerManager.getTracker(QCAR::ImageTracker::getClassType()));
        if (imageTracker == NULL) {
            NSLog(@"Failed to unload tracking data set because the ImageTracker has not been initialized.");
            return NO;
        }
        if(_isCloudRecognition) {
            QCAR::TargetFinder* finder = imageTracker->getTargetFinder();
            finder->deinit();
        } else {
            for(int i = 0; i < _dataSetsCount; i++) {
                QCAR::DataSet *dataSet = _dataSets[i];
                if(dataSet != NULL) {
                    if (imageTracker->deactivateDataSet(dataSet)) {
                        DLog(@"Dataset was deactivated succesfullty");
                        if(imageTracker->destroyDataSet(dataSet)) {
                            DLog(@"Dataset was destroyed succesfullty");
                        } else {
                            NSLog(@"Failed to destroy dataset");
                        }
                    } else {
                        NSLog(@"Failed to deactivate data set.");
                    }
                }
            }
        }
        return YES;
    }

    #pragma mark - Camera setup

    // Configure QCAR with the video background size
    - (void)configureVideoBackgroundWithViewWidth:(float)viewWidth andHeight:(float)viewHeight {
        // Get the default video mode
        QCAR::CameraDevice& cameraDevice = QCAR::CameraDevice::getInstance();
        QCAR::VideoMode videoMode = cameraDevice.getVideoMode(QCAR::CameraDevice::MODE_DEFAULT);

        // Configure the video background
        QCAR::VideoBackgroundConfig config;
        config.mEnabled = true;
        config.mSynchronous = true;
        config.mPosition.data[0] = 0.0f;
        config.mPosition.data[1] = 0.0f;

        // Determine the orientation of the view. Note, this simple test assumes
        // that a view is portrait if its height is greater than its width. This is
        // not always true: it is perfectly reasonable for a view with portrait
        // orientation to be wider than it is high. The test is suitable for the
        // dimensions used in this sample
        if (UIInterfaceOrientationIsPortrait(_interfaceOrientation)) {
            // --- View is portrait ---

            // Compare aspect ratios of video and screen. If they are different we
            // use the full screen size while maintaining the video's aspect ratio,
            // which naturally entails some cropping of the video
            float aspectRatioVideo = (float)videoMode.mWidth / (float)videoMode.mHeight;
            float aspectRatioView = viewHeight / viewWidth;

            if (aspectRatioVideo < aspectRatioView) {
                // Video (when rotated) is wider than the view: crop left and right
                // (top and bottom of video)
                // --============--
                // - =          = _
                // - =          = _
                // - =          = _
                // - =          = _
                // - =          = _
                // - =          = _
                // - =          = _
                // - =          = _
                // --============--
                config.mSize.data[0] = (int)videoMode.mHeight * (viewHeight / (float)videoMode.mWidth);
                config.mSize.data[1] = (int)viewHeight;
            } else {
                // Video (when rotated) is narrower than the view: crop top and
                // bottom (left and right of video). Also used when aspect ratios
                // match (no cropping)
                // ------------
                // -          -
                // -          -
                // ============
                // =          =
                // =          =
                // =          =
                // =          =
                // =          =
                // =          =
                // =          =
                // =          =
                // ============
                // -          -
                // -          -
                // ------------
                config.mSize.data[0] = (int)viewWidth;
                config.mSize.data[1] = (int)videoMode.mWidth * (viewWidth / (float)videoMode.mHeight);
            }
        } else {
            // --- View is landscape ---
            float temp = viewWidth;
            viewWidth = viewHeight;
            viewHeight = temp;

            // Compare aspect ratios of video and screen. If they are different we
            // use the full screen size while maintaining the video's aspect ratio,
            // which naturally entails some cropping of the video
            float aspectRatioVideo = (float)videoMode.mWidth / (float)videoMode.mHeight;
            float aspectRatioView = viewWidth / viewHeight;

            if (aspectRatioVideo < aspectRatioView) {
                // Video is taller than the view: crop top and bottom
                // --------------------
                // ====================
                // =                  =
                // =                  =
                // =                  =
                // =                  =
                // ====================
                // --------------------
                config.mSize.data[0] = (int)viewWidth;
                config.mSize.data[1] = (int)videoMode.mHeight * (viewWidth / (float)videoMode.mWidth);
            } else {
                // Video is wider than the view: crop left and right. Also used
                // when aspect ratios match (no cropping)
                // ---====================---
                // -  =                  =  -
                // -  =                  =  -
                // -  =                  =  -
                // -  =                  =  -
                // ---====================---
                config.mSize.data[0] = (int)videoMode.mWidth * (viewHeight / (float)videoMode.mHeight);
                config.mSize.data[1] = (int)viewHeight;
            }
        }

        // Calculate the viewport for the app to use when rendering
        TagViewport viewport;
        viewport.posX = ((viewWidth - config.mSize.data[0]) / 2) + config.mPosition.data[0];
        viewport.posY = (((int)(viewHeight - config.mSize.data[1])) / (int) 2) + config.mPosition.data[1];
        viewport.sizeX = config.mSize.data[0];
        viewport.sizeY = config.mSize.data[1];
        self.viewport = viewport;

        DLog(@"VideoBackgroundConfig: size: %d,%d", config.mSize.data[0], config.mSize.data[1]);
        DLog(@"VideoMode:w=%dh=%d", videoMode.mWidth, videoMode.mHeight);
        DLog(@"width=%7.3f height=%7.3f", viewWidth, viewHeight);
        DLog(@"ViewPort: X,Y: %d,%d Size X,Y:%d,%d", viewport.posX,viewport.posY,viewport.sizeX,viewport.sizeY);

        // Set the config
        QCAR::Renderer::getInstance().setVideoBackgroundConfig(config);
    }

    #pragma mark - Error handling

    // build a NSError
    - (NSError *)errorWithCode:(int) code {
        return [NSError errorWithDomain:MSImageRecognitionSessionErrorDomain code:code userInfo:nil];
    }

    - (void)errorWithCode:(int) code error:(NSError **) error {
        if (error != NULL) {
            *error = [self errorWithCode:code];
        }
    }

    - (NSError *)cloudRecognitionErrorForCode:(int)code {
        NSString *description;
        NSString *suggestion;
        switch (code) {
            case QCAR::TargetFinder::UPDATE_ERROR_NO_NETWORK_CONNECTION:
                description = @"Network Unavailable";
                suggestion = @"Please check your internet connection and try again.";
                break;
            case QCAR::TargetFinder::UPDATE_ERROR_REQUEST_TIMEOUT:
                description = @"Request Timeout";
                suggestion = @"The network request has timed out, please check your internet connection and try again.";
                break;
            case QCAR::TargetFinder::UPDATE_ERROR_SERVICE_NOT_AVAILABLE:
                description = @"Service Unavailable";
                suggestion = @"The cloud recognition service is unavailable, please try again later.";
                break;
            case QCAR::TargetFinder::UPDATE_ERROR_UPDATE_SDK:
                description = @"Unsupported Version";
                suggestion = @"The application is using an unsupported version of Vuforia.";
                break;
            case QCAR::TargetFinder::UPDATE_ERROR_TIMESTAMP_OUT_OF_RANGE:
                description = @"Clock Sync Error";
                suggestion = @"Please update the date and time and try again.";
                break;
            case QCAR::TargetFinder::UPDATE_ERROR_AUTHORIZATION_FAILED:
                description = @"Authorization Error";
                suggestion = @"The cloud recognition service access keys are incorrect or have expired.";
                break;
            case QCAR::TargetFinder::UPDATE_ERROR_PROJECT_SUSPENDED:
                description = @"Authorization Error";
                suggestion = @"The cloud recognition service has been suspended.";
                break;
            case QCAR::TargetFinder::UPDATE_ERROR_BAD_FRAME_QUALITY:
                description = @"Poor Camera Image";
                suggestion = @"The camera does not have enough detail, please try again later";
                break;
            default:
                description = @"Unknown error";
                suggestion = [NSString stringWithFormat:@"An unknown error has occurred (Code %d)", code];
                break;
        }
        return [NSError errorWithDomain:MSImageRecognitionSessionErrorDomain
                                   code:code
                               userInfo:@{NSLocalizedDescriptionKey : description,
                                          NSLocalizedRecoverySuggestionErrorKey : suggestion}];
    }

    #pragma mark - QCAR callback

    - (void) QCAR_onUpdate:(QCAR::State *) state {
        if(_isCloudRecognition) {
            QCAR::TrackerManager& trackerManager = QCAR::TrackerManager::getInstance();
            QCAR::ImageTracker* imageTracker = static_cast<QCAR::ImageTracker*>(trackerManager.getTracker(QCAR::ImageTracker::getClassType()));
            QCAR::TargetFinder* finder = imageTracker->getTargetFinder();

            // Check if there are new results available:
            const int statusCode = finder->updateSearchResults();
            if (statusCode < 0) {
                // Show a message if we encountered an error:
                NSLog(@"update search result failed:%d", statusCode);
                if (statusCode == QCAR::TargetFinder::UPDATE_ERROR_NO_NETWORK_CONNECTION) {
                    //TODO
                }
            } else if (statusCode == QCAR::TargetFinder::UPDATE_RESULTS_AVAILABLE) {
                for (int i = 0; i < finder->getResultCount(); ++i) {
                    const QCAR::TargetSearchResult* result = finder->getResult(i);
                    // Check if this target is suitable for tracking:
                    if (result->getTrackingRating() > 0) {
                        // Create a new Trackable from the result:
                        QCAR::Trackable* newTrackable = finder->enableTracking(*result);
                        if (newTrackable != 0) {
                            // Avoid entering on ContentMode when a bad target is found
                            // (Bad Targets are targets that are exists on the CloudReco database but not on our own book database)
                            NSLog(@"Successfully created new trackable '%s' with rating '%d'.", newTrackable->getName(), result->getTrackingRating());
                            NSString *name = [[NSString alloc] initWithUTF8String:newTrackable->getName()];
                            if(name.length) {
                                DLog(@"recognized image with name: %@", name);
                                MSDispatchMain(^{
                                    self.recognitionBlock(name);
                                });
                            }
                        } else {
                            NSLog(@"Failed to create new trackable.");
                        }
                    }
                }
            }
        } else {
            for (int i = 0; i < state->getNumTrackableResults(); ++i) {
                // Get the trackable
                const QCAR::TrackableResult* result = state->getTrackableResult(i);
                const QCAR::Trackable& trackable = result->getTrackable();
                NSString *name = [[NSString alloc] initWithUTF8String:trackable.getName()];
                if(name.length) {
                    DLog(@"recognized image with name: %@", name);
                    MSDispatchMain(^{
self.recognitionBlock(name); }); } } } } //////////////////////////////////////////////////////////////////////////////// // Callback function called by the tracker when each tracking cycle has finished void VuforiaApplication_UpdateCallback::QCAR_onUpdate(QCAR::State& state) { if (__sharedInstance != nil) { [__sharedInstance QCAR_onUpdate:&state]; } } @end #endif In our ViewController, you first need to load the dataset (a little more about it). To do this, do the following:

    [[MSImageRecognitionSession sharedSession] loadBundledDataSets:[self.class dataSets]
                                                    withCompletion:^(BOOL success, NSError *error) {
        MSImageRecognitionViewController *strongSelf = weakSelf;
        // Read the ivar through strongSelf so the block does not capture self strongly
        if (!strongSelf || !strongSelf->_isAppeared) return;
        [strongSelf onLoadARDoneWithResult:success error:error];
    }];

And the final setup, once everything has loaded:

    - (void)onLoadARDoneWithResult:(BOOL)success error:(NSError *)error {
        if (success) {
            __weak MSImageRecognitionViewController *weakSelf = self;
            [[MSImageRecognitionSession sharedSession] startAR:^(BOOL success, NSError *error) {
                // Go through strongSelf so that the _isAppeared ivar access
                // does not capture self strongly inside the block
                MSImageRecognitionViewController *strongSelf = weakSelf;
                if (!strongSelf) return;
                strongSelf.activityIndicator.hidden = YES;
                strongSelf.cloudRecognitionSwitch.enabled = YES;
                if (!strongSelf->_isAppeared) return;
                if (error) {
                    [strongSelf showAlertWithError:error];
                }
            } recognitionBlock:^(NSString *recognizedName) {
                MSImageRecognitionViewController *strongSelf = weakSelf;
                if (!strongSelf || !strongSelf->_isAppeared) return;
                [MSFlurryAnalytics sendScreenName:kFlurryScreenSuccessRecognition];
                performCompletionBlockWithData(strongSelf.recognizeCompletion, YES, nil, recognizedName);
                [strongSelf.presentingViewController dismissViewControllerAnimated:YES completion:nil];
            }];
        }
        if (error) {
            [self showAlertWithError:error];
            self.activityIndicator.hidden = YES;
            self.cloudRecognitionSwitch.enabled = YES;
            self.view.backgroundColor = nil;
        }
    }

That completes the technical integration. Now let's talk about how the data is organized.

Vuforia cannot use raw pictures as markers. They have to be preprocessed and packed into datasets, which we then put into the application bundle and load. To create a dataset, go to the Vuforia developer portal and open

Develop -> Target Manager -> Create / Select Database.

Then add all the pictures and click Download Database. You will get two files: an xml file with the description and a bin file with the extracted key points.

To link a logo to a team in the database, each logo is tagged with the id of that team in the database. When Vuforia finds a picture, it tells us that it has found a target with such-and-such tag (see the - (void)QCAR_onUpdate:(QCAR::State *)state method in the MSImageRecognitionSession class). We look this tag up in the database and get the team we need.
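The tag-to-team lookup can be sketched roughly as follows. This is not the code from the app: it is a minimal C++ illustration that assumes the tag is the team's numeric id written as a decimal string, and it uses an in-memory map in place of the real database. The names Team, TeamTable, teamIdFromTag and findTeamByTag are hypothetical.

```cpp
#include <string>
#include <unordered_map>

// Hypothetical team record; the real app reads teams from its own database.
struct Team {
    int id;
    std::string name;
};

// In-memory stand-in for the team table, keyed by team id (an assumption).
typedef std::unordered_map<int, Team> TeamTable;

// Parses a target name such as "42" into a team id.
// Returns false for empty, overly long, or non-numeric names.
bool teamIdFromTag(const std::string& tag, int& outId) {
    // Up to 9 decimal digits always fit into a 32-bit int.
    if (tag.empty() || tag.size() > 9) {
        return false;
    }
    int id = 0;
    for (std::string::size_type i = 0; i < tag.size(); ++i) {
        const char c = tag[i];
        if (c < '0' || c > '9') {
            return false;
        }
        id = id * 10 + (c - '0');
    }
    outId = id;
    return true;
}

// Returns the team for a recognized tag, or nullptr when the tag is
// malformed or no team with that id exists.
const Team* findTeamByTag(const TeamTable& teams, const std::string& tag) {
    int id = 0;
    if (!teamIdFromTag(tag, id)) {
        return nullptr;
    }
    const TeamTable::const_iterator it = teams.find(id);
    return it == teams.end() ? nullptr : &it->second;
}
```

With something like this, the name passed to recognitionBlock would go into findTeamByTag, and a null result would simply mean that the recognized target has no matching team, so the app can ignore it.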

That's all for today. Next time I will tell you how we added a Today Extension and a Watch Extension to the application.

Github: github.com/DataArt/FootballClubsRecogniser/tree/master/iOS

AppStore: itunes.apple.com/us/app/football-clubs-recognizer/id658920969?mt=8

Source: https://habr.com/ru/post/256385/
