Storage media: what do we have in 2015, and what should we expect beyond the horizon?

Growing volumes of generated data demand ever more sophisticated ways of storing them, while technological progress makes storage cheaper, which in turn encourages the generation of still more data. The picture is clear: pushed from all sides, storage media keep developing steadily. Research moves in two main directions: better methods of encoding information on the one hand, and better hardware on the other. The most widespread storage technologies today are hard disk drives (HDD) and solid-state drives (SSD).

Hard drives

Manufacturers of classic hard drives continue to invest huge sums in this technology, squeezing more and more performance out of it. The very first hard disk, the IBM 350, weighed about a ton and held 5 MB. Sixty years later, mass-produced drives fit in the palm of your hand and carry an impressive 10 TB. The most advanced technology, Shingled Magnetic Recording (SMR), on which the first announced 10 TB hard drive is based, still has significant room for capacity growth and should make hard drives of up to 20 TB possible in the coming years.

SMR makes more efficient use of the surface of the metal platters inside the hard disk. Instead of spending valuable platter surface on guard gaps that separate neighboring tracks, engineers changed the way the platter is laid out. Tracks are written so that each one partially overlaps the previous one, like shingles on a roof. This raised the recording density by about a quarter, while the production cost of such a "tiled" structure rose only slightly. But once the boundaries were gone, engineers faced a significant drop in the speed at which the drive processes data, and that problem still had to be solved.
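To see why overlapping tracks cost speed, here is a minimal sketch under a simplifying assumption: tracks are grouped into a band, and writing one track damages every track after it in the same band, so those tracks must be rewritten as well. The `ShingledBand` class and the track counts are hypothetical illustrations, not a model of any real drive's firmware.

```python
class ShingledBand:
    """Toy model of one band of overlapping (shingled) tracks.

    Assumption: writing track i clobbers part of track i+1, so every
    later track in the band has to be rewritten after an in-place update.
    """

    def __init__(self, track_count):
        self.tracks = [b""] * track_count
        self.physical_writes = 0  # number of track writes actually performed

    def update(self, index, data):
        # Save the tracks that the new write will damage.
        tail = self.tracks[index + 1:]
        self.tracks[index] = data
        self.physical_writes += 1
        # Rewrite every following track, in order, to restore it.
        for position, saved in enumerate(tail, start=index + 1):
            self.tracks[position] = saved
            self.physical_writes += 1


band = ShingledBand(track_count=8)
band.update(2, b"new data")
print(band.physical_writes)  # 6: one logical write cost six track writes
```

In this toy model, only an update to the last track of a band costs a single write. That is why shingled layouts favor sequential writes over random in-place updates.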

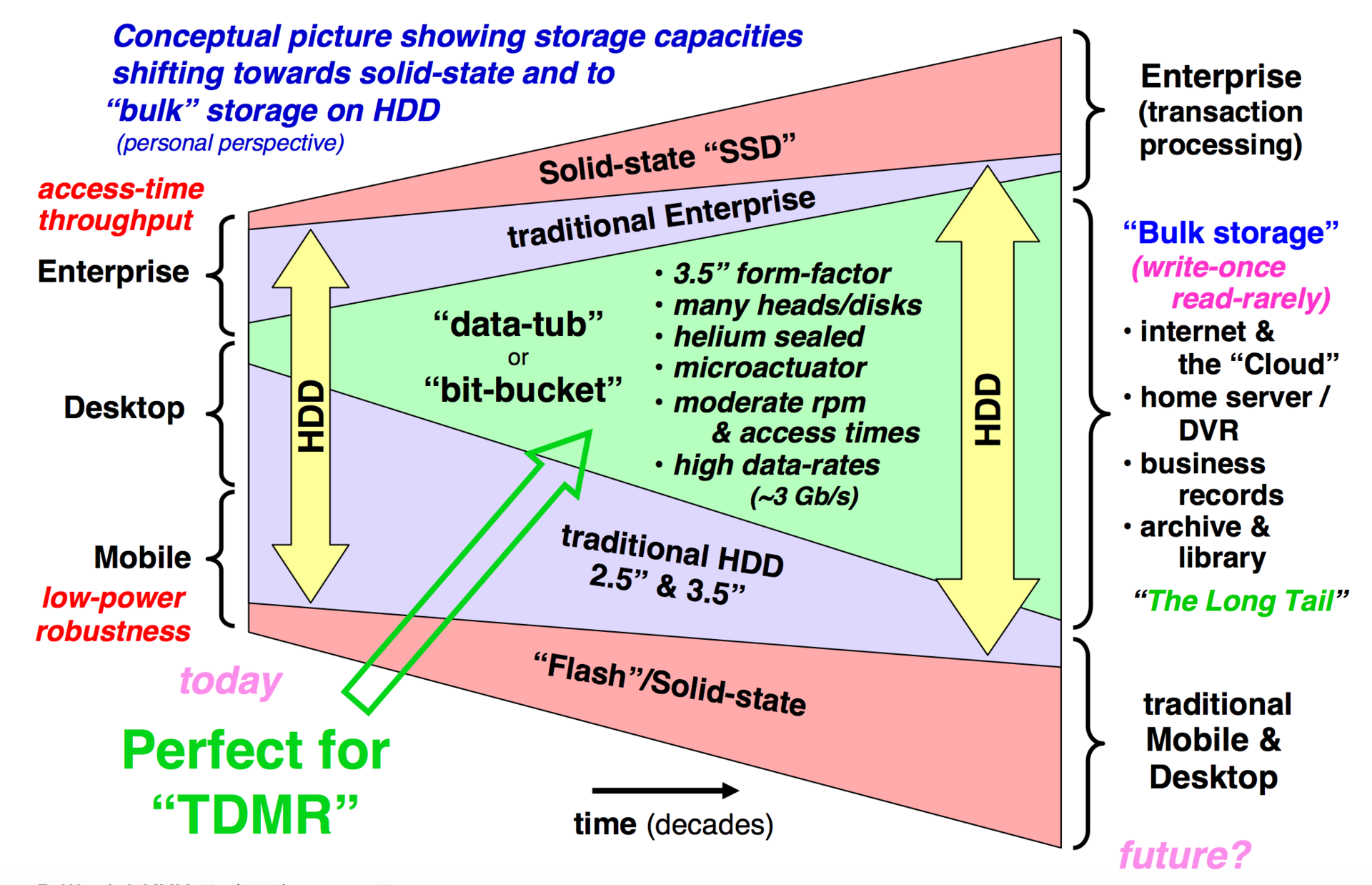

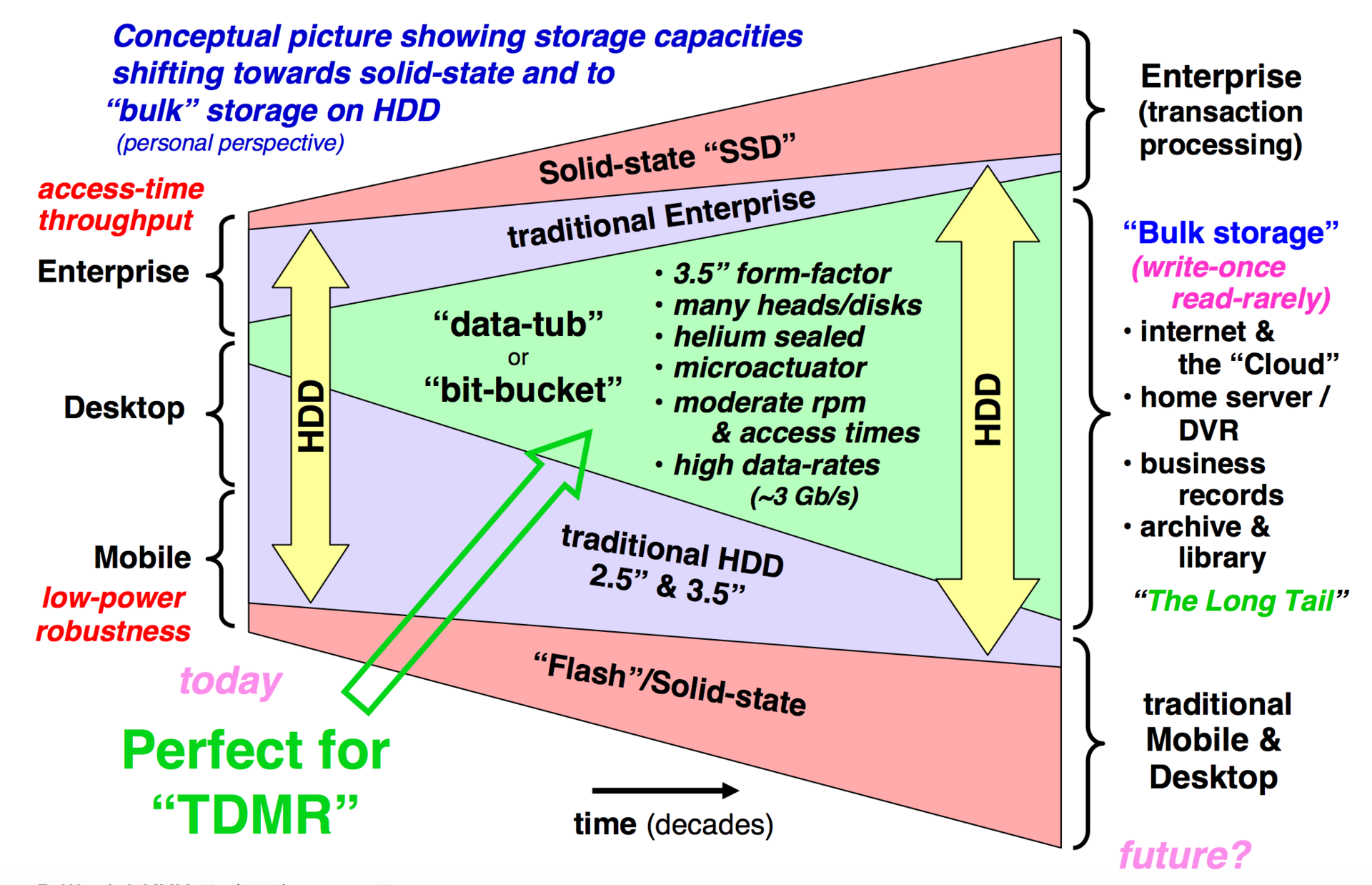

Speaking of SMR, one cannot help but mention another technical trick that made it commercially viable. The insufficient read/write speed of the "tiled" surface was addressed by another advanced development, Two-Dimensional Magnetic Recording (TDMR). Two-dimensional recording removes the problem of a blurred magnetic signal on the disk surface. Once the clear boundaries between adjacent tracks were gone and the tracks partially overlapped, the read head needed more time to obtain an unambiguous result for a given magnetized sector. The solution was to use several read heads. By widening the area over which the magnetization is sensed, the drive obtains more detailed information about a specific sector. After mathematical processing of this more complete picture, the "magnetic noise" from neighboring zones is removed and an unambiguous result is obtained in reasonable time.
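The "mathematical processing" can be pictured with a deliberately simple model. Assume two read heads each pick up a known linear mixture of the target track and its neighbor; the two unknown signals then follow from a 2x2 linear system. The sensitivity values in `MIX` and the function name `separate_tracks` are hypothetical, and real TDMR signal processing is considerably more involved.

```python
def separate_tracks(reading_a, reading_b, mix):
    """Recover (target, neighbor) signals from two overlapping readings.

    mix is a 2x2 matrix of assumed head sensitivities:
      reading_a = mix[0][0] * target + mix[0][1] * neighbor
      reading_b = mix[1][0] * target + mix[1][1] * neighbor
    """
    (a, b), (c, d) = mix
    det = a * d - b * c
    if det == 0:
        raise ValueError("both heads see the same mixture; cannot separate")
    # Cramer's rule for the 2x2 system.
    target = (d * reading_a - b * reading_b) / det
    neighbor = (a * reading_b - c * reading_a) / det
    return target, neighbor


MIX = [[0.8, 0.2], [0.3, 0.7]]  # hypothetical sensitivities of the two heads
true_target, true_neighbor = 1.0, -1.0  # magnetization of two adjacent tracks
reading_a = MIX[0][0] * true_target + MIX[0][1] * true_neighbor
reading_b = MIX[1][0] * true_target + MIX[1][1] * true_neighbor

target, neighbor = separate_tracks(reading_a, reading_b, MIX)
print(round(target, 6), round(neighbor, 6))
```

With a single head there would be one equation and two unknowns, which is the ambiguity the text describes.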

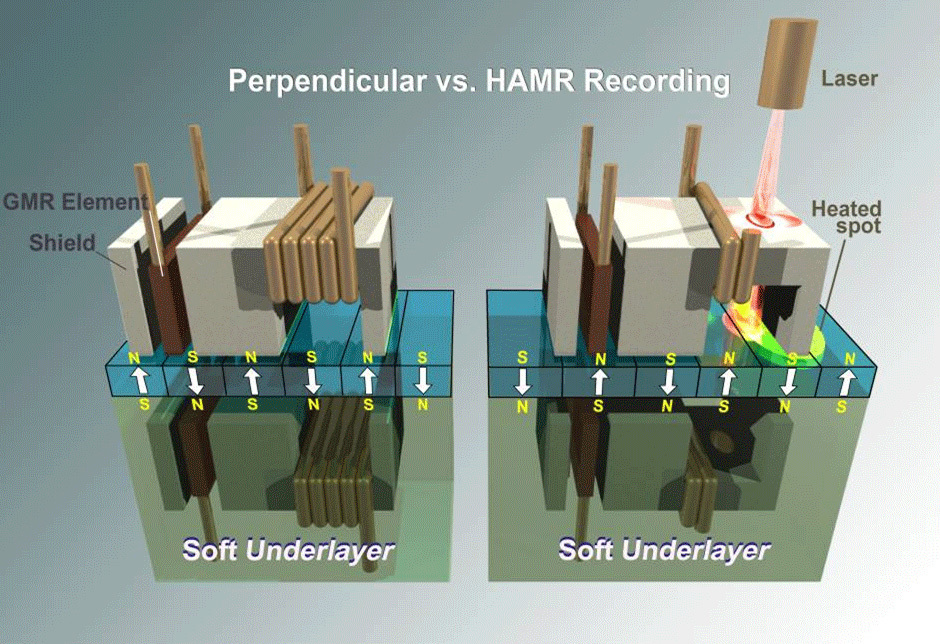

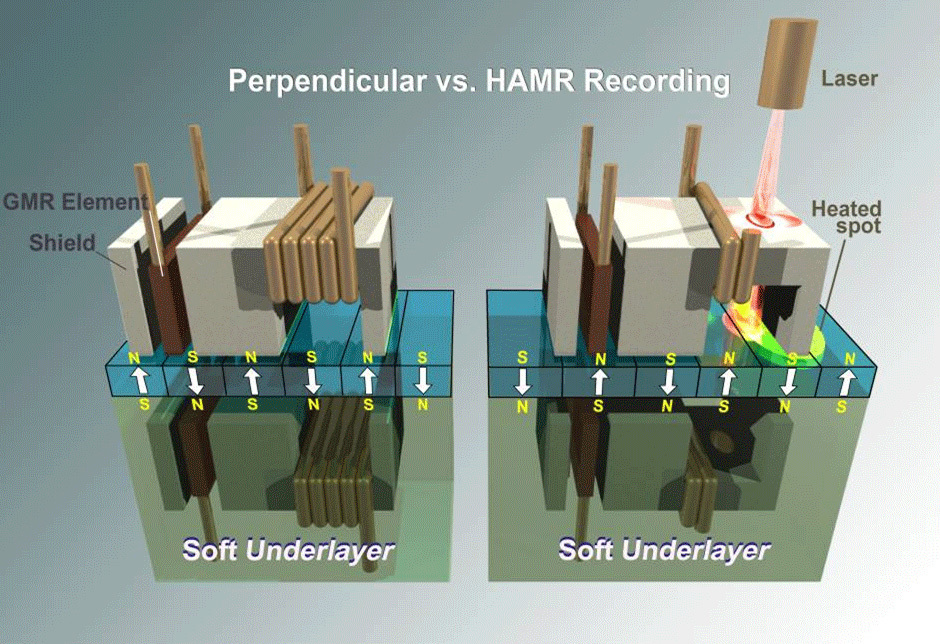

The third technological boost for HDDs was a method that preheats the sector being written, called Heat-Assisted Magnetic Recording (HAMR). A laser is mounted on the write head of the new drive and heats the metal surface immediately before it is magnetized. This sharpens the magnetization, which in turn removes unwanted noise and raises the recording density. Seagate had planned mass production of HAMR drives for 2015, but the date was recently pushed back to 2017; apparently the technology has not gone as smoothly as Seagate first expected.

Meanwhile Hitachi, one of the leading storage manufacturers, took a slightly different path. By replacing the usual air inside the sealed drive enclosure with helium, its engineers significantly reduced the drag of the medium, which made it possible to place the platters closer to one another than before. The result is a higher total capacity in the same external dimensions.

All these innovations keep HDDs very competitive in the IT market. But the technology's underlying problem is becoming more acute. The basic principle of the HDD, magnetic recording of information on platters, has essentially exhausted itself. Even the "tiled" arrangement of rewritable tracks and all the tricks associated with it are unlikely to hold out against increasingly affordable and fast flash technology three to four years from now.

Flash

Over the past two decades we have watched flash memory develop at an ever increasing pace. The emergence of this technology really does resemble a bright flash of light. Many streams of technology from different fields have merged into a single current, the solid-state drive (SSD), and the result is already impressive.

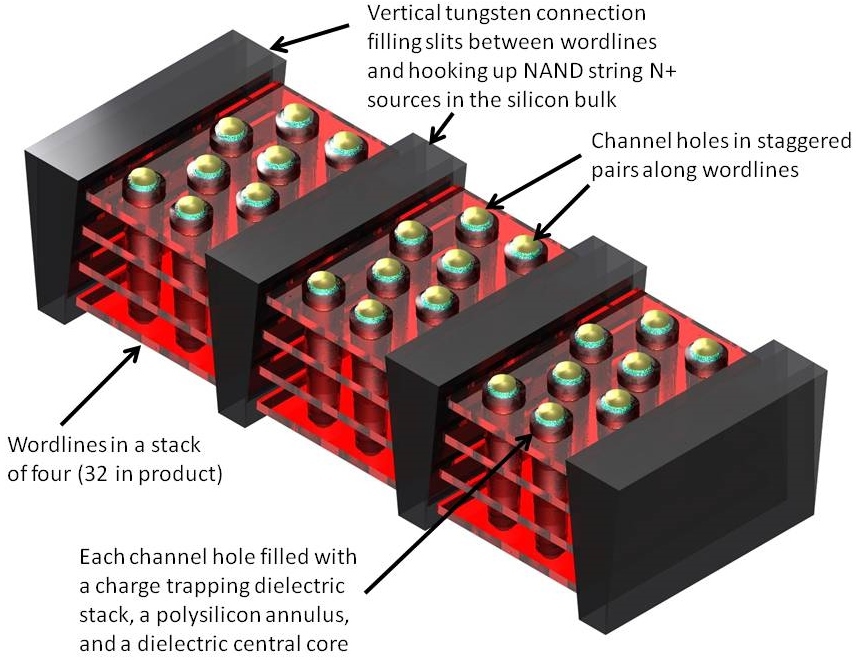

Companies such as Intel and Samsung expect big returns on their investments in 3D NAND technology for SSDs. With it, engineers can arrange flash memory cells not only horizontally but also vertically, forming three-dimensional semiconductor structures. There are already reports of test samples of Samsung 3D NAND chips with up to 24 layers. Intel and its partner Micron predict the release of chips with a capacity of up to 48 GB in late 2015 or early 2016. The proposed chip is to be built with 32-layer 3D NAND and multi-level cells (MLC), which double the amount of information stored in a single cell. Micron's progress with MLC lets its staff claim that very soon they will be able to produce 1 TB SSDs that are extremely compact. Besides compactness, the price should also be quite affordable: according to forecasts from an Intel subsidiary, by 2018 the price of SSD memory in the ultra-thin segment will fall fivefold.

Another technology that should mature in 2015 is triple-level cell (TLC) flash. A flash memory cell is usually thought of as an object that either holds an electric charge or does not, which corresponds to a binary 1 or 0. Engineers looked at the matter from a slightly different angle, set aside this fixed interpretation and asked: what if we consider not just the presence of a charge in a cell, but measure the voltage range it falls into? By assigning a binary code to each voltage range, a cell can still be read in binary while holding more than one bit. The question proved fruitful. Because the materials used support a wide range of voltages, it became possible to divide that range more finely while keeping the signal readable.

MLC, the multi-level cell, has four voltage ranges, corresponding to the binary codes 00, 01, 10 and 11. One such cell is therefore equivalent to two single-level cells; that is, MLC doubles the recording density. Having noted the advantages, we cannot ignore the drawbacks. Chips built this way cost more to produce, and reading and writing must be as precise as possible. And of course the memory cells degrade faster, so the durability of the product drops sharply and the number of sector errors grows.
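Reading such a cell amounts to finding which of the four ranges the measured voltage falls into. The sketch below assumes three evenly spaced threshold voltages and the code order given in the text; both `MLC_THRESHOLDS` and the mapping are hypothetical, since real chips choose their own thresholds and bit assignments.

```python
import bisect

# Hypothetical threshold voltages (in volts) separating the four ranges.
MLC_THRESHOLDS = [1.0, 2.0, 3.0]
# Code order taken from the text; an actual chip may map ranges differently.
MLC_CODES = ["00", "01", "10", "11"]


def read_mlc_cell(voltage):
    """Return the two-bit value stored in a cell with the given voltage."""
    level = bisect.bisect_right(MLC_THRESHOLDS, voltage)
    return MLC_CODES[level]


print([read_mlc_cell(v) for v in (0.4, 1.5, 2.2, 3.7)])
# ['00', '01', '10', '11']
```

The closer a voltage sits to a threshold, the easier it is to misread, which is why the text stresses precision during reads and writes.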

TLC operates with eight voltage levels, covering every combination of 1 and 0 across three bits. It can store 50% more information on the medium than MLC. The problem is that the structure of the medium itself changes more fundamentally than with MLC. A more complex design costs substantially more, and expert estimates suggest it will take at least five years for such a solution to take its place in the market alongside its competitors.
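The figures quoted for MLC and TLC follow from simple arithmetic, sketched here: a cell that stores n bits must distinguish 2^n voltage levels, and going from two bits to three adds half as much again. The label "SLC" for the one-bit cell is the usual industry term and is added here for comparison.

```python
def voltage_levels(bits_per_cell):
    """A cell storing n bits must distinguish 2**n voltage levels."""
    return 2 ** bits_per_cell


for name, bits in (("SLC", 1), ("MLC", 2), ("TLC", 3)):
    print(name, bits, "bit(s) per cell,", voltage_levels(bits), "levels")

# Capacity gain of TLC over MLC for the same number of cells.
gain = (3 - 2) / 2
print(f"TLC stores {gain:.0%} more than MLC")
```

Each extra bit doubles the number of levels packed into the same voltage window, so the margin between neighboring levels shrinks accordingly.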

Drives that combine 3D NAND and TLC are already on sale. A typical example is the Samsung 850 EVO, a 1 TB SSD. Its read/write speed is about 530 MB/s, with more than 90 thousand IOPS. It combines large capacity, performance, acceptable dimensions and reliability (the manufacturer's warranty is 5 years), and the price of this SSD marvel reaches $500.

Experts expect storage manufacturers to focus on improving and optimizing TLC in 2015. The main areas of improvement will be the ways the chips are used and the effort to reduce errors that arise when cells are read and written. One promising way to eliminate errors is a chip developed by engineers at Silicon Motion, which has three logical levels of error suppression.

The first level, Low-Density Parity-Check (LDPC) coding, is a specially designed data encoding method that eliminates many errors at the very first stage. Thanks to its mathematical processing of the data, it detects and corrects write errors with high probability and without any significant loss in performance. The logic of LDPC was worked out as early as the 1960s, but the hardware of the time was too weak to realize its potential. In the 1990s, when the volume of processed data reached a critical level, LDPC's moment came. Having found a place in Wi-Fi, 10-gigabit networks and digital television, the algorithm now serves SSDs as well.
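The idea behind parity-check decoding can be shown on a toy scale. The sketch below uses a small made-up parity-check matrix `H` and hard-decision bit flipping, one of the simplest decoding schemes for such codes. It is an assumption-laden illustration, not a description of Silicon Motion's controller, and real LDPC matrices are far larger and much sparser.

```python
# Toy parity-check matrix: 4 checks over 6 bits, each bit in exactly 2 checks.
# Illustrative only; production LDPC codes use far larger, sparser matrices.
H = [
    [1, 1, 1, 0, 0, 0],
    [1, 0, 0, 1, 1, 0],
    [0, 1, 0, 1, 0, 1],
    [0, 0, 1, 0, 1, 1],
]


def syndrome(word):
    """One parity bit per check; all zeros means every check is satisfied."""
    return [sum(h * b for h, b in zip(row, word)) % 2 for row in H]


def bit_flip_decode(word, max_iterations=10):
    """Repeatedly flip the bit that takes part in the most failed checks."""
    word = list(word)
    for _ in range(max_iterations):
        failed = syndrome(word)
        if not any(failed):
            return word  # every check passes: a valid codeword
        # For each bit, count the failed checks it participates in.
        votes = [
            sum(f for f, row in zip(failed, H) if row[i])
            for i in range(len(word))
        ]
        worst = votes.index(max(votes))
        word[worst] ^= 1
    return None  # gave up: too many errors for this simple decoder


codeword = [1, 1, 0, 1, 0, 0]
received = list(codeword)
received[4] ^= 1  # simulate a single read error
print(syndrome(received))                     # [0, 1, 0, 1]
print(bit_flip_decode(received) == codeword)  # True
```

A single flipped bit fails exactly the two checks it belongs to, while every other bit shares at most one of them, so the vote singles out the damaged bit.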

Besides encoding data, LDPC can also serve as a tool for tracking and adjusting the charge voltages of memory arrays so that TLC works more efficiently. The electrical properties of semiconductors, and of the arrays built from them, change over time. The changes can be short-term, caused by temperature, or long-term, caused by degradation of the material. Drawing on statistical information, the LDPC algorithm helps keep errors from these causes to a minimum.

Layering technologies in this way compensates for most of TLC's drawbacks and makes it more cost-effective. The biggest unresolved problem of TLC-based SSDs remains the short lifetime of the memory cells. Weighing the pros and cons, such drives have become popular with consumers who use them for data that is read often but rarely changed.

The prospects of data carriers in the foreseeable future

At the moment it is hard to see a potential competitor to the combination of flash memory and hard drives. Moreover, no serious work aimed at finding such a competitor is visible either. Competition in the storage market is extremely fierce, which forces hardware manufacturers to work on minimal margins; allocating huge funds to radically new ways of storing information is a luxury they cannot afford. All research is directed at narrower tasks, namely modernizing existing solutions, so there is no reason to expect a revolution in storage media in the foreseeable future.

Of course, technological progress does not stand still, and we will live to see the day when storage technologies fundamentally different from today's are in use everywhere. Whether it will be holographic or polymer memory, phase-change memory, or FeRAM-based devices is not yet clear. What is clear is that all of this lies a decade ahead, because even the most successful development needs at least several years before it arrives triumphantly on store shelves. Until then, we will keep seeing the solutions we already know.

In our hyperactive times it is hard to say with absolute certainty where the whole industry will be in 10 years. Still, judging by the previous decades, one thing can be predicted with confidence. With or without revolutions, storage media will keep moving in the same direction: faster, cheaper, safer, more capacious. Where that path will lead us, time will tell.

Hard drives

Manufacturers of classic "hard drives" continue to invest huge amounts of money in the development of this technology, squeezing more and more performance out of it. Starting its journey from the very first hard disk, weighing a ton and 5 MB in size - IBM 350, after 60 years, mass-ready disks are already ready to be received into the palm of your hand, having an impressive 10 TB on board. The most advanced Shigled Magnetic Recording (SMR) technology, according to which the first announced 10 TB hard drive will be produced, has a significant potential for growth in the amount of data being placed, which will make it possible to get hard drives up to 20 TB in the coming years.

')

This technology is very progressive, its use has allowed more efficient use of the area of the metal plates themselves placed inside the hard disk. Instead of spending the valuable surface of metal discs under the delimiting elements separating the recording sectors, it was decided to take another way of forming the plate itself. Due to the layering of a multitude of balls, the material that will be rewritten, it was possible to increase the efficiency of writing to discs by a quarter, while the cost of production of such a “tiled” structure increased slightly. But having eliminated the boundaries of the sectors, the engineers were faced with the problem of a significant drop in the speed of information processing by the storage unit, which was still to be fought.

Speaking of SMR technology, one cannot help but recall another technical trick, after the application of which it really became commercially successful. It was possible to solve the problem of insufficient read / write speed of the “tiled” surface structure of the disks due to another advanced development - Two-Dimensional Magnetic Recording (TDMR). The system of two-dimensional magnetic recording has eliminated the problem of blurring the magnetic signal deposited on the surface of the disk. The fact is that earlier, by eliminating the clear boundaries between the adjacent sectors of the sectors on the disk, with their partial overlap, the head of the reading mechanism needed to spend more time to obtain an unambiguous result in the determined magnetized sector. The solution was the use of multiple read heads. By expanding the area of registration of the magnetization of the disk, it became possible to obtain more detailed information about a specific sector. After mathematical processing of a more holistic image of the captured data, the engineers eliminated the “magnetic noise” from the neighboring zones and get an unambiguous result in a reasonable time.

The third technological impulse for HDD was the development of a method of recording data with preheating of the sector that can be recorded on the media, this method is called Heat-Assisted Magnetic Recording (HAMR). The recording technique itself provides for the placement on the writing heads of a new “winchester” laser, which will, prior to direct magnetization, heat the metal surface. Thanks to this specific recording method, as a result, the engineers managed to increase the sharpness of the magnetization, which also allowed to get rid of unnecessary noise and increase the recording concentration. Mass production of data carriers using the HAMR principle was previously planned by Seagate for 2015, but recently this date was moved as early as 2017, apparently with the technology not everything is as smooth as it was seen at the beginning to Seagate employees.

In the meantime, Hitachi, one of the leaders among producers of data carriers, went a bit different way. Replacing the familiar air to helium in the hermetic carrier case, the technicians could significantly reduce the viscosity of the medium, and this made it possible to place metal disks closer to one to one than was available before. The result of this decision was the increased capacity of the entire carrier, with the remaining external dimensions.

All the latest innovations make data carriers - HDD very competitive in the IT market. But the problem of this technology is becoming more acute. The very initial principle underlying the HDD - magnetic registration of information on the plates, in fact, has exhausted itself entirely. Even the “tiled” arrangement of the rewritable sectors and all the tricks associated with it are unlikely to be able to successfully withstand the increasingly accessible and productive flash technology in three to four years.

Flush

Over the past two decades, we have been witnessing an ever increasing speed of flash memory development. The emergence of this technology is truly similar to a bright flash of light. Stacked by a multitude of streams - technologies, from different spheres of technological progress, into a single stream of Solid-State Drive (SSD), the result has now become impressive.

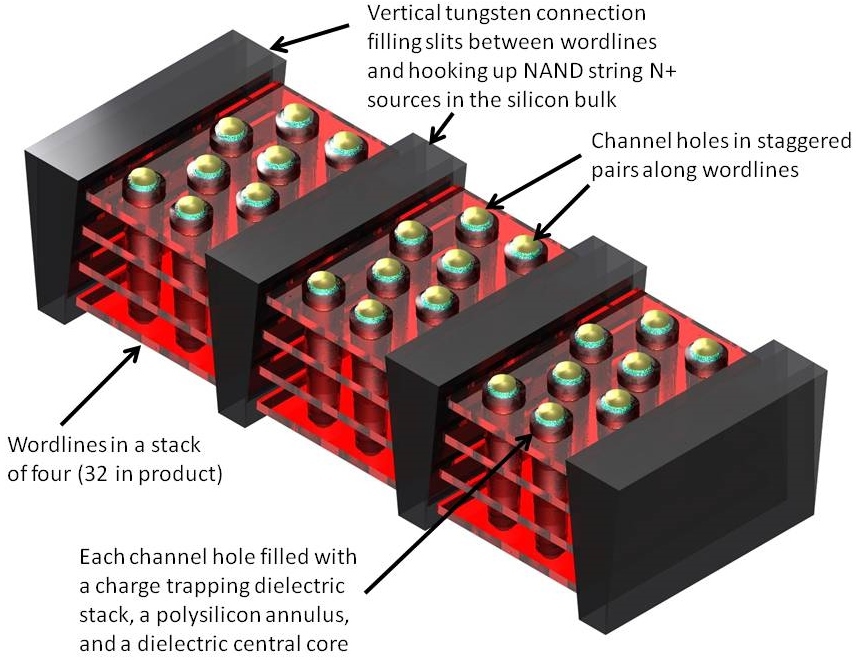

Such companies as Intel and Samsung foresee big dividends from those invested in the SSD-related 3D NAND technology. Thanks to this development, engineers were able to assemble flash memory crystals not only horizontally but also vertically, that is, to form three-dimensional structures from semiconductors. Already there is information about the existence of test samples created by 3D NAND technology from Samsung, in which chips can have up to 24 layers. Intel and its partner Micron predict the release of chips with a capacity of up to 48 GB in late 2015 - early 2016. The proposed chip must be created using 32-depth 3D NAND technology with a multicellular system (MLC), which allows you to double the amount of information carrying one single semiconductor. The successes of Micron engineers in the direction of the development of MLC, give the opportunity to its employees to assert that very soon they will be able to start producing 1 TB of SSD disks, which in this case will become extremely compact. In addition to the advantages of compactness, according to the forecasts of a subsidiary of Intel, the price will also be quite affordable, by 2018 SSD memory, in the superfine segment, it will fall every 5.

Another technology that should ripen in 2015 is the 3-level structure of the flash - TLC. When it comes to flash memory, a memory cell is usually represented as an object with or without electric charge, which in the usual sense equates to binary code 1 / 0. Innovation engineers looked at the matter from a slightly different angle, discarding the fixed interpretation and wondered What if we start to consider not just the presence of a charge in a cell, but to measure the very range of voltage that it carries? By equating a specific value of voltage to one, and any other range of it to zero, the binary system of calculus, it is also possible to easily encode the cells of the data carrier. The correct question has borne fruit. Due to the fact that the materials used support a wide range of voltages, it has become possible to make coarser recording of cells, while maintaining a high readability of the signal.

MLC - this multi-level structure has four voltage ranges, which correspond to binary coding 00/01/10/11 - thus actually being the equivalent of two cells of the first level, that is, the MLC technology allows the recording density to be doubled. Remembering the advantages of technology, it is impossible not to recall its drawbacks. Creating chips in this way is associated with various factors: additional costs during production, the process of writing data reading with this arrangement should be as accurate as possible. And of course the accelerated degradation of memory cells, as a result of which the product durability will go to the bottom, and the number of sector errors that occur will increase.

TLC is capable of operating with eight voltage levels, combining combinations of 1/0 on all three levels. This technology is able to increase the amount of information placed on the media by 50% more than MLC. The problem here lies in the fact that the structure of the carrier itself undergoes more fundamental structural changes than the MLC. A more complex product design carries with it a substantially greater cost, and in order for such a solution to adequately take its place in the market alongside its competitors, according to expert estimates, it must take at least five years.

At the moment, the samples of drives created on the basis of the combination of NAND 3D and TLC technologies are already on sale. A typical example is the SSD drive with a volume of 1 TB Samsung 850 EVO. The write / read speed of the media is about 530 MB / s, while the number of IOPS is more than 90 thousand. Combining large volume, performance, acceptable dimensions, reliability (manufacturer’s warranty on the product is 5 years), the price of a SSD miracle reaches $ 500.

Experts expect that in 2015, manufacturers of data carriers will focus on the improvement and optimization of TLC technology. The main points of improvement TLC will have to be ways to use chips and the struggle to reduce errors arising in the process of reading / writing cells. One of the promising ways to eliminate errors can be a chip developed by engineers at Silicon Motion, which has three logical levels of suppressing ambiguities.

The first level of Low Density Parity Check (LDPC) is a specially developed method of data coding, thanks to which at the very first stage it will be possible to eliminate a lot of errors. Due to the mathematical algorithm of data processing, it will probably be guaranteed to detect and eliminate write errors without any special loss in performance. The logic of the LDPC system itself was developed as early as the 1960s, but due to the weakness of technical means, it was not possible to fully realize its potential. In the 1990s, when the volume of data processed reached a certain, critical level, the high point came for the LDPC. Having found himself in the networks of WI-FI, 10 GB networks, digital television - the algorithm continued its service for the benefit of SSD.

In addition to data coding, LDPC can also become a tool for tracking and adjusting the voltage of electric charging of memory arrays, for more efficient operation of TLC. Electrotechnical properties of semiconductors, and arrays formed by them, over time, undergo some changes. Changes can be both short-term, associated with temperature, and long-term, associated with the degradation of the material. Based on statistical information, the LDPC algorithm helps to reduce the occurrence of errors, for the reasons given above, to a minimum.

Such a layering of technologies makes it possible to compensate most of the minuses of TLC technology and makes it more cost-effective. The most unresolved problem of SSD carriers manufactured using TLC technology is still the short lifetime of the recording cells. Considering the pros and cons, such discs have become highly demanded for consumers, that they use this kind of memory for information, which is often accessed and does not undergo any special changes.

The prospects of data carriers in the foreseeable future

At the moment it is difficult to see a potential competitor a bunch of flash memory and hard drives. Moreover, even serious works aimed at finding this competitor (s) are not visible. In the data carrier market, there is an extremely large rivalry, which forces the manufacturers of equipment to work with a minimum margin, while allocating huge funds to develop radically new ways of storing information that is impermissible luxury. All scientific progress is aimed at more local tasks - modernization of existing solutions, as a result, there is no need to talk about the revolution of data carriers in the foreseeable future.

Obviously, the progress of technology does not stand still, and of course we will live to see the day when data storage technologies, which are essentially canceled from existing ones, will be presented everywhere. Will it be holographic or polymer memory using a phase transition, or it will be FeRAM-based samples that are not clear now. One thing is clear, that this is all the perspective of the next decade, because you shouldn’t forget that even the most successful development should take place at least several years before it appears triumphant on the shelves of stores. Accordingly, all this time we will observe the solutions we are already familiar with.

Although it is difficult, with absolute certainty, in our hyperactive time to say where the whole industry will be in 10 years. At the same time, based on the decades before, something can be foreseen with complete confidence. With or without revolutions, the future of data carriers will move in a single key: faster, cheaper, safer, more spacious, where this path will lead us, time will tell.

Source: https://habr.com/ru/post/255925/
