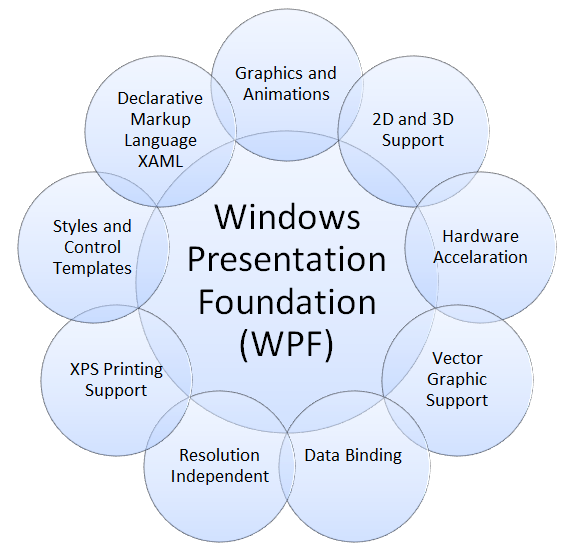

A Deep Dive into the WPF Rendering System

I was prompted to translate this article by the discussion around the posts “Why is WPF alive?” and “Seven years of WPF: what has changed?” The original article was written in 2011, when Silverlight was still alive, but the information about WPF has not lost its relevance.

At first I did not want to publish this article. It seemed impolite: of the dead, one should speak well or not at all. But a few conversations with people whose opinion I value made me change my mind. Developers who have invested heavily in the Microsoft platform need to know how it works internally so that, when they hit a dead end, they can understand the cause and give the platform's developers more precise feedback. I think WPF and Silverlight are good technologies, but ... If you have followed my Twitter over the past few months, some of my comments may have looked like baseless attacks on the performance of WPF and Silverlight. Why did I write this? After all, I have invested thousands and thousands of hours of my own time over many years promoting the platform, developing libraries, helping community members, and so on. I definitely have a personal stake. I want the platform to get better.

Performance, Performance, Performance

When you develop an engaging, user-friendly interface, performance matters most. Without it, everything else is meaningless. How many times have you had to simplify an interface because it lagged? How many times have you come up with a “new, revolutionary user interface model” that you had to throw away because the existing technology could not deliver it? How many times have you told clients that they need a 2.4 GHz quad-core processor to run the application smoothly? Customers have repeatedly asked me why, with WPF and Silverlight, they cannot get the same smooth interface as in an iPad application, even on a PC four times as powerful. These technologies may be suitable for business applications, but they are clearly not suitable for next-generation consumer applications.

But WPF uses hardware acceleration. Why do you think it is inefficient?

WPF does use hardware acceleration, and some aspects of its internal implementation are very good. Unfortunately, it uses the GPU far less efficiently than it could. The WPF rendering system relies heavily on brute force. I hope to justify this statement below.

Analyzing a single WPF rendering pass

To analyze performance, we need to understand what is actually happening inside WPF. For this I used PIX, the Direct3D profiler that ships with the DirectX SDK. PIX launches your D3D application and hooks all Direct3D calls so that they can be analyzed and monitored.

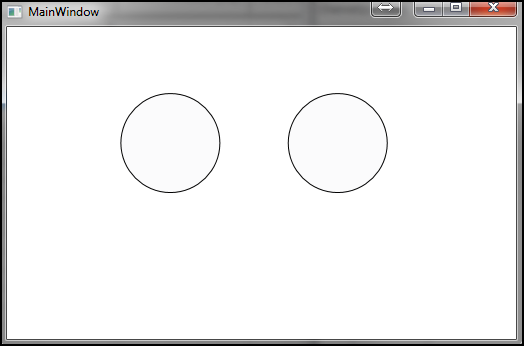

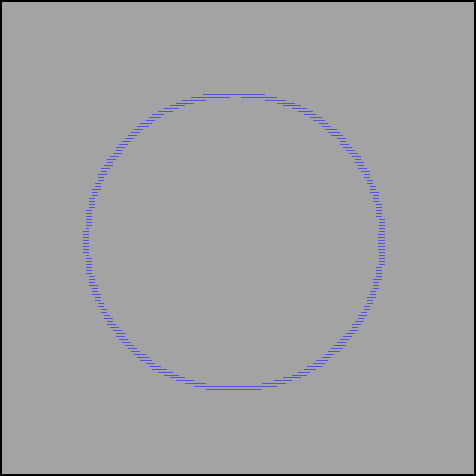

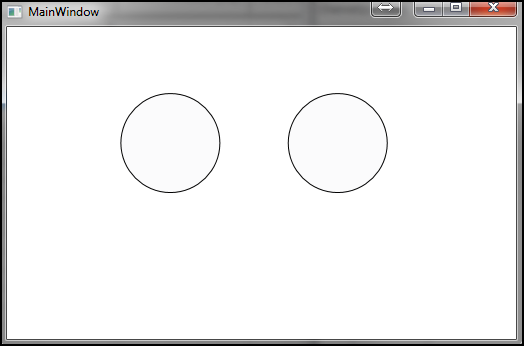

I created a simple WPF application that displays two ellipses side by side. Both ellipses have the same fill color (#55F4F4F5) and a black outline.

And how does WPF render it?

First of all, WPF clears (to #ff000000) the dirty region it is going to redraw. Dirty regions reduce the number of pixels sent to the output merger stage of the GPU pipeline. We can even assume that they reduce the amount of geometry that has to be re-tessellated; more on this later. After the dirty region is cleared, our frame looks like this:

After that, WPF does something puzzling. First it fills a vertex buffer, then it draws what looks like a rectangle over the dirty region. Now the frame looks like this (exciting, isn't it?):

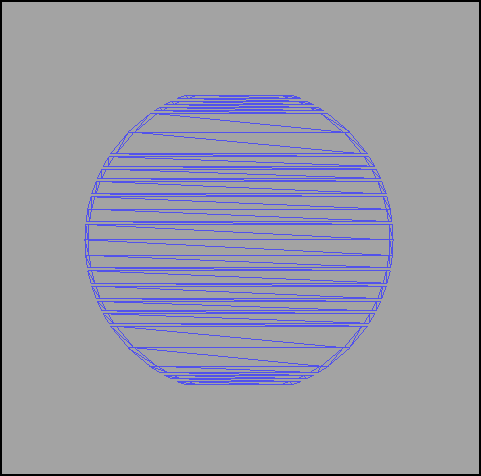

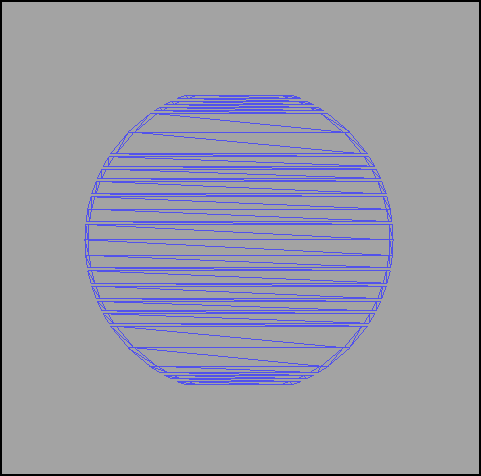

After that, it tessellates an ellipse on the CPU. Tessellation, as you may already know, is the conversion of the geometry of our 100x100 ellipse into a set of triangles. This is done for two reasons: 1) triangles are the natural rendering primitive for a GPU, and 2) tessellating an ellipse may produce only a few hundred triangles, which is much faster than rasterizing 10,000 antialiased pixels on the CPU (which is what Silverlight does). The screenshot below shows what the tessellation looks like. Readers familiar with 3D graphics may have noticed that these are triangle strips. Note that in the tessellation the ellipse looks unfinished. As the next step, WPF takes the tessellation, loads it into a GPU vertex buffer, and issues another draw call with a pixel shader set up to apply the “brush” configured in XAML.

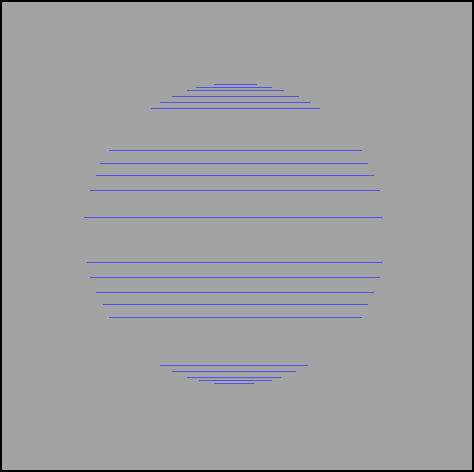

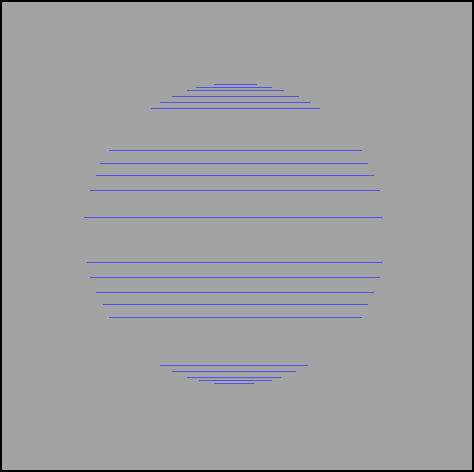
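To make the idea of CPU tessellation concrete, here is a minimal Python sketch that approximates an ellipse with a fan of triangles around its center. It is not WPF's tessellator: the capture shows triangle strips, and the article does not describe the actual algorithm. The function names and the segment count are assumptions chosen for illustration.

```python
import math

def tessellate_ellipse(cx, cy, rx, ry, segments=64):
    """Approximate an ellipse with a fan of triangles around its center.

    Returns a list of triangles, each a tuple of three (x, y) vertices.
    """
    if segments < 3:
        raise ValueError("need at least 3 segments")
    # Points on the ellipse boundary, evenly spaced in parameter angle.
    rim = [
        (cx + rx * math.cos(2 * math.pi * i / segments),
         cy + ry * math.sin(2 * math.pi * i / segments))
        for i in range(segments)
    ]
    center = (cx, cy)
    # Each triangle joins the center with two neighboring rim points.
    return [(center, rim[i], rim[(i + 1) % segments]) for i in range(segments)]

def triangle_area(tri):
    """Area of a triangle given as three (x, y) vertices."""
    (x0, y0), (x1, y1), (x2, y2) = tri
    return abs((x1 - x0) * (y2 - y0) - (x2 - x0) * (y1 - y0)) / 2

# The 100x100 ellipse from the test application (radii of 50).
triangles = tessellate_ellipse(50, 50, 50, 50, segments=64)
covered = sum(triangle_area(t) for t in triangles)
exact = math.pi * 50 * 50
print(len(triangles), "triangles cover", f"{covered / exact:.2%}", "of the ellipse")
```

Even this crude fan covers almost the whole shape with 64 triangles, which is why handing triangles to the GPU is so much cheaper than rasterizing every pixel on the CPU.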

Remember that I pointed out the ellipse looked unfinished? It really is. WPF then generates what Direct3D programmers know as a “line list”. The GPU understands lines just as well as triangles. WPF fills the vertex buffer with these lines and, guess what? That's right: it performs another draw call! The set of lines looks like this:

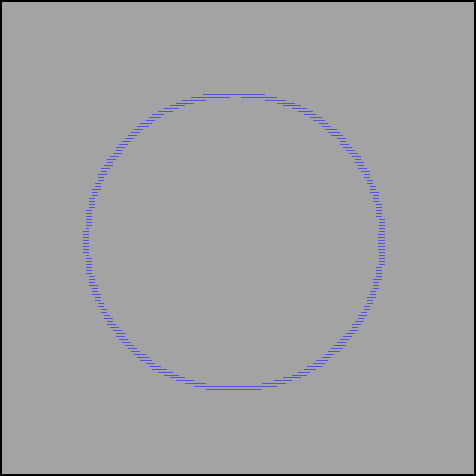

Now WPF has finished drawing the ellipse, right? No! You forgot about the outline! The outline is also a collection of lines. It too is written to the vertex buffer, and another draw call is issued. The outline looks like this:

At this point we have drawn one ellipse, so our frame looks like this:

The entire procedure has to be repeated for each ellipse in the scene. In our case, twice.

I don't get it. Why is this bad for performance?

The first thing you may have noticed is that rendering one ellipse took three draw calls and two vertex buffer locks. To explain why this approach is inefficient, I need to say a little about how the GPU works. To begin with, modern GPUs are VERY FAST and run asynchronously with respect to the CPU. Some operations, however, involve expensive user-mode to kernel-mode transitions. A vertex buffer has to be locked before it can be filled. If the GPU is using the buffer at that moment, the lock forces the GPU and CPU to synchronize, which drastically reduces performance. WPF creates its vertex buffer with D3DUSAGE_WRITEONLY | D3DUSAGE_DYNAMIC, but when it locks the buffer (which happens often) it does not use D3DLOCK_DISCARD. This can stall the pipeline (a GPU/CPU synchronization) whenever the buffer is still in use by the GPU. With a large number of draw calls, we are very likely to get many kernel-mode transitions and a heavy load in the driver. To improve performance, we need to send as much work to the GPU at once as possible; otherwise the CPU will be busy while the GPU sits idle. And do not forget that this example covered only one frame. A typical WPF interface tries to render 60 frames per second! If you have ever tried to find out why your render thread uses so much CPU, you most likely found that most of the load comes from your GPU driver.
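A back-of-the-envelope sketch in Python shows how quickly this adds up. It uses the per-ellipse figures from the capture (three draw calls, two vertex buffer locks) and the 60 frames per second target. The function name is hypothetical, the clear and the background rectangle are left out, and every ellipse is assumed to be dirty in every frame (for example, because all of them are animated).

```python
DRAW_CALLS_PER_ELLIPSE = 3   # fill, line list, outline (from the PIX capture)
VB_LOCKS_PER_ELLIPSE = 2     # vertex buffer locks, as counted in the article
TARGET_FPS = 60

def calls_per_second(ellipses, fps=TARGET_FPS):
    """Return (draw calls, vertex buffer locks) issued per second."""
    draws = ellipses * DRAW_CALLS_PER_ELLIPSE * fps
    locks = ellipses * VB_LOCKS_PER_ELLIPSE * fps
    return draws, locks

for n in (2, 100, 1000):
    draws, locks = calls_per_second(n)
    print(f"{n:5d} ellipses: {draws:7d} draw calls/s, {locks:7d} locks/s")
```

Each of those calls is a potential trip through the driver, so the CPU cost grows linearly with the number of shapes on screen.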

And what about Cached Composition? Surely it improves performance!

Without a doubt, it does. Cached Composition, or BitmapCache, caches objects in a GPU texture. This means that the CPU does not need to re-tessellate and the GPU does not need to re-rasterize. During a rendering pass, WPF simply uses the texture already in video memory, which improves performance. Here is the BitmapCache ellipse:

But WPF has a dark side here too. It performs a separate draw call for each BitmapCache. I will not lie: sometimes you really do need a draw call to render a single visual. It happens. But imagine a scenario in which we have a <Canvas /> with 300 animated BitmapCached ellipses. A smarter system would realize that it needs to render 300 textures and that they are all z-ordered one after another. It would then batch them as large as it could; as I recall, DX9 can take up to 16 sampler inputs at a time. In that case we would get about 19 draw calls (300 / 16, rounded up) instead of 300, which would noticeably reduce the load on the CPU. At 60 frames per second, we would go from 18,000 draw calls per second to roughly 1,140. In Direct3D 10 the number of inputs is much higher.
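The batching arithmetic can be checked with a few lines of Python. The limit of 16 textures per draw call is the author's recollection of the DX9 sampler limit and is treated here as an assumption; the function names are hypothetical. With whole batches, 300 / 16 = 18.75 rounds up to 19 draw calls per frame.

```python
import math

def batched_draw_calls(visuals, textures_per_batch=16):
    """Draw calls needed when up to `textures_per_batch` cached textures
    are submitted per call instead of one call per visual."""
    return math.ceil(visuals / textures_per_batch)

def batch_by_z_order(visuals, textures_per_batch=16):
    """Group visuals (already sorted by z-order) into consecutive batches."""
    return [visuals[i:i + textures_per_batch]
            for i in range(0, len(visuals), textures_per_batch)]

FPS = 60
unbatched = 300                      # one draw call per BitmapCached visual
batched = batched_draw_calls(300)    # 19 batches of at most 16 textures
print(unbatched * FPS, batched * FPS)  # 18000 1140
```

Batching consecutive visuals keeps the z-order intact, because each batch still contains only neighbors in the draw order.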

Okay, I've read this far. Tell me how WPF uses pixel shaders!

WPF has an extensible pixel shader API and some built-in effects. This lets developers add truly unique effects to their user interface. When applying a shader to an existing texture, Direct3D code typically uses an intermediate render target ... after all, you cannot sample from the texture you are writing to! WPF does this too, but unfortunately it creates a brand-new texture EVERY FRAME and destroys it when it is done. Creating and destroying GPU resources is one of the slowest things you can do per frame. I usually would not do this even with system memory allocations of a similar size. Reusing these intermediate surfaces could yield a very significant performance gain. If you have ever wondered why your hardware-accelerated shaders put a significant load on the CPU, now you know the answer.
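The reuse the author has in mind can be sketched as a small pool of intermediate surfaces. This is a toy Python model, not WPF or Direct3D code: `Surface` stands in for a GPU texture, and `RenderTargetPool` and `render_frames` are hypothetical names. It only counts how many surfaces get created.

```python
class Surface:
    """Stand-in for a GPU texture; creating one is the expensive step."""
    created = 0

    def __init__(self, width, height):
        Surface.created += 1
        self.size = (width, height)

class RenderTargetPool:
    """Hands out intermediate surfaces and keeps released ones for reuse."""

    def __init__(self):
        self._free = {}  # (width, height) -> list of idle surfaces

    def acquire(self, width, height):
        idle = self._free.get((width, height))
        if idle:
            return idle.pop()          # reuse an idle surface, no allocation
        return Surface(width, height)  # first use of this size

    def release(self, surface):
        self._free.setdefault(surface.size, []).append(surface)

def render_frames(frames, pool=None):
    """Apply one effect per frame; return how many surfaces were created."""
    Surface.created = 0
    for _ in range(frames):
        if pool is None:
            target = Surface(256, 256)   # allocate a new target every frame
            del target                   # and throw it away again
        else:
            target = pool.acquire(256, 256)
            pool.release(target)
    return Surface.created

# Ten seconds at 60 frames per second, without and with a pool.
print(render_frames(600), render_frames(600, RenderTargetPool()))  # 600 1
```

A real pool would also have to cope with resized windows, differing pixel formats, and lost devices, but the allocation count shows where the saving comes from.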

But maybe this is simply how vector graphics have to be rendered on a GPU?

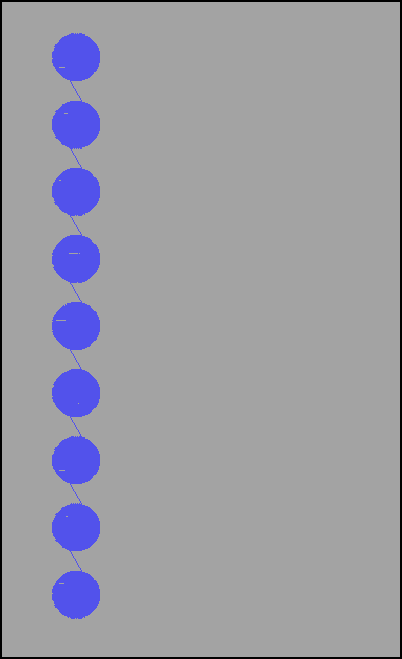

Microsoft has put a lot of effort into fixing these problems; unfortunately, it did so not in WPF but in Direct2D. Look at this group of 9 ellipses rendered by Direct2D:

Remember how many draw calls WPF needed to render a single ellipse with an outline? And the vertex buffer locks? Direct2D does all of this in ONE draw call. The tessellation looks like this:
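One way to get from many draw calls to one is to merge the geometry of all shapes into a single vertex and index buffer before submitting it. The Python sketch below illustrates only that idea. It is not Direct2D's implementation, it covers the fills but not the outlines, and `ellipse_fan`, `merge_meshes`, and the fan tessellation are assumptions made for illustration.

```python
import math

def ellipse_fan(cx, cy, rx, ry, segments=32):
    """Return (vertices, indices) for an ellipse as an indexed triangle list."""
    vertices = [(cx, cy)] + [
        (cx + rx * math.cos(2 * math.pi * i / segments),
         cy + ry * math.sin(2 * math.pi * i / segments))
        for i in range(segments)
    ]
    indices = []
    for i in range(segments):
        # center, rim point i, next rim point (wrapping around)
        indices += [0, 1 + i, 1 + (i + 1) % segments]
    return vertices, indices

def merge_meshes(meshes):
    """Concatenate meshes into one vertex/index buffer pair."""
    all_vertices, all_indices = [], []
    for vertices, indices in meshes:
        base = len(all_vertices)             # offset for this mesh's indices
        all_vertices.extend(vertices)
        all_indices.extend(base + i for i in indices)
    return all_vertices, all_indices

# A 3x3 grid of ellipses merged into a single buffer (one draw call).
meshes = [ellipse_fan(60 + 120 * col, 60 + 120 * row, 50, 40)
          for row in range(3) for col in range(3)]
vertices, indices = merge_meshes(meshes)
print(len(meshes), "ellipses,", len(vertices), "vertices,",
      len(indices) // 3, "triangles in one buffer")
```

The index offset (`base`) is the only bookkeeping needed: each shape keeps its own triangles, but the GPU sees one buffer and one submission.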

Direct2D tries to draw as much as possible at a time, maximizing GPU usage and minimizing CPU usage. Read “Insights: Direct2D Rendering” at the end of this page; Mark Lawrence explains many of the internal details of Direct2D there. You may notice that, for all of Direct2D's speed, there are still more areas that could be improved in a second version. It is possible that version 2 of Direct2D will use DX11 hardware tessellation.

Looking at the Direct2D API, one can assume that a significant part of the code was taken from WPF. Watch this old video about Avalon, in which Michael Wallent talks about developing a GDI replacement based on this technology. It has a very similar geometry API and terminology. Internally it is similar, but heavily optimized and modern.

What about Silverlight?

I could dig into Silverlight as well, but that would be redundant. Rendering performance in Silverlight is also poor, but for different reasons. It renders on the CPU (even shaders, which, as far as I remember, are partly written in assembler), and the CPU is at least 10-30 times slower than the GPU at this work. This leaves far less processor power for rendering the user interface and even less for your application logic. Its hardware acceleration is very rudimentary and almost exactly mirrors WPF's Cached Composition, behaving the same way: it makes a draw call for each BitmapCached visual.

And what should we do now?

Clients who have run into problems with WPF and Silverlight ask me this question very often. Unfortunately, I do not have a definite answer. Those who can, build their own frameworks tailored to their specific needs. The rest have to live with it, since there are no alternatives to WPF and SL in their niches. Clients who are just developing business applications do not have many speed problems and simply enjoy the developer productivity. The real problems are for those who want to build really interesting interfaces (that is, consumer apps or kiosk apps).

After I started this translation, news appeared about planned performance optimizations and the use of DX10-11 in WPF 4.6. From the news, it is not entirely clear whether the problems described in this article will be solved.

Original article: A Critical Deep Dive into the WPF Rendering System

Source: https://habr.com/ru/post/255683/
