Microsoft hackathon "IoT - Internet of Things" in Nizhny Novgorod

Reason to start

I want to tell you about the hackathon that Microsoft and Intel held in Nizhny Novgorod as part of the Microsoft Developer Tour technology expedition. A firsthand account, so to speak, from a participant. I think that makes it the most interesting.

The topic of the hackathon in Nizhny Novgorod was IoT, the Internet of Things. To be honest, the term was new to me and I had to google it to understand the basic principles. It all turned out to be quite simple: there is a device that collects information from some sensors and sends it to the Internet for access and processing.

I learned that the tour would include a hackathon shortly before the event. The announcement said that participants would need to build something using the Intel Galileo board.

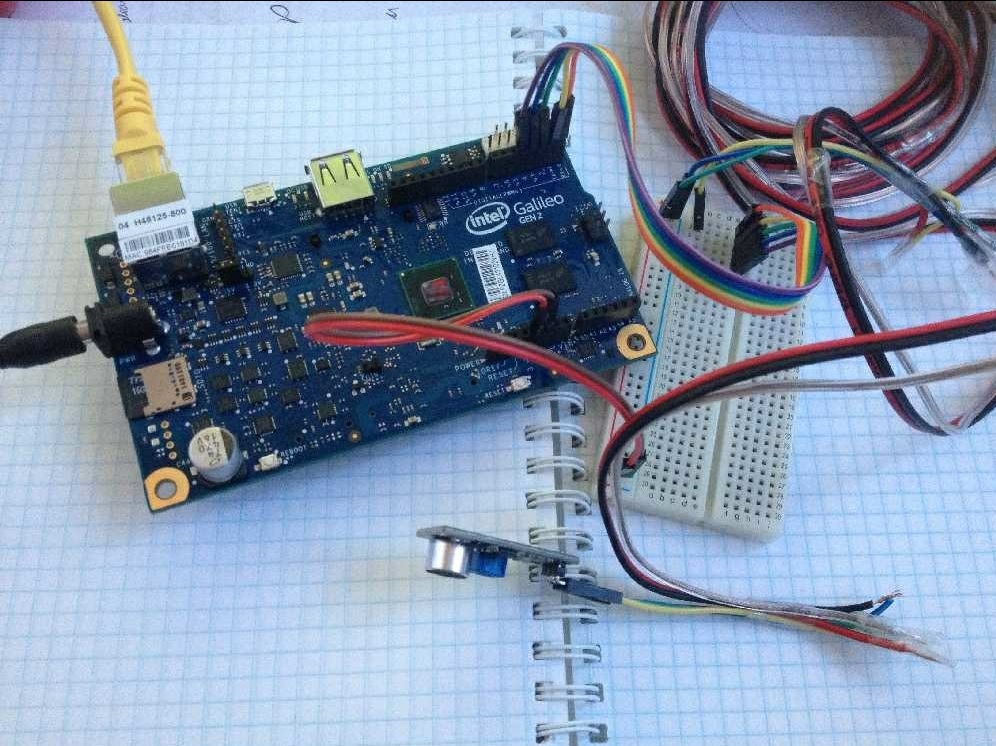

And it coincided nicely: about a month earlier I had attended an Intel conference dedicated to Intel Galileo and Intel Edison, where I was given an Intel Galileo Gen 2 board. Thank you! But I never got around to it, and it just lay on the table. Of course, I ordered sensors and shields for it from a well-known Chinese website, but they still had not arrived.


A few words about Intel Galileo and Intel Edison. These are boards whose pin headers are compatible with the Arduino Uno R3. You can plug standard sensors and shields into them, and there are development tools for running Arduino sketches. One may then ask why these boards are needed if they cost more and consume more power. I confess that I thought so too at first. But upon reflection, I came to the conclusion that these boards have a completely different purpose. Compare an Arduino at 16 MHz with the Intel Galileo Gen 2 at 400 MHz, and 2 KB of RAM against 256 MB of DDR3. The Intel boards also have a built-in 100 Mbit network interface and run Linux. There is no point in comparing them; they are for different tasks.

Read more here: habrahabr.ru/company/intel/blog/248279

In short, I had an Intel Galileo Gen 2 board that just lay in a box. I had no experience with this board, nor with Arduino. And then came the hackathon.

A few days before the hackathon, I decided to put Linux on my board following these instructions: habrahabr.ru/company/intel/blog/248893

I connected to the board via SSH over the local network. It's normal Linux, nice. Everything is fine, everything works. I took a simple C++ example from the Internet that blinks the built-in LED. It didn't build right away (I forgot to pass -lmraa to the compiler). Hooray! Everything works, time to go to sleep.

Since the board was working, I could try connecting something. I went to the radio market to see whether I could buy anything for Arduino. I bought a breadboard, wires, a few odds and ends, some sensors. But I did not test anything; again I never got around to it.

Idea

In the last few days I brainstormed what could be implemented on this board. I didn't want something simple, like blinking an LED or measuring the temperature in the room. All this has been done before, and Intel Galileo is too powerful for such tasks. I wanted something with heavy computation and data processing, with the results sent to a server for further processing or analysis.

And then the following idea came to mind. Some years ago, the magazine Computerra carried news about an experimental technology for determining the position of the source of a loud sound, for example, a gunshot. Microphones are placed on the street. They pick up the sound, and because the sound reaches the microphones not simultaneously but with different delays, you can find the position of the source. I decided to build such a system.

The idea is there, so off to the radio market for sensors. Googling, I found that there are two types of sound sensors. The first has a digital output that reacts when a threshold is exceeded and accordingly has three pins (ground, +5 V, threshold signal). The second additionally has an analog output and, accordingly, four pins (ground, +5 V, threshold signal, analog signal). I wanted the second kind, the one that produces an analog signal. Having received signals from several sensors, it would be possible to overlay them and find the match to determine the delay accurately. Or else to pick the trigger threshold dynamically.

Besides the sensors I needed wires, 10 meters for each. Just in case. At the market I found sound sensors, but only with three pins. There were no others. The seller assured me that their output was analog. Okay, I'll believe it, although why is there a trimmer resistor then? I took 5 sensors. Now the wires: 50 meters of red-and-black speaker wire (0.25 mm) and 30 meters of a light-colored one (0.25 mm, twin). My plan was to run red and black to each sensor for power and one conductor of the light pair for the signal.

Okay, I had everything. That was Thursday, and the conference was already on Saturday.

At home I tried to connect the sensors. The first time it's scary to plug something into the board: what if it burns out. But no, I plugged it in, there is no smoke, the console works. I try to get analog values from the sensor. And here is a surprise: the sensor turns out to be binary, i.e. it only reacts when the signal exceeds a level. And this level is set by the trimmer resistor on the sensor board. Aaaa! There is no point in running to the market the next day, on Friday. Nobody knows whether the right sensors will be there. And there is no time: there are other things to do, plus Visual Studio to install and the network to set up. The market would take another half a day. And the conference on Saturday starts at 9 in the morning, so I'd like to get some sleep too.

On Friday evening, having come home at 10 o'clock, I started setting things up.

For the hackathon, I decided to stock up on everything just in case. An extension cord, since there would be many participants and there might not be enough sockets. A router, because the board has to be connected by a network cable. The router was also needed to reach the Internet through a USB modem. In short, complete autonomy. I expected to be done in a couple of hours. I started the installation of Visual Studio 2013 Community and decided against installing VS 2015. In the end, preparing and gathering all the hardware to take to the hackathon took not the planned couple of hours but until 4 am. And yes, I had to get up at 8.

As a result, I took with me: an extension cord, adhesive tape, blue electrical tape (yes, the same one), long wires, jumper wires for the breadboard and sensors, scissors, a router, 5 sound sensors, a USB modem, a vibration sensor, a tape measure. That's it, time to sleep; I'll figure out the rest on the spot.

Magical Mystery Tour

There were many talks at the conference, diverse and interesting.

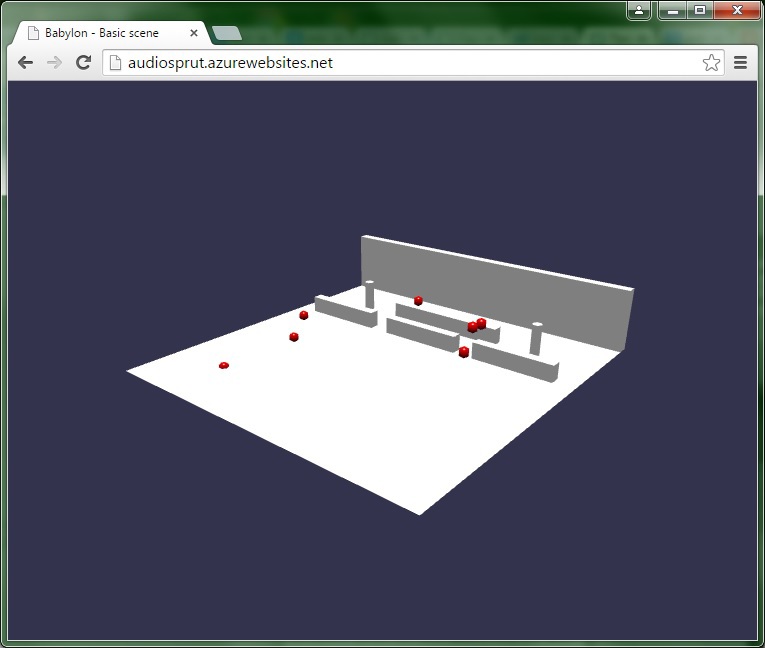

There was a talk about WebGL and the Babylon JS library, with which you can easily draw 3D in the browser. The idea is good; I could try to bolt it onto my project later at the hackathon.

The conference ended at 19:00, and the hackathon began at 20:00 in the Polytech building. It is within walking distance. On the way, I bought a pizza for a snack.

Hackathon

So, 20:00, I'm on the spot. The hall is big. Since university I have been used to sitting in the first row, so I take the first table in the center. My hardware will need the whole table anyway.

Photos from the hackathon: events.techdays.ru/msdevtour/news#fe58625b-bcd9-498a-94e8-161ccc286f11

From 20:00 to 00:00 there were lectures about IoT and Azure from Microsoft and Intel.

One lecture, from Intel, was about Galileo and Edison. Another was about Azure: what it can do and how to use it. This was the most important one for me, because until then I had never worked with Azure.

Dmitry Soshnikov told us how Microsoft sees IoT: a small device that collects some data from sensors, transmits it to the cloud and makes it available for retrieval and analysis. My project met all these requirements; it only remained to implement it.

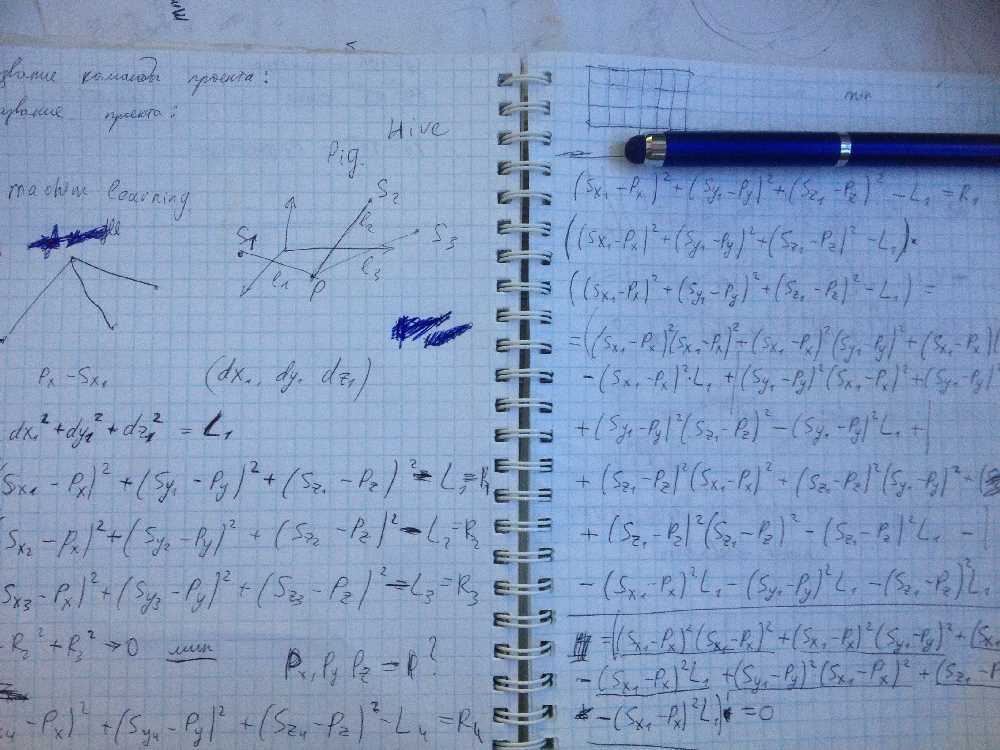

While the lectures were going on, so as not to waste time, I decided to derive the formulas that would be needed to determine the position of the sound source.

The task is to find the point in space that best fits the delay times received from the sensors. That is, an optimization problem in which there may be more equations than unknowns. Perhaps something like the method of least squares.

I wrote down the dependencies and started deriving. I used up two pages and realized that I was too lazy to solve fourth-degree equations, that I could spend all my time on it, and that there could be mistakes too. And it became somehow sad.

In the end, I decided to use a universal method: exhaustive search. I estimated the size of the hall at about 10 by 10 meters; let it be a 10x10x10 m cube with 10 cm accuracy. That gives 1 million points. A lot, but the processor can handle it. If need be, the accuracy can be reduced.
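The exhaustive search described above can be sketched in Python roughly as follows. This is only an illustration under assumptions, not the hackathon code: the sensor layout, the source position, the 0.5 m grid step and all function names are made up for the example. For every grid point it computes how much farther each sensor is than the nearest one and compares these deltas with the measured ones by the sum of squared differences.

```python
import itertools
import math


def distance_deltas(source, sensors):
    """Extra path length to each sensor relative to the nearest one, in meters."""
    dists = [math.dist(source, s) for s in sensors]
    nearest = min(dists)
    return [d - nearest for d in dists]


def frange(start, stop, step):
    """Inclusive list of floats built from integer steps to avoid drift."""
    count = int(round((stop - start) / step))
    return [start + i * step for i in range(count + 1)]


def locate(measured, sensors, box_min, box_max, step):
    """Try every grid point in the box and keep the best-fitting one."""
    axes = [frange(lo, hi, step) for lo, hi in zip(box_min, box_max)]
    best_point, best_err = None, float("inf")
    for point in itertools.product(*axes):
        predicted = distance_deltas(point, sensors)
        err = sum((p - m) ** 2 for p, m in zip(predicted, measured))
        if err < best_err:
            best_point, best_err = point, err
    return best_point, best_err


# Hypothetical layout: four sensors that do not lie in one plane.
sensors = [(0.0, 0.0, 0.0), (4.0, 0.0, 0.0), (0.0, 4.0, 0.0), (0.0, 0.0, 3.0)]
true_source = (1.0, 2.5, 1.0)  # made-up source used to generate "measurements"
measured = distance_deltas(true_source, sensors)

estimate, error = locate(measured, sensors, (0, 0, 0), (4, 4, 3), 0.5)
print(estimate, error)
```

The cost grows with the cube of the grid resolution, which is why reducing the accuracy is the obvious fallback if the search turns out to be too slow.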

So, the lectures are over; now everyone has to come up with projects and put together teams. There were 60 people in the hall.

The team presentations began. I went third. I briefly explained what my project was about and said that I already had a board and planned to do the project alone. The name is Audio sprut ("sprut" is Russian for octopus), because the sound sensors are like tentacles.

Everyone described their projects. People started walking around and joining projects.

Well, let's start. The first thing was to measure time accurately. The first option is to count how many loop iterations have been completed. But then you need to know how long each iteration takes, and that is not very accurate. I had to find a high-precision timer. I remembered that there was something in C++11. A search on the Internet gave the solution.

So the algorithm for working with the sensors is as follows. We poll all the sensors until one of them shows a signal. Its time is taken as zero. Then we keep polling the remaining sensors, and as soon as a signal appears on one of them, we record the current time for it. We continue polling until all sensors have given a signal. From the time obtained for each sensor we find its distance delta by multiplying the speed of sound by the time.
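A minimal sketch of this polling scheme in Python, for illustration only. The real program reads GPIO pins through mraa and uses a C++11 clock; here `read_pin`, `FakeClock`, `make_fake_sensors` and the trigger times are hypothetical stand-ins so that the loop can be run without hardware. A reading of 0 is treated as "sound detected", as in the listing at the end of the article, and the speed of sound is taken as roughly 340 m/s.

```python
import time

SPEED_OF_SOUND = 340.0  # m/s, approximate


def wait_for_event(read_pin, num_sensors, clock=time.perf_counter, max_polls=100_000):
    """Poll all sensors; return per-sensor delays in seconds, or None on timeout."""
    delays = [None] * num_sensors
    start = None
    polls_since_first = 0
    while any(d is None for d in delays):
        for k in range(num_sensors):
            if delays[k] is None and read_pin(k) == 0:
                now = clock()
                if start is None:
                    start = now  # the first sensor to fire defines time zero
                delays[k] = now - start
        if start is not None:
            polls_since_first += 1
            if polls_since_first > max_polls:
                return None  # some sensor never fired: discard the event
    return delays


class FakeClock:
    """Deterministic clock that advances 1 microsecond on every call."""

    def __init__(self):
        self.t = 0.0

    def __call__(self):
        self.t += 1e-6
        return self.t


def make_fake_sensors(trigger_times, clock):
    """Sensor k reads 0 once the fake clock has passed its trigger time."""
    return lambda k: 0 if clock() >= trigger_times[k] else 1


clock = FakeClock()
read_pin = make_fake_sensors([0.001, 0.0015, 0.002], clock)
delays = wait_for_event(read_pin, 3, clock=clock)
deltas_m = [SPEED_OF_SOUND * d for d in delays]
print(delays)
print(deltas_m)
```

The timeout after the first trigger mirrors the idea of discarding events that not every sensor heard; the limit of 100,000 polls is an arbitrary choice for the sketch.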

Measurements showed that the digital sensors are polled at a rate of 83 kHz. This gives an accuracy of about 2 cm for five sensors. Very good. Out of interest, I also measured the speed of analog reads: about 4 kHz.
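The 2 cm figure can be checked with a little arithmetic. The sketch below assumes that 83 kHz is the rate of individual reads, so that one pass over five sensors takes five reads, and that sound travels at roughly 340 m/s; both are assumptions of this example rather than statements from the measurements.

```python
SPEED_OF_SOUND = 340.0   # m/s, approximate
DIGITAL_RATE_HZ = 83_000  # digital reads per second, as measured
ANALOG_RATE_HZ = 4_000    # analog reads per second, as measured
NUM_SENSORS = 5


def resolution_cm(read_rate_hz, num_sensors):
    """Distance sound travels during one full pass over all sensors."""
    pass_time_s = num_sensors / read_rate_hz
    return SPEED_OF_SOUND * pass_time_s * 100


digital_cm = resolution_cm(DIGITAL_RATE_HZ, NUM_SENSORS)
analog_cm = resolution_cm(ANALOG_RATE_HZ, NUM_SENSORS)
print(round(digital_cm, 2), "cm with digital reads")
print(round(analog_cm, 2), "cm with analog reads")
```

Under these assumptions the digital reads resolve about 2 cm, while analog reads at 4 kHz would resolve only a few tens of centimeters.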

It turns out that the sensors I bought, although not the ones I wanted, simplify my task. Firstly, there is no need to look for a match between analog signals, and secondly, the accuracy is higher.

But we must also take into account that Linux is not a real-time operating system. So there is no guarantee that the processor will be busy only with our code and will not switch to something else. An Intel Edison with its two cores would certainly help here. But you work with what you have.

For tests, I took two sensors placed a short distance apart, about 15 centimeters, and snapped my fingers first on the side of one sensor, then on the side of the other. The signal delay was clearly visible, and the calculated distance delta was consistent with reality.

Since I had my own board and a configured router, I did not depend on the venue's WiFi. But there was a big problem: my Internet connection was sometimes terrible. At times the speed dropped to 100 kbit/s.

Must be cloudy today

So, the program determines the position of the sound source; it remains to send this information to the cloud.

We were told that the simplest option was to send data as events. I try to figure out a Python script that sends something to the server (I had not worked with Python before). At first the script gets errors in the server's response. The neighboring team is also trying to send data, also to no avail. I tinker for a long time and, lo and behold, the response is 201, which means there is no error and the data has been transferred. I go to Azure, and no data is visible. The requests are counted, but I do not see the data. I try to figure it out for a long time, I ask the organizers, and it turns out that events do not suit me. They are one-shot: once an event has been read, it is deleted. Surprise! So what should I use? Tables.

Great, all over again. The tables are not so simple. The interface in Azure is not very intuitive. I do not remember the details, but I created a server and created a table. Now to write data there. And this turned out to be not such a trivial task. Code similar to the one for events does not work. After talking with Tatiana Smetanina, who somehow managed to run between the teams and help everyone, it becomes clear that it is a standard database and you can work with it like with any MySQL. It remains to connect to the database and do an INSERT. Just a little left.

How to connect? I have no desire to do it from C++. I don't know Python well. But there is Perl on the Galileo. Fine! I try to write a small test script. But the DBI module is not installed. I run cpan. My Internet is slow, but something is downloading, which is already nice. But at the end of the installation, after about 20 minutes, it turns out that some library or module is missing. I try to install that library the same way. I try to install something else. Time is running out, nothing works. It's a shame. As far as I can tell, the neighbors are in the same situation. Everything is somehow difficult, while in the presentation it was simple, just a few lines.

And then it turns out that the database can be accessed via REST; for this you need to create a mobile service in Azure. Fine, let's try. Again a bunch of searches on the Internet. I found an example in Python. And lo and behold, it writes to the database! On the Azure website you can see the data that the Galileo sends. Great, I add a call to the system function from C++, which runs the Python script with parameters. That's it. This part can be forgotten. Now I need to learn how to read data from the database through JavaScript.
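For readers on Python 3, the request made by the script at the end of the article can be sketched like this. It is a port under assumptions, not the original code (which uses Python 2's urllib2): the helper name `build_insert_request` is made up, and the sketch only builds the request without sending it, so it can be inspected offline. The URL and the field names are the ones from the author's script.

```python
import urllib.parse
import urllib.request

TABLE_URL = "https://audiosprut.azure-mobile.net/tables/pos"  # from the author's script


def build_insert_request(x, y, z, url=TABLE_URL):
    """Build (but do not send) a POST that adds one position record."""
    body = urllib.parse.urlencode({"x": x, "y": y, "z": z, "present": "true"})
    return urllib.request.Request(url, data=body.encode("ascii"), method="POST")


req = build_insert_request(1.5, 0.0, -2.25)
print(req.get_method(), req.full_url)
print(req.data)

# Actually sending it would be:
#   urllib.request.urlopen(req).read()
```

Whether the service still accepts unauthenticated form-encoded inserts is not something this sketch can confirm; it only shows the shape of the request.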

Database, give me the data!

If you enter the address of the table in the browser, the browser displays JSON with the data. All perfect. It remains to parse the data. I write a small local HTML page with JavaScript that should load the table and show it on the page. It does not work. No data. Something arrives, but it is not clear what. I start digging. The browser debugger says something about permissions. What do permissions have to do with it? At the plain address everything is shown. I tinker, look, poke around, look again. It is not at all clear why it does not work. After I don't know how many attempts, I decide to follow step by step what is written on the Azure page about working with mobile interfaces. It suggests starting a local web server. It's a matter of a couple of lines, but somehow I don't like the idea of a local server. Well, if nothing helps, read the instructions. I do everything as written, and everything works. The local page with the script receives the data and displays it on the screen. Hooray! Of course, I wanted a web server on Azure, on the Internet, but this is already good.

Babylon, but not 5, but JS

There is data; now I need to bolt on a 3D visualization based on Babylon JS.

I measure the table I am sitting at, to make a correct 3D model of the room. One section is 106 centimeters long. So the whole table is 2 meters. Fine. Three blocks of tables plus the aisles come to 10 meters. Fine. I add the front wall, the tables of the first row, the organizers' tables, the lectern. In the browser, everything looks fine. I think about where to put the sensors. While estimating, it turns out that something does not add up. The tables, it turns out, consist not of two sections of 1 meter each, but of four. And three blocks of tables do not fit into 10 meters. I go out into the hallway with a tape measure and measure the hall from outside: 16 meters. Everything has to be redrawn.

Lunch. No, thanks, I can do without it. It's only half an hour, but that half hour could help.

Everything is working. The positions of the points are read; now I can go back to struggling with getting the data from the website rather than from a local page. I look and look for a long time. Then I notice somewhere in the security settings that access is allowed only from localhost. Well yes, exactly, I remember MySQL has something similar. I add the site's address to the allowed hosts and it all works right away. Hooray. I made it. I still have more than an hour.

(It should be noted that while I was experimenting with adding and reading data, access was open to everyone. That is why no access keys are specified in the script that adds data. I have since turned this off.)

Last preparations, plenty of time

Everything works: the sensors receive data, the board calculates the position and sends it to the cloud, the site does the visualization. It only remained to attach long wires to the sensors so that they could be placed far apart in the hall, and to set their absolute positions in the settings file. I planned to put two sensors at the edges of my table, two on the chairs for the presenters and one in the center at the main table. A whole hour was left for that. Plenty of time, I thought, and began to split the twin wire lengthwise. The wires to the first two sensors were to be three meters long.

The wire is split. Now I need to join the pair of wires with a single one to get three conductors. I start twisting them together, fixing them with adhesive tape every half meter. All this took 10 minutes. It is clear that at this rate I will not make it. The wire is ready, but now I need to lengthen the wire from the sensor by splicing the new wires into it. I strip, twist, wrap with tape. I connect it to the board, the sensor works. Great, on to the next one.

Since there is not enough time for everything, I decide to leave only 3 sensors, with wires of 3 meters, 3 meters and 8 meters. The last, eight-meter one I no longer fix with tape, I just twist it a little. I am in a hurry, and that is very bad. I strip the wire and, naturally, it breaks. Fixed. Done. I connect the sensor. And the LED on it is lit, although it should not be. I look at the wires: I had fed +5 V to the sensor's output, straight to the LED. Great, I think, I may have burned it. I rewire it correctly, and the sensor still glows red. I really did burn it! I take another one. Good thing there is a spare. I connect it. It also glows red. The trimmer resistor does not help. What's the matter? I work it out. It turns out I connected it to the board incorrectly. Well, maybe the ports on the board have burned out. This is the end, there will be no demo. I check everything again and connect everything correctly. And lo and behold, everything works. And it is exactly 16:00, the start of the presentations. I am second.

Presentations

It's good that I managed to launch the project on the Azure website: I don't need to connect my laptop for the presentation. Besides, the laptop is connected to the board through the router and it would be hard to unplug. I talk about the project. I show the 3D model of the hall with balls marking earlier debug positions of sound sources. I clap my hands. In the console logs you can see that the data has been sent; I reload the browser. A new point should have appeared, but honestly I do not remember which ones were there before and which were not. That's all.

It is very pleasant to be one of the first: afterwards you can sit and calmly listen to the other presentations. They were varied.

That's all. Oh yeah, I became one of the winners of the hackathon and received a prize.

General impressions

I liked it. Such a large crowd of interested people. I talked to some of them.

If not for the hackathon, who knows when I would have taken up Intel Galileo. And getting to Azure would have been something altogether unlikely. I learned a lot.

In general, thanks to Microsoft and Intel.

And in conclusion, I bring the code that I had on the hackathon without changes.

Files:

dist1.cpp

    #include "mraa.h"
    #include <ctime>
    #include <ratio>
    #include <chrono>
    #include <vector>
    #include <iostream>
    #include <stdlib.h>
    #include <stdio.h>   // printf, sprintf
    #include <unistd.h>  // sleep
    #include "funcs.h"

    int main()
    {
        init();  // reads positions.txt and sets NUM
        int waitId = 0;
        const int numMics = NUM;
        // std::cout<<"Num sensors="<<numMics<<std::endl;
        // return 0;
        mraa_gpio_context gpio[numMics];
        bool on[numMics];
        int dist[numMics];
        // std::chrono::high_resolution_clock::time_point times[numMics];
        std::chrono::duration<double> times[numMics];

        // Sensor k is wired to GPIO pin k.
        for( int k = 0; k < numMics; k++ )
        {
            gpio[k] = mraa_gpio_init(k);
            mraa_gpio_dir(gpio[k],MRAA_GPIO_IN);
        }

        do
        {
            std::cout<<"Wait..."<<waitId<<std::endl;
            waitId++;
            for( int k = 0; k < numMics; k++ )
            {
                on[k] = false;
                dist[k] = 0;
            }
            auto numOn = 0;
            int currStep = 0;
            std::chrono::high_resolution_clock::time_point startTime;

            // Poll until every sensor has fired, or give up 8000 steps
            // after the first one did.
            do
            {
                for( int k = 0; k < numMics; k++ )
                {
                    if( ! on[k] )
                    {
                        int v = mraa_gpio_read(gpio[k]);
                        if( v == 0 )  // low level means the sensor triggered
                        {
                            std::chrono::high_resolution_clock::time_point now =
                                std::chrono::high_resolution_clock::now();
                            if( numOn == 0 )
                            {
                                // The first sensor to fire defines time zero.
                                currStep = 0;
                                startTime = now;
                            }
                            on[k] = true;
                            dist[k] = currStep;
                            times[k] = std::chrono::duration_cast<std::chrono::duration<double> >(now-startTime);
                            numOn++;
                        }
                    }
                }
                currStep++;
            } while (numOn < numMics && (currStep < 8000 || numOn < 1) );

            if( currStep >= 8000 )
                continue;  // not all sensors heard the sound: skip this event

            for( int k = 0; k < numMics; k++ )
            {
                printf("%d ",dist[k]);
            }
            printf(" === time(s): ");
            for( int k = 0; k < numMics; k++ )
            {
                printf("%f ",times[k].count());
            }
            printf(" === dist(m): ");
            std::vector<float> L;
            for( int k = 0; k < numMics; k++ )
            {
                // Delay to distance delta; 300 m/s is a rough speed of sound.
                float d = 300*times[k].count();
                L.push_back(d);
                printf("%f ",d);
            }

            Point3 event = getPosition(L);
            std::cout<<"Event=("<<event.x<<","<<event.y<<","<<event.z<<")"<<std::endl;

            // Hand the result to the Python script that posts it to Azure.
            char str[300];
            sprintf(str,"./test_rest_add.py %f %f %f",event.x,event.y,event.z);
            std::cout<<"send data"<<std::endl;
            system(str);
            std::cout<<"send ok"<<std::endl;
            // create dists
            sleep(1);
        } while (true);

        for( int k = 0; k < numMics; k++ )
        {
            mraa_gpio_close(gpio[k]);
        }
        return 0;
    }

funcs.h

    #include <vector>

    struct Point3
    {
        float x,y,z;
    };

    struct DistPos
    {
        Point3 p;
        float dist;
    };

    struct Box
    {
        Point3 p1,p2;
        float step;
    };

    void init();
    Point3 getPosition(std::vector<float> &dists);
    extern int NUM;

funcs.cpp

    #include <vector>
    #include <fstream>
    #include <iostream>
    #include "funcs.h"
    #include <math.h>

    std::vector<Point3> sensorPos;
    int NUM = 0;

    // Reads the number of sensors and their coordinates from positions.txt.
    void readSensorPos()
    {
        if( sensorPos.size() > 0 )
        {
            return;
        }
        std::ifstream file("positions.txt");
        if( ! file )
        {
            return;
        }
        int num = 0;
        file>>num;
        std::cout<<"Num="<<num<<std::endl;
        for( int k = 0; k < num; k++ )
        {
            float x,y,z;
            file>>x>>y>>z;
            Point3 point;
            point.x = x;
            point.y = y;
            point.z = z;
            sensorPos.push_back( point );
            std::cout<<"x="<<x<<" ";
            std::cout<<"y="<<y<<" ";
            std::cout<<"z="<<z<<std::endl;
        }
        NUM = num;
    }

    void init()
    {
        readSensorPos();
    }

    // Exhaustive search over the box for the point whose distance deltas
    // best match the measured ones.
    Point3 getPosition( std::vector<DistPos> dists, Box box)
    {
        std::cout<<"Box=(";
        std::cout<<box.p1.x<<","<<box.p1.y<<","<<box.p1.z<<")-(";
        std::cout<<box.p2.x<<","<<box.p2.y<<","<<box.p2.z<<")"<<std::endl;
        std::cout<<"dists.size="<<dists.size()<<std::endl;
        Point3 bestPoint;
        bestPoint.x = 0;
        bestPoint.y = 0;
        bestPoint.z = 0;
        float minErr = 10e20;
        float normDist[NUM];
        for( float tx = box.p1.x; tx <= box.p2.x; tx+= box.step )
        {
            for( float ty = box.p1.y; ty <= box.p2.y; ty+= box.step )
            {
                for( float tz = box.p1.z; tz <= box.p2.z; tz+= box.step )
                {
                    float currErr = 0;
                    //std::vector<float> normDist(dists.size());
                    // Distance from the candidate point to every sensor.
                    int kk = 0;
                    for( auto iter = dists.begin(); iter != dists.end(); iter++, kk++ )
                    {
                        float dx = iter->p.x - tx;
                        float dy = iter->p.y - ty;
                        float dz = iter->p.z - tz;
                        float d = sqrt(dx*dx + dy*dy + dz*dz);
                        normDist[kk] = d;
                        // float err = d - (iter->dist * iter->dist);
                        // currErr += err*err;
                    }
                    // Make the distances relative to the nearest sensor.
                    float minVal = normDist[0];
                    for( int k = 1; k < NUM; k++ )
                    {
                        if( normDist[k] < minVal )
                            minVal = normDist[k];
                    }
                    for( int k = 0; k < NUM; k++ )
                    {
                        normDist[k] -= minVal;
                    }
                    // Sum of squared differences over all sensors.
                    currErr = 0;
                    for( int k = 0; k < NUM; k++ )
                    {
                        float delta = normDist[k] - dists[k].dist;
                        currErr += delta*delta;
                    }
                    if( currErr < minErr )
                    {
                        minErr = currErr;
                        Point3 p;
                        p.x = tx;
                        p.y = ty;
                        p.z = tz;
                        bestPoint = p;
                    }
                }
            }
        }
        return bestPoint;
    }

    Point3 getPosition(std::vector<float> &dists)
    {
        // Search box in meters, 10 cm step.
        Point3 p1,p2;
        p1.x = -5;
        p1.y = 0;
        p1.z = -5;
        p2.x = 5;
        p2.y = 3;
        p2.z = 5;
        float step = 0.1;
        Box box;
        box.p1 = p1;
        box.p2 = p2;
        box.step = step;
        std::cout<<"DistPos="<<std::endl;
        std::vector<DistPos> distPos;
        for( int k = 0; k < dists.size(); k++ )
        {
            DistPos dp;
            dp.p = sensorPos[k];
            dp.dist = dists[k];
            distPos.push_back(dp);
            std::cout<<"("<<dp.p.x<<","<<dp.p.y<<","<<dp.p.z<<") d="<<dp.dist<<std::endl;
        }
        Point3 bestPoint = getPosition( distPos, box);
        return bestPoint;
    }

    //const int NUM = 3;
    //float dist[NUM];

positions.txt

    3
    2 1 3
    -2 1 3
    0 1 5

test_rest_add.py

    #!/usr/bin/python
    # Python 2 script: posts one position record to the Azure table.
    import urllib
    import urllib2
    import sys

    x = sys.argv[1]
    y = sys.argv[2]
    z = sys.argv[3]
    #print x," ",y," ",z,"\n"

    url = 'https://audiosprut.azure-mobile.net/tables/pos'
    params = urllib.urlencode({
        "x": x,
        "y": y,
        "z": z,
        "present" : "true"
    })
    response = urllib2.urlopen(url, params).read()

Link: audiosprut.azurewebsites.net

Source: https://habr.com/ru/post/255447/
