Bag of words and sentiment analysis on R

This article is based on (first part) of the Bag of Words Kaggle study task, but this is not a translation. The original task is done in Python. I wanted to evaluate the possibilities of the R language for processing natural language texts and at the same time try implementing the Random Forest implementation in the wrapper of the R package caret.

The meaning of the task is to build a “machine” that will in a certain way handle film reviews in English and determine the tone of the review, relating it to one of two classes: negative / positive. As a training sample, the task uses a data set with twenty-five thousand revisions from the IMDB, marked up by unknown volunteers.

The code for the article and the task preparation can be found in my repository on GitHub. To do this, you will need R version 3.1.3 (if you ignore warnings from packages, then in 3.1.2 everything will work too). Additionally, you must install the following packages:

The task in abbreviated form (a thousand reviews and about a hundred of the most common terms) can be done on a machine with a 32-bit OS, but the full version will require 64 bits and 16 GB of RAM. During training, the model uses about 12 GB of physical memory.

')

A training set is a tab-delimited data set. The control sample also contains 25,000 revues, but of course without markup. Files can be downloaded from this page. This will require registration at Kaggle.

After downloading and unpacking, I recommend to check the dimension, it should be 25000 rows in three columns. I did not succeed immediately because of quotes in the text. I experimented on the first record. The corresponding review is quite long, 2304 characters, so I quote only its fragment:

The text has punctuation marks, HTML tags and special characters that you had to get rid of. For starters, I cleared the HTML review. Then he removed the punctuation marks, special characters, numbers. Generally speaking, with such an analysis, some constructions from punctuation marks, like emoticons, it is better not to delete, but convert to words, but I didn’t do this, I just converted all the characters to lower case and made out individual words. It remains to remove the words of general vocabulary that did not add meaning to the review. I took a list of such words for English from the tm package. For the sake of interest, I looked at the first 20:

“I” “me” “my” “myself” “we” “our” “ours” “we” “you” “your” “yours” “yourself” “yourselves” “he” “him” “his” “himself” "" She "" her "" hers "

Then I repeated the above operations for all 25,000 entries in the training set. At the same time removed one-letter words left over from constructions like MJ's.

A bag of words (or Bag of Words) is a model of texts in a natural language, in which each document or text looks like an unordered set of words without knowing the links between them. It can be represented as a matrix, each line in which corresponds to a separate document or text, and each column to a specific word. The cell at the intersection of the row and column contains the number of occurrences of the word in the corresponding document.

To prepare the word bag, I used the tm package. This package as an object works with the so-called linguistic corpus of the first order - a collection of texts united by a common feature. In our case - all texts are movie reviews. In order to create a body, you first need to convert the texts into a vector, each element of which represents a separate document. And then build on the basis of the housing matrix "document-term". She will become a bag of words.

First, I got a matrix of 25,000 documents and 73,759 terms. Obviously, there were too many terms. After lemmatization, in other words, bringing the word form to a lemma - the normal, vocabulary form, there are 49,549 terms left. It was still a lot. Therefore, I removed the words that are particularly rare in the reviews. In the original assignment, 5,000 words were used, unfortunately, the sparsity parameter has a non-linear effect on the number of terms, so, varying it, I stopped at the number 5072.

This is how the fragment of the Bag looked with the most frequently used words:

To build the model, I chose the randomForest package in the caret wrapper. Caret allows you to flexibly manage the learning process, has built-in capabilities for data preprocessing and quality control of training. Besides with caret you can use several processor cores at once.

On a real sample (25000 x 5072) with a specified number of trees, nree = 100, training took about 110 hours on a computer with OS X 10.8, 2.3 GHz Intel Core i7, 16 GB 1600 MHz DDR3.

I note that when using caret, three models with different mtry values are trained at once, and then the optimal one is chosen according to the Accuracy indicator. mtry sets the number of terms that are randomly selected with each branch of the tree.

By default, when training a model, it is supposed to use bootstrapping with 25 cycles. In order to save computer time, I replaced it with a sliding control with five groups, i.e. in each cycle, 80% is used for training, 20% for control.

The best model for a reduced sample showed an accuracy of 0.71. This meant that approximately 29% of all reviews as a result would be classified incorrectly. But it is on the control sample, even with a sliding control. On the test sample, the accuracy will be slightly worse. But for our abbreviated data set this is not so bad.

The varImp function from the caret package provides a list of indicators used to train the model, in descending order of importance. The first 10 looked quite expected:

term rate

I processed the test sample in the same way as the training sample with one exception - the dictionary obtained from the training sample was used to select terms in the test one.

After I had a matrix of documents / terms, I converted it into a data frame, which I sent to predict () for prediction using a trained model. The forecast was saved in csv and sent via Kaggle via the form on the website.

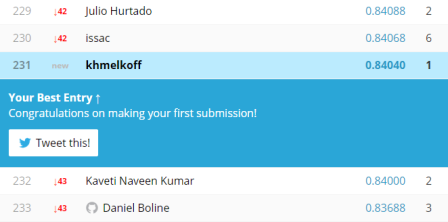

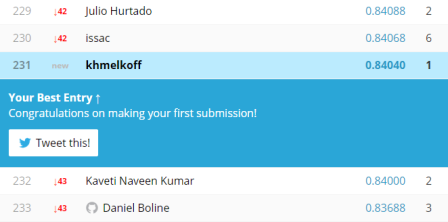

The result is quite modest, 231 out of 277, but still my “car” was wrong about one in six cases, which inspires some optimism.

As it turned out, the possibilities of R are quite enough for processing natural language texts. Unfortunately, I do not have data on how much faster the task is performed in Python. The tm package is not the only available tool, R has interfaces to OpenNLP , Weka and a number of packages that allow you to solve individual problems. More or less current tool list can be found here . There is even a Google implementation of word2vec and, despite the fact that it is still in development, you can try to make the second part of the Kaggle task, where word2vec is a central technological component.

The meaning of the task is to build a “machine” that will in a certain way handle film reviews in English and determine the tone of the review, relating it to one of two classes: negative / positive. As a training sample, the task uses a data set with twenty-five thousand revisions from the IMDB, marked up by unknown volunteers.

The code for the article and the task preparation can be found in my repository on GitHub. To do this, you will need R version 3.1.3 (if you ignore warnings from packages, then in 3.1.2 everything will work too). Additionally, you must install the following packages:

- tm - a set of tools for working with texts

- caret is a wrapper that supports more than 100 machine learning methods

- randomForest - a package that implements the algorithm of the same name

- SnowballC - auxiliary package for lemmatization in tm

- e1071 - package of additional functions for randomForest

The task in abbreviated form (a thousand reviews and about a hundred of the most common terms) can be done on a machine with a 32-bit OS, but the full version will require 64 bits and 16 GB of RAM. During training, the model uses about 12 GB of physical memory.

')

A training set is a tab-delimited data set. The control sample also contains 25,000 revues, but of course without markup. Files can be downloaded from this page. This will require registration at Kaggle.

Preliminary data processing

After downloading and unpacking, I recommend to check the dimension, it should be 25000 rows in three columns. I did not succeed immediately because of quotes in the text. I experimented on the first record. The corresponding review is quite long, 2304 characters, so I quote only its fragment:

It was originally released. "\" Moonwalker This is a mi-kay message. .. "

The text has punctuation marks, HTML tags and special characters that you had to get rid of. For starters, I cleared the HTML review. Then he removed the punctuation marks, special characters, numbers. Generally speaking, with such an analysis, some constructions from punctuation marks, like emoticons, it is better not to delete, but convert to words, but I didn’t do this, I just converted all the characters to lower case and made out individual words. It remains to remove the words of general vocabulary that did not add meaning to the review. I took a list of such words for English from the tm package. For the sake of interest, I looked at the first 20:

“I” “me” “my” “myself” “we” “our” “ours” “we” “you” “your” “yours” “yourself” “yourselves” “he” “him” “his” “himself” "" She "" her "" hers "

Then I repeated the above operations for all 25,000 entries in the training set. At the same time removed one-letter words left over from constructions like MJ's.

Bag of words

A bag of words (or Bag of Words) is a model of texts in a natural language, in which each document or text looks like an unordered set of words without knowing the links between them. It can be represented as a matrix, each line in which corresponds to a separate document or text, and each column to a specific word. The cell at the intersection of the row and column contains the number of occurrences of the word in the corresponding document.

To prepare the word bag, I used the tm package. This package as an object works with the so-called linguistic corpus of the first order - a collection of texts united by a common feature. In our case - all texts are movie reviews. In order to create a body, you first need to convert the texts into a vector, each element of which represents a separate document. And then build on the basis of the housing matrix "document-term". She will become a bag of words.

First, I got a matrix of 25,000 documents and 73,759 terms. Obviously, there were too many terms. After lemmatization, in other words, bringing the word form to a lemma - the normal, vocabulary form, there are 49,549 terms left. It was still a lot. Therefore, I removed the words that are particularly rare in the reviews. In the original assignment, 5,000 words were used, unfortunately, the sparsity parameter has a non-linear effect on the number of terms, so, varying it, I stopped at the number 5072.

This is how the fragment of the Bag looked with the most frequently used words:

Docs act

1 0 0 1 2 1 0 3 0 0 1

2 0 0 0 0 0 0 0 0 0 1

3 0 1 0 0 0 0 1 0 1 0

4 0 1 0 1 2 0 0 0 0 1

Model training

To build the model, I chose the randomForest package in the caret wrapper. Caret allows you to flexibly manage the learning process, has built-in capabilities for data preprocessing and quality control of training. Besides with caret you can use several processor cores at once.

On a real sample (25000 x 5072) with a specified number of trees, nree = 100, training took about 110 hours on a computer with OS X 10.8, 2.3 GHz Intel Core i7, 16 GB 1600 MHz DDR3.

I note that when using caret, three models with different mtry values are trained at once, and then the optimal one is chosen according to the Accuracy indicator. mtry sets the number of terms that are randomly selected with each branch of the tree.

By default, when training a model, it is supposed to use bootstrapping with 25 cycles. In order to save computer time, I replaced it with a sliding control with five groups, i.e. in each cycle, 80% is used for training, 20% for control.

The best model for a reduced sample showed an accuracy of 0.71. This meant that approximately 29% of all reviews as a result would be classified incorrectly. But it is on the control sample, even with a sliding control. On the test sample, the accuracy will be slightly worse. But for our abbreviated data set this is not so bad.

The varImp function from the caret package provides a list of indicators used to train the model, in descending order of importance. The first 10 looked quite expected:

term rate

- great 100.00000

- bad 81.79039

- movi 59.40987

- film 53.80526

- even 42.43621

- love 39.98373

- time 38.14139

- best 36.50986

- one 36.36246

- like 35.85307

Kaggle forecasting and testing

I processed the test sample in the same way as the training sample with one exception - the dictionary obtained from the training sample was used to select terms in the test one.

After I had a matrix of documents / terms, I converted it into a data frame, which I sent to predict () for prediction using a trained model. The forecast was saved in csv and sent via Kaggle via the form on the website.

The result is quite modest, 231 out of 277, but still my “car” was wrong about one in six cases, which inspires some optimism.

As it turned out, the possibilities of R are quite enough for processing natural language texts. Unfortunately, I do not have data on how much faster the task is performed in Python. The tm package is not the only available tool, R has interfaces to OpenNLP , Weka and a number of packages that allow you to solve individual problems. More or less current tool list can be found here . There is even a Google implementation of word2vec and, despite the fact that it is still in development, you can try to make the second part of the Kaggle task, where word2vec is a central technological component.

Source: https://habr.com/ru/post/255143/

All Articles