How to Catch What Isn't There. Part Three: Who Are the Judges?

The previous article showed that the main security problem is that security tools (antivirus software, in our example) miss the most dangerous malicious files, and that this behavior is normal and expected. On the other hand, numerous tests report detection rates of up to 100% (for a recent example on Habr, see the publication “How we are tested”, especially the comments).

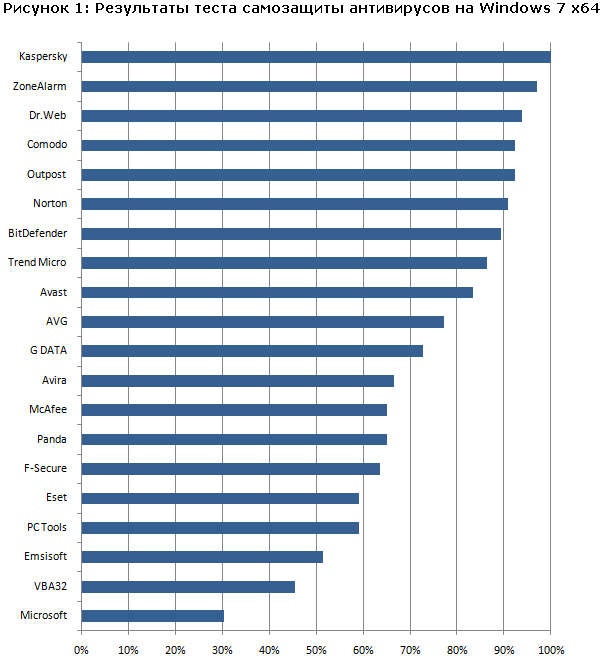

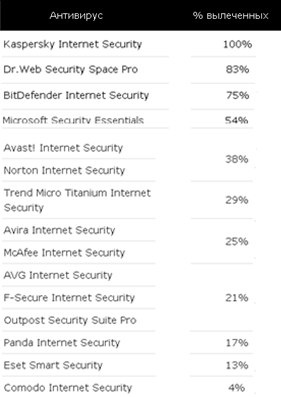

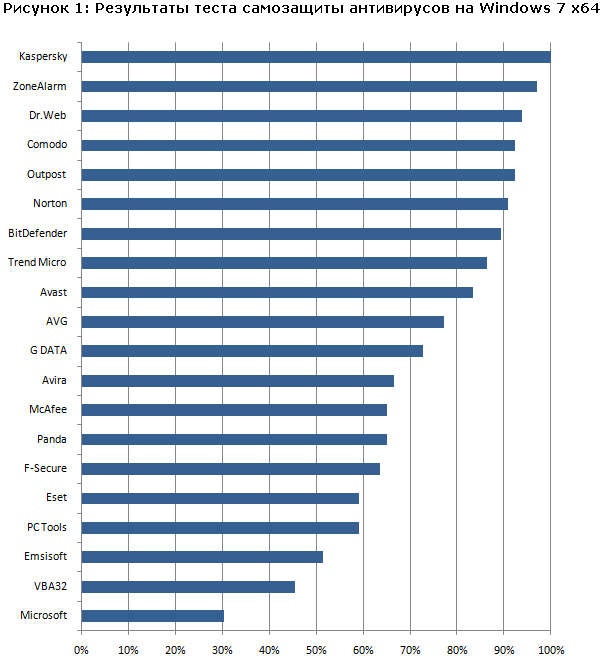

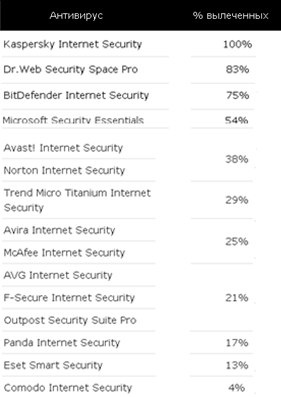


What are the testers, and the award winners, not telling us?

There are a lot of antiviruses: paid and free, sluggish and not so sluggish. At first glance, the quality of each seems easy to assess, so numerous antivirus tests are readily available online. Tests of firewalls and access control systems also exist, but they are much less common, even though for these products the test results are much closer to real-world behavior.

There are many tests and many testers, from professional organizations to ordinary users.


- www.virusbtn.com

- www.virus.gr

- www.av-test.org

- www.av-comparatives.org

- www.checkvir.com

- www.westcoastlabs.org

- anti-malware.ru

- anti-virus-software-review.toptenreviews.com

- anti-spyware-review.toptenreviews.com

- www.techsupportalert.com/security_scanners.htm

- antivirus-software.6starreviews.com

You can also compare antiviruses in real time by submitting a file for scanning:

And this is not a complete list. I recently learned of a few more (this is not an advertisement; I genuinely just learned of them from Sergei Storchak's latest review, www.securitylab.ru/blog/personal/ser-storchak): avcaesar.malware.lu and www.malwaretracker.com/pdf.php.

There is even an organization, AMTSO (Anti-Malware Testing Standards Organization), that has taken on the task of developing testing methodologies.

There are many kinds of tests, but the most common are:

- antivirus tests on large collections

- dynamic tests

- heuristics tests

- self-protection tests

- performance tests

Eugene Kaspersky wrote superbly about tests on large collections. Over to him (http://e-kaspersky.livejournal.com/87090.html):

The classic, good old on-demand test.

This is actually the most common, standard and familiar test. Once, long ago, before the Internet went mainstream, it really was the most appropriate one.

The methodology is as follows. You take a disk and stuff it with malware: the more the better, as varied as possible, whatever you can get your hands on. Then you run various antivirus scanners over it and count the detections. Cheap and cheerful. But for ten years now it has been completely irrelevant!

Why? Because signature, heuristic and other “scanning” antivirus engines are only one part of the set of technologies used for protection in the real world! (And the contribution of these engines to the overall level of protection is falling rapidly.) Often the scanner serves only as the last step, doing purely surgical work. For example, System Watcher tracks down a Trojan, works out the infection pattern, and then hands the removal task over to the scanner.

Another drawback is the malware collection used for scanning.

There are two extremes, and both are flawed. With too few malware files the result is, as you understand, irrelevant. With too many samples you get the same trouble from the other end: too much garbage gets into mega-collections (broken files, data files, not-quite-malware such as scripts that use malware, etc.). Cleaning such garbage out of a collection is the hardest and most thankless work. Poorly paid, too :)

Whether a company should take part in such tests at all is a philosophical question. So is whether Doctor Web is wrong to sell on the basis of the client's needs rather than uninformative tests. We will not discuss either here.

But back to the tests. A large-collection test works like this:

- a collection is assembled on the principle of “the more the better”;
- the participating antiviruses are updated to the current state;
- the collection is scanned and detections are counted.

On the whole, this is logical. The more samples an antivirus knows, the more likely it is to catch an infection at the moment it reaches the user (recall the myth that antiviruses should know every existing malicious file).
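To make the procedure concrete, here is a minimal Python sketch of what a classic on-demand collection test computes. The scanner is modeled as a hypothetical callable that takes file contents and returns whether it raises an alarm. The byte signature `b"EVIL"` and the sample collection are placeholders, not real malware data.

```python
from typing import Callable, Iterable

# Hypothetical model of a scanner: file contents in, "alarm raised?" out.
Scanner = Callable[[bytes], bool]


def detection_rate(scan: Scanner, collection: Iterable[bytes]) -> float:
    """Share of collection samples the scanner flags, from 0.0 to 1.0.

    This is all a classic on-demand test measures: nothing is executed,
    nothing is cured, and nobody verifies that each sample is really malicious.
    """
    samples = list(collection)
    if not samples:
        raise ValueError("the collection is empty")
    detected = sum(1 for sample in samples if scan(sample))
    return detected / len(samples)


def toy_scan(data: bytes) -> bool:
    # Placeholder scanner that knows a single byte signature.
    return b"EVIL" in data


collection = [b"xxEVILxx", b"harmless", b"EVIL", b"other"]
print(detection_rate(toy_scan, collection))  # 0.5
```

The resulting number says nothing about curing, or about any component other than the engine.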

From the standpoint of the average user and, unfortunately, of most customers, everything is clear and there are no questions. But unknown malware spoils this pretty picture.

First and foremost, an antivirus must cure live viruses: those that have already penetrated the user's system. Checking whether an antivirus finds something malicious in a file is also necessary. However, this matters less for the scanner and file monitor of workstation protection than for:

- protection systems for mail servers and gateways, since only there do viruses always arrive in their non-activated form;

- the mail and web traffic scanning components of antiviruses for workstations and file servers. These components are a must, yet none of them fully fulfills its purpose (as usual, I promise to cover this in the article on technologies). However, workstation tests usually do not check these components at all.

Such tests check only the antivirus engine, not the other detection systems (launch interception, for example). Either the scanner checks the collection directly, or a script copies the collection files and the file monitor's detections are recorded. There are three pitfalls here.

First, as already mentioned, an antivirus must cure. With a collection this large, however, it is physically impossible to check whether each file was actually cured, and cured correctly, that is, whether it is fully functional after treatment. For curing, one would simply have to rely on the vendor's integrity and its reports of successful treatment. As a result, tests on huge collections measure detection only.

A small digression: vendors disagree about what should count as a detection. For example, not all viruses (here we mean viruses as a class of malware) infect files correctly. Doctor Web analysts believe a detection need not be reported when two conditions hold. First, the virus has infected a file in such a way that the virus code will never execute. Second, the infected program still works normally. That is a guaranteed loss in the test. But do users know that the “losing” program is looking out for them, given that the outcome of treating such a file is not always predictable?

Another digression, another example. Polymorphic viruses are a rarity now, true, but still. Polymorphic viruses cannot be identified by signatures, because they change with every launch. How do you catch them in a test if your antivirus has no polymorphic analyzer? Find every sample the testers have and add them all to the database. Nobody is going to run them to produce new samples anyway. This is a real case from the past.
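A toy Python illustration of why a fixed byte signature fails against changing code. It assumes the simplest possible scheme: the same body XOR-ed with a different one-byte key in each copy. Real polymorphic viruses also mutate their decryptors, so this understates the problem. The body bytes are a harmless placeholder.

```python
def xor_encrypt(body: bytes, key: int) -> bytes:
    """Toy 'polymorphic' transform: the same body XOR-ed with a one-byte key."""
    return bytes(b ^ key for b in body)


BODY = b"the same payload logic every time"  # harmless placeholder bytes

# Two copies of the "same" virus, encrypted with different keys.
variant_1 = xor_encrypt(BODY, 0x21)
variant_2 = xor_encrypt(BODY, 0x5A)

# A sample handed over by a tester yields a signature for variant 1 only.
signature = variant_1[4:12]

print(signature in variant_1)  # True
print(signature in variant_2)  # False: the bytes changed, the logic did not

# XOR is its own inverse, so the copy decrypts back to the identical body.
print(xor_encrypt(variant_2, 0x5A) == BODY)  # True
```

Adding every tester-supplied variant to the database makes exactly those variants “detected” while the next one stays unseen.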

Second, an antivirus must cure under real conditions. Real viruses that have penetrated a system actively resist antiviruses: they encrypt themselves, use polymorphism and obfuscation, disguise themselves as perfectly harmless processes, and so on. Removing them is much harder than detecting them in a file, and removing them without damaging the system is harder still. The collections are huge, and it is impossible to recruit enough specialists to personally verify the quality of treatment for every virus. So, again, treatment tests cannot be carried out, and once again you have to take the vendors' word for it.

Third, an antivirus should detect only malicious files. There are not enough specialists to check the entire collection and guarantee that it contains no junk unrelated to malicious objects. So you again have to take the product's word that what it detected was a virus and not a fragment of the registry. And to win the test, you have to add all this garbage to your database. On the one hand, the databases swell. On the other, how can you win without adding all this stuff in advance?

In summary, tests on huge collections:

- open wide scope for abuse by unscrupulous vendors, who inflate their detection percentages for advertising purposes;

- do not show how well an antivirus performs in a real, live encounter with a virus;

- do not test all the components of an antivirus. This is extremely important, because modern viruses are checked against current antivirus versions before release and are not detected by anyone until samples reach the laboratory.

Against such threats, protection can only come from restricting the ways viruses penetrate the system: Office Control, closing ports, etc.

How honest is it toward users to boast of such victories? That is a philosophical question.

The prevalence of tests on huge collections encourages vendors to create "antiviruses" that:

- focus on detecting only the samples that appear in tests;

- detect 100% of viruses in any collection, even one that contains no viruses;

- are designed solely to detect and remove the malicious programs themselves.
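The Python sketch below shows why a detection percentage alone rewards such products, and how a set of known-clean files exposes them. Both scanners are hypothetical placeholders. The third “malware” sample deliberately stands in for the junk that ends up in mega-collections.

```python
from typing import Callable, Dict, Sequence

Scanner = Callable[[bytes], bool]


def evaluate(scan: Scanner, malware: Sequence[bytes],
             clean: Sequence[bytes]) -> Dict[str, float]:
    """Detection rate on the 'malware' set plus false-positive rate on clean files."""
    detected = sum(1 for s in malware if scan(s))
    false_alarms = sum(1 for s in clean if scan(s))
    return {
        "detection_rate": detected / len(malware),
        "false_positive_rate": false_alarms / len(clean),
    }


def flag_everything(data: bytes) -> bool:
    # Hypothetical "antivirus" tuned purely for the detection score.
    return True


def signature_scan(data: bytes) -> bool:
    # Hypothetical honest scanner with a single byte signature.
    return b"EVIL" in data


malware = [b"EVIL-1", b"EVIL-2", b"junk-not-really-malware"]
clean = [b"report.doc contents", b"setup.exe contents"]

print(evaluate(flag_everything, malware, clean))
# {'detection_rate': 1.0, 'false_positive_rate': 1.0}
print(evaluate(signature_scan, malware, clean))
```

The catch-all product “wins” on detection. The honest scanner scores about 67%, because it ignores the junk sample, but it raises no false alarms. Only the clean set reveals the difference.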

Over to Eugene Kaspersky again:

Any product can be tuned to such tests so that it shows outstanding results in them. Fitting a product to a test is elementary: you just need to detect as many of the files used in the test as possible. Do you follow my train of thought?

To show a result close to 100% in "scan tests", you don't have to strain yourself improving the quality of your technology. You just have to detect everything that ends up in these tests.

To win these tests you don't need to "outrun the bear". You just need to tap into the malware sources used by the best-known testers, and then mindlessly detect everything that others detect. These sources are known: VirusTotal, Jotti, and the malware exchanges between antivirus companies. That is, if competitors detect a file, just detect it by MD5 or something similar.

That's it! I am personally prepared to build, from scratch and with a couple of developers, a scanner that shows nearly 100% detection within a couple of months. // Why two developers? Just in case one of them gets sick.
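The “detect it by MD5” shortcut from the quote takes only a few lines. The Python sketch below is a hypothetical hash-list “scanner” that simply copies the hashes of files already detected elsewhere, using only the standard `hashlib` module. It scores 100% on the collection it copied and misses a sample that differs by a single byte.

```python
import hashlib
from typing import Iterable, Set


def md5_hex(data: bytes) -> str:
    return hashlib.md5(data).hexdigest()


class HashListScanner:
    """Hypothetical 'antivirus' that knows nothing but MD5 hashes of known samples."""

    def __init__(self) -> None:
        self.known: Set[str] = set()

    def learn(self, samples: Iterable[bytes]) -> None:
        # Copy whatever the public sources already flag; no analysis at all.
        self.known.update(md5_hex(s) for s in samples)

    def scan(self, data: bytes) -> bool:
        return md5_hex(data) in self.known


test_collection = [b"sample-A", b"sample-B", b"sample-C"]
scanner = HashListScanner()
scanner.learn(test_collection)

print(all(scanner.scan(s) for s in test_collection))  # True: "100% detection"
print(scanner.scan(b"sample-A" + b"\x00"))           # False: one extra byte
```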

Tests of heuristics are a variety of large-collection tests. An antivirus is supposed to catch samples that have not yet reached the laboratory, so every antivirus contains mechanisms for detecting unknown malware. To test them, the antivirus is frozen as of a date well before the test, and the collection contains only viruses that appeared after that date. Such a test shows well how an antivirus catches variants of already known viruses. But, as already mentioned, modern viruses, including those aimed at stealing financial information, are checked against current antivirus databases before release. So a high rating in this test will not save the user from losing money and data.
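The retrospective setup can be sketched in Python as follows. The dates and sample tuples are hypothetical, and so is the “heuristic”, which flags anything containing a known family marker. The point is that only samples first seen after the freeze date are counted.

```python
from datetime import date
from typing import Callable, Iterable, Tuple

# (file contents, date the sample was first seen in the wild)
Sample = Tuple[bytes, date]


def retrospective_rate(scan: Callable[[bytes], bool],
                       freeze_date: date,
                       samples: Iterable[Sample]) -> float:
    """Detection rate on samples that appeared after the databases were frozen.

    The scanner is assumed to run with databases as of freeze_date, so any
    detection of a newer sample must come from generic or heuristic methods.
    """
    fresh = [data for data, first_seen in samples if first_seen > freeze_date]
    if not fresh:
        raise ValueError("no samples newer than the freeze date")
    return sum(1 for data in fresh if scan(data)) / len(fresh)


def family_heuristic(data: bytes) -> bool:
    # Hypothetical generic rule: catches new variants of a known family.
    return b"EVIL" in data


samples = [
    (b"EVIL-v1", date(2012, 6, 1)),           # older than the freeze: ignored
    (b"EVIL-v2", date(2013, 3, 1)),           # new variant of a known family
    (b"brand-new-family", date(2013, 4, 1)),  # genuinely new malware
]
print(retrospective_rate(family_heuristic, date(2013, 1, 1), samples))  # 0.5
```

The 50% comes from catching the variant and missing the genuinely new family, which is the limitation described above.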

Another type is the dynamic test: the current version of the antivirus is installed and a user's activity is simulated. There are several problems here:

- Typical users do not configure security settings (say, parental control or the behavioral analyzer). Accordingly, malicious files that were pre-tested to go undetected by a default installation will get through without any problems.

- I personally know of no standard profiles of users' network activity. If I'm wrong, please correct me.

- Social engineering works wonders. A recent example is letters purporting to come from the tax inspectorate that addressed recipients by their real names (so much for personal data protection!).

Still, dynamic tests are better than ordinary large-collection tests, since they exercise most of the antivirus's components.

And by the way, guess which of the most frequently recommended antiviruses not only failed a test like the one in habrahabr.ru/post/160905, but at one point even managed to score a negative number of points (http://dennistechnologylabs.com/reports/s/am/2012/DTL_2012_Q4_Home.1.pdf, dennistechnologylabs.com/reports/s/am/2013/DTL_2013_Q4_Home.1.pdf)?

Besides detection tests, the most common antivirus tests measure scanning speed and how quickly programs launch. Performance tests are usually combined with detection tests on large collections. There are two catches here.

Roughly speaking, antivirus databases are a set of records and procedures that determine how to detect a virus. The databases also contain unpackers for various archive formats, executable packers, database formats, and so on. Accordingly, to find a given virus, the antivirus has to run through the entire database.

Naturally, vendors optimize their database formats to speed up the search; among other things, Doctor Web's already-mentioned FLY-CODE technology serves this purpose. But in general, the larger the database, the longer the search takes. Any vendor needs roughly the same amount of data to identify a virus (we are talking about signatures, of course). So a much smaller database combined with a high scanning speed should raise suspicion. Yet no tests take the antivirus's knowledge of viruses into account, for example by normalizing scanning speed to database size.
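Here is a hypothetical Python sketch of both points. The first function is a naive signature scan whose work on a clean file grows with the number of database records. The last one shows one possible way to normalize raw scanning speed by database size: speed multiplied by the number of records. That normalization is an illustrative assumption, not an established metric, and all figures below are made up.

```python
from typing import List, Tuple


def naive_scan(data: bytes, signatures: List[bytes]) -> Tuple[bool, int]:
    """Check signatures one by one; return (detected, number of checks made).

    A clean file has to be compared against every record, so the work grows
    with the database. Real engines use far smarter indexes than this.
    """
    checks = 0
    for signature in signatures:
        checks += 1
        if signature in data:
            return True, checks
    return False, checks


def mb_per_second(bytes_scanned: int, seconds: float) -> float:
    """Raw scanning speed in megabytes per second."""
    return bytes_scanned / seconds / 1_000_000


def record_weighted_speed(speed_mb_s: float, db_records: int) -> float:
    """Hypothetical normalization: raw speed multiplied by database size."""
    return speed_mb_s * db_records


small_db = [b"sig%d" % i for i in range(5)]
large_db = [b"sig%d" % i for i in range(50_000)]
clean_file = b"an ordinary document"
print(naive_scan(clean_file, small_db))  # (False, 5)
print(naive_scan(clean_file, large_db))  # (False, 50000)

# Made-up products: A is fast with a tiny database, B slower with a large one.
print(record_weighted_speed(100.0, 5_000))     # 500000.0
print(record_weighted_speed(40.0, 1_000_000))  # 40000000.0
```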

A real-life example. I don't know about this year, but a year ago there was still an antivirus in Russia that “detected” viruses using five or seven signatures (I don't remember exactly how many).

Properly speaking, such testing should also be based on standard user profiles. In real life, for example, many files are usually open at the same time, and modern processors are multi-core. How do you evaluate how well an antivirus runs both on modern computers and on the older machines still widely used in the regions? (Requests to protect computers based on the 386 processor stopped not so long ago.) There should be several test modes with different numbers of simultaneously opened files, that is, different numbers of test streams. Why test on a single machine configuration, and a modern one at that? Do testers really think that all users everywhere in Russia have computers made this year? And, to return to an earlier point, I have not seen a single standard user profile on the Internet: how much data, and what data, is sent and downloaded, at what intervals, etc.
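A multi-stream mode could be prototyped along these lines in Python, using only the standard library. The sketch assumes the product under test is an on-access file monitor. It therefore just opens and reads files from several threads at once and times each stream count. The file count, file size and stream counts are arbitrary choices for illustration.

```python
import os
import tempfile
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Sequence, Tuple


def read_file(path: str) -> int:
    # Opening and reading a file is what an on-access monitor intercepts.
    with open(path, "rb") as f:
        return len(f.read())


def benchmark(paths: List[str],
              stream_counts: Sequence[int] = (1, 2, 4, 8)) -> Dict[int, Tuple[float, int]]:
    """Map each number of parallel streams to (elapsed seconds, bytes read)."""
    results = {}
    for streams in stream_counts:
        start = time.perf_counter()
        with ThreadPoolExecutor(max_workers=streams) as pool:
            total = sum(pool.map(read_file, paths))
        results[streams] = (time.perf_counter() - start, total)
    return results


with tempfile.TemporaryDirectory() as tmp:
    paths = []
    for i in range(16):
        path = os.path.join(tmp, f"file_{i}.bin")
        with open(path, "wb") as f:
            f.write(os.urandom(256 * 1024))  # 256 KiB of random bytes
        paths.append(path)
    for streams, (seconds, total) in benchmark(paths).items():
        print(f"{streams} stream(s): {total} bytes in {seconds:.3f} s")
```

A real harness would also have to account for the operating system's file cache between runs. It would then need to be repeated on older hardware as well as on current machines.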

In summary, the current state of antivirus speed testing pushes vendors to create:

- products with the smallest possible database;

- products that know only what is found in the test sets (“viruses in the wild”);

- products in which all attention goes to developing the components that get tested.

All this is aggravated by the very high barrier to entering the market. To bring a new product to market, you must immediately have a database covering all current and past viruses, the methods for detecting them, and much more. Detecting just the top 100 viruses is much easier.

Before moving on to the last group of tests, a bit of theory. There are quite a lot of antivirus programs on the market, and they fall into two groups. The first group develops everything needed to catch and cure malware in-house; the second licenses antivirus engines from the first. In tests on large collections and in heuristics tests, both groups show roughly equal results. But in real life, things are different.

Let's look at the last two tests, which belong to the category of small-collection tests. Here the organizers can check the outcome of every “encounter” between each antivirus and each virus. These are the active infection treatment test and the self-protection test.

www.anti-malware.ru/antivirus_self_protection_test_x64_2011

www.anti-malware.ru/malware_treatment_test_2012 (awards removed from the table)

Roughly speaking, the difference between the first and second groups lies in the drivers. It is the antivirus engine that gets licensed and handed over; the API interception system is usually not included. But a malicious file has to be caught. Traffic must be intercepted before the virus gets into the system, and a rootkit must be detected and destroyed once the update arrives. In the active infection treatment and self-protection tests we see exactly this difference in approaches to security: a sharp drop in quality after the top three.

Fans of free products, please understand: the only free cheese is in a mousetrap. Developing any security solution is an extremely costly process, and the leading companies employ professionals who want to be paid adequately.

One more thing. The information security market is unique: here, buying a company may give the buyer nothing. Buying patents is not enough; you need the people with the know-how (http://www.i2r.ru/news.shtml?count=30&id=20668, www.kaspersky.ru/about/security_experts).

Unfortunately, the resource that conducted the above tests was founded by Ilya Shabanov, who at the time worked at Kaspersky Lab. This raised strong suspicions that the results were rigged. Eugene Kaspersky once wrote the following for a similar reason, although about a different test (http://e-kaspersky.livejournal.com/83656.html):

Let's not judge its objectivity and independence, because Passmark hides nothing. The disclaimer in the document honestly states that the test was sponsored and carried out according to the methodology of one of the participants:

Curiously, the same disclaimer also appears in previous antivirus tests (2010, 2009, 2008). Guess who came first in them?

But the test is a fact. The winner cites the opinion of a respected organization (and Passmark really is one) and waves the flag, and the audience applauds in admiration. So it would be very interesting to know how certain of the “supplied scripts” skewed the test so as to crown the sponsor.

To my deep regret, www.anti-malware.ru has not run these tests for a long time, and nothing has replaced them.

In summary, active infection treatment and self-protection tests:

- most accurately reflect the qualities by which an antivirus should be chosen;

- clearly show which product can perform an antivirus's main task: curing active infections;

- because the collection is small, can show a significant gap behind the winner when even a single sample is missed.

Even if the collection was assembled impartially, there is no guarantee that the runner-up is worse than the leader. The relevant sample may simply not have reached its laboratory for analysis in time.
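A confidence interval for each product's detection rate makes this visible. The Python sketch below uses the Wilson score interval at roughly 95% confidence. That method is my choice for illustration, not one the testers use. The 20-sample collection is hypothetical: a product that caught every sample and one that missed a single sample end up with overlapping intervals, so the test cannot really separate them.

```python
import math
from typing import Tuple


def wilson_interval(detected: int, total: int, z: float = 1.96) -> Tuple[float, float]:
    """Approximate Wilson score interval for a detection rate (z=1.96 ~ 95%)."""
    p = detected / total
    denom = 1 + z * z / total
    center = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return center - half, center + half


leader = wilson_interval(20, 20)     # caught all 20 samples
runner_up = wilson_interval(19, 20)  # missed one sample
print(f"leader:    {leader[0]:.3f} .. {leader[1]:.3f}")        # 0.839 .. 1.000
print(f"runner-up: {runner_up[0]:.3f} .. {runner_up[1]:.3f}")  # 0.764 .. 0.991
print(leader[0] < runner_up[1])  # True: the intervals overlap
```

With 20,000 samples instead of 20, the same 100% versus 95% gap no longer overlaps. It is the small collection, not the products, that blurs the ranking.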

A few more points about tests.

- As a rule, only workstation protection products are tested; server products are rarely tested. This, too, is due to the lack of generally accepted testing methods. A standard employee's mail profile can still be obtained, but I have never seen one for gateways.

- The traffic throughput figures for gateway solutions in marketing materials are given without any indication of how they were obtained, which makes them worthless.

- We are often asked about the maximum traffic for a particular situation. In most cases, gateway performance is limited by the access channel. Even a modern server in its minimum configuration can protect up to 250 employees (provided, of course, that traffic stays within reasonable limits), which covers the needs of most companies.

To sum up, the situation with tests fits the phrase "We are not lying to you; we are just not telling you the whole truth." Test results should of course be taken into account, but only after you have drawn up a list of the tasks the antivirus is responsible for in your system. If you want an antivirus to be invisible, that is your right. But if you need insurance in case disaster strikes, tests will not save you unless you understand what an antivirus is supposed to do.

Or, in the words of Eugene Kaspersky:

You may ask: how, then, do you find your way? Who can authoritatively measure antivirus quality? The answer is simple: there is really NO test that I could recommend without reservation as the best indicator. Some have methodological flaws; others are very narrow.

What can I advise?

- In my opinion, our antiviruses are the best in the world. (I deliberately don't say "Russian": without our colleagues from Ukraine, Kazakhstan and other countries, we would not be who we are.)

- Choose antivirus software only from vendors who develop everything needed for catching and curing malware in-house. Advertising that boasts of using several engines is the road to viruses.

- Use only antiviruses from vendors focused on developing the antivirus engine, not just on changing the interface. By the way, can anyone name the innovations in their vendor's antivirus engine over the past year? The words “optimized” and “accelerated” don't count.

Everyone draws their own conclusions.

And finally, the traditional question:

- Are there currently any standards, regulations or methodologies that allow you to build a reliable protection system based on them alone? Could a young specialist who is suddenly told to draw up the technical specification for protecting the company complete the task? What materials should they rely on? Bear in mind that, sadly, most of those offering help are interested in selling particular software or hardware. Sometimes this is without malicious intent: the product is simply easy to sell, with no need to convince the client.

Added in light of the prediction made in the article:

Chinese antivirus company stripped of its awards for fraud

Source: https://habr.com/ru/post/254351/
