The process of developing and testing daemons

Today we will talk about the "low-level" bricks of our project: daemons. The Wikipedia definition:

“A daemon is a computer program on UNIX-class systems that is launched by the system itself and runs in the background without direct user interaction.”

It may not be obvious, but almost all of the site's functionality depends on these programs. The “Dating” game, the search for new faces, the center of attention, messaging, statuses, geolocation and many other features are tied to one daemon or another. So we can say that they help people around the world communicate and find new friends. Several dozen daemons can be running and interacting with each other on the site at the same time. Their correct behavior is very important, so we decided to cover the main functionality of the daemons with autotests.

At Badoo, a dedicated department is responsible for this. Today we will describe how we develop this critical part of the site and how we run autotests against it. The area is quite specific and there is a lot of material, so we have prepared a structured overview of the whole process that anyone interested can follow.

We use Git as our VCS, TeamCity for continuous integration, and JIRA as our bug tracker. For testing we use PHPUnit. Daemons, like the rest of the site, are developed on the "feature branch" principle. To understand what that means, we will look at the projections of our flow onto Git and onto JIRA.

Git flow

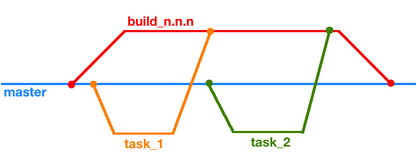

Work in Git can be schematically described with a picture:

')

To work on a task, a separate branch task_n is forked from master, and all development happens in it. This branch, possibly together with several others, is then merged into the so-called build_n.nn branch (or just "the build"), and only the build gets into master.

JIRA flow

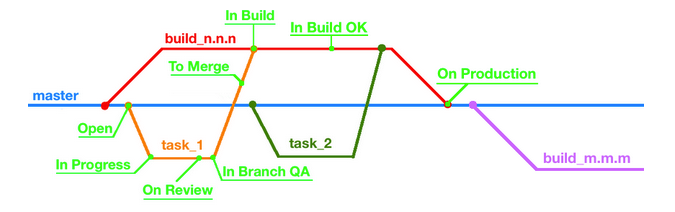

The process in JIRA is more complex and involves more steps. The figure below shows the main ones.

Open -> In progress

The Open status means that the task exists but work on it has not started. When the programmer starts working and pushes the first changes to our Git server (a branch appears whose name, task_n, contains the task's JIRA identifier), several things happen. First, the task's status becomes In progress. Second, TeamCity creates a separate build configuration for this branch, which runs every time the developer pushes new changes. Thanks to the efforts of the release engineers and developers, the build artifacts are copied to the machines of our development environment, all regression tests are run, and the result of the run is shown in the TeamCity web interface. When the developer considers the work on the task finished, they move the ticket to the On Review status.

On review

Here a colleague studies the task thoroughly. If the reviewer finds nothing to complain about, the ticket moves to the In Branch QA status.

In Branch QA

At this stage the task goes to the tester, who first looks at the result of the regression test run, since some tests may now be failing. If so, there are two possibilities: either everything is fine, the failures are caused by the new behavior and the regression tests need to be updated, or the daemon does not work as expected and the task goes back to the developer. If the tests need revising, the QA engineer gets down to work, covering the daemon's new behavior with tests and correcting old ones where necessary.

To Merge -> In Build

When the developer and the tester believe that the build from the task_n branch is stable and behaves as expected, the To Merge button is pressed in JIRA. At this point TeamCity merges the developer's branch into the build_n.nn branch and starts its build. The task goes to the tester again, now with the In Build status.

The point is that unexpected conflicts can occur during the merge: while the branch was being tested, incompatible changes may have been added to the build branch. When that happens, the task is handed to the programmer for a manual merge into the build. Another problem may be failing regression tests or, in the worst case, a daemon that cannot start. This is solved by rolling the task back from the build branch and returning the ticket to the developer. If only minor changes are needed to fix the problem, however, the task stays and a patch is applied in the build itself.

When the tests pass and the daemon is stable, the QA engineer sets the task's status to In Build OK and it returns to the developer. The daemon build with the modified behavior becomes the main one in the devel development environment, where it is battle-tested.

In Build OK -> On Production

At some point enough necessary changes have accumulated in the build branch, or critical tasks appear, and the developer decides that it is time to move the build to production. The Finish Build button is pressed, and the new version of the daemon, thanks to our valiant admins, starts working on the machines that do the real work of our site. At this time the build is merged into the master branch and a new build named build_m.mm is created, which will receive all new changes. The programmer's ticket is set to On Production.
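The ticket statuses described above form a small state machine. Here is a minimal sketch in Python. The status names come from the text, but the `TRANSITIONS` table, the `move` and `happy_path` helpers and the exact set of "return to the developer" paths are assumptions made for illustration. The To Merge button is modeled as the step from In Branch QA to In Build.

```python
# Hypothetical model of the ticket workflow; not the real JIRA configuration.
TRANSITIONS = {
    "Open": {"In progress"},
    "In progress": {"On Review"},
    "On Review": {"In Branch QA", "In progress"},   # assumed: reviewer may send it back
    "In Branch QA": {"In Build", "In progress"},    # "To Merge" button, or back to developer
    "In Build": {"In Build OK", "In progress"},     # rollback from the build branch
    "In Build OK": {"On Production"},               # "Finish Build" button
    "On Production": set(),
}


def move(current, target):
    """Return the new status, or raise ValueError if the step is not allowed."""
    if target not in TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move ticket from {current!r} to {target!r}")
    return target


def happy_path():
    """Walk a ticket from Open to On Production without any returns."""
    path = ["Open"]
    for step in ["In progress", "On Review", "In Branch QA",
                 "In Build", "In Build OK", "On Production"]:
        path.append(move(path[-1], step))
    return path
```

Calling `move("Open", "On Production")` raises `ValueError`, which is the point of writing the table down: skipped stages become visible immediately.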

Combining the two projections of the entire development process, we get the cycle shown below.

Learn more about our development processes and the tight integration of JIRA, TeamCity and Git in our articles here, here and here.

Performing autotests

Test object

First, let's define what we are testing.

In our case, the program is generally a binary file that is run from the terminal. Various parameters affecting its operation can be passed as arguments: configuration files (“configs”), ports, log files, folders with scripts, and so on. Most often it is at least a config, in which case the launch string looks like this:

>> daemon_name -c daemon_config

Once launched, the daemon waits for connections on several ports (or socket files), through which it communicates with the outside world. The data format varies, from plain text or JSON to protobuf, and a daemon usually supports a couple of them at once. Some daemons can also save their data to disk and load it again at startup. Development is mainly in C and C++, but Golang and Lua have recently been added.

In general, running autotests can be divided into several stages:

- Preparation of the test environment.

- Launching and running the tests.

- Cleaning the test environment.
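These three stages map onto the fixture hooks of an xUnit-style framework. Our tests are written with PHPUnit. The sketch below uses Python's `unittest` purely as a stand-in to show the order in which such hooks fire. The class name, the `calls` log and the `run_lifecycle` helper are made up for illustration.

```python
import io
import unittest

calls = []  # records the order in which the hooks fire


class DaemonLifecycleTest(unittest.TestCase):
    @classmethod
    def setUpClass(cls):
        calls.append("prepare shared environment")

    def setUp(self):
        calls.append("prepare per-test state")

    def test_something(self):
        calls.append("run test")

    def tearDown(self):
        calls.append("clean per-test state")

    @classmethod
    def tearDownClass(cls):
        calls.append("clean shared environment")


def run_lifecycle():
    """Run the test case once and return the recorded hook order."""
    calls.clear()
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DaemonLifecycleTest)
    # Send the runner's report to a buffer so only the hook order matters here.
    unittest.TextTestRunner(stream=io.StringIO(), verbosity=0).run(suite)
    return list(calls)
```

Preparation happens in the two set-up hooks, the test body runs in between, and cleanup mirrors preparation in reverse order.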

So, we run tests.

>> phpunit daemon_tests/test.php --option=value

Preparing the test environment

When the tests are launched, the code defined in the setUpBeforeClass and setUp methods runs first. This is where the environment is prepared: the launch parameters (described separately below) are read, the required version of the binary is selected, temporary folders, configs and any other files needed for the run are created, and the databases are prepared if necessary. The names of all created objects contain a unique identifier, which is chosen once in the constructor and then used everywhere. This avoids conflicts when several developers and testers run the tests at the same time.

A daemon often communicates with other daemons while it works: it can receive or send data and exchange commands with its peers. In this case we use test stubs ("mocks") or run the real daemons. When everything necessary has been created, a daemon instance is launched with our config in our environment.
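The "one unique identifier in every name" idea can be sketched as follows. This is an illustrative Python sketch, not our PHPUnit code: the `DaemonEnvironment` class, the config keys `port` and `log_file`, and the file naming scheme are all assumptions.

```python
import os
import tempfile
import uuid


class DaemonEnvironment:
    """Hypothetical helper: every artifact name carries one unique run id."""

    def __init__(self, daemon_name):
        # The id is chosen once and reused for every file and folder,
        # so parallel runs by different people do not collide.
        self.run_id = uuid.uuid4().hex[:8]
        self.daemon_name = daemon_name
        self.workdir = tempfile.mkdtemp(prefix=f"{daemon_name}_{self.run_id}_")
        self.config_path = os.path.join(
            self.workdir, f"{daemon_name}_{self.run_id}.conf")
        self.log_path = os.path.join(
            self.workdir, f"{daemon_name}_{self.run_id}.log")

    def write_config(self, port):
        """Write a minimal config; the key names are invented for this sketch."""
        with open(self.config_path, "w") as fh:
            fh.write(f"port = {port}\n")
            fh.write(f"log_file = {self.log_path}\n")
        return self.config_path

    def command_line(self, binary):
        """Launch arguments in the `daemon_name -c daemon_config` form."""
        return [binary, "-c", self.config_path]
```

Two instances created for the same daemon get different working directories and config paths, which is what lets several people run the same suite on one machine.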

Remember that the daemon waits for connections on its ports? The last step is to open connections to them and check that the daemon is ready to communicate. If all is well, the tests themselves start.
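A readiness check of this kind is usually a polling loop. Below is a minimal Python sketch, assuming a TCP port. The function name `wait_for_port` and the timeout values are illustrative, and a real check might also send a protocol-level "ping" rather than only connecting.

```python
import socket
import time


def wait_for_port(host, port, timeout=5.0):
    """Poll until a TCP connection succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=0.5):
                return True
        except OSError:
            time.sleep(0.1)  # daemon not listening yet; retry shortly
    return False
```

The function returns `False` instead of raising, so the caller can decide whether a daemon that never came up should fail the whole run or only the current test class.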

Launch and execution

Most tests can be described by the algorithm: send request, get response, assert.

Send request can be one or more requests that bring the daemon into a particular state. Get response covers receiving a reply, reading records from the database or reading a file. Assert covers a wide range of actions: validating the response, evaluating the daemon's state, verifying data from neighboring daemons, comparing records in the database, and even checking that snapshots are created with the correct data.
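The send request, get response, assert pattern can be sketched end to end. Since a real daemon is not available here, the Python example below starts a tiny in-process server as a stand-in. The one-JSON-object-per-line protocol, `EchoHandler`, `send_request` and `run_example` are all invented for this sketch and do not describe any of our daemons.

```python
import json
import socket
import socketserver
import threading


class EchoHandler(socketserver.StreamRequestHandler):
    """Stand-in daemon: answers one JSON line with {"status": "ok", "echo": ...}."""

    def handle(self):
        request = json.loads(self.rfile.readline())
        reply = {"status": "ok", "echo": request}
        self.wfile.write(json.dumps(reply).encode() + b"\n")


def send_request(address, payload):
    """Send one JSON line and read one JSON line back."""
    with socket.create_connection(address, timeout=2.0) as conn:
        conn.sendall(json.dumps(payload).encode() + b"\n")
        with conn.makefile("rb") as reader:
            return json.loads(reader.readline())


def run_example():
    # Port 0 lets the OS pick a free port for the stand-in daemon.
    with socketserver.TCPServer(("127.0.0.1", 0), EchoHandler) as server:
        thread = threading.Thread(target=server.serve_forever, daemon=True)
        thread.start()
        try:
            # send request + get response
            response = send_request(server.server_address, {"cmd": "ping"})
            # assert
            assert response["status"] == "ok"
            return response
        finally:
            server.shutdown()
```

In a real suite the "get response" step may just as well read a database row or a file, and the assertion may inspect a neighboring daemon rather than the reply itself.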

But our tests are not limited to this algorithm: where possible, we try to cover various aspects of our test subject's life. So all sorts of trouble can befall it: it can be restarted several times, the data in requests can be truncated or contain incorrect information, invalid settings can end up in the configuration, and the daemon's peers can stop responding or mysteriously disappear.
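Truncated and corrupted requests are easy to generate mechanically. Here is a small Python sketch. The helper names are hypothetical, and the choice of `0xFF` as the "bad" byte is arbitrary.

```python
def truncated_variants(request):
    """Every proper prefix of the request, from empty to one byte short."""
    return [request[:cut] for cut in range(len(request))]


def corrupted_variants(request):
    """Copies of the request with the byte at each position replaced by 0xFF.

    A position that already holds 0xFF yields an unchanged copy.
    """
    return [request[:i] + b"\xff" + request[i + 1:] for i in range(len(request))]
```

Each variant is then sent to the daemon, and the test asserts that the daemon rejects it (or waits for more data) and keeps serving valid requests afterwards.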

At the moment the most difficult and (in my opinion) most interesting area is testing daemon-to-daemon interaction. This is mainly because the communication depends on many factors: internal logic, external requests, time, settings in the config, and so on. It gets quite fun when our test subject can communicate with several different daemons.
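A mock of a neighboring daemon typically does two things: it records what it was sent and it answers with a pre-programmed reply. Below is a minimal Python sketch of such a stub. The `StubDaemon` class, the line-based protocol and the `talk_to_stub` helper are assumptions made for illustration only.

```python
import socket
import socketserver
import threading


class StubDaemon:
    """Hypothetical mock of a neighboring daemon: records each received line
    and answers it with a fixed, pre-programmed reply."""

    def __init__(self, canned_reply):
        self.received = []
        stub = self

        class Handler(socketserver.StreamRequestHandler):
            def handle(self):
                for line in self.rfile:
                    stub.received.append(line.rstrip(b"\n"))
                    self.wfile.write(canned_reply + b"\n")

        self._server = socketserver.ThreadingTCPServer(("127.0.0.1", 0), Handler)
        self._server.daemon_threads = True
        self.address = self._server.server_address
        self._thread = threading.Thread(
            target=self._server.serve_forever, daemon=True)

    def __enter__(self):
        self._thread.start()
        return self

    def __exit__(self, *exc_info):
        self._server.shutdown()
        self._server.server_close()


def talk_to_stub():
    """Play the role of the daemon under test talking to its neighbor."""
    with StubDaemon(b"OK") as stub:
        with socket.create_connection(stub.address, timeout=2.0) as conn:
            conn.sendall(b"status\n")
            with conn.makefile("rb") as reader:
                reply = reader.readline()
        return stub.received, reply
```

In a test, the daemon under test is pointed at `stub.address` through its config, and the assertions then inspect `stub.received` to verify what the daemon actually sent to its neighbor.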

Cleaning the test environment

However the tests end, we have to clean up afterwards. First we close the connections and stop the daemon and all associated mocks or neighboring daemons. Then all files and folders created during the tests are deleted if they are no longer needed, and the databases are cleared.

The weak spot here is cleaning up after fatal errors: in some cases temporary files may be left behind, and sometimes even the daemon keeps running.
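One common way to narrow this gap is to tie cleanup to a construct that runs even when the test body raises. Here is a Python sketch using a context manager. The name `managed_daemon` is hypothetical, and the approach covers exceptions only: if the test runner itself is killed, nothing in this code runs, so an external sweeper would still be needed.

```python
import contextlib
import shutil
import subprocess
import sys
import tempfile


@contextlib.contextmanager
def managed_daemon(argv):
    """Start a process in a scratch directory and always clean both up."""
    workdir = tempfile.mkdtemp(prefix="daemon_test_")
    process = subprocess.Popen(argv, cwd=workdir)
    try:
        yield process, workdir
    finally:
        # Runs on normal exit and on exceptions raised inside the with-block.
        if process.poll() is None:
            process.terminate()
            try:
                process.wait(timeout=5)
            except subprocess.TimeoutExpired:
                process.kill()  # escalate if the process ignores terminate()
                process.wait()
        shutil.rmtree(workdir, ignore_errors=True)
```

For example, wrapping `[sys.executable, "-c", "import time; time.sleep(60)"]` in `managed_daemon` and raising inside the `with` block still leaves no running process and no scratch directory behind.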

One more specific feature is layered on top of the whole testing process described above: distribution across machines. The usual scenario now is to run the tests on one server and start the daemon on another, or on another server inside a Docker container.

Let's return to the test run parameters. The list currently consists of 5-6 options and keeps growing. All of them are meant to make testing easier and to let you “customize” the tests a little without touching the code. Here is our top list:

- branch. The name of the JIRA ticket under which the work on the daemon was done is passed here. The tests will use the binary built for this particular task;

- settings. The path to an ini file with test-specific settings is passed here. These may include the build version, the path to the default config, the user who starts the daemon, and the location of the necessary files;

- debug. If this flag is passed, the tests run in debug mode. For us this means that temporary files and folders get readable names, which makes them easy to tell apart from the rest. In addition, none of the files created during the run are deleted, which makes it possible to reproduce failing tests if necessary;

- script. When this flag is present, a PHP script is generated during the test that repeats all of the test's actions up to the end of the test or the point where an assertion fires. The script is completely standalone, which helps when debugging a daemon.
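The four options above could be parsed along these lines. This is a Python `argparse` sketch of the idea, not our PHPUnit wrapper: the option names come from the list, while the function name, help texts and the `--option=value` spelling of each flag are assumptions.

```python
import argparse


def parse_run_options(argv):
    """Parse the four run options named in the list above."""
    parser = argparse.ArgumentParser(description="daemon test run options (sketch)")
    parser.add_argument("--branch",
                        help="JIRA ticket whose build should be tested")
    parser.add_argument("--settings",
                        help="path to an ini file with test-specific settings")
    parser.add_argument("--debug", action="store_true",
                        help="readable temp names; keep files after the run")
    parser.add_argument("--script", action="store_true",
                        help="emit a standalone script that replays the test")
    return parser.parse_args(argv)
```

For instance, `parse_run_options(["--branch=TASK-1", "--debug"])` (with a made-up ticket name) yields `branch` set to `"TASK-1"`, `debug` set to `True`, and the other two options left at their defaults.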

That is all we wanted to cover today, and we will be happy to answer questions in the comments.

In reserve we have ideas for articles about generating scripts that replay a test, generating documentation, and using Docker containers in tests. Let us know which topics interest you most.

Konstantin Karpov, QA engineer

Source: https://habr.com/ru/post/254087/
