"Soft + boxed server" or a complete solution?

Chad, what are you trying to say with this heading?

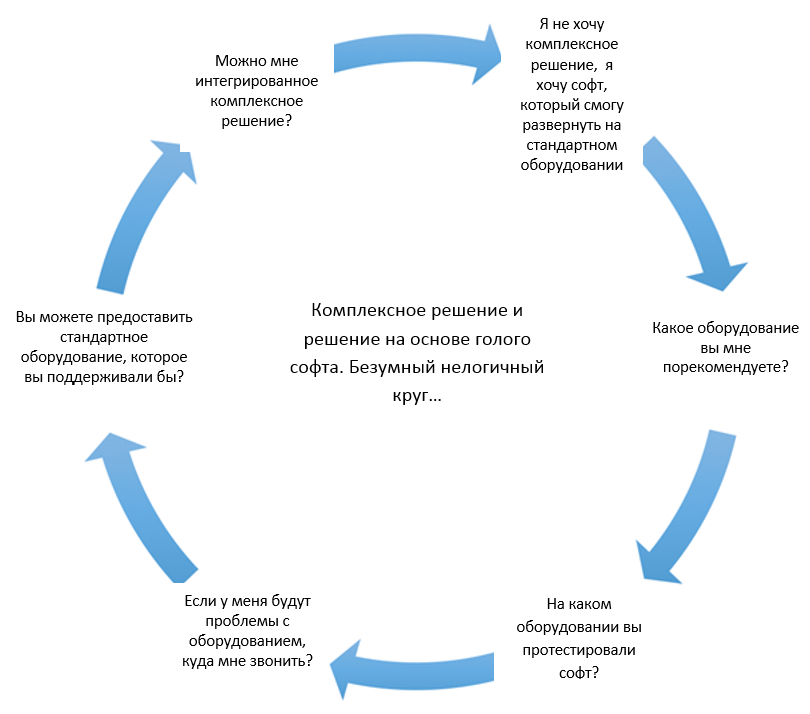

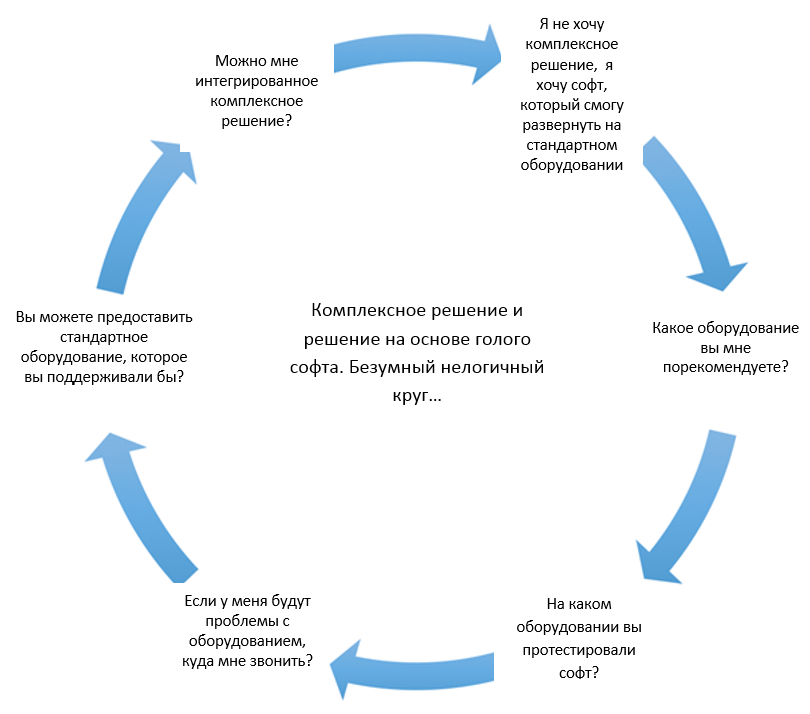


Last week I had a discussion with Japanese partners about software-defined storage. We talked about what EMC is doing in this area and shared thoughts on what partners should do. Interestingly, they were fully focused on the economic models of the “software + general-purpose server” bundle. They even seemed to find differences between these models and others where I saw none at all.

A week before that, when I was in Australia, I had many conversations with customers about Hadoop deployment scenarios, and in particular about when it makes sense to use Isilon for this. All the customers thought the same way: take a distribution and install it on off-the-shelf servers. At first they could not accept the idea that an Isilon-based solution could be better, faster, and cheaper. But they got there in the end.

... And that same week, at the VMUG conference in Sydney, I had interesting discussions about VSAN, ScaleIO, and the Nutanix and SolidFire appliances compared with pure software solutions.


The main sticking point in all these conversations was the same: people find it hard to accept one VERY, VERY simple thing:

Storage systems are (for the most part) built as “software + off-the-shelf server”. They are simply packaged and sold as appliances.

A note: “for the most part” means there are categories and architectures that include either unique hardware or standard hardware that is so specific (for example, XtremIO or VMAX3 nodes connected via InfiniBand) that separating the software from the hardware makes no sense. (By the way, in my classification these are “Type 2” architectures: tightly coupled scale-out clusters.)

Humans are utterly illogical ... KHAN!!! (Hats off to you, Leonard Nimoy: live long and prosper in our hearts!)

Oh, people. We get stuck on visuals and fixate on the physical form of things. It is hard for us to think about system architectures in terms of how they FUNCTION rather than how they are PACKAGED.

Let me illustrate what I mean. Keep reading and you will see how things really are!

Suppose I need to deploy a Hadoop cluster of about 5 petabytes. For that, I have to think through complex networking, compute infrastructure, and staging (staging means loading data into the Hadoop cluster from the environment where it was generated or stored; translator's note).

If I were like most people, I would take a distribution, plus servers and network infrastructure components. I would probably start with a small cluster and then grow it. I would probably use rack-mounted servers. And for a rough estimate of the cluster size, I would use the standard ratios of disks per server. I see that many people do exactly that.

Starting from that scenario, imagine someone tells you: “You know ... if you 1) virtualized the Hadoop nodes with vSphere and Big Data Extensions, not for consolidation but for better manageability; 2) used Cisco UCS instead of rack servers; and 3) used EMC Isilon instead of stuffing rack servers with disks, the solution would be twice as fast, twice as compact, and half the cost in terms of TCO.” Well ... if anyone told you that, you would decide they were drunk out of their mind.

But it turns out that the statement above is true.

Of course, not for everyone. However, it is true for one interesting case, analyzed at the link. This is a real customer whose Hadoop cluster has grown to 5 petabytes. The results at the link come from their own testing (thanks to Dan Beresl and Chris Birdwell for sharing). The customer in question is a huge telecommunications company. Detailed test results, including performance testing, are available to you.

And now an interesting observation: many people find it hard to think of Isilon as HDFS storage (HDFS is the distributed file system in Hadoop; translator's note), because it “looks” like an appliance rather than like standard general-purpose servers running the necessary software.

Often, customers starting out with Hadoop think: "I need a standard hardware platform."

However, do not judge a book by its cover. "A rose smells like a rose, whether or not you call it a rose."

Take a look at these pictures:

When you look at them, you probably think: “industry-standard servers”.

... And now let me show you their front:

When you look at them, you probably think: “appliance”.

But in both cases you are looking at the same thing.

Software called OneFS is what gives Isilon its power. Isilon is delivered as an appliance because that is how customers want to buy (and support) it.

In the HDFS case analyzed at the link, the virtualized configuration based on UCS and Isilon became so fast (2 times faster!), compact (2 times more compact!), and economical (2 times more economical!) thanks to Isilon's software features:

- High availability of data both within a single rack and across racks, without storing three copies of the data. This is SOFTWARE

- No staging, because the same data is accessible over both NFS and HDFS. This is also SOFTWARE

- Rich features for creating snapshots and replicas of HDFS objects. And this, too, is SOFTWARE

... in the telecom customer's case, there was no magic on the hardware side (although there was some gain from using UCS, thanks to denser packing of compute: dropping local disks made it possible to replace the rack servers with blades).
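To see why avoiding triple copies matters at this scale, here is a rough back-of-the-envelope sketch. It compares the raw capacity needed for 5 PB of usable data under 3x replication with the raw capacity under a parity-style layout. The 8 data + 2 parity stripe is purely an illustrative assumption: the source does not state which protection scheme the customer used, and the function names are hypothetical.

```python
from fractions import Fraction


def raw_capacity_replicated(usable_pb, copies=3):
    """Raw capacity (PB) when every block is stored `copies` times."""
    return Fraction(usable_pb) * copies


def raw_capacity_parity(usable_pb, data_units, parity_units):
    """Raw capacity (PB) when each stripe holds data_units + parity_units.

    The stripe geometry is an assumption for illustration only.
    """
    return Fraction(usable_pb) * Fraction(data_units + parity_units, data_units)


if __name__ == "__main__":
    usable = 5  # PB, the cluster size mentioned in the article
    replicated = raw_capacity_replicated(usable)
    parity = raw_capacity_parity(usable, data_units=8, parity_units=2)
    print(f"3x replication: {float(replicated):.2f} PB raw")
    print(f"8+2 parity:     {float(parity):.2f} PB raw")
```

Under these assumptions, triple replication needs 15 PB of raw disk, while the hypothetical 8+2 layout needs 6.25 PB. The real ratio depends on the protection level actually configured.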

There is a funny twist in this whole story. If we told customers, “Here is a software implementation of HDFS with all the properties above, put it on your own hardware”, they would be more willing to start using OneFS. They would not have to deal with the difficulties that local disks bring. Scaling HDFS would be less painful for them.

So why don't we do this? The answer is shown in the diagram below:

I have this strange dialogue with customers all the time. They start by saying “I don't want an appliance” (fine, then: “Here are ScaleIO / VSAN and Isilon as software”), and then they arrive at the conclusion that what they are asking for is an appliance :-) This is as predictable as the sun rising in the east. Because of such dialogues, SolidFire recently announced a software-only version of their product (I bet sales will be low compared with sales of their appliance), and Nutanix even started out with pure software solutions (and ended up with appliances). For the same reasons, VSAN needed VSAN Ready Nodes for a successful start, and I suspect that very soon a substantial share of VSAN consumption will go through appliances such as VSPEX Blue. Of course, that option will be more popular than “install VSAN on your own hardware”. The same is true for ScaleIO.

Why?

The answer is:

- Many people do not realize that the economics of appliances is driven by the benefit to the customer and by business models, not by the cost of hardware. People realize this once they start building complete solutions with their own hands. Try building a highly available, fault-tolerant Nexenta cluster: the price will be the same as for VNX or NetApp FAS. Try deploying Gluster with Red Hat enterprise support: you will pay as much as for Isilon. Try building a Ceph cluster (again, with enterprise support): you will get the ECS price. Conclusion: the hardware has nothing to do with it (at least not for “Type 3” and “Type 4” architectures) (see the reference above to Chad Sakac's classification of architectures; translator's note)

- Even the largest companies usually have no internal “bare metal as a service” function. Such a function means keeping a team responsible for developing standards for off-the-shelf hardware and its support options, and for creating an abstract hardware model on which all sorts of software can be deployed. Google, Amazon, and Facebook have a “bare metal as a service” function; most others do not. Conclusion: what matters is the support model that appliances and converged solutions provide.

But don't get me wrong. The idea of software that is not tied to hardware is still important.

Such software can be used in many different ways. When software exists separately from hardware, it can be obtained “without extra baggage” in order to study it, try it, play with it, or use it in production, but without support. Either way, someone has to pay for support (whether the code is open or closed).

For all these reasons and many others, all EMC software will gradually become available “without extra baggage”, and in some cases perhaps even as open source.

By the way, none of this means there is no room for innovation in hardware. Here is the newest Isilon HD node, with and without its decorative panels:

It is easy to see why it bears the codename "Colossus". The disks are mounted vertically in enclosures built for dense packing: Viking @ 120 x 2.5" SSD/HDD and Voyager @ 60 x 3.5" HDD. Each Isilon node also needs compute, which sits in the same 4U chassis. As a result, we get up to 376 TB in a single chassis, which is very dense and very cool. This node is designed for high-capacity configurations and archival use.
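As a rough illustration of what that density means, the sketch below estimates how many such 4U chassis it would take to hold the 5 PB discussed earlier. It assumes 1 PB = 1000 TB, treats the quoted 376 TB as fully usable, and ignores protection overhead. These are simplifying assumptions, not figures from the source, and the helper name is hypothetical.

```python
import math


def chassis_needed(target_tb, tb_per_chassis=376, rack_units_per_chassis=4):
    """Return (chassis count, total rack units) for a target capacity.

    Assumes the per-chassis figure is fully usable, which is a
    simplification: real deployments lose capacity to data protection.
    """
    count = math.ceil(target_tb / tb_per_chassis)
    return count, count * rack_units_per_chassis


if __name__ == "__main__":
    count, units = chassis_needed(5000)  # 5 PB expressed in TB
    print(f"{count} chassis, {units}U of rack space")
```

Under these assumptions, 5 PB fits in 14 chassis, or 56U, which is a little more than a single standard 42U rack.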

Just look at all this! Software-defined storage is amazing. And the fact that it can be deployed in many different ways, including on your own hardware, is simply wonderful. At the same time, I think that for the foreseeable future the most preferred consumption models for software-defined storage will remain appliances and converged / hyper-converged infrastructure.

Your thoughts?

(You can also read about Isilon and HDFS on our blog: A “Quick Data” recipe based on a big data solution; translator's note.)

Source: https://habr.com/ru/post/253607/
