GridFS vs SQL Server vs Local

For meticulous

Recently, in the environment of developers of server applications often arise disputes about how to better manage the files and which technology provides faster read / write files. The network began to appear articles and articles about the comparative performance of the local file system and GridFS. Or about storing files in a relational database as a BLOB versus storage on a hard disk in a file system. So I decided to get involved in this confrontation. Today we will compare the performance and overhead of MongoDB 2.6.7 x64 GridFS vs. MS SQL Server Express 2012 v11.0.5058.0 x64 vs. NTFS. The experiment used the Windows 7 x64 SP1 platform on the AMD Athlon (tm) II X2 250 Processor 3.00 GHz with 4GB of RAM 1033 MHz and the HDD 600 Gb SATA 6Gb / s Western Digital VelociRaptor 10000rpm 32Mb. After each test, the computer was restarted, and the bases were reset. Performance will be considered on the example of a file server in C # under .NET 4.5, whose code is attached to the article.

Closer to the point

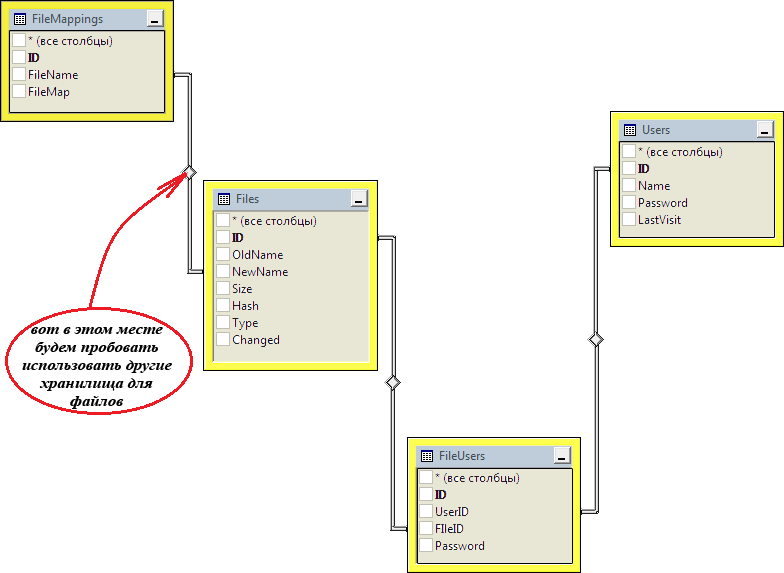

During the test, try to save 1000 files of 10,000,000 bytes. Each downloaded file will be registered in the “Files” table: “Hash” is used to check whether such a file has already been downloaded, “NewName” links the information about the file with its bit image on the server in the “FileMapping” table. File server database schema:

At the same time, we will try to store files in the GridFS using the official MongoDB driver and simply on the hard disk using the FileInfo class from the .NET class library. To obtain the ability to conveniently build human-readable queries to SQL Server using LINQ technology and lambda expressions, we will use Entity Framework 6.0:

')

Single threaded recording

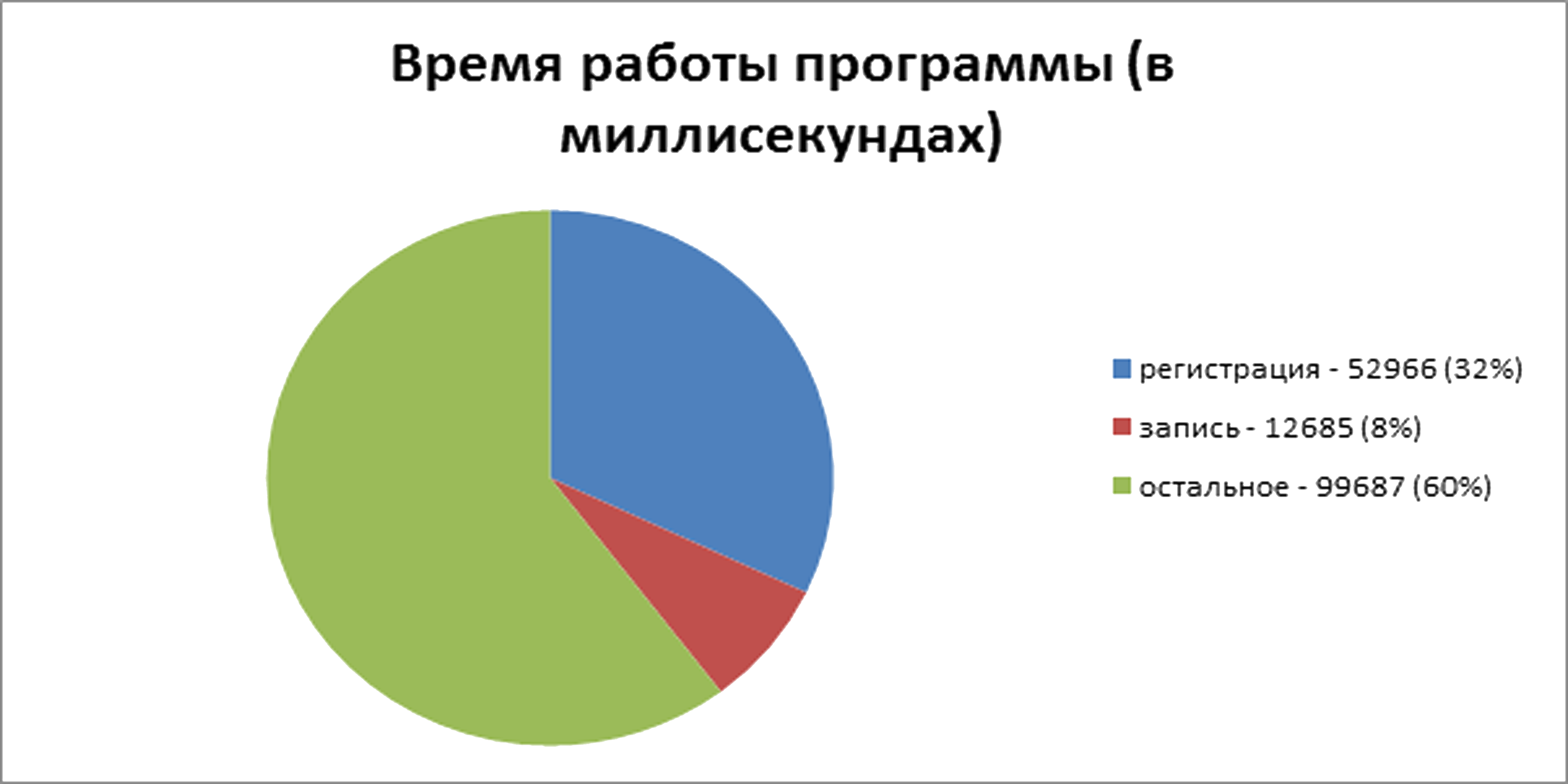

To begin with, let's test saving just to disk, loading files in single-threaded mode. According to the results of five launches, the operations required: 164751, 165095, 164611, 165937 and 166296 milliseconds. The maximum difference between the results was about 1%. This means that, on average, the process worked for 165,338 milliseconds of which 52966 is the time to register the file, 12685 is the time to write the file:

Starting the program with the measurement of the operation time of its parts and without measurement did not lead to obtaining different results. Running the program with the stream browser showed that the maximum occupied memory size was 59796 KB. Disk space: approximately 9.3 GB. For simplicity, I will continue to give immediately the average result of the execution time of operations and in seconds.

Now let's test writing files to the SQL Server database. The size of the resulting database on disk was approximately 9.8 GB. At the peak, the program ranked 233432 KB, and the DBMS - 1627048 KB. The program worked an average of 998 seconds. Of these, 34 seconds to register the file and 823 seconds to write:

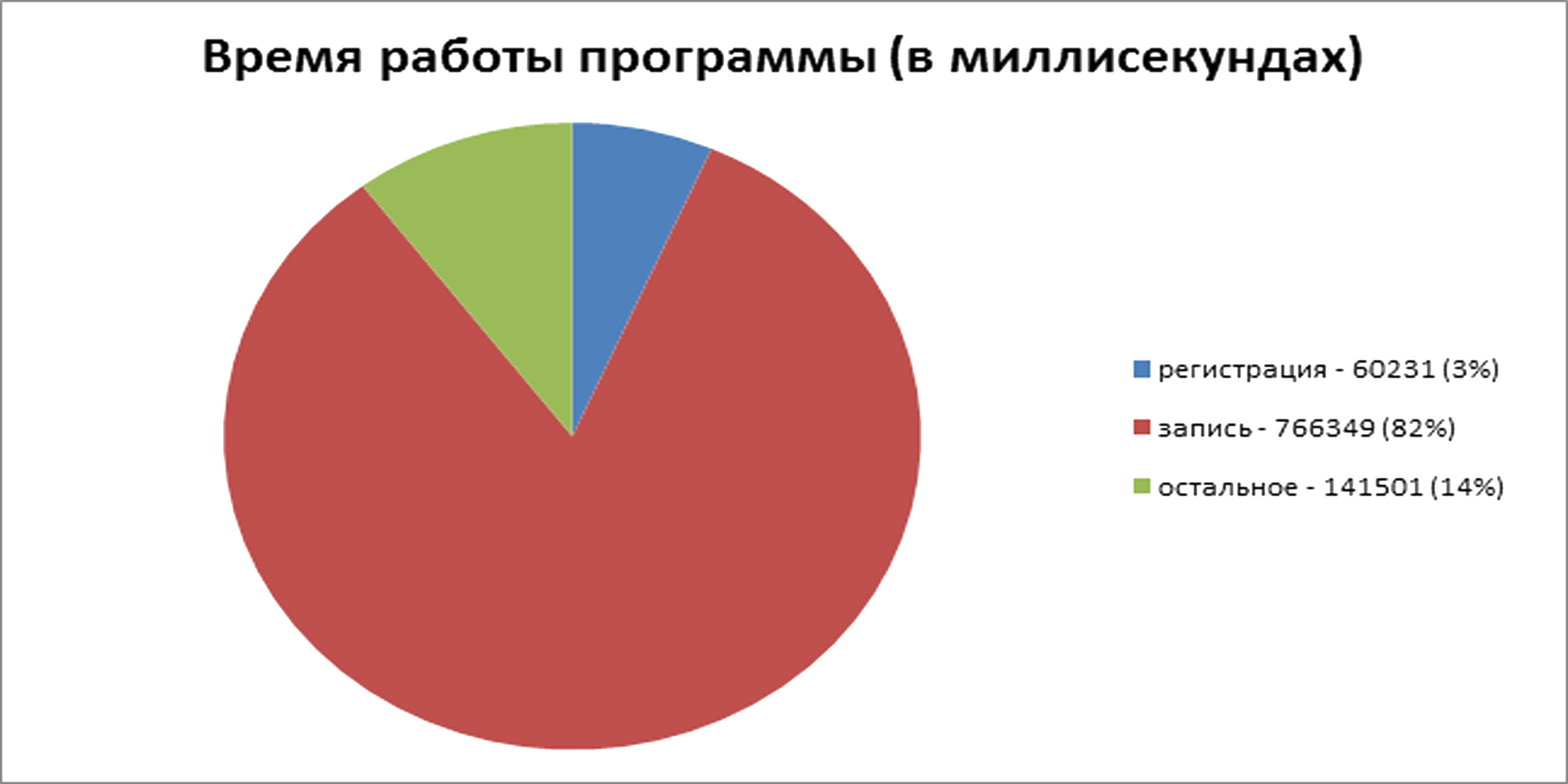

Next came the GridFS queue. The disk space occupied is approximately 12 GB. Has spent MongoDB all operative memory to which reached. At the same time, the memory consumption of SQL Server and our server remained within the usual values, which can, in this situation, be neglected. Everything was completed in about 921 seconds. For registration - 60 seconds, for recording - 766 seconds:

Multi-threaded recording

Here we faced the first difficulties: when several threads are exhausted to establish connection with the DBMS at the same time, errors occur. And if working with MongoDB goes “without confirmation” - the driver does not give an error and continues to work and everything runs smoothly, then EF, while calling “CreateIfNotExists”, shows a bunch of debug information, gives an error that is not caught using try-catch and ends the process with by mistake. In this case, the error does not appear if you compile and run with the debugger from the development environment. The problem was solved by synchronizing the threads and establishing connections with the DBMS in turn.

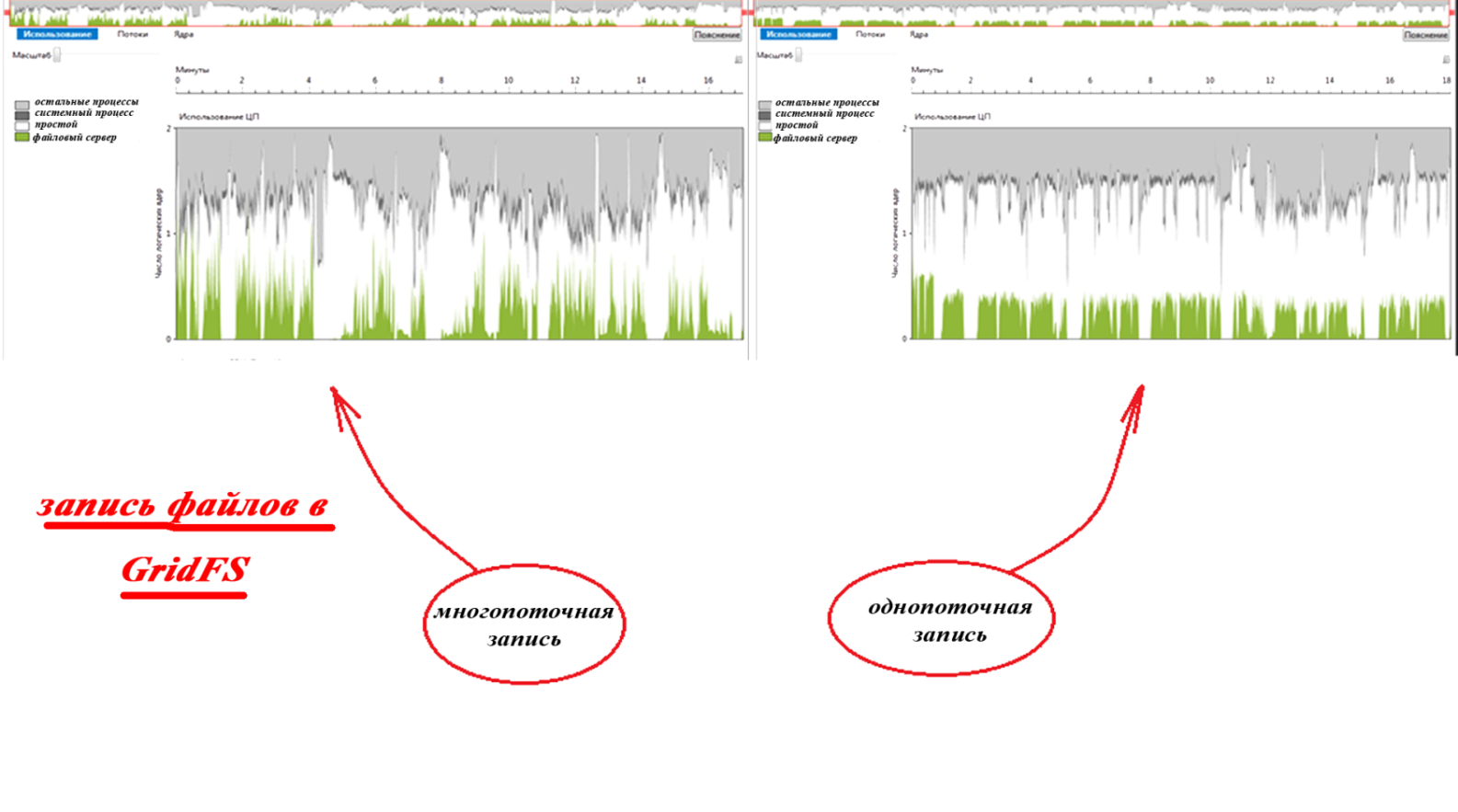

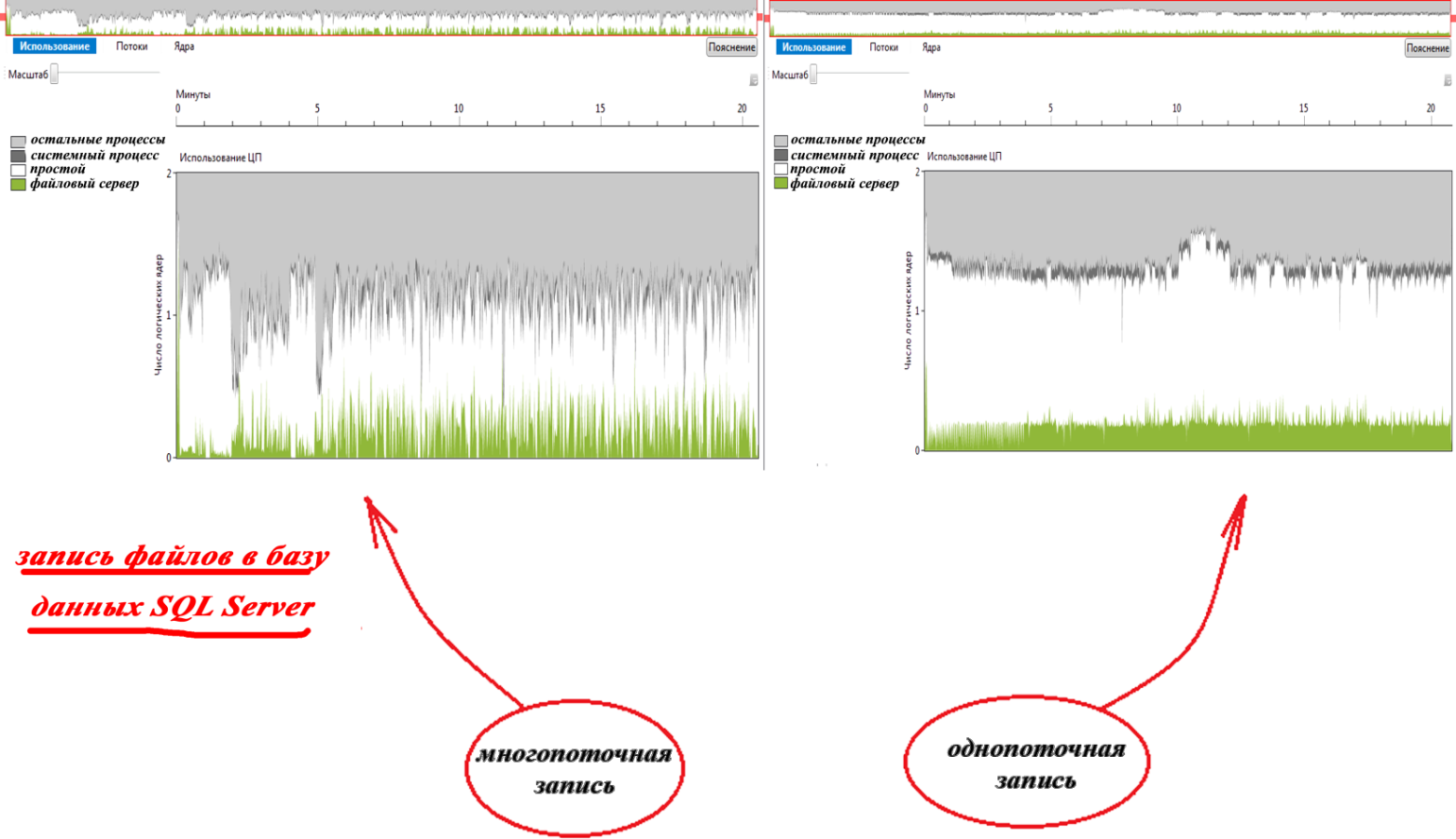

Let's perform write operations using for recording 20 streams of 50 files each. GridFS testing showed a strong scatter: 716259, 623205, 675829, 583331 and 739815 milliseconds, which averaged 668 seconds. As you can see, with the same overhead, it took less time to complete. This is due to less downtime, which is shown by the parallelism visualizer on the graphs of using computational CPU cores (execution using parallelism visualizer took much longer):

When writing files directly to the file system, the spread of the obtained test values was not large and the average value was 170 seconds. As you can see, the difference between single-threaded and multi-threaded recording to disk was less than 3%. Therefore, I consider it expedient to omit the graphics of using the CPU for this case.

Multi-threaded writing of files to the SQL Server database led to additional problems: During loading, an error occurred in some of the threads due to a waiting timeout. As a result, the connection was interrupted, the process was terminated with an error, and the database was brought to an inconsistent state. For a thousand requests, the first attempt ended in failure in about 40 cases. Of these, about two ended in failure and the second attempt. The problem was solved by increasing the timeout to 30 seconds and retrying the connection on failure.

When multithreaded saving 1000 files using SQL Server, the time spread was less than 5%, and the result was an average of 840 seconds. This is almost 16% faster than in single-threaded mode:

Reading files

Now let's test reading files from different repositories. In single-threaded mode, we count all files in a row, and in multi-threaded mode - in 20 streams of 50 random files each. Traditionally, 5 attempts of each type:

Reading files from the SQL Server database did not go smoothly the first time. The Entity Framework at each request to the database for a sample of data caches the result on the side of the client application. And, although the CLR implies automatic “garbage collection”, the memory is quickly consumed, which leads to an “OutOfMemory” error and the process crash. This is due to the fact that EF does not independently handle this type of exceptions and does not delete the data from the “cache” even if all the memory is used up. And by querying the tables that store the files, this becomes critical. The problem was resolved by disabling caching in the FileMapping entity collections using the AsNoTracking installation.

Total

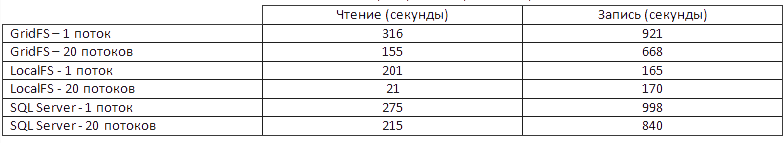

We have a raped hard drive and the following summary table:

Bonus

Trying to anticipate possible criticism and to answer unasked questions in advance, after a while, I supplement the article with some interesting (I hope) and useful comments. First: if the base is not reset before each new test, then the performance will not significantly change. At least, neither at the second, nor at the third and not at repeated multiple (in reasonable aisles) runs it was not possible to get differences in the results. Therefore, we omit the detailed description of this part of the work as the most boring one.

Secondly, many will surely say that when working with a DBMS through sockets, the data for transmission will have to go through the entire stack of network communication protocols back and forth. Therefore, we consider another option. In fact, the same version with the class FileInfo, only now we’ll access it using .NET Remouting, if you understand what I mean:

Source: https://habr.com/ru/post/253541/

All Articles