Unloading the conditions of public procurement contests with the EP. Zakupki.gov.ru

Notes:

Quote:

For many commercial and other companies, the system of "public procurement" is the main way of attracting state (budget) funds to economic activity. Information on the procurement of goods, services, research carried out by companies with state participation (and other individual types of companies) is mandatory (according to federal laws No. 223, 94, 44) posted on the public procurement website. Commercial companies also often publish their tenders on the official public procurement website.

Links to the mentioned laws, information on the website "Consultant Plus":

Thus, information on tenders, published on the website of public procurement, is the central source of current information on possible “state” orders by profile for many companies from various fields of activity - from security services to geophysical surveys. Therefore, the need to have regularly updated information about ongoing competitions that fall under certain criteria arises from each organization participating in public procurement. In this article we will consider various methods and a practical example of the implementation of this need.

Most often, the task is put by management to the marketing or IT division of the company in the following wording: “a daily summary of public procurement competitions that meets the following criteria is needed ...” . It is daily, because sometimes between the publication of the tender documentation and the end of the submission of applications for it is declared a period of less than 10 days, on average - about two weeks. There is not much time to collect all the necessary documentation for participation in the contest, every day is “on the bill”.

We list the options for implementing the task.

')

The site itself http://zakupki.gov.ru/ allows customizable search and selection of competitions by parameters.

Disadvantages:

To partially automate the process, I once wrote a plug-in for the FireFox browser, which was supposed to do a daily download of selected contests.

In a nutshell, the plugin formed the address in the form of something like this:

Naturally, for the 94 and 223 laws, the query parameters are different. As you can see, OKPD, price range, etc. it is necessary to “sew” into the request, since otherwise the number of found contests will be too large and the search results can be downloaded only partially.

In response to a market request, several companies have organized information retrieval sites for ongoing competitions. Often, these sites provide not only information on public procurement, but also on "private" tenders from electronic platforms.

Several such systems:

Other.

Separately, I will mention the government spending , which was on the Habré article .

The purpose of this article is not to compare or analyze the indicated resources, so neither their merits nor the disadvantages are given here.

In some (I know a few) cases, the management of a company participating in tenders sets very specific tasks concerning information selection parameters, regularity of search, or execution of a search result. In such cases, turn to IT specialists, “manual work” on monitoring and selection of competitions becomes too time consuming.

There are examples of the order of the described work with freelancers. For example, on SQL.ru , on Weblancer . Finally, you can purchase a turnkey solution from time-honored performers . However, the main part of this article describes the procedure for independent performance of the task.

The basic necessary knowledge about the source of the data: The EP (Russian official procurement website) has a public ftp-server . Moreover, if http regularly (usually at night and holidays) is not available due to “maintenance”, then ftp works (according to my practice) quite reliably.

The addresses of ftp servers are divided according to federal laws, which are used for placing tenders:

№223: ftp://ftp.zakupki.gov.ru/out/

Federal Law No. 94 and Federal Law No. 44: ftp://zakupki.gov.ru

The directory structure for law 223 is completely transparent. As for 94 and 44, the following subdirectories of interest are located here:

The remaining catalogs contain regulatory reference information, information on bank guarantees, unloading according to the rules and as part of the solution of the problem are not of interest.

Further, the necessary extracts from the explanations on the procedure for uploading information about published documents in the region to the FTP server of the All-Russian official website, a set of quotes:

Note that for our purposes, only notice is of interest. All other types of documents within the task are not used!

The current version of the above information can be downloaded from the EP in the form of documents "Information Exchange Schemes ...".

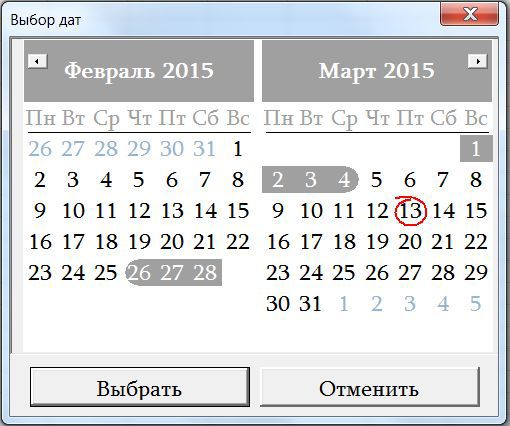

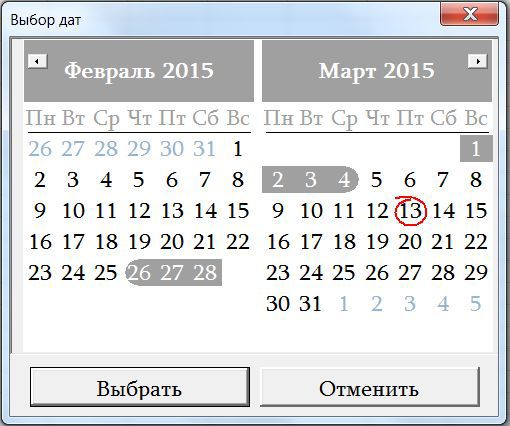

To query the date range in which to download contests, I made a userForm using the MonthView element. Its settings allow you to expand two months side by side (suppose that the range is no longer than a month), show the current date (red frame), set the selected date range as default.

The example selected range from February 26 to March 4. Selected dates are read from Form properties:

This filter allows you to select files (the structure of whose names are known to us) in a given date range:

Note that here the semicolon separates the alternatives, so this filter is suitable for files generated according to all three laws.

I download files to a specified directory, which I pre-clear of old downloads using FSO methods:

Next, select the target folder bFld = FSO.GetFolder (...) and destroy the subdirectories:

Not at all obligatory, but the use of

The second parameter is asynchronous execution.

Start downloading. Make sure the target folder exists by

Create a shell object

and apply the main feature of the described approach - the namespace method:

The specified line implements access to FTP, returning folders and files. Please note that the passed parameter must be of type Variant , not String .

You can select all directories as follows:

Select subdirectories and files ( 96 = 32 + 64 ) and apply a filter like the one that was built at the beginning of the paragraph to select files by date - like this:

It remains to specify the target (local) folder for downloading in a similar way:

And run the "copy" (it is also "download") as follows:

This command starts the external process (in Windows, the standard progress bar for copying files is displayed), its execution from vba is not controlled directly. However, we need to wait until the end of its execution, for which we use the following method in the cycle to check for the presence of the last of the copied files:

So arranged "waiting" lasts exactly as long as the files are copied.

After scanning all the directories and subdirectories and downloading all the filtered files, we proceed to processing them on the local machine:

To do this, use the namespace method again.

We iterate through all the archives in the folder

and use the fact that these archives in Windows are visible as subdirectories! Accordingly, the entire contents of the archive is available as follows:

(Again, I draw your attention to the fact that the parameter must be a Variant , which is why a type conversion from the string is necessary).

Again, the same CopyHere command allows you to “copy” (actually extract) all files from the archive into the target folder:

and asynchronous execution causes us to wait in a loop to complete the execution of this command in the same way as described above.

There are a lot of garbage among the unzipped files. For example, for some region there were no purchases on a certain day, the CAB generates an empty file for that date. Therefore, before parsing xml, I prefer to delete unnecessary. Using FSO, iterate through files

“Screening out” is easy to produce by file size (fl.Size <= 198) and filtering its name Not (LCase (fl.Name) Like "* noti *")) .

Deleting a file is extremely simple: fl.Delete

Deciphering xml depends on their scheme, which sometimes changes at the EP. Therefore, the following are the basic techniques, without concentrating on individual fields and data. We start, of course, with

In this part, in addition to FSO, we need XML for working with files:

And, of course, the target sheet ( ActiveSheet ) in the Excel book, where we will write information.

Let's start by stopping rendering Excel for a while so that it doesn't “flicker”:

The fundamental point is that

This is how the namespace definitions look for parsing xml, formed by 94 FZ. For 44, it will be slightly different:

Actually, all the above data is taken from the header of any downloaded xml file, only for the namespace q is added by default.

Next, determine the field (more precisely, xpath , pointing to them) that need to be downloaded. Currently, for 94 FZ, they look like this: ".//oos:notificationNumber", ". //Oos:lot", ".//oos:orderName", ".//oos:maxPrice". For 44 FZ - another structure (thanks to the programmers OOS): ".//q:purchaseNumber", ". //Q:lot", ".//q:purchaseObjectInfo", ".//q:maxPrice|.// q: price | .// q: totalSum " .

We see that there is no unambiguity, it is necessary to set up parsing not only based on published schemes, but also on our own practical experience in decoding data.

Check whether the xml file is “readable” can be a double condition:

If the file is read (so far I have not had any problems with the files uploaded with OOS), you can actually parse its content. First of all, I recommend reading the field describing the composition of the message ( notice ).

And check that the content of the file is exactly the announcement of the competition, and not cancel it, notification of the publication of the protocol, etc. like that:

Since in xml, formed by 223 FZ, the name of the document type is “hidden”, you can add:

Next comes the actual writing of data from xml to the sheet cells:

Etc.

If the XPath can give several options (an indication, for example, of the names of the lots) and we want to preserve all of them, this will help:

Some fields in the document may be missing, then we skip them by the condition:

Downloading files from ftp can be implemented in different ways. I described one, using shell.namespace , working and VERY simply implementable.

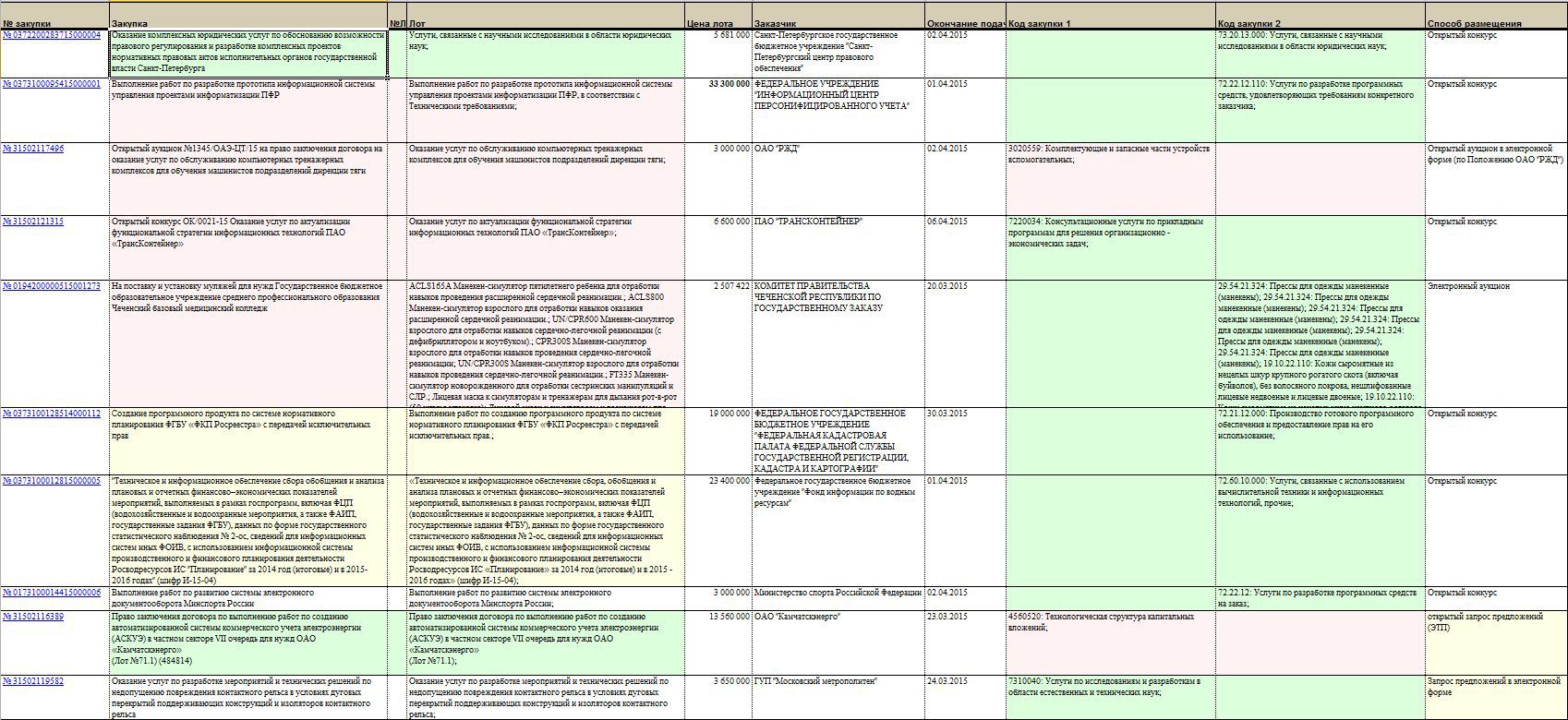

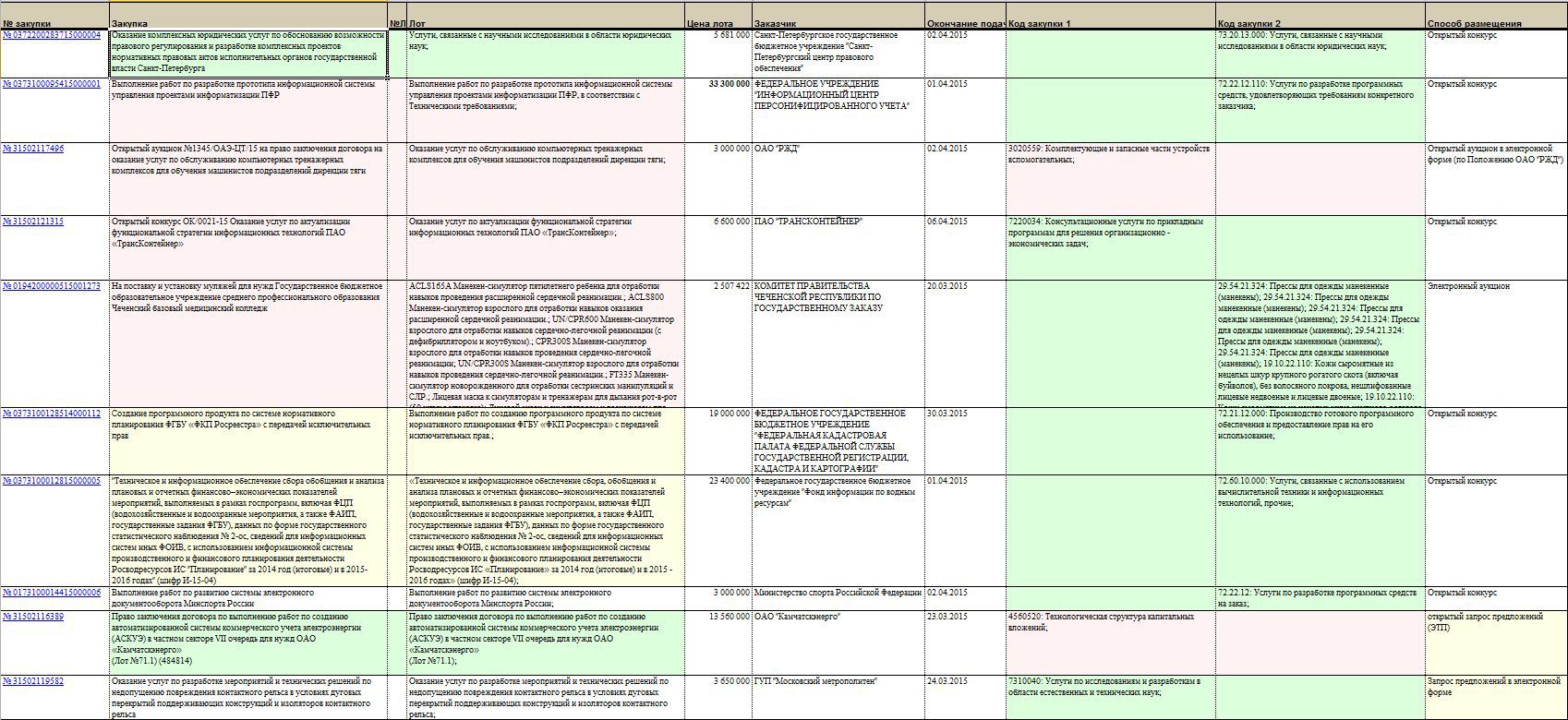

After downloading, automatic ranking (selection) and formatting the list of contests, my daily selection of contests (for all Federal Laws) looks like this:

The approach described above allows you to download data about tenders, contracts, procurement plans, etc. with OOS, because all this information is published on an open ftp . I don’t cite the entire program code and cannot, because it is “intellectual property”. However, anyone who owns the basics of vba and, more importantly, patience, can restore the program using the given key code points .

Patience will be needed, firstly, when parsing files and subdirectories on ftp: you should not download too much and not miss the right one. And secondly, when parsing xml. However, here is the question of the task: exactly which fields, in what sequence, how the customer wants to be formatted.

Good luck and victories to everyone: - in contests - and personal!

- OOS - All-Russian official website ( Government procurement ).

- Required knowledge to understand the article: VBA (MS Excel).

Introduction

Quote:

“The official website of the Russian Federation on the Internet for placing information about placing orders for goods, works and services ... is designed to provide free and free access to complete and reliable information about the contract system in the field of procurement and procurement of goods, works, services, certain types of legal entities, as well as for the formation, processing and storage of such information. ”

For many commercial and other companies, the system of "public procurement" is the main way of attracting state (budget) funds to economic activity. Information on the procurement of goods, services, research carried out by companies with state participation (and other individual types of companies) is mandatory (according to federal laws No. 223, 94, 44) posted on the public procurement website. Commercial companies also often publish their tenders on the official public procurement website.

Links to the mentioned laws, information on the website "Consultant Plus":

- Federal law of 21.07.2005 N 94-FZ (as amended on 02.07.2013) "On placing orders for the supply of goods, the performance of works, the provision of services for state and municipal needs"

- Federal Law of July 18, 2011 No. 223-FZ (as amended on March 12, 2014, as amended of December 29, 2014) “On the procurement of goods, works, services by certain types of legal entities” (as amended and added, force from 01/01/2015)

- Federal law of 05/04/2013 N 44- (ed. 03/08/2015) "On the contract system in the procurement of goods, works, services for state and municipal needs"

Thus, information on tenders, published on the website of public procurement, is the central source of current information on possible “state” orders by profile for many companies from various fields of activity - from security services to geophysical surveys. Therefore, the need to have regularly updated information about ongoing competitions that fall under certain criteria arises from each organization participating in public procurement. In this article we will consider various methods and a practical example of the implementation of this need.

Options for obtaining information on public procurement

Most often, the task is put by management to the marketing or IT division of the company in the following wording: “a daily summary of public procurement competitions that meets the following criteria is needed ...” . It is daily, because sometimes between the publication of the tender documentation and the end of the submission of applications for it is declared a period of less than 10 days, on average - about two weeks. There is not much time to collect all the necessary documentation for participation in the contest, every day is “on the bill”.

We list the options for implementing the task.

')

1. The site of EP (state procurement)

The site itself http://zakupki.gov.ru/ allows customizable search and selection of competitions by parameters.

Disadvantages:

- The search does not work correctly, sometimes there are no contests. I am witness to a long slave through the official site, the statement I have verified;

- The site often does not work at night and on holidays;

- The limit on the number of results. If the search returns more than 500 lines, it is impossible to download contest data (in the form of a .csv table of format) using the site.

To partially automate the process, I once wrote a plug-in for the FireFox browser, which was supposed to do a daily download of selected contests.

In a nutshell, the plugin formed the address in the form of something like this:

"http://zakupki.gov.ru/epz/order/extendedsearch/search.html?" + "placeOfSearch=FZ_44" + "&orderPriceFrom=" + priceArray[i] + "&orderPriceTo=" + (priceArray[i+1]-1) + "&orderPriceCurrencyId=-1" + "&orderPublishDateFrom=" + OrderDate + "&orderPublishDateTo=" + OrderDate + "&headAgencyWithSubElements=true&matchingWordPlace44=NOTIFICATIONS" + "&law44.okpd.withSubElements=true” + "&law44.okpd.ids=31301%2C37097%2C50876%2C51122" + "&law44.advantages[MP44]=I&law44.advantages[UG44]=I" + "&law44.advantages[IN44]=I&law44.advantages[MPSP44]=I" + "&morphology=false&strictEqual=false"; Naturally, for the 94 and 223 laws, the query parameters are different. As you can see, OKPD, price range, etc. it is necessary to “sew” into the request, since otherwise the number of found contests will be too large and the search results can be downloaded only partially.

2. Specialized web-based contest search services

In response to a market request, several companies have organized information retrieval sites for ongoing competitions. Often, these sites provide not only information on public procurement, but also on "private" tenders from electronic platforms.

Several such systems:

- Multitender.ru : "free specialized search engine that allows you to track public procurement and commercial tenders" ©;

- TenderCAT : “The TenderCAT catalog is intended to facilitate the search for government and commercial tenders and auctions in a huge mass of orders published in the government procurement portal (http://zakupki.gov.ru) daily in all regions and activities” ©;

- East Budget : "The site of public procurement and tenders - the best search and analytics from the East Budget" ©;

- Procurement 360

Other.

Separately, I will mention the government spending , which was on the Habré article .

The purpose of this article is not to compare or analyze the indicated resources, so neither their merits nor the disadvantages are given here.

3. Independent development for downloading contests.

In some (I know a few) cases, the management of a company participating in tenders sets very specific tasks concerning information selection parameters, regularity of search, or execution of a search result. In such cases, turn to IT specialists, “manual work” on monitoring and selection of competitions becomes too time consuming.

There are examples of the order of the described work with freelancers. For example, on SQL.ru , on Weblancer . Finally, you can purchase a turnkey solution from time-honored performers . However, the main part of this article describes the procedure for independent performance of the task.

Downloading contest data from http://zakupki.gov.ru using Excel VBA

Initial data

The basic necessary knowledge about the source of the data: The EP (Russian official procurement website) has a public ftp-server . Moreover, if http regularly (usually at night and holidays) is not available due to “maintenance”, then ftp works (according to my practice) quite reliably.

The addresses of ftp servers are divided according to federal laws, which are used for placing tenders:

№223: ftp://ftp.zakupki.gov.ru/out/

Login and password:

fz223free

Federal Law No. 94 and Federal Law No. 44: ftp://zakupki.gov.ru

Login and password:

free

The directory structure for law 223 is completely transparent. As for 94 and 44, the following subdirectories of interest are located here:

- 94fz directory containing data of public uploads in accordance with 94FZ (other directories - 44FZ),

- fcs_regions directory containing the data of the complete regional uploading of information published on DUS in accordance with 94FZ.

The remaining catalogs contain regulatory reference information, information on bank guarantees, unloading according to the rules and as part of the solution of the problem are not of interest.

Further, the necessary extracts from the explanations on the procedure for uploading information about published documents in the region to the FTP server of the All-Russian official website, a set of quotes:

Full regional landings include all documents of the following types published on DUS:

• published notices;

• published changes to notices;

• published protocols;

• published information about contracts;

• published contract changes;

• published information on the execution / termination of contracts.

Note that for our purposes, only notice is of interest. All other types of documents within the task are not used!

Uploading is done in archived XML files.

In one file there can be documents of only one type in an amount not exceeding 3000 records. If the number of documents to be uploaded exceeds 3000 records, the system generates several files and each places in a separate archive.

All generated and archived XML files are uploaded to an FTP server ... the files in the upload are divided into directories corresponding to the region. In each catalog of the region there are 3 more catalogs: notifications, protocols and contracts. In each of the notifications, protocols and contracts directories there is additionally a daily directory.

Published documents are uploaded to an FTP server in the following order:

· Every calendar day (daily) a list of documents published on the previous calendar day is downloaded. At the same time ... uploading notices by region is done in the <Region name> / notifications / daily directory;

· Each calendar month (monthly) unloads a list of documents published in the previous calendar month. At the same time ... uploading notices by region is done in the <Region name> / notifications directory;

In the daily and monthly uploads, all types of documents published for the last calendar day or calendar month are respectively unloaded.

If at the time of the unloading for the elapsed period there was not a single published document of any type, then the XML file with this type of document is unloaded empty.

After completing the monthly upload, the catalogs with daily uploads for the past month are cleared.

The names of the regional upload files have the following structure:

<document_region_region_start-period_end-period_number.xml.zip>,

Where:

• document view — takes the value notification, protocol or contract for notices, protocols and contract details, respectively;

• region - the name of the region of discharge;

• start-period — the start date of the period for selecting documents by the date and time of publication of the documents being uploaded in the format yyyyddmm_hhmmss, where yyyy is the year, mm is the month (number), dd is the day, hh is the hour, mm is the minutes, ss is the second;

• end-period - the end date of the period for selecting documents by the date-time of publication of the documents being uploaded in the format yyyyddmm_hhmmss, where yyyy is the year, mm is the month (number), dd is the day, hh is the hour, mm is the minutes, ss is the second;

• number - the sequence number of the generated file;

The current version of the above information can be downloaded from the EP in the form of documents "Information Exchange Schemes ...".

Description of the program of automatic downloading of competitive information (VBA MS Excel, Windows).

Training

- We connect scripting Microsoft Scripting Runtime. For FSO functionality ( \ Windows \ System32 \ scrrun.dll )

- We connect scripting of Microsoft XML, v.6

To query the date range in which to download contests, I made a userForm using the MonthView element. Its settings allow you to expand two months side by side (suppose that the range is no longer than a month), show the current date (red frame), set the selected date range as default.

The example selected range from February 26 to March 4. Selected dates are read from Form properties:

MonthView.SelStart MonthView.SelEnd This filter allows you to select files (the structure of whose names are known to us) in a given date range:

fltr = "*_" & Format(targetDate, "yyyymmdd") & "*" & Format(targetDate + 1, "yyyymmdd") & "*.zip;" & "*_" & Format(targetDate, "yyyymmdd") & "*" & Format(targetDate, "yyyymmdd") & "*.zip" Note that here the semicolon separates the alternatives, so this filter is suitable for files generated according to all three laws.

I download files to a specified directory, which I pre-clear of old downloads using FSO methods:

Dim FSO As FileSystemObject Set FSO = New FileSystemObject Next, select the target folder bFld = FSO.GetFolder (...) and destroy the subdirectories:

For Each SubFolder In bFld.SubFolders SubFolder.Delete Next Not at all obligatory, but the use of

Application.Speech.Speak It is convenient not to monitor the progress of a long procedure, but to go about your business regularly to hear messages (in a pleasant female voice) like: Application.Speech.Speak "Downloading purchase notices", True The second parameter is asynchronous execution.

File download

Start downloading. Make sure the target folder exists by

FSO.FolderExists and, if necessary, create it with the MkDir function.Create a shell object

Set myShell = CreateObject("Shell.Application") and apply the main feature of the described approach - the namespace method:

Set ftpItems = myShell.Namespace(FTP).Items The specified line implements access to FTP, returning folders and files. Please note that the passed parameter must be of type Variant , not String .

You can select all directories as follows:

ftpItems.Filter 32, "*". Select subdirectories and files ( 96 = 32 + 64 ) and apply a filter like the one that was built at the beginning of the paragraph to select files by date - like this:

ftpItems.Filter 96, fltr. It remains to specify the target (local) folder for downloading in a similar way:

set tFolder = myShell.Namespace(tgtFolder) And run the "copy" (it is also "download") as follows:

tFolder.CopyHere ftpItems, 20 This command starts the external process (in Windows, the standard progress bar for copying files is displayed), its execution from vba is not controlled directly. However, we need to wait until the end of its execution, for which we use the following method in the cycle to check for the presence of the last of the copied files:

While Len(Dir$(tgtFolder & "\" & ftpItems.Item(ftpItems.Count - 1).Name)) = 0 Sleep 1: DoEvents Wend So arranged "waiting" lasts exactly as long as the files are copied.

Unpacking (unzipping) downloaded files

After scanning all the directories and subdirectories and downloading all the filtered files, we proceed to processing them on the local machine:

Application.Speech.Speak "Unzipping archives", True. To do this, use the namespace method again.

We iterate through all the archives in the folder

For Each fl In tFolderItems and use the fact that these archives in Windows are visible as subdirectories! Accordingly, the entire contents of the archive is available as follows:

Set flItems = myShell.Namespace(CVar(tgtFolder & "\" & fl.Name)).Items (Again, I draw your attention to the fact that the parameter must be a Variant , which is why a type conversion from the string is necessary).

Again, the same CopyHere command allows you to “copy” (actually extract) all files from the archive into the target folder:

myShell.Namespace(tgtFolder).CopyHere flItems, 20 and asynchronous execution causes us to wait in a loop to complete the execution of this command in the same way as described above.

There are a lot of garbage among the unzipped files. For example, for some region there were no purchases on a certain day, the CAB generates an empty file for that date. Therefore, before parsing xml, I prefer to delete unnecessary. Using FSO, iterate through files

Set fold = FSO.GetFolder(tgtFolder) For Each fl In fold.Files “Screening out” is easy to produce by file size (fl.Size <= 198) and filtering its name Not (LCase (fl.Name) Like "* noti *")) .

Deleting a file is extremely simple: fl.Delete

XML decryption with contest data

Deciphering xml depends on their scheme, which sometimes changes at the EP. Therefore, the following are the basic techniques, without concentrating on individual fields and data. We start, of course, with

Application.Speech.Speak "Decoding files", True. In this part, in addition to FSO, we need XML for working with files:

Dim xml As MSXML2.DOMDocument60 Set xml = New DOMDocument60: xml.async = False: xml.validateOnParse = True And, of course, the target sheet ( ActiveSheet ) in the Excel book, where we will write information.

Let's start by stopping rendering Excel for a while so that it doesn't “flicker”:

Application.ScreenUpdating = False The fundamental point is that

“XPath treats an empty prefix as a null namespace. In other words, only prefixes associated with namespaces can be used in XPath queries. This means that if you need to build a namespace query in an XML document, even if it is a default namespace, you need to define a prefix for it. ”Therefore, to successfully parse the fields of downloaded documents for the default namespace, we add a certain prefix. For example, "q":

xml.setProperty "SelectionNamespaces", " xmlns:q= 'http://zakupki.gov.ru/oos/export/1' xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance' xmlns:oos='http://zakupki.gov.ru/oos/types/1'" This is how the namespace definitions look for parsing xml, formed by 94 FZ. For 44, it will be slightly different:

xml.setProperty "SelectionNamespaces", "xmlns:q='http://zakupki.gov.ru/oos/types/1' xmlns:ns2='http://zakupki.gov.ru/oos/export/1' xmlns:ns3='http://zakupki.gov.ru/oos/printform/1'" Actually, all the above data is taken from the header of any downloaded xml file, only for the namespace q is added by default.

Next, determine the field (more precisely, xpath , pointing to them) that need to be downloaded. Currently, for 94 FZ, they look like this: ".//oos:notificationNumber", ". //Oos:lot", ".//oos:orderName", ".//oos:maxPrice". For 44 FZ - another structure (thanks to the programmers OOS): ".//q:purchaseNumber", ". //Q:lot", ".//q:purchaseObjectInfo", ".//q:maxPrice|.// q: price | .// q: totalSum " .

We see that there is no unambiguity, it is necessary to set up parsing not only based on published schemes, but also on our own practical experience in decoding data.

Check whether the xml file is “readable” can be a double condition:

If Not xml.Load(tgtFolder & "\" & fl.Name) then…If (xml.parseError.ErrorCode <> 0) then… If the file is read (so far I have not had any problems with the files uploaded with OOS), you can actually parse its content. First of all, I recommend reading the field describing the composition of the message ( notice ).

purchaseType = LCase(xml.DocumentElement.ChildNodes(0).BaseName) documentType = LCase(xml.DocumentElement.BaseName) And check that the content of the file is exactly the announcement of the competition, and not cancel it, notification of the publication of the protocol, etc. like that:

If Not (purchaseType Like "*cancel*" Or purchaseType Like "*protocol*" Or documentType Like "*cancel*") Then Since in xml, formed by 223 FZ, the name of the document type is “hidden”, you can add:

If (purchaseType Like "*notification*" Or documentType Like "*notice*") Then Next comes the actual writing of data from xml to the sheet cells:

Range("A" & i) = xml.DocumentElement.SelectSingleNode(XPath).Text Etc.

If the XPath can give several options (an indication, for example, of the names of the lots) and we want to preserve all of them, this will help:

For Each it In lot.SelectNodes(XPath) Range("E" & i) = Range("E" & i) & it.Text & "; " Next Some fields in the document may be missing, then we skip them by the condition:

If Not xml.DocumentElement.SelectSingleNode(XPath) Is Nothing Then Conclusion

Downloading files from ftp can be implemented in different ways. I described one, using shell.namespace , working and VERY simply implementable.

After downloading, automatic ranking (selection) and formatting the list of contests, my daily selection of contests (for all Federal Laws) looks like this:

The approach described above allows you to download data about tenders, contracts, procurement plans, etc. with OOS, because all this information is published on an open ftp . I don’t cite the entire program code and cannot, because it is “intellectual property”. However, anyone who owns the basics of vba and, more importantly, patience, can restore the program using the given key code points .

Patience will be needed, firstly, when parsing files and subdirectories on ftp: you should not download too much and not miss the right one. And secondly, when parsing xml. However, here is the question of the task: exactly which fields, in what sequence, how the customer wants to be formatted.

Good luck and victories to everyone: - in contests - and personal!

Source: https://habr.com/ru/post/253201/

All Articles