How We Are Tested

Evgeny Kaspersky talks about independent tests of security software and our methodology for evaluating their results.

As we always say, Kaspersky Lab saves the world from cyber evil. But how well do we do it, both in absolute terms and compared with other rescuers? To assess our progress on this ambitious mission, we use various metrics, and one of the most important is independent expert assessment of the quality of our products and technologies. The better the scores, the better our technologies crush digital infections and the more actively we save the world.

And who can provide such an assessment? Independent test labs, of course! But how do you sum up their results? After all, dozens, even hundreds of tests are run around the world by all and sundry. They examine different protection technologies, individually or in combination, as well as performance, ease of installation and much more.


How do you squeeze the most accurate picture out of this porridge, one that most plausibly reflects the capabilities of hundreds of antivirus products? How do you rule out test marketing? And how do you make this metric understandable to a wide range of users, so that they can choose their protection consciously and on reasoned grounds? Good news: there is a way.

How does it work?

First, take into account all known test labs that studied anti-malware protection during the reporting period. Second, take into account the full range of tests run by the selected labs and all participating vendors. Third, for each vendor, take into account a) the number of tests it took part in, b) its percentage of absolute wins, and c) its percentage of prize places (TOP3).
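The three per-vendor counts can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the tooling actually behind the rating: the `Placing` record, its field names, the `vendor_metrics` helper and the sample data are all hypothetical, and we assume each test reports the place every participating vendor took (1 = absolute win, 1 to 3 = TOP3).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Placing:
    """One vendor's place in one test (hypothetical record layout)."""
    lab: str
    test_id: str
    vendor: str
    place: int  # 1 = absolute win; places 1..3 count as TOP3


def vendor_metrics(results):
    """Return {vendor: (tests_entered, win_pct, top3_pct)}.

    Assumes each vendor appears at most once per test.
    """
    entered = defaultdict(int)
    wins = defaultdict(int)
    top3 = defaultdict(int)
    for r in results:
        entered[r.vendor] += 1
        if r.place == 1:
            wins[r.vendor] += 1
        if r.place <= 3:
            top3[r.vendor] += 1
    return {
        vendor: (n, 100.0 * wins[vendor] / n, 100.0 * top3[vendor] / n)
        for vendor, n in entered.items()
    }


if __name__ == "__main__":
    sample = [  # hypothetical placings, not real 2014 data
        Placing("LabA", "t1", "VendorX", 1),
        Placing("LabA", "t1", "VendorY", 2),
        Placing("LabB", "t2", "VendorX", 4),
        Placing("LabB", "t2", "VendorY", 1),
        Placing("LabB", "t3", "VendorX", 2),
    ]
    for vendor, (n, win, top) in sorted(vendor_metrics(sample).items()):
        print(f"{vendor}: {n} tests, {win:.0f}% wins, {top:.0f}% TOP3")
```

Counting an absolute win as a TOP3 finish as well is our reading of "% prizes (TOP3)"; if wins were meant to be excluded, the second condition would become `2 <= r.place <= 3`.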

That's it: simple, transparent, and immune to unscrupulous test marketing (which, alas, happens often). Of course, you could bolt on another 25,000 parameters to gain 0.025% more objectivity, but that would be technological narcissism and geeky tedium, and we would definitely lose the ordinary reader... and not only the ordinary one. The most important thing is that we take a specific period, the whole set of tests from specific labs, and all the participants. We leave nothing out and spare no one (including ourselves).

Now let's apply this methodology to the real-world entropy of 2014!

Technical details and disclaimers for those who are interested:

• For 2014, we counted studies from eight test laboratories: -malware, Dennis Technology Labs, MRG EFFITAS, NSS Labs, PC Security Labs and Virus Bulletin. All of them are AMTSO members, have many years of experience and a solid technological base (I checked it myself), and cover an impressive range of vendors and security technologies. The methodology is explained in detail in this video and in this document.

• Only vendors that took part in at least 35% of the tests are counted. Otherwise the “winners” could include someone who won the single test they entered in their entire “case history”.

• If you think the calculation method is wrong, you are welcome to say so in the comments.

• If anyone in the comments brings up the old saying “if you don't praise yourself, no one will,” my answer is: yes, exactly. If you don't like the method, propose and justify your own.
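The 35% participation cut-off could be applied as a simple filter before any ranking. A hedged sketch: `eligible_vendors` and its input shape (a map from vendor to number of tests entered, plus the total number of tests counted) are hypothetical, and we assume "35% of tests" means 35% of all tests counted for the reporting period.

```python
def eligible_vendors(tests_entered, total_tests, threshold=0.35):
    """Keep vendors that entered at least `threshold` of all tests.

    tests_entered: {vendor: number of tests the vendor took part in}
    total_tests:   number of tests counted for the reporting period
    """
    if total_tests <= 0:
        raise ValueError("total_tests must be positive")
    return {
        vendor
        for vendor, n in tests_entered.items()
        if n / total_tests >= threshold
    }


if __name__ == "__main__":
    # Hypothetical counts: "OneHitWonder" may have won its only test,
    # but with 1 test out of 100 it is excluded from the rating.
    counts = {"VendorX": 60, "VendorY": 40, "OneHitWonder": 1}
    print(sorted(eligible_vendors(counts, total_tests=100)))
```

Filtering first keeps a single lucky result from producing a 100% win rate that would dominate the chart.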

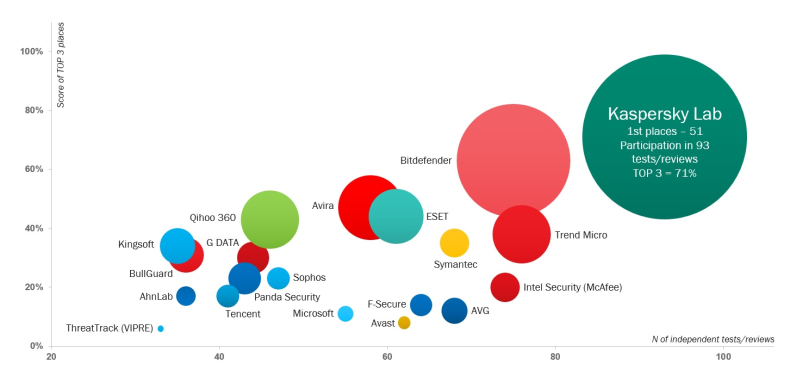

So, analyzing the 2014 test results, we get the following picture of the world:

Here the X-axis shows the number of tests the vendor took part in and the number of awards it received; the Y-axis shows the vendor's percentage of TOP3 finishes; and the diameter of each circle shows the number of absolute wins.
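The chart's encoding can be sketched as a small data transform that turns per-vendor counts into bubble coordinates. This is purely illustrative: the `Bubble` type, the `to_bubbles` helper, the input layout and the 50-unit maximum diameter are hypothetical, and we assume the X value is simply the number of tests entered.

```python
from typing import NamedTuple


class Bubble(NamedTuple):
    vendor: str
    x: int            # number of tests the vendor took part in
    y: float          # TOP3 rate, %
    diameter: float   # proportional to the number of absolute wins


def to_bubbles(stats, max_diameter=50.0):
    """stats: {vendor: (tests_entered, top3_count, wins)} -> bubbles sorted by y."""
    most_wins = max((w for _, _, w in stats.values()), default=0)
    bubbles = []
    for vendor, (n, top3, wins) in stats.items():
        # Scale diameters so the vendor with the most wins gets max_diameter.
        d = max_diameter * wins / most_wins if most_wins else 0.0
        bubbles.append(Bubble(vendor, n, 100.0 * top3 / n, d))
    return sorted(bubbles, key=lambda b: b.y, reverse=True)
```

Any plotting library can then draw one circle per `Bubble` at `(x, y)` with the given diameter.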

Well, congratulations to our R&D team and every other employee of the company, as well as to our partners, customers, contractors, our friends and all their families! Congratulations to everyone on a well-deserved, convincing and clear-cut victory! Keep it up: saving the world is just around the corner!!! Hooray!

But that's not all! Next comes dessert for true experts in the antivirus industry, who value how the data change over time.

We have been using the method described above for three years, and it offers an interesting way to address the “all washing powders are the same” question: the popular opinion that all antivirus products are by now more or less equal in protection and functionality. Here are some curious observations that emerge from this assessment:

• There are more tests (on average, vendors took part in 50 tests in 2013 and in 55 in 2014), and winning has become harder (the average TOP3 rate fell from 32% to 29%).

• The biggest drops in the TOP3 rate were at Symantec (-28%), F-Secure (-25%) and Avast! (-19%).

• Curiously, vendors' positions relative to each other do not change much. Only 2 vendors changed their TOP3 rate by more than 5 points. This shows that high-quality protection is not determined by signatures alone: it is built up over years, and stealing someone else's detections will not give a decisive advantage. The advantage comes from consistently high results.

• Seasoned statistics lovers will also be interested to know that the top 7 vendors in the TOP3 ranking took about twice as many prize places as the other 13 combined (240 TOP3 finishes versus 115) and won 63% of all “gold medals”.

• Meanwhile, 19% of all “gold medals” went to a single vendor. Guess who? :)
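Several of these observations boil down to simple comparisons that are easy to reproduce once per-vendor numbers are available. A hedged sketch: `big_movers` and `top_n_split` are hypothetical helpers, we read "5 points" as percentage points of the TOP3 rate, and the only real figures used are the 240 and 115 TOP3 finishes quoted above.

```python
def big_movers(rates_prev, rates_curr, threshold=5.0):
    """Vendors present in both years whose TOP3 rate (%) moved by more
    than `threshold` percentage points (our reading of "5 points")."""
    common = rates_prev.keys() & rates_curr.keys()
    return sorted(
        v for v in common if abs(rates_curr[v] - rates_prev[v]) > threshold
    )


def top_n_split(counts, n):
    """Split total counts into (sum of the n largest, sum of the rest)."""
    ranked = sorted(counts.values(), reverse=True)
    return sum(ranked[:n]), sum(ranked[n:])


if __name__ == "__main__":
    # Figures quoted in the text: TOP3 finishes of the top 7 vs. the other 13.
    top7, rest = 240, 115
    print(f"top 7 / other 13 = {top7 / rest:.2f}")  # 2.09, i.e. about twice
```

Passing per-vendor absolute-win counts to `top_n_split` with `n=1` gives the leader's wins versus everyone else's, the kind of split behind the 19% share of “gold medals”.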

A nitpicking reader will ask: could you add other test labs to the statistics and get different results?

In theory, anything is possible, just as there are alternatives to Newton's theory of gravity, or non-Euclidean geometry. But most likely, those labs would either be completely unknown outfits with dubious results, or adding them to the overall statistics would not change the big picture. The magic of large numbers, after all!
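The "magic of large numbers" can be made concrete with a simple bound: when a vendor's rating rests on many tests, a lab contributing only a few more can shift its TOP3 rate by a limited amount. A minimal sketch with a hypothetical helper and hypothetical numbers (16 TOP3 finishes in 55 tests, close to the 2014 averages quoted above, plus 3 extra tests):

```python
def rate_bounds(top3, tests, extra_tests):
    """Range of a vendor's TOP3 rate (%) after `extra_tests` more tests.

    Worst case: no TOP3 finish in any extra test.
    Best case: a TOP3 finish in every extra test.
    """
    total = tests + extra_tests
    return 100.0 * top3 / total, 100.0 * (top3 + extra_tests) / total


if __name__ == "__main__":
    lo, hi = rate_bounds(top3=16, tests=55, extra_tests=3)  # hypothetical vendor
    print(f"{lo:.1f}% .. {hi:.1f}%")  # 27.6% .. 32.8%, starting from about 29.1%
```

In general, m extra tests on top of n can move the rate by at most 100·m/(n+m) percentage points, so the more tests already counted, the less any single small lab can change.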

Now for the main conclusion.

Tests are one of the most important criteria for choosing protection. Naturally, users are more likely to trust an authoritative outside opinion than a glossy booklet (“every sandpiper praises its own swamp”). All vendors understand this well, but each tackles the problem as far as its technological abilities allow. Some genuinely obsess over quality and keep improving their protection, while others manipulate the results: they get a single certificate of conformity for the year, pin the medal on their lapel, and pass themselves off as the best. But you have to look at the whole picture, assessing not only the test results but also the participation rate.

So we advise users to choose their protection thoughtfully and not let anyone pull the wool over their eyes :)

Thank you all for your attention and see you soon!

Source: https://habr.com/ru/post/253155/
