Big Data course: three months to the basics, and what is it for?

A junior Big Data specialist earns 70 thousand rubles a month, while a specialist with 3-4 years of experience earns 250 thousand rubles a month. These are the people who can, for example, personalize retail offers, mine a person's social network profile when they apply for a loan, or use the list of sites they visit to identify a former subscriber's new SIM card.

We decided to build a professional Big Data course without fluff, marketing or e-mail campaigns: only hardcore material. We brought in practitioners from 7 large companies (including Sberbank and Oracle) and, in effect, turned the entire course into one long hackathon. We recently held an open day for the program, where we asked the practitioners directly what Big Data means in Russia and how companies actually use big data. Their answers are below.


Sberbank

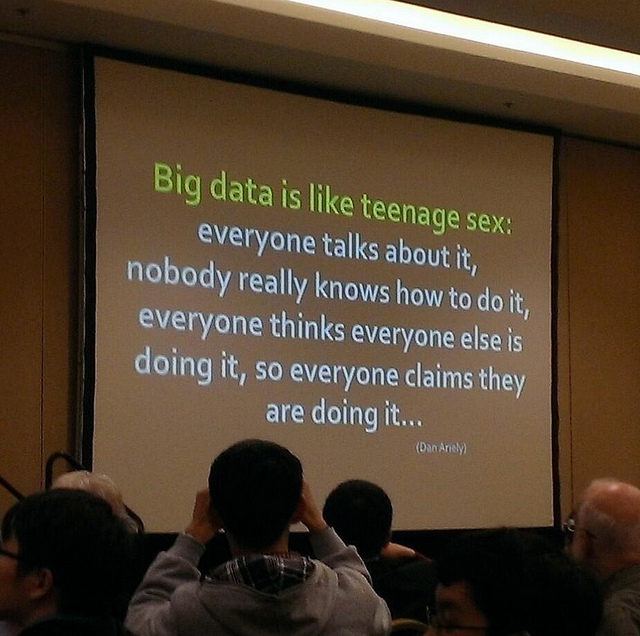

Ekaterina Frolovicheva, head of technology research at Sberbank of Russia, says that Big Data is a good, attractive marketing term that grew out of a number of disciplines that are far from new: they did not appear yesterday, the day before yesterday, or even two or three years ago. Machine learning, data mining: all of this is simply used in combination to solve problems.

Where is the line between classical analytics and Big Data? If your data fits in an ordinary table with a manageable number of rows and you run aggregate queries against it, that is classical analytics. But if you take disparate sources of information and explore them along different parameters, in real time, that is Big Data.

On the customer side, the obvious applications are mass personalization and anything that helps increase repeat sales. Sberbank has 50 million active cardholders: not people who merely hold cards, but people who actually spend with them. How to identify them by their vector of interests, by a set of parameters and attributes, and by which ID to recognize them, given that they are recorded somewhere in some system of record: this is the first hurdle to overcome. And how to deliver an offer to the user in real time that they actually respond to: these are exactly the tasks to focus on. As for the cases involving compliance and problem assets, I would rather not disclose them, because that information is not public.

Labor market

Pavel Lebedev, head of research at Superjob, went straight to money and statistics. At the time of his talk at the Big Data open day, their statistics showed about 200 vacancies directly on the topic and 80 vacancies in Data Science / Data Mining. Six large Russian companies are hiring such specialists constantly, the rest only occasionally. Big Data professionals are most in demand in telecoms, banks and large retail. Moreover, to get hired at these places, it is enough to complete an intensive specialized course of 1-2 months on top of a general IT background (some math, some SQL).

As a rule, employers need business analysts and machine learning engineers; sometimes they look for a database architect. In general, each employer understands Big Data in its own way, and there are no common hiring criteria yet, unlike, for example, for C++ developers.

What does such a person's job involve? Usually they first have to rebuild the data collection process, then rebuild the analysis process: analytics, hypothesis testing and so on. After that comes implementing the resulting solutions directly in the company's business processes.

The first salary range is 70-80 thousand rubles per month. This is entry level, with no work experience and no deep knowledge of programming languages; as a rule, these are university graduates. The assumption is that university gave them basic knowledge of SQL queries and taught them, for instance, to remove outliers when building a moving average.
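Removing outliers before smoothing is a standard preprocessing step. Below is a minimal Python sketch of it. It assumes a median absolute deviation (MAD) cutoff of 3 and a trailing window. The function names, the cutoff and the sample series are illustrative choices, not something described in the talk:

```python
from statistics import median


def filter_outliers(values, k=3.0):
    """Replace outliers with None using a median absolute deviation (MAD) rule."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Most values are identical, so the MAD rule cannot flag anything reliably.
        return list(values)
    return [v if abs(v - med) <= k * mad else None for v in values]


def moving_average(values, window):
    """Trailing moving average over `window` points, skipping None entries."""
    result = []
    for i in range(len(values)):
        chunk = [v for v in values[max(0, i - window + 1): i + 1] if v is not None]
        result.append(sum(chunk) / len(chunk) if chunk else None)
    return result


daily_sales = [10, 11, 10, 12, 100, 11, 10]  # hypothetical series with one spike
smoothed = moving_average(filter_outliers(daily_sales), window=3)
print(smoothed)
```

A median-based rule is used here because a single large spike would inflate the mean and standard deviation enough to hide itself.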

The next range, up to 100-120 thousand rubles per month, implies more practical knowledge and experience with various statistical tools, most often SPSS, SAS Data Miner, Tableau and the like. You need to be able to visualize data to show other people why a particular action matters. Simply put, you will have to stand up at an investor meeting and explain what you found, in plain language rather than jargon.

The third range, up to about 180 thousand rubles per month, comes with programming requirements. The most frequently mentioned are scripting languages such as Python, plus at least two years of experience, machine learning experience, Hadoop and so on. The highest salaries, up to 250 thousand rubles a month, go to very highly qualified people. Their level is determined by a track record of bringing something specific to market, by academic achievements and by how they developed them further. Above that there are only exceptional cases with even higher salaries, but only dozens of people, or even single individuals, in the country have the required qualifications.

Sberbank said that its norm is 1.5 to 3 million rubles a year. And yes, Sberbank expects to hire at least a couple of people from the upcoming course (more on that below).

MTS

The expert here is Vitaly Saginov, head of Big Data at MTS.

“In the early 1990s, two mathematicians concluded that regression analysis methods made it possible to predict, with reasonable probability, how a bank customer would pay their bills: on time or late. They went all over Manhattan with this idea, pitching it to Citi and everyone else. They were told: ‘No, guys, what are you talking about? We have professionals who talk to the client and can tell from the color of their pupils whether they will fall behind by the 3rd, 9th or 12th month.’ In the end, they found a small regional bank in Virginia called Signet. The quality of its loan portfolio improved twofold compared with the starting values, before they began experimenting. Over the next 10 years, the bank's retail business was spun off into a separate company, now called Capital One. This company is among the ten largest US retail banks, with, if I remember correctly, about 20 million customers and about 17-18 billion dollars of client money. In effect, this company made data and data processing the foundation of its business strategy and business model.”
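The anecdote does not say which model the mathematicians used. Logistic regression is one common form of regression for a yes/no outcome like "will pay late". Below is a minimal Python sketch of that technique, trained with plain batch gradient descent. The two features, the toy data and the function names are hypothetical, and this is not Capital One's or Signet's actual model:

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.5, epochs=10000):
    """Fit logistic regression weights by batch gradient descent on the log-loss."""
    n_features = len(X[0])
    w = [0.0] * n_features
    b = 0.0
    m = len(X)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # derivative of the log-loss with respect to the linear score
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def late_payment_probability(w, b, x):
    """Predicted probability that a customer with features x pays late."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


# Hypothetical toy data: [debt-to-income ratio, number of past late payments]
X = [[0.1, 0], [0.2, 0], [0.3, 1], [0.8, 3], [0.9, 2], [0.7, 4]]
y = [0, 0, 0, 1, 1, 1]  # 1 = the customer later paid late
w, b = train_logistic(X, y)
print(round(late_payment_probability(w, b, [0.85, 2.5]), 3))
```

A production scoring model would add regularization, many more features and validation on held-out customers.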

Vitaly says that data is an asset, but there is no market for this asset yet, just as there was no market for online business until the 2000s. The situation is the same in Europe and the USA: there is simply no such market now, so most real investment goes into working with data inside the company to optimize its own processes. Usually a company first establishes experimentally what exactly generates profit, and then builds a hardware and software architecture around it. Only one company, British Telecom, went the opposite way, but there Big Data was led by a former IT director who knew exactly what was needed.

Vitaly believes that in 15-20 years Big Data will give rise to a new Internet, and that we are now at its very beginning. For now, the main obstacle to developing the field is the lack of clear legal procedures, together with numerous approvals and contested issues.

Oracle

Svetlana Arhipkina, head of Big Data technology sales at Oracle, says that the first group of big data use cases relates to customers and personalization, such as offering a discount on diapers before a father even knew that his 15-year-old daughter was pregnant.

The second group of tasks related to big data is optimization, that is, everything related to modeling and using very large amounts of data.

The third group is fraud detection. Here various solutions are built for recognizing images in video and for analyzing unstructured information. This is a very large set of tasks, especially for banks and telecoms.

And the newest tasks are cross-industry ones. These most often raise questions of working with non-traditional, non-relational databases.

Acronis

Alexey Ruslyakov, director of product development for Acronis, said that the two main problems of Big Data are how to store this data and what to do with it.

About 5-6 years ago we launched a cloud backup service that let users back up their laptops, workstations and servers and store the copies in our data center, in the cloud. The first data centers were in the United States (Boston) and France; now there is one in Russia as well. Had we stored the cloud backups on NetApp or EMC hardware, the cost per gigabyte would have been very high, and the venture would most likely have been commercially unviable. With giants such as Google and Amazon in the market, it would have been hard for us to compete, because their enormous capacity makes a gigabyte of storage quite cheap. So our task was to develop an efficient and inexpensive storage system.

We were dealing with lazy data: data that is written once and then occasionally read or deleted. It is not data that needs constant access or high IOPS. For this kind of “cold” information we developed our own big data storage technology. Another question we faced was how to catalog the stored data, index it and give our users fast search over it. The task is actually non-trivial, given that the data is stored in a distributed way and with some redundancy. At the same time, we need data tiering, so that frequently accessed information is stored on fast, expensive media and everything else on slow, cheap media.
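The tiering rule described above can be illustrated with a simple read-count policy. Below is a minimal Python sketch. It assumes objects are reassigned once per time window and that a fixed read threshold separates "hot" from "cold". The `TieringPolicy` class, the threshold and the object IDs are hypothetical, and the article does not describe how Acronis actually implements tiering:

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class TieringPolicy:
    """Assign objects to a 'hot' (fast, expensive) or 'cold' (slow, cheap) tier by recent reads."""
    hot_threshold: int = 3  # reads per window needed to stay on fast media (assumed value)
    reads: Counter = field(default_factory=Counter)

    def record_read(self, object_id):
        self.reads[object_id] += 1

    def plan(self, object_ids):
        """Return {object_id: tier} for the window that just ended, then reset the counters."""
        placement = {
            oid: "hot" if self.reads[oid] >= self.hot_threshold else "cold"
            for oid in object_ids
        }
        self.reads.clear()
        return placement


policy = TieringPolicy(hot_threshold=2)
for oid in ["backup-a", "backup-a", "backup-a", "backup-b"]:
    policy.record_read(oid)
placement = policy.plan(["backup-a", "backup-b", "backup-c"])
print(placement)
```

Resetting the counters each window lets data that stops being read drift back to the cheap tier. Real systems usually add hysteresis so objects near the threshold do not bounce between tiers.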

One of the most interesting tasks we are working on now is data deduplication. With Big Data, the nodes that store the data are distributed, and the question is how to make deduplication effective given that distribution. The data has to be synchronized correctly between nodes, and that is a big job.
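One common way to keep deduplication effective across distributed nodes is to route each chunk by its content fingerprint. Identical chunks then always land on the same node and are stored once. Below is a minimal Python sketch of that idea. It assumes fixed-size chunks (4 bytes here, only for readability), SHA-256 fingerprints and simple modulo routing. The `DedupCluster` class is hypothetical and is not Acronis's design:

```python
import hashlib


class DedupCluster:
    """Toy cluster that stores each unique chunk exactly once, on a node chosen by its hash."""

    def __init__(self, num_nodes, chunk_size=4):
        self.nodes = [dict() for _ in range(num_nodes)]  # per node: fingerprint -> chunk bytes
        self.chunk_size = chunk_size

    def _node_for(self, fingerprint):
        # Route by fingerprint so identical chunks always map to the same node.
        return self.nodes[int(fingerprint, 16) % len(self.nodes)]

    def write(self, data: bytes):
        """Split data into chunks, store unseen chunks, return the recipe (list of fingerprints)."""
        recipe = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            fp = hashlib.sha256(chunk).hexdigest()
            self._node_for(fp).setdefault(fp, chunk)  # no-op if the chunk is already stored
            recipe.append(fp)
        return recipe

    def read(self, recipe):
        """Reassemble the original bytes from a recipe."""
        return b"".join(self._node_for(fp)[fp] for fp in recipe)

    def stored_chunks(self):
        return sum(len(node) for node in self.nodes)


cluster = DedupCluster(num_nodes=3, chunk_size=4)
r1 = cluster.write(b"AAAABBBBAAAA")
r2 = cluster.write(b"BBBBCCCC")
print(cluster.stored_chunks())
```

Production systems typically use kilobyte-scale, often content-defined chunks, plus consistent hashing so that adding a node does not remap most fingerprints.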

Luiza Iznaurova, director of new media development at CondeNast Russia, added that Big Data could change journalism quite significantly.

Course

As you can see, the Big Data market is experiencing a severe shortage of qualified specialists. That is why these experts, along with several other representatives of large companies, have backed the professional Big Data course, which should help partially address the problem.

The first cohort has already gone through. The second intake for this three-month course starts on April 18. The program has 3 parts: three specific cases, each taking one month, and all of them extremely practical. Case No. 1 is building a DMP (data management platform) system in a month. Case No. 2 is analyzing a social graph, using VKontakte as the example. This also takes a whole month, and by the end your team has to write an analyzer for this social graph on big data. Case No. 3 is recommendation systems. Again, this topic is very clear and in demand by business, and many speakers mentioned it: how can you predict what a person wants?
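A classic starting point for the "what does a person want" question in Case No. 3 is item-to-item co-occurrence. Items that often appear together in other users' histories are recommended to someone who already has one of them. Below is a minimal Python sketch. It assumes purchase histories are plain lists of item names. The data and function names are hypothetical, and the course may well use other methods:

```python
from collections import Counter, defaultdict
from itertools import combinations


def build_cooccurrence(histories):
    """Count how often each pair of items appears in the same user's history."""
    co = defaultdict(Counter)
    for items in histories:
        for a, b in combinations(sorted(set(items)), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(co, user_items, k=3):
    """Score unseen items by summed co-occurrence with the user's items; return the top k."""
    owned = set(user_items)
    scores = Counter()
    for item in owned:
        for other, count in co[item].items():
            if other not in owned:
                scores[other] += count
    return [item for item, _ in scores.most_common(k)]


histories = [  # hypothetical baskets
    ["diapers", "wipes", "formula"],
    ["diapers", "wipes"],
    ["diapers", "formula"],
    ["beer", "chips"],
    ["diapers", "beer"],
]
co = build_cooccurrence(histories)
recs = recommend(co, ["diapers"], k=3)
print(recs)
```

Raw co-occurrence counts favor popular items. Real systems usually normalize them (for example with cosine similarity or lift) before ranking.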

The market wants practice, not theory, so a technical specialist in data processing and analysis has to understand what business problem they are solving, and the technology stack depends heavily on that problem. This means working with entirely real data: not data scraped from Wikipedia, not academic datasets that have already been analyzed 25 times, but data from businesses, which our business partners share with us.

The deadlines are brutal. Building a DMP system from scratch in a month is hard. We understand this, which means the course will be very intensive and demand a lot of focus. It can be combined with a job, but if you take this course on top of work, there will be no room left for anything else.

- Konstantin Kruglov, founder of the DCA Alliance

The schedule is three sessions a week: Tuesday and Thursday from 7 to 10 in the evening, and Saturday from 4 to 7.

Every week you will need to commit something specific. Miss one and you fail the course. If you need theory, go to Coursera; here there will be only practice. The work is done in teams, and the teams will be reshuffled constantly.

Another feature is the DCA competition, which lets you get back 25% of the cost of your training in cash in the first month if you write a good algorithm. Every task has an achievement like this.

Here is a link to the details and the program.

About one third of graduates are expected to be analysts who can use various tools for analyzing big data, debug models, test hypotheses and collect data (for example, for sales companies or to identify fraud patterns). The remaining two thirds will be developers who can deploy big data tools and build working systems themselves (which means incoming participants should be at the level of architects and advanced application programmers).

Source: https://habr.com/ru/post/252589/
