LVS + OpenVZ

Good day, dear readers!

In this article I want to tell you about load balancing technology, a little bit about fault tolerance and how to make friends with it all in OpenVZ containers. The basics of LVS, modes of operation and configuration of the LVS bundle with containers in OpenVZ will be discussed. The article contains both the theoretical aspects of the operation of these technologies and the practical part - forwarding traffic from the balancer into the containers. If this interested you - welcome!

To begin with - links to publications on this topic on Habré:

Detailed description of the work of LVS. You can't say better

Article about OpenVZ containers

A summary of the material above:

LVS (Linux Virtual Server) is a technology based on IPVS (IP Virtual Server), which is present in Linux kernels from version 2.4.x and later. It is a kind of virtual switch 4 levels.

')

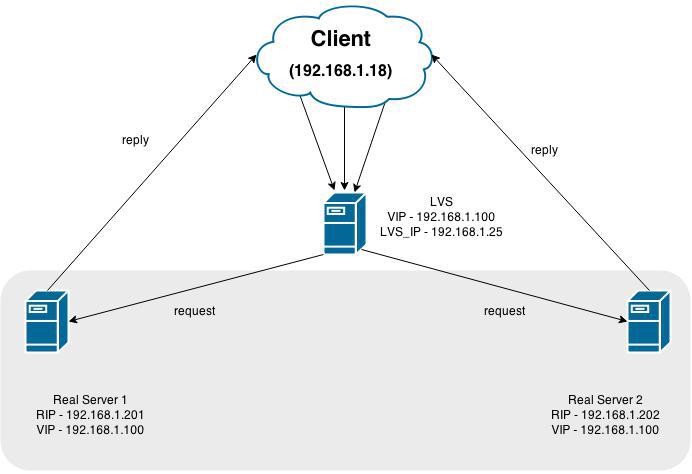

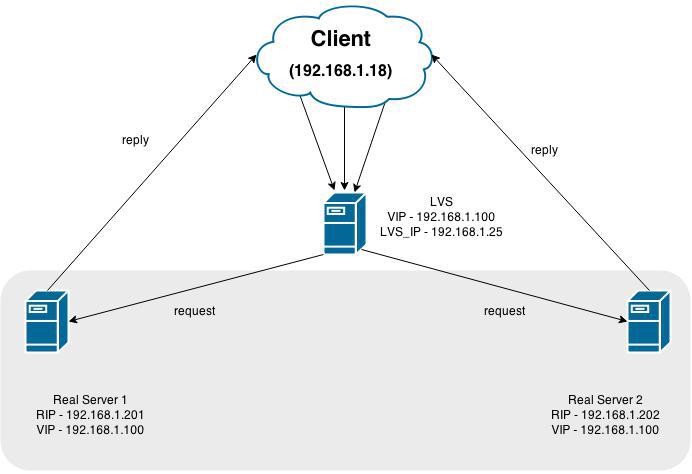

This picture shows LVS (the balancer itself), the virtual address to which calls (VIP) occur are 192.168.1.100 and 2 servers playing back-end roles: 192.168.1.201 and 192.168.1.202

In general, everything works this way - we make an entry point (VIP) where all requests come. Further, the traffic is redirected to its backends, where it is processed and responded directly to the client (in the NAT scheme, the answer is through LVS back)

With methods of balancing can be found here: link . later in the article we believe that Round-Robin balancing

Modes of operation:

A little more about each:

In this mode of operation, the request is processed according to the following scenario:

The client sends the packet to the network to the VIP address. This packet is caught by the LVS server, the MAK destination address (and only he !!) is replaced with the MAC address of one of the back-end servers and the packet is sent back to the network. It is received by the back-end server, it processes and sends the request directly to the client. The client receives an answer to his request physically from another server, but does not see the substitution and happily rustles. The attentive reader will be indignant of course - how can the Real Server process the request that came to another IP address (in the package the destination address is still the same VIP)? And he will be right, because To work correctly, this address (VIP) should be placed somewhere near the back end, most often it is hung up on the loopback. Thus, the biggest scam is committed in this technology.

The easiest mode of operation. The request from the client comes to LVS, LVS forwards the request to the back-end, he processes the request, answers back to LVS and he answers to the client. Ideally fit into the scheme where you have a gateway through which all subnet traffic goes. Otherwise, it will be extremely wrong to do NAT in a part of the network.

It is analogous to the first method, only traffic from LVS to backends is encapsulated in a packet. That’s what we’ll set up below, so now I’ll not reveal all the cards :)

Install the server with LVS. Configuring on CentOS 6.6.

On a clean system, do

Behind her, she will drag the Apache, pkhp and the ipvsadm we need. Apache and pkhp are set to access the administrative web-muzzle, which I advise you to look at once, to set up everything in a basic way and not to go there again :)

After installing all the packages do

set the password for access to the admin panel:

After that, go to IP_LVS : 3636 /, enter the login (piranha) and password from the step above and get into the admin area:

Two tabs are interesting for us now - GLOBAL SETTINGS and VIRTUAL SERVERS

Go to GLOBAL SETTINGS and set the Tunneling mode.

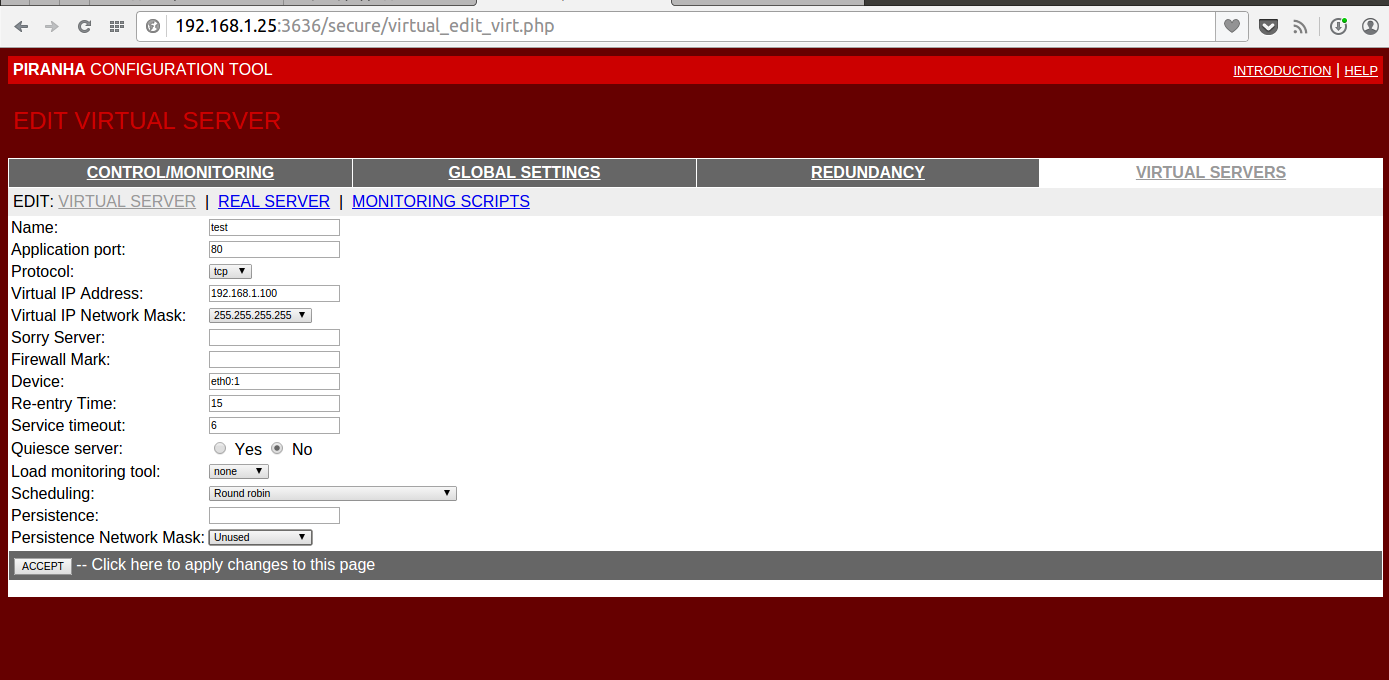

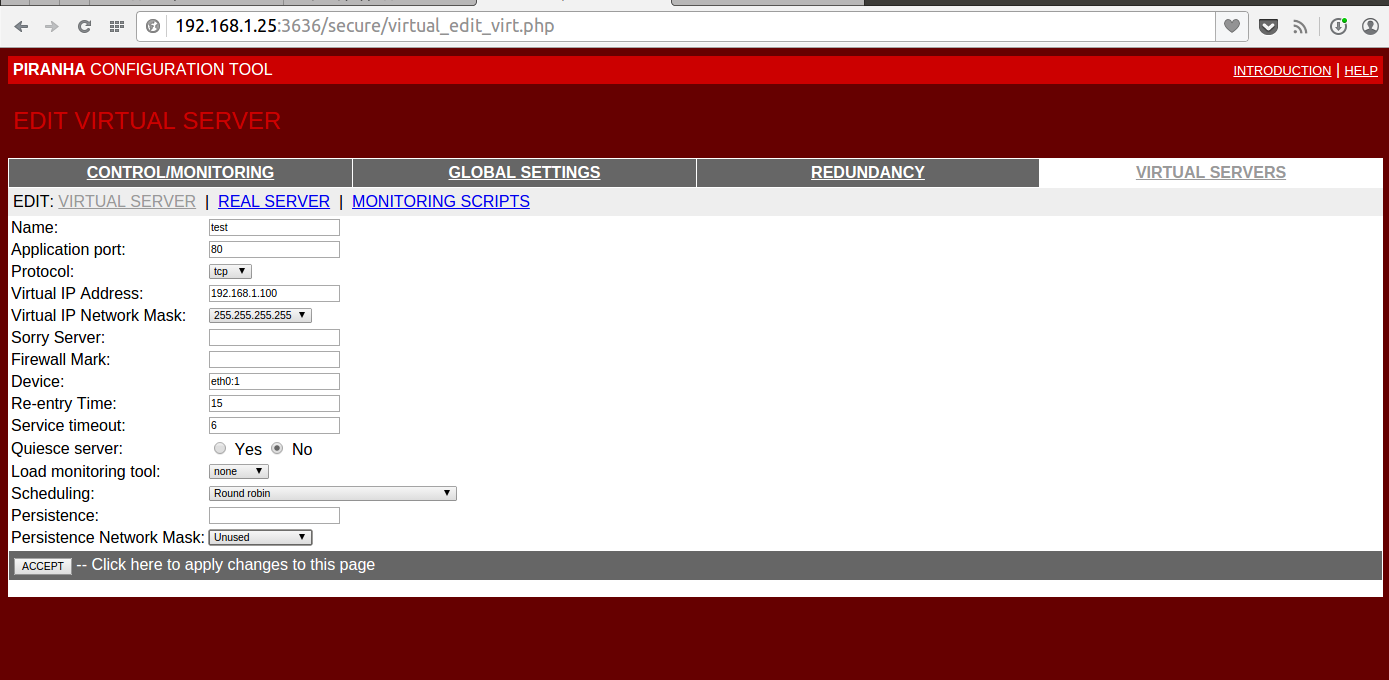

On the VIRTUAL SERVERS tab, we create one Virtual Server with the address 192.168.1.100 and port 80

In the same place, on the REAL SERVER tab, we will set two back end addresses with addresses 192.168.1.201 and 192.168.1.202 with ports 80

Details on the screenshots under the spoiler

The MONITORING SCRIPTS tab remains unchanged, although you can configure the very flexible operation of the availability check for nodes on it. By default, this is just a request of type GET / HTTP / 1.0 and checking that the web server responded to us.

You can execute arbitrary scripts, for example, such as under the spoiler.

Save the config, close the window and go to our usual console. If all of a sudden you wondered what we pissed off there, then the service config is in /etc/sysconfig/ha/lvs.cf

We look at the current settings:

Not much. This is because our final nodes are not raised! Installation and creation of containers OpenVZ skip, we believe that you magically appeared two containers with addresses 192.168.1.201 and 192.168.1.202, and inside any web server on port 80 :)

Again, we look at the output of the command:

Perfect! Exactly what is needed. If suddenly we turn off the web server on one of the nodes, then our balancer will honestly not send traffic there until the situation is corrected.

Let's take a closer look at our config for LVS:

The most interesting options here are timeout and reentry. In the above configuration, if our backend does not respond to us within 6 seconds - we will not send anything there. As soon as our bad guy responds to us within 15 seconds, we can send traffic there.

There are still quiesce_server - if the server returns to the system, then all connection counters are reset to zero and connections begin to be distributed as after starting the service.

LVS has its own Active-Pass mechanism, which is not considered in this article, and I don’t really like it. I would recommend using Pacemaker, because it has built-in mechanisms for throwing the pulse service (which is responsible for the whole mechanism)

But back to reality.

Our cars are seen, the balancer is ready to send traffic to them. Let's do on LVS

and try to turn for example to our VIP:

Let's figure it out! There may be several reasons, and one of them is iptables on lvs. Although he is engaged in the transfer of traffic, but the port must be available. Armed with tcpdump and climb on LVS.

Run and see:

Requests came, what became of them next?

Ibid

And the traffic does not go ... Trouble! We go to our nodes with OpenVZ, we go inside the virtualok and look at the traffic there. Requests from LVS reached them, but cannot be processed - protocol 4 is our IP-in-IP

We include support for tunnels for virtualok

Do not forget to add them to autoload -

Allow our virtual machines to have tunnel interfaces:

After this, restart the containers.

Now we need to add the address inside the containers to this (tun0) interface. So do:

Why is that?

Now running tcpdump we will see the long-awaited requests!

192.168.1.18 is the client.

Request reached the car! All cookies! But stop early, continue. Why does nobody answer us? It's all about the tricky kernel configuration, which checks the path back to the source - rp_filter

Turn off this check for our interface inside the container:

Checking:

Answers! Answers! But the miracle is still not happening. Sorry, Mario, your princess is in another castle. To go to another castle, first write down everything:

Disable the rp_filter check and add an interface. Inside containers:

And on the nodes:

Restart to confirm that everything is correct.

As a result, the restart of the container should have the following picture:

And the princess is hidden in the venet, as it is not sad. The technology of this device imposes the following limitations :

Those. Our node does not accept packets that come with left sors. And now the main crutch - add this address to the container! Let them be two addresses for each machine!

On the nodes, execute:

Of course, we’ll get a varning that such an address is already in the grid! But balancing requires sacrifice.

Why do we need to delete routes - so that we do not broadcast this address to the network and other machines did not know about it. Those. Formally, all the requirements are met - the answer from the machine comes with the address 192.168.1.100, it has such an address. We work!

To simplify the work, I would like to recommend the mount engine of scripts in OpenVZ, but in its pure form it will not help us, because The route of addresses is added after the mount operation, and the start scripts are executed inside the container.

The solution came from the OpenVZ forum.

We make two files (example for one container):

Restart the container for inspection, and now that moment has come - we open 192.168.1.100 iiiii ... VICTORY!

A few more brief notes:

1) The worst thing that happens with this balancing is when the address, carefully hung inside the container or on lo (for Direct mode), starts broadcasting to the network. To prevent this scenario, two tools will help you - setting tests and arptables . The tool is similar to iptables, but for ARP requests. I actively use it for my own purposes - we prohibit certain arpas from falling into the network.

2) This solution is not Enterprise; replete with crutches and narrow places. If you have the opportunity - use NAT, Direct and only then Tunnel. This is due to the fact that, for example, in Direct - if the backend is active in the output of ipvsadm, then you will receive traffic. Here he may not receive it, although the port is considered available and the packages will fly there.

4) In normal virtualization (KVM, VmWare and others) - there will be no problems, as well as not with the use of veth devices.

5) To diagnose any problems with LVS - use tcpdump. And just use it too :)

Thanks for attention!

In this article I want to tell you about load balancing technology, a little bit about fault tolerance and how to make friends with it all in OpenVZ containers. The basics of LVS, modes of operation and configuration of the LVS bundle with containers in OpenVZ will be discussed. The article contains both the theoretical aspects of the operation of these technologies and the practical part - forwarding traffic from the balancer into the containers. If this interested you - welcome!

To begin with - links to publications on this topic on Habré:

Detailed description of the work of LVS. You can't say better

Article about OpenVZ containers

A summary of the material above:

LVS (Linux Virtual Server) is a technology based on IPVS (IP Virtual Server), which is present in Linux kernels from version 2.4.x and later. It is a kind of virtual switch 4 levels.

')

This picture shows LVS (the balancer itself), the virtual address to which calls (VIP) occur are 192.168.1.100 and 2 servers playing back-end roles: 192.168.1.201 and 192.168.1.202

In general, everything works this way - we make an entry point (VIP) where all requests come. Further, the traffic is redirected to its backends, where it is processed and responded directly to the client (in the NAT scheme, the answer is through LVS back)

With methods of balancing can be found here: link . later in the article we believe that Round-Robin balancing

Modes of operation:

- 1) Direct A lot of things about him

- 2) NAT Information about this mode of operation

- 3) Tunnel And about him

A little more about each:

1) Direct

In this mode of operation, the request is processed according to the following scenario:

The client sends the packet to the network to the VIP address. This packet is caught by the LVS server, the MAK destination address (and only he !!) is replaced with the MAC address of one of the back-end servers and the packet is sent back to the network. It is received by the back-end server, it processes and sends the request directly to the client. The client receives an answer to his request physically from another server, but does not see the substitution and happily rustles. The attentive reader will be indignant of course - how can the Real Server process the request that came to another IP address (in the package the destination address is still the same VIP)? And he will be right, because To work correctly, this address (VIP) should be placed somewhere near the back end, most often it is hung up on the loopback. Thus, the biggest scam is committed in this technology.

2) NAT

The easiest mode of operation. The request from the client comes to LVS, LVS forwards the request to the back-end, he processes the request, answers back to LVS and he answers to the client. Ideally fit into the scheme where you have a gateway through which all subnet traffic goes. Otherwise, it will be extremely wrong to do NAT in a part of the network.

3) Tunnel

It is analogous to the first method, only traffic from LVS to backends is encapsulated in a packet. That’s what we’ll set up below, so now I’ll not reveal all the cards :)

Practice!

Install the server with LVS. Configuring on CentOS 6.6.

On a clean system, do

yum install piranha Behind her, she will drag the Apache, pkhp and the ipvsadm we need. Apache and pkhp are set to access the administrative web-muzzle, which I advise you to look at once, to set up everything in a basic way and not to go there again :)

After installing all the packages do

/etc/init.d/piranha-gui start ; /etc/init.d/httpd start set the password for access to the admin panel:

piranha-passwd After that, go to IP_LVS : 3636 /, enter the login (piranha) and password from the step above and get into the admin area:

So to say admin

Two tabs are interesting for us now - GLOBAL SETTINGS and VIRTUAL SERVERS

Go to GLOBAL SETTINGS and set the Tunneling mode.

A small lyrical digression about OpenVZ

As you probably already know, if you worked with OpenVZ, the user is given a choice of two types of interfaces - venet and veth. The principal difference between them is that veth is essentially a virtual network interface for each virtual machine with its MAC address. Venet is a huge 3 level switch that all your machines are connected to.

You can read more here

Comparative table of interfaces from the link above:

It so happened that venet is commonly used in my work, so the tuning is done on it.

I’ll say right away that I didn’t manage to configure LVS-Direcrt for this type of interface. Everything is fixed on the fact that the virtual machine node receives traffic, but does not know which machine to send it to. I’ll stop on this in more detail when forwarding traffic inside the container.

You can read more here

Comparative table of interfaces from the link above:

It so happened that venet is commonly used in my work, so the tuning is done on it.

I’ll say right away that I didn’t manage to configure LVS-Direcrt for this type of interface. Everything is fixed on the fact that the virtual machine node receives traffic, but does not know which machine to send it to. I’ll stop on this in more detail when forwarding traffic inside the container.

On the VIRTUAL SERVERS tab, we create one Virtual Server with the address 192.168.1.100 and port 80

In the same place, on the REAL SERVER tab, we will set two back end addresses with addresses 192.168.1.201 and 192.168.1.202 with ports 80

Details on the screenshots under the spoiler

Customization Screenshots

The MONITORING SCRIPTS tab remains unchanged, although you can configure the very flexible operation of the availability check for nodes on it. By default, this is just a request of type GET / HTTP / 1.0 and checking that the web server responded to us.

You can execute arbitrary scripts, for example, such as under the spoiler.

Check scripts for various services

For example muskul

And the check in LVS is considered successful if the script returned UP and not successful if DOWN. Server it displays the results of the test

A piece of config for this check

#!/bin/sh CMD=/usr/bin/mysqladmin IS_ALIVE=`timeout 2s $CMD -h $1 -P $2 ping | grep -c "alive"` if [ "$IS_ALIVE" = "1" ]; then echo "UP" else echo "DOWN" fi And the check in LVS is considered successful if the script returned UP and not successful if DOWN. Server it displays the results of the test

A piece of config for this check

expect = "UP" use_regex = 0 send_program = "/opt/admin/mysql_chesk.sh %h 9005" Save the config, close the window and go to our usual console. If all of a sudden you wondered what we pissed off there, then the service config is in /etc/sysconfig/ha/lvs.cf

We look at the current settings:

ipvsadm -L -n IP Virtual Server version 1.2.1 (size=4096) Prot LocalAddress:Port Scheduler Flags -> RemoteAddress:Port Forward Weight ActiveConn InActConn TCP 192.168.1.100:80 wlc Not much. This is because our final nodes are not raised! Installation and creation of containers OpenVZ skip, we believe that you magically appeared two containers with addresses 192.168.1.201 and 192.168.1.202, and inside any web server on port 80 :)

Again, we look at the output of the command:

ipvsadm -L -n IP Virtual Server version 1.2.1 (size=4096) Prot LocalAddress:Port Scheduler Flags -> RemoteAddress:Port Forward Weight ActiveConn InActConn TCP 192.168.1.100:80 wlc -> 192.168.1.201:80 Tunnel 1 0 0 -> 192.168.1.202:80 Tunnel 1 0 0 Perfect! Exactly what is needed. If suddenly we turn off the web server on one of the nodes, then our balancer will honestly not send traffic there until the situation is corrected.

Let's take a closer look at our config for LVS:

/etc/sysconfig/ha/lvs.cf

serial_no = 4

primary = 192.168.1.25

service = lvs

network = tunnel

debug_level = NONE

virtual habrahabr {

active = 1

address = 192.168.1.100 eth0: 1

port = 80

send = "GET / HTTP / 1.0 \ r \ n \ r \ n"

expect = "HTTP"

use_regex = 0

load_monitor = none

scheduler = wlc

protocol = tcp

timeout = 6

reentry = 15

quiesce_server = 0

server test1 {

address = 192.168.1.202

active = 1

weight = 1

}

server test2 {

address = 192.168.1.201

active = 1

weight = 1

}

}

primary = 192.168.1.25

service = lvs

network = tunnel

debug_level = NONE

virtual habrahabr {

active = 1

address = 192.168.1.100 eth0: 1

port = 80

send = "GET / HTTP / 1.0 \ r \ n \ r \ n"

expect = "HTTP"

use_regex = 0

load_monitor = none

scheduler = wlc

protocol = tcp

timeout = 6

reentry = 15

quiesce_server = 0

server test1 {

address = 192.168.1.202

active = 1

weight = 1

}

server test2 {

address = 192.168.1.201

active = 1

weight = 1

}

}

The most interesting options here are timeout and reentry. In the above configuration, if our backend does not respond to us within 6 seconds - we will not send anything there. As soon as our bad guy responds to us within 15 seconds, we can send traffic there.

There are still quiesce_server - if the server returns to the system, then all connection counters are reset to zero and connections begin to be distributed as after starting the service.

LVS has its own Active-Pass mechanism, which is not considered in this article, and I don’t really like it. I would recommend using Pacemaker, because it has built-in mechanisms for throwing the pulse service (which is responsible for the whole mechanism)

But back to reality.

Our cars are seen, the balancer is ready to send traffic to them. Let's do on LVS

chkconfig pulse on and try to turn for example to our VIP:

Result

curl -vv http://192.168.1.100 * Rebuilt URL to: http://192.168.1.100/ * About to connect() to 192.168.1.100 port 80 (#0) * Trying 192.168.1.100... * Adding handle: conn: 0x7e9aa0 * Adding handle: send: 0 * Adding handle: recv: 0 * Curl_addHandleToPipeline: length: 1 * - Conn 0 (0x7e9aa0) send_pipe: 1, recv_pipe: 0 * Connection timed out * Failed connect to 192.168.1.100:80; Connection timed out * Closing connection 0 curl: (7) Failed connect to 192.168.1.100:80; Connection timed out

Let's figure it out! There may be several reasons, and one of them is iptables on lvs. Although he is engaged in the transfer of traffic, but the port must be available. Armed with tcpdump and climb on LVS.

Run and see:

tcpdump -i any host 192.168.1.100 tcpdump: verbose output suppressed, use -v or -vv for full protocol decode listening on any, link-type LINUX_SLL (Linux cooked), capture size 65535 bytes 13:36:09.802373 IP 192.168.1.18.37222 > 192.168.1.100.http: Flags [S], seq 3328911904, win 29200, options [mss 1460,sackOK,TS val 2106524 ecr 0,nop,wscale 7], length 0 13:36:10.799885 IP 192.168.1.18.37222 > 192.168.1.100.http: Flags [S], seq 3328911904, win 29200, options [mss 1460,sackOK,TS val 2106774 ecr 0,nop,wscale 7], length 0 13:36:12.803726 IP 192.168.1.18.37222 > 192.168.1.100.http: Flags [S], seq 3328911904, win 29200, options [mss 1460,sackOK,TS val 2107275 ecr 0,nop,wscale 7], length 0 Requests came, what became of them next?

Ibid

tcpdump -i any host 192.168.1.201 or host 192.168.1.202 tcpdump: verbose output suppressed, use -v or -vv for full protocol decode listening on any, link-type LINUX_SLL (Linux cooked), capture size 65535 bytes 13:37:08.257049 IP 192.168.1.25 > 192.168.1.201: IP 192.168.1.18.37293 > 192.168.1.100.http: Flags [S], seq 1290874035, win 29200, options [mss 1460,sackOK,TS val 2121142 ecr 0,nop,wscale 7], length 0 (ipip-proto-4) 13:37:08.257538 IP 192.168.1.201 > 192.168.1.25: ICMP 192.168.1.201 protocol 4 unreachable, length 88 13:37:09.255564 IP 192.168.1.25 > 192.168.1.201: IP 192.168.1.18.37293 > 192.168.1.100.http: Flags [S], seq 1290874035, win 29200, options [mss 1460,sackOK,TS val 2121392 ecr 0,nop,wscale 7], length 0 (ipip-proto-4) 13:37:09.256192 IP 192.168.1.201 > 192.168.1.25: ICMP 192.168.1.201 protocol 4 unreachable, length 88 And the traffic does not go ... Trouble! We go to our nodes with OpenVZ, we go inside the virtualok and look at the traffic there. Requests from LVS reached them, but cannot be processed - protocol 4 is our IP-in-IP

We include support for tunnels for virtualok

: modprobe ipip lsmod | grep ipip Do not forget to add them to autoload -

cd /etc/sysconfig/modules/ echo "#!/bin/sh" > ipip.modules echo "/sbin/modprobe ipip" >> ipip.modules chmod +x ipip.modules Allow our virtual machines to have tunnel interfaces:

vzctl set 201 --feature ipip:on --save vzctl set 202 --feature ipip:on --save After this, restart the containers.

Now we need to add the address inside the containers to this (tun0) interface. So do:

ifconfig tunl0 192.168.1.100 netmask 255.255.255.255 broadcast 192.168.1.100 Why is that?

Direct, Tunnel and Addresses

The common feature of these two methods is that in the final system (backend) VIP addresses are added for correct operation. Why is that? The answer is simple: the client addresses the fixed address and waits for the answer from him. If someone answers him from another address, the client will consider such an answer as an error and simply ignore it. Imagine that you are asking to call the cute girl Oksana to the telephone, and Valentina Yakovlevich’s hoarse voice answers you.

For Direct, the package processing steps are lined up:

The client makes an ARP request to the VIP address, receives a response, forms a request with data, sends it to the VIP, LVS caught these packets, changed the MAK there, gave it back to the network, the network equipment delivered the backend package to the Mac, he began to deploy it from the second level Mak my? Yes. And my IP? Yes. I processed and answered with the source VIP (the package is intended for it) and at the destination client.

For Tunnel, the situation is almost the same, but without the substitution of the MAC, and full encapsulated traffic. The backend received a packet intended specifically for him, and inside a request to the VIP address, which the backend should process and respond.

For Direct, the package processing steps are lined up:

The client makes an ARP request to the VIP address, receives a response, forms a request with data, sends it to the VIP, LVS caught these packets, changed the MAK there, gave it back to the network, the network equipment delivered the backend package to the Mac, he began to deploy it from the second level Mak my? Yes. And my IP? Yes. I processed and answered with the source VIP (the package is intended for it) and at the destination client.

For Tunnel, the situation is almost the same, but without the substitution of the MAC, and full encapsulated traffic. The backend received a packet intended specifically for him, and inside a request to the VIP address, which the backend should process and respond.

Now running tcpdump we will see the long-awaited requests!

tcpdump -i any host 192.168.1.18 tcpdump: verbose output suppressed, use -v or -vv for full protocol decode listening on any, link-type LINUX_SLL (Linux cooked), capture size 65535 bytes 14:03:28.907670 IP 192.168.1.18.38850 > 192.168.1.100.http: Flags [S], seq 3110076845, win 29200, options [mss 1460,sackOK,TS val 2516581 ecr 0,nop,wscale 7], length 0 14:03:29.905359 IP 192.168.1.18.38850 > 192.168.1.100.http: Flags [S], seq 3110076845, win 29200, options [mss 1460,sackOK,TS val 2516831 ecr 0,nop,wscale 7], length 0 192.168.1.18 is the client.

Request reached the car! All cookies! But stop early, continue. Why does nobody answer us? It's all about the tricky kernel configuration, which checks the path back to the source - rp_filter

Turn off this check for our interface inside the container:

echo 0 > /proc/sys/net/ipv4/conf/tunl0/rp_filter Checking:

tcpdump -i any host 192.168.1.18 tcpdump: verbose output suppressed, use -v or -vv for full protocol decode listening on any, link-type LINUX_SLL (Linux cooked), capture size 65535 bytes 14:07:03.870449 IP 192.168.1.18.39051 > 192.168.1.100.http: Flags [S], seq 89280152, win 29200, options [mss 1460,sackOK,TS val 2570336 ecr 0,nop,wscale 7], length 0 14:07:03.870499 IP 192.168.1.100.http > 192.168.1.18.39051: Flags [S.], seq 593110812, ack 89280153, win 14480, options [mss 1460,sackOK,TS val 3748869 ecr 2570336,nop,wscale 7], length 0 Answers! Answers! But the miracle is still not happening. Sorry, Mario, your princess is in another castle. To go to another castle, first write down everything:

Disable the rp_filter check and add an interface. Inside containers:

echo "net.ipv4.conf.tunl0.rp_filter = 0" >> /etc/sysctl.conf echo "ifconfig tunl0 192.168.1.100 netmask 255.255.255.255 broadcast 192.168.1.100" >> /etc/rc.local And on the nodes:

echo "net.ipv4.conf.venet0.rp_filter = 0" >> /etc/sysctl.conf Restart to confirm that everything is correct.

As a result, the restart of the container should have the following picture:

ip a 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 inet 127.0.0.1/8 scope host lo inet6 ::1/128 scope host valid_lft forever preferred_lft forever 2: venet0: <BROADCAST,POINTOPOINT,NOARP,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN link/void inet 127.0.0.1/32 scope host venet0 inet 192.168.1.202/32 brd 192.168.1.202 scope global venet0:0 3: tunl0: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN link/ipip 0.0.0.0 brd 0.0.0.0 inet 192.168.1.100/32 brd 192.168.1.100 scope global tunl0 cat /proc/sys/net/ipv4/conf/tunl0/rp_filter 0 And the princess is hidden in the venet, as it is not sad. The technology of this device imposes the following limitations :

The ip-packets of the ip-packets are set to the ip-address of the container .

Those. Our node does not accept packets that come with left sors. And now the main crutch - add this address to the container! Let them be two addresses for each machine!

Illustration of such a decision

On the nodes, execute:

vzctl set 201 --ipadd 192.168.1.100 --save vzctl set 202 --ipadd 192.168.1.100 --save ip ro del 192.168.1.100 dev venet0 scope link Of course, we’ll get a varning that such an address is already in the grid! But balancing requires sacrifice.

Why do we need to delete routes - so that we do not broadcast this address to the network and other machines did not know about it. Those. Formally, all the requirements are met - the answer from the machine comes with the address 192.168.1.100, it has such an address. We work!

To simplify the work, I would like to recommend the mount engine of scripts in OpenVZ, but in its pure form it will not help us, because The route of addresses is added after the mount operation, and the start scripts are executed inside the container.

The solution came from the OpenVZ forum.

We make two files (example for one container):

cat /etc/vz/conf/202.mount #!/bin/bash . /etc/vz/start_stript/202.sh & disown exit 0 cat /etc/vz/start_stript/202.sh #!/bin/bash _sleep() { sleep 4 status=(`/usr/sbin/vzctl status 202`) x=1 until [ $x == 6 ] ; do sleep 1 if [ ${status[4]} == "running" ] ; then ip ro del 192.168.1.100 dev venet0 scope link exit 0 else x=`expr $x + 1` fi done } _sleep : chmod +x /etc/vz/start_stript/202.sh chmod +x /etc/vz/conf/202.mount Restart the container for inspection, and now that moment has come - we open 192.168.1.100 iiiii ... VICTORY!

A few more brief notes:

1) The worst thing that happens with this balancing is when the address, carefully hung inside the container or on lo (for Direct mode), starts broadcasting to the network. To prevent this scenario, two tools will help you - setting tests and arptables . The tool is similar to iptables, but for ARP requests. I actively use it for my own purposes - we prohibit certain arpas from falling into the network.

2) This solution is not Enterprise; replete with crutches and narrow places. If you have the opportunity - use NAT, Direct and only then Tunnel. This is due to the fact that, for example, in Direct - if the backend is active in the output of ipvsadm, then you will receive traffic. Here he may not receive it, although the port is considered available and the packages will fly there.

4) In normal virtualization (KVM, VmWare and others) - there will be no problems, as well as not with the use of veth devices.

5) To diagnose any problems with LVS - use tcpdump. And just use it too :)

Thanks for attention!

Source: https://habr.com/ru/post/252415/

All Articles