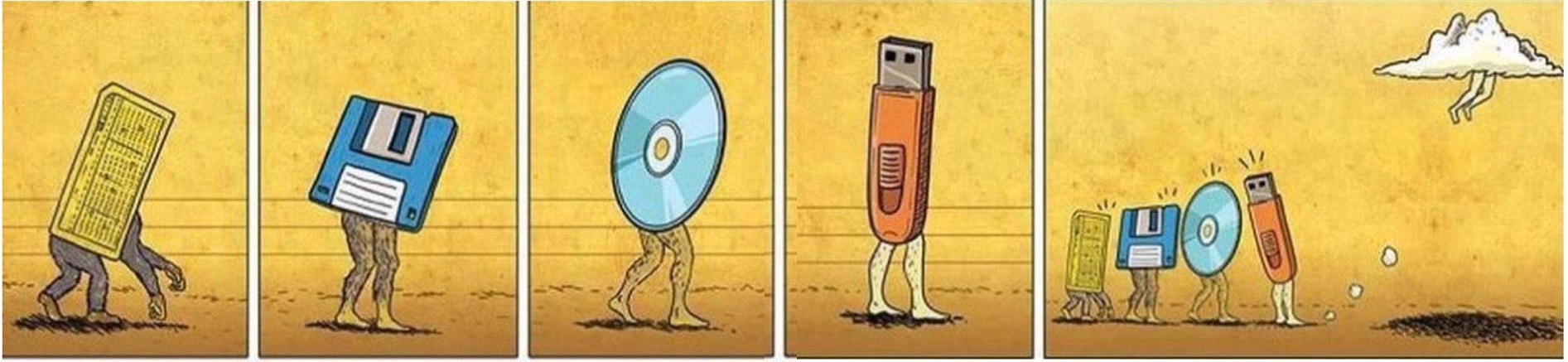

Evolution of data carriers

“To live in an era of change” is a concise curse that anyone over, say, thirty will readily understand. The current stage of human development has made us unwitting witnesses to a unique "era of change." What matters here is not even the scale of modern scientific progress: the transition from stone tools to copper was clearly far more significant for civilization than a doubling of processor performance, however much more technological the latter may be. What discourages is the enormous, ever-increasing pace of technical change. A hundred years ago, every self-respecting gentleman was expected to keep up with all the "novelties" of science and technology so as not to look like a fool and a boor in the eyes of his peers. Today, given the volume and speed at which such novelties appear, nobody even asks this of anyone. The inflation of technologies that were unthinkable until recently, and of the human capabilities tied to them, has effectively killed a great literary genre, "technical fiction." There is no longer any need for it: the future has come closer than ever, and a story about a "miraculous technology" risks reaching its readers after something similar has already left the research institutes.

Human technical progress has always shown itself most quickly in information technology. The ways of collecting, storing, organizing and disseminating information run like a red thread through the history of mankind. Breakthroughs in both the technical and the human sciences have, one way or another, affected IT. The path traveled by civilization is a series of successive steps toward better methods of storing and transmitting data. In this article we will try to understand and analyze in more detail the main stages in the development of information carriers and compare them, from the most primitive clay tablets to the latest advances in brain-machine interfaces.

This is no joke of a task; look what he has taken on, an intrigued reader will say. Indeed, how can one compare, with even elementary rigor, technologies of the past and present that differ so greatly from each other? What helps here is that the ways in which humans perceive information have changed very little. Information is still recorded and read through sounds, images and coded characters (letters). In many respects this fact serves as a common denominator, so to speak, that makes qualitative comparison possible.

Methodology

To start, let us recall the basic facts we will work with. The elementary unit of information in the binary system is the bit, while the smallest unit in which a computer stores and processes data is the byte, which in its standard form consists of 8 bits. The more familiar megabyte corresponds to: 1 MB = 1024 KB = 1,048,576 bytes.
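The calculations below repeatedly use the same unit chain: 8 bits per byte, 1024 bytes per KB and 1024 KB per MB. The following is a minimal Python sketch of that chain. The helper names `bits_to_bytes`, `bytes_to_kb` and `bytes_to_mb` are hypothetical and chosen only for illustration.

```python
BITS_PER_BYTE = 8
BYTES_PER_KB = 1024   # binary convention used throughout the article
KB_PER_MB = 1024


def bits_to_bytes(bits: float) -> float:
    """Convert a bit count to bytes (8 bits per byte)."""
    return bits / BITS_PER_BYTE


def bytes_to_kb(n_bytes: float) -> float:
    """Convert bytes to kilobytes, with 1 KB = 1024 bytes."""
    return n_bytes / BYTES_PER_KB


def bytes_to_mb(n_bytes: float) -> float:
    """Convert bytes to megabytes, with 1 MB = 1024 KB = 1,048,576 bytes."""
    return n_bytes / (BYTES_PER_KB * KB_PER_MB)


if __name__ == "__main__":
    print(bytes_to_mb(1_048_576))                 # 1.0
    print(bytes_to_kb(bits_to_bytes(8 * 1024)))   # 1.0
```

Strictly speaking, these binary multiples are what IEC notation calls KiB and MiB. Here the article's KB/MB labels are kept.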

These units are now the universal measure of the amount of digital data placed on any carrier, so they will be convenient for our further work. Their universality lies in the fact that a group of bits, essentially a set of 1/0 values, can describe any material phenomenon and thereby digitize it. Whether it is an intricate font, a picture or a melody, each consists of separate components, and each component is assigned its own unique digital code. Understanding this basic principle lets us move forward.

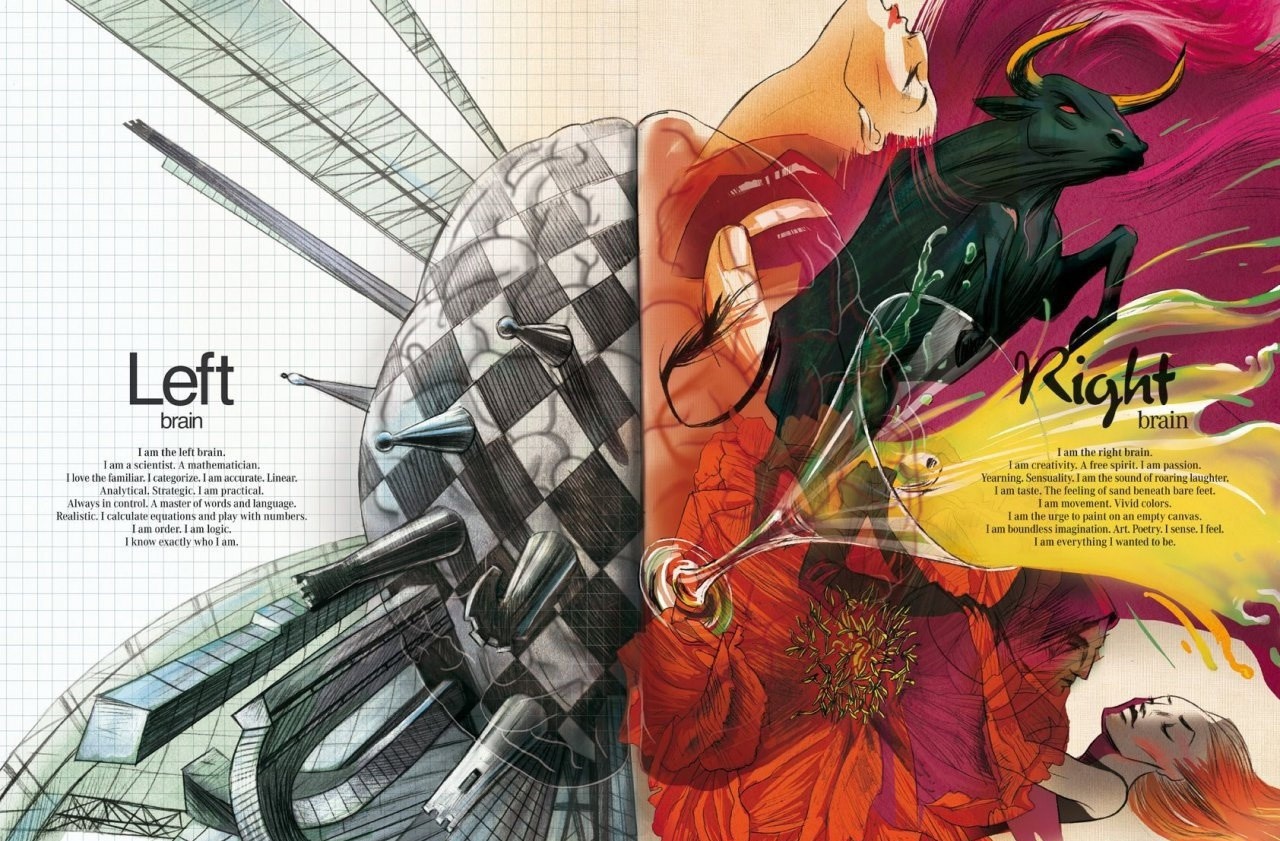

The difficult analog childhood of civilization

The very evolution of our species threw people into the embrace of an analog perception of the world around them, which in many respects predetermined the course of our technological development.

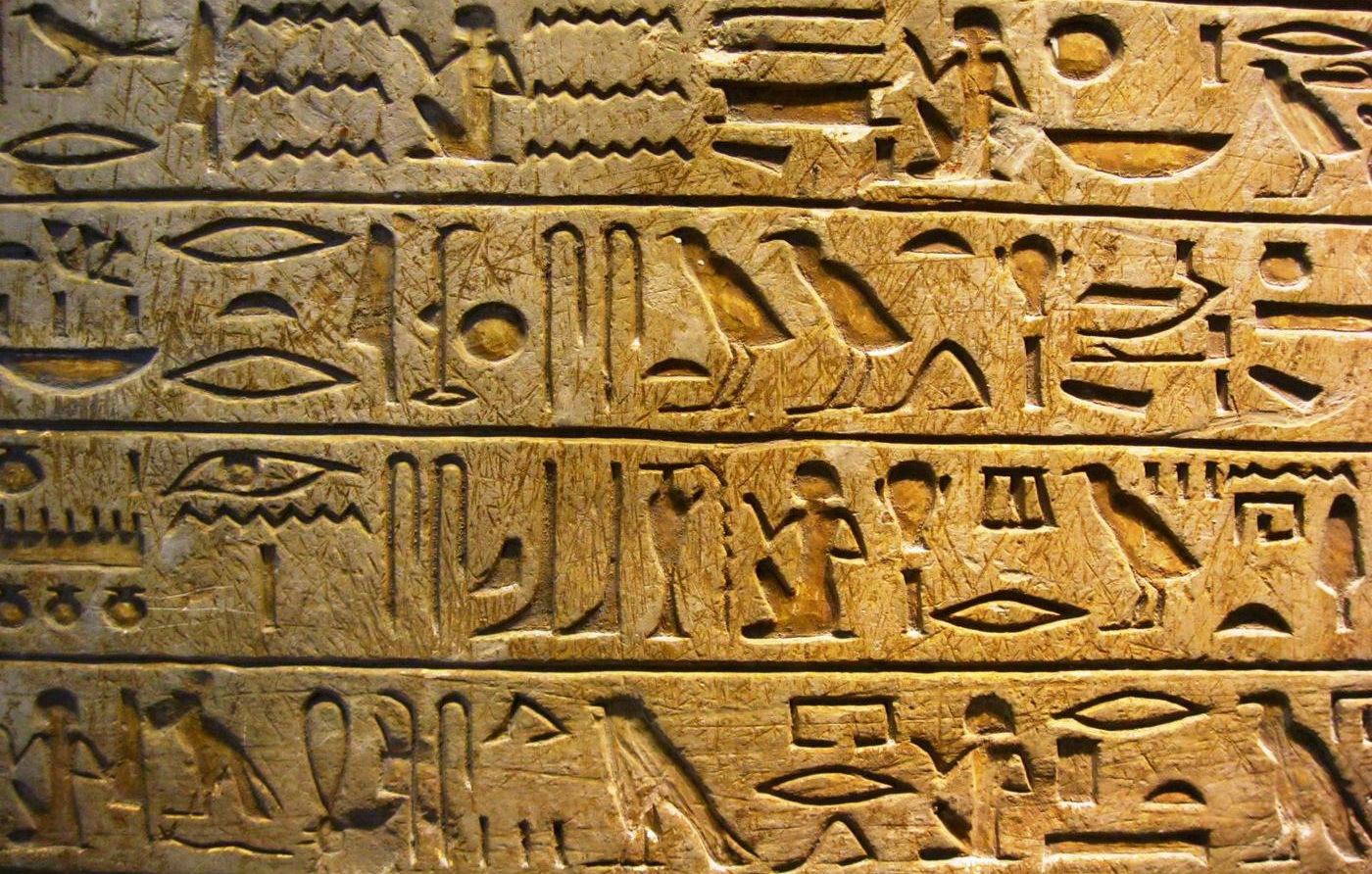

To modern eyes, the technologies that emerged at the very dawn of mankind look primitive and unsophisticated, and one may imagine life before the transition to the era of "numbers" as hard. But was it really so? On closer study, we find very simple technologies for storing and processing information at the stage of their appearance. The first information carriers created by humans were portable flat objects with images applied to them. Tablets and parchments made it possible not only to preserve information but also to process it more efficiently than ever before. At this stage, the main impetus to the development of mankind was the ability to concentrate huge amounts of information in specially designated places: repositories where it was systematized and carefully guarded.

The first known data centers, as we would call them now (until recently they were simply called libraries), emerged in the Middle East, between the Nile and the Euphrates, as early as the 2nd millennium BC. Throughout this time, the format of the carrier essentially determined how people interacted with it. It hardly matters whether it was a clay tablet, a papyrus scroll or a standard A4 sheet of paper: for thousands of years, all of them shared an analog method of writing data to the carrier and reading it back.

The period during which analog interaction between humans and their information stores dominated lasted almost to the present day. It gave way to the digital format only very recently, in the 21st century.

Having outlined the approximate temporal and semantic boundaries of the analog stage of our civilization, we can now return to the question posed at the beginning of this section. Were the data storage methods that we used until very recently, without knowing about the iPad, flash drives and optical discs, really so inefficient?

Let's do the math

Leaving aside the final stage of the decline of analog storage technologies over the last 30 years, we must note, sadly, that these technologies did not change significantly over thousands of years. The real breakthrough in this area came relatively long ago, at the end of the 19th century, but more on that below. Until the middle of that century, there were two main ways of recording data: writing and painting. The essential difference between them, regardless of the medium used, lies in the logic by which information is recorded.

Painting

Painting seems to be the simplest way to transfer data. It requires no additional knowledge either to create the data or to use it, and is thus essentially the native format of human perception. The more accurately the light reflected from surrounding objects onto the painter's retina is transferred, the more informative the image will be. Any imprecision in the technique or the materials the creator uses is noise that will later interfere with accurate reading of the information recorded this way.

How informative is an image, and what is the quantitative value of the information a picture carries? At this point in our understanding of graphical information transfer, we can finally turn to the first calculations. A basic computer science course will help us here.

Any bitmap image is discrete: it is simply a set of points. Knowing this, we can express the information it carries in units familiar to us. The presence or absence of a contrasting point is in effect the simplest binary code, 1/0, so each point carries 1 bit of information. With K as the number of points and I as the number of bits per point, an image of 100x100 points will contain:

V = K * I = 100 x 100 x 1 bit = 10,000 bits / 8 = 1250 bytes / 1024 = 1.22 KB

Keep in mind, though, that this calculation holds only for a monochrome image. For the far more common color images, the amount of information naturally increases significantly. Assume a color depth of 24 bits (photographic quality), which, I remind you, supports 16,777,216 colors. For the same number of points, we then get much more data:

V = K * I = 100 x 100 x 24 bits = 240,000 bits / 8 = 30,000 bytes / 1024 = 29.30 KB
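Both bitmap estimates follow the same formula, V = K * I, where K is the number of points and I is the number of bits per point. Below is a small Python sketch of that formula. The function name `bitmap_volume_kb` is hypothetical.

```python
def bitmap_volume_kb(width: int, height: int, bits_per_point: int) -> float:
    """Information volume V = K * I of a bitmap, in binary kilobytes.

    K = width * height is the number of points; I is the number of bits
    per point (1 for monochrome, 24 for photographic color).
    """
    bits = width * height * bits_per_point   # V in bits
    return bits / 8 / 1024                   # bits -> bytes -> KB


if __name__ == "__main__":
    print(round(bitmap_volume_kb(100, 100, 1), 2))    # 1.22 (monochrome)
    print(round(bitmap_volume_kb(100, 100, 24), 2))   # 29.3 (24-bit color)
    print(2 ** 24)                                    # 16777216 colors
```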

As is well known, a point has no size, so in theory any area allotted to an image can carry an infinitely large amount of information. In practice, there are well-defined limits, and the amount of data can be determined accordingly.

Numerous studies have found that a person with average visual acuity, at a comfortable reading distance of 30 cm, can distinguish about 188 lines per centimeter. In modern technology, this roughly corresponds to the standard 600 dpi scanning resolution of household scanners. Consequently, without any aids, the average person can read 188 x 188 points from one square centimeter of a surface, which is equivalent to:

For a monochrome image:

Vm = K * I = 188 x 188 x 1 bit = 35,344 bits / 8 = 4418 bytes / 1024 = 4.31 KB (per cm2)

For photographic image quality:

Vc = K * I = 188 x 188 x 24 bits = 848,256 bits / 8 = 106,032 bytes / 1024 = 103.55 KB (per cm2)
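The per-square-centimeter figures follow from squaring the linear resolution. This sketch uses the text's visual-acuity estimate of 188 distinguishable points per centimeter as an assumed constant. The name `kb_per_cm2` is hypothetical.

```python
# Assumption taken from the text: about 188 distinguishable points per cm
# at a 30 cm reading distance, i.e. 188 x 188 points per square centimeter.
POINTS_PER_CM = 188


def kb_per_cm2(bits_per_point: int, points_per_cm: int = POINTS_PER_CM) -> float:
    """Information readable by the unaided eye from 1 cm^2, in binary KB."""
    bits = points_per_cm * points_per_cm * bits_per_point
    return bits / 8 / 1024


if __name__ == "__main__":
    print(round(kb_per_cm2(1), 2))    # 4.31 (monochrome)
    print(round(kb_per_cm2(24), 2))   # 103.55 (24-bit color)
```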

For greater clarity, these calculations let us easily determine how much information an ordinary A4 sheet measuring 29.7 x 21 cm carries:

VA4 = L1 x L2 x Vm = 29.7 cm x 21 cm x 4.31 KB/cm2 = 2688.15 KB / 1024 = 2.63 MB (monochrome picture)

VA4 = L1 x L2 x Vc = 29.7 cm x 21 cm x 103.55 KB/cm2 = 64,584.14 KB / 1024 = 63.07 MB (color picture)
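The A4 estimate multiplies the sheet area by the per-square-centimeter volume. The sketch below recomputes it from the unrounded point counts. It assumes the same 188 points per centimeter, and the function name `a4_image_mb` is hypothetical.

```python
A4_WIDTH_CM = 21.0
A4_HEIGHT_CM = 29.7
POINTS_PER_CM = 188   # visual-acuity estimate used in the text


def a4_image_mb(bits_per_point: int) -> float:
    """Image information an A4 sheet can carry, in binary megabytes."""
    area_cm2 = A4_WIDTH_CM * A4_HEIGHT_CM                 # about 623.7 cm^2
    bits = area_cm2 * POINTS_PER_CM ** 2 * bits_per_point
    return bits / 8 / 1024 / 1024                         # bits -> MB


if __name__ == "__main__":
    print(round(a4_image_mb(1), 2))    # 2.63 (monochrome)
    print(round(a4_image_mb(24), 2))   # 63.07 (24-bit color)
```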

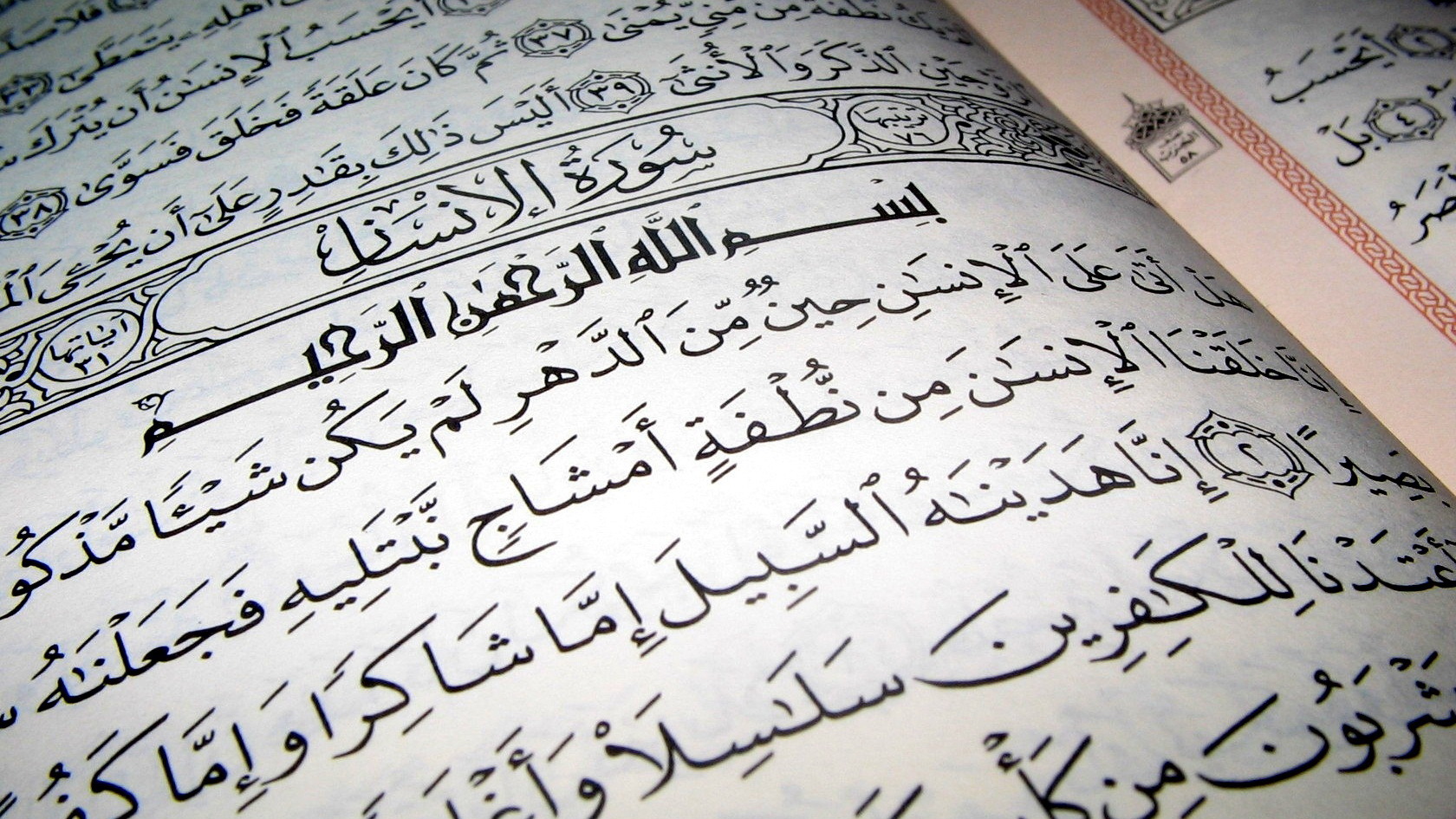

If things are more or less clear with visual art, writing is not so simple. The obvious differences in how text and pictures transmit information call for a different approach to assessing how informative each form is. Unlike an image, writing is a kind of standardized, coded data transfer. To a reader who does not know the code of the words and of the letters that form them, a text carries no information. Sumerian cuneiform, for example, tells most of us nothing, while the ancient images on the ruins of the same Babylon will be perceived quite correctly even by someone who knows nothing about the intricacies of the ancient world. It becomes obvious that the information content of a text depends very heavily on whose hands it falls into and on that particular person's ability to decipher it.

Nevertheless, even under these circumstances, which somewhat erode the validity of our approach, we can calculate quite unambiguously the amount of information placed in texts on various flat surfaces.

Written text can be thought of as a set of characters forming words and sentences. Using the binary coding system and the standard byte we already know, it is easily reduced to digital 1/0 form.

The familiar 8-bit byte can take up to 256 different numeric values, which should be enough to describe digitally any existing alphabet, along with digits and punctuation marks. It follows that any standard alphabetic character written on a surface takes 1 byte in digital form.

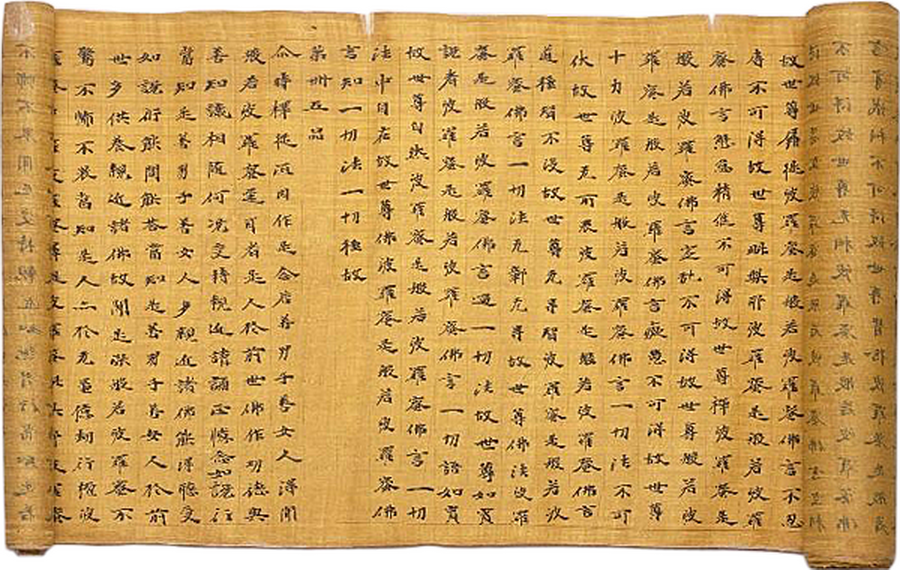

The situation is somewhat different with hieroglyphs, which have also been widely used for several thousand years. Because a single sign replaces a whole word, this encoding uses the available surface much more efficiently, in terms of information load, than alphabetic writing does. At the same time, the number of unique characters is many times larger, and each must be assigned its own unique combination of 1s and 0s. According to statistics, the most widespread hieroglyphic languages, Chinese and Japanese, actually use no more than 50,000 unique characters. Japanese uses even fewer: the country's Ministry of Education at one point designated only 1,850 characters for everyday use (the current jōyō kanji list has 2,136). In any case, the 256 combinations that fit into one byte are not enough here. "One byte is good, but two are better," as the modified folk saying goes. Two bytes give 65,536 numeric combinations, which is in principle sufficient to digitize an actively used language, so the vast majority of hieroglyphs are assigned two bytes.
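The choice between one and two bytes per character comes down to how many bits are needed to give every symbol a unique code. This sketch counts whole bytes with a fixed-width scheme. The function name `bytes_per_symbol` is hypothetical.

```python
import math


def bytes_per_symbol(alphabet_size: int) -> int:
    """Smallest whole number of bytes that gives every symbol a unique code."""
    bits_needed = math.ceil(math.log2(alphabet_size))
    return math.ceil(bits_needed / 8)


if __name__ == "__main__":
    print(2 ** 8, 2 ** 16)            # 256 65536
    print(bytes_per_symbol(256))      # 1 (letters, digits, punctuation)
    print(bytes_per_symbol(50_000))   # 2 (upper bound quoted for Chinese)
```

This is a deliberately simplified fixed-width model. Real text encodings such as UTF-8 use a variable number of bytes per character.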

Established practice shows that about 1,800 readable, unique characters fit on a standard A4 sheet. With some simple arithmetic, we can establish how much information, in digital terms, one standard typewritten page carries for alphabetic text and for the more informative hieroglyphic text:

V = n * I = 1800 * 1 byte = 1800 bytes / 1024 = 1.76 KB, or 2.89 bytes/cm2 (alphabetic text)

V = n * I = 1800 * 2 bytes = 3600 bytes / 1024 = 3.52 KB, or 5.77 bytes/cm2 (hieroglyphic text)
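Both page estimates divide the page's byte count by 1024 to get KB, and by the A4 area (29.7 x 21 cm) to get the density. A short sketch follows, assuming the text's figure of 1,800 characters per page. The name `page_text_volume` is hypothetical.

```python
A4_AREA_CM2 = 29.7 * 21.0   # about 623.7 cm^2
CHARS_PER_PAGE = 1800       # typewritten A4 page, figure from the text


def page_text_volume(bytes_per_char: int) -> tuple[float, float]:
    """Return (KB per page, bytes per cm^2) for one page of text."""
    total_bytes = CHARS_PER_PAGE * bytes_per_char
    return total_bytes / 1024, total_bytes / A4_AREA_CM2


if __name__ == "__main__":
    for label, size in (("alphabetic", 1), ("hieroglyphic", 2)):
        kb, density = page_text_volume(size)
        print(f"{label}: {kb:.2f} KB, {density:.2f} bytes/cm2")
```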

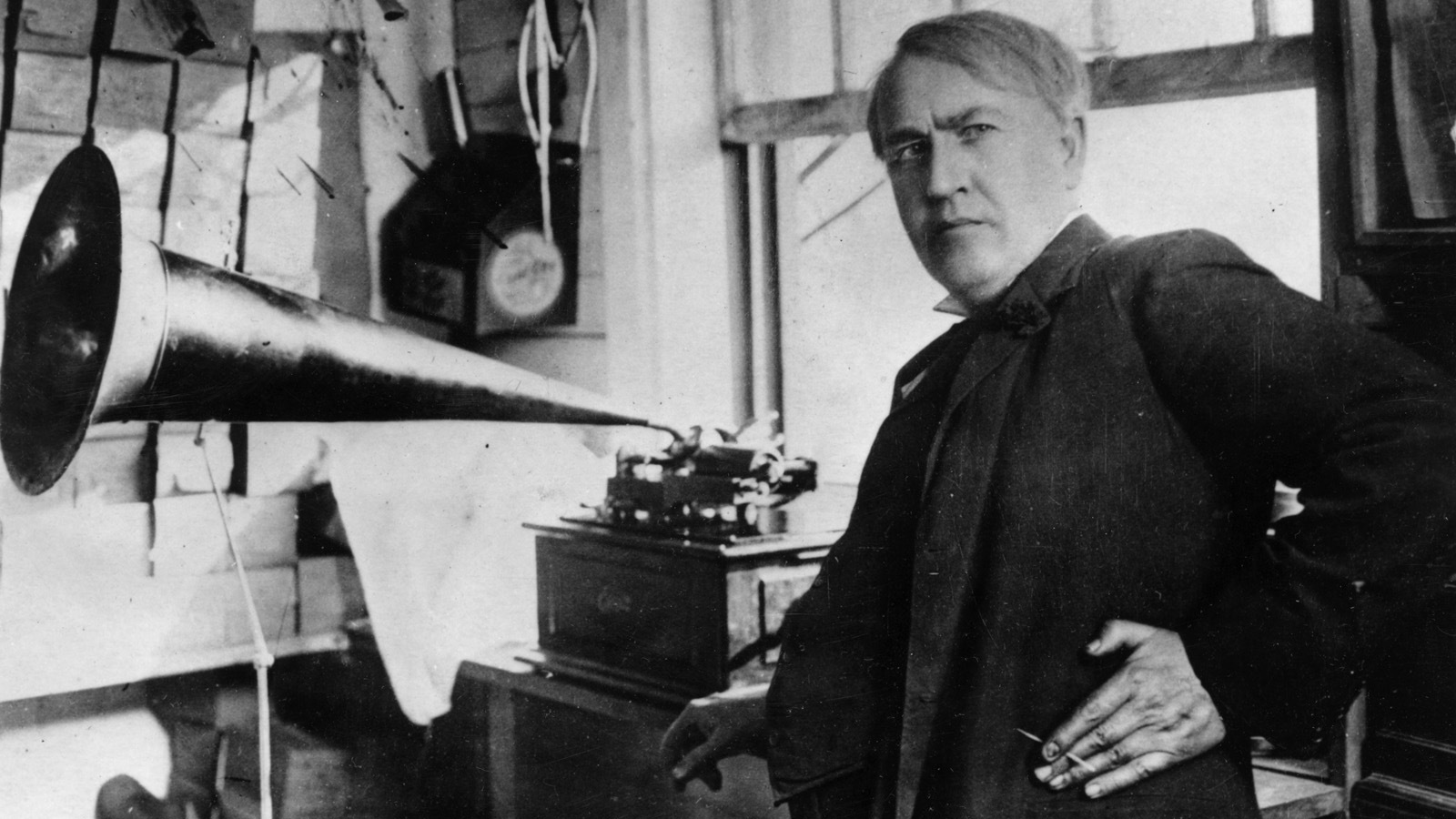

The 19th century was a turning point for the methods of recording and storing analog data. Revolutionary materials and recording techniques emerged that were to change the world of IT, and one of the main innovations was sound recording.

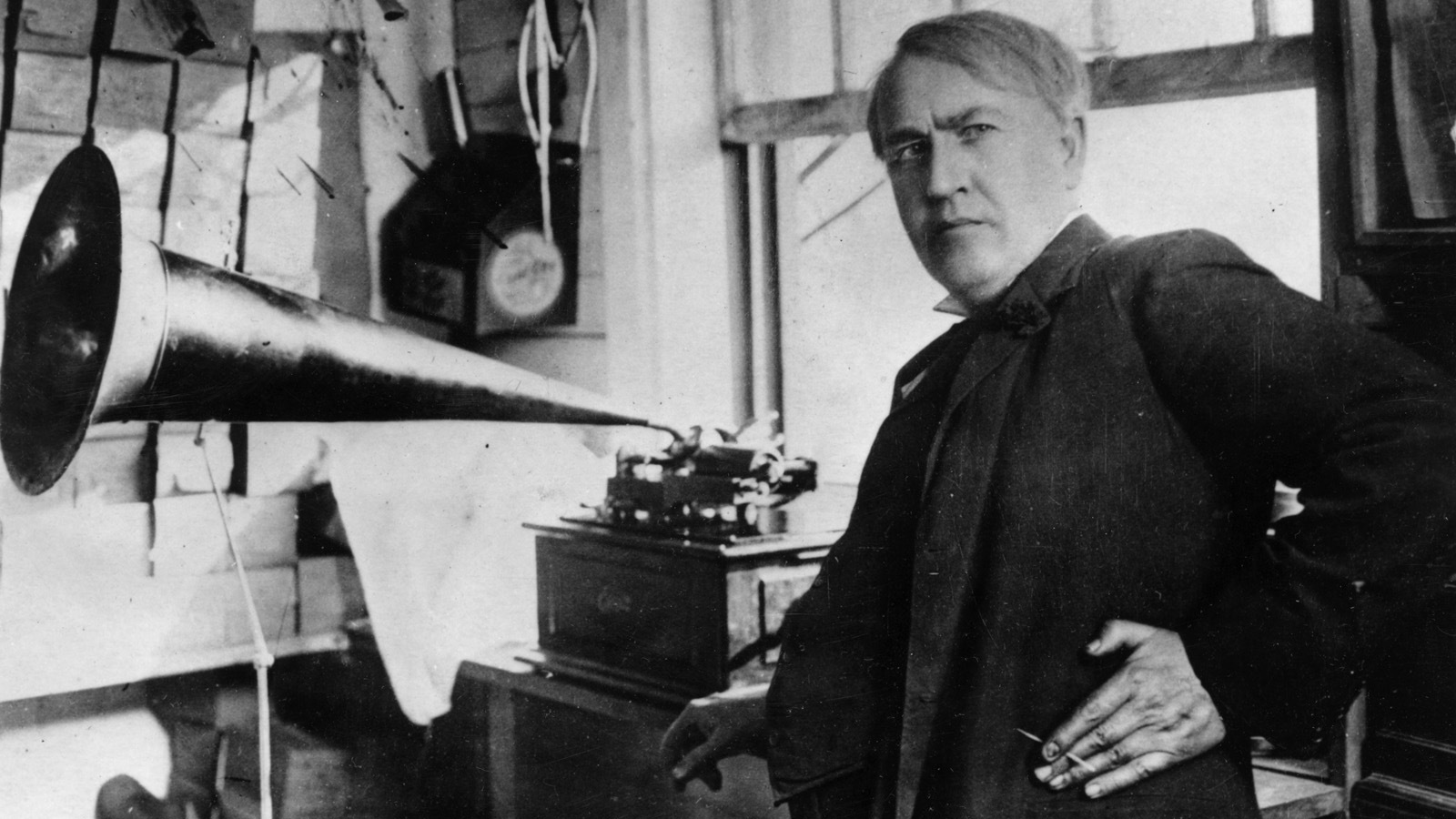

Thomas Edison's invention of the phonograph gave rise to the first cylinders with grooves cut into them. They were soon followed by discs, the first prototypes of optical discs.

Responding to sound vibrations, the phonograph's cutter tirelessly cut grooves into a surface, first of metal foil and a little later of polymer. Depending on the vibration picked up, the cutter carved a spiral groove of varying depth and width. This made it possible to record sound and then reproduce the engraved vibrations by purely mechanical means.

The presentation of Edison's first phonograph at the Paris Academy of Sciences caused an embarrassing incident. An elderly academician, barely hearing human speech reproduced by a mechanical device, jumped up and indignantly rushed at the presenter with his fists, accusing him of fraud. According to this respected member of the Academy, metal could never reproduce the melodiousness of the human voice, and the whole thing was the work of an ordinary ventriloquist. We all know, of course, that this was not the case. Moreover, in the 20th century people learned to store sound recordings in digital format. Let us now dive into some numbers that will make clear how much information fits on a regular vinyl disc (vinyl became the most characteristic and widespread material of this technology).

As with images, we will base our estimate on human perception. It is widely known that the human ear typically perceives sound vibrations from 20 to 20,000 Hz. On this basis, a sampling rate of 44,100 Hz was adopted for digital audio, because correct digitization requires a sampling rate of at least twice the highest frequency being recorded. Another important factor is the bit depth of each of the 44,100 samples taken per second. This parameter sets the number of bits that describe each sample. The more bits, the more precisely the amplitude of the sound wave at that instant is captured, and the better the digitized sound. The most common uncompressed format to date, used on audio CDs, combines a 16-bit depth with a sampling rate of 44.1 kHz. There are more "capacious" combinations, up to 32 bit / 192 kHz, which may come closer to the actual sound quality of a gramophone record, but we will use 16 bit / 44.1 kHz in our calculations. It was this combination that, in the 1980s and 1990s, dealt a crushing blow to the analog audio recording industry, becoming a full-fledged alternative to it.

Taking these values as the initial sound parameters, we can calculate, for a single channel, the digital equivalent of the analog information carried by a sound recording:

V = f * I = 44,100 Hz * 16 bits = 705,600 bits/s / 8 = 88,200 bytes/s / 1024 = 86.13 KB/s
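The audio data rate is simply samples per second times bits per sample. This sketch reproduces the single-channel figure. The `channels` parameter is an illustrative addition, not part of the text's calculation, and shows that stereo doubles the rate. The function name `pcm_kb_per_second` is hypothetical.

```python
def pcm_kb_per_second(sample_rate_hz: int, bit_depth: int, channels: int = 1) -> float:
    """Uncompressed PCM data rate in binary kilobytes per second."""
    bits_per_second = sample_rate_hz * bit_depth * channels
    return bits_per_second / 8 / 1024


if __name__ == "__main__":
    # Nyquist: sampling at 44.1 kHz can represent frequencies up to 22.05 kHz,
    # which covers the ~20 kHz upper limit of human hearing.
    print(44_100 / 2)                                   # 22050.0
    print(round(pcm_kb_per_second(44_100, 16), 2))      # 86.13 (one channel)
    print(round(pcm_kb_per_second(44_100, 16, 2), 2))   # 172.27 (stereo)
```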

This gives us the amount of information needed to encode 1 second of high-quality sound. Record sizes varied, as did the groove density on their surface, so the amount of information on specific records also differed significantly. On a 30 cm vinyl record, high-quality recording time was just under 30 minutes per side at most. That was at the limit of the material's capabilities, and a side usually did not exceed 20 to 22 minutes. It follows that one side of a vinyl record could hold:

Vv = V * t = 86.13 KB/s * 60 s * 30 = 155,034 KB / 1024 = 151.40 MB

In practice, however, it held no more than:

Vvf = 86.13 KB/s * 60 s * 22 = 113,691.6 KB / 1024 = 111.03 MB

The total area of such a record was:

S = π * r ^ 2 = 3.14 * 15 cm * 15 cm = 706.50 cm2

In practice, this amounts to 160.93 KB of information per square centimeter of the record (111.03 MB x 1024 / 706.50 cm2). Naturally, the ratio will not scale linearly with diameter, because the calculation takes the entire surface of the carrier rather than its effective recording area.
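The vinyl figures chain together the single-channel data rate, the playing time of one side and the disc area. This sketch assumes the text's rate of 86.13 KB/s and a 30 cm disc. The helper names `side_capacity_mb` and `record_area_cm2` are hypothetical.

```python
import math

KB_PER_SECOND = 86.13   # 16 bit / 44.1 kHz, one channel, from the text


def side_capacity_mb(minutes: float, kb_per_s: float = KB_PER_SECOND) -> float:
    """Digital equivalent of `minutes` of sound, in binary megabytes."""
    return kb_per_s * 60 * minutes / 1024


def record_area_cm2(diameter_cm: float) -> float:
    """Full surface area of one side of a disc, S = pi * r^2."""
    return math.pi * (diameter_cm / 2) ** 2


if __name__ == "__main__":
    print(round(side_capacity_mb(30), 2))   # 151.4 (theoretical limit)
    print(round(side_capacity_mb(22), 2))   # 111.03 (typical side)
    area = record_area_cm2(30)
    print(round(area, 2))                   # 706.86 with math.pi
    print(round(side_capacity_mb(22) * 1024 / area, 2))   # KB per cm^2
```

Because the sketch uses `math.pi` rather than 3.14, the area comes out at about 706.86 cm2. The density is correspondingly slightly lower, about 160.84 KB/cm2 instead of 160.93.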

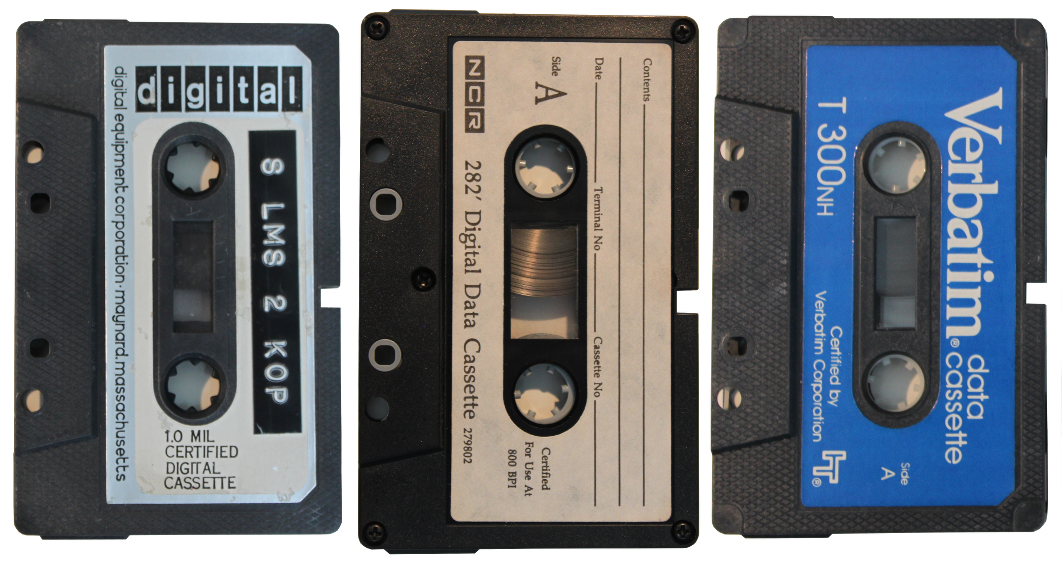

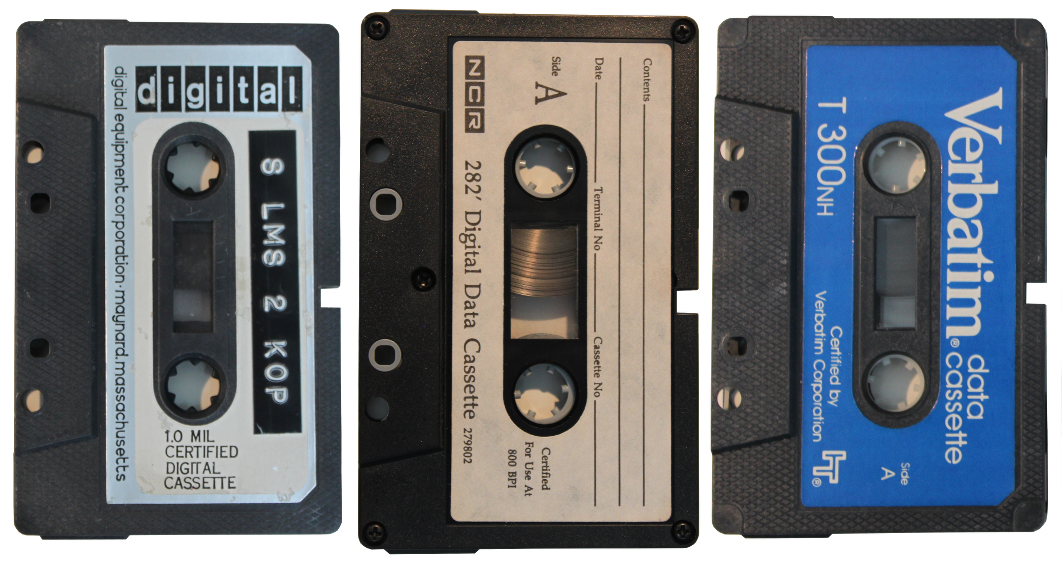

The last and perhaps the most efficient data carrier written and read by analog methods was magnetic tape. Tape is in fact the only carrier that has quite successfully survived the analog era.

The technology of recording information by magnetization was patented by the Danish physicist Valdemar Poulsen at the end of the 19th century, but unfortunately it did not become widespread at the time. It was first used on an industrial scale only in 1935, when German engineers built the first tape recorder on its basis. Over 80 years of active use, magnetic tape has undergone significant changes, with different materials and different tape geometries. All these improvements, however, rested on a single principle developed by Poulsen in 1898: the magnetic recording of vibrations.

One of the most widely used formats was tape consisting of a flexible base coated with a metal oxide (iron, chromium or cobalt). The tape used in household reel-to-reel recorders was usually one inch (2.54 cm) wide, with a thickness starting at 10 microns. Tape length varied considerably between reels, most often from several hundred meters to a thousand. For example, a reel 30 cm in diameter could hold about 1,000 meters of tape.

Sound quality depended on many parameters of both the tape and the playback equipment. In general, with the right combination of these parameters, studio-quality recordings could be made on magnetic tape. Higher sound quality was achieved by using more tape per unit of sound time: the more tape used to record a given moment of sound, the wider the range of frequencies that could be transferred to the carrier. For high-quality studio material, the tape speed was at least 38.1 cm/s. For everyday listening, a recording made at 19 cm/s gave a fairly full sound. As a result, a 1,000-meter reel could hold up to 45 minutes of studio sound, or up to 90 minutes of content acceptable to most consumers. Technical recordings and speeches did not depend much on the width of the frequency range on playback. For these, a tape speed of 1.19 cm/s allowed the same reel to hold as much as 24 hours of sound.
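The playing times follow from dividing the tape length by the tape speed. This sketch assumes the 1,000-meter reel mentioned above. The function name `playing_time_minutes` is hypothetical.

```python
def playing_time_minutes(tape_length_m: float, speed_cm_per_s: float) -> float:
    """How long a tape of the given length plays at the given speed."""
    seconds = tape_length_m * 100 / speed_cm_per_s   # metres -> cm, then / speed
    return seconds / 60


if __name__ == "__main__":
    for speed in (38.1, 19.0, 1.19):
        minutes = playing_time_minutes(1000, speed)
        print(f"{speed:>5} cm/s: {minutes:7.1f} min ({minutes / 60:.1f} h)")
```

The results come out at roughly 44 minutes, 88 minutes and 23 hours. These sit close to the rounded 45 minutes, 90 minutes and 24 hours quoted in the text.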

With a general idea of tape recording technology in the second half of the 20th century, we can translate the capacity of reel-to-reel media into data units more or less correctly, as we already did for records.

One square centimeter of this carrier holds:

Vo = V / (w * v) = 86.13 KB/s / (2.54 cm * 19 cm/s) = 1.78 KB/cm2, where w is the tape width and v is the tape speed

Total volume of a reel holding 1,000 meters of tape (about 90 minutes at 19 cm/s):

Vh = V * t = 86.13 KB/s * 60 s * 90 = 465,102 KB / 1024 = 454.20 MB
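The tape density treats one second of sound as spread over a strip whose width is the tape width and whose length is the distance the tape travels in that second. This sketch assumes the text's single-channel rate of 86.13 KB/s. The names `tape_density_kb_per_cm2` and `reel_capacity_mb` are hypothetical.

```python
KB_PER_SECOND = 86.13   # 16 bit / 44.1 kHz, one channel, from the text


def tape_density_kb_per_cm2(width_cm: float, speed_cm_per_s: float) -> float:
    """KB per cm^2: one second of sound over a width x (speed * 1 s) strip."""
    return KB_PER_SECOND / (width_cm * speed_cm_per_s)


def reel_capacity_mb(minutes: float) -> float:
    """Digital equivalent of a reel holding `minutes` of sound, in binary MB."""
    return KB_PER_SECOND * 60 * minutes / 1024


if __name__ == "__main__":
    print(round(tape_density_kb_per_cm2(2.54, 19), 2))   # 1.78
    print(round(reel_capacity_mb(90), 2))                # 454.2
```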

Keep in mind that the actual length of tape on a reel varied widely, depending primarily on the reel's diameter and the tape's thickness. Reels holding 500 to 750 meters of tape were very common because of their convenient size. For an ordinary music lover this meant about an hour of sound, quite enough to record an average music album.

The life of videocassettes, which used the same principle of recording an analog signal on magnetic tape, was fairly short but just as bright. By the time this technology came into industrial use, recording density on magnetic tape had increased dramatically. A 259.4-meter length of half-inch tape held 180 minutes of video of, by today's standards, very dubious quality. The first video formats delivered a picture equivalent to about 352x288, and the best examples reached 352x576. In terms of bit rate, the most advanced playback methods approached 3060 kbit/s, with the tape read at 2.339 cm/s. A standard three-hour cassette could hold about 1724.74 MB, which on the whole is not bad at all, and as a result videotape remained in demand until recently.

The appearance and widespread adoption of "numbers" (binary coding) belong entirely to the 20th century. The philosophy of binary code (1/0, yes/no) had hovered over humanity at different times and on different continents, sometimes taking the most amazing forms. It finally materialized in 1937. Claude Shannon, a student at the Massachusetts Institute of Technology, built on the work of the great British (Irish) mathematician George Boole and applied the principles of Boolean algebra to electrical circuits. This effectively became the starting point for cybernetics as we know it today.

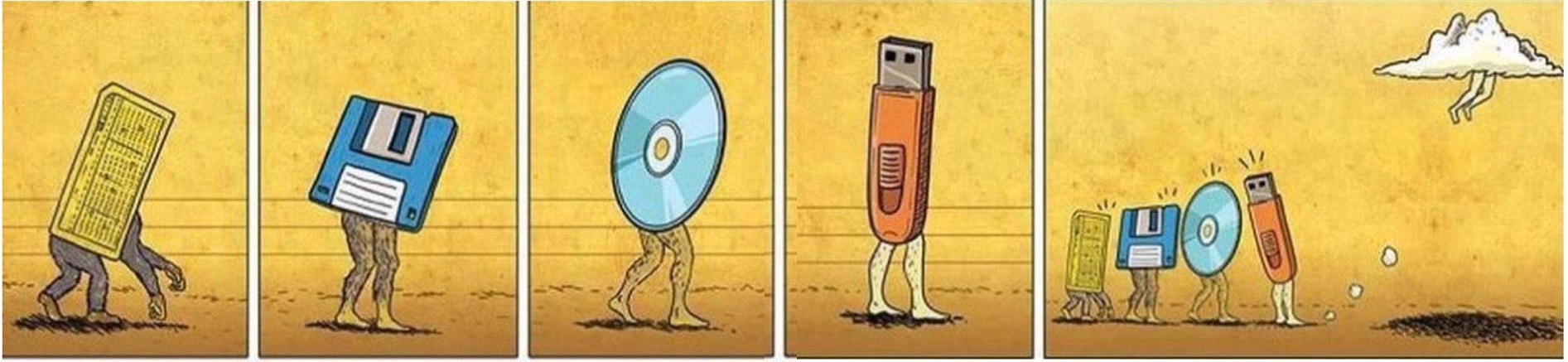

In less than a hundred years, both the hardware and the software of digital technology have undergone a huge number of major changes. The same is true of storage media: starting from extremely inefficient paper digital media, we have arrived at super-efficient solid-state storage. On the whole, the second half of the last century passed under the banner of experiments and the search for new forms of media, a period that can be succinctly called a general format mess.

Punch cards were perhaps the first step in the interaction between computers and humans. This form of communication lasted quite a long time, and even now the carrier can occasionally be found in certain research institutes scattered across the CIS.

One of the most common punch card formats was the IBM format, introduced back in 1928, which also became the basis for Soviet industry. Under the GOST standard, such a card measured 18.74 x 8.25 cm. A card held at most 80 bytes, so only 0.52 bytes fell on each cm2. At this rate, 1 gigabyte of data would take up about 20.75 hectares of punched cards, and one such gigabyte would weigh just under 22 tons.
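The punch-card figures follow from the card size and its 80-byte capacity. This sketch assumes a binary gigabyte (1024^3 bytes) and 1 ha = 10^8 cm2. The helper names are hypothetical.

```python
import math

CARD_WIDTH_CM = 18.74             # card dimensions quoted in the text
CARD_HEIGHT_CM = 8.25
BYTES_PER_CARD = 80               # one byte per column, 80 columns
CM2_PER_HECTARE = 10_000 * 10_000 # 1 ha = 10,000 m^2; 1 m^2 = 10,000 cm^2


def card_density_bytes_per_cm2() -> float:
    """Bytes stored per square centimeter of card."""
    return BYTES_PER_CARD / (CARD_WIDTH_CM * CARD_HEIGHT_CM)


def cards_needed(n_bytes: int) -> int:
    """Whole cards required to hold n_bytes."""
    return math.ceil(n_bytes / BYTES_PER_CARD)


def hectares_needed(n_bytes: int) -> float:
    """Total card area, laid side by side, in hectares."""
    return cards_needed(n_bytes) * CARD_WIDTH_CM * CARD_HEIGHT_CM / CM2_PER_HECTARE


if __name__ == "__main__":
    gib = 1024 ** 3
    print(round(card_density_bytes_per_cm2(), 2))   # 0.52
    print(cards_needed(gib))                        # 13421773
    print(round(hectares_needed(gib), 2))           # 20.75
```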

In 1951, the first data carriers based on pulse magnetization of tape were produced specifically for recording "numbers." This technology allowed up to 50 characters per centimeter of half-inch metal tape. The technology was later improved considerably, increasing the number of values per unit area many times over and reducing the amount of carrier material as far as possible.

According to Sony's latest statements, its nanotechnology development makes it possible to store about 23 gigabits (roughly 2.9 GB) of information on 1 cm2. Figures like these suggest that magnetic tape recording has not outlived itself and has rather bright prospects for further use.

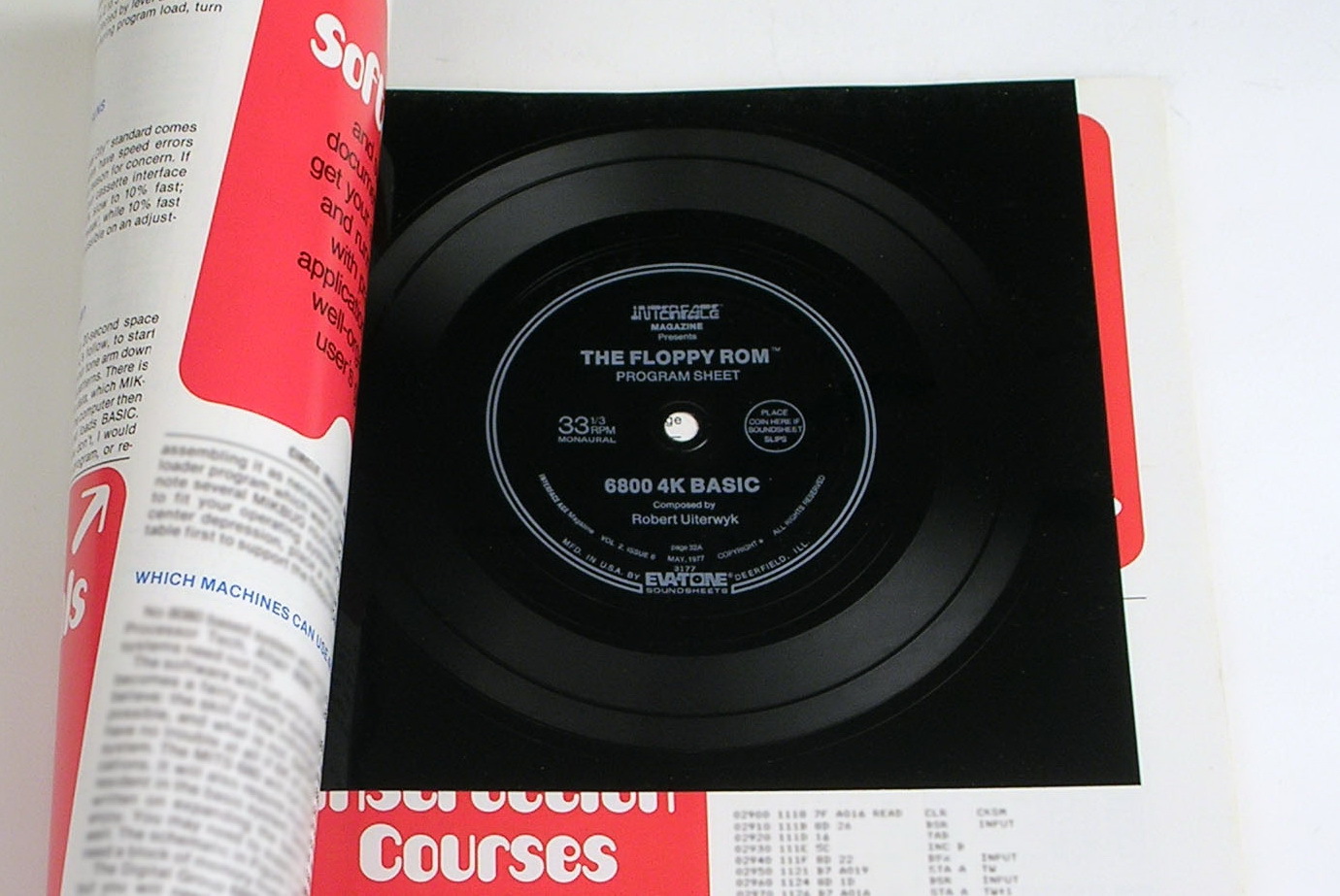

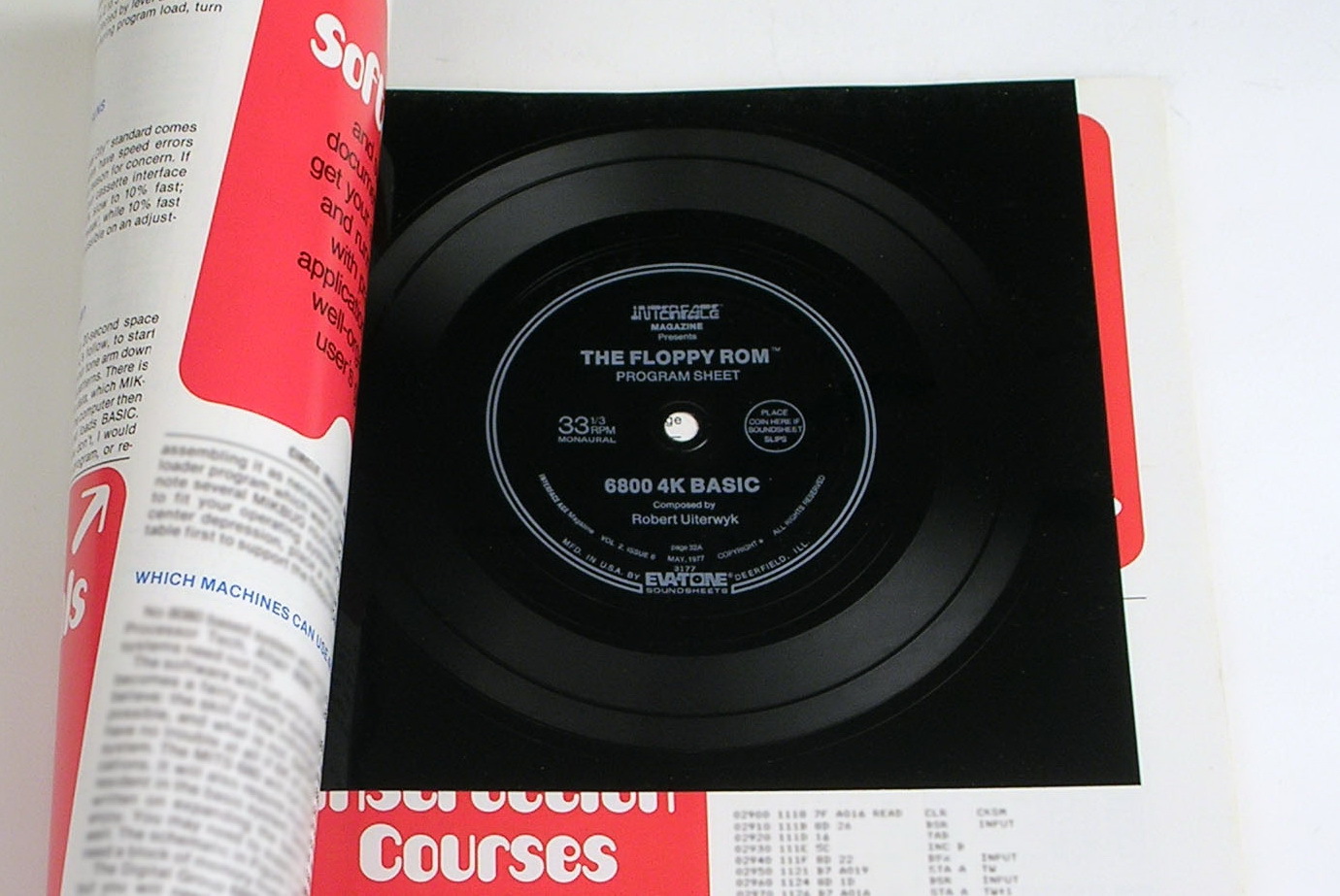

, , . 1976 Processor Technology, , . , . , « », . , , .

1977 , , 4 BASIC Motorola 6800. 6 .

, , , Floppy-Rom, 1978 , .

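In 1956, IBM introduced the IBM 350, the first hard disk drive. The unit weighed 971 kg and contained 50 disks, each 61 cm in diameter, yet it stored only about 3.5 MB.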

The recording technology itself was, so to speak, a derivative of gramophone records and magnetic tape. The disks inside the enclosure stored many magnetic pulses, which were written and read by a moving head. Like a gramophone tonearm, the head moved across the surface of each disk, reaching the required cell, which carried a magnetic vector of a particular direction.

This technology is still alive today and, moreover, is actively developing. Less than a year ago, Western Digital released the world's first 10 TB hard drive. It contains 7 platters, and its enclosure is filled with helium instead of air.

Optical discs owe their appearance to a partnership between two corporations, Sony and Philips. The optical disc was presented in 1982 as a convenient digital alternative to analog audio carriers. The first 12 cm discs held 74 minutes of 16 bit / 44.1 kHz stereo sound, which corresponds to about 650 MB of computer data. The 74 minutes was not chosen by chance. That was the length of Beethoven's Ninth Symphony, dearly loved by either one of Sony's co-owners or one of the Philips developers, and now it could fit entirely on one disc.

The principle of writing and reading information is very simple. Microscopic pits are formed (or burned, in recordable discs) on the mirror surface of the disc. When the disc is read optically, they are unambiguously interpreted as 1/0.

2015 . Blu-ray disc 111.7 , , «» .

All of this is the brainchild of one technology. The principle of recording data by storing an electric charge in an isolated region of a semiconductor structure was developed in the 1950s. For a long time, however, it found no practical use as the basis of a full-fledged data carrier. The main reason was the large size of transistors: even at the highest achievable density, they could not yield a product competitive on the data storage market. The technology was remembered and periodically revisited throughout the 1970s and 1980s.

80-, . Toshiba 1989 «Flash», «». , . , .

One example is the SDXC memory card format: a card measuring 24 x 32 mm and 2.1 mm thick can hold up to 2 TB.

, , . , 1 / 0 , , : «+», «-». 1 / 0. , , , – .

But things are not so bad for living beings. Unlike a computer, where processes run sequentially, the billions of neurons in the brain solve tasks in parallel, which brings a number of advantages. These low-frequency processors, working together, quite successfully allow humans, in particular, to interact with their environment.

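In 2009, researchers established that the human brain contains about 86 billion neurons, rather than the 100 billion that had commonly been assumed. Treating each neuron as one bit, we get: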

V = 86,000,000,000 bits / (1024 * 1024 * 1024) = 80.09 Gbit / 8 = 10.01 GB
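The brain estimate treats each of the roughly 86 billion neurons as a single bit. This is a deliberately crude simplification, implied by the calculation rather than a model of how neurons actually store information. The sketch below reproduces the arithmetic in binary units. The name `neurons_as_bits` is hypothetical.

```python
NEURONS = 86_000_000_000   # estimate quoted in the text


def neurons_as_bits(neurons: int) -> tuple[float, float]:
    """Treat each neuron as one bit; return (gigabits, gigabytes), binary units."""
    gigabits = neurons / 1024 ** 3
    return gigabits, gigabits / 8


if __name__ == "__main__":
    gbit, gbyte = neurons_as_bits(NEURONS)
    print(f"{gbit:.2f} Gbit, {gbyte:.2f} GB")   # 80.09 Gbit, 10.01 GB
```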

, , . – , . 25 , , 100…150 , , .

, . , 1990- , .

HDD 1980-, . , 74 50-60 ( ), , , .

, , , 10…20 . , . , , , . , . , , . , , , . , , . , — , , , – .

The progress of technical thought of a person has always been displayed most quickly in the field of information technology. Ways of collecting, storing, organizing, disseminating information are a red thread throughout the history of mankind. Breakthroughs, whether in the technical or human sciences, in one way or another, responded to IT. The civilizational way traveled by mankind is a series of successive steps to improve the methods of storing and transmitting data. In this article we will try to more thoroughly understand and analyze the main stages in the development of information carriers, to conduct their comparative analysis, ranging from the most primitive clay tablets to the latest successes in the creation of a machine-brain interface.

')

The task set is really not a comic one, look at what he raised, an intrigued reader will say. It would seem, how is it possible, while observing at least elementary correctness, to compare the technologies of the past and the present that differ significantly from each other? Contribute to the solution of this issue can the fact that the ways of perception of information by a man actually is not much and have changed. The forms of recording and the form of reading information by means of sounds, images and coded characters (letters) remained the same. In many ways, this reality has become a common denominator, so to speak, thanks to which it will be possible to make qualitative comparisons.

Methodology

For a start, it is worth reviving the common truths, with which we will continue to operate. The elementary storage medium of a binary system is “bit”, while the minimum unit of storage and computer processing of data is “byte” in this case in standard form, the latter includes 8 bits. More familiar to our hearing megabytes corresponds to: 1 MB = 1024 KB = 1048576 bytes.

The units listed are currently the universal measure of the amount of digital data placed on a particular carrier, so it will be very easy to use them in further work. Universality is that a group of bits, actually a cluster of numbers, a set of 1/0 values, can describe any material phenomenon and thereby digitize it. It doesn't matter if it is the wisest font, picture, melody, all these things consist of separate components, each of which is assigned its own unique digital code. Understanding this basic principle makes it possible for us to move forward.

Heavy, analog civilization childhood

The very evolutionary formation of our species has thrown people into the embrace of the analog perception of the space surrounding them, which in many respects predetermined the fate of our technological development.

At the first glance of modern man, the technologies that emerged at the very dawn of mankind are very primitive, not sophisticated in details, the very existence of mankind before the transition to the era of “numbers” can be presented, but was it really so difficult? After asking to study the question posed, we can see very simple technologies for storing and processing information at the stage of their appearance. The first of its kind carrier of information created by man, became portable areal objects with images printed on them. Tablets and parchments made it possible not only to preserve, but more efficiently than ever before, to process this information. At this stage, the opportunity to concentrate a huge amount of information in specially designated places - repositories, where this information was systematized and carefully guarded, became the main impetus to the development of all mankind.

The first known data centers, as we would call them now, until recently called libraries, emerged in the expanses of the Middle East, between the rivers Nile and Euphrates, about another II thousand years BC. The whole format of the information carrier all this time essentially determined the ways of interaction with it. And here it is not so important, an adobe slab this, a papyrus scroll, or a standard, A4 paper pulp and paper sheet, all these thousands of years have been closely combined by an analog method of entering and reading data from a carrier.

The analog way of interacting with stored information dominated almost to the present day, giving way to the digital format only very recently, in the 21st century.

Having outlined the approximate time frame and scope of the analog stage of our civilization, we can now return to the question posed at the beginning of this section: were the data storage methods we had, and used until very recently without knowing about the iPad, flash drives and optical discs, really so inefficient?

Let's do the calculation

If we set aside the final stage, the decline of analog data storage technologies over the last 30 years, we must sadly note that these technologies have by and large not changed significantly over thousands of years. A real breakthrough in this area came relatively long ago, at the end of the nineteenth century, but more on that below. Until the middle of that century, there were two main ways of recording data: writing and painting. The essential difference between them, regardless of the medium used, lies in the logic by which information is recorded.

Painting

Painting seems to be the easiest way to transfer data: it requires no additional knowledge either to create the data or to use it, and is in fact the format a person perceives natively. The more accurately the light reflected from surrounding objects onto the artist's retina is transferred, the more informative the image will be. Imperfections in technique and in the materials the creator uses are the noise that will later interfere with accurately reading information recorded this way.

How informative is an image, and how much information, in quantitative terms, does a picture carry? Having understood how information is conveyed graphically, we can finally move on to the first calculations. A basic computer science course will help us here.

Any bitmap image is discrete: it is simply a set of points. Knowing this, we can convert the information it carries into units we understand. Since the presence or absence of a contrasting point is effectively the simplest binary code, 1/0, each point carries 1 bit of information. The volume V of an image is therefore the number of points K multiplied by the information per point I, and a group of 100x100 points will contain:

V = K * I = 100 x 100 x 1 bit = 10,000 bits / 8 = 1,250 bytes / 1024 = 1.22 KB

Keep in mind, however, that this calculation is valid only for a monochrome image. For the far more common color images, the amount of information naturally increases significantly. If we assume a sufficient color depth of 24 bits (photographic quality), which, as a reminder, supports 16,777,216 colors, the same number of points carries much more data:

V = K * I = 100 x 100 x 24 bits = 240,000 bits / 8 = 30,000 bytes / 1024 = 29.30 KB
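The calculation generalizes to any raster size and color depth. A minimal Python sketch, assuming uncompressed storage with no file headers or padding, reproduces both figures:

```python
def bitmap_size_bytes(width: int, height: int, bits_per_point: int) -> float:
    """Raw (uncompressed) size of a width x height bitmap, in bytes."""
    total_bits = width * height * bits_per_point  # V = K * I
    return total_bits / 8


def to_kb(n_bytes: float) -> float:
    """Convert bytes to kilobytes using 1 KB = 1024 bytes."""
    return n_bytes / 1024


if __name__ == "__main__":
    mono = bitmap_size_bytes(100, 100, 1)    # 1 bit per point: present / absent
    color = bitmap_size_bytes(100, 100, 24)  # 24-bit color: 2**24 = 16,777,216 colors
    print(f"monochrome: {mono:.0f} bytes = {to_kb(mono):.2f} KB")
    print(f"24-bit color: {color:.0f} bytes = {to_kb(color):.2f} KB")
```

Real image files add headers and usually compression, so their sizes will differ from these raw figures.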

A point, as we know, has no size, so in theory any area allotted to an image could carry an infinitely large amount of information. In practice, however, sizes are well defined, and the amount of data can be determined accordingly.

Numerous studies have shown that a person with average visual acuity, at a comfortable reading distance of 30 cm, can distinguish about 188 lines per centimeter (about 478 per inch), which is comparable to the standard 600 dpi scanning resolution of household scanners. Consequently, from one square centimeter of a surface, without any aids, an average person can read 188 x 188 points, which is equivalent to:

For a monochrome image:

Vm = K * I = 188 x 188 x 1 bit = 35,344 bits / 8 = 4,418 bytes / 1024 = 4.31 KB

For photographic image quality:

Vc = K * I = 188 x 188 x 24 bits = 848,256 bits / 8 = 106,032 bytes / 1024 = 103.55 KB

For greater clarity, using these results we can easily determine how much information an ordinary A4 sheet measuring 29.7 x 21 cm carries:

VA4 = L1 x L2 x Vm = 29.7 cm x 21 cm x 4.31 KB/cm2 = 2,688.15 KB / 1024 = 2.62 MB (monochrome picture)

VA4 = L1 x L2 x Vc = 29.7 cm x 21 cm x 103.55 KB/cm2 = 64,584.14 KB / 1024 = 63.07 MB (color picture)
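Both steps, from resolving power to density and from density to a whole sheet, fit in a short Python sketch. It assumes, as the text does, 188 distinguishable points per centimeter in each direction and no unused margins on the sheet:

```python
LINES_PER_CM = 188          # points per cm the average eye resolves at 30 cm (from the text)
A4_AREA_CM2 = 29.7 * 21.0   # A4 sheet, 29.7 x 21 cm


def kb_per_cm2(bits_per_point: int, lines_per_cm: int = LINES_PER_CM) -> float:
    """Kilobytes an average reader can take in from one square centimetre."""
    bits = lines_per_cm * lines_per_cm * bits_per_point
    return bits / 8 / 1024


def a4_capacity_mb(bits_per_point: int) -> float:
    """Capacity of a whole A4 sheet in MB (1 MB = 1024 KB)."""
    return A4_AREA_CM2 * kb_per_cm2(bits_per_point) / 1024


if __name__ == "__main__":
    for label, depth in (("monochrome", 1), ("24-bit color", 24)):
        print(f"{label}: {kb_per_cm2(depth):.2f} KB/cm2, A4 sheet {a4_capacity_mb(depth):.2f} MB")
```

Without intermediate rounding, the monochrome sheet comes out at about 2.63 MB rather than 2.62 MB; the difference comes from rounding the density to 4.31 KB before multiplying.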

Writing

If visual art is more or less clear, writing is not so simple. The obvious differences in how text and images convey information call for a different approach to measuring how informative each form is. Unlike an image, writing is a standardized, encoded form of data transfer. Without knowing the code of the words and of the letters that form them, the informational load of, say, Sumerian cuneiform is zero for most of us, whereas the ancient images on the ruins of Babylon will be perceived quite correctly even by someone with no knowledge of the intricacies of the ancient world. Clearly, the information content of a text depends very strongly on whose hands it falls into and on whether that particular person can decipher it.

Nevertheless, even though these circumstances somewhat undermine the validity of our approach, we can quite unambiguously calculate the amount of information placed in texts on various flat surfaces.

Using the binary coding system and the standard byte already familiar to us, written text, which can be thought of as a set of letters forming words and sentences, is very easily reduced to digital 1/0 form.

The familiar 8-bit byte can take up to 256 different values, which should be enough to describe digitally any existing alphabet along with digits and punctuation marks. Hence any standard alphabetic character written on a surface takes 1 byte in digital form.

The situation is somewhat different with hieroglyphs, which have also been widely used for several thousand years. Since a single sign replaces a whole word, this encoding clearly uses the surface allotted to it more efficiently, in terms of information load, than alphabetic writing does. At the same time, the number of unique characters, each of which must be assigned its own unique combination of 1s and 0s, is many times larger. In the most widespread hieroglyphic languages, Chinese and Japanese, statistics show that no more than 50,000 unique characters are actually used; Japanese uses even fewer, and the official list of kanji for everyday use contains only about two thousand characters (1,850 in its original version). Either way, the 256 combinations that fit into one byte are not enough. As the adapted folk saying goes, one byte is good, but two are better: two bytes give 65,536 numeric combinations, which is in principle sufficient to digitize an actively used language, so the vast majority of hieroglyphs are assigned two bytes.
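The one-byte and two-byte figures follow from a simple rule: n distinct symbols need at least ⌈log₂ n⌉ bits. A short Python sketch, assuming fixed-width codes rounded up to whole 8-bit bytes (real encodings such as UTF-8 use variable widths, so this is a simplification):

```python
import math


def bits_needed(n_symbols: int) -> int:
    """Smallest number of bits that gives each of n_symbols its own 1/0 code."""
    return max(1, math.ceil(math.log2(n_symbols)))


def bytes_needed(n_symbols: int) -> int:
    """Whole 8-bit bytes per symbol, assuming a fixed-width code."""
    return math.ceil(bits_needed(n_symbols) / 8)


if __name__ == "__main__":
    # Symbol counts taken from the text above.
    for n in (256, 1_850, 50_000, 65_536):
        print(f"{n:>6} symbols -> {bits_needed(n):>2} bits -> {bytes_needed(n)} byte(s)")
```

Even the 1,850-character list needs 11 bits, so it already spills over into a second byte.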

Practice shows that about 1,800 legible characters fit on a standard A4 sheet. With some simple arithmetic, we can determine how much information, in digital terms, one standard typewritten page carries for alphabetic writing and for the more informative hieroglyphic writing:

V = n * I = 1800 * 1 byte = 1800 bytes / 1024 = 1.76 KB, or 2.89 bytes/cm2

V = n * I = 1800 * 2 bytes = 3600 bytes / 1024 = 3.52 KB, or 5.77 bytes/cm2
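The page figures can be checked with a few lines of Python. The sketch assumes, as the text does, 1,800 characters spread over the full 29.7 x 21 cm sheet:

```python
A4_AREA_CM2 = 29.7 * 21.0   # cm^2
CHARS_PER_PAGE = 1800       # legible characters per typewritten A4 page (figure from the text)


def page_capacity(bytes_per_char: int) -> tuple[float, float]:
    """Return (KB per page, bytes per cm^2) for a fixed number of bytes per character."""
    total_bytes = CHARS_PER_PAGE * bytes_per_char
    return total_bytes / 1024, total_bytes / A4_AREA_CM2


if __name__ == "__main__":
    for name, width in (("alphabetic", 1), ("hieroglyphic", 2)):
        kb, density = page_capacity(width)
        print(f"{name}: {kb:.2f} KB per page, {density:.2f} bytes/cm2")
```

Exact division gives 5.77 bytes/cm2 for the two-byte case, slightly below double the one-byte density after rounding.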

Industrial leap

The 19th century was a turning point for the methods of recording and storing analog data, thanks to the emergence of revolutionary materials and recording techniques that were to change the IT world. One of the main innovations was sound recording technology.

Thomas Edison's invention of the phonograph gave rise to the first cylinders with grooves on their surface, and soon after to discs, the first prototypes of optical discs.

Responding to sound vibrations, the phonograph's stylus tirelessly cut grooves into the surface of metal and, a little later, polymer. Depending on the vibration picked up, the stylus cut a spiral groove of varying depth and width into the material, which made it possible to record sound and to play the engraved vibrations back in a purely mechanical way.

At the presentation of Edison's first phonograph at the Paris Academy of Sciences there was an embarrassing incident: an elderly linguist, barely having heard human speech reproduced by a mechanical device, jumped up and indignantly rushed at the inventor with his fists, accusing him of fraud. According to this respected academician, metal could never reproduce the melodiousness of the human voice, and Edison was nothing but an ordinary ventriloquist. We all know, of course, that this was not the case. Moreover, in the twentieth century people learned to store sound recordings in digital form, and we will now dive into some numbers that make it clear how much information fits on an ordinary vinyl disc (vinyl became the most characteristic, mass-market material of this technology).

As with images, we will base our estimate on the limits of human perception. It is widely known that the human ear typically perceives sound vibrations from 20 to 20,000 Hz. Based on this, a sampling rate of 44,100 Hz was adopted for digital audio, because a correct conversion requires sampling at least twice as often as the highest frequency to be reproduced. Another important factor is the bit depth, the number of bits used to encode each of the 44,100 samples per second: the more bits per sample, the more precisely the amplitude of the wave at each moment is captured and the better the digitized sound. The most common uncompressed format to date, used on audio CDs, combines a 16-bit depth with a 44.1 kHz sampling rate. There are more "capacious" combinations, up to 32 bit / 192 kHz, which might come closer to the actual sound quality of a gramophone record, but we will use 16 bit / 44.1 kHz in our calculations. It was this combination that, in the 1980s and 1990s, dealt a crushing blow to the analog audio recording industry, becoming a full-fledged alternative to it.

Taking these values as the initial sound parameters, we can calculate the digital equivalent of the analog information carried by a record. The figure below is for a single channel; a stereo recording needs twice as much:

V = f * I = 44,100 Hz * 16 bits = 705,600 bits/s / 8 = 88,200 bytes/s / 1024 = 86.13 KB/s
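The same arithmetic as a small Python function. The text's figure corresponds to one channel; the `channels` parameter is our addition, showing that an ordinary stereo recording, as on an audio CD, needs twice the data rate:

```python
def pcm_rate_kb_per_s(sample_rate_hz: int, bit_depth: int, channels: int = 1) -> float:
    """Uncompressed PCM data rate in KB/s (1 KB = 1024 bytes)."""
    bits_per_second = sample_rate_hz * bit_depth * channels
    return bits_per_second / 8 / 1024


if __name__ == "__main__":
    print(f"mono:   {pcm_rate_kb_per_s(44_100, 16):.2f} KB/s")
    print(f"stereo: {pcm_rate_kb_per_s(44_100, 16, channels=2):.2f} KB/s")
```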

This gives the amount of information needed to encode one second of high-quality sound. Since records varied in size, as did the density of the grooves on their surface, the capacity of specific records also differed considerably. The maximum high-quality recording time on one side of a 30 cm vinyl record was just under 30 minutes, which pushed the limits of the material; in practice it usually did not exceed 20-22 minutes. It follows that one side of a vinyl record could hold:

Vv = V * t = 86.13 KB/s * 60 s * 30 = 155,034 KB / 1024 = 151.40 MB

But in practice it held no more than:

Vvf = V * t = 86.13 KB/s * 60 s * 22 = 113,691.6 KB / 1024 = 111.03 MB

The total area of such a plate was:

S = π * r ^ 2 = 3.14 * 15 cm * 15 cm = 706.50 cm2

This works out to about 160.93 KB of information per square centimeter of the record. Naturally, the ratio will not scale linearly for other diameters, since the calculation uses the area of the entire disc rather than the effective recording area.
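A Python sketch of the record calculation, assuming the text's one-channel rate of 86.13 KB/s and the whole face of a 30 cm disc as the area. π = 3.14 is used by default to match the text:

```python
RATE_KB_S = 86.13  # 16-bit / 44.1 kHz, one channel, as computed in the text


def side_capacity_mb(minutes: float, rate_kb_s: float = RATE_KB_S) -> float:
    """Digital equivalent of one record side, in MB (1 MB = 1024 KB)."""
    return rate_kb_s * 60 * minutes / 1024


def density_kb_per_cm2(minutes: float, diameter_cm: float,
                       rate_kb_s: float = RATE_KB_S, pi: float = 3.14) -> float:
    """KB per cm^2 over the whole disc face, not just the grooved area."""
    area_cm2 = pi * (diameter_cm / 2) ** 2
    return rate_kb_s * 60 * minutes / area_cm2


if __name__ == "__main__":
    print(f"30 min side: {side_capacity_mb(30):.2f} MB")
    print(f"22 min side: {side_capacity_mb(22):.2f} MB")
    print(f"density at 22 min: {density_kb_per_cm2(22, 30):.2f} KB/cm2")
```

With π = 3.14 the density comes out at 160.92 KB/cm2; the 160.93 in the text results from reusing the already rounded 111.03 MB. Passing `pi=math.pi` lowers it slightly, to about 160.84 KB/cm2.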

Magnetic tape

The last and perhaps the most efficient data carrier written and read by analog methods was magnetic tape. In fact, tape is the only carrier that has quite successfully outlived the analog era.

The technology of recording information by magnetization was patented by the Danish physicist Valdemar Poulsen at the end of the 19th century, but unfortunately it did not become widespread at the time. It was first used on an industrial scale only in 1935, when German engineers built the first tape recorder based on it. Over 80 years of active use, magnetic tape has changed considerably: different materials and different tape dimensions were tried, but all these improvements rested on the single principle Poulsen developed in 1898, the magnetic recording of oscillations.

One of the most widely used formats was a tape consisting of a flexible base coated with a metal oxide (iron, chromium or cobalt). The width of the tape used in household tape recorders was usually one inch (2.54 cm), its thickness started at 10 microns, and its length varied considerably from reel to reel, most often ranging from several hundred meters to a thousand. For example, a reel 30 cm in diameter could hold about 1,000 meters of tape.

Sound quality depended on many parameters of both the tape and the playback equipment, but in general, with the right combination of these parameters, magnetic tape could deliver high-quality studio recordings. Higher quality was achieved by using more tape per unit of sound time: naturally, the more tape spent on each moment of sound, the wider the range of frequencies that could be transferred to the carrier. For high-quality studio material, the recording speed was at least 38.1 cm/s. For everyday listening, a recording made at 19 cm/s gave a sufficiently full sound. As a result, a 1,000-meter reel could hold up to 45 minutes of studio sound, or up to 90 minutes of content acceptable to most consumers. For technical recordings or speech, where the width of the reproduced frequency range did not matter much, a tape speed of 1.19 cm/s allowed the same reel to record for as long as 24 hours.
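Playing time is simply tape length divided by tape speed. A minimal Python sketch for a 1,000-meter reel, like the 30 cm reel mentioned above, at the three speeds from the text:

```python
def playing_time_minutes(tape_length_m: float, speed_cm_s: float) -> float:
    """Recording time for tape_length_m metres of tape moving at speed_cm_s."""
    return tape_length_m * 100 / speed_cm_s / 60


if __name__ == "__main__":
    for label, speed in (("studio", 38.1), ("home", 19.0), ("speech", 1.19)):
        minutes = playing_time_minutes(1000, speed)
        print(f"{label:>6} @ {speed} cm/s: {minutes:.0f} min ({minutes / 60:.1f} h)")
```

The results, roughly 44 minutes, 88 minutes and 23 hours, agree with the rounded figures above.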

With a general idea of tape recording technology in the second half of the twentieth century, we can now more or less correctly convert the capacity of reel-to-reel media into familiar units of data volume, as we already did for records.

One square centimeter of this carrier, with w the tape width and v the tape speed, holds:

Vo = V / (w * v) = 86.13 KB/s / (2.54 cm * 19 cm/s) = 1.78 KB/cm2

Total capacity of a reel holding 1,000 meters of tape (90 minutes at 19 cm/s):

Vh = V * t = 86.13 KB/s * 60 s * 90 = 465,102 KB / 1024 = 454.20 MB
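Both tape figures in a short Python sketch, assuming the text's one-channel audio rate and its 2.54 cm tape width:

```python
RATE_KB_S = 86.13     # 16-bit / 44.1 kHz audio, one channel (from the text)
TAPE_WIDTH_CM = 2.54  # tape width used in the text
SPEED_CM_S = 19.0     # household recording speed, cm/s


def tape_density_kb_per_cm2(rate_kb_s: float = RATE_KB_S,
                            width_cm: float = TAPE_WIDTH_CM,
                            speed_cm_s: float = SPEED_CM_S) -> float:
    """Each second of sound occupies width x speed square centimetres of tape."""
    return rate_kb_s / (width_cm * speed_cm_s)


def reel_capacity_mb(minutes: float, rate_kb_s: float = RATE_KB_S) -> float:
    """Digital equivalent of `minutes` of recording, in MB (1 MB = 1024 KB)."""
    return rate_kb_s * 60 * minutes / 1024


if __name__ == "__main__":
    print(f"density: {tape_density_kb_per_cm2():.2f} KB/cm2")
    print(f"90-minute reel: {reel_capacity_mb(90):.2f} MB")
    # The studio speed spreads the same data over about twice as much tape.
    print(f"density at 38.1 cm/s: {tape_density_kb_per_cm2(speed_cm_s=38.1):.2f} KB/cm2")
```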

Keep in mind that the actual length of tape on a reel varied widely, depending primarily on the reel diameter and the tape thickness. Because of their convenient size, reels holding 500-750 meters of tape were very common; for an ordinary music lover this meant roughly an hour of sound, quite enough to record an average music album.

The era of the video cassette, which used the same principle of recording an analog signal on magnetic tape, was fairly short but just as bright. By the time this technology came into industrial use, the recording density of magnetic tape had increased dramatically. A 259.4-meter half-inch tape held 180 minutes of video of what, by today's standards, is very dubious quality. The first video formats produced a picture of 352x288, and the best examples reached 352x576. In terms of bitrate, the most advanced playback methods approached 3,060 kbit/s, with the tape read at 2.339 cm/s. A standard three-hour cassette could hold about 1,724.74 MB, which on the whole is not bad, and as a result videotape remained in demand until recently.

Magic digits

The appearance and widespread adoption of digital technology (binary coding) belongs entirely to the twentieth century. Although the philosophy of binary coding, 1/0, yes/no, had in one way or another circulated among people at different times and on different continents, sometimes taking the most amazing forms, it finally materialized in 1937. Claude Shannon, a student at the Massachusetts Institute of Technology, building on the work of the great British (Irish) mathematician George Boole, applied the principles of Boolean algebra to electrical circuits, which in fact became the starting point of cybernetics as we know it today.
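The core of this idea can be illustrated in a few lines: switches in series behave like logical AND, switches in parallel like logical OR. The Python sketch below is our own toy model, not Shannon's notation; it combines the two into a one-bit adder, the kind of arithmetic circuit that switching logic makes possible.

```python
from itertools import product


def series(a: bool, b: bool) -> bool:
    """Two switches in series conduct only if both are closed: AND."""
    return a and b


def parallel(a: bool, b: bool) -> bool:
    """Two switches in parallel conduct if either is closed: OR."""
    return a or b


def half_adder(a: bool, b: bool) -> tuple[bool, bool]:
    """Add two one-bit numbers using only AND, OR and NOT."""
    carry = series(a, b)
    # XOR = (a OR b) AND NOT (a AND b); NOT is plain Python `not` here,
    # which in relay hardware would correspond to a normally closed contact.
    total = series(parallel(a, b), not carry)
    return total, carry


if __name__ == "__main__":
    for a, b in product([False, True], repeat=2):
        s, c = half_adder(a, b)
        print(f"{int(a)} + {int(b)} -> carry {int(c)}, sum {int(s)}")
```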

In less than a hundred years, both the hardware and the software of digital technology have undergone a huge number of major changes, and the same is true of storage media. Starting from highly inefficient paper-based digital media, we have arrived at highly efficient solid-state storage. On the whole, the second half of the last century passed under the banner of experiments and the search for new forms of media, a period that can be summed up as a general format mess.

Punched cards

Punched cards were perhaps the first step in the interaction between computers and humans. This form of communication lasted quite a long time, and even today the carrier can occasionally be found in specialized research institutes scattered across the CIS.

One of the most common punched card formats was the IBM format introduced back in 1928, which became the basis for Soviet industry. Under GOST, such a card measured 18.74 x 8.25 cm and held at most 80 bytes, only 0.52 bytes per cm2. At that rate, 1 gigabyte of data would take up approximately 20.75 hectares of punched cards, and its weight would be just under 22 tons.
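The card figures are easy to check. A minimal Python sketch, assuming one byte per column on an 80-column card and a binary gigabyte of 1024³ bytes (the convention used throughout this article); card weight is not modeled:

```python
import math

CARD_WIDTH_CM, CARD_HEIGHT_CM = 18.74, 8.25  # GOST card size quoted in the text
BYTES_PER_CARD = 80                          # 80 columns, one character (byte) each
GIGABYTE = 1024 ** 3                         # binary gigabyte
CM2_PER_HECTARE = 10 ** 8                    # 1 ha = 10,000 m2 = 10^8 cm2


def punched_card_stats(n_bytes: int = GIGABYTE) -> dict:
    """Cards and area needed to store n_bytes on 80-byte punched cards."""
    card_area_cm2 = CARD_WIDTH_CM * CARD_HEIGHT_CM
    cards = math.ceil(n_bytes / BYTES_PER_CARD)
    return {
        "bytes_per_cm2": BYTES_PER_CARD / card_area_cm2,
        "cards": cards,
        "hectares": cards * card_area_cm2 / CM2_PER_HECTARE,
    }


if __name__ == "__main__":
    stats = punched_card_stats()
    print(f"{stats['bytes_per_cm2']:.2f} bytes/cm2")
    print(f"{stats['cards']:,} cards covering {stats['hectares']:.2f} ha")
```

This gives about 13.4 million cards covering roughly 20.75 hectares.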

Magnetic tapes

In 1951, the first data carriers based on the pulsed magnetization of tape were produced specifically for recording digital data. This technology allowed up to 50 characters to be written per centimeter of half-inch metal tape. Later the technology was greatly improved, multiplying the number of values stored per unit area many times over and reducing the amount of carrier material to a minimum.

According to Sony's latest statements, its nanotechnology development makes it possible to store 23 GB of information per square centimeter. Such figures suggest that magnetic tape recording has not outlived its usefulness and has rather bright prospects.

Gramophone records

, , . 1976 Processor Technology, , . , . , « », . , , .

1977 , , 4 BASIC Motorola 6800. 6 .

, , , Floppy-Rom, 1978 , .

IBM 1956 , IBM 350 . « » 971 . . 50 , 61 . , «» 3.5 .

The recording technology itself was, so to speak, a derivative of the gramophone record and magnetic tape. The disks inside the enclosure stored a multitude of magnetic pulses, which were written to them and read back by a moving head. Like a gramophone tonearm, at each moment the head moved across the surface of each disk to reach the required cell, which carried a magnetic vector of a particular orientation.

This technology is still alive today and, moreover, is actively developing. Less than a year ago, Western Digital released the world's first 10 TB hard drive, with 7 platters inside an enclosure filled with helium instead of air.

Optical discs

Optical discs owe their existence to a partnership between two corporations, Sony and Philips. The optical disc was introduced in 1982 as a convenient digital alternative to analog audio carriers. The first 12 cm discs held up to 650 MB, which at 16 bit / 44.1 kHz sound quality amounted to 74 minutes of audio, and this figure was not chosen by chance. Beethoven's Ninth Symphony, beloved either by one of Sony's co-owners or by one of the Philips developers, lasts 74 minutes, and now it could fit entirely on one disc.

The technology for writing and reading information is very simple: pits are burned into the mirrored surface of the disc, and when the disc is read optically, they are unambiguously registered as 1/0.

2015 . Blu-ray disc 111.7 , , «» .

, , SD

All of this is the product of a single technology. The principle of storing data by registering an electric charge in an isolated region of a semiconductor structure was developed in the 1950s. For a long time it found no practical use as the basis of a full-fledged data carrier, mainly because of the large size of transistors: even at the highest achievable density, they could not yield a product competitive on the storage market. The technology was remembered and periodically revived throughout the 1970s and 1980s.

80-, . Toshiba 1989 «Flash», «». , . , .

SDCX. 24 32 2.1 2 .

, , , , , .

. , .

– , . , , , , . , , , .

, , . , 1 / 0 , , : «+», «-». 1 / 0. , , , – .

But things are not so bleak for living beings: unlike a computer, where processes run sequentially, the billions of neurons in the brain solve problems in parallel, which brings a number of advantages. Millions of these low-frequency processors quite successfully allow humans, in particular, to interact with their environment.

, – , , , . , , . , , . , , «», , , , .

, : , . , , , ? , , .

2009 , -- – -, , , , 86 , , , 100 . , :

V = 86,000,000,000 bits / (1024 x 1024 x 1024) = 80.09 Gbit / 8 = 10.01 GB
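The estimate above treats every neuron as a single binary cell holding one bit, which is why 86 billion is divided by 1024³ and then by 8. A minimal Python sketch of that assumption; the one-bit-per-neuron model is a deliberate simplification, and `bits_per_neuron` is a hypothetical parameter added only for experimentation:

```python
NEURONS = 86_000_000_000  # estimated neuron count from the text


def neurons_as_storage(neurons: int = NEURONS, bits_per_neuron: int = 1) -> tuple[float, float]:
    """Return (gigabits, gigabytes) if each neuron stores bits_per_neuron bits."""
    gigabits = neurons * bits_per_neuron / 1024 ** 3
    return gigabits, gigabits / 8


if __name__ == "__main__":
    gbit, gbyte = neurons_as_storage()
    print(f"{gbit:.2f} Gbit = {gbyte:.2f} GB")
```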

? . . . .

Summary

, , . – , . 25 , , 100…150 , , .

, . , 1990- , .

HDD 1980-, . , 74 50-60 ( ), , , .

, , , 10…20 . , . , , , . , . , , . , , , . , , . , — , , , – .

Source: https://habr.com/ru/post/252187/
