Social Network Analysis: Spark GraphX

Hi, Habr!

Today we will get acquainted with the tasks of Social Network Analysis ( SNA ), as well as finish the review of the Apache Spark library for analyzing Big Data. Namely, as promised in previous articles ( one and two times ), we will consider one of the Apache Spark components designed to analyze graphs - GraphX . We will try to understand how distributed storage of graphs and calculations on them is implemented in this library. We will also show with concrete examples how this library can be used in practice: searching for spam, ranking search results, identifying communities in social networks, searching for opinion leaders is not a complete list of applications for analyzing graphs.

Let's start with the fact that we recall (for those who are not immersed in graph theory) the main objects with which we will work in this article and dive into algorithms and all the beautiful mathematics that stands behind it.

')

Probably the best thing in this section is to send the reader to watch the wonderful video lectures and brochures of my supervisor Andrei Mikhailovich Raigorodsky , for example, here - no one will tell about it better than anyone. It is highly recommended to also see this or that . And even better - sign up for the course Andrei Mikhailovich Coursera . Therefore, here we only give the basic concepts and we will not go into details.

A graph is a pair G = (V, E) - where, V is a set of vertices (say, sites on the Internet), and E is a set of edges connecting vertices (respectively, links between sites). Quite understandable structure, the analysis of which is engaged for many years. However, in practice, when analyzing real networks, the question arises - how is this graph constructed? Let's say a new site appears on the Internet - to whom it will link first of all, how many new links will appear on average, how well will this site be ranked in search results?

People have been involved in this task ( web graph device) almost since the advent of the Internet. During this time, many models were invented. Not so long ago, a generalized model was proposed in Yandex , and its properties were also investigated.

If the graph is already given to us, its properties, as well as further calculations on the graph, are completely defined. For example, you can examine how the degree of a particular vertex (the number of friends of a person in a social network) itself, or measure the distances between specific vertices (through how many handshakes 2 specified people in the network are familiar), calculate connectivity components (a group of people any 2 people are familiar) and more.

Classical algorithms are:

PageRank is a well-known algorithm for calculating the "authority" of a vertex in a graph proposed by Google in 1998 and used for a long time to rank search results

Search for (strongly) connected components — an algorithm for finding subsets of graph vertices such that between any two vertices from a particular subset there is a path, and there are no paths between the vertices of different subsets

Counting shortest paths in a graph — between any pair of vertices, between specific two vertices, on weighted graphs, and in other productions

As well as counting the number of triangles, clustering, the distribution of degrees of vertices, click search in the graph and much more. It is worth noting that most of the algorithms are iterative, and therefore, in this context, the GraphX library shows itself very well in view of the fact that it caches data in RAM. Next, we consider what opportunities this library provides us.

Immediately, we note that GraphX is far from being the first and not the only graph analysis tool (well-known tools are, for example, GraphLab — the current Dato.com or Pregel calculation model — whose API is partially used in GraphX), and also that the writing of this post was still under development and its possibilities are not so great. Nevertheless, practically for any tasks that arise in practice, GraphX somehow justifies its use.

GraphX does not yet have Python support, so we will write the code in Scala, assuming that SparkContext has already been created (in the code below, the variable sc ). Most of the code below is taken from the documentation and public materials. So, to begin with we will load all necessary libraries:

In Spark, the concept of a graph is implemented in the form of a so-called Property Graph - this is a multigraph with labels (additional information) on vertices and edges. A multigraph is a directed (edges have directions) graph in which multiple edges are allowed (there may be several edges between two vertices), loops (an edge from a vertex to itself). We immediately say that in the case of oriented graphs, such concepts as the incoming degree (the number of incoming edges) and the outgoing degree (the number of outgoing edges from the vertex) are defined. Let's look at examples how to build a specific graph.

You can build a graph using the Graph constructor, passing arrays of vertices and edges from a local program to the input (not forgetting to make RDD of them using the .parallelize () function):

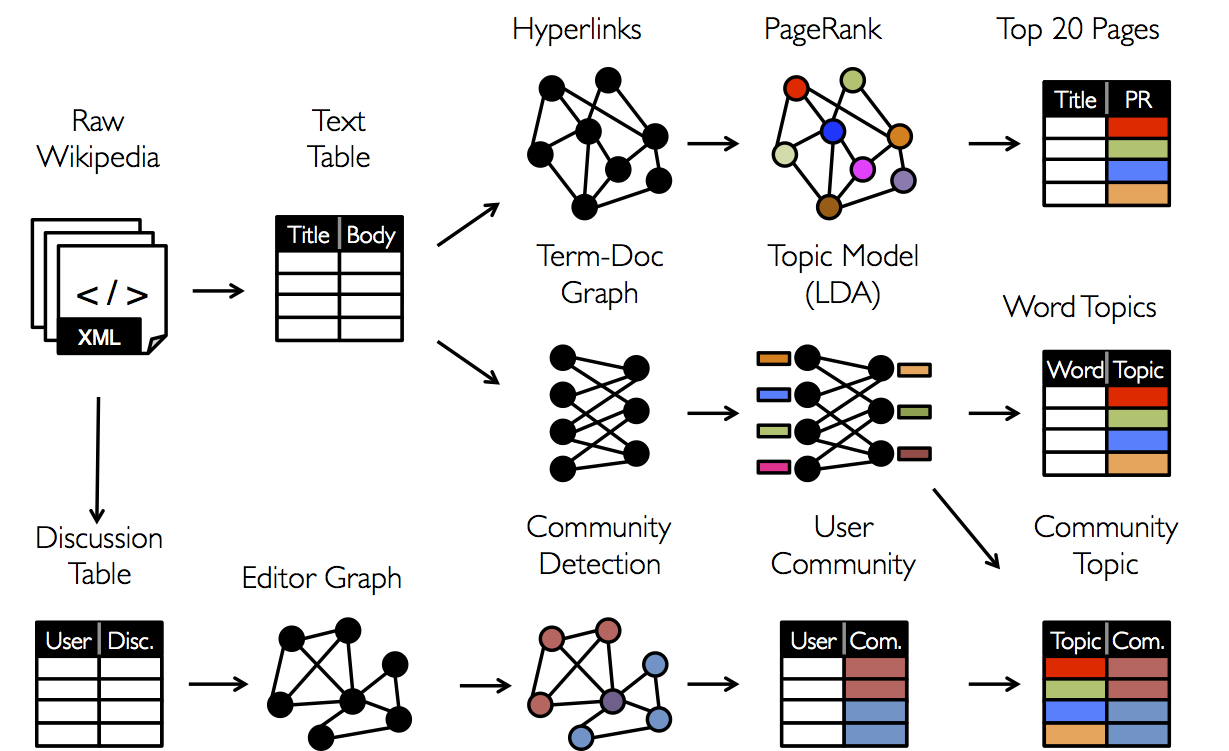

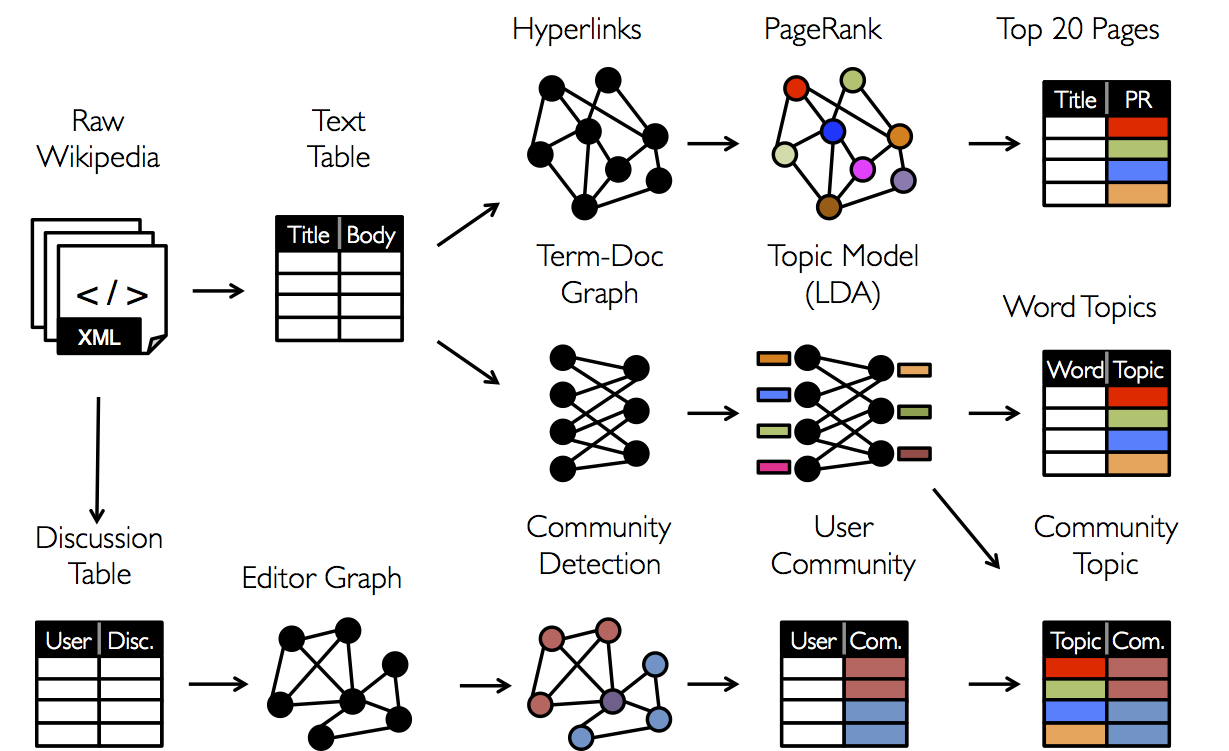

Or, if the vertices and edges must first be constructed on the basis of some data lying, say in HDFS , it is necessary to first process the initial data themselves (as is often the case with the .map () transformation). For example, if we have Wikipedia articles stored as (id, title) , as well as links between articles stored as pairs, then the graph is fairly easy to create - you need to separate id from title in the first case and construct the edges themselves (there is an Edge constructor for this) - in the second case, at the output, getting a list of vertices and edges that can be passed to the Graph constructor:

After constructing the graph for it, it is possible to calculate some characteristics and also run on it the algorithms, including those listed above. Until we continue, it is worth noting here that in Spark, in addition to the concept of vertices and edges, the concept of triplet is also implemented - this object, which in a sense extends the edge object a little ( Edge ) - in addition to information about the edge, information about tops adjacent to it.

The remarkable fact is that in most packages (and GraphX is not an exception) - after the construction of the graph, it becomes easy to do calculations on it, as well as to run standard algorithms. Indeed, the methods of calculation on graphs themselves are studied quite well, and in concrete applied problems the most difficult thing is to define the graph, namely, to determine what are vertices and what are edges (on what basis to carry them out). Below is a list of some of the available methods for the Graph object with comments:

It is worth noting that the current implementation of SparkX contains quite a few implemented algorithms, so it is still relevant to use the above known packages instead of Apache Spark, however, there is confidence that GraphX will be significantly improved in the future, and thanks to the possibility of caching data in RAM, likely to get enough popularity in graph problems. In conclusion, we give examples of practical problems where graph methods have to be applied.

As mentioned above, the main problem in practical tasks is no longer to run complex algorithms - namely, the correct definition of the graph, its correct preprocessing and the reduction of the problem to the classical solved one. Consider this in examples where we leave the reader a large number of questions for reflection:

Prediction of the appearance of a new edge (Link Prediction)

The task is fairly common - a sequence of edges is given, which are added to the graph up to a certain point. It is necessary to predict which edges will appear in the future in our graph. From the point of view of real life, this task is part of a recommendatory system — for example, predicting connections (“friendship”) between two users of social services. network. In this task, it is actually necessary to predict for each pair of arbitrarily selected vertices - what is the probability that there will be a rib between them in the future - here you just need to work with signs and with a description of the vertices. For example, as one of the signs, there may be an intersection of a set of friends, or a Jacquard measure. The reader is invited to think about possible metrics of similarity between the peaks and write your own variation in the comments).

Selection of communities in social networks

A task that is difficult to attribute to any specific tasks. Often it is considered in the context of the “clustering on graphs” task. There are a lot of methods to solve here - from simple selection of connected components (the algorithm was mentioned above) with a correctly defined subset of vertices, to sequential removal of edges from the graph until the necessary component remains. Here, again - it is very important to first understand which communities we want to allocate in the network, i.e. first work with the description of vertices and edges, and only then think how to select the communities themselves in the subgraph.

Shortest distances on graphs

This task is also a classic one and is implemented, for example, in the same Yandex. Metro or other services that help find the shortest paths in a certain graph — be it a graph of connections between points of a city or a graph of dating.

Or a task that a mobile operator can easily encounter, for example:

Identifying opinion leaders online

In order to promote, say, some new option, for example, the mobile Internet, in order to optimize the advertising budget, I would like to highlight people who are in some sense surrounded by attention. In our terms, these are the vertices of the graph that are more authoritative in the network - therefore, this task, if properly constructed, is reduced to the PageRank task.

So, we have considered typical applied graph analysis problems that may arise in practice, as well as one of the tools that can be used to solve them. This concludes the review of the Apache Spark library, and indeed the review of tools and in the future we will focus more on algorithms and specific tasks!

Today we will get acquainted with the tasks of Social Network Analysis ( SNA ), as well as finish the review of the Apache Spark library for analyzing Big Data. Namely, as promised in previous articles ( one and two times ), we will consider one of the Apache Spark components designed to analyze graphs - GraphX . We will try to understand how distributed storage of graphs and calculations on them is implemented in this library. We will also show with concrete examples how this library can be used in practice: searching for spam, ranking search results, identifying communities in social networks, searching for opinion leaders is not a complete list of applications for analyzing graphs.

Let's start with the fact that we recall (for those who are not immersed in graph theory) the main objects with which we will work in this article and dive into algorithms and all the beautiful mathematics that stands behind it.

')

Graph theory, random and web graphs

Probably the best thing in this section is to send the reader to watch the wonderful video lectures and brochures of my supervisor Andrei Mikhailovich Raigorodsky , for example, here - no one will tell about it better than anyone. It is highly recommended to also see this or that . And even better - sign up for the course Andrei Mikhailovich Coursera . Therefore, here we only give the basic concepts and we will not go into details.

A graph is a pair G = (V, E) - where, V is a set of vertices (say, sites on the Internet), and E is a set of edges connecting vertices (respectively, links between sites). Quite understandable structure, the analysis of which is engaged for many years. However, in practice, when analyzing real networks, the question arises - how is this graph constructed? Let's say a new site appears on the Internet - to whom it will link first of all, how many new links will appear on average, how well will this site be ranked in search results?

People have been involved in this task ( web graph device) almost since the advent of the Internet. During this time, many models were invented. Not so long ago, a generalized model was proposed in Yandex , and its properties were also investigated.

If the graph is already given to us, its properties, as well as further calculations on the graph, are completely defined. For example, you can examine how the degree of a particular vertex (the number of friends of a person in a social network) itself, or measure the distances between specific vertices (through how many handshakes 2 specified people in the network are familiar), calculate connectivity components (a group of people any 2 people are familiar) and more.

Classical algorithms are:

PageRank is a well-known algorithm for calculating the "authority" of a vertex in a graph proposed by Google in 1998 and used for a long time to rank search results

Search for (strongly) connected components — an algorithm for finding subsets of graph vertices such that between any two vertices from a particular subset there is a path, and there are no paths between the vertices of different subsets

Counting shortest paths in a graph — between any pair of vertices, between specific two vertices, on weighted graphs, and in other productions

As well as counting the number of triangles, clustering, the distribution of degrees of vertices, click search in the graph and much more. It is worth noting that most of the algorithms are iterative, and therefore, in this context, the GraphX library shows itself very well in view of the fact that it caches data in RAM. Next, we consider what opportunities this library provides us.

Spark GraphX

Immediately, we note that GraphX is far from being the first and not the only graph analysis tool (well-known tools are, for example, GraphLab — the current Dato.com or Pregel calculation model — whose API is partially used in GraphX), and also that the writing of this post was still under development and its possibilities are not so great. Nevertheless, practically for any tasks that arise in practice, GraphX somehow justifies its use.

GraphX does not yet have Python support, so we will write the code in Scala, assuming that SparkContext has already been created (in the code below, the variable sc ). Most of the code below is taken from the documentation and public materials. So, to begin with we will load all necessary libraries:

import org.apache.spark.graphx._ import org.apache.spark.rdd.RDD In Spark, the concept of a graph is implemented in the form of a so-called Property Graph - this is a multigraph with labels (additional information) on vertices and edges. A multigraph is a directed (edges have directions) graph in which multiple edges are allowed (there may be several edges between two vertices), loops (an edge from a vertex to itself). We immediately say that in the case of oriented graphs, such concepts as the incoming degree (the number of incoming edges) and the outgoing degree (the number of outgoing edges from the vertex) are defined. Let's look at examples how to build a specific graph.

Graphing

You can build a graph using the Graph constructor, passing arrays of vertices and edges from a local program to the input (not forgetting to make RDD of them using the .parallelize () function):

val vertexArray = Array( (1L, ("Alice", 28)), (2L, ("Bob", 27)), (3L, ("Charlie", 65)), (4L, ("David", 42)), (5L, ("Ed", 55)), (6L, ("Fran", 50)) ) val edgeArray = Array( Edge(2L, 1L, 7), Edge(2L, 4L, 2), Edge(3L, 2L, 4), Edge(3L, 6L, 3), Edge(4L, 1L, 1), Edge(5L, 2L, 2), Edge(5L, 3L, 8), Edge(5L, 6L, 3) ) val vertexRDD = sc.parallelize(vertexArray) val edgeRDD = sc.parallelize(edgeArray) val graph = Graph(vertexRDD, edgeRDD) Or, if the vertices and edges must first be constructed on the basis of some data lying, say in HDFS , it is necessary to first process the initial data themselves (as is often the case with the .map () transformation). For example, if we have Wikipedia articles stored as (id, title) , as well as links between articles stored as pairs, then the graph is fairly easy to create - you need to separate id from title in the first case and construct the edges themselves (there is an Edge constructor for this) - in the second case, at the output, getting a list of vertices and edges that can be passed to the Graph constructor:

val articles = sc.textFile("articles.txt") val links = sc.textFile("links.txt") val vertices = articles.map { line => val fields = line.split('\t') (fields(0).toLong, fields(1)) } val edges = links.map { line => val fields = line.split('\t') Edge(fields(0).toLong, fields(1).toLong, 0) } val graph = Graph(vertices, edges, "").cache() After constructing the graph for it, it is possible to calculate some characteristics and also run on it the algorithms, including those listed above. Until we continue, it is worth noting here that in Spark, in addition to the concept of vertices and edges, the concept of triplet is also implemented - this object, which in a sense extends the edge object a little ( Edge ) - in addition to information about the edge, information about tops adjacent to it.

Calculations on graphs

The remarkable fact is that in most packages (and GraphX is not an exception) - after the construction of the graph, it becomes easy to do calculations on it, as well as to run standard algorithms. Indeed, the methods of calculation on graphs themselves are studied quite well, and in concrete applied problems the most difficult thing is to define the graph, namely, to determine what are vertices and what are edges (on what basis to carry them out). Below is a list of some of the available methods for the Graph object with comments:

class Graph[VD, ED] { // val numEdges: Long // val numVertices: Long // val inDegrees: VertexRDD[Int] // val outDegrees: VertexRDD[Int] // val degrees: VertexRDD[Int] // // , val vertices: VertexRDD[VD] val edges: EdgeRDD[ED] val triplets: RDD[EdgeTriplet[VD, ED]] // ( ) def mapVertices[VD2](map: (VertexID, VD) => VD2): Graph[VD2, ED] def mapEdges[ED2](map: Edge[ED] => ED2): Graph[VD, ED2] def mapEdges[ED2](map: (PartitionID, Iterator[Edge[ED]]) => Iterator[ED2]): Graph[VD, ED2] def mapTriplets[ED2](map: EdgeTriplet[VD, ED] => ED2): Graph[VD, ED2] // def reverse: Graph[VD, ED] // - def subgraph( epred: EdgeTriplet[VD,ED] => Boolean = (x => true), vpred: (VertexID, VD) => Boolean = ((v, d) => true)) : Graph[VD, ED] // , def groupEdges(merge: (ED, ED) => ED): Graph[VD, ED] // // def pageRank(tol: Double, resetProb: Double = 0.15): Graph[Double, Double] // PageRank def connectedComponents(): Graph[VertexID, ED] // def triangleCount(): Graph[Int, ED] // def stronglyConnectedComponents(numIter: Int): Graph[VertexID, ED] // } It is worth noting that the current implementation of SparkX contains quite a few implemented algorithms, so it is still relevant to use the above known packages instead of Apache Spark, however, there is confidence that GraphX will be significantly improved in the future, and thanks to the possibility of caching data in RAM, likely to get enough popularity in graph problems. In conclusion, we give examples of practical problems where graph methods have to be applied.

Practical tasks

As mentioned above, the main problem in practical tasks is no longer to run complex algorithms - namely, the correct definition of the graph, its correct preprocessing and the reduction of the problem to the classical solved one. Consider this in examples where we leave the reader a large number of questions for reflection:

Prediction of the appearance of a new edge (Link Prediction)

The task is fairly common - a sequence of edges is given, which are added to the graph up to a certain point. It is necessary to predict which edges will appear in the future in our graph. From the point of view of real life, this task is part of a recommendatory system — for example, predicting connections (“friendship”) between two users of social services. network. In this task, it is actually necessary to predict for each pair of arbitrarily selected vertices - what is the probability that there will be a rib between them in the future - here you just need to work with signs and with a description of the vertices. For example, as one of the signs, there may be an intersection of a set of friends, or a Jacquard measure. The reader is invited to think about possible metrics of similarity between the peaks and write your own variation in the comments).

Selection of communities in social networks

A task that is difficult to attribute to any specific tasks. Often it is considered in the context of the “clustering on graphs” task. There are a lot of methods to solve here - from simple selection of connected components (the algorithm was mentioned above) with a correctly defined subset of vertices, to sequential removal of edges from the graph until the necessary component remains. Here, again - it is very important to first understand which communities we want to allocate in the network, i.e. first work with the description of vertices and edges, and only then think how to select the communities themselves in the subgraph.

Shortest distances on graphs

This task is also a classic one and is implemented, for example, in the same Yandex. Metro or other services that help find the shortest paths in a certain graph — be it a graph of connections between points of a city or a graph of dating.

Or a task that a mobile operator can easily encounter, for example:

Identifying opinion leaders online

In order to promote, say, some new option, for example, the mobile Internet, in order to optimize the advertising budget, I would like to highlight people who are in some sense surrounded by attention. In our terms, these are the vertices of the graph that are more authoritative in the network - therefore, this task, if properly constructed, is reduced to the PageRank task.

So, we have considered typical applied graph analysis problems that may arise in practice, as well as one of the tools that can be used to solve them. This concludes the review of the Apache Spark library, and indeed the review of tools and in the future we will focus more on algorithms and specific tasks!

Source: https://habr.com/ru/post/252157/

All Articles