The geolocation saga and how to make a geo-web service on NGINX without a database engine and without programming

Today we revisit a rather old topic, geolocation by IP address, and a newer one: fast web services built without “programming languages”. We are also publishing a ready-made container image so that you can deploy such a web service in 5 minutes.

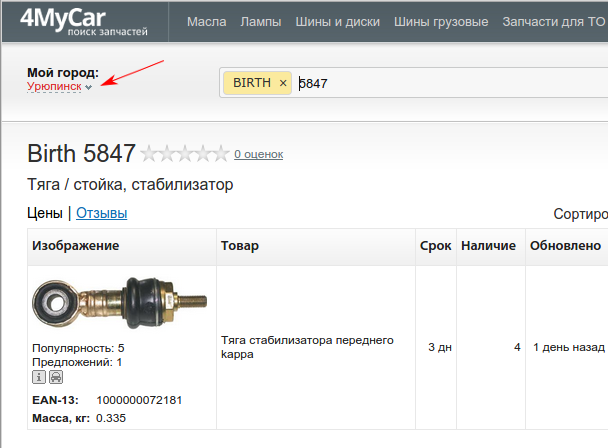

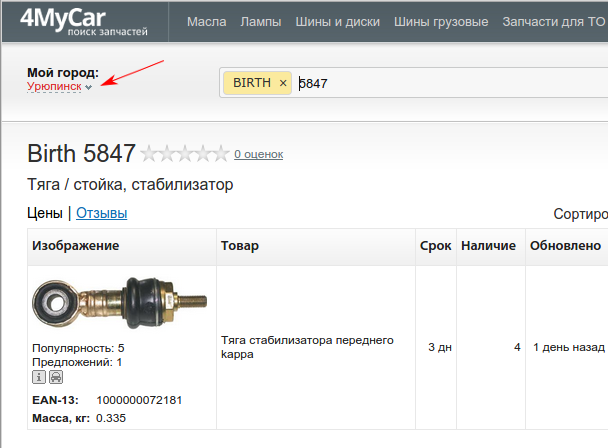

Our company develops online auto parts stores on its own SaaS platform (ABCP.RU), and we also run several related projects, for example the spare parts search service 4MyCar.ru.

Like many other web projects, we eventually realized that we needed geolocation by IP address. For example, 4MyCar.ru now uses it to determine the region: on your first visit to the site, the region is set automatically.

The nearest store branch is selected the same way on ABCP client sites.

When we first faced the geolocation problem, we were only beginning to study the subject. At the time there were no real alternatives to the MaxMind databases. We tried them, played with them, and dropped them. In production, MaxMind GeoLite was used a few times to filter out particularly annoying bots that tried to take down our clients' sites (filtering by country in nginx was enough: a primitive check in an if, see the ngx_http_geoip_module documentation). The free databases were not accurate enough for RU and gave city names in Latin script, so they were of little use for other purposes.

Some time later, one of our employees discovered an excellent site, ipgeobase.ru, which lets you download a geolocation database for Russia and Ukraine and also offers an XML web service reachable with a simple HTTP request. For example, a visitor arriving at 4mycar.ru from the corresponding city via the search phrase “buy an oil filter in uryupinsk” led to a web service request roughly like http://ipgeobase.ru:7020/geo?ip=217.149.183.4 . The response contained the city and region names in Russian, which was very convenient. Very quickly the web service was wired into the code that determines the nearest store branch. However, after the launch in production several problems emerged:

1) a request to the web service usually took little time (hundredths of a second under normal conditions from the data center in Moscow), but from the developers' office in the regions the delays were noticeably higher (about half a second);

2) occasionally (at peak hours, according to our observations) this time was much longer, which caused unpleasant delays in responses to our customers;

3) the same client often had to be geolocated several times, which raised the question of caching geodata;

4) our non-optimal requests put load on the ipgeobase web service, which was unfair to its owners;

5) geolocation did not work for other countries (anything other than RU and UA).

To solve these problems we quickly “called a meeting” and came up with two main options: take the database and write our own web service (periodically download the ipgeobase database, import it into our own database, and serve it over HTTP with caching, for example in memcached), or cache the geodata in memcached or redis (request data from ipgeobase and store it in the cache). At first glance both options required quite a lot of scarce developer man-hours, and eventually a third option appeared: reduce the accuracy slightly (replace the last octet of the IP address with 0, on the assumption that a single /24 subnet rarely spans different cities) and set up, on our own hardware, a caching proxy on nginx with a long cache lifetime and short timeouts for requests to ipgeobase. This option proved very effective: it cut both the load on ipgeobase and the geolocation time several-fold. The idea of our own web service was postponed indefinitely.
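The accuracy trade-off in the third option is easy to illustrate. The sketch below is in Python purely for illustration (in the setup described here the rewriting is done inside nginx), and the function name cache_key is hypothetical. It maps every address of a /24 subnet to the same key, so a single cached answer serves the whole subnet.

```python
import ipaddress

def cache_key(ip: str) -> str:
    """Return the /24 network address of an IPv4 address (last octet set to 0)."""
    # strict=False lets ip_network() accept a host address and mask it down.
    network = ipaddress.ip_network(f"{ip}/24", strict=False)
    return str(network.network_address)

if __name__ == "__main__":
    # Both addresses fall into the same /24, so they share one cache entry.
    print(cache_key("217.149.183.4"))
    print(cache_key("217.149.183.200"))
```

With up to 256 addresses per key, the number of upstream requests can drop considerably, at the cost of treating the whole subnet as one location.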

Some time later we needed geolocation in nginx again (yes, those bots again, but this time many of them came from RU), so filtering by country with the MaxMind databases was no longer enough.

The need was urgent, so we used another geo module (ngx_http_geo_module) and loaded the region number from the ipgeobase database into a variable. That was enough to “plug the holes”.

Soon we had the ipgeobase2nginx.php script, which built the databases for nginx so that a variable held human-readable information about the city. This data, like the MaxMind data, could now be written to logs or passed to the backend in headers, which basically suited everyone.

All this time we kept thinking about further development. Plans to build our own web service gathered dust in TODO lists and occasionally resurfaced in the form “I want to learn python / erlang / haskell / etc. in the evenings; what should I write after 'Hello world'?”, but they never went any further.

Then, at first as a joke over tea (just for fun), an idea came up: build a web service similar to ipgeobase, but without a database engine or scripting languages, relying on what we had already built in nginx.

A quick inventory of what we had gave the following:

1) the GeoLite databases are freely available in csv, and the ipgeobase database as text;

2) the ngx_http_geo_module module can set variable values by IP address, and does so extremely fast (it even uses a binary geo range base for speed);

3) for RU and UA we trust ipgeobase, but we would like to see the MaxMind data as well where possible;

4) SSI (ngx_http_ssi_module) is implemented very well in nginx, and works not only for text/html but for other file types too;

5) nginx can take an IP address from a request header and treat it as the client's address (ngx_http_realip_module), which means it can pass that address to the geo module.

All that remained was to add a few quick-and-dirty scripts that turn the csv and ipgeobase files into the required nginx config fragments.

Here's what we got:

https://yadi.sk/d/QsNN87nMesXo8 - configs and scripts.

To show the web service in action, we temporarily deployed it on a VDS; it is available at http://muxgeo-demo.4mycar.ru:6280/muxgeo/ .

To quickly launch such a service yourself, you can download a ready-made LXC image - https://yadi.sk/d/1WrvV2RyesYFM (login:password is ubuntu:ubuntu).

Here is a brief description of how the scripts work; in the LXC image they are placed in /opt/scripts.

Put the files obtained from MaxMind and ipgeobase into the /opt/scripts/in subdirectory and preprocess them a little, like this:

iconv -f latin1 GeoLiteCity-Location.csv | iconv -t ascii//translit > GeoLiteCity-Location-translit.csv

The scripts also need an additional file from MaxMind with the region names:

dev.maxmind.com/static/csv/codes/maxmind/region.csv

Now the scripts themselves:

GeoLite2nginx.pl - generates the out/nginx_geoip_* files

ipgeobase2nginx.pl - generates the out/nginx_ipgeobase_* files

We need to overlay the IP address ranges from geoip and ipgeobase. To make this possible, the first two scripts also write files with the integer representation of the IP addresses (out/nginx_geoip_num.txt and out/nginx_ipgeobase_num.txt). We manually created the file in/nginx_localip_num.txt, which lists the reserved ranges (local networks, etc.). In addition, the multicast address range is excluded from the resulting lists.
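For illustration, the integer representation can be reproduced with Python's standard ipaddress module. This is a sketch, not the authors' Perl code: the helper names and the particular list of reserved networks are assumptions chosen for the example.

```python
import ipaddress

def ip_to_int(ip: str) -> int:
    """Convert a dotted IPv4 address to its 32-bit integer value."""
    return int(ipaddress.IPv4Address(ip))

def int_to_ip(number: int) -> str:
    """Convert a 32-bit integer back to dotted IPv4 notation."""
    return str(ipaddress.IPv4Address(number))

def network_bounds(cidr: str) -> tuple:
    """Return the first and last address of a network as integers."""
    network = ipaddress.ip_network(cidr)
    return int(network.network_address), int(network.broadcast_address)

# Assumed examples of reserved ranges; the authors' actual list is not shown.
RESERVED = [network_bounds(c) for c in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

# The IPv4 multicast block, which is excluded from the resulting lists.
MULTICAST = network_bounds("224.0.0.0/4")

if __name__ == "__main__":
    number = ip_to_int("217.149.183.4")
    print(number, int_to_ip(number))
```

Integers make ranges from different sources directly comparable and sortable, which is what the overlay step relies on.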

Here is how we do it:

The make-dup-ranges.pl script walks through the list; for each even IP (the start of a new range) it adds the preceding address (the end of the previous range) to the list, and for each odd IP it adds the following address. The list is then sorted and duplicates are removed.
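The same boundary-splitting idea can be sketched in Python. This is not a port of make-dup-ranges.pl but a variant written for clarity: it works on explicit (start, end) pairs instead of a flat list of addresses, and the function name split_ranges is hypothetical. Every range start, and every address right after a range end, is treated as a cut point, so the overlapping ranges from different sources fall apart into non-overlapping segments.

```python
def split_ranges(*range_lists):
    """Overlay lists of inclusive (start, end) integer ranges.

    Returns sorted, non-overlapping segments such that every source
    range is exactly a union of consecutive segments.
    """
    all_ranges = [r for ranges in range_lists for r in ranges]
    # A new segment begins at each range start and right after each range end.
    cuts = sorted({s for s, _ in all_ranges} | {e + 1 for _, e in all_ranges})
    segments = []
    for low, next_low in zip(cuts, cuts[1:]):
        high = next_low - 1
        # Drop the gaps: keep a segment only if some source range covers it.
        if any(s <= low and high <= e for s, e in all_ranges):
            segments.append((low, high))
    return segments

if __name__ == "__main__":
    geoip = [(10, 20)]
    ipgeobase = [(15, 30)]
    print(split_ranges(geoip, ipgeobase))
```

The coverage check is quadratic in the number of ranges, which is fine for a demonstration; a real database with hundreds of thousands of ranges would call for a sweep over sorted inputs instead.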

The make-ranges.pl script then creates the nginx config with the ranges.
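As a rough idea of what such a generated config might look like, the Python sketch below prints a geo block in the range syntax of ngx_http_geo_module. The variable name muxgeo_city, the sample value, and the function name geo_block are assumptions for the example; the real files in the archive may be laid out differently (for instance, as fragments pulled in with include).

```python
import ipaddress

def geo_block(variable, entries, default=""):
    """Render an nginx geo block from (start_int, end_int, value) entries."""
    lines = [
        f"geo ${variable} {{",
        "    ranges;",                    # switch the geo module to range syntax
        f'    default "{default}";',      # value for addresses outside all ranges
    ]
    for start, end, value in entries:
        first = ipaddress.IPv4Address(start)
        last = ipaddress.IPv4Address(end)
        # Values are quoted so that names with spaces stay a single token.
        # Note: this sketch does not escape quotes inside the value itself.
        lines.append(f'    {first}-{last} "{value}";')
    lines.append("}")
    return "\n".join(lines)

if __name__ == "__main__":
    print(geo_block("muxgeo_city", [(167772160, 167772415, "Test City")]))
```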

Now that we have the nginx configs, we need to wire them in.

The setup consists of a frontend and a backend (the frontend forwards requests to the backend, rewriting headers and caching the responses). We do all this on Ubuntu 14.04 in an LXC container, with nginx taken from the official site.

The contents of out go here:

/etc/nginx/muxgeo/data/

Let's add the “glue” that sets the necessary variables:

/etc/nginx/muxgeo/muxgeo.conf

/etc/nginx/muxgeo/muxgeo-geoip.conf

/etc/nginx/muxgeo/muxgeo-ipgeobase.conf

And also the primitive logic for the backend:

/etc/nginx/muxgeo/muxgeo_site.conf

The configs for the frontend and backend are here:

/etc/nginx/conf.d/muxgeo-frontend.conf (listening on port 6280)

/etc/nginx/conf.d/muxgeo-backend.conf (port 6299)

We also need a file, say index.html, in which nginx SSI outputs the data in the required format. We place it in the directory

/opt/muxgeo/muxgeo-backend/muxgeo

So a request to

http://muxgeo-demo.4mycar.ru:6280/muxgeo/?ip=217.149.183.4

is passed to the backend with the client IP address replaced by 217.149.183.4, and the backend inserts the information into the right places in the HTML text.

But an HTML page is not quite what we wanted; we need XML, as in ipgeobase. We simply fill a template with the corresponding fields; see the example in the file muxgeo.xml.

At the link

http://muxgeo-demo.4mycar.ru:6280/muxgeo/muxgeo.xml?ip=217.149.183.4

we get XML output that is “the same, but better” than that of ipgeobase, and in UTF-8 at that.

Need JSON? No problem. Make a similar template and it's done:

http://muxgeo-demo.4mycar.ru:6280/muxgeo/muxgeo.json?ip=217.149.183.4
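The template idea can be sketched as follows. The Python helper below writes out a JSON template whose values are nginx SSI echo commands; the variable names (muxgeo_city and so on) and the function name are hypothetical, since the real templates live in the published archive.

```python
def ssi_json_template(fields):
    """Build a JSON template that nginx SSI fills in at request time.

    `fields` maps a JSON key to the name of an nginx variable (without "$").
    """
    rows = [
        # default="" makes nginx print an empty string for an unset variable.
        f'  "{key}": "<!--# echo var="{var}" default="" -->"'
        for key, var in fields.items()
    ]
    return "{\n" + ",\n".join(rows) + "\n}\n"

if __name__ == "__main__":
    # Hypothetical variable names, for illustration only.
    print(ssi_json_template({"city": "muxgeo_city", "region": "muxgeo_region"}))
```

Two caveats apply to this sketch. nginx processes SSI only for text/html unless other MIME types are listed in ssi_types, so a JSON template needs its type enabled there. The echo command also HTML-escapes values by default (entity encoding), so values containing quotes or ampersands may need extra care to remain valid JSON.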

Want something exotic? Let's output it as an ini file:

http://muxgeo-demo.4mycar.ru:6280/muxgeo/muxgeo.ini?ip=217.149.183.4

To test the service you can, for example, build a geo database for the addresses of all countries in a format similar to the output of ipgeobase2nginx.php mentioned above. Let's create a text file with a template (muxgeo_fullstr.txt) and a simple script that reads the data for all available ranges.

A small note. In the examples, the frontend and the backend run in the same nginx. Under heavy load it makes sense to move them to separate nginx instances, since a backend worker with geodata consumes more memory than a minimal nginx with proxy_cache.

Where can this project go next? You can, for example, add other data sources at the cost of a slightly more complex configuration, and plug in your own geo databases, into which you can put “updates obtained from reliable sources :)”.

Source: https://habr.com/ru/post/251463/