Lock-free data structures. Concurrent maps: trees

This is the last, for today, article from the cycle about the internal structure of competitive associative containers. In previous articles, the hash map was considered, the lock-free ordered list algorithm and containers based on it were built. Overboard was left one important type of data structure - trees. It's time to tell a little about them.

This is the last, for today, article from the cycle about the internal structure of competitive associative containers. In previous articles, the hash map was considered, the lock-free ordered list algorithm and containers based on it were built. Overboard was left one important type of data structure - trees. It's time to tell a little about them.Studies on algorithms of competitive trees that do not require external synchronization of access to them began a long time ago - in the 70s of the last century - and were initiated by the development of the DBMS, therefore, they mainly focused on optimizing page trees ( B-tree and its modifications).

The development of the lock-free approach in the early 2000s did not pass by tree algorithms, but only recently, in the 2010s, many really interesting works on competitive trees appeared. The algorithms of the trees are quite complex, so the researchers took time - about 10 years - to lock-free / non-blocking their adaptation. In this article we consider the simplest case - the usual binary tree, not even self-balancing.

What is the practical meaning of a regular binary tree, the reader will ask, because we all know that for ordered data such a tree degenerates into a linear list with O (N) search complexity? The main point is to test the approaches on a simple data structure. In addition, for random data, the binary tree fits quite well, providing O (log N) complexity, and its internal simplicity is the key to high efficiency. So it all depends on the task where such a tree is used.

')

To begin with - a retrospective look at the problem of building lock-free / non-blocking trees. After quite effective lock-free algorithms for queues and lists were found, works on lock-free self-balancing trees appeared - AVL tree, Red-Black tree. The pseudocode of these algorithms was complicated, so complex that I would not undertake to implement it, since it is not clear to me, for example, how to apply memory management schemes to them (Hazard Pointer, RCU or something else), the algorithms relied on garbage collector (GC) language, usually Java. In addition, I would not embody an algorithm that uses the CAS primitive (compare-and-swap) to find the key in the tree — CAS is too heavy for this. And in general, despite the formal proof of the correctness of such an algorithm (in the presence of GC, this reservation is essential, the absence of GC may violate the proof), its complexity, the presence of many boundary cases, seemed insurmountable to me in the light of debugging.

Apparently, not only the complexity and, according to the developers, the inefficiency of the resulting lock-free algorithms for trees scared me. Therefore, in the beginning of the 2010s, the emphasis in the development of algorithms shifted somewhat: instead of providing a lock-free by any means, many works began to appear, where the effectiveness of the search operation on the tree was put at the forefront. Indeed, trees are used mainly in search tasks (it is believed that search is 70 - 90% of operations), and that search must be fast. Therefore, non-blocking algorithms have appeared in which the search is lock-free, and the insertion / deletion can be blocked at the node group level (fine-grained locking) - either explicitly using mutexes, or implicitly using checkboxes, etc., that is, essentially it is spinning. This approach gave less complex and more understandable algorithms, one of which we will consider.

Binary search tree

We will consider the tree in which the data is located in the leaves, the internal nodes are routing and contain only keys:

This is the so-called leaf-oriented tree. The presence of the required key in the internal node does not mean that the key exists in the tree, only the leaves contain the keys and the corresponding data, the internal nodes can contain remote keys.

In such a tree, each node has exactly two descendants. Inserting into such a tree always gives rise to one leaf and one inner node, when deleting a key, one leaf and one inner node, its parent, is deleted. No balancing is provided.

Consider what surprises await us in the performance of competing operations. Let there be some kind of tree and streams A and B perform deletion of keys 15 and 27 respectively:

Flow A to remove key 15 must remove a sheet with this key and its parent — an internal node with key 20. To do this, it changes the right link from its grandfather — node 10 — from node 20 to node 30, which is a brother of the node to be deleted. Since we are considering competitive trees, this operation must be performed atomically, that is, using CAS (compare-and-swap).

At the same time, flow B removes key 27. In the same way as above, flow B throws the right link of node 20 (grandfather 27) from CAS 30 to sheet 45.

If these two actions are performed in parallel, as a result, we get an attainable node 30 and an attainable sheet 27 that needs to be removed.

When performing competing deletions and inserts, the situation is similar:

Here, flow A, deleting the key sheet 27, competes with flow B, which inserts the new key 29. To delete 27 (and its parent, internal node 30), flow A throws the pointer to the right son of node 20 from 30 to 45. At the same time key 29 and the corresponding internal node 29 are inserted as the left son of node 30, in the same position from which flow A removes key 27. As a result, the new key becomes unreachable - a memory leak.

It is obvious that the CAS primitive itself cannot resolve the above described cases. It is necessary before tagging / deleting to somehow mark the nodes involved in the operation. The insert operation involves a leaf node and its parent is an internal node. Before inserting a parent node, it should be marked "insertion in progress" so that competing insertions / deletions cannot be performed on this node. A delete sheet is involved in a delete operation; its parent and the parent of its parent are the grandfather of the sheet to be deleted. Both the internal node - the parent and grandfather - must also be marked to exclude competition on them.

In this paper, we propose to use the

State field for each internal node:

The internal node can be in one of the following states:

Clean- no inserts or deletes are performed on the node. This is the default node state.IFlag- the insert is inserted into the node. This flag marks the internal node, in which at least one son is a leaf.DFlag- deletion inDFlag. This flag marks the internal node-grandfather of the sheet being removed.Mark- the internal node will be deleted. This flag marks the parent of the sheet being deleted (remember that in our tree, when a sheet is deleted, its parent is also always deleted).

These states are mutually exclusive: each node can only be in one of them. Changing the state of a node is performed by the CAS primitive.

Recall that our main goal is to implement the key search operation in the tree as efficiently as possible. How do these states affect search? Obviously, the insert operation and the corresponding

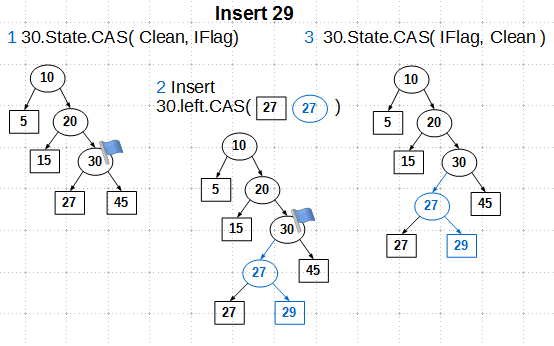

IFlag flag can be ignored during the search: there are no IFlag when inserting, which means that we will not be able to “enter the forbidden, remote zone”. But the flags DFlag and Mark should influence the search: when reaching a node with one of these flags, the search should be resumed from the beginning (variations of actions are possible here, but the simplest thing is to start the search again).So, let's look at how these states work by the example of inserting the key 29:

We find the insertion node - this is the internal node 30. First, we set the state of the

IFlag node 30 by the CAS primitive. CAS here guarantees that we will move to the IFlag only from the Clean state, which eliminates competing operations, that is, we become the exclusive owners of node 30. Next, we create an internal node 27, assign sons to it — the existing sheet 27 and the new 29, and we change the pointer of the left son of node 30 to the newly created internal node 27. Why is CAS needed here, is it possible to get along with an ordinary atomic store? The answer is that in the original algorithm you cannot; in the implementation of libcds, you can use an atomic store, we'll talk about this later. And finally, the third step: remove the IFlag flag from node 30. Here also CAS is used in the original algorithm, which can be replaced with an atomic store, if we give up something not very necessary.The deletion operation using the

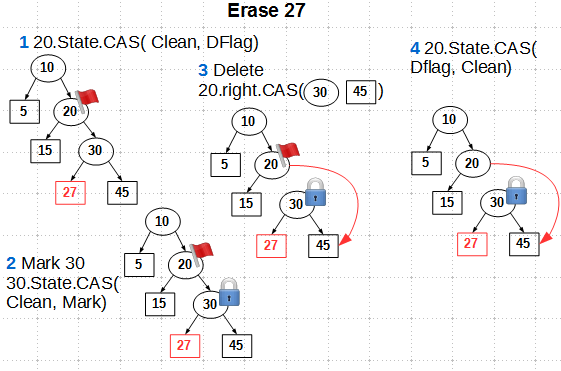

State flags consists of four steps:

- We mark the grandfather of the sheet being

DFlagwith the state ofDFlagusing CAS Markparent of the deleted sheet withMarkstate also with CAS- Throw CAS'om pointer descendant node grandfather

- Remove the label

DFlagwith grandfather. It uses CAS, but you can use a simpler atomic store with some simplification of the algorithm.

Note that we do not remove the

Mark label from the parent node of the leaf being deleted, since in our algorithm the parent is also deleted and there is no point in removing the label from it.Let's see how flags work in case of competitive deletion. Without state flags, concurrent deletion of keys 15 and 27 led, as we saw earlier, to reachability of a deleted sheet:

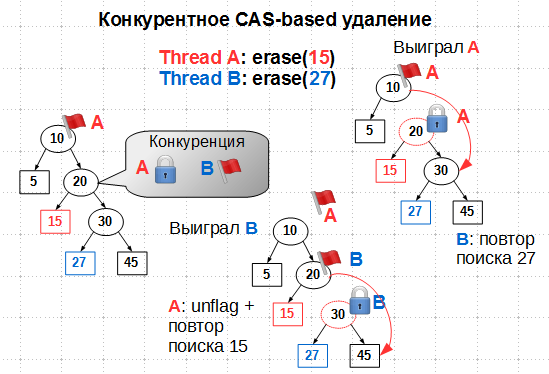

With the status flags we will have:

It would seem that if flow A set the

DFlag flag on node 10, flow B should not go beyond node 10 when searching for 27. But our operations are performed in parallel, so it is quite possible that flow B managed to slip node 10 before it was marked flag DFlag . Further, competition arises at node 20: flow A wants to mark it with the Mark flag, flow B with the flag DFlag . Since the node state is set by the atomic CAS from the Clean state, only one of the streams will win this battle. If stream A wins, that is, if he managed to mark 20 with Mark , stream B starts searching for the deleted node 27 from the beginning, and A deletes 15 and then removes the DFlag flag from 10. If B wins, marking 20 with the DFlag flag, stream A should remove your tag from 10 and repeat the search for node 15. In both cases, the deletion of keys is finally performed successfully and without disrupting the tree structure.As you can see, state flags play the role of internal mutexes, providing exclusive access to the deleted nodes. It remains to clarify some hints about the admissibility of replacing the CAS primitive with the atomic store when removing flags, that is, when the node is transferred to the

Clean state. In the original algorithm, mutual help (helping) of flows is used when competition is detected on a node. To do this, in addition to the status flags, the internal node may contain a handle to the operation being performed - insert or delete. A competing stream, detecting the state of a node other than

In the original algorithm, mutual help (helping) of flows is used when competition is detected on a node. To do this, in addition to the status flags, the internal node may contain a handle to the operation being performed - insert or delete. A competing stream, detecting the state of a node other than Clean , can read this descriptor and try to help its fellow run the operation. In this case, the transition to the Clean state at the end of the operation should be made only by the CAS primitive, since several threads — the initiator of the operation and the helpers — can transfer the node state to Clean . If we make it an atomic store, then it is possible that a node transferred to the Clean state and then to some other subsequent insert / delete operation will be transferred back to Clean by some late assistant.Receiving mutual assistance looks good in pseudocode, but in practice I have not seen much benefit from it. Moreover, in C ++, where there is no garbage collector, this technique is quite problematic to implement effectively: the question immediately arises of how to distribute descriptors (on the stack? Alloc? Pool?), And even more serious - about the lifetime of such a descriptor (reference counting?). In the implementation of libcds helping is disabled: several attempts to introduce it have not been successful, the code is unstable (this beautiful phrase from the managers' lexicon means that the program crashes). So the binary search tree algorithm in libcds contains several artifacts, including CAS instead of an atomic store, when translating the node state to

Clean , which I’ll get rid of in the future.Conclusion

This article describes the simplest binary tree algorithm. Despite the lack of balancing, the algorithm is of interest, if only because it is the first step towards the implementation of more complex, self-balancing trees such as AVL-tree and Red-Black tree, which are being worked on, I hope to present them in libcds soon.

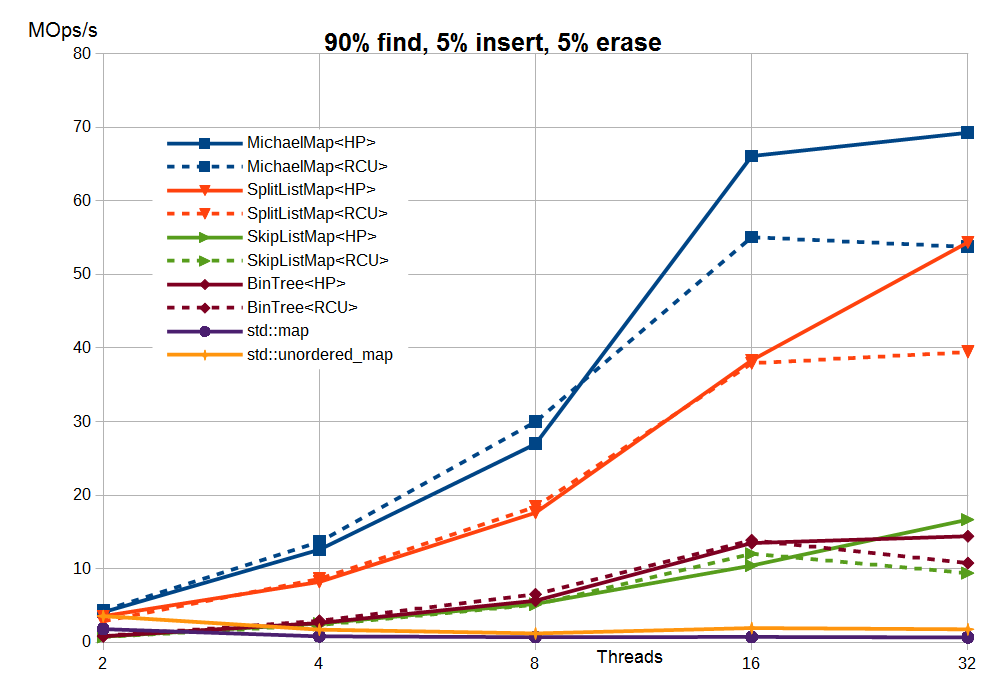

I would like to finish a series of articles on concurrent map with synthetic test results for implementations from libcds (Intel Dual Xeon X5670 2.93 GHz 12 cores 24 threads / 24 GB RAM, average number of elements - 1 million, keys - int):

Here:

- MichaelMap is the simplest hash map without rehasing, reviewed here.

- SplitListMap - split-ordered list algorithm

- SkipListMap - algorithm skip list

- BinTree - the binary tree considered in this article

- std :: map, std :: unordered_map - STL-algorithms for std :: mutex

The results are given for Hazard Pointer (HP) and the user-space RCU (more precisely, its buffered variety

cds::urcu::general_buffered ).You can confirm or deny these results by downloading and compiling libcds or applying data structures from libcds in your application.

Perhaps today this is all that I would like to tell you about lock-free data structures. The end of an epic cycle! There are many questions on lock-free and in general on the internal structure of containers that could be discussed, but they are all very technical and, I am afraid, will not be of interest to the general public.

Source: https://habr.com/ru/post/251267/

All Articles