Introduction to the course "Image and Video Analysis". Lectures from Yandex

We are starting to publish lectures by Natalia Vasilyeva, Senior Researcher at HP Labs and Head of HP Labs Russia. Natalia Sergeevna taught a course on image analysis at the St. Petersburg Computer Science Center, which was created on the joint initiative of the Yandex School of Data Analysis, JetBrains and CS Club.

In total, the program - nine lectures. The first of them tells how image analysis is used in medicine, security systems and industry, which tasks it has not yet learned to solve, what advantages human visual perception has. Decryption of this part of the lectures - under the cut. Starting from the 40th minute, the lecturer talks about Weber's experiment, color representation and perception, Mansell color system, color spaces and digital representations of the image. Fully lecture slides are available here .

Images everywhere around us. The volume of multimedia information is growing every second. Films, sports matches are being made, equipment for video surveillance is being installed. Every day we ourselves take a large number of photos and videos - almost every telephone has such an opportunity.

')

For all these images to be useful, you need to be able to do something with them. You can put them in a box, but then it is not clear why they should be created. It is necessary to be able to search for the right pictures, to do something with video data - to solve problems specific to a particular area.

Our course is called “Image and Video Analysis”, but it’s mainly about images. It’s impossible to start video processing without knowing what to do with the picture. Video is a collection of static images. Of course, there are tasks specific to the video. For example, tracking objects or the allocation of some key frames. But at the heart of all the algorithms for working with video are algorithms for processing and analyzing images.

What is image analysis? This is largely adjacent and intersecting with computer vision area. She has no exact and only definition. For example, we give three.

This definition implies that regardless of whether we are or not, there is some surrounding world and its images, analyzing which we want to understand something about it. And it is suitable not only for determining the analysis of digital images by a machine, but also for analyzing them with our head. We have a sensor - the eyes, we have a transforming device - the brain, and we perceive the world by analyzing those pictures that we see.

Probably, this is more related to robotics. We want to make decisions and draw conclusions about real objects around us based on the images that the sensors capture. For example, this definition fits perfectly into the description of what a robot vacuum cleaner does. He decides where to go next and what angle to vacuum based on what he sees.

The most common definition of the three. If you rely on it, we just want to describe the phenomena and objects around us on the basis of image analysis.

Summing up, we can say that, on average, image analysis comes down to extracting meaningful information from images. For each specific situation, this meaningful information may be different.

If we look at a photo in which a little girl eats ice cream, then we can describe it with words - this is how the brain interprets what we see. About this we want to teach the car. To describe an image with text, it is necessary to carry out such operations as recognizing objects and faces, determining the sex and age of a person, highlighting areas of uniform color, recognizing actions, and extracting textures.

In the course of the course we will talk about image processing algorithms. They are used when we increase the contrast, remove color or noise, apply filters, etc ... In principle, changing images is all that is done in image processing.

Next come image analysis and computer vision. There are no exact definitions for them, but, in my opinion, they are characterized by the fact that having an image at the input, at the output we get a certain model or a certain set of features. That is, some numeric parameters that describe this image. For example, the histogram of the distribution of gray levels.

In the image analysis, as a result, we get a feature vector. Computer vision solves wider tasks. In particular, models are built. For example, a set of two-dimensional images can be constructed three-dimensional model of the premises. And there is another adjacent area - computer graphics, in which they generate an image by model.

All this is impossible without the use of knowledge and algorithms from a number of areas. Such as pattern recognition and machine learning. In principle, it can be said that image analysis is a special case of data analysis, an area of artificial intelligence. Neuropsychology can be attributed to the related discipline - in order to understand what opportunities we have and how the perception of pictures is arranged, it would be good to understand how our brain solves these problems.

There are huge archives and collections of images, and one of the most important tasks is the indexing and search for pictures. Collections are different:

What can you do with all these pictures? The simplest thing is to somehow intelligently build navigation on them, classifying them by topic. Separately fold the bears, the elephants separately, the oranges separately - so that the user would later be comfortable navigating through this collection.

A separate task is the search for duplicates . In two thousand photographs from a non-repeating vacation are not so much. We love to experiment, shoot with different shutter speeds, focal length, etc., which ultimately gives us a large number of fuzzy duplicates . In addition, a duplicate search can help detect the illegal use of your photo, which you once could put on the Internet.

An excellent task is to choose the best photo . With the help of the algorithm, it is possible to understand which picture the user most likes. For example, if this is a portrait, the face should be lit, the eyes open, the image should be clear, etc. Modern cameras already have this feature.

The search task is also the creation of collages , i.e. selection of photos that will look good next.

Now absolutely amazing things are happening in medicine.

Another application is security systems . In addition to the use of fingerprints and retina for authorization, there are still unsolved problems. For example, ** detection of "suspicious" items **. Its complexity is that you cannot give a description in advance of what is a suspicious subject. Another interesting task is ** identifying suspicious behavior ** of a person in a video surveillance system. It is impossible to provide all possible examples of anomalous behavior, so the recognition will be arranged to identify deviations from what is marked as normal.

There are still a large number of areas where image analysis is used: military industry, robotics, filmmaking, the creation of computer games, and the automotive industry. In 2010, an Italian company equipped a truck with cameras, which, using maps and a GPS signal, automatically drove from Italy to Shanghai. The path passed through Siberia, not all of which is on the maps. On this segment, a man-driven car that was driving in front of him passed the map to him. The truck itself recognized traffic signs, pedestrians and understood how it can be rebuilt.

But why do we still drive cars ourselves, and even a person should be assigned to video surveillance systems? One of the key problems is the semantic gap .

A person, looking at a picture, understands its semantics. The computer also understands the color of the pixels, knows how to select the texture and ultimately distinguish the brick wall from the carpet and recognize the person in the photo, but to determine whether he is happy, the machine can still. We ourselves can not always understand this. That is, an automatic understanding of whether students miss a lecture is the next level.

In addition, our brain is a unique system of understanding and processing the picture that we see. He is inclined to see what we want to see, and how to teach the same computer is an open question.

We are very good at summarizing. In the image we are able to guess that we see the lamp. We do not need to know all the modifications of an item from one class in order to assign a sample to it. It is more difficult for a computer to do this, because visually different lamps can be very different.

There are a number of difficulties that image analysis has not yet coped with.

Our brain often "completes" the picture and adds semantics. We can all see “something” or “someone” in the outline of a cloud. The visual system is self-learning. It is difficult for Europeans to distinguish the faces of Asians, as he usually rarely meets them in life. The visual system has learned to capture differences in European faces, and Asians, whom he saw little, seem to him "to the same person." And vice versa. There was a case with colleagues from Palo Alto who, together with the Chinese, developed an algorithm for detecting faces. As a result, he miraculously found Asians, but could not see the Europeans.

In each picture, we first look for familiar images. For example, we see squares and circles here.

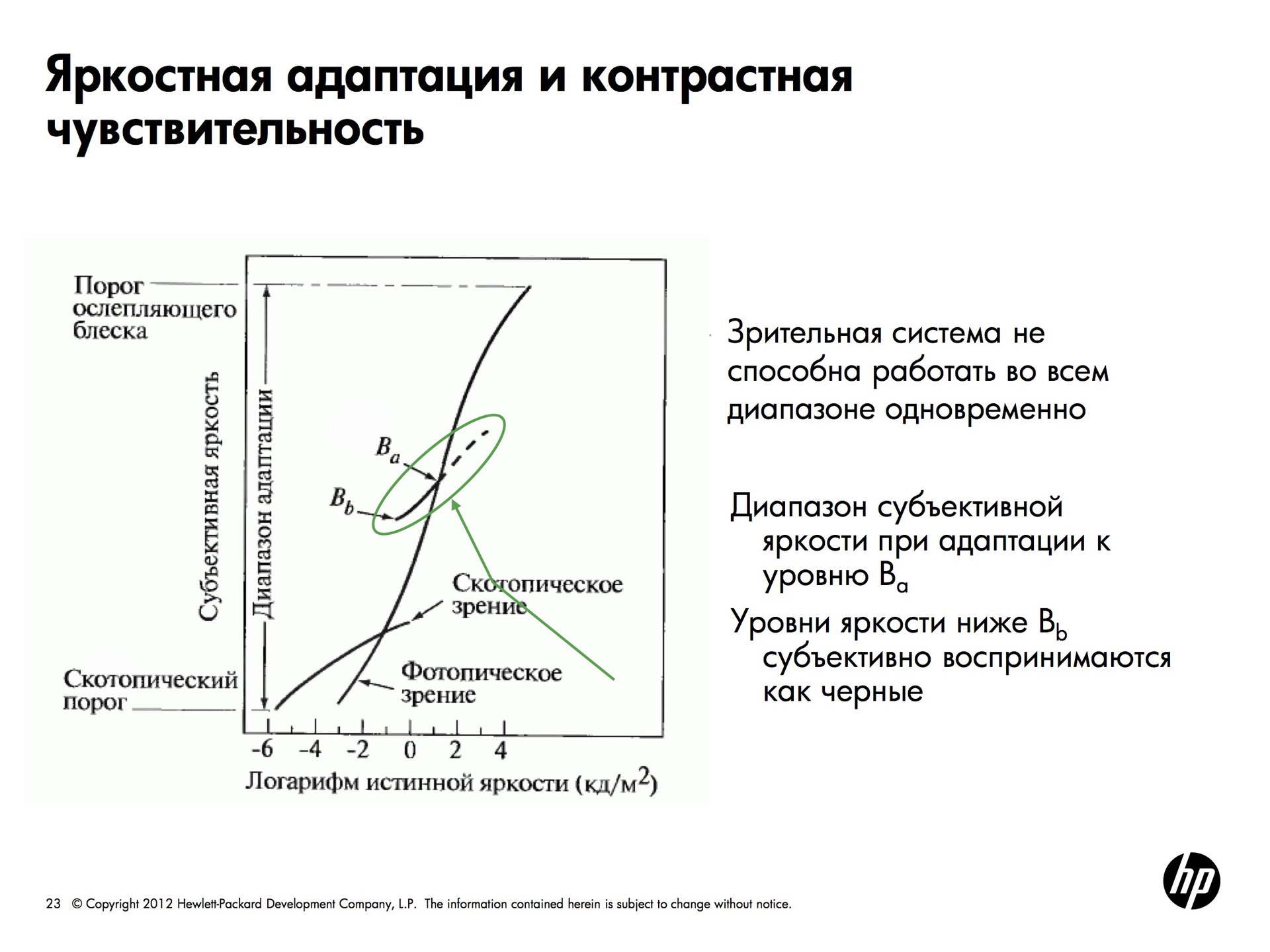

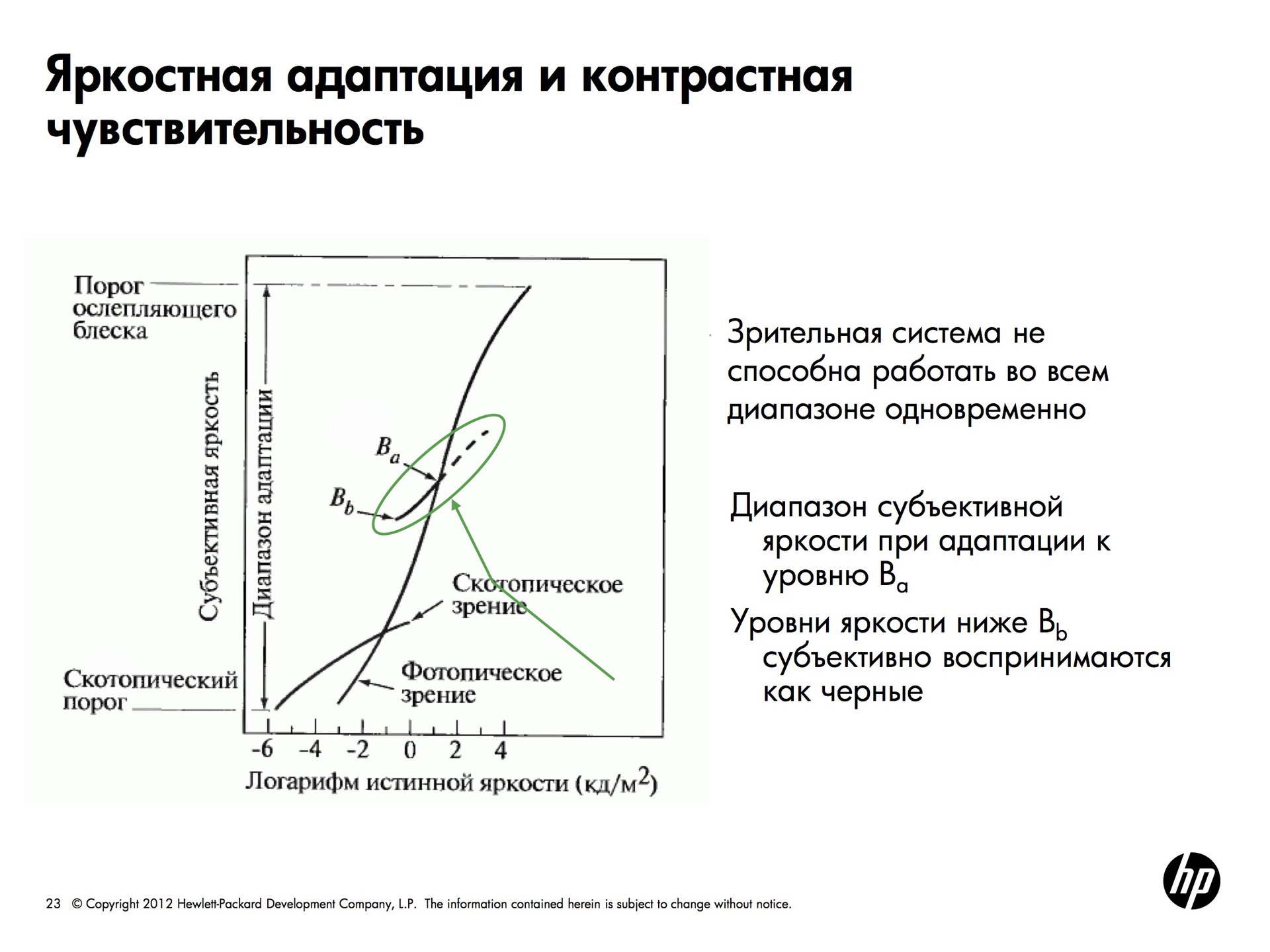

The eye is able to perceive very large ranges of brightness, but it does this in a sly way. The visual system adapts to the range of brightness values of the order of 10 ^ 10. But at any given moment we can recognize a small patch of brightness. That is, our eye chooses some point for itself, adapts to the brightness value in it and recognizes only a small range around this point. All that is darker appears black, all that is lighter white. But the eye moves very quickly and the brain completes the picture, so we see well.

Subjective brightness is the logarithm of physical brightness. If we look at the change in the brightness of any source and begin to change the brightness linearly, our eye will perceive it as a logarithm.

Two types of components are responsible for visual perception - cones and rods. Cones are responsible for color perception and can very clearly perceive the picture, but if it is not very dark. This is called photopic vision . Skotopic vision works in the dark - sticks are included, which are smaller than cones and which do not perceive color, therefore the picture is blurred.

The program consists of nine lectures in total. The first one covers how image analysis is used in medicine, security systems and industry, which problems it has not yet learned to solve, and what advantages human visual perception has. The transcript of this part of the lecture follows below. Starting from the 40th minute, the lecturer talks about Weber's experiment, color representation and perception, the Munsell color system, color spaces and digital image representations. The full lecture slides are available here.

Images are all around us. The volume of multimedia information grows every second. Films are shot, sports matches are recorded, video surveillance equipment is installed. Every day we ourselves take many photos and videos: almost every phone can do this.


For all these images to be useful, we need to be able to do something with them. We could simply put them away in a box, but then it is unclear why they were created at all. We need to be able to search for the right pictures and to process video data, solving the problems specific to a particular domain.

Our course is called “Image and Video Analysis”, but it is mostly about images. You cannot start processing video without knowing what to do with a single picture: video is a sequence of still images. Of course, there are tasks specific to video, such as object tracking or key frame extraction. But at the heart of all video algorithms are algorithms for processing and analyzing images.

What is image analysis? It is a field that largely borders on and overlaps with computer vision. It has no single exact definition; here are three examples.

Computing properties of the 3D world from one or more digital images. Trucco and Verri

This definition implies that, whether we exist or not, there is a surrounding world and images of it, and by analyzing those images we want to learn something about the world. It fits not only the analysis of digital images by a machine but also the analysis we do in our heads. We have a sensor, the eyes, and a processing device, the brain, and we perceive the world by analyzing the pictures we see.

Making useful decisions about real physical objects and scenes based on sensed images. Shapiro

This one is probably closer to robotics. We want to make decisions and draw conclusions about real objects around us based on the images captured by sensors. For example, this definition fits perfectly what a robot vacuum cleaner does: it decides where to go next and which corner to vacuum based on what it sees.

The construction of explicit, meaningful descriptions of physical objects from images.

This is the most general of the three definitions. Under it, we simply want to describe the phenomena and objects around us based on image analysis.

To sum up, image analysis generally comes down to extracting meaningful information from images. What counts as meaningful information differs from one situation to another.

If we look at a photo of a little girl eating ice cream, we can describe it in words; this is how the brain interprets what we see. This is what we want to teach the machine. To describe an image with text, we need operations such as recognizing objects and faces, determining a person's sex and age, segmenting regions of uniform color, recognizing actions and extracting textures.

Relationship with other disciplines

In this course we will discuss image processing algorithms. They are used when we increase contrast, remove color or noise, apply filters and so on. In essence, image processing is all about transforming images.

Next come image analysis and computer vision. There are no exact definitions for them either, but in my opinion they share one characteristic: given an image as input, they produce a model or a set of features as output, that is, numeric parameters that describe the image. One example is a histogram of gray levels.
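
As a minimal sketch of such a feature, here is a normalized gray-level histogram in plain Python. The function name `gray_histogram`, the number of bins and the list-of-rows image format are illustrative assumptions, not something taken from the lecture.

```python
def gray_histogram(image, levels=8, max_value=255):
    """Normalized histogram of gray levels for an image given as a list of rows
    of integers in the range 0..max_value."""
    counts = [0] * levels
    for row in image:
        for pixel in row:
            # Map the pixel value to one of `levels` equal-width bins.
            counts[pixel * levels // (max_value + 1)] += 1
    total = sum(counts)
    if total == 0:
        raise ValueError("image has no pixels")
    # Dividing by the pixel count makes images of different sizes comparable.
    return [c / total for c in counts]

# A tiny 2x4 image: dark on the left, bright on the right.
image = [[0, 30, 200, 250],
         [10, 40, 220, 255]]
print(gray_histogram(image, levels=4))  # [0.5, 0.0, 0.0, 0.5]
```

The resulting list is exactly the kind of feature vector the text describes: a fixed-length numeric summary of the image, regardless of its size.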

In image analysis, the result is a feature vector. Computer vision solves broader problems; in particular, it builds models. For example, a three-dimensional model of a room can be constructed from a set of two-dimensional images. There is also a neighboring field, computer graphics, which works in the opposite direction and generates images from a model.

None of this is possible without knowledge and algorithms from a number of fields, such as pattern recognition and machine learning. In principle, image analysis can be seen as a special case of data analysis, an area of artificial intelligence. Neuropsychology is also a related discipline: to understand what is achievable and how we perceive pictures, it helps to understand how our brain solves these problems.

What is image analysis for?

There are huge archives and collections of images, and one of the most important tasks is indexing and searching them. Collections vary:

- Personal. For example, on vacation a person may take a couple of thousand photos, which then need to be dealt with somehow.

- Professional. These hold millions of photos, which also need to be organized and searched so that the required ones can be found.

- Collections of reproductions. These also contain millions of images. Many museums now have virtual versions for which the paintings are digitized, so we get pictures of pictures. For now, finding all reproductions of works by the same artist remains a utopian task. From the style, a person can guess that they are looking at, say, paintings by Salvador Dali. It would be great if a machine learned to do this.

What can be done with all these pictures? The simplest thing is to build sensible navigation over them by classifying them by topic: bears in one group, elephants in another, oranges in a third, so that the user can later navigate the collection comfortably.
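
One simple way to sort pictures into topics, once each picture is described by a feature vector, is a nearest-centroid classifier. The sketch below assumes hypothetical three-element features (say, fractions of orange, brown and gray pixels) and a few hand-labeled examples per topic; real systems use far richer features, and the lecture does not prescribe this particular method.

```python
import math

def train_centroids(examples):
    """examples: dict mapping a topic name to a list of feature vectors.
    Returns a dict mapping each topic to the mean (centroid) of its vectors."""
    centroids = {}
    for topic, vectors in examples.items():
        n = len(vectors)
        centroids[topic] = [sum(v[i] for v in vectors) / n
                            for i in range(len(vectors[0]))]
    return centroids

def classify(features, centroids):
    """Return the topic whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda topic: math.dist(features, centroids[topic]))

# Hypothetical features: fractions of orange, brown and gray pixels.
examples = {
    "oranges": [[0.9, 0.1, 0.0], [0.8, 0.2, 0.0]],
    "bears": [[0.1, 0.8, 0.1], [0.2, 0.7, 0.1]],
    "elephants": [[0.1, 0.3, 0.6], [0.2, 0.2, 0.6]],
}
centroids = train_centroids(examples)
print(classify([0.85, 0.1, 0.05], centroids))  # oranges
```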

A separate task is the search for duplicates. Of two thousand vacation photos, not that many are unique. We like to experiment, shooting with different shutter speeds, focal lengths and so on, which ultimately gives us many fuzzy duplicates. A duplicate search can also help detect illegal use of a photo that you once posted on the Internet.
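
Fuzzy duplicates are often found by comparing compact perceptual hashes instead of raw pixels. The sketch below uses the simple "average hash" technique: shrink the image to an 8x8 grid, mark each cell as brighter or darker than the average, and count differing bits. It is one common choice, not necessarily the approach meant in the lecture; the function names and the 5-bit threshold are hypothetical.

```python
def average_hash(image, size=8):
    """Average hash of a grayscale image given as a list of rows.
    Downscale to size x size by block averaging, then set one bit per cell:
    1 if the cell is brighter than the mean of all cells, else 0."""
    h, w = len(image), len(image[0])
    if h < size or w < size:
        raise ValueError("image must be at least size x size pixels")
    cells = []
    for i in range(size):
        r0, r1 = i * h // size, (i + 1) * h // size
        for j in range(size):
            c0, c1 = j * w // size, (j + 1) * w // size
            block = [image[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | int(value > mean)
    return bits

def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")

def is_near_duplicate(img_a, img_b, max_bits=5):
    # Hypothetical threshold: at most 5 of 64 bits may differ.
    return hamming(average_hash(img_a), average_hash(img_b)) <= max_bits

# A 16x16 gradient, the same picture shot slightly brighter, and its negative.
photo = [[r * 8 + c for c in range(16)] for r in range(16)]
brighter = [[p + 20 for p in row] for row in photo]
negative = [[255 - p for p in row] for row in photo]
print(is_near_duplicate(photo, brighter))  # True
print(is_near_duplicate(photo, negative))  # False
```

Because each bit only records "brighter or darker than average", a uniform exposure change leaves the hash untouched, which is what makes it tolerant to the shutter-speed experiments described above.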

Another interesting task is choosing the best photo. An algorithm can estimate which picture the user will like most. For a portrait, for example, the face should be well lit, the eyes open, the image sharp and so on. Modern cameras already have this feature.
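
Of the criteria listed above, sharpness is the easiest to score automatically. A widely used heuristic is the variance of the Laplacian: sharp images have strong local intensity changes, blurred ones do not. The sketch below covers only this one criterion and is an assumption on our part, not a description of how any particular camera works; the function names are hypothetical.

```python
def laplacian_variance(image):
    """Variance of the 4-neighbour discrete Laplacian over interior pixels.
    High values indicate crisp edges; flat or blurred images give low values."""
    h, w = len(image), len(image[0])
    responses = []
    for r in range(1, h - 1):
        for c in range(1, w - 1):
            lap = (image[r - 1][c] + image[r + 1][c]
                   + image[r][c - 1] + image[r][c + 1]
                   - 4 * image[r][c])
            responses.append(lap)
    if not responses:
        raise ValueError("image must be at least 3x3 pixels")
    mean = sum(responses) / len(responses)
    return sum((x - mean) ** 2 for x in responses) / len(responses)

def sharpest_index(images):
    """Index of the image with the highest sharpness score."""
    return max(range(len(images)), key=lambda i: laplacian_variance(images[i]))

flat = [[128] * 5 for _ in range(5)]  # featureless, like a badly blurred shot
checker = [[255 * ((r + c) % 2) for c in range(5)] for r in range(5)]  # crisp edges
print(sharpest_index([flat, checker]))  # 1
```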

Creating collages is also a search task: selecting photos that will look good next to each other.

Application of image analysis algorithms

Truly amazing things are happening in medicine now.

- Detection of anomalies. This is a well-known and largely solved problem. For example, from an X-ray image one tries to determine whether the patient is healthy, that is, whether the image differs from that of a healthy person. The image may show the entire body or only the circulatory system, in order to isolate abnormal vessels. Searching for cancer cells is also part of this task.

- Diagnosis of diseases. This is also done based on images. If there is a database of patient images, and it is known that one type of anomaly occurs in healthy people while another means that the person has cancer, then image similarity can help doctors make a diagnosis.

- Modeling the body and predicting the effects of treatment. This is what is now called the cutting edge. Although we are all similar, every organism is built individually: for example, our blood vessels may differ in location or thickness. If a torn vessel needs to be connected with a shunt, where to place it can be decided either from the doctor's expert opinion or by simulating the circulatory system and inserting the shunt into this model. This lets us see how the blood flow changes and predict how the patient will feel with different placements.
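
The similarity-based diagnosis described in the second item can be sketched as a k-nearest-neighbours lookup: find the past cases whose images are most similar to the new one and report their majority label. The two-element feature vectors, the labels and k = 3 below are hypothetical; a real system would need validated image features, and its output would only support, not replace, a doctor's judgment.

```python
import math
from collections import Counter

def nearest_cases(query, database, k=3):
    """database: list of (feature_vector, label) pairs from already diagnosed cases.
    Returns the k entries whose feature vectors are closest to the query."""
    return sorted(database, key=lambda entry: math.dist(query, entry[0]))[:k]

def suggest_label(query, database, k=3):
    """Majority label among the k most similar cases, as a hint for the doctor."""
    votes = Counter(label for _, label in nearest_cases(query, database, k))
    return votes.most_common(1)[0][0]

# Hypothetical feature vectors extracted from images, with known outcomes.
database = [
    ([0.10, 0.20], "normal"), ([0.15, 0.25], "normal"), ([0.20, 0.10], "normal"),
    ([0.90, 0.80], "abnormal"), ([0.85, 0.90], "abnormal"), ([0.80, 0.85], "abnormal"),
]
print(suggest_label([0.12, 0.20], database))  # normal
```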

Another application is security systems. Apart from using fingerprints and retina scans for authorization, there are still unsolved problems. One of them is the **detection of "suspicious" items**. The difficulty is that you cannot describe in advance what a suspicious item is. Another interesting task is **identifying suspicious behavior** of a person in a video surveillance system. Since it is impossible to provide examples of every possible kind of anomalous behavior, recognition is designed to detect deviations from what has been labeled as normal.
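
Learning only from "normal" examples and flagging deviations is known as novelty detection. A minimal sketch, assuming each observed person has already been reduced to a hypothetical feature vector (here walking speed and time spent near a door), fits a per-feature mean and standard deviation on normal data and flags anything more than three standard deviations away. The features and the threshold of 3 are illustrative assumptions.

```python
import statistics

def fit_normal_profile(normal_vectors):
    """Per-feature mean and standard deviation computed from normal examples only."""
    columns = list(zip(*normal_vectors))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return means, stds

def is_anomalous(vector, profile, threshold=3.0, eps=1e-9):
    """Flag the observation if any feature lies more than `threshold`
    standard deviations from the normal mean (eps avoids division by zero)."""
    means, stds = profile
    return any(abs(x - m) / (s + eps) > threshold
               for x, m, s in zip(vector, means, stds))

# Hypothetical features: walking speed (m/s), seconds spent near a door.
normal = [[1.0, 10.0], [1.2, 11.0], [0.9, 9.5], [1.1, 10.5], [1.0, 10.0]]
profile = fit_normal_profile(normal)
print(is_anomalous([1.05, 10.3], profile))  # False
print(is_anomalous([3.0, 10.0], profile))   # True
```

No example of suspicious behavior is needed to build the profile, which matches the point in the text: the system learns what "normal" looks like and reports whatever falls outside it.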

There are many other areas where image analysis is used: the military industry, robotics, filmmaking, computer game development and the automotive industry. In 2010, an Italian company equipped a truck with cameras, and, using maps and a GPS signal, the truck drove autonomously from Italy to Shanghai. The route passed through Siberia, not all of which is mapped. On that segment, a human-driven car traveling ahead passed the map on to the truck. The truck itself recognized traffic signs and pedestrians and worked out how to change lanes.

Difficulties

So why do we still drive cars ourselves, and why do video surveillance systems still need a human operator? One of the key problems is the semantic gap.

A person looking at a picture understands its meaning. A computer can determine pixel colors, extract textures and ultimately tell a brick wall from a carpet or recognize a person in a photo, but it cannot yet determine whether that person is happy. We ourselves cannot always tell. Automatically understanding whether students are bored during a lecture is the next level.

In addition, our brain is a unique system for understanding and processing what we see. It tends to see what we want to see, and how to teach a computer the same thing is an open question.

We are very good at generalizing. From an image, we can tell that we are looking at a lamp. We do not need to know every variant of objects in a class to assign a new example to it. This is harder for a computer, because lamps can look very different from one another.

There are a number of difficulties that image analysis has not yet overcome.

Human visual perception

Our brain often "completes" the picture and adds meaning to it. We can all see "something" or "someone" in the outline of a cloud. The visual system learns from experience. For example, Europeans find it hard to tell Asian faces apart, because they rarely encounter them. Their visual system has learned to capture differences between European faces, while Asian faces, which it has seen little of, seem to belong to "the same person." The reverse is also true. Colleagues from Palo Alto once developed a face detection algorithm together with Chinese colleagues. As a result, it found Asian faces remarkably well but could not see European ones.

In each picture, we first look for familiar images. For example, we see squares and circles here.

The eye can perceive a very wide range of brightness, but it does so with a trick. The visual system adapts to a brightness range on the order of 10^10. At any given moment, however, we can distinguish only a small range of brightness. The eye picks a point, adapts to the brightness at that point and distinguishes only a small range around it. Everything darker appears black, everything lighter appears white. But the eye moves very quickly and the brain completes the picture, so we see well.
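
The adaptation mechanism above can be illustrated with a toy model: brightness is compared on a logarithmic scale with the current adaptation level, only a fixed window of orders of magnitude is distinguishable, and everything outside that window clips to black or white. The window width of 4 orders of magnitude and the function name are illustrative assumptions, not physiological measurements.

```python
import math

def perceived_level(luminance, adaptation, window_decades=4.0):
    """Toy model of local brightness adaptation.
    The eye is adapted to `adaptation` and distinguishes only `window_decades`
    orders of magnitude around it. Returns 0.0 (black) .. 1.0 (white)."""
    if luminance <= 0 or adaptation <= 0:
        raise ValueError("luminance values must be positive")
    # Orders of magnitude between this luminance and the adaptation point.
    offset = math.log10(luminance / adaptation)
    level = offset / window_decades + 0.5
    # Everything outside the window clips to black or white.
    return min(1.0, max(0.0, level))

# Hypothetical luminances spanning eight orders of magnitude.
scene = [1e-3, 10.0, 100.0, 1e3, 1e5]
print([perceived_level(x, adaptation=100.0) for x in scene])  # adapted to a dim scene
print([perceived_level(x, adaptation=1e5) for x in scene])    # adapted to bright light
```

After "looking at" the bright value, everything dim collapses to black; moving the adaptation point back restores the detail, which is the role the text assigns to fast eye movements.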

Subjective brightness is the logarithm of physical brightness. If we change the brightness of a source linearly, the eye perceives the change logarithmically.
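
This logarithmic relationship is usually written as the Weber-Fechner law. In the notation below, which is ours rather than the lecture's, $S$ is subjective brightness, $I$ is physical intensity, $I_0$ is the threshold intensity at which the stimulus becomes noticeable, and $k$ is a constant:

$$
S = k \ln \frac{I}{I_0}, \qquad I \ge I_0 .
$$

It follows that a fixed linear step $\Delta I$ is perceived as a smaller and smaller change as $I$ grows, while multiplying the intensity by a fixed factor is always perceived as the same step:

$$
S(I + \Delta I) - S(I) = k \ln\left(1 + \frac{\Delta I}{I}\right), \qquad S(2I) - S(I) = k \ln 2 .
$$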

Two types of photoreceptors are responsible for visual perception: cones and rods. Cones are responsible for color perception and give a very sharp picture, but only when it is not too dark. This is called photopic vision. Scotopic vision works in the dark: the rods take over. They are smaller than cones and do not perceive color, so the picture is blurred.

Source: https://habr.com/ru/post/251161/
