Applied optimization practice and some history

“A thousand dollars for a single blow of the sledgehammer?!” cried the engine-room engineer, surprised but happy that his problem had been solved. “No, the blow costs $1; the rest is for knowing where, when, and how hard to strike,” answered the old master.

A sort of epigraph

In patent law there is a category of inventions in which what is patented is neither something a person or team invented (it may have been known for a long time, glue for example) nor a new way of achieving a familiar goal (say, sealing a wound against infection). Instead, the patent covers the use of a well-known substance for a purpose that is completely new for that substance, even though the purpose itself has long been known. “What if we seal the wound with BF-6 glue? Oh! It turns out it has bactericidal properties... and the wound breathes under it... and heals faster! We must stake our claim and file!”

In applied mathematics there are tools whose competent application makes it possible to solve a very wide range of problems. That is what I want to tell you about; perhaps it will nudge someone to look for non-trivial applications of algorithms, techniques, or programs they have already mastered. There will be few references to rigorous mathematics or formulas; this is mostly a qualitative discussion of the advantages and applications of the numerical methods that have played a large role in my life and became the basis for solving important professional problems.

Quite a long time ago (while I was preparing my thesis and working at a military space enterprise in Krasnodar), I developed tools for solving optimization problems: finding a local extremum of a multiparameter function subject to constraints on its parameters. Such methods are widely known; almost everyone, for example, is familiar with the method of steepest descent (gradient descent).
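For readers who have not met it, here is a minimal sketch of steepest (gradient) descent with box constraints on the parameters. The constraints are handled by projection (clipping each coordinate back into its bounds after every step); that choice, the fixed step size, and the function name are assumptions for illustration, since the article does not describe how its package handles constraints.

```python
def projected_gradient_descent(grad, x0, lower, upper, step=0.1, iters=500):
    """Minimize a smooth function subject to lower <= x <= upper.

    After each steepest-descent step the point is projected back
    into the box by clipping every coordinate to its bounds.
    (Hypothetical helper, not the article's code.)
    """
    x = [min(max(xi, lo), hi) for xi, lo, hi in zip(x0, lower, upper)]
    for _ in range(iters):
        g = grad(x)
        # Step against the gradient, i.e. in the direction of steepest descent.
        x = [xi - step * gi for xi, gi in zip(x, g)]
        # Project back onto the feasible box.
        x = [min(max(xi, lo), hi) for xi, lo, hi in zip(x, lower, upper)]
    return x


# f(x, y) = (x - 3)^2 + (y + 1)^2, constrained to 0 <= x, y <= 2.
# The unconstrained minimum (3, -1) lies outside the box, so the
# constrained minimum sits on the boundary at (2, 0).
grad_f = lambda p: [2 * (p[0] - 3), 2 * (p[1] + 1)]
x_opt = projected_gradient_descent(grad_f, [1.0, 1.0], [0.0, 0.0], [2.0, 2.0])
print(x_opt)  # prints [2.0, 0.0]
```

A fixed step keeps the sketch short; practical implementations usually add a line search or an adaptive step.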


The software package (first in Fortran, then Pascal/Modula-2; the algorithm has now come to rest in the widely available, convenient, and versatile VBA/Excel) implemented several well-known extremum search methods that complemented each other well on a very wide class of problems. They were easily interchangeable and could be chained (each method started from the optimum found by the previous one, which allowed a wide range of initial parameter values). Together they quickly located the minimum and “finished off” the objective function, with good confidence that the extremum found was global.
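The article does not name the methods in the package, so the following is only a sketch of the chaining idea, with two stand-in methods chosen as an assumption: a uniform random search for coarse global exploration, followed by a compass (coordinate pattern) search that starts from the best point the first stage found. All function names are hypothetical.

```python
import random


def random_search(f, lower, upper, samples=2000, seed=0):
    """Stage 1: coarse global exploration by uniform sampling in the box."""
    rng = random.Random(seed)
    best_x, best_f = None, float("inf")
    for _ in range(samples):
        x = [rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
        fx = f(x)
        if fx < best_f:
            best_x, best_f = x, fx
    return best_x


def compass_search(f, x0, lower, upper, step=0.5, tol=1e-8):
    """Stage 2: local refinement. Probe +/- step along each coordinate,
    accept any improvement, halve the step when no probe improves."""
    x, fx = list(x0), f(x0)
    while step > tol:
        improved = False
        for i in range(len(x)):
            for direction in (+1, -1):
                trial = list(x)
                trial[i] = min(max(trial[i] + direction * step, lower[i]), upper[i])
                ft = f(trial)
                if ft < fx:
                    x, fx, improved = trial, ft, True
        if not improved:
            step /= 2
    return x


def chained_minimize(f, lower, upper):
    """Each stage starts from the optimum found by the previous one."""
    x = random_search(f, lower, upper)
    return compass_search(f, x, lower, upper)


# Two minima in x: a shallower one near x = 1.97 and a deeper one near x = -2.03.
f = lambda p: (p[0] ** 2 - 4) ** 2 + p[0] + (p[1] - 1) ** 2
x_best = chained_minimize(f, [-3.0, -3.0], [3.0, 3.0])
print(x_best)  # close to (-2.03, 1.0), the deeper minimum
```

The random stage makes it likely that the local stage starts in the basin of the global minimum; the local stage then supplies the precision that random sampling alone cannot.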

For example, this suite of programs was used to build a model of an autonomous power system consisting of solar arrays, storage batteries (at the time, only long-life nickel-hydrogen batteries), payloads, and control systems (array orientation and energy conversion for the loads). The whole system was run through a given period of time under the external weather conditions of a given region. For this purpose I also built a weather model using the “statistical bootstrap” method, which I rediscovered independently, 7 years after Bradley Efron. The models of the real parameters of silicon wafers (and of storage cells) served as the initial “bricks” of the solar-array model (and of the storage-battery model), taking into account their statistical spread in production and the assembly scheme. The model of the autonomous system made it possible to estimate the size and configuration of the solar arrays and storage batteries sufficient “to solve the assigned tasks under the given operating conditions of the system” (here I could practically hear the wooden officialese of the colonel from the First Department, the enterprise's security office).
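The article names the statistical bootstrap but does not describe the weather model itself. One common way to apply the bootstrap to a weather series, assumed here purely for illustration, is the moving-block bootstrap: synthetic sequences are assembled from randomly chosen runs of consecutive observed days, drawn with replacement. The function name and the sample data below are hypothetical.

```python
import random


def bootstrap_weather(daily_records, n_days, block=3, seed=None):
    """Generate a synthetic weather sequence by resampling, with
    replacement, contiguous blocks of observed days (moving-block bootstrap).

    Resampling blocks rather than single days keeps some day-to-day
    persistence, such as runs of overcast days. Requires
    len(daily_records) >= block.
    """
    rng = random.Random(seed)
    max_start = len(daily_records) - block
    out = []
    while len(out) < n_days:
        start = rng.randint(0, max_start)  # both bounds inclusive
        out.extend(daily_records[start:start + block])
    return out[:n_days]


# Hypothetical daily insolation record, kWh/m^2 per day (illustrative only).
observed = [5.1, 4.8, 1.2, 0.9, 3.5, 6.0, 5.7, 2.2, 4.4, 5.9]
synthetic_year = bootstrap_weather(observed, 365, block=3, seed=1)
```

Running many such synthetic years through the system model gives a distribution of outcomes rather than a single weather scenario.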

Measuring the silicon photocell wafers at the end of the production line and identifying the parameters of a single-cell model by optimization allowed us to build an array of cell parameters describing the statistical spread of the current solar-cell production process.

The mathematical model of the current-voltage characteristic (I-V curve) of a solar cell is well known and has only a few parameters. Fitting the parameters of the I-V model with the minimum standard deviation is a classic problem of minimizing an objective function (the sum of squared deviations over the I-V curve).
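The article does not write the model out. Assuming it is the widely used five-parameter single-diode model (an assumption, not stated in the source), the model, the fitted parameters $\theta$, and the least-squares objective over $N$ measured points $(V_k, I_k)$ would be:

$$
I = I_{ph} - I_0\left[\exp\left(\frac{V + I R_s}{n V_T}\right) - 1\right] - \frac{V + I R_s}{R_{sh}}, \qquad V_T = \frac{kT}{q}
$$

$$
\theta = (I_{ph},\, I_0,\, n,\, R_s,\, R_{sh}), \qquad S(\theta) = \sum_{k=1}^{N}\left[I_k - I(V_k;\theta)\right]^2 \to \min_{\theta}
$$

Here $I_{ph}$ is the photocurrent, $I_0$ the diode saturation current, $n$ the ideality factor, $R_s$ and $R_{sh}$ the series and shunt resistances, and $V_T$ the thermal voltage. Because $I$ appears on both sides, each evaluation of $I(V_k;\theta)$ needs its own small root-finding step (for example, Newton's method), which makes every evaluation of $S(\theta)$ relatively expensive. Minimizing $S$ is equivalent to minimizing the RMS deviation $\sqrt{S/N}$.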

The parameters of the finished solar panel obtained by the Monte Carlo method reflected the possible stochastic scatter of its characteristics for a given cell interconnection scheme. Building a model of the array from solar-cell models made it possible, in particular, to estimate the array's power losses from damage (the war in Afghanistan was in full swing, and meteorites fly in space) and from partial shading (snow, foliage), which gives rise to dangerous breakdown voltages and requires bypass (shunt) diodes to be placed correctly to reduce average power losses. All of these were already stochastic problems, requiring an enormous amount of computation with different scatter in the initial parameters of the “bricks”, different array wiring diagrams, and different external factors. Starting every such calculation from the primary identified solar-cell model was too costly, so a chain of models was built: the primary silicon wafer, the block from which the whole array was assembled (when the wiring diagram had to be analyzed), and the array itself. The parameters of these models were identified by optimization, and the set of array elements for each calculation was generated by the Monte Carlo method, taking stochastic variation into account (parameter array + statistical bootstrap).
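A minimal sketch of the “parameter array + statistical bootstrap” Monte Carlo step, under a deliberately crude electrical model that is an assumption and not the author's model: each simulated cell is drawn with replacement from a measured parameter array, a series string is assumed to carry the current of its weakest cell, and bypass diodes and reverse-bias breakdown are ignored. The function name and data are hypothetical.

```python
import random
import statistics


def simulate_array(cell_params, n_series, n_parallel, trials=1000, seed=None):
    """Monte Carlo estimate of array output power under cell-to-cell scatter.

    cell_params: list of (i_mp, v_mp) pairs measured on individual cells
    (the parameter array). Each simulated cell is drawn from it with
    replacement, i.e. a bootstrap sample.

    Crude mismatch model (assumption): a series string carries the current
    of its weakest cell, each cell contributes its own v_mp, and parallel
    strings simply add power. Requires trials >= 2 for the stdev.
    """
    rng = random.Random(seed)
    powers = []
    for _ in range(trials):
        total = 0.0
        for _ in range(n_parallel):
            string = [rng.choice(cell_params) for _ in range(n_series)]
            current = min(i for i, _ in string)
            total += current * sum(v for _, v in string)
        powers.append(total)
    return statistics.mean(powers), statistics.stdev(powers)


# Hypothetical measured cell parameters (i_mp in A, v_mp in V), illustrative only.
measured = [(2.9, 0.50), (3.0, 0.51), (3.1, 0.49), (2.7, 0.50), (3.0, 0.52)]
mean_p, std_p = simulate_array(measured, n_series=36, n_parallel=4, trials=500, seed=42)
print(f"array power: {mean_p:.1f} W +/- {std_p:.1f} W")
```

Even this toy version shows the effect the text describes: because the weakest cell limits each string, the mean array power falls below what the average cell would suggest.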

The problems being solved grew more complex. Initially, the idea was to rewrite the most computationally intensive parts of the optimization methods in assembler, but as computers became faster, first the SM-series minicomputers (jobs ran overnight) and then PCs (today the most complex tasks take an hour or two), the need to optimize the optimization program (a masterpiece of tautology) disappeared. Moreover, in most cases it is the objective functions themselves that are computationally intensive.

Here I should return to the universality of optimization methods, already touched on above, and relate them to other methods of numerical analysis. The main applications of the tools developed are:

- identifying the parameters of a theoretical model from initial (experimental or computed) data;

- approximating data, which is the same identification, but of the parameters of a suitable (in general, abstract) formula rather than of a physical model, for smoothing, approximation, interpolation, and extrapolation;

- numerically solving systems of arbitrary (!) equations.
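The third item works by turning “solve $r_k(x) = 0$ for all $k$” into “minimize $\sum_k r_k(x)^2$”, whose global minimum is zero exactly at a solution. A minimal sketch, assuming a simple compass search as the minimizer (the article does not say which of its methods is used for this); the function names and the example system are hypothetical.

```python
import math


def compass_search(f, x0, step=0.5, tol=1e-10, max_iter=100000):
    """Derivative-free coordinate (compass) search: probe +/- step along
    each axis, accept any improvement, halve the step when none is found."""
    x, fx = list(x0), f(x0)
    for _ in range(max_iter):
        if step <= tol:
            break
        improved = False
        for i in range(len(x)):
            for d in (step, -step):
                trial = list(x)
                trial[i] += d
                ft = f(trial)
                if ft < fx:
                    x, fx, improved = trial, ft, True
        if not improved:
            step /= 2
    return x


def solve_system(residuals, x0):
    """Solve r_k(x) = 0 for all k by minimizing the sum of squared residuals.
    A minimum with a nonzero sum signals no solution near x0
    (or a spurious local minimum)."""
    def objective(x):
        return sum(r(x) ** 2 for r in residuals)
    return compass_search(objective, x0)


# A non-polynomial example system: x^2 + y^2 = 4 and e^x + y = 1.
system = [
    lambda v: v[0] ** 2 + v[1] ** 2 - 4,
    lambda v: math.exp(v[0]) + v[1] - 1,
]
sol = solve_system(system, [-2.0, 1.0])
print(sol, [r(sol) for r in system])
```

The same objective-function form covers the first two items as well: for identification or approximation, the residuals are simply the differences between measured and computed values.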

It may not seem like much, but this class of problems covers a wide range of real applied mathematical problems in industry and science; the autonomous-system model above is evidence of this.

The arsenal of classical numerical analysis includes efficient direct methods for some standard problems (for example, systems of low-degree polynomial equations), and dedicated program libraries have been developed for them. However, ready-made libraries become ineffective when the number of parameters or unknowns exceeds 4-5 (and the computational cost grows catastrophically), and/or when the equations are far from classical (not polynomial, etc.). And in real life things are usually even more complicated, since the initial data contain noise and measurement errors.

Let me describe in more detail another, relatively recent, real-life application of the optimization toolkit.

For a certain thesis, a theoretical model had to be identified from experimental (field) data; the model was supposed to describe the data and yield practical recommendations for improving the efficiency of the related technological process. Every percentage point of process improvement justified repeated first-class trips halfway around the world to field trials (and nearby beaches), and the purchase of a luxury Lexus SUV just for driving around the fields (complete with a pretty long-legged blonde who can do more than cook borscht :). The difficulty was that there were several candidate models, and the applicable one had to be chosen based on the identification and on how well each reproduced the experimental data. These models had from 6 to 9 parameters, and, by the law of the buttered sandwich (Murphy's law), the potentially most useful model was also the most complex.

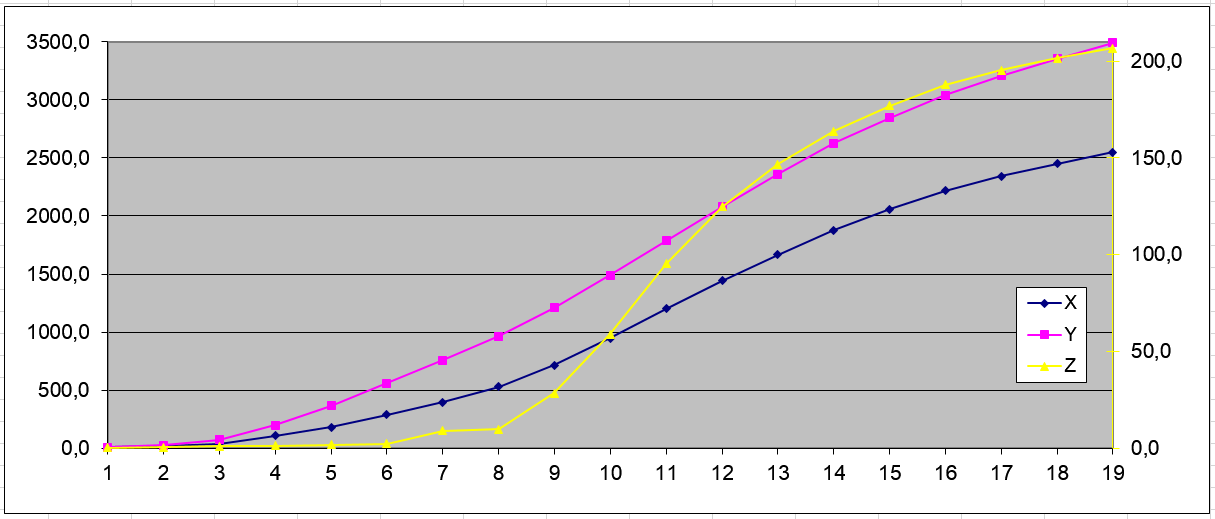

Below are the initial experimental data (X, Y, Z), 19 measurements:

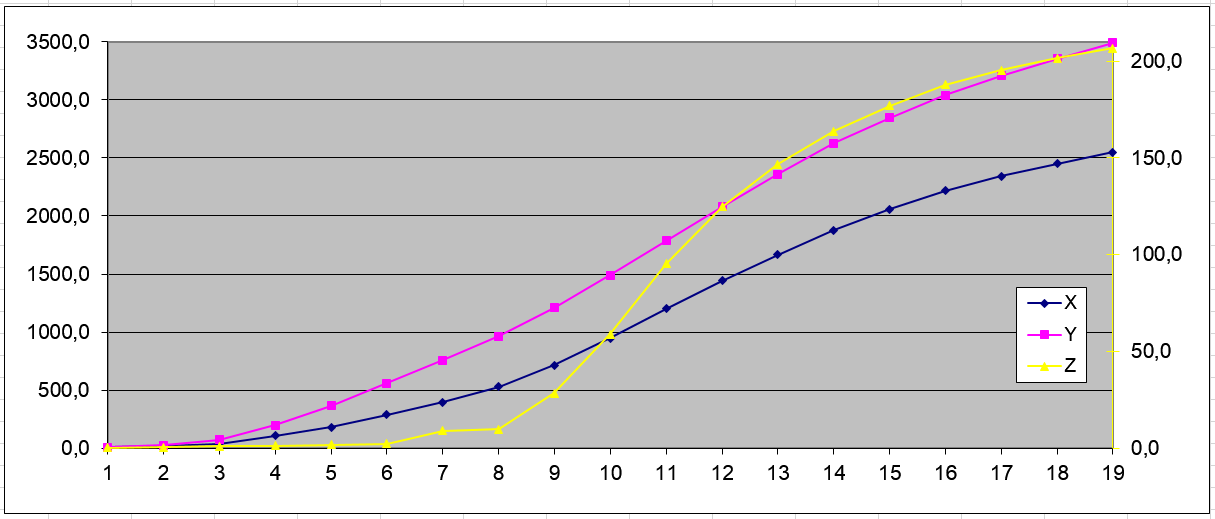

And here is an example of the curve fit (U3 is the experiment, U3R the model calculation) when identifying the most complex, nine-parameter model (a1...a9 are the model parameters):

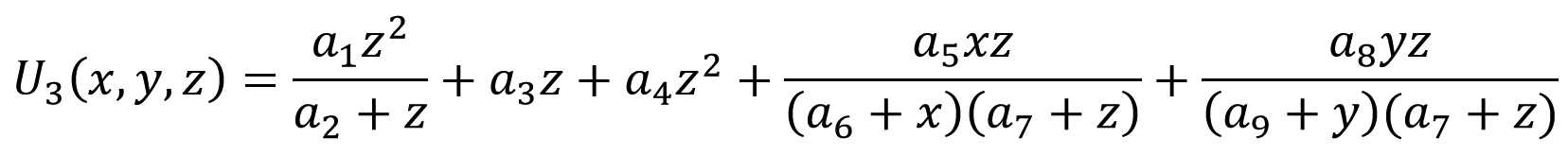

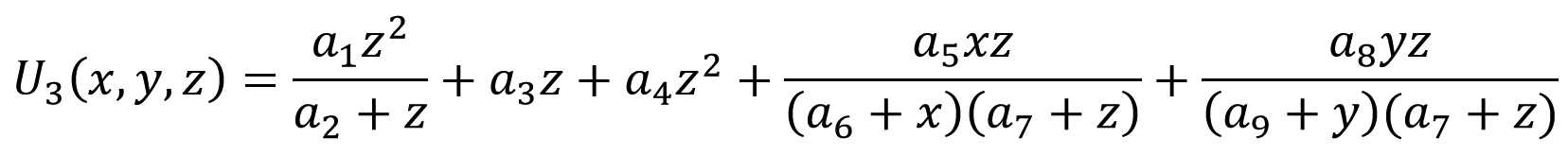

The formula of this model is:

If you need this kind of work, I could run calculations on the data you provide and send you the results by e-mail so you can judge the benefits of the mathematical apparatus I have developed.

This range of tasks is in demand in science, in industry, in preparing dissertations and serious diploma projects, in data processing, and in model building. Moreover, any deception is ruled out, because anyone can evaluate the accuracy actually achieved from the results provided.

Describe your data-processing problems and I will find a way to solve them; the program ought to be put to work!

I would be interested in an outside view of what I have described and, perhaps, in new areas of application. I would appreciate useful comments and cooperation.

Source: https://habr.com/ru/post/250855/
