How to live with Docker, or why it is better with it than without it

This article is for those who already know what Docker is and what it is for, but do not yet know what to do with it next. The article is advisory in nature and does not claim to be a "best practice".

So, you may have gone through the Docker tutorial. Docker seems simple and useful, but you still do not know how it can help with your projects.


Usually there are three problems with deployment:

- How do I deliver the code to the server?

- How do I run the code on the servers?

- How can I ensure that the environment in which my code runs and works is the same?

How Docker helps with this is explained below. But let's start not with Docker, but with how we lived without it.

How do I deliver the code to the server?

The first thing that comes to mind is to bundle everything into a package. Whether it is RPM or DEB does not matter.

Packages

The system already has a package manager that can manage files. Usually we expect the following from it:

- Deliver the project files to the server from some repository.

- Satisfy dependencies (libraries, interpreter, etc.)

- Remove the files of the previous version and install the files of the current one.

In practice this works, for a while... Imagine the situation. You are a full-time DevOps engineer, that is, you spend all day automating the routine work that admins used to do. You have a small fleet of machines, and you do not keep a zoo of distributions on them. Say you love CentOS, and it is everywhere. But here comes the problem: for one of the projects you need to replace libyy (which was a quest in itself to find for CentOS) with libxx. Why replace it, you do not know. Most likely the developer, clumsy as usual, wrote the code for libxx only, and it does not work with libyy. Well, we take vim in hand and write RPM specs for libxx, and in parallel for the new versions of the libraries it needs to build. After about 2-3 days of this exercise, you end up with one of these results:

- libxx builds, everything is fine, the task is done

- libxx will not install because, say, libyy is installed on the system and has to be removed, but something else on the server depends on it

- libxx will not build: a required library is missing, and it conflicts with some other library

The overall chance of completing the task is about 30% (although in practice it is always option 2 or 3). And while you can somehow deal with the second situation (there are virtual machines, or LXC), the third will either add one more beast to your menagerie or mean installing into /usr/local again (hello, the 2000s and Slackware), etc.

This situation is generally called dependency hell. There are many ways to solve it, from a simple chroot to complex projects such as NixOS.

Why am I telling you this? Oh, right. As soon as you start packaging everything and installing it all on the same system, you will inevitably end up in dependency hell. It can be handled in different ways: either set up a separate repository for each project, plus new virtual or physical hardware, or introduce restrictions. By restrictions I mean a set of company policies like: "We only use libyy-vN.N here. Anyone who does not like it can take their resignation letter to HR." I mentioned N.N for a reason: some libraries cannot coexist on one server in two versions. In the end, restrictions achieve nothing. Business needs, and common sense, will quickly tear them all down.

Decide for yourself what matters more: shipping a product feature that depends on updating a third-party component, or giving DevOps time to enjoy the homogeneity of the environment.

Deploying via Git

Good for interpreted languages, but not suitable for anything that has to be compiled. And it does not get rid of dependency hell either.

How do I run the code on the servers?

Many answers have been invented for this question: daemontools, runit, supervisor. Let's assume this question has at least one correct answer.
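What daemontools, runit and supervisor have in common is that they restart a process when it exits. A toy sketch of that core idea in POSIX shell, assuming a hypothetical `supervise` helper that gives up after a fixed number of runs (the real tools add logging, back-off, signal handling and much more):

```shell
# supervise MAX CMD [ARGS...]
# Hypothetical toy supervisor: run CMD and, whenever it exits, start it again,
# up to MAX runs in total. Returns the exit status of the last run.
supervise() {
    max=$1
    shift
    runs=0
    while :; do
        "$@"
        rc=$?
        runs=$((runs + 1))
        echo "supervise: '$*' exited with status $rc (run $runs of $max)" >&2
        if [ "$runs" -ge "$max" ]; then
            return "$rc"
        fi
        # Pause between restarts so a crashing program does not spin the CPU.
        sleep "${SUPERVISE_DELAY:-1}"
    done
}
```

For example, `supervise 5 ./superproject-run.sh` would run the script up to five times, waiting `SUPERVISE_DELAY` seconds (1 by default) between runs.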

How can I ensure that the environment in which my code runs and works is the same?

Imagine a mundane situation: you are still the same DevOps engineer, and a task comes in to deploy the Eniac project (the name came from a project name generator) on N servers, where N is more than 20.

You pull Eniac from Git. It is built on a technology you know (Django / RoR / Go / …)

You sort out the issues. Moving on: you manage to run Eniac on one server, but it does not work on the remaining N-1.

How can Docker help me?

First, let's look at how to use Docker as a means of delivering projects to the hardware.

Private Docker Registry

What is a Docker Registry? It is what stores your images, much like a web server that serves deb packages together with their metadata files. You can run a registry on a virtual machine in Amazon and keep the data in Amazon S3, or run it on your own infrastructure and keep the data in Ceph or on a file system.

The Docker registry itself can also be run in Docker. It is just a Python application (apparently they did not manage to write Go libraries for all the storage back ends, of which the registry supports many).
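The Python registry mentioned here has since been replaced by a Go implementation distributed as the official `registry:2` image. A minimal sketch of running it in Docker, assuming a hypothetical `start_registry` helper, an example host directory `/srv/registry` for the image data and host port 5000; setting `DOCKER=echo` prints the command instead of running it:

```shell
# Hypothetical helper: start a private registry container.
# DOCKER is left unquoted on purpose so it can be overridden (e.g. DOCKER=echo).
DOCKER=${DOCKER:-docker}

start_registry() {
    data_dir=${1:-/srv/registry}   # assumed host directory for image data
    port=${2:-5000}                # assumed host port
    # registry:2 listens on port 5000 inside the container and keeps its
    # filesystem storage under /var/lib/registry by default.
    $DOCKER run -d --name registry --restart=always \
        -p "$port:5000" \
        -v "$data_dir:/var/lib/registry" \
        registry:2
}
```

Storage back ends such as S3 are selected in the registry's own configuration rather than on this command line.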

We will cover how our project gets into this storage later.

How do I run the code on the servers?

Now let's compare how we deliver the project with an ordinary package manager, apt for example:

    apt-get update && apt-get install -y mysuperproject

This command is suitable for automation: it does everything quietly, and afterwards we only need to restart our application.

Now an example for Docker:

    docker run -d 192.168.1.1:80/reponame/mysuperproject:1.0.5rc1 superproject-run.sh

And that's all. Docker will download everything it needs from your Docker registry at 192.168.1.1, listening on port 80 (I have nginx there), and then run superproject-run.sh inside a container started from the image.
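An image reference like the one above packs three things together: the registry host, the repository path and the tag. A sketch of splitting it, assuming a hypothetical `parse_image_ref` helper that follows Docker's convention that the first path component names a registry only if it contains a dot or a colon or is `localhost` (digest references are not handled):

```shell
# Hypothetical helper: split an image reference into registry, repository and tag.
parse_image_ref() {
    ref=$1
    last=${ref##*/}                  # part after the last "/": name[:tag]
    case $last in
        *:*) tag=${last##*:}; name=${ref%:*} ;;
        *)   tag=latest;      name=$ref ;;
    esac
    first=${name%%/*}                # first path component
    # The first component is a registry host only if it contains "." or ":"
    # or is "localhost"; otherwise Docker Hub (docker.io) is implied.
    case $name in
        */*)
            case $first in
                *.*|*:*|localhost) registry=$first;    repo=${name#*/} ;;
                *)                 registry=docker.io; repo=$name ;;
            esac
            ;;
        *)  registry=docker.io; repo=$name ;;
    esac
    echo "registry=$registry repo=$repo tag=$tag"
}
```

For example, `parse_image_ref 192.168.1.1:80/reponame/mysuperproject:1.0.5rc1` prints `registry=192.168.1.1:80 repo=reponame/mysuperproject tag=1.0.5rc1`.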

What if the image contains two programs that we run, and in the next release one of them broke while the other, on the contrary, gained features that need to be rolled out? No problem:

    docker run -d 192.168.1.1:80/reponame/mysuperproject:1.0.10 superproject-run.sh
    docker run -d 192.168.1.1:80/reponame/mysuperproject:1.0.5rc1 broken-program.sh

Now we have two different containers, and inside them there can be completely different versions of system files, libraries, etc.
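The same idea can be kept as a small list of which program runs from which tag. A sketch, assuming a hypothetical `run_pinned` helper that reads `<tag> <command>` lines and starts one detached container per line; `DOCKER` and `IMAGE` are assumed overridable variables:

```shell
# Hypothetical sketch: start one container per program, each pinned to the
# image tag known to work for it. Input lines: "<tag> <command>".
DOCKER=${DOCKER:-docker}
IMAGE=${IMAGE:-192.168.1.1:80/reponame/mysuperproject}

run_pinned() {
    while read -r tag cmd; do
        [ -n "$tag" ] || continue            # skip blank lines
        # </dev/null keeps the command from consuming the rest of the list.
        $DOCKER run -d "$IMAGE:$tag" "$cmd" </dev/null
    done
}
```

Usage: `printf '%s\n' '1.0.10 superproject-run.sh' '1.0.5rc1 broken-program.sh' | run_pinned` starts the two containers shown above.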

How can I ensure that the environment in which my code runs and works is the same?

With ordinary virtual machines, or with real hardware in general, you have to keep track of everything yourself, or learn Chef / Ansible / Puppet or a similar solution. Otherwise there is no guarantee that xxx-utils is the same version on all N servers.

With Docker you do not need to do anything: just deploy the containers from the same images.
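Running the same image everywhere is also easy to verify. A sketch, assuming SSH access to each server and a container with the hypothetical name `mysuperproject`: `docker inspect --format '{{.Image}}'` prints the ID of the image a container was created from, and the hypothetical `report_drift` helper lists the hosts whose ID differs from the first host's:

```shell
# Print "<host> <image id>" for one container on each host.
# Assumes SSH access; hosts where the lookup fails are reported as MISSING.
collect_image_ids() {
    container=$1
    shift
    for host in "$@"; do
        id=$(ssh "$host" "docker inspect --format '{{.Image}}' $container" </dev/null) || id=MISSING
        echo "$host $id"
    done
}

# Read "<host> <image id>" lines and print the hosts whose image differs from
# the first host's. Returns 1 if any drift was found, 0 otherwise.
report_drift() {
    expected=
    drift=0
    while read -r host id; do
        [ -n "$host" ] || continue
        if [ -z "$expected" ]; then
            expected=$id                 # the first host is the reference
        elif [ "$id" != "$expected" ]; then
            echo "$host $id"
            drift=1
        fi
    done
    return "$drift"
}
```

Usage: `collect_image_ids mysuperproject web1 web2 web3 | report_drift`, where the host names are placeholders.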

Building containers

For your code to get into a Docker registry (your own or the public one, it does not matter), you have to push it there.

You can have the developer build the container themselves:

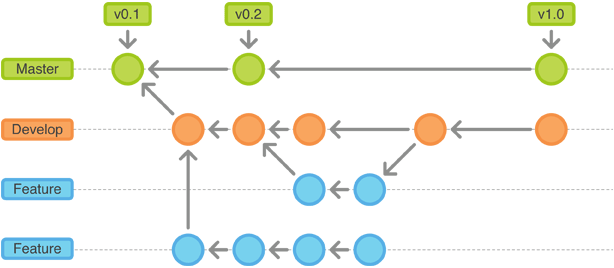

- The developer writes a Dockerfile

- Tags the built image with `docker tag $imageId 192.168.1.1:80/reponame/mysuperproject:1.0.5rc1`

- And runs `docker push 192.168.1.1:80/reponame/mysuperproject:1.0.5rc1`
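These manual steps can be wrapped in a script. A sketch, assuming a hypothetical `release` helper. It passes the full registry name to `docker build -t`, which tags the image at build time, so no separate `docker tag` step is needed. Setting `DOCKER=echo` gives a dry run:

```shell
# Hypothetical release helper: build the image under its registry name and push it.
DOCKER=${DOCKER:-docker}
IMAGE=${IMAGE:-192.168.1.1:80/reponame/mysuperproject}

release() {
    version=$1
    context=${2:-.}                      # directory containing the Dockerfile
    if [ -z "$version" ]; then
        echo "usage: release VERSION [CONTEXT]" >&2
        return 2
    fi
    # Push only if the build succeeded.
    $DOCKER build -t "$IMAGE:$version" "$context" &&
        $DOCKER push "$IMAGE:$version"
}
```

Usage: `release 1.0.5rc1` from the project directory.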

Or you can build it automatically. I did not find anything that builds containers, so I reinvented the wheel and wrote a tool called lumper.

The idea is as follows:

- Install the components on the server (three in total): lumper and RabbitMQ

- lumper listens on a port for GitHub hooks

- Configure a GitHub webhook pointing at your lumper installation

- The developer runs `git tag v0.0.1 && git push --tags`

- The developer receives an email with the build results
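lumper itself is a Python application that uses RabbitMQ. The following is not its code. It only sketches the build step such a service might perform when GitHub reports a tag push. It assumes GitHub's push event, whose `ref` field looks like `refs/tags/v0.0.1`, and hypothetical helpers `tag_from_ref` and `build_on_tag`:

```shell
# Hypothetical build step for a lumper-like service (not lumper's actual code).
DOCKER=${DOCKER:-docker}

# Print the version for a tag ref ("refs/tags/v0.0.1" -> "0.0.1"); fail for other refs.
tag_from_ref() {
    case $1 in
        refs/tags/v*) echo "${1#refs/tags/v}" ;;
        refs/tags/*)  echo "${1#refs/tags/}" ;;
        *)            return 1 ;;
    esac
}

# build_on_tag REF REPO_URL IMAGE
build_on_tag() {
    ref=$1 repo_url=$2 image=$3
    version=$(tag_from_ref "$ref") || return 0   # branch pushes are ignored
    workdir=$(mktemp -d) || return 1
    # git clone --branch also accepts a tag name.
    git clone --quiet --depth 1 --branch "${ref#refs/tags/}" "$repo_url" "$workdir" &&
        $DOCKER build -t "$image:$version" "$workdir" &&
        $DOCKER push "$image:$version"
    rc=$?
    rm -rf "$workdir"
    return "$rc"        # the caller would e-mail the result to the developer
}
```

A webhook handler could call, for example, `build_on_tag refs/tags/v0.0.1 https://github.com/user/project.git 192.168.1.1:80/reponame/mysuperproject`, where the repository URL is a placeholder.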

Dockerfile

There is really nothing difficult about it. For example, here is how lumper is built with a Dockerfile:

    FROM ubuntu:14.04
    MAINTAINER Dmitry Orlov <me@mosquito.su>
    RUN apt-get update && \
        apt-get install -y python-pip python-dev git && \
        apt-get clean
    ADD . /tmp/build/
    RUN pip install --upgrade --pre /tmp/build && rm -fr /tmp/build
    ENTRYPOINT ["/usr/local/bin/lumper"]

Here FROM names the image this one is based on. Next, RUN executes the commands that prepare the environment. ADD places the code in /tmp/build, and ENTRYPOINT sets the container's entry point. So when you run `docker run -it --rm mosquito/lumper --help`, you will see the entry point's --help output.

Conclusion

There are several ways to run containers on servers: you can write an init script, or look at Fig, which can deploy entire solutions made of your containers.
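An init script can be as small as a start/stop wrapper around `docker run`. A sketch, assuming a hypothetical `ctl` function, the container name `mysuperproject` and the image tag from the examples above. On a real system this logic would live in an init script or service definition:

```shell
# Hypothetical init-style wrapper around one container.
DOCKER=${DOCKER:-docker}
NAME=${NAME:-mysuperproject}
IMAGE=${IMAGE:-192.168.1.1:80/reponame/mysuperproject:1.0.10}

ctl() {
    case $1 in
        start)
            # Remove a stale stopped container with the same name
            # (docker rm refuses to remove a running one), then start afresh.
            $DOCKER rm "$NAME" >/dev/null 2>&1
            $DOCKER run -d --name "$NAME" "$IMAGE" superproject-run.sh
            ;;
        stop)
            $DOCKER stop "$NAME" && $DOCKER rm "$NAME"
            ;;
        restart)
            ctl stop
            ctl start
            ;;
        *)
            echo "usage: ctl {start|stop|restart}" >&2
            return 2
            ;;
    esac
}
```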

I also deliberately skipped port forwarding and container IP routing.

Also, although the technology is young, Docker has a huge community and excellent documentation. Thank you for your attention.

Source: https://habr.com/ru/post/250469/
