Cappasity 3D Scan: 3D scanning using Intel RealSense. Development experience

In 2014, we decided to launch a software start-up that would build on the five years of experience in 3D technology we had gained in game development. 3D reconstruction had interested us for a long time, and throughout 2013 we experimented extensively with other companies' solutions; that is how our desire to build our own gradually grew. As you have probably guessed, the dream came true. Below is the story of how it happened, including our relationship with Intel RealSense.

We had looked into 3D reconstruction earlier for our own needs: we were searching for the best way to produce 3D models, and using 3D capture solutions seemed quite logical. A handheld scanner seemed the most affordable option. In the advertising everything looked simple, but in practice the process took a lot of time: tracking was often lost and we had to start over from the very beginning. What upset us most, though, was the blurry texture. In short, content like this was not suitable for our games.

We looked into photogrammetry and realized that we did not have a spare $100,000. In addition, it required special skills to set up the cameras, followed by lengthy post-processing of the high-poly data. It turned out that we would not save anything; on the contrary, given our volume of content production, everything would become much more expensive. It was at that moment that the idea of trying something different came up.


From the start, we worked out the requirements for a system that would be convenient for content production:

- A stationary setup: calibrate it once and start shooting.

- Instant capture: one click and you're done.

- Support for SLR cameras to get photorealistic textures.

- Independence from the sensor type: technology does not stand still, and new sensors keep appearing.

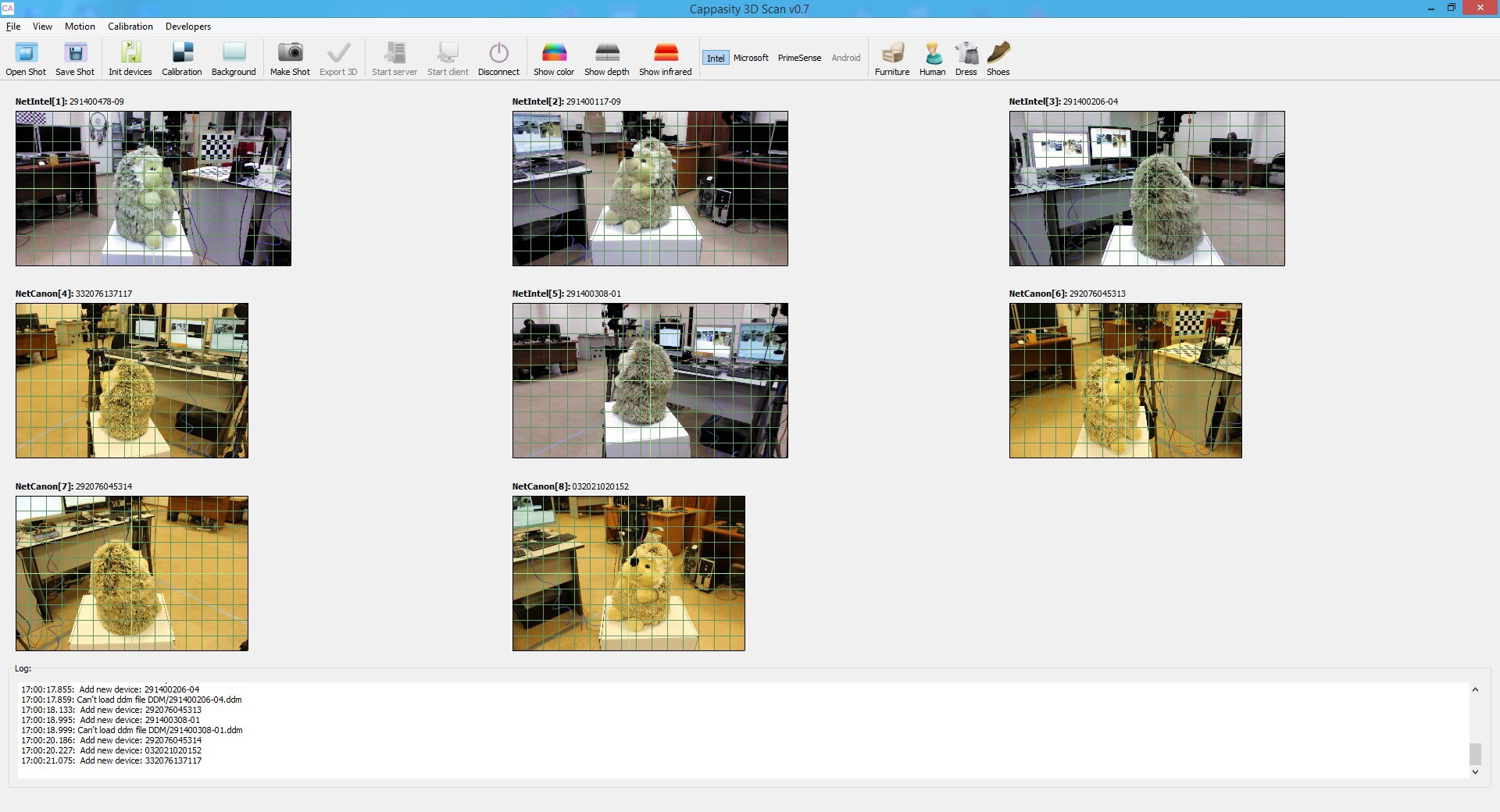

- A fully client-server architecture, where each client is a computer with a sensor.

- No limit on the number of devices: we may need to capture objects of very different sizes in a single shot, so any limit would cause problems.

- The ability to send the model to a 3D printer.

- A plug-in for viewing models in the browser and an SDK for integration into mobile applications.

That was our dream system. We found nothing comparable, so we wrote everything ourselves. It took almost a year, and in autumn 2014 we were finally able to present our work to potential investors. In early 2015, we demonstrated a system built with Intel RealSense sensors at CES 2015.

But what happened before that?

Writing our own network code with a custom data transfer protocol paid off immediately. We were working with PrimeSense sensors, and with more than two sensors on one PC they behaved very unstably. At the time we had no idea that Intel, Google and other market leaders were already working on new sensors, but out of habit we had designed an extensible architecture. As a result, our system could easily support any of this new hardware.
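The post does not describe the protocol itself. As a purely hypothetical illustration of the kind of framing a client-server capture system needs (each client is a PC with a sensor streaming frames to a server over TCP), here is a minimal sketch of a length-prefixed message format. The header layout (message type, sensor id, payload length) and the message type constants are our own assumptions, not Cappasity's protocol.

```python
import struct

# Hypothetical header: message type (uint8), sensor id (uint16), payload
# length (uint32), big-endian "network byte order", no padding (7 bytes).
HEADER = struct.Struct("!BHI")

MSG_CAPTURE = 1  # hypothetical: server -> client, "shoot now"
MSG_DEPTH = 2    # hypothetical: client -> server, depth frame payload


def encode_message(msg_type: int, sensor_id: int, payload: bytes) -> bytes:
    """Prefix the payload with a fixed-size header so the receiver knows where it ends."""
    return HEADER.pack(msg_type, sensor_id, len(payload)) + payload


def decode_messages(buffer: bytes):
    """Split a received byte stream into complete messages.

    Returns (messages, leftover): leftover holds an incomplete trailing message
    that should be kept until more bytes arrive from the socket.
    """
    messages = []
    offset = 0
    while len(buffer) - offset >= HEADER.size:
        msg_type, sensor_id, length = HEADER.unpack_from(buffer, offset)
        end = offset + HEADER.size + length
        if end > len(buffer):
            break  # payload not fully received yet
        messages.append((msg_type, sensor_id, buffer[offset + HEADER.size:end]))
        offset = end
    return messages, buffer[offset:]
```

Because TCP delivers a byte stream rather than discrete messages, the explicit length field is what lets the server reassemble frames from many sensors regardless of how the bytes are chunked in transit.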

Most of the time went into writing the calibration. Existing calibration tools were far from ideal: nobody had analyzed the inner workings of the PrimeSense sensors in depth, and many of the parameters we needed were not calibrated at all. We abandoned the PrimeSense factory calibration and wrote our own, based on IR data. Along the way we did a lot of research into how the sensors work and into algorithms for building and texturing the mesh. Much of it was rewritten and reworked. In the end, we made all the sensors in our system shoot identically. Once that was done, we immediately filed a patent application, and the technology is currently US Patent Pending.

After Apple bought PrimeSense, it became clear that we should turn our attention to other manufacturers. Thanks to the groundwork we already had, our software was running with Intel RealSense sensors just two weeks after we received them from Intel. We now use Intel RealSense, plus Microsoft Kinect 2 for shooting at longer distances. Recently we watched Intel's CES keynote about sensors for robots. Perhaps those sensors will replace Kinect 2 for us, if they become available to developers.

With the move to RealSense, the calibration problem came back with renewed force. To color the points of a cloud correctly, or to texture the mesh built from the cloud, you need to know the positions of the RGB and depth cameras relative to each other. For RealSense we initially planned to use the same in-house manual calibration scheme as for PrimeSense, but we ran into a limitation of the sensors: the data arrives already processed by filters, and working with RAW data requires a different approach. Fortunately, the Intel RealSense SDK can convert coordinates from one space to another, for example from Depth Space to Color Space, from Color to Depth Space, from Camera to Color, and so on. So, rather than spend time developing alternative calibration methods, we decided to use this functionality, and it works surprisingly well. If you compare colored point clouds from PrimeSense and RealSense, the RealSense clouds are colored more accurately.
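The SDK performs this mapping internally, and its API is not reproduced here. To show what "knowing the RGB and depth positions relative to each other" means in practice, here is a minimal sketch under a plain pinhole model without lens distortion: a depth pixel is back-projected to a 3D point using the depth camera's intrinsics, moved into the color camera's frame with an extrinsic rotation `R` and translation `t`, and projected with the color camera's intrinsics. The `Intrinsics` type and all numeric values in the usage note are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Intrinsics:
    fx: float  # focal length in pixels (x)
    fy: float  # focal length in pixels (y)
    cx: float  # principal point (x)
    cy: float  # principal point (y)


def deproject(k: Intrinsics, u: float, v: float, depth: float):
    """Pixel (u, v) with depth z in meters -> 3D point in that camera's frame."""
    return ((u - k.cx) * depth / k.fx, (v - k.cy) * depth / k.fy, depth)


def transform(R, t, p):
    """Rigid transform p' = R p + t, with R as a 3x3 nested list."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(k: Intrinsics, p):
    """3D point in camera frame -> pixel coordinates (pinhole, no distortion)."""
    x, y, z = p
    if z <= 0:
        raise ValueError("point is behind the camera")
    return (k.fx * x / z + k.cx, k.fy * y / z + k.cy)


def depth_pixel_to_color_pixel(k_depth, k_color, R, t, u, v, depth):
    """Find where a depth pixel lands in the color image, given depth-to-color extrinsics."""
    point_in_depth_frame = deproject(k_depth, u, v, depth)
    point_in_color_frame = transform(R, t, point_in_depth_frame)
    return project(k_color, point_in_color_frame)
```

For example, with `R` the identity, a hypothetical 25 mm baseline `t = (0.025, 0.0, 0.0)` and color intrinsics `fx = fy = 615, cx = 320, cy = 240`, the depth pixel at the depth image center with `depth = 1.0` lands at roughly `(335.375, 240.0)` in the color image. The error in `t` grows in pixels as the object gets closer, which is why accurate extrinsics matter so much at scanning distances.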

So what did we end up with? Sensors and cameras stand in a circle around the object being shot. We calibrate them, determining the positions and tilt angles of the sensors and cameras relative to each other. Optionally, we use Canon Rebel cameras for texturing, as they offer the best price/quality ratio. However, there are no restrictions here, since we calibrate all the optical parameters ourselves, including distortion.
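The post does not say which distortion model is calibrated. A common choice for SLR lenses is the Brown-Conrady model with radial coefficients `k1, k2, k3` and tangential coefficients `p1, p2` (the parameterization OpenCV uses), and the sketch below assumes it. It works on normalized image coordinates, that is, pixel coordinates with the principal point subtracted and divided by the focal length. Applying distortion is a closed-form expression; removing it has no closed-form inverse, so it is approximated here with fixed-point iteration, which converges quickly for moderate distortion.

```python
def distort(x, y, k1, k2, k3, p1, p2):
    """Apply Brown-Conrady radial + tangential distortion to normalized coords."""
    r2 = x * x + y * y
    radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
    yd = y * radial + p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
    return xd, yd


def undistort(xd, yd, k1, k2, k3, p1, p2, iterations=20):
    """Approximately invert distort() by fixed-point iteration."""
    x, y = xd, yd  # initial guess: the distorted point itself
    for _ in range(iterations):
        r2 = x * x + y * y
        radial = 1 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
        # Tangential terms evaluated at the current estimate.
        dx = 2 * p1 * x * y + p2 * (r2 + 2 * x * x)
        dy = p1 * (r2 + 2 * y * y) + 2 * p2 * x * y
        # Solve xd = x * radial + dx for x, holding radial and dx fixed.
        x = (xd - dx) / radial
        y = (yd - dy) / radial
    return x, y
```

When texturing, each SLR pixel is undistorted before it is treated as a pinhole ray, so that straight edges on the object stay straight in the projected texture.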

Textures are projected and blended directly onto the 3D model, which is why the result is so sharp.
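The blending scheme itself is not described in the post. One common approach, shown here as an assumption rather than as Cappasity's method, is to weight each camera's color sample for a surface point by how squarely that camera sees the surface: the cosine between the surface normal and the direction to the camera, raised to a power (`sharpness`, an arbitrary choice) to favor frontal views, with cameras facing the back of the surface excluded. Occlusion testing is assumed to have happened beforehand and is omitted.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def blend_color(point, normal, samples, sharpness=4.0):
    """Blend per-camera color samples for one surface point.

    samples: list of (camera_position, (r, g, b)) pairs, where the color is
    what that camera sees at `point` after projection into its image.
    Returns the blended (r, g, b), or None if no camera faces the surface.
    """
    n = normalize(normal)
    total_weight = 0.0
    acc = [0.0, 0.0, 0.0]
    for cam_pos, color in samples:
        to_cam = normalize(tuple(c - p for c, p in zip(cam_pos, point)))
        cos_angle = sum(a * b for a, b in zip(n, to_cam))
        if cos_angle <= 0:
            continue  # this camera sees the back side of the surface
        weight = cos_angle ** sharpness  # grazing views contribute little
        total_weight += weight
        for i in range(3):
            acc[i] += weight * color[i]
    if total_weight == 0:
        return None
    return tuple(a / total_weight for a in acc)
```

Favoring frontal views keeps the sharpest, least stretched samples dominant, while still smoothing seams where two cameras' coverage overlaps.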

The 3D model is built from the point clouds we capture with N sensors. Data capture takes 5 to 10 seconds (depending on the type and number of sensors), and the result is a complete model of the object!
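Once calibration has produced every sensor's pose, building one cloud from N sensors comes down to moving each sensor's points into a shared world frame and merging them. The sketch below assumes each pose is given as a rotation `R` and translation `t` mapping sensor coordinates to world coordinates, and it thins overlapping regions with a simple voxel-grid average. The 5 mm voxel size is an arbitrary assumption, and the real pipeline's filtering and meshing steps are not shown.

```python
import math
from collections import defaultdict


def to_world(R, t, p):
    """Sensor-frame point -> world frame: p_world = R p + t."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def merge_clouds(sensors, voxel_size=0.005):
    """Merge point clouds from N calibrated sensors into one world-frame cloud.

    sensors: list of (R, t, points), where R is a 3x3 nested list, t a 3-tuple,
    and points a list of (x, y, z) in that sensor's frame, in meters.
    Points that fall into the same voxel are averaged, which removes
    duplicates where the sensors' fields of view overlap.
    """
    # Each voxel accumulates [sum_x, sum_y, sum_z, count].
    voxels = defaultdict(lambda: [0.0, 0.0, 0.0, 0])
    for R, t, points in sensors:
        for p in points:
            w = to_world(R, t, p)
            key = tuple(math.floor(c / voxel_size) for c in w)
            cell = voxels[key]
            for i in range(3):
                cell[i] += w[i]
            cell[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in voxels.values()]
```

For instance, a sensor at the origin and a second sensor two meters away facing it (rotated 180° about the vertical axis) that both see the same point one meter in front of them contribute a single merged point at `(0, 0, 1)` in the world frame.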

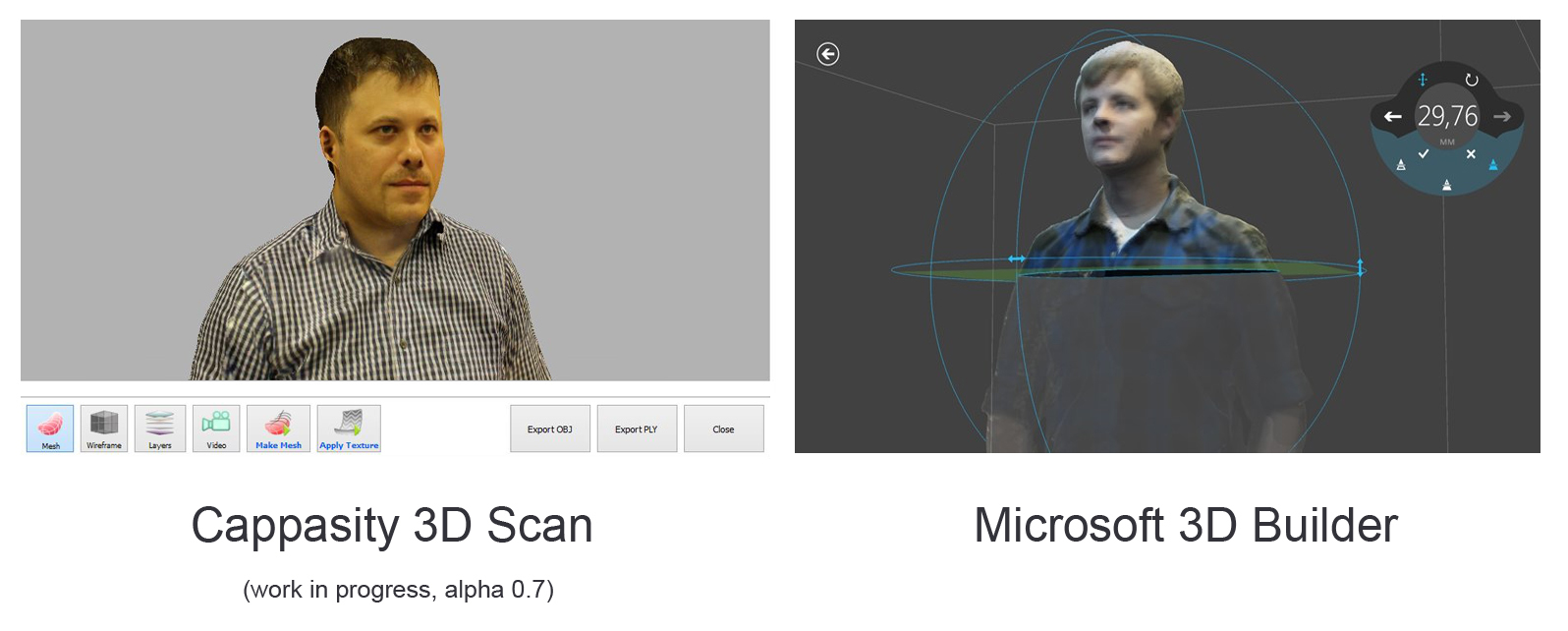


This year we plan to launch four products at once: Cappasity Human 3D Scan for scanning people, Cappasity Room 3D Scan for interiors, Cappasity Furniture 3D Scan for furniture, and Cappasity Portable 3D Scan for scanning with a single sensor.

Cappasity Portable 3D Scan for laptops with Intel RealSense will be released in just a couple of months. We will present it at GDC 2015 in San Francisco on March 4. It lets you create high-quality 3D models using a turntable or by rotating the object by hand. And if you have a camera, you will also be able to create high-resolution textures.

Why did we choose Intel RealSense? We decided to focus on Intel technology for several reasons:

- about 15 notebook models already support RealSense;

- tablets with RealSense are planned;

- it opens the way to B2C sales, a new monetization channel for our products;

- there is a high-quality SDK;

- the sensors are fast.

Strong technical, marketing and business support from Intel itself also plays an important role. We have been working closely together since 2007, and there has never been a case where our colleagues at Intel could not answer our questions.

Looking at RealSense technology from the standpoint of 3D scanning at a distance of one meter, we can safely call Intel the leader in this area.

Undoubtedly, modern technologies offer ample opportunities to work with the depth of the world around us!

We regularly post new material about our progress on our Facebook and Twitter pages.

Source: https://habr.com/ru/post/250241/
