What every programmer should know about compiler optimization

High-level programming languages contain many abstract programmer constructs, such as functions, conditional operators, and cycles — they make us amazingly productive. However, one of the drawbacks of writing code in a high-level language is the potential significant reduction in the speed of the program. Therefore, the compilers are trying to automatically optimize the code and increase the speed of work. Nowadays, optimization logic has become very complex: compilers convert loops, conditional expressions, and recursive functions; remove whole blocks of code. They optimize the code for the processor architecture to make it really fast and compact. And it’s very cool, because it’s better to focus on writing a readable code than to do manual optimizations that are difficult to understand and maintain. In addition, manual optimizations may prevent the compiler from performing additional and more efficient automatic optimizations. Instead of writing optimization with your hands, it would be better to focus on the design of the architecture and on efficient algorithms, including parallelism and the use of library features.

This article focuses on the Visual C ++ compiler optimizations. I'm going to discuss the most important optimization techniques and solutions that the compiler has to apply in order to apply them correctly. My goal is not to tell you how to manually optimize the code, but to show why you should trust the compiler to optimize your code yourself. This article is by no means a description of the full set of optimizations that the Visual C ++ compiler does; it will show only the really important ones that you’ll need to know about. There are other important optimizations that the compiler is not able to perform. For example, replacing an inefficient algorithm with an effective one or changing the alignment of the data structure. We will not discuss such optimizations in this article.

')

Compilers are constantly being improved, the approaches they use are improved. Despite the fact that they are not perfect, often the most correct approach is to leave low-level optimizations to the compiler, rather than trying to do them manually.

There are four ways to help the compiler optimize more efficiently:

The purpose of this article is to show you why you can trust the compiler to perform optimizations that apply to inefficient, but readable code (the first method). I will also make a brief overview of profile-guided optimizations and mention some compiler directives that will allow you to improve part of your source code.

There are many compiler optimization techniques, ranging from simple transformations, such as constants to convolutions, to complex ones, such as instruction scheduling. In this article, we will limit ourselves to the most important optimizations that can significantly improve the performance of your code (by two-digit percent) and reduce its size by substitution of functions (function inlining), COMDAT optimizations and loop optimizations. I will discuss the first two approaches in the next section, and then show you how you can control the execution of optimizations in Visual C ++. In conclusion, I will briefly talk about the optimizations that are used in the .NET Framework. Throughout the article, I will use Visual Studio 2013 for all examples.

If LTCG is enabled (the

The front end also performs some optimizations (for example, a convolution of constants), regardless of whether optimizations are turned on or off. However, all important optimizations are performed by the back end, and they can be controlled using compilation keys.

LTCG allows back end to perform many optimizations aggressively (using the

I will work with the program from two source code files (

The file

The code is very simple, but useful. We have several functions that do simple calculations, some of which contain loops. The

Consider the result of the compiler under three different configurations. If you deal with the example yourself, you will need an assembler output file (obtained using the

If you look at the generated assembly listing file for

Now turn to

While the inline function is enabled (the

If you look at the assembly listing file for

As you can see, inlining functions is important not only because the function call is optimized, but also because it allows the compiler to perform many additional optimizations. Inline usually increases performance by increasing code size. Excessive use of this optimization leads to the phenomenon called code bloat. Therefore, each time a function is called, the compiler calculates the costs and benefits, and then decides whether to inline the function.

Because of the importance of inlining, the Visual C ++ compiler provides great opportunities for its support. You can tell the compiler to never inline a set of functions using the

The

The compiler may not always zainlaynit function. For example, during a virtual call to a virtual function: the function cannot be inline, because the compiler does not know exactly which function will be called. Another example: a function is called via a pointer to a function instead of a call through its name. You should try to avoid such situations so that inlineing is possible. A complete list of all such conditions can be found in MSDN.

Inline functions are not the only optimization that can be applied at the program level as a whole. Most optimizations work most effectively at this level. In the rest of this article, I will discuss a specific class of optimizations called COMDAT optimizations.

By default, during module compilation, all code is stored in a single section of the resulting object file. The linker works at the section level: it can delete sections, merge them, or reorder. This prevents him from performing three very important optimizations (a two-digit percentage) that help reduce the size of the executable file and increase its performance. The first removes unused functions and global variables. The second one collapses identical functions and global constants. The third reorders the functions and global variables so that, during execution, the transitions between the physical memory fragments are shorter.

To enable these linker optimizations, you must ask the compiler to package functions and variables into separate sections using the compiler keys

You should use LTCG whenever possible. The only reason for abandoning LTCG is that you want to distribute the resulting object files and library files. Recall that they contain CIL-code instead of machine. CIL-code can only be used by the compiler and linker of the same version with which they were generated, which is a significant limitation, because developers will have to use the same version of the compiler to use your files. In this case, if you do not want to distribute a separate version of the object files for each version of the compiler, then you should use code generation instead. In addition to the version limit, the object files are many times larger than the corresponding assembler object files. However, do not forget about the huge advantage of object files with CIL-code, which is the ability to use WPO.

If you code with the

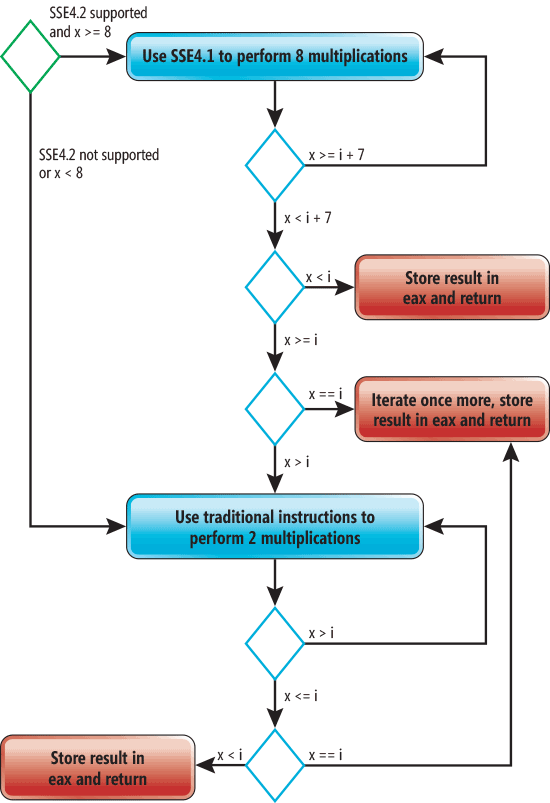

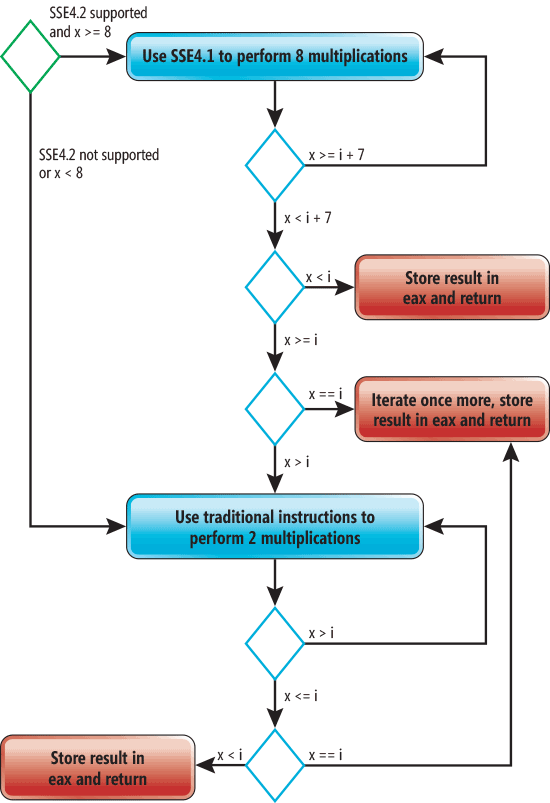

In this diagram, the green rhombus shows the entry point, and the red rectangles show the exit points. Blue diamonds represent the conditional operators that will be executed when the

The unwinding of cycles is performed by repeating the cycle body several times within one iteration of a new (unwound) cycle. This improves performance, because the operations of the cycle itself will be performed less frequently. In addition, this allows the compiler to perform additional optimizations (for example, vectorization). The disadvantage of unwinding cycles is an increase in the amount of code and the load on the registers. But despite this, depending on the body of the cycle, such optimization can increase productivity by a two-digit percentage.

Unlike x86 processors, all x86-64 processors support SSE2. Moreover, you can take advantage of AVX / AVX2 instructions on the latest x86-64 processors from Intel and AMD with the

Currently, the Visual C ++ compiler does not allow controlling the unwinding of loops. But you can influence it with

If you look at the generated assembler code, you may notice that additional optimizations can be applied to it. But the compiler has already done an excellent job anyway and there is no need to spend much more time on analysis in order to apply minor optimizations.

The

In conclusion, we will look at such optimization as the removal of loop invariants. Take a look at the following code:

The only change we have made is to add an additional variable, which is incremented at each iteration, and finally displayed on the console. It is easy to see that this code is easily optimized by moving the incremental variable out of the frame: just assign it to

: , . ? ,

, , , , . .

optimization list , :

c

RyuJIT Visual C++ ? , RyuJIT , , Visual C++ . , ,

. C# Visual Basic

This article focuses on the Visual C ++ compiler optimizations. I'm going to discuss the most important optimization techniques and solutions that the compiler has to apply in order to apply them correctly. My goal is not to tell you how to manually optimize the code, but to show why you should trust the compiler to optimize your code yourself. This article is by no means a description of the full set of optimizations that the Visual C ++ compiler does; it will show only the really important ones that you’ll need to know about. There are other important optimizations that the compiler is not able to perform. For example, replacing an inefficient algorithm with an effective one or changing the alignment of the data structure. We will not discuss such optimizations in this article.

Definition of compiler optimizations

Optimization is the process of converting a code fragment into another fragment that is functionally equivalent to the original, in order to improve one or several of its characteristics, of which the speed and size of the code are the most important. Other characteristics include the amount of energy consumed for code execution and compile time (as well as JIT compile time if the resulting code uses JIT).')

Compilers are constantly being improved, the approaches they use are improved. Despite the fact that they are not perfect, often the most correct approach is to leave low-level optimizations to the compiler, rather than trying to do them manually.

There are four ways to help the compiler optimize more efficiently:

- Write readable code that is easy to maintain. Do not think of various Visual C ++ OOP features as the worst enemy of performance. The latest version of Visual C ++ will be able to reduce the overhead from OOP to a minimum, and sometimes even completely get rid of them.

- Use compiler directives. For example, tell the compiler to use a function calling convention that is faster than the default.

- Use built-in compiler functions. These are special functions, the implementation of which is provided by the compiler automatically. Remember that the compiler has a deep knowledge of how to efficiently arrange a sequence of machine instructions so that the code runs as fast as possible on the specified software architecture. Currently, the Microsoft .NET Framework does not support built-in functions, so managed languages cannot use them. However, Visual C ++ has extensive support for such functions. However, you should not forget that, although their use will improve the performance of the code, it will also have a negative impact on readability and portability.

- Use profile-guided optimization (PGO). Thanks to this technology, the compiler knows more about how the code will behave during operation and optimizes it accordingly.

The purpose of this article is to show you why you can trust the compiler to perform optimizations that apply to inefficient, but readable code (the first method). I will also make a brief overview of profile-guided optimizations and mention some compiler directives that will allow you to improve part of your source code.

There are many compiler optimization techniques, ranging from simple transformations, such as constants to convolutions, to complex ones, such as instruction scheduling. In this article, we will limit ourselves to the most important optimizations that can significantly improve the performance of your code (by two-digit percent) and reduce its size by substitution of functions (function inlining), COMDAT optimizations and loop optimizations. I will discuss the first two approaches in the next section, and then show you how you can control the execution of optimizations in Visual C ++. In conclusion, I will briefly talk about the optimizations that are used in the .NET Framework. Throughout the article, I will use Visual Studio 2013 for all examples.

Link-Time Code Generation

Code generation at the linking stage (Link-Time Code Generation, LTCG) is a technique for performing whole program optimizations (WPO) on C / C ++ code. The C / C ++ compiler processes each source code file separately and produces the corresponding object file. In other words, the compiler can optimize only a single file, instead of optimizing the entire program. However, some important optimizations may be applicable only to the entire program. You can use these optimizations only during linking, and not at compile time, since the linker has a complete understanding of the program.If LTCG is enabled (the

/GL flag), then the compiler driver ( cl.exe ) will only call the front end ( c1.dll or c1xx.dll ) and will postpone the work of the back end ( c2.dll ) until linking. The resulting object files contain C Intermediate Language (CIL), not machine code. Then the linker is called ( link.exe ). He sees that the object files contain CIL code, and calls the back end, which, in turn, runs WPO and generates binary object files so that the linker can put them together and form the executable file.The front end also performs some optimizations (for example, a convolution of constants), regardless of whether optimizations are turned on or off. However, all important optimizations are performed by the back end, and they can be controlled using compilation keys.

LTCG allows back end to perform many optimizations aggressively (using the

/GL compiler keys with /O1 or /O2 and /Gw , as well as using the /OPT:REF and /OPT:ICF link keys). In this article I will discuss only inlining and COMDAT optimization. A complete list of LTCG optimizations is provided in the documentation. It is useful to know that the linker can perform LTCG on native, native-managed and purely managed object files, as well as on safe-managed (safe managed) object files and safe.netmodules.I will work with the program from two source code files (

source1.c and source2.c ) and a header file ( source2.h ). The source1.c and source2.c are shown in the listing below, and the header file containing the prototypes of all source2.c functions is so simple that I will not give it. // source1.c #include <stdio.h> // scanf_s and printf. #include "Source2.h" int square(int x) { return x*x; } main() { int n = 5, m; scanf_s("%d", &m); printf("The square of %d is %d.", n, square(n)); printf("The square of %d is %d.", m, square(m)); printf("The cube of %d is %d.", n, cube(n)); printf("The sum of %d is %d.", n, sum(n)); printf("The sum of cubes of %d is %d.", n, sumOfCubes(n)); printf("The %dth prime number is %d.", n, getPrime(n)); } // source2.c #include <math.h> // sqrt. #include <stdbool.h> // bool, true and false. #include "Source2.h" int cube(int x) { return x*x*x; } int sum(int x) { int result = 0; for (int i = 1; i <= x; ++i) result += i; return result; } int sumOfCubes(int x) { int result = 0; for (int i = 1; i <= x; ++i) result += cube(i); return result; } static bool isPrime(int x) { for (int i = 2; i <= (int)sqrt(x); ++i) { if (x % i == 0) return false; } return true; } int getPrime(int x) { int count = 0; int candidate = 2; while (count != x) { if (isPrime(candidate)) ++count; } return candidate; } The file

source1.c contains two functions: the function square , which calculates the square of an integer, and the main function of the program main . The main function calls the square function and all functions from source2.c with the exception of the isPrime . The file source2.c contains 5 functions: cube to raise an integer to a third power, sum to count the sum of integers from 1 to a given number, sumOfCubes to count the sum of cubes of integers from 1 to a given number, isPrime to check the number for simplicity, getPrime to get a prime number with a given number. I missed error handling, since it is of no interest in this article.The code is very simple, but useful. We have several functions that do simple calculations, some of which contain loops. The

getPrime function is the most complex, getPrime it contains a while , inside of which it calls the isPrime function, which also contains a loop. I will use this code to demonstrate one of the important optimizations of the function inlining compiler and several additional optimizations.Consider the result of the compiler under three different configurations. If you deal with the example yourself, you will need an assembler output file (obtained using the

/FA[s] compiler key) and a map file (obtained using the linker /MAP key) to study the COMDAT optimization performed (the linker will report them , if you turn on the /verbose:icf and /verbose:ref ) keys. Make sure all keys are correct and continue reading the article. I will use the C ( /TC ) compiler to make the generated code easier to learn, but everything described in the article also applies to C ++ code.Debug Configuration

The Debug configuration is used mainly because all back end optimizations are turned off if you specify the/Od key without the /GL key. In this configuration, the resulting object files contain a binary code that exactly matches the source code. You can examine the assembler output files and the map file to verify this. A configuration is equivalent to a Debug configuration in Visual Studio.Compile-Time Code Generation Release Configuration

This configuration is similar to the Release configuration (in which the/O1 , /O2 or /Ox switches are specified), but it does not include the /GL switch. In this configuration, the final object files contain an optimized binary code, but at the same time the optimization of the entire program level is not performed.If you look at the generated assembly listing file for

source1.c , you will notice that two important optimizations have been performed. The first call to the square function, square(n) , was replaced by the value computed at compile time. How did this happen? The compiler noticed that the function body is not enough, and decided to substitute its contents instead of the call. Then the compiler noticed that in the calculation of the value there is a local variable n with a known initial value, which did not change between the initial assignment and the function call. Thus, he came to the conclusion that it is safe to calculate the value of the multiplication operation and substitute the result ( 25 ). The second call to the square function, square(m) , was also inline, i.e., the function body was substituted for the call. But the value of the variable m is unknown at the time of compilation, so the compiler was unable to calculate in advance the value of the expression.Now turn to

source2.c 's assembly listing file, which is much more interesting. The call to the cube function in the sumOfCubes function was inline. This, in turn, allowed the compiler to perform loop optimization (for more details, see the “Cycle Optimization” section). In the isPrime function, SSE2 instructions were used to convert int to double when calling sqrt and converting from double to int when getting a result from sqrt . In fact, sqrt volunteered once before the start of the cycle. Note that the /arch switch tells the compiler that x86 uses SSE2 by default (most x86 processors and x86-64 processors support SSE2).Link-Time Code Generation Release Configuration

This configuration is identical to the Release configuration in Visiual Studio: the optimizations are enabled and the/GL compiler key is specified (you can also explicitly specify /O1 or /O2 ). Thus, we tell the compiler to generate object files with CIL code instead of assembly object files. This means that the linker will call the back end of the compiler to run WPO, as described above. Now we will discuss a few WPOs to show the great benefits of LTCG. Generated assembly code listings for this configuration are available online.While the inline function is enabled (the

/Ob key, which is turned on, if you have turned off optimization), the /GL key allows the compiler to inline functions defined in other files regardless of the /Gy key (we will discuss it a little later). The linker /LTCG optional and only affects the linker.If you look at the assembly listing file for

source1.c , you may notice that calls to all functions except scanf_s were inline. As a result, the compiler was able to calculate the functions cube , sum and sumOfCubes . Only the isPrime function isPrime not inline. However, if you manually inlined it in getPrime , then the compiler would still execute inline getPrime in main .As you can see, inlining functions is important not only because the function call is optimized, but also because it allows the compiler to perform many additional optimizations. Inline usually increases performance by increasing code size. Excessive use of this optimization leads to the phenomenon called code bloat. Therefore, each time a function is called, the compiler calculates the costs and benefits, and then decides whether to inline the function.

Because of the importance of inlining, the Visual C ++ compiler provides great opportunities for its support. You can tell the compiler to never inline a set of functions using the

auto_inline directive. You can also tell the compiler specific functions or methods using __declspec(noinline) . You can also mark a function with the inline and advise the compiler to execute the inline (although the compiler may decide to ignore this advice if it considers it bad). The inline has been available since the first C ++ version, it appeared in C99. You can use the __inline compiler __inline keyword for both C and C ++: this is convenient if you want to use older versions of C that do not support this keyword. The __forceinline (for C and C ++) causes the compiler to always inline a function, if possible. Last but not least, you can tell the compiler to expand the recursive function of the specified or indefinite depth by inlining using the inline_recursion directive. Note that at present the compiler does not have the ability to control inline in the place of the function call, and not in the place of its declaration.The

/Ob0 disables inlining completely, which is useful during debugging (this switch works in the Debug configuration in Visual Studio). The /Ob1 tells the compiler that only functions marked with inline , __inline , __forceinline should be considered as candidates for __forceinline . The /Ob2 only works when the specified /O[1|2|x] and tells the compiler to consider all functions for inlining. In my opinion, the only reason for using the inline and __inline is to control inlining for the /Ob1 .The compiler may not always zainlaynit function. For example, during a virtual call to a virtual function: the function cannot be inline, because the compiler does not know exactly which function will be called. Another example: a function is called via a pointer to a function instead of a call through its name. You should try to avoid such situations so that inlineing is possible. A complete list of all such conditions can be found in MSDN.

Inline functions are not the only optimization that can be applied at the program level as a whole. Most optimizations work most effectively at this level. In the rest of this article, I will discuss a specific class of optimizations called COMDAT optimizations.

By default, during module compilation, all code is stored in a single section of the resulting object file. The linker works at the section level: it can delete sections, merge them, or reorder. This prevents him from performing three very important optimizations (a two-digit percentage) that help reduce the size of the executable file and increase its performance. The first removes unused functions and global variables. The second one collapses identical functions and global constants. The third reorders the functions and global variables so that, during execution, the transitions between the physical memory fragments are shorter.

To enable these linker optimizations, you must ask the compiler to package functions and variables into separate sections using the compiler keys

/Gy (function level linking) and /Gw (global data optimization). These sections are called COMDATs. You can also mark a given global variable using __declspec( selectany) to tell the compiler to package the variable in COMDAT. Further, using the linker /OPT:REF key, you can get rid of unused functions and global variables. Key /OPT:ICF helps to minimize identical functions and global constants (ICF is Identical COMDAT Folding). The /ORDER switch will force the linker to place COMDATs in the final images in a specific order. Note that all linker optimizations do not need the /GL . The /OPT:REF and /OPT:ICF switches must be turned off during debugging for obvious reasons.You should use LTCG whenever possible. The only reason for abandoning LTCG is that you want to distribute the resulting object files and library files. Recall that they contain CIL-code instead of machine. CIL-code can only be used by the compiler and linker of the same version with which they were generated, which is a significant limitation, because developers will have to use the same version of the compiler to use your files. In this case, if you do not want to distribute a separate version of the object files for each version of the compiler, then you should use code generation instead. In addition to the version limit, the object files are many times larger than the corresponding assembler object files. However, do not forget about the huge advantage of object files with CIL-code, which is the ability to use WPO.

Cycle optimization

The Visual C ++ compiler supports several types of loop optimizations, but we will discuss only three: loop unrolling, automatic vectorization, and loop-invariant code motion. If you modify the code fromsource1.c so that sumOfCubes is passed to sumOfCubes instead of n, then the compiler will not be able to calculate the value of the parameters, you have to compile the function so that it can work for any argument. The final function will be well optimized, which is why it will have a large size, which means the compiler will not inline it.If you code with the

/O1 key, no optimizations to sumOfCubes will be applied. Compiling with the /O2 key will give speed optimizations. At the same time, the code size will increase significantly, sumOfCubes loop inside the sumOfCubes function will be unwound and vectorized. It is very important to understand that vectorization will not be possible without inlineing the cube function. Moreover, unwinding the cycle will also not be as effective without inlineing. A simplified graphical representation of the resulting code is shown in the following picture (this graph is valid for both x86 and x86-64).

In this diagram, the green rhombus shows the entry point, and the red rectangles show the exit points. Blue diamonds represent the conditional operators that will be executed when the

sumOfCubes function is sumOfCubes . If SSE4 is supported and x is greater than or equal to 8, then SSE4 instructions will be used to perform 4 multiplications at a time. The process of performing the same operation for several variables is called vectorization. Also the compiler unwinds this cycle twice. This means that the body of the loop will be repeated twice for each iteration. As a result, the execution of eight multiplication operations will occur in 1 iteration. If x less than 8, then the code without optimizations will be used to perform the function. Note that the compiler inserts three exit points instead of one - thus reducing the number of transitions.The unwinding of cycles is performed by repeating the cycle body several times within one iteration of a new (unwound) cycle. This improves performance, because the operations of the cycle itself will be performed less frequently. In addition, this allows the compiler to perform additional optimizations (for example, vectorization). The disadvantage of unwinding cycles is an increase in the amount of code and the load on the registers. But despite this, depending on the body of the cycle, such optimization can increase productivity by a two-digit percentage.

Unlike x86 processors, all x86-64 processors support SSE2. Moreover, you can take advantage of AVX / AVX2 instructions on the latest x86-64 processors from Intel and AMD with the

/arch switch. Specifying /arch:AVX2 , you tell the compiler to also use the FMA and BMI instructions.Currently, the Visual C ++ compiler does not allow controlling the unwinding of loops. But you can influence it with

__forceinline and loop directives with the no_vector option (the latter turns off the no_vector specified cycles).If you look at the generated assembler code, you may notice that additional optimizations can be applied to it. But the compiler has already done an excellent job anyway and there is no need to spend much more time on analysis in order to apply minor optimizations.

The

someOfCubes function someOfCubes not the only one whose loop has been unwound. If you modify the code and pass m to the sum function instead of n , then the compiler will not be able to calculate its value and it will have to generate the code, the loop will be unwound twice.In conclusion, we will look at such optimization as the removal of loop invariants. Take a look at the following code:

int sum(int x) { int result = 0; int count = 0; for (int i = 1; i <= x; ++i) { ++count; result += i; } printf("%d", count); return result; } The only change we have made is to add an additional variable, which is incremented at each iteration, and finally displayed on the console. It is easy to see that this code is easily optimized by moving the incremental variable out of the frame: just assign it to

x . This optimization is called the removal of the loop invariant (loop-invariant code motion). The word "invariant" shows that this technique is applicable when a part of the code does not depend on expressions that include a loop variable.: , . ? ,

x . , count . x count, ! , x , count . , . , Visual C++ , , x ., , , , . .

O1 , /O2 , /Ox , optimize : #pragma optimize( "[optimization-list]", {on | off} ) optimization list , :

g , s , t , y . /Og , /Os , /Ot , /Oy .c

off . on ./Og , , , . LTCG , /Og WPO.optimize , , : , . , , profile-guided- (PGO), , , . , . Visual Studio , , ..NET

.NET , . (C# compiler) JIT-. . , . JIT-. JIT- .NET 4.5 SIMD. JIT- .NET 4.5.1 ( RyuJIT) SIMD.RyuJIT Visual C++ ? , RyuJIT , , Visual C++ . , ,

true , . RyuJIT . , SSE4.1, JIT- SSE4.1 subOfCubes , . , RyuJIT , . . JIT- . Visual C++ , . Microsoft .NET Native Visual C++. Windows Store.. C# Visual Basic

/optimize . JIT- System.Runtime.CompilerServices.MethodImpl MethodImplOptions . NoOptimization , NoInlining , AggressiveInlining ( .NET 4.5) JIT- , .Total

, , . Visual C++. , , . , Visual C++. , . Visual C++ , . 2.Source: https://habr.com/ru/post/250199/

All Articles