How and why we do TLS in Yandex

I work on product security at Yandex, and it seems the time has come to tell Habr how we implement TLS, in more detail than I did at YaC.

Using HTTPS is an important part of a secure web service, since it is HTTPS that ensures the confidentiality and integrity of data in transit between the client and the service. We are gradually moving all our services to HTTPS only. Many of them already work exclusively over it: Passport, Mail, Direct, Metrica, Taxi, Yandex.Money, as well as all feedback forms that handle users' personal data. For more than a year, Yandex.Mail has even been communicating over SSL/TLS with other mail services that support it.


We all know that HTTPS is HTTP wrapped in TLS. Why TLS and not SSL? Because TLS is essentially a newer version of SSL, and the name of the new protocol describes its purpose more accurately. In light of the POODLE vulnerability, we can officially consider SSL no longer usable.

Besides HTTP, almost any application-layer protocol can be wrapped in TLS: SMTP, IMAP, POP3, XMPP, and so on. HTTPS is the most widespread deployment, and the peculiarities of browser behavior give it many subtleties, so I will focus on it. With some caveats, much of what follows also applies to other protocols. I will try to cover the necessary minimum that will be useful to our colleagues.

I will roughly divide the story into two parts: infrastructure, which covers everything below HTTP, and changes at the application level.

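Termination

The first thing a team deploying HTTPS has to face is the choice of TLS termination method. TLS termination is the process of encapsulating an application-layer protocol in TLS. There are usually three options to choose from:

- Use one of the many third-party services: Amazon ELB, Cloudflare, Akamai, and others. The main disadvantage of this approach is the need to protect the channels between the third-party service and your servers, which will most likely still require deploying TLS in one form or another. Another big drawback is complete dependence on the provider, both for supporting the functionality you need and for how quickly vulnerabilities get fixed. A separate problem may be the need to hand your certificates (together with their private keys) over to the provider. Despite this, this approach is a good solution for startups or companies using PaaS.

- For companies with their own hardware and data centers, one option is a hardware load balancer with TLS termination. The only advantage here is performance. With such a solution you become completely dependent on your vendor and, since the same hardware components are often used inside these products, on chip manufacturers as well. As a result, new features take a long time to arrive. Potential customs difficulties with importing such products are beyond the scope of this article.

- Software solutions, the "golden mean". Existing open-source solutions (Nginx, HAProxy, Bud, etc.) give you almost complete control: you can add features and optimizations yourself. The downside is performance, which is lower than that of hardware solutions.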

At Yandex, we use software solutions. If you follow our path, an important step in deploying TLS will be unifying your components.

Unification

Historically, our services used different web server software at different times. To unify everything, we decided to abandon most of these solutions in favor of Nginx and, where that was impossible, to "hide" them behind Nginx. The exception was Search, which uses its own in-house server called, surprisingly enough, Balancer.
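Here is a minimal sketch of "hiding" a legacy server behind Nginx. It assumes the legacy server speaks plain HTTP on a hypothetical 127.0.0.1:8080; the host name and certificate paths are placeholders. The `server` block belongs inside the `http` context. Nginx terminates TLS and proxies requests to the backend over the loopback interface.

```nginx
# Hypothetical example: Nginx terminates TLS in front of a legacy backend.
server {
    listen 443 ssl;
    server_name example.com;                             # placeholder host name

    ssl_certificate     /etc/nginx/ssl/example.com.crt;  # placeholder paths
    ssl_certificate_key /etc/nginx/ssl/example.com.key;

    location / {
        # The legacy server only speaks plain HTTP on localhost (assumption).
        proxy_pass http://127.0.0.1:8080;
        proxy_set_header Host $host;
        # Tell the backend which scheme the client originally used.
        proxy_set_header X-Forwarded-Proto https;
    }
}
```

The backend never sees TLS, so the TLS settings discussed below only need to be maintained in one place.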

Balancer can do many things that other solutions, even commercial ones, cannot. I think the team will describe it in more detail some day. With a talented development team, we can afford to maintain our own web server alongside Nginx.

As for the cryptography itself, we use the OpenSSL library. Today it is the most stable and performant TLS implementation with an adequate license. It is important to use OpenSSL 1.0 or later: its memory handling is optimized, and it supports all the necessary modern ciphers and protocols. All our further recommendations are aimed at users of the Nginx web server.

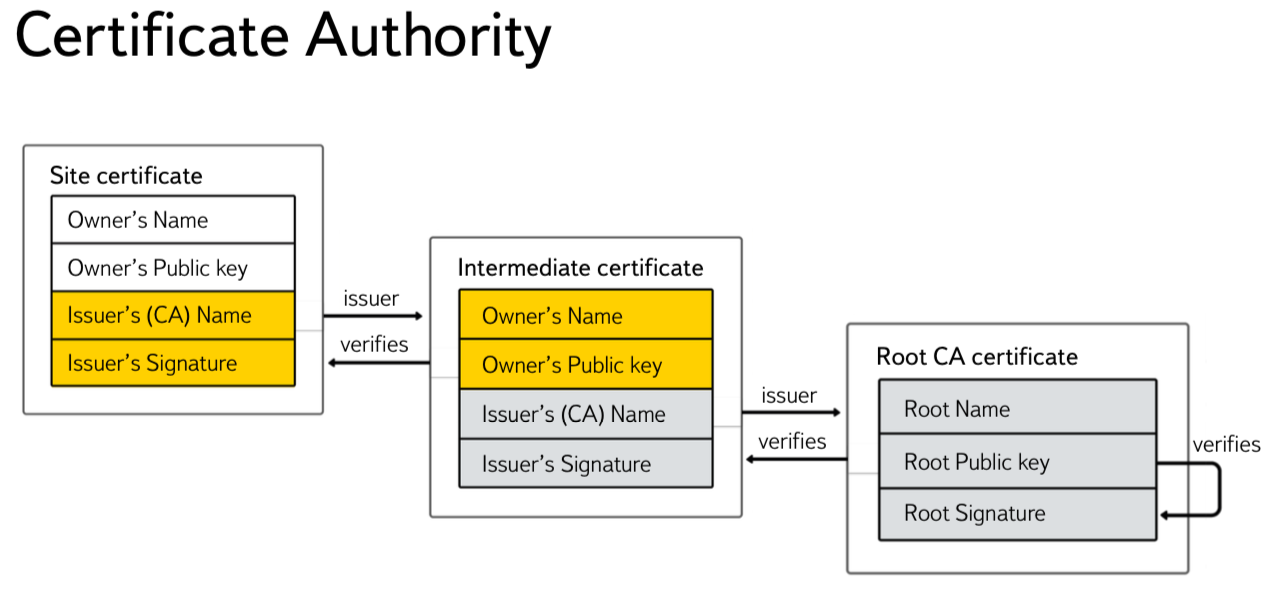

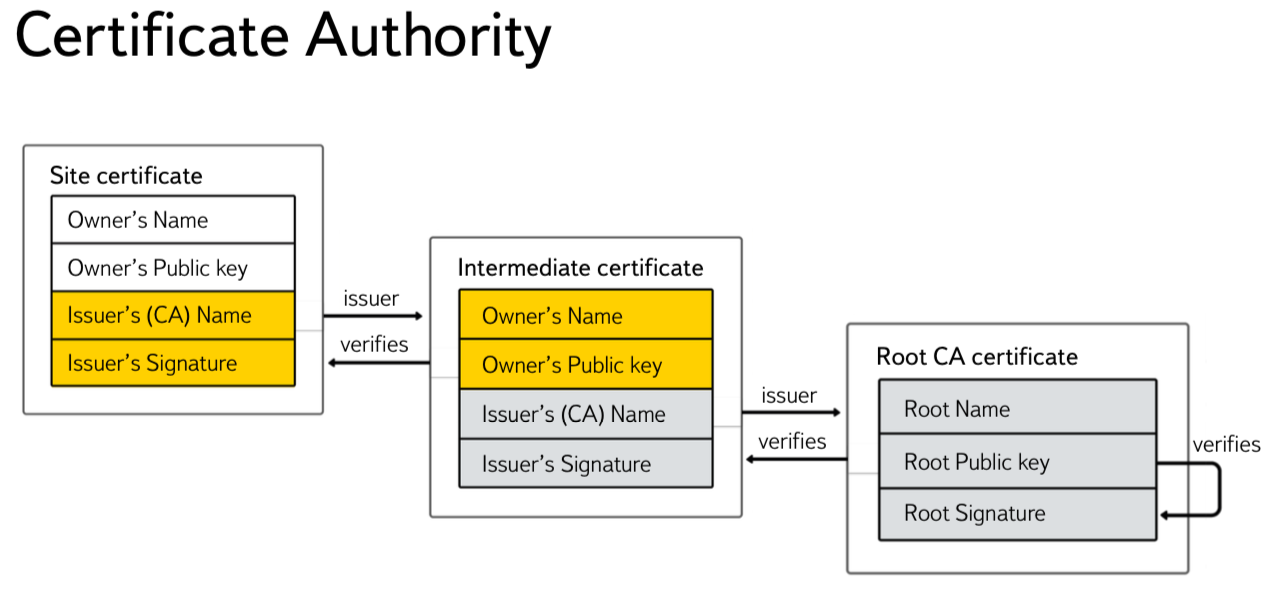

Certificates

To use HTTPS on your service, you need a certificate. A certificate is a public key plus some data in ASN.1 format, signed by a Certificate Authority (CA). Such certificates are usually signed by intermediate certificate authorities (Intermediate CA). They contain your service's domain name in the Common Name or in the Subject Alternative Name extension.

To validate the certificate, the browser verifies the digital signature on the end-entity certificate and then on each intermediate CA certificate. The last certificate in the chain of intermediate CAs must be signed by a so-called Root CA.

Root CA certificates are stored in the operating system or in the user's browser (Firefox, for example, has its own store). When configuring a web server, it is important to send the client not only the server certificate but also all intermediate ones. You do not need to send the root certificate: the client already has it.
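This is a minimal sketch of what that means for Nginx, using placeholder host names and file paths. The file passed to `ssl_certificate` holds the server certificate followed by the intermediate CA certificates, and the root is left out. Nginx expects the server certificate to come first in that file.

```nginx
# Build the chain file once (file names are placeholders):
#   cat example.com.crt intermediate.crt > example.com.chained.crt
# Order matters: server certificate first, then intermediates; no root.
server {
    listen 443 ssl;
    server_name example.com;

    ssl_certificate     /etc/nginx/ssl/example.com.chained.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.key;
}
```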

Large companies can afford their own Intermediate CA. For example, until 2012 all Yandex certificates were signed by YandexExternalCA. Your own Intermediate CA gives you additional opportunities for certificate optimization and pinning. It also imposes additional responsibility: it can issue a certificate for almost any domain name, so its compromise can have serious consequences, including revocation of the intermediate CA certificate.

Maintaining your own CA can be too expensive and complicated, so some companies use one in MPKI (Managed PKI) mode. For most consumers, buying certificates from one of the commercial providers will be enough.

Certificates can be classified by the following characteristics:

- Digital signature algorithm and hash function used;

- Type of certificate.

Digital signature algorithm. Certificates are signed with public-key cryptographic algorithms, most often RSA, DSA, or ECDSA. We will not dwell on the GOST family of algorithms, since they are not yet widely supported in client software.

RSA certificates are the most widespread today and are supported by all protocol versions and operating systems.

The drawbacks of this algorithm are its key size and the cost of signature operations. Certificates with keys shorter than 2048 bits are considered insecure, while operations with longer keys consume a lot of CPU.

DSA-like schemes are faster than RSA at generating a signature (for the same parameter sizes). ECDSA is much faster than classic DSA, since all its operations take place in the group of points of an elliptic curve. According to our tests, a single server with a Xeon 5645 can handle up to 3,200 TLS handshakes per second in Nginx with a certificate signed using 2048-bit RSA (ECDHE-RSA-AES128-GCM-SHA256). With an ECDSA certificate (ECDHE-ECDSA-AES128-GCM-SHA256), the same server handles 6,300 handshakes per second, almost twice as many.

Unfortunately, Windows XP before SP3 and some browsers, which still account for a non-zero share of large sites' clients, do not support ECC certificates.
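One way to get ECDSA's speed without losing those clients is to serve both certificate types. This is a sketch under stated assumptions: it needs nginx 1.11.0 or later, which postdates this article and is the first version that accepts `ssl_certificate` more than once. It also needs OpenSSL 1.0.2 or later for separate chains per certificate. File names are placeholders.

```nginx
server {
    listen 443 ssl;
    server_name example.com;  # placeholder

    # ECDSA certificate for clients that support ECC (faster handshakes).
    ssl_certificate     /etc/nginx/ssl/example.com.ecdsa.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.ecdsa.key;

    # RSA certificate as a fallback for clients without ECC support.
    ssl_certificate     /etc/nginx/ssl/example.com.rsa.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.rsa.key;

    # The cipher list must contain both ECDSA- and RSA-authenticated suites;
    # these two are the suites from the benchmark above (illustrative only).
    ssl_ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256;
}
```

The server picks the certificate based on the cipher suites the client offers, so old clients keep working over RSA.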

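The strength of the most common digital signature algorithms depends directly on the strength of the hash function used. The main hash algorithms are:

- MD5: considered insecure today and no longer used;

- SHA-1: used to sign most certificates until 2014, now recognized as insecure;

- SHA-256: the algorithm that is replacing SHA-1;

- SHA-512: rarely used today, so we will not dwell on it.

SHA-1 is already officially considered insecure and is gradually being phased out. In the coming months, Yandex.Browser and other Chromium-family browsers will begin marking as insecure the certificates that are signed with SHA-1 and expire after January 1, 2016. All new certificates are properly signed with SHA-256. Unfortunately, not all browsers and operating systems (Windows XP before SP3) support this hash function, and for truly large resources this may mean losing customers.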

Type of certificate. All end-entity server certificates used for TLS can be roughly divided by validation method into Extended Validation (EV) and others (most often Domain Validated).

Technically, an Extended Validation certificate contains additional fields: the EV flag and often the company's address. An EV certificate implies legal verification that the certificate holder exists, whereas Domain Validated certificates only confirm that the holder actually controls the domain name.

Besides producing the nice green bar in the address line, the EV flag also affects how browsers check revocation status. Even Chromium-family browsers, which use neither OCSP nor CRL and rely only on Google's CRLSets, check the status of EV certificates via OCSP. I will describe how these protocols work in more detail below.

Now that we have dealt with certificates, we need to decide which protocol versions to use. As we all remember, SSLv2 and SSLv3 contain fundamental vulnerabilities, so they must be disabled. Almost all clients now support TLS 1.0. We recommend also enabling TLS 1.1 and 1.2.

If a significant share of your clients still uses SSLv3, you can allow it only with the RC4 algorithm as a compensating measure; we did exactly that during the transition period. However, unless you have as many users with old browsers as we do, I recommend disabling SSLv3 completely. The correct Nginx configuration for protocols looks like this:

`ssl_protocols TLSv1 TLSv1.1 TLSv1.2;`

As for the cipher suites and hash functions used for TLS connections, web servers should use only secure ciphers. The key is to strike a balance between security, performance, and compatibility.

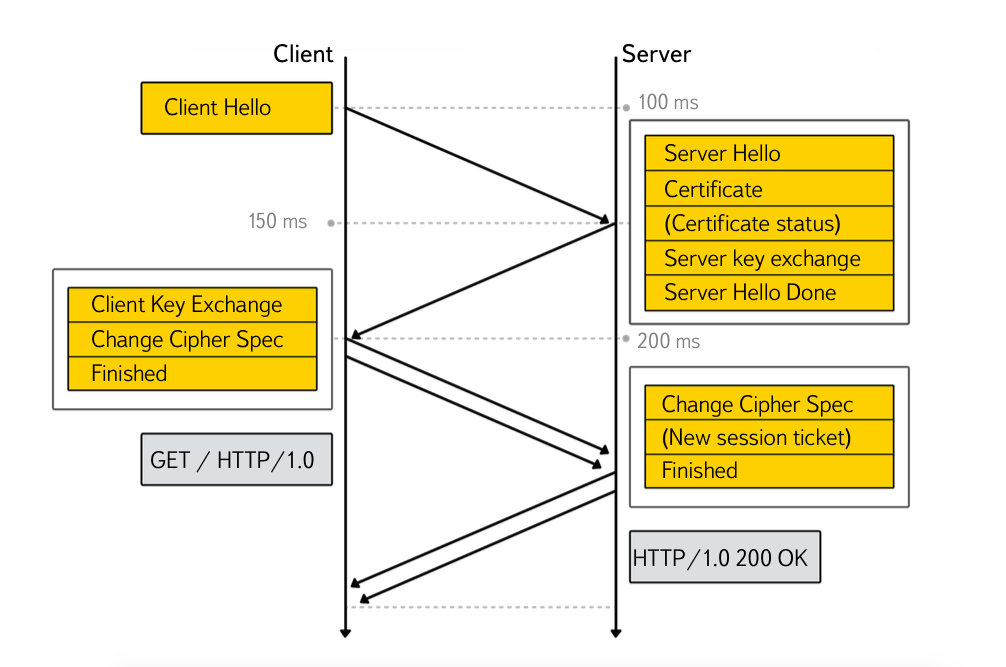

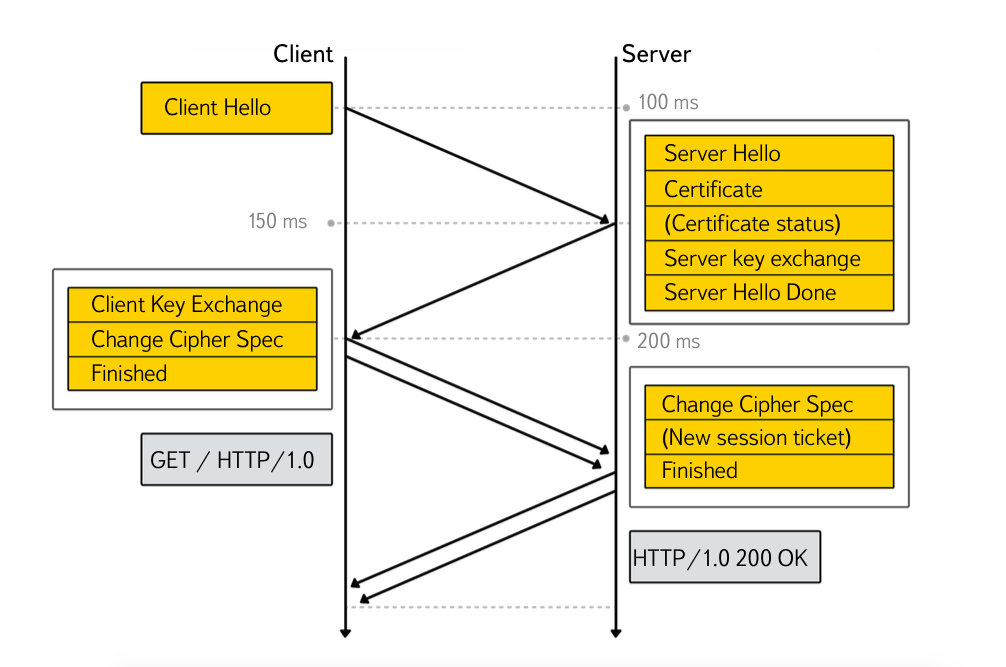

Security vs. Performance

HTTPS is widely believed to be very costly, both in server-side performance and in how fast the client loads and renders resources. This is partly true: a misconfigured HTTPS setup can add two (or more) round-trip times (RTT) to the handshake.

In order to offset the delays arising from the implementation of HTTPS, the following techniques are used:

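Today there are two main protocols for checking certificate status:

- Certificate Revocation Lists (CRL). With this method, the browser downloads over HTTP, from the URL specified in the certificate, a list of serial numbers of revoked certificates. The list is maintained and signed by the CA. Since the file can be large, it is cached for a specified period (most often one week).

- Online Certificate Status Protocol (OCSP). The browser asks the CA's OCSP responder about a single certificate and receives a signed response.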

Both protocols run over HTTP, and the status check blocks the connection. As a result, the location of the servers distributing CRLs and of the OCSP responders directly affects the speed of the TLS handshake.

Browsers check certificate status in different ways. Firefox uses only OCSP for regular certificates but checks CRLs for EV ones. IE and Opera check both CRL and OCSP. Yandex Browser and other Chromium-family browsers do not use the traditional protocols and rely instead on CRLSets: lists of revoked certificates of popular resources delivered with browser updates.

To optimize these checks, a mechanism called OCSP stapling was invented. It lets the server send the client an OCSP response as a TLS extension along with the certificate. All modern desktop browsers except Safari support OCSP stapling.

You can enable OCSP stapling in Nginx with the following directive:

`ssl_stapling on;`

You also need to specify a `resolver`.

But if you run a really large, heavily loaded resource, you will most likely want to make sure that the OCSP responses you cache (Nginx caches them for 1 hour) are correct:

`ssl_stapling_verify on;`
`ssl_trusted_certificate /path/to/your_intermediate_CA_and_root_certs;`
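Putting the stapling directives together, a minimal sketch might look like this. It assumes a hypothetical local DNS resolver at 127.0.0.1; the host name and certificate paths are placeholders. Nginx needs the resolver to look up the host name of the OCSP responder listed in the certificate.

```nginx
server {
    listen 443 ssl;
    server_name example.com;                               # placeholder

    ssl_certificate     /etc/nginx/ssl/example.com.chained.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.key;

    # Staple the OCSP response into the TLS handshake.
    ssl_stapling on;

    # Resolver for the OCSP responder's host name (hypothetical address).
    resolver 127.0.0.1 valid=300s;
    resolver_timeout 5s;

    # Verify responses before caching and stapling them; the file holds the
    # intermediate and root certificates used to check the responder.
    ssl_stapling_verify on;
    ssl_trusted_certificate /path/to/your_intermediate_CA_and_root_certs;
}
```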

With OCSP stapling, large resources can run into the problem of incorrect time on client systems. According to the standard, an OCSP response is valid only within a strictly defined time interval, and the client machine's clock may be 5, 10, or 20 minutes behind. To solve this for users, we had to teach the server to serve responses only about a day after they were generated (we do roughly the same when rolling out new certificates).

This way, people whose system clock is off by up to a day can still connect, and we get the chance to show them a warning about the incorrect time. To rotate OCSP responses ourselves, we use the `ssl_stapling_file` directive. For clients that do not support OCSP stapling, we cache OCSP responder responses in our CDN, which reduces response time.
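`ssl_stapling_file` makes Nginx take a DER-encoded OCSP response from a file instead of querying the responder. The sketch below assumes a hypothetical external job. That job fetches the response, holds it back for about a day as described above, writes it to the configured path and then reloads Nginx, because the file is read when the configuration is loaded. The responder URL and file names are placeholders.

```nginx
server {
    listen 443 ssl;
    server_name example.com;                               # placeholder

    ssl_certificate     /etc/nginx/ssl/example.com.chained.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.key;

    ssl_stapling on;
    # Serve a pre-fetched OCSP response instead of querying the responder.
    # One way to fetch it (placeholder URL and file names):
    #   openssl ocsp -issuer intermediate.crt -cert example.com.crt \
    #     -url http://ocsp.example-ca.test -respout example.com.ocsp.der -noverify
    ssl_stapling_file /etc/nginx/ocsp/example.com.ocsp.der;
}
```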

Another effective way to optimize checks is to use short-lived certificates, that is, certificates that specify no status-check endpoints. However, such certificates are valid only for a very short period, from one to three months.

A Certificate Authority can afford to use such certificates. They are almost always used for OCSP responders. If the responder's certificate contained check endpoints, Internet Explorer might also check the status of the OCSP responder's own certificate, which would add further delay.

But even with OCSP stapling or short-lived certificates, the standard four-step TLS handshake still adds 2 RTT of latency.

The TLS False Start mechanism lets the client send application data after the third step, without waiting for the server's response, which saves 1 RTT. TLS False Start is supported by Chromium-family browsers (including Yandex Browser), IE, Safari, and Firefox.

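Unfortunately, unlike browsers, not all web servers support this mechanism. For this reason, browsers usually enable TLS False Start only when the following requirements are met:

- The server announces NPN/ALPN (not required for Safari and IE);

- The server uses Perfect Forward Secrecy cipher suites.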

Perfect Forward Secrecy

Without forward secrecy (for example, with plain RSA key exchange), an attacker who gained access to the server's private key could passively decrypt all communications that had passed through the server. The Forward Secrecy mechanism (sometimes prefixed with "Perfect") was invented to prevent this. It uses key agreement protocols (usually based on the Diffie-Hellman scheme) and ensures that session keys cannot be recovered even if the attacker obtains the server's private key.

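A typical Nginx configuration for a service that handles user data looks like this:

`ssl_prefer_server_ciphers on;`
`ssl_ciphers kEECDH+AES128:kEECDH:kEDH:-3DES:kRSA+AES128:kEDH+3DES:DES-CBC3-SHA:!RC4:!aNULL:!eNULL:!MD5:!EXPORT:!LOW:!SEED:!CAMELLIA:!IDEA:!PSK:!SRP:!SSLv2;`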

In this configuration, we give the highest priority to AES with a 128-bit key, where the session key is derived using the Elliptic Curve Diffie-Hellman (ECDH) algorithm. Next come all other ECDH ciphers. The second "E" in ECDHE stands for Ephemeral, i.e. a session key that exists only within a single connection.

Next, we allow classic ephemeral Diffie-Hellman (EDH). Note that Diffie-Hellman with a 2048-bit key can be quite expensive.

This part of the config provides PFS support. If your processors support AES-NI, AES is practically free in terms of resources. Next, we disable 3DES and enable AES128 in non-PFS mode. For compatibility with very old clients, we keep 3DES with EDH and 3DES in CBC mode. We turn off the insecure RC4 and other weak algorithms. It is important to use the latest version of OpenSSL: then "AES128" also expands to the AEAD ciphers.
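The ECDH and EDH key exchanges described above take their parameters from two real `ngx_http_ssl_module` directives. This is a minimal sketch; the file path is a placeholder. Both directives can go in the `http` or `server` context.

```nginx
# Generate 2048-bit DH parameters once (this can take a while):
#   openssl dhparam -out /etc/nginx/ssl/dhparam.pem 2048

# Parameters for the classic ephemeral Diffie-Hellman (EDH) suites.
ssl_dhparam /etc/nginx/ssl/dhparam.pem;

# Curve for the ECDH suites; prime256v1 (NIST P-256) is widely supported.
ssl_ecdh_curve prime256v1;
```

A larger DH group raises the CPU cost of every EDH handshake, which is another reason to keep ECDH first in the priority list.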

PFS has a single flaw - performance penalties. Modern web servers (including Nginx) use the event-driven model. In this case, asymmetric cryptography is most often a blocking operation, since the web server process is blocked and the clients it serves suffer. What can we optimize in this place?

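- Session reuse. There are currently two session reuse mechanisms. The first is the session cache: the server stores session parameters, and the client resumes a session by presenting its session ID. The second is session tickets (RFC 5077): the server encrypts the session state with a ticket key and hands it to the client to keep.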

At Yandex, we have special mechanisms for generating session ticket keys and securely delivering them to the target servers.
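Both mechanisms map to real Nginx directives. This is a minimal sketch; the cache size, timeout and key paths are illustrative assumptions. Any key distribution scheme, like the one mentioned above, is outside Nginx and is not shown. The directives can go in the `http` or `server` context.

```nginx
# Server-side session cache (session IDs), shared between worker processes.
# Per the nginx docs, 1 MB of cache holds about 4000 sessions.
ssl_session_cache   shared:SSL:20m;
ssl_session_timeout 10m;

# Session tickets. Servers behind one balancer must share the same keys,
# otherwise a ticket issued by one server cannot be decrypted by another.
ssl_session_tickets on;

# Each file holds 48 bytes of random data, e.g.:
#   openssl rand 48 > /etc/nginx/ssl/ticket_current.key
# The first key encrypts new tickets; the following ones only decrypt old
# tickets, which allows rotation (paths are hypothetical).
ssl_session_ticket_key /etc/nginx/ssl/ticket_current.key;
ssl_session_ticket_key /etc/nginx/ssl/ticket_previous.key;
```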

Once the infrastructure issues are resolved, let's return to the applications. The first thing you need to do is get rid of so-called mixed content. How hard this is depends on the scale of the project and the quantity and quality of the code. In some places sed or nginx will do, but elsewhere you have to hunt for hard-coded http schemes in the DOM tree. Content Security Policy came to our aid; our colleagues from Mail wrote about implementing it earlier.

By adding a CSP header that allows only HTTPS sources on a test stand, you will receive reports about any content loaded via protocols other than HTTPS.
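A hedged sketch of such a header in Nginx, meant for a test stand. It uses report-only mode, so the browser blocks nothing and only sends a JSON report for each violation. `/csp-report` is a hypothetical endpoint you would have to implement yourself. `'unsafe-inline'` and `'unsafe-eval'` are allowed so that the reports focus on non-HTTPS loads rather than on inline scripts.

```nginx
# Report (but do not block) any resource not loaded over https: or data:.
add_header Content-Security-Policy-Report-Only "default-src https: data: 'unsafe-inline' 'unsafe-eval'; report-uri /csp-report";
```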

Once you have got rid of mixed content, it is important to make sure the Secure attribute is set on cookies. It tells the browser that the cookie must not be sent over an unencrypted connection. At Yandex, for now, there are two cookies, sessionid2 and Session_id. One of them is sent only over a secure connection, while the other is left "insecure" for backward compatibility. Without the "secure" cookie, you cannot get into Mail, Disk, and other important services.
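Ideally, the application sets the Secure attribute itself. As a stopgap, Nginx can add it at the proxy. This is a sketch under stated assumptions: `proxy_cookie_flags` exists only in nginx 1.19.3 and later, which postdates this article. `my_session` is a hypothetical cookie name and the backend address is a placeholder.

```nginx
location / {
    proxy_pass http://127.0.0.1:8080;    # hypothetical backend
    # Add Secure (and HttpOnly) to the backend's Set-Cookie for my_session.
    proxy_cookie_flags my_session secure httponly;
}
```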

Finally, once you have verified that your service works correctly over HTTPS and have set up a redirect from the HTTP version to HTTPS, it is important to tell the browser that this resource must no longer be accessed over unprotected HTTP.
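The redirect itself can be a separate plain-HTTP virtual host that does nothing else. This is a minimal sketch with a placeholder host name; `$host` and `$request_uri` preserve the requested host and path.

```nginx
# Plain-HTTP virtual host that only redirects to HTTPS.
server {
    listen 80;
    server_name example.com;    # placeholder
    return 301 https://$host$request_uri;
}
```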

The HTTP Strict Transport Security (HSTS) header was invented for this purpose.

The max-age parameter specifies the period (here, one year) during which the browser must use only the secure protocol. The optional includeSubDomains flag says that all subdomains of the domain may also be accessed only over encrypted connections.

To make users of Chromium-family browsers and Firefox always use secure connections, even on their first visit, you can add your resource to the browsers' HSTS preload list. Besides improving security, this also saves one redirect on the first visit.

To do this, add the preload flag to the header and submit your domain at hstspreload.appspot.com.
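Here is a minimal sketch of sending the header from Nginx. It goes inside the HTTPS `server` block only, since browsers ignore HSTS received over plain HTTP. The value 31536000 is 365 days in seconds. The `always` parameter (nginx 1.7.5+) also adds the header to error responses.

```nginx
# Add "preload" only when you are ready to submit the domain to the list.
add_header Strict-Transport-Security "max-age=31536000; includeSubDomains; preload" always;
```

Preloading is hard to undo once browsers ship the list, so it is safer to run with includeSubDomains for a while before adding the flag.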

For example, Yandex.Passport has been added to the preload list of browsers.

The entire configuration of a single nginx server will look something like this:
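Here is a sketch assembled from the directives discussed in this article, not Yandex's actual configuration. Host names, paths and the resolver and backend addresses are placeholders. It assumes nginx 1.7.5 through 1.9.4 built with `--with-http_spdy_module`; on 1.9.5 and later the SPDY module was replaced by HTTP/2, so use `http2` instead of `spdy`.

```nginx
server {
    listen 80;
    server_name example.com;
    return 301 https://$host$request_uri;
}

server {
    listen 443 ssl spdy default_server;
    server_name example.com;

    # Server certificate followed by the intermediate CA certificates.
    ssl_certificate     /etc/nginx/ssl/example.com.chained.crt;
    ssl_certificate_key /etc/nginx/ssl/example.com.key;

    ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
    ssl_prefer_server_ciphers on;
    ssl_ciphers kEECDH+AES128:kEECDH:kEDH:-3DES:kRSA+AES128:kEDH+3DES:DES-CBC3-SHA:!RC4:!aNULL:!eNULL:!MD5:!EXPORT:!LOW:!SEED:!CAMELLIA:!IDEA:!PSK:!SRP:!SSLv2;
    ssl_dhparam /etc/nginx/ssl/dhparam.pem;

    # Session reuse via the shared server-side cache.
    ssl_session_cache   shared:SSL:20m;
    ssl_session_timeout 10m;

    # OCSP stapling with verification of cached responses.
    ssl_stapling on;
    ssl_stapling_verify on;
    ssl_trusted_certificate /etc/nginx/ssl/intermediate_and_root.crt;
    resolver 127.0.0.1 valid=300s;

    add_header Strict-Transport-Security "max-age=31536000; includeSubDomains" always;

    location / {
        proxy_pass http://127.0.0.1:8080;
        proxy_set_header Host $host;
    }
}
```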

In conclusion, HTTPS is gradually becoming the de facto standard for the web, and it is used not only by browsers: most mobile application APIs are moving to HTTPS as well. You can learn about some features of implementing HTTPS securely in mobile apps from the talk by Yury tracer0tong Leonidchev at the Ya.Subbotnik meetup in Nizhny Novgorod.

Using HTTPS connections is an important part of a secure web service, since it is HTTPS that ensures the confidentiality and integrity of data at the stage of transferring them between the client and the service. We gradually transfer all our services only to an HTTPS connection. Many of them are already working exclusively on it: Passport, Mail, Direct, Metric, Taxi, Yandex.Money, as well as all feedback forms dealing with personal data of users. For more than a year, Yandex.Mail has even been communicating with other SSL / TLS mail services that support this.

')

We all know that HTTPS is HTTP wrapped in TLS. Why TLS, not SSL? Because in principle, TLS is a newer SSL , while the name of the new protocol most accurately describes its purpose. And in the light of the POODLE vulnerability, we can officially assume that SSL can no longer be used.

Along with HTTP in TLS, almost any application layer protocol can be wrapped. For example, SMTP, IMAP, POP3, XMPP, etc. But since the deployment of HTTPS is the most massive problem and due to the peculiarities of the behavior of browsers has a large number of subtleties, I will tell you about it. However, with some assumptions, many things will be true for other protocols. I will try to tell you about the necessary minimum, which will be useful to our colleagues.

I will conditionally divide the story into two parts - infrastructure, where everything that is below HTTP will be, and part about changes at the application level.

Termination

The first thing the team that is going to deploy HTTPS will have to face is the choice of TLS termination method. TLS termination is the process of encapsulating an application layer protocol in TLS. There are usually three options to choose from:

- Use one of the many third-party services - Amazon ELB , Cloudflare , Akamai and others. The main disadvantage of this method will be the need to protect the channels between third-party services and your servers. Most likely, it will still require the deployment of TLS support in one form or another. A big disadvantage will also be complete dependence on the service provider in terms of supporting the necessary functionality, speed of fixing vulnerabilities. A separate problem may be the need to disclose certificates. Despite this, this method will be a good solution for startups or companies using PaaS .

- For companies using their own hardware and their data centers, a possible option is the Hardware load balancer with TLS termination functions. The only advantage here is performance. Choosing such a solution, you become completely dependent on your vendor, and since the same hardware components are often used inside the products, then they are also from chip manufacturers. As a result, the timing of adding any features is far from ideal. The potential customs difficulties with the importation of such products will be left outside this material.

- Software solutions - the "golden mean". Existing opensource solutions - Nginx , Haproxy , Bud , etc. - give you almost complete control over the situation, adding features, optimizations. The downside is performance - it is lower than that of hardware solutions.

In Yandex, we use software solutions. If you follow our path, then an important step in deploying TLS will be for you to unify the components.

Unification

Historically, at different times, our services used different web server software, so in order to unify everything, we decided to abandon most of the decisions in favor of Nginx, and where it is impossible to refuse - to “hide” them behind Nginx. An exception in this case was a search that uses its own development called - suddenly - Balancer.

Balancer can do a lot of things that others, even commercial solutions, cannot. One day, I think the guys will tell about it in more detail. Having a talented development team, we can afford to support one of our own web server in addition to Nginx.

As for cryptography itself, we use the OpenSSL library. Today it is the most stable and productive implementation of TLS with an adequate license. It is important to use OpenSSL version 1+, since it is optimized to work with memory, there is support for all the necessary modern ciphers and protocols. All our further recommendations will be targeted at users of the Nginx web server.

Certificates

To use HTTPS on your service you will need a certificate. A certificate is a public key and some kind of data in the ASN.1 format, signed by a Certificate Authority. Typically, such certificates are signed by intermediate certification authorities (Intermediate CA) and contain the domain name of your service in the Common Name, or the Alt Names extension.

To check the validity of the certificate, the browser tries to verify the validity of the digital signature for the final certificate, and then for each of the intermediate certifying centers. The certificate of the latter in the chain of certification centers must be signed by the so-called Root CA.

Certificates of root authentication centers are stored in the operating system or in the user's browser (for example, in Firefox). When setting up a web server, it is important to send the client not only the server certificate, but also all intermediate ones. You do not need to send the root certificate - it already exists in the OS.

Large companies can afford to have their own Intermediate CA. For example, until 2012, all Yandex certificates were signed by YandexExternalCA. Using your own Intermediate CA gives you both additional opportunities for certificate optimization and pinning, and imposes additional responsibility, as it allows you to write out a certificate for almost any final domain name, and in the case of compromise it can lead to serious consequences, including revocation of the intermediate CA certificate.

Maintaining your own CA can be too expensive and complicated, so some companies use them in the MPKI - Managed PKI mode. Most consumers will be enough to buy certificates using one of the commercial suppliers.

All certificates can be divided into the following characteristics:

- Digital signature algorithm and hash function used;

- Type of certificate.

Digital signature algorithm - public-key cryptographic algorithms are used to sign certificates, most often RSA , DSA or ECDSA . We will not dwell on the algorithms of the GOST family, since they have not yet received mass support in the client software.

Certificates using RSA are the most widely used today and are supported by all versions of protocols and OC.

The disadvantage of this algorithm is key size and comparable performance when generating and verifying a digital signature. Since certificates with a key size of less than 2048 bits are unsafe, and operations with a larger key consume a large amount of processor resources.

DSA-like schemes are faster than RSA when generating a signature (with the same parameter sizes), while ECDSA is much faster than the classical DSA, since all operations take place in a group of points on an elliptical curve. According to our tests, one Xeon 5645 server allows you to make up to 3200 TLS handles per second on the Nginx web server using a certificate signed by RSA with a key size of 2048 bits (ECDHE-RSA-AES128-GCM-SHA256). In this case, using the ECDSA-certificate (ECDHE-ECDSA-AES128-GCM-SHA256), you can already make 6300 handhelds - the difference in performance almost doubled.

Unfortunately, Windows XP <SP3 and some browsers, which share non-zero among the clients of large sites, do not support ECC certificates.

The strength of the most common EDS algorithms is directly dependent on the strength (security) of the hash function used. The main hashing algorithms used are:

MD5- today is considered unsafe and is not used;SHA-1- used to sign most certificates until 2014, is now recognized as unsafe;SHA-256- an algorithm that has already come to replaceSHA-1;SHA-512- is rarely used today, so we will not dwell on it.

SHA-1 already officially considered unsafe today and is gradually being phased out. So Yandex.Browser and other browsers of the Chromium family in the coming months will begin to mark certificates that are signed using SHA-1 and which expire after January 1, 2016 , as unsafe. All new certificates are properly signed using SHA-256 . Unfortunately, not all browsers and OSs (WinXP <sp3) support this hash function, and for truly large resources this may threaten the loss of customers.All server end certificates used for TLS can be conditionally divided according to the validation method - Extended Validated and others (most often Domain Validated).

Technically, in the extended validated certificate, additional fields are added with the EV flag and often with the company address. The presence of an EV certificate implies a legal verification of the existence of the certificate holder, while certificates of the Domain Validated type only confirm that the certificate holder really controls the domain name.

In addition to the appearance of a beautiful green dash, the sign of the EV certificate also affects the behavior of browsers associated with checking their revocation status. So even Chromium family browsers, which use neither OCSP nor CRL, but rely only on Google CRLsets, for EV, check the status using the OCSP protocol. Below I will talk about the features of the work of these protocols in more detail.

Now that we have dealt with the certificates, we need to understand which protocol versions will be used. As we all remember, the SSLv2 and SSLv3 version protocols contain fundamental vulnerabilities. Therefore, they must be disabled. Almost all clients now have support for TLS 1.0. We recommend using TLS versions 1.1 and 1.2.

If you have a significant number of clients using SSLv3, you can only be allowed to use it with the RC4 algorithm as a compensation measure - we did just that for the transition period. However, if you do not have the same number of users with old browsers as ours, I recommend completely disable SSLv3. The correct configuration for Nginx in terms of protocols will look like this:

ssl_protocols TLSv1 TLSv1.1 TLSv1.2;Regarding the choice of ciphersuites or cipher suites and hash functions that will be used for TLS connections, web servers should use only secure ciphers. Here it is important to strike a balance between security, speed, performance and compatibility.

Security vs. Perfomance

It is considered that the use of HTTPS is very costly both in terms of server-side performance and speed of loading and rendering resources on the client side. This is partly true - an incorrectly configured HTTPS can add 2 (and more) Round Trip Times (RTT) for a handshake.

In order to offset the delays arising from the implementation of HTTPS, the following techniques are used:

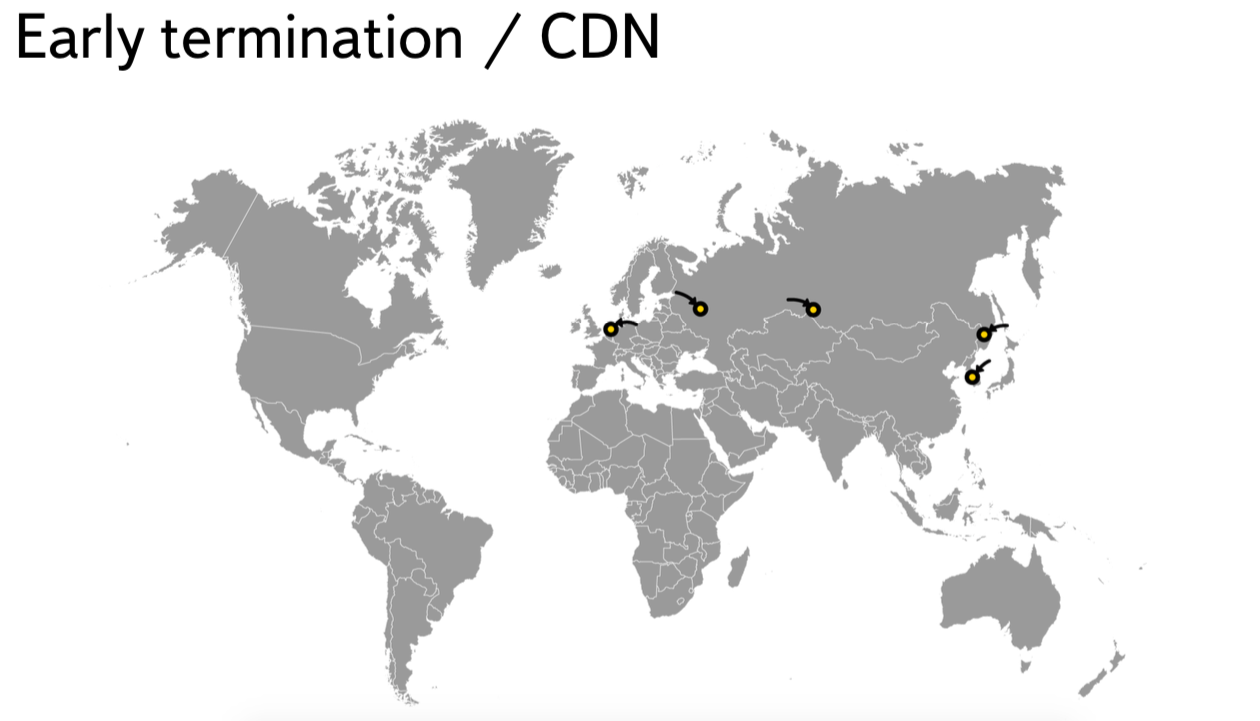

- Content Delivery Networks (CDN). By placing the termination point closer to the client, RTT can be reduced. Thus, making the delays arising from the implementation of HTTPS imperceptible. Yandex successfully uses this technique and constantly increases the number of points of presence.

- Optimization of certificate status checks. At the time of establishing a secure connection, some browsers check the revocation status of the server certificate. Such checks allow you to make sure that the certificate has not been revoked by the owner. The need to revoke a server certificate may arise, for example, after the private keys are compromised. So mass certificates were revoked after the discovery of the Heartbleed vulnerability.

To date, there are two main protocols used to check certificate statuses:

- Certificate Revocation Lists. When using this method, the HTTP browser downloads from the URL specified in the certificate a list of the serial numbers of the revoked certificates. This list is monitored and signed by the CA. Since the file with the list can be large, it is cached for a specified period (most often for 1 week).

- Online Certificate Status Protocol.

Since both protocols run on top of HTTP and at the same time checking the status of the certificate is a blocking procedure, where the servers distributing the CRL or OCSP are located, the responders can directly affect the speed of the TLS handheld.

Different browsers check certificate statuses in different ways. So Firefox uses only OCSP for regular certificates, but CRL is checked for EV. IE and Opera check both CRL and OCSP, and Yandex Browser and other browsers of the Chromium family do not use traditional protocols, relying on CRLsets - lists of revoked certificates of popular resources that come with browser updates.

To optimize the checks, a mechanism called OCSP stapling was also invented, which allows the client to send an OCSP response to the client in the form of a TLS extension along with a certificate. All modern desktop browsers support OCSP stapling (except Safari).

You can enable OCSP stapling in nginx with the following directive:

ssl_stapling on; . It is necessary to specify a resolver .But if you have a really large and loaded resource, most likely you will want to be sure that the OCSP responses that you cache (Nginx caches responses for 1 hour) are correct.

ssl_stapling_verify on; ssl_trusted_certificate /path/to/your_intermediate_CA_and_root_certs; When using OCSP stapling, mass resources can face such a problem as the wrong time on the client system. This happens due to the fact that, according to the standard, the response time of the responder is limited to a clearly defined time interval, and the time on the client machine may be 5-10-20 minutes behind. To solve this problem for users, we had to teach the server to distribute answers about a day after they were generated (we do about the same when laying out new certificates).

Thus, we have the opportunity to show a warning about the wrong time to people who have the system time shot down for a period of up to a day. In order to randomly rotate OCSP responses, the " ssl_stapling_file " directive is used. For those customers who do not support OCSP stapling, we use the caching of OCSP response responders in our CDN, thereby reducing the response time.

Another effective way to optimize checks is to use short-lived certificates, that is, those in which no status check points are specified. But the period of life of such certificates is very short - from one to three months.

The Certificate Authority can afford to use such certificates. They are almost always used for OCSP responders, since if there are check points in the certificate, Internet Explorer can also check the certificate status of the OCSP responder itself, which will create additional delays.

But even when using OCSP stapling or short-lived certificates, the standard TLS handshake (4 steps) will add 2 RTT delays.

The TLS False Start mechanism allows you to send application data after Stage 3, without waiting for a server response, thus saving 1 RTT. TLS False Start is supported by browsers of the Chromium family and Yandex Browser, IE, Safari, Firefox.

Unfortunately, unlike browsers, not all web servers support this mechanism. Therefore, the following requirements are usually the signal for using TLS False Start:

- Server announces NPN / ALPN (not required for Safari and IE);

- The server uses Perfect Forward Secrecy ciphersuites.

Perfect Forward Secrecy

Prior to SSLv3, an attacker who gained access to the server's private key could passively decrypt all communications that went through the server. Later, the Forward Secrecy mechanism (sometimes using the Perfect prefix) was invented, which uses key agreement protocols (usually based on the Diffie-Hellman scheme and ensures that session keys cannot be recovered, even if the attacker gets access to the server's private key.

A typical nginx configuration for a service that handles user data looks like this:

ssl_prefer_server_ciphers on;
ssl_ciphers kEECDH+AES128:kEECDH:kEDH:-3DES:kRSA+AES128:kEDH+3DES:DES-CBC3-SHA:!RC4:!aNULL:!eNULL:!MD5:!EXPORT:!LOW:!SEED:!CAMELLIA:!IDEA:!PSK:!SRP:!SSLv2;

In this configuration, we give the highest priority to AES with a 128-bit session key negotiated with the Elliptic Curve Diffie-Hellman (ECDH) algorithm. Any other ECDH-based ciphers come next. The extra "E" in EECDH stands for Ephemeral: the key-exchange key exists only within a single connection.

Next, we allow classic ephemeral Diffie-Hellman (EDH). Note that Diffie-Hellman with 2048-bit parameters can be quite expensive.

This part of the config provides PFS support. If your processors support AES-NI, AES is practically free in terms of resources. We then remove 3DES from the list built so far and allow AES128 in non-PFS mode (kRSA). For compatibility with very old clients, we keep EDH with 3DES and plain 3DES in CBC mode (DES-CBC3-SHA). We also switch off the insecure RC4 and the other weak options. It is important to use a recent OpenSSL version: "AES128" then also expands to the AEAD ciphers (AES-GCM).
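To see what such a cipher string actually expands to on a given machine, you can ask OpenSSL through Python's `ssl` module. The command `openssl ciphers -v '<string>'` gives the same information. This is a sketch, and the exact list depends on the local OpenSSL build; newer versions may drop 3DES or some DHE suites entirely. The constant and function names are hypothetical.

```python
import ssl

# The cipher string from the nginx config above, split for readability.
YANDEX_STYLE_CIPHERS = (
    "kEECDH+AES128:kEECDH:kEDH:-3DES:kRSA+AES128:kEDH+3DES:DES-CBC3-SHA:"
    "!RC4:!aNULL:!eNULL:!MD5:!EXPORT:!LOW:!SEED:!CAMELLIA:!IDEA:!PSK:!SRP:!SSLv2"
)


def expand_cipher_string(cipher_string: str) -> list[str]:
    """Suites selected by an OpenSSL cipher string, in server preference order."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.set_ciphers(cipher_string)  # raises ssl.SSLError if nothing matches
    # get_ciphers() also lists TLS 1.3 suites, which cipher strings don't control.
    return [c["name"] for c in ctx.get_ciphers() if c["protocol"] != "TLSv1.3"]


if __name__ == "__main__":
    for name in expand_cipher_string(YANDEX_STYLE_CIPHERS):
        print(name)
```

Reading the output top to bottom shows the priority order described above: ECDHE with AES128 first, then the other ECDHE and DHE suites, and plain RSA key exchange last.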

PFS has only one drawback: a performance penalty. Modern web servers, including nginx, use an event-driven model. In this model, asymmetric cryptography is usually a blocking operation: while it runs, the worker process is blocked, and all the clients it serves have to wait. What can we optimize here?

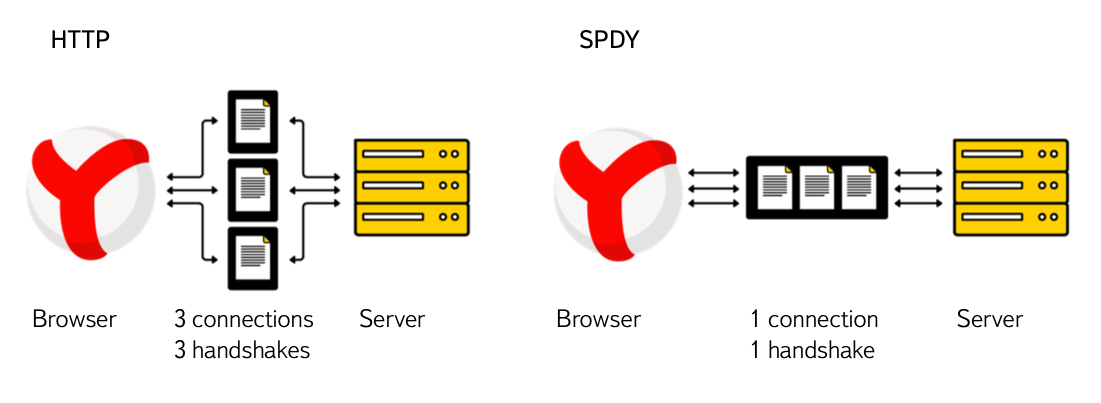

- SPDY.

If you have read about our experience deploying SPDY in Mail, you will have noticed that SPDY reduces the number of connections and therefore the number of handshakes. In nginx 1.5+, SPDY is enabled by adding four letters to the config. nginx must be built with the SPDY module (--with-http_spdy_module):

listen 443 default spdy ssl;

- Use elliptic curve cryptography. Asymmetric algorithms based on elliptic curves are more efficient than their classical counterparts, which is why we give ECDH a higher priority when configuring ciphersuites. As I wrote earlier, besides ECDH you can use certificates with elliptic-curve digital signatures (ECDSA), which further improves performance.

Unfortunately, Windows XP before SP3 and some other clients do not support ECC certificates, and their share among visitors of large sites is non-zero. One solution is to serve different certificates to different clients, saving resources on newer clients, which are the majority. OpenSSL 1.0.2 can select the server certificate depending on the client's capabilities. Unfortunately, nginx out of the box does not allow several certificates for one server.

- Use session reuse. Session reuse not only saves server resources for PFS/False Start connections (asymmetric cryptography is skipped) but also reduces the TLS handshake latency to 1 RTT for regular connections.

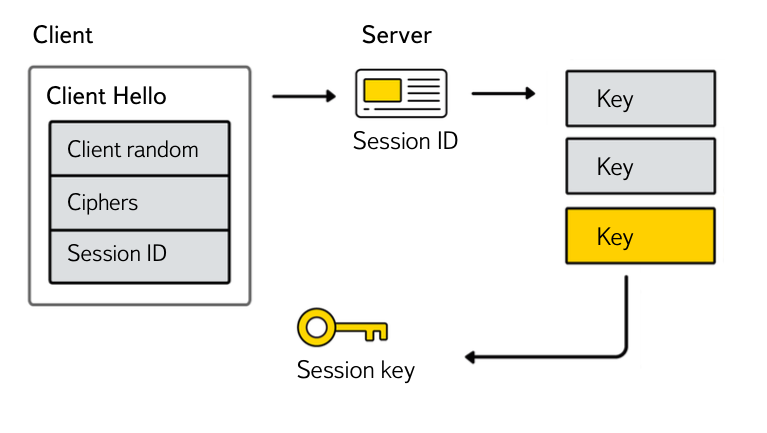

Currently there are two session reuse mechanisms:

- SSL session cache. Each client is given a unique identifier, and the server stores the session key under that identifier. The advantage is support by almost all browsers, including old ones. The downside is that the cache, which contains critical data, has to be synchronized between physical servers and data centers, and this can lead to security problems.

With nginx, the session cache works only if the client reaches the same real server where the initial SSL handshake took place. We still recommend enabling the SSL session cache, because it helps in configurations with a small number of real servers, where a user is more likely to hit the same one.

In nginx, the configuration looks something like this. SOME_UNIQ_CACHE_NAME is the cache name; we recommend a distinct name for each certificate, although this is not necessary in nginx 1.7.5+ and 1.6.2+. 128m is the cache size, and 28h is the session lifetime:

ssl_session_cache shared:SOME_UNIQ_CACHE_NAME:128m;
ssl_session_timeout 28h;
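A back-of-the-envelope calculation helps to pick the cache size for a given session lifetime. The sketch below relies on the figure from the nginx documentation that one megabyte of cache holds about 4000 sessions. The per-server rate of new sessions is an input you would have to measure, and the function names are hypothetical.

```python
# "One megabyte of the cache contains about 4000 sessions" (nginx documentation
# for ssl_session_cache); the real figure depends on the build.
SESSIONS_PER_MB = 4000
SECONDS_PER_HOUR = 3600


def sessions_that_fit(cache_mb: int) -> int:
    """Approximate number of sessions a shared cache of cache_mb megabytes holds."""
    return cache_mb * SESSIONS_PER_MB


def cache_mb_needed(new_sessions_per_second: float, timeout_hours: float) -> float:
    """Cache size at which, at a steady rate, no session is evicted before it expires."""
    live_sessions = new_sessions_per_second * timeout_hours * SECONDS_PER_HOUR
    return live_sessions / SESSIONS_PER_MB
```

For example, `cache_mb_needed(5, 28)` gives 126.0, so a 128m cache with a 28h timeout covers roughly five new sessions per second per server. At a higher rate, the oldest sessions are evicted before they time out.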

As you increase the session lifetime, be prepared for records like this to appear in the error log:

2014/03/18 13:36:08 [crit] 18730#0: ngx_slab_alloc() failed: no memory in SSL session shared cache "SSL"

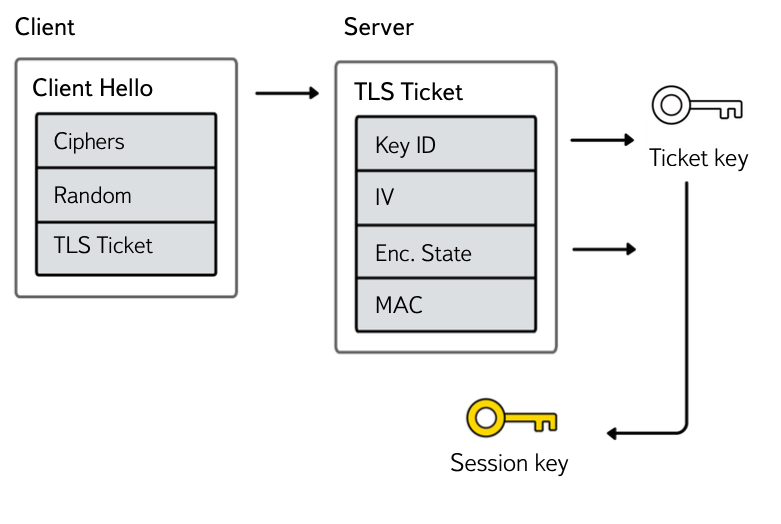

This is caused by the way nginx evicts data from the session cache. It tries to allocate memory for the new session; if the limit is reached, it removes one of the oldest sessions and retries. The session is therefore added to the cache successfully, but an error is logged after the first failed call to the allocator. Such errors can be ignored, since they do not affect functionality (fixed in nginx 1.4.7).

- TLS session tickets. This mechanism is supported only by Chromium-family browsers, including Yandex Browser, and by Firefox. The client receives the session state encrypted with a key known only to the server, together with the key identifier. Only the keys then have to be shared between servers.

nginx supports static session ticket keys starting with version 1.5.8. When working with multiple servers, TLS session tickets are configured as follows:

ssl_session_ticket_key current.key;
ssl_session_ticket_key prev.key;
ssl_session_ticket_key prevprev.key;

Here current.key is the key currently in use. nginx encrypts new tickets only with the first key listed and uses the others to decrypt tickets issued earlier. prev.key is the key that was in use N hours before current.key, and prevprev.key is the one in use N hours before prev.key. N should equal the value of ssl_session_timeout. We recommend starting with 28 hours.

The key rotation method is important, because an attacker who steals a ticket encryption key can decrypt all sessions (including PFS ones) established during that key's lifetime.

At Yandex, we have dedicated mechanisms for generating these keys and securely delivering them to the target servers.
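Yandex's own key generation and delivery mechanism is not described here. Below is a single-host sketch of the rotation scheme above. It assumes the three file names from the config, and that some other process (not shown) copies the files to every server and reloads nginx. The helper names are hypothetical. The 48-byte key size follows the nginx documentation, which also accepts 80-byte keys in newer versions.

```python
import os
from pathlib import Path

# File names from the ssl_session_ticket_key lines above, newest first.
KEY_FILES = ("current.key", "prev.key", "prevprev.key")
TICKET_KEY_BYTES = 48  # random bytes per key file expected by nginx


def _write_key(path: Path) -> None:
    """Write a fresh random key readable only by its owner, replacing atomically."""
    tmp = path.with_name(path.name + ".tmp")
    fd = os.open(tmp, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "wb") as f:
        f.write(os.urandom(TICKET_KEY_BYTES))
    os.replace(tmp, path)


def rotate_ticket_keys(key_dir: Path) -> None:
    """Shift current -> prev -> prevprev and generate a new current key.

    Meant to run every N hours (N = ssl_session_timeout): each key encrypts
    new tickets for N hours and is kept for decryption for 2N more.
    """
    current, prev, prevprev = (key_dir / name for name in KEY_FILES)
    if not all(p.exists() for p in (current, prev, prevprev)):
        # First run: fill every slot with an independent random key.
        for p in (current, prev, prevprev):
            _write_key(p)
        return
    os.replace(prev, prevprev)  # the old prevprev key is discarded here
    os.replace(current, prev)
    _write_key(current)
```

Running this from a scheduler every N hours keeps the window in which a stolen key is useful bounded to about 3N hours after the key was generated.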

Applications

CSP

Once the infrastructure issues are resolved, let's get back to applications. The first thing to do is to get rid of so-called mixed content. How hard this is depends on the scale of the project and on the amount and quality of its code. In some places sed or nginx will do, but elsewhere you have to hunt for hard-coded http:// schemes in the DOM tree. Content Security Policy came to our aid here; our colleagues from Mail have written about deploying it before.
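For static files where literal URLs can be fixed mechanically, the sed approach might look like this in Python. The allow-list of HTTPS-ready hosts and the function name are hypothetical. URLs assembled at runtime in JavaScript are out of reach for such a rewrite.

```python
import re

# Hypothetical hosts already known to serve identical content over HTTPS.
HTTPS_READY_HOSTS = frozenset({"static.example.com", "img.example.com"})

# A literal http:// URL whose host is followed by a path, quote, bracket,
# whitespace or end of text. URLs with an explicit port are left untouched.
_HTTP_URL = re.compile(r"http://([A-Za-z0-9.-]+)(?=[/\"'\s>)]|$)")


def upgrade_mixed_content(text: str, hosts=HTTPS_READY_HOSTS) -> str:
    """Rewrite http:// to https:// only for hosts on the allow-list."""
    def replace(match: re.Match) -> str:
        host = match.group(1)
        return "https://" + host if host.lower() in hosts else match.group(0)
    return _HTTP_URL.sub(replace, text)
```

Restricting the rewrite to an allow-list avoids blindly switching third-party URLs to hosts that may not serve HTTPS at all.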

By adding the following header on a test environment, you will receive reports about any content loaded over schemes other than data: and https:

Content-Security-Policy-Report-Only: default-src https:; script-src https: 'unsafe-eval' 'unsafe-inline'; style-src https: 'unsafe-inline'; img-src https: data:; font-src https: data:; report-uri /csp-report

Secure cookies

After you get rid of mixed content, make sure the Secure attribute is set on cookies. It tells the browser that the cookie must not be sent over an unencrypted connection. At Yandex, for example, there are currently two session cookies, sessionid2 and Session_id. One is sent only over secure connections, while the other is left "insecure" for backward compatibility. Without the secure cookie you cannot access Mail, Disk, and other important services.
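In Python, for instance, the standard `http.cookies` module can produce a header like the one below. The cookie name and value are taken from that example. The helper name is hypothetical, and Yandex's real `Session_id`/`sessionid2` pair is not modeled.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id: str) -> str:
    """Build a Set-Cookie header carrying the Secure and HttpOnly attributes."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["secure"] = True    # browser sends it only over HTTPS
    cookie["session"]["httponly"] = True  # not readable from JavaScript
    return cookie.output(header="Set-Cookie:")
```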

Set-Cookie: session=1234567890abcdef; HttpOnly; Secure

HSTS

Finally, once you have verified that your service works correctly over HTTPS and have set up a redirect from HTTP to HTTPS, it is important to tell the browser that this resource must no longer be accessed over unprotected HTTP.

This is what the HTTP Strict Transport Security header was invented for.

Strict-Transport-Security: max-age=31536000; includeSubDomains;

The max-age parameter specifies the period (here, one year) during which only the secure protocol may be used. The optional includeSubDomains flag says that all subdomains of this domain may also be accessed only over encrypted connections.

To make users of Chromium-family browsers and Firefox always use secure connections, even on their very first visit, you can add your resource to the browser's built-in HSTS preload list. Besides improving security, this also saves one redirect on the first visit.

To do this, add the preload flag to the header and submit the domain at hstspreload.appspot.com:

Strict-Transport-Security: max-age=31536000; includeSubDomains; preload

For example, Yandex.Passport has been added to the browsers' preload list.
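When checking what a server actually sends, it helps to parse the header rather than compare strings. Directive names are case-insensitive, so `includeSubdomains` and `includeSubDomains` are the same directive. Below is a minimal Python sketch with a hypothetical function name. `preload` is not part of RFC 6797 and is treated here simply as the flag the preload list looks for.

```python
def parse_hsts(header_value: str) -> dict:
    """Parse a Strict-Transport-Security header value into its directives."""
    result = {"max_age": None, "include_subdomains": False, "preload": False}
    for directive in header_value.split(";"):
        name, _, value = directive.strip().partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        if name == "max-age":
            # The value may be a bare token or a quoted string.
            result["max_age"] = int(value.strip().strip('"'))
        elif name == "includesubdomains":
            result["include_subdomains"] = True
        elif name == "preload":
            result["preload"] = True
    return result
```

For example, `parse_hsts("max-age=31536000; includeSubDomains; preload")` reports a max-age of 31536000 seconds, that is 365 days, with both flags set.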

Conclusion

The entire configuration of a single nginx server will look something like this:

http {
    [...]
    ssl_stapling on;
    resolver 77.88.8.1; # or a local resolver at 127.0.0.1
    keepalive_timeout 120 120;

    server {
        listen 443 ssl spdy;
        server_name yourserver.com;

        ssl_certificate /etc/nginx/ssl/cert.pem;
        ssl_certificate_key /etc/nginx/ssl/key.pem;
        ssl_dhparam /etc/nginx/ssl/dhparam.pem; # openssl dhparam -out /etc/nginx/ssl/dhparam.pem 2048

        ssl_prefer_server_ciphers on;
        ssl_protocols TLSv1 TLSv1.1 TLSv1.2;
        ssl_ciphers kEECDH+AES128:kEECDH:kEDH:-3DES:kRSA+AES128:kEDH+3DES:DES-CBC3-SHA:!RC4:!aNULL:!eNULL:!MD5:!EXPORT:!LOW:!SEED:!CAMELLIA:!IDEA:!PSK:!SRP:!SSLv2;

        ssl_session_cache shared:SSL:64m;
        ssl_session_timeout 28h;

        add_header Strict-Transport-Security "max-age=31536000; includeSubDomains;";
        add_header Content-Security-Policy-Report-Only "default-src https:; script-src https: 'unsafe-eval' 'unsafe-inline'; style-src https: 'unsafe-inline'; img-src https: data:; font-src https: data:; report-uri /csp-report";

        location / {
            ...
        }
    }
}

In conclusion, I would like to add that HTTPS is gradually becoming the de facto standard on the web, and not only for browsers: most mobile application APIs are moving to HTTPS as well. You can learn about some aspects of implementing HTTPS securely in mobile apps from the talk by Yury Leonychev (tracer0tong) at the Yandex Subbotnik meetup in Nizhny Novgorod.

Source: https://habr.com/ru/post/249771/
