Intel® Graphics Technology. Part I: Almost Gran Turismo

In a post about the "innovations" of Parallel Studio XE 2015, I promised to write about an interesting technology from Intel - Graphics Technology. Actually, that's what I'm going to do now. The essence of Intel Graphics Technology is to use a graphics core integrated into the processor to perform calculations on it. This is offload on the chart, which naturally gives a performance boost. Is integrated graphics so powerful that this increase will be really great?

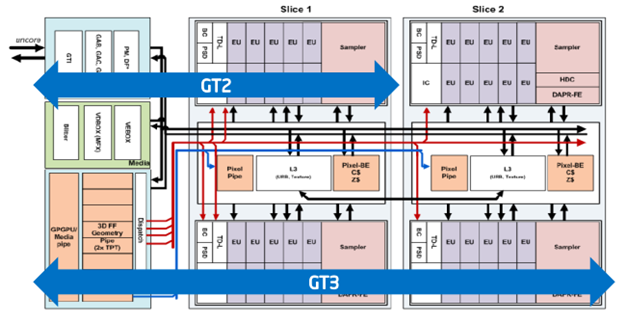

Let's look at the family of new graphics cores GT1, GT2 and GT3 / GT3e, integrated into 4th generation Intel Core processors.

Yes, the graphics were also in the 3rd generation, but these are already “the deeds of bygone days”. The GT1 core has minimum performance, and the GT3 has the maximum:

| HD (GT) | HD 4200 HD 4400 HD 4600 (GT2) | HD 5000 Iris 5100 (GT3), Iris Pro 5200 (GT3e) | |

|---|---|---|---|

| API | DirectX 11.1, DirectX Shader Model 5.0, OpenGL 4.2, OpenCL 1.2 | ||

| Number executive blocks (Execution Unit) | ten | 20 | 40 |

| The number of FP operations per beat | 160 | 320 | 640 |

| The number of threads per executive device / total | 7/70 | 7/140 | 7/280 |

| L3 cache | 256 | 512 | 1024 |

That is, for the case of GT1, everything will be almost the same, only the “layer” needs to be cut in half horizontally. We will not waste time on trifles, and dwell on the possibilities of GT3e graphics, as the most advanced example. So, we have 40 execution units with 7 threads per block. In total, we have up to 280 streams! A good increase in power for the "engine" of our system!

In addition, each stream is available at 4 KB in the register file (GRF - General Register File) - the fastest graphics memory for storing local data. The total file size is 1120 KB.

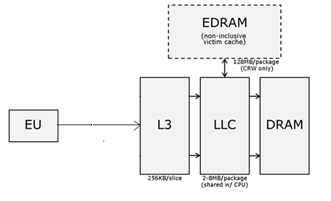

In general, the memory model is of great interest and can be schematically represented as follows:

In addition to registers, the graphics also have their own L3 cache (256 KB for every ½ "layer"), as well as LLC (Last Level Cache), which is L3 processor cache and, thus, common for CPU and GPU. From the point of view of computing on the GPU, there are no L1 and L2 caches. Only in the most powerful GT3e configuration is another 128 MB of eDRAM cache available. It is in the same package with the processor component, but is not part of the Haswell chip, and plays an important role in increasing the performance of the integrated graphics, almost eliminating the dependence on computer RAM (DRAM), some of which can also be used as video memory.

Not all versions of processors have the same integrated graphics. Server models prefer to have significantly more processing cores instead of graphics, so the cases in which the use of Graphics Technology is possible are significantly narrowed. I finally got a laptop with Haswell and Intel HD Graphics 4400 integrated into the processor, which means you can play with Intel Graphics Technology, which is supported on 64-bit Linux systems, as well as on 32-bit and 64-bit Windows systems.

')

Actually, on demand for the hardware, everything is clear - without it, it’s pointless to talk about calculations on the graphics core. The documentation (yes, yes ... I even had to read it, because everything did not work right away) said that everything should work with these models:

- Intel Xeon Processor E3-1285 v3 and E3-1285L v3 (Intel C226 Chipset) with Intel HD Graphics P4700

- 4th Generation Intel Core Processors with Intel Iris Pro Graphics, Intel Iris Graphics or Intel HD Graphics 4200+ Series

- 3rd generation Intel Core processors with Intel HD Graphics 4000/2500

“The piece of iron is suitable, the compiler with GT support is set. Everything should fly! ”- I thought, and began to collect the samples supplied with the compiler for Graphics Technology.

From the point of view of the code, I did not notice anything extraordinary. Yes, there were some pragmas before the cilk_for cycles, like these:

void vector_add(float *a, float *b, float *c){ #pragma offload target(gfx) pin(a, b, c:length(N)) cilk_for(int i = 0; i < N; i++) c[i] = a[i] + b[i]; return; } We will talk about this in detail in the next post, but for now let's try to collect a sample with the / Qoffload option. It seems that everything compiled, but the error stating that the linker (ld.exe) could not be found stopped me a bit. It turned out that I missed one important point and not everything is so trivial. I had to dig into the documentation.

It turned out that the software stack for running an application with offload on integrated graphics looks like this:

The compiler does not know how to generate code that can be immediately executed on a chart. It creates an IR (Intermediate Representation) code under a vISA (Virtual Instruction Set Architecture) architecture. And that, in turn, can be executed (converted in runtime) on the graph with the help of JIT's, supplied in the installation package with drivers for Intel HD Graphics.

When compiling our code using offload for Graphics Technology, an object manager is generated in which the part that runs on the graphics core is “embedded”. This shared file is called fat . When linking such fat people (fat object managers), the code running on the graph will be in the section embedded in the binary on the host, called .gfxobj (for the case of Windows).

Here it becomes clear why there was no linker. The Intel compiler does not and did not have its own linker, and what about Linux, what about Windows. And here in one file you need to “sew” object files in different formats. Microsoft's simple linker cannot do this, so you need to install a special version of binutils (2.24.51.20131210), available here , and then prescribe the same ld.exe (in my case C: \ Program Files (x86) \ Binutils for MinGW (64 bit) \ bin ) in PATH.

After installing everything I needed, I finally collected a test project on Windows and got the following:

dumpbin matmult.obj Microsoft (R) COFF/PE Dumper Version 12.00.30723.0 Copyright (C) Microsoft Corporation. All rights reserved. Dump of file matmult.obj File Type: COFF OBJECT Summary 48 .CRT$XCU 2C .bss 5D0 .data 111C .data1 148F4 .debug$S 68 .debug$T 32F .drectve 33CF8 .gfxobj 6B5 .itt_notify_tab 8D0 .pdata 5A8 .rdata AD10 .text D50 .xdata The required object for execution on the graph can be extracted from the fat object using a special tool (offload_extract). If the environment for running the Intel compiler is set in our console, it is quite simple to do this:

offload_extract matmult.obj As a result, in the daddy you can find a separate object with the prefix GFX at the end, in my case - matmultGFX.o. By the way, he has never been in PE format, but in ELF.

By the way, if offload is not possible and the graphics core is not available while the application is running, execution occurs on the host (CPU). This is achieved using compiler tools and offload runtime.

With how everything should work, we figured out. Then we will talk about what is available to the developer and how to write code that will eventually work on the chart.

There was so much information that in the framework of one post everything still does not fit, therefore, as they say, “to be continued ...”.

Source: https://habr.com/ru/post/249743/

All Articles