How we made an Oculus Rift mod for World of Tanks

Backstory

About a year and a half ago, a DK1 fell into the hands of developers at Wargaming's Minsk studio. A month later, once everyone had had their fill of Team Fortress and Quake in full 3D, the idea arose to build Oculus support into Tanks itself. Read on for the process, the results, and the pitfalls of working with Oculus.

In World of Tanks we support a certain number of gaming peripherals that extend the player's UX (vibrating seat pads, for example). However, with the arrival of the two-billion-dollar Oculus Rift, we decided it was time to treat not only players' backsides but also their eyes to some new candy.


Honestly, no one knew what it should look like or how it would help the player. The task of developing the mod was set, as they say, "just for lulz". At a leisurely pace, in the moments when the brain refused to think about our main tasks, we began the integration: we downloaded the Oculus SDK, installed it, and started digging into the source code of the examples.

The work began on the first devkit, which was somewhat hazardous to one's sanity: its screens had a very low resolution. Fortunately, we soon got our hands on the HD version, which was much more pleasant to work with.

SDK

We started development with SDK version 0.2.4 for the DK1. When the HD Prototype arrived, no new SDK version was required, so we spent 90% of the time on an SDK that was rather old but still met our needs. Then, when work on the Oculus mod was almost complete, a DK2 came to us, and it turned out that the old SDK no longer worked with it. But is that a problem? We downloaded the new SDK, by then version 0.4.2. It suddenly turned out that it had been rewritten almost from scratch. I had to change nearly the entire wrapper around the device and make various other edits. But the most interesting part was the rendering. Where the pixel shader used to be fairly simple, in the new version it had been changed and made more complex. The lenses are to blame. I don't know why this decision was made, but the lenses have a side effect: terrible chromatic aberration, which decreases from the edge of the field of view towards the center. The pixel shader was rewritten to correct this defect. The decision is odd, to say the least: degrading application performance to compensate for a strange engineering choice. But! An inquisitive mind and resourcefulness will save the galaxy: it turned out that the lenses from the DK1 and HD P also fit the DK2 perfectly, and they have no such side effects. There you go.
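To give a feel for why a shader that corrects chromatic aberration costs more, here is a minimal Python sketch of the general idea: each color channel is sampled at a slightly different radial scale, so one texture lookup becomes three. This is not the SDK's shader; the function names and all coefficients (K, CHROMA) are illustrative assumptions.

```python
import math

# Hypothetical radial distortion polynomial coefficients (assumed values).
K = (1.0, 0.22, 0.24, 0.0)

# Hypothetical per-channel scale terms (constant, r^2 factor); green is the reference.
CHROMA = {"red": (0.996, -0.004), "green": (1.0, 0.0), "blue": (1.014, 0.0)}


def distortion_scale(r, k):
    """Radial scale for a point at distance r from the lens center."""
    r2 = r * r
    return k[0] + k[1] * r2 + k[2] * r2 * r2 + k[3] * r2 * r2 * r2


def sample_coords(x, y, k=K, chroma=CHROMA):
    """Return the source coordinates to sample for each color channel.

    (x, y) is measured from the lens center. Without chromatic correction
    a single lookup at the "green" coordinates would be enough.
    """
    r = math.hypot(x, y)
    r2 = r * r
    base = distortion_scale(r, k)
    coords = {}
    for channel, (c0, c1) in chroma.items():
        s = base * (c0 + c1 * r2)
        coords[channel] = (x * s, y * s)
    return coords


if __name__ == "__main__":
    for radius in (0.0, 0.5, 1.0):
        print(radius, sample_coords(radius, 0.0))
```

In this sketch the three channels coincide at the center and drift apart towards the edge, which matches the observation that the aberration is strongest at the edge of the field of view.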

Initialization

The device initialization and context setup were carried over wholesale from the SDK example: Ctrl+C, Ctrl+V in action. There are no pitfalls here.

Rendering

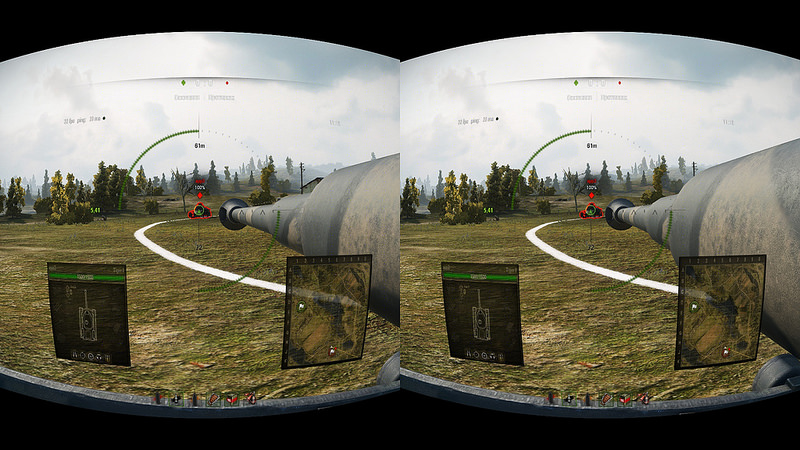

The stereoscopic image in the device is obtained in the classic way: the scene is drawn from two different viewpoints, and each image is presented to the corresponding eye. Read more about this here.

In line with this, our original algorithm for producing the stereoscopic image was as follows:

1. Setting up the matrices

Since each eye needs a render with a small offset, the original view matrix must be modified first. The required transformation matrices for each eye are provided by the Oculus Rift SDK. The modification is as follows:

• Get the additional transformation matrix.

• Transpose it (Oculus Rift uses a different coordinate system).

• Multiply the obtained matrix on the left by the original one.

2. Rendering twice with different matrices

After modifying the view matrix for the left eye and setting it in the render context, we draw as usual. Then we modify the view matrix again for the right eye, set it in the render context, and draw once more.

3. Lens distortion post-effect

To fill more of the field of view, Oculus uses lenses whose side effect is geometric distortion of objects. To cancel it, an additional post-effect is applied to the final render, distorting the image in the opposite direction.

4. Output of the final image

Finally, the image is formed by combining the renders for both eyes, each drawn on its own half of the screen, and applying the distortion post-effect to them.
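The matrix and viewport steps of this algorithm can be sketched in a few lines of Python. This is an illustration under assumptions, not our engine code: the per-eye adjustment is modeled as a plain half-IPD translation, the engine is assumed to use the row-vector convention (so the transposed adjustment is applied as view × adjustment), and draw_scene is a hypothetical callback standing in for the real render call.

```python
def translation(tx, ty, tz):
    """Translation matrix in column-vector convention (offset in the last column)."""
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def eye_view_matrix(view, eye_adjust):
    """Transpose the adjustment, then combine it with the original view matrix.

    Assumption: row-vector convention, so the adjustment goes on the right
    and is applied after the original view transform.
    """
    return matmul(view, transpose(eye_adjust))


def eye_viewport(eye, width, height):
    """Each eye gets its own half of the screen: (x, y, w, h)."""
    half = width // 2
    return (0 if eye == "left" else half, 0, half, height)


def render_stereo(view, ipd, width, height, draw_scene):
    """Draw the scene twice, once per eye, with a shifted view matrix."""
    half_ipd = ipd / 2
    for eye, offset in (("left", half_ipd), ("right", -half_ipd)):
        adjust = translation(offset, 0, 0)
        draw_scene(eye_view_matrix(view, adjust), eye_viewport(eye, width, height))


if __name__ == "__main__":
    identity = translation(0, 0, 0)
    render_stereo(identity, 0.064, 1280, 800,
                  lambda view, viewport: print(viewport, view[3]))
```

The distortion post-effect would then run over the combined image; it is left out here to keep the sketch short.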

Challenges

In the course of development, we quickly discovered that there are currently no clear, guaranteed-to-work guidelines for using the Oculus Rift in games. If you run several different games with Oculus Rift support, each of them will implement its interface and controls differently. As far as we know, the Oculus Rift developers deliberately avoid imposing strict requirements and rules; their idea is that game developers should experiment themselves. For this reason, we had to redo our Oculus integration more than once during development: it would seem to be ready, but at some point something would feel wrong or annoying, and as a result half of the logic had to be thrown away.

A couple of our own features add to the fun. Firstly, in World of Tanks the camera mostly hangs above the tank, and turning your head in the Oculus in this mode, by design, does not match your real-world experience. In real life you cannot hover above the vehicle you are driving (though sometimes it would be so useful...).

In addition, World of Tanks is a PvP game, where you are opposed by

Common UI Problem

In our game, as in most others, almost the entire UI (both in the hangar and in battle) is located along the edges of the screen. If the UI is output without any additional modification, a problem arises: because of the way the Oculus is built, only the central part of the image is in view, and the UI falls outside that part, entirely or partially.

Here are the ways we tried to solve this problem.

1. Shrink the interface and draw it in the center of the screen so that it falls into view. To do this, the UI has to be rendered into a separate render target and then applied during post-processing.

This method solves the UI visibility problem, but it drastically reduces information content and readability.

2. Use the Oculus orientation matrix to position the UI, that is, let the user look around not only the 3D scene but also the UI.

This method lacks the disadvantages of the first: information content and readability are limited only by the resolution of the device. But it has drawbacks of its own: ordinary actions (browsing the tanks in the carousel, checking the silver balance, and so on) require a lot of head movement, and, as a consequence, it is hard to synchronize the movement of the UI with the camera for the 3D scene.

3. Combine the previous methods, i.e. scale the interface and at the same time let the user look around it. The main problem is choosing a scale that preserves the readability of the text while eliminating most of the head movement.
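The three options differ only in two parameters, which a small Python sketch makes explicit. It assumes the UI has already been rendered into its own render target and is composited as a screen-space quad; ui_quad, the pixels_per_radian factor and the sign conventions (positive yaw means the head turned right, screen y grows downward) are all hypothetical choices made for this illustration.

```python
def ui_quad(screen_w, screen_h, scale, head_yaw, head_pitch, pixels_per_radian):
    """Return (x, y, w, h) of the quad the UI texture is drawn into.

    scale < 1 with pixels_per_radian == 0 : option 1 (shrunken, static UI)
    scale == 1 with pixels_per_radian > 0 : option 2 (look-around UI)
    scale < 1 with pixels_per_radian > 0  : option 3 (hybrid)
    """
    w = screen_w * scale
    h = screen_h * scale
    # The UI slides opposite to the head rotation, so turning the head
    # to the right brings the right-hand part of the UI into view.
    center_x = screen_w / 2 - head_yaw * pixels_per_radian
    center_y = screen_h / 2 + head_pitch * pixels_per_radian
    return (center_x - w / 2, center_y - h / 2, w, h)


if __name__ == "__main__":
    print(ui_quad(1280, 800, 0.5, 0.0, 0.0, 0.0))     # option 1
    print(ui_quad(1280, 800, 1.0, 0.25, 0.0, 400.0))  # option 2
    print(ui_quad(1280, 800, 0.75, 0.25, 0.0, 400.0)) # option 3
```

In such a setup, tuning the hybrid comes down to finding a scale and a pixels_per_radian pair that keep the text readable without making the player swing their head.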

At first, we did not distinguish between the hangar interface and the combat interface. Moreover, the idea of using the Oculus orientation matrix to look around the UI was inspired by the combat interface. Almost the entire visible area was occupied by the 3D scene, and this seemed right, but being unable to see our own tank's hit points or the positions of allies and opponents on the minimap did nothing to help us enjoy a lively tank brawl in full 3D. That was when we came up with the "look-around" UI: to see the minimap, for example, you had to turn and tilt your head slightly, a completely natural movement for someone who knows our game's interface. And here we ran into the problem I described above: after five minutes of constantly turning your head in search of the minimap, the tank damage panel, and the shell count, your neck starts to hurt.

The next step, as noted above, was the hybrid version: part of the interface was now visible from the start, and only a small additional turn of the head was needed to see the rest.

It would seem that the problem had been solved, but after the guys from our publishing team took a look, this option was dropped as well.

Even though the movements needed to get information felt natural, the constant need to turn your head and then quickly return it to the starting position to perform active actions was judged uncomfortable and detrimental to the enjoyment of the game. Since there are no active actions in the hangar, we decided to keep the hybrid version there: by then the HD Prototype had arrived, and it gave decent text quality at a small UI scale.

On the same HD Prototype, thanks to the increased resolution, more of the 3D scene began to fit into the visible area. We decided to stop experimenting with the combat UI and make it static but customizable, letting you change the position and size of its elements right at runtime.

While solving these problems, we had to think about one more point: some UI elements must not be scaled along with the others (for example, hit direction indicators, vehicle markers, and sights). I had to adjust the rendering order: some of the interface elements are now drawn together with the 3D scene.

Camera Management

Oculus is not only an image output device but also an input device. Its input is the orientation of the device in space, given as a quaternion (as a matrix on the DK1 and HD P). So we wanted to use this data not only to position the UI but also to control the cameras.
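Since the device may report either a quaternion or a matrix, a camera wrapper typically needs to convert one into the other. Below is the textbook conversion from a unit quaternion to a 3×3 rotation matrix in Python; the (w, x, y, z) component order and the column-vector convention are assumptions of this sketch, and a real engine may need a transpose or an axis swap on top of it.

```python
import math


def quaternion_to_matrix(w, x, y, z):
    """Convert a quaternion to a 3x3 rotation matrix (column-vector convention)."""
    # Normalize first so that small tracking errors do not skew the matrix.
    n = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / n, x / n, y / n, z / n
    return [
        [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
        [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
        [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
    ]


def rotate(m, v):
    """Apply a 3x3 matrix to a column vector."""
    return tuple(sum(m[i][k] * v[k] for k in range(3)) for i in range(3))


if __name__ == "__main__":
    # A 90 degree turn of the head about the vertical (Y) axis.
    half = math.radians(90) / 2
    head = quaternion_to_matrix(math.cos(half), 0.0, math.sin(half), 0.0)
    print(rotate(head, (0.0, 0.0, 1.0)))
```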

Hangar

In the hangar, the camera control scheme works like this: the mouse controls the tilt angle and the length of the tank's "selfie stick". At the end of this "stick" sits a camera whose direction can be controlled with the Oculus: you can look around and inspect not only the tank but also the surrounding space.
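The "selfie stick" scheme can be sketched in Python as follows. The sketch assumes a Y-up coordinate system, represents the head orientation as plain yaw and pitch angles added to the default look direction (a simplification of composing full rotations), and gives the stick a yaw angle as well; stick_camera and its parameters are hypothetical names.

```python
import math


def direction(yaw, pitch):
    """Unit vector for the given yaw (about Y) and pitch; yaw 0 looks along +Z."""
    return (math.cos(pitch) * math.sin(yaw),
            math.sin(pitch),
            math.cos(pitch) * math.cos(yaw))


def stick_camera(target, stick_yaw, stick_pitch, stick_length, head_yaw, head_pitch):
    """Return (position, look_direction) of the hangar camera.

    The mouse drives the stick (angles and length) and therefore the camera
    position; the head only changes where the camera looks.
    """
    d = direction(stick_yaw, stick_pitch)
    position = tuple(t + stick_length * c for t, c in zip(target, d))
    # With the head at rest, the camera looks back along the stick at the tank.
    look = direction(stick_yaw + math.pi + head_yaw, -stick_pitch + head_pitch)
    return position, look


if __name__ == "__main__":
    tank = (0.0, 0.0, 0.0)
    print(stick_camera(tank, 0.0, 0.0, 10.0, 0.0, 0.0))
    print(stick_camera(tank, 0.0, 0.0, 10.0, math.pi / 2, 0.0))
```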

Arcade mode

In arcade mode, the mouse, as before, rotates the coordinate system from which aiming takes place, and head turns in the Oculus happen within that coordinate system.

Sniper mode

Initially, we worked on aiming with the head. But we quickly abandoned this approach because of low shooting accuracy, the strain on the neck, and the difficulty of synchronizing mouse and head movements, as well as the turning speed of the head and of the tank's turret.

Then we tried an aiming system in the style of Team Fortress: the camera is controlled by head movements, but there is a zone in the center of the visible area within which the sight can only be moved with the mouse, for more accurate shooting. The prototype was not bad, but it was still hard to use.

So we settled on the simplest and most effective option: the camera is controlled by both the head and the mouse. That is, when the head moves, the aiming point does not change, only the direction of the camera. And when the mouse moves, both the aiming point and the direction of the camera change.
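The final rule is easy to state in code. In this minimal Python sketch (class and method names are hypothetical, and orientations are reduced to yaw and pitch angles for brevity), the aim is driven by the mouse only, while the camera direction is the aim plus the head offset.

```python
class SniperCamera:
    """Aim follows the mouse; the camera follows the mouse and the head."""

    def __init__(self):
        self.aim_yaw = 0.0
        self.aim_pitch = 0.0
        self.head_yaw = 0.0
        self.head_pitch = 0.0

    def on_mouse(self, d_yaw, d_pitch):
        # Mouse input is relative: it moves the aiming point.
        self.aim_yaw += d_yaw
        self.aim_pitch += d_pitch

    def on_head(self, yaw, pitch):
        # Head input is absolute: the latest tracked orientation.
        self.head_yaw = yaw
        self.head_pitch = pitch

    def aim_angles(self):
        return (self.aim_yaw, self.aim_pitch)

    def camera_angles(self):
        return (self.aim_yaw + self.head_yaw, self.aim_pitch + self.head_pitch)


if __name__ == "__main__":
    cam = SniperCamera()
    cam.on_mouse(0.25, 0.0)
    cam.on_head(0.5, 0.125)
    print(cam.aim_angles(), cam.camera_angles())
```

Keeping the two pairs of angles separate is what allows the player to glance aside without pulling the gun off target.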

Strategic mode

Using the Oculus to play artillery was never planned, so the strategic mode has no modifications.

Gunner mode

Once, having run out of ideas about how else the Oculus could be interesting as an input device, we discussed the pros and cons of the device and suggested that a player would find it easier to imagine himself in a virtual 3D world as a virtual person than as a virtual tank. So we decided to pick a role inside the tank that players would enjoy trying on, and that role was the gunner. We already had a camera that could be attached to any node of the tank, so all that remained was to adjust the positioning and limit the field of view so that the player could not look inside the tank or through the barrel. For the test, we chose two tanks, the IS and the Tiger I, because of their popularity and because their HD models were available at the time of development. The implementation took only a few days, but in the end this mode turned out to be the most interesting and unusual in terms of gameplay.

The name of the mode is still "floating": some call it "the gunner's camera", others "the camera from the commander's cupola". The main feature of the mode is that the camera sits in the coordinate system of the tank's actual turret, while head turns with the Oculus Rift are still allowed. The player's tank is not hidden in this mode, so you can take in the whole bulk of your vehicle, and from this perspective the tank looks far more impressive than through a camera a few dozen meters away. This raised the question of which point on the turret the camera should be attached to. For the IS and Tiger I we made a hard-coded version: the camera is mounted next to the barrel. Generating such points automatically for the remaining hundreds of our tanks is not easy to implement, and manual processing would take too long. For this reason, we use the option of adjusting the camera position manually with keys.
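A camera that lives in the turret's coordinate system and has a limited field of view can be sketched in Python like this. The sketch assumes the turret rotates only about the vertical Y axis, treats the mount point as an offset in turret space, and clamps the head angles to symmetric limits; gunner_camera, the offset and the limits are hypothetical values, not the ones used in the mod.

```python
import math


def clamp(value, lo, hi):
    return max(lo, min(hi, value))


def gunner_camera(turret_pos, turret_yaw, mount_offset,
                  head_yaw, head_pitch, max_yaw, max_pitch):
    """Return (world_position, world_yaw, pitch) of the gunner camera.

    mount_offset is given in turret space, so the camera follows the turret
    as it turns. The head angles are clamped so that the player cannot look
    inside the tank or through the barrel.
    """
    ox, oy, oz = mount_offset
    c, s = math.cos(turret_yaw), math.sin(turret_yaw)
    # Rotate the offset about the vertical axis, then move it to the turret.
    position = (turret_pos[0] + c * ox + s * oz,
                turret_pos[1] + oy,
                turret_pos[2] - s * ox + c * oz)
    yaw = turret_yaw + clamp(head_yaw, -max_yaw, max_yaw)
    pitch = clamp(head_pitch, -max_pitch, max_pitch)
    return position, yaw, pitch


if __name__ == "__main__":
    # Camera mounted beside the barrel; the player tries to look far behind.
    print(gunner_camera((10.0, 0.0, 20.0), 0.0, (0.5, 2.0, 1.0),
                        3.0, 0.0, 1.0, 0.5))
```

With this layout, adjusting the mount point with keys amounts to editing mount_offset at runtime.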

Conclusions

Oculus Rift is a very interesting device that adds a lot of new sensations to games. However, as is often the case, creating a production-ready integration of such a device is not a matter of a couple of days. If you decide to build support for it into an existing game (one that was not originally made for VR), you will need:

1. A partial rework of the in-game menu. Even if you decide not to integrate it fully into virtual space (projecting elements onto game objects, placing them according to real-world coordinates, and so on), the menu display will need to be adjusted.

2. A heavy, perhaps radical rework of the gameplay UI itself. The Oculus Rift does not have the highest resolution, and if you simply take the HUD out of the game and hang it in front of the eyes, the interface elements will take up too much space. On top of that, you will not be able to shake the feeling of "flies before your eyes". So the UI has to be split up: some things stay in front of the eyes and secondary things are moved further away, which requires the UI to react to head rotation. A combat UI projected onto game objects (cockpit, helmet, and the like) may end up taking too few pixels on the screen when drawn, and it will convey no information.

3. An extensible game camera architecture. Oculus is not only a device that displays a picture on its screens but also a device that inputs the orientation of the head. Consequently, this data has to be fed into the game cameras in a sane way. And if you need the ability to look around, the aiming system in the game must be independent of the camera orientation. In our case, the gunner camera, for example, is obtained by composing the logic of the sniper and arcade modes. Separating the view logic from the aiming logic also makes it easier to integrate Oculus with the existing control modes without having to override them.

4. Last but no less important: Oculus requires a control system designed specifically for it. An implementation in which moving the Oculus is equivalent to moving the mouse may well not work (this option probably works out of the box only in flight simulators, where pilot head-turning logic is already implemented and the cockpit view is the main view mode).

In short, the advantages of Oculus include:

• sufficient openness for cooperation;

• SDK development;

• PR;

• John Carmack (lol).

Disadvantages:

• lack of general recommendations for gameplay integration;

• the oddity with chromatic aberration in SDK 0.4.2;

• perception complexity in third-person games (especially if the hero is a tank, not a humanoid);

• common problems for all helmets:

- focusing;

- screen resolution (even in the HD version (sic!));

- discomfort with prolonged use (neck + headache).

The mod itself can be downloaded from this link.

Source: https://habr.com/ru/post/249115/
