Don't rush to throw out old servers: you can assemble fast Ethernet storage in an hour

We once installed new EMC disk array shelves for one of our large customers. As I was leaving the site, I noticed people in a moving company's uniform pulling a large number of servers out of the racks and preparing them for loading. We keep in close contact with the customer's sysadmins, so it quickly became clear that these were old machines with little memory and processing power, although they had plenty of disks. Upgrading them was not cost-effective, so the hardware was being sent to a warehouse, where it would be written off in a year or so, once it was covered in dust.

The hardware was generally not bad, just a generation old. Naturally, it was a pity to throw it out. So I offered to test EMC ScaleIO. In short, it works like this:


ScaleIO is software installed on servers on top of the operating system. It consists of a server part, a client part, and control nodes. Disk resources are combined into a single virtual, single-tier system.

How it works

The system needs three control nodes. They hold all the information about the state of the array, its components, and the ongoing processes; they act as a kind of orchestrator for the array. For ScaleIO to function properly, at least one control node must be alive.

The server part consists of small agents that combine free space on the servers into a single pool. In this pool you can create LUNs (a single LUN is distributed across all servers in the pool) and present them to clients. ScaleIO can use server RAM as a read cache. The cache size is set separately for each server. The larger the total cache, the faster the array works.
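As a rough illustration of how one LUN can end up spread over every server in a pool, here is a minimal sketch. The round-robin policy, the 1 MB chunk size, and the names `distribute_chunks` and `srv1`..`srv4` are assumptions made for this example; the article does not describe ScaleIO's actual layout algorithm.

```python
from collections import Counter


def distribute_chunks(lun_size_mb, servers, chunk_mb=1):
    """Assign each chunk of a LUN to a server in round-robin order.

    Hypothetical policy for illustration only, not ScaleIO's real layout.
    """
    n_chunks = -(-lun_size_mb // chunk_mb)  # ceiling division
    return [servers[i % len(servers)] for i in range(n_chunks)]


servers = ["srv1", "srv2", "srv3", "srv4"]
layout = distribute_chunks(lun_size_mb=10, servers=servers)
per_server = Counter(layout)  # how many chunks each server holds
print(per_server)
```

Every server ends up holding part of the LUN, which is why a client reading or writing it talks to many machines at once.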

The client part is a block I/O device driver that presents a LUN distributed across different servers as a local disk. Here, for example, is how a ScaleIO LUN looks in Windows:

The requirements for installing ScaleIO are minimal:

| Component | Requirement |
| --- | --- |
| CPU | Intel or AMD x86 |
| Memory | 500 MB RAM for a control node; 500 MB RAM per data node; 50 MB RAM for each client |
| Supported operating systems | Linux: CentOS. Windows: 2008 R2, 2012, or 2012 R2. Hypervisors: VMware ESXi 5.0, 5.1, or 5.5 (managed by vCenter 5.1 or 5.5 only), Hyper-V, XenServer 6.1, Red Hat KVM |

Of course, all data is transmitted over Ethernet. All I/O and bandwidth are available to any application in the cluster. Each host writes to many nodes at once, so throughput and the number of I/O operations can reach very high values. An additional advantage of this approach is that ScaleIO does not need a fat interconnect between nodes. With 1 Gb Ethernet on the servers, the solution suits streaming writes, archives, or a file dump; you can also run a test or development environment on it. With 10 Gb Ethernet and SSDs, you get a good solution for databases. On SAS disks you can host VMware datastores. Virtual machines can run on the very servers that contribute space to the shared LUN, because both the client and the server part are available for ESX. I personally love this option.

With a large number of disks, probability theory tells us that the risk of some component failing goes up. The solution is interesting: in addition to RAID groups at the controller level, data is mirrored across different servers. All servers are divided into fault sets, groups of machines that are likely to fail at the same time. Data is mirrored between fault sets so that losing one of them does not make the data unavailable. A fault set can consist of servers in the same rack, or of servers grouped by operating system. The latter option is nice because you can roll out patches to all Linux or all Windows machines at once without fearing that operating system errors will bring down the cluster.
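The fault-set idea can be sketched in a few lines. This is a simplified model, assuming exactly two copies per chunk and a naive rotation over servers; `place_mirrors`, `survives_loss`, and the server names are hypothetical and do not reflect how ScaleIO actually places data.

```python
def place_mirrors(n_chunks, fault_sets):
    """Return a (primary, mirror) server pair per chunk.

    The two copies of a chunk always land in different fault sets.
    """
    names = list(fault_sets)
    if len(names) < 2:
        raise ValueError("need at least two fault sets")
    placement = []
    for i in range(n_chunks):
        set_a = fault_sets[names[i % len(names)]]
        set_b = fault_sets[names[(i + 1) % len(names)]]
        slot = i // len(names)  # rotate through servers inside each set
        placement.append((set_a[slot % len(set_a)], set_b[slot % len(set_b)]))
    return placement


def survives_loss(placement, failed_servers):
    """True if every chunk keeps at least one copy on a healthy server."""
    failed = set(failed_servers)
    return all(p not in failed or m not in failed for p, m in placement)


fault_sets = {
    "windows": ["win1", "win2", "win3", "win4"],
    "sles": ["sles1", "sles2", "sles3", "sles4"],
}
placement = place_mirrors(16, fault_sets)
whole_set_lost = survives_loss(placement, fault_sets["windows"])
one_from_each_lost = survives_loss(placement, ["win1", "sles1"])
print(whole_set_lost, one_from_each_lost)
```

Losing an entire fault set leaves one copy of every chunk intact, while losing one server from each set at the same time can take out both copies of a chunk. That is why the grouping should follow real failure domains such as racks.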

Tests

ScaleIO is installed with the installation manager. You need to upload the software packages for the different operating systems and a CSV file describing the desired configuration. I took 8 servers, half running Windows and half running SLES. Installation on all 8 took 5 minutes and a few clicks on the "Next" button.

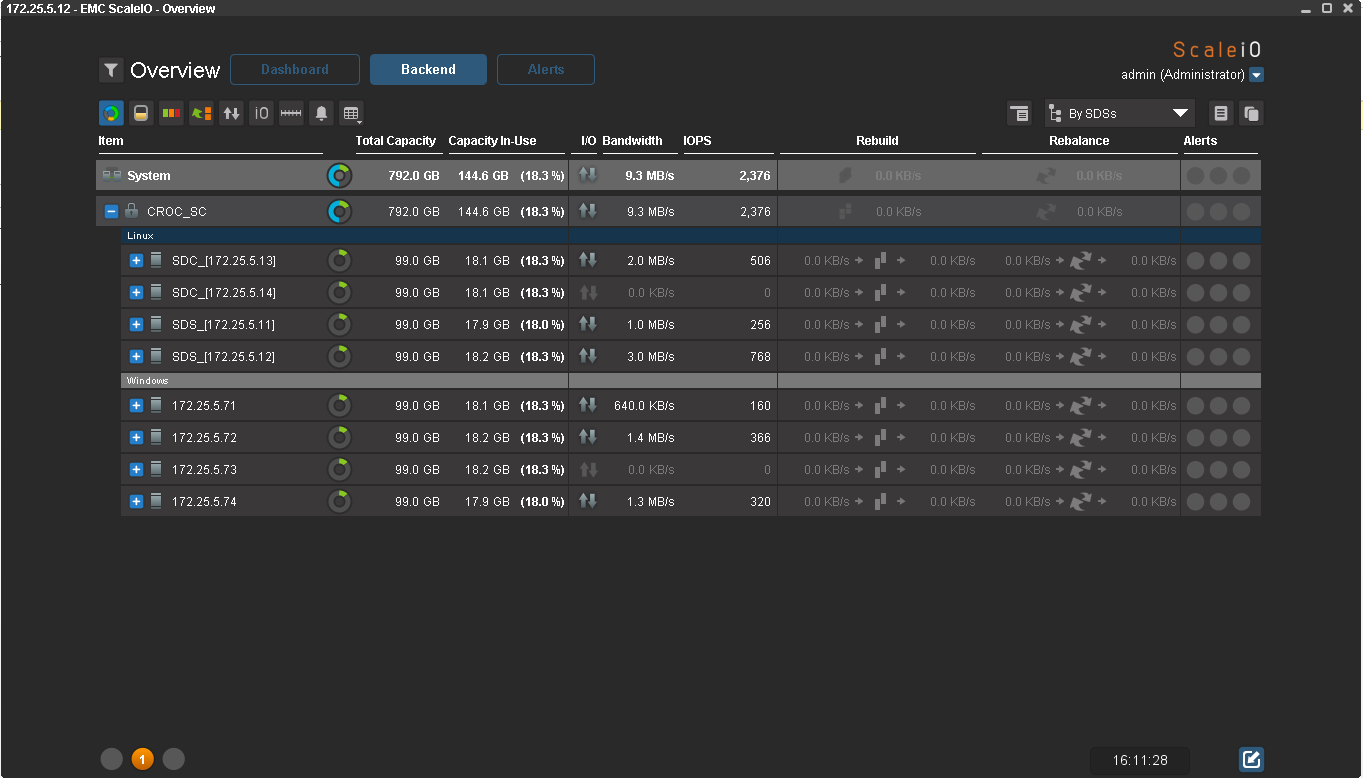

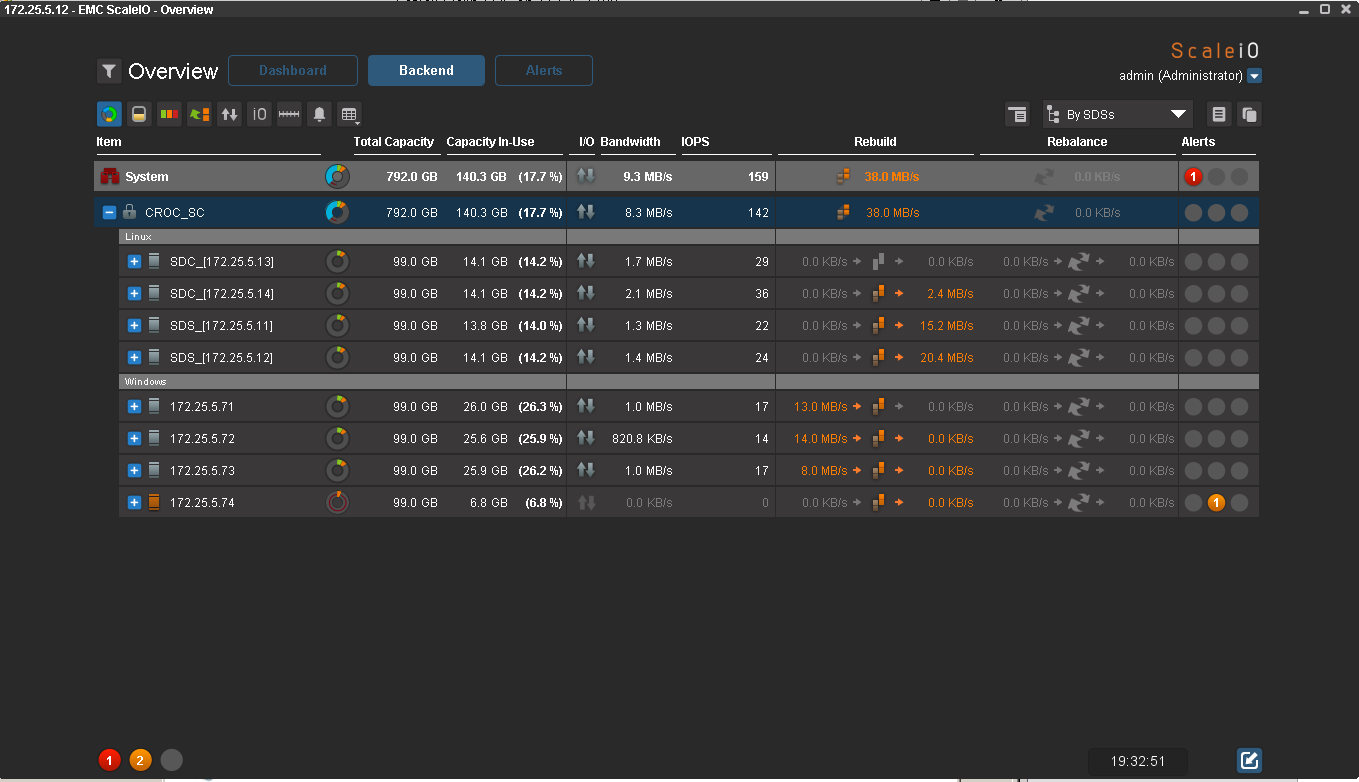

Here is the result:

This, by the way, is the GUI used to manage the array. For console lovers, there is a detailed manual on the CLI commands. The console, as always, offers more functionality.

For the tests, I divided all the data nodes into 2 fault sets: one with Windows and one with SLES. Let's map our first disk to a host:

The disk is small, only 56 GB. Fault-tolerance tests are next on the plan, and I don't want to wait more than 10 minutes for a rebuild to finish.

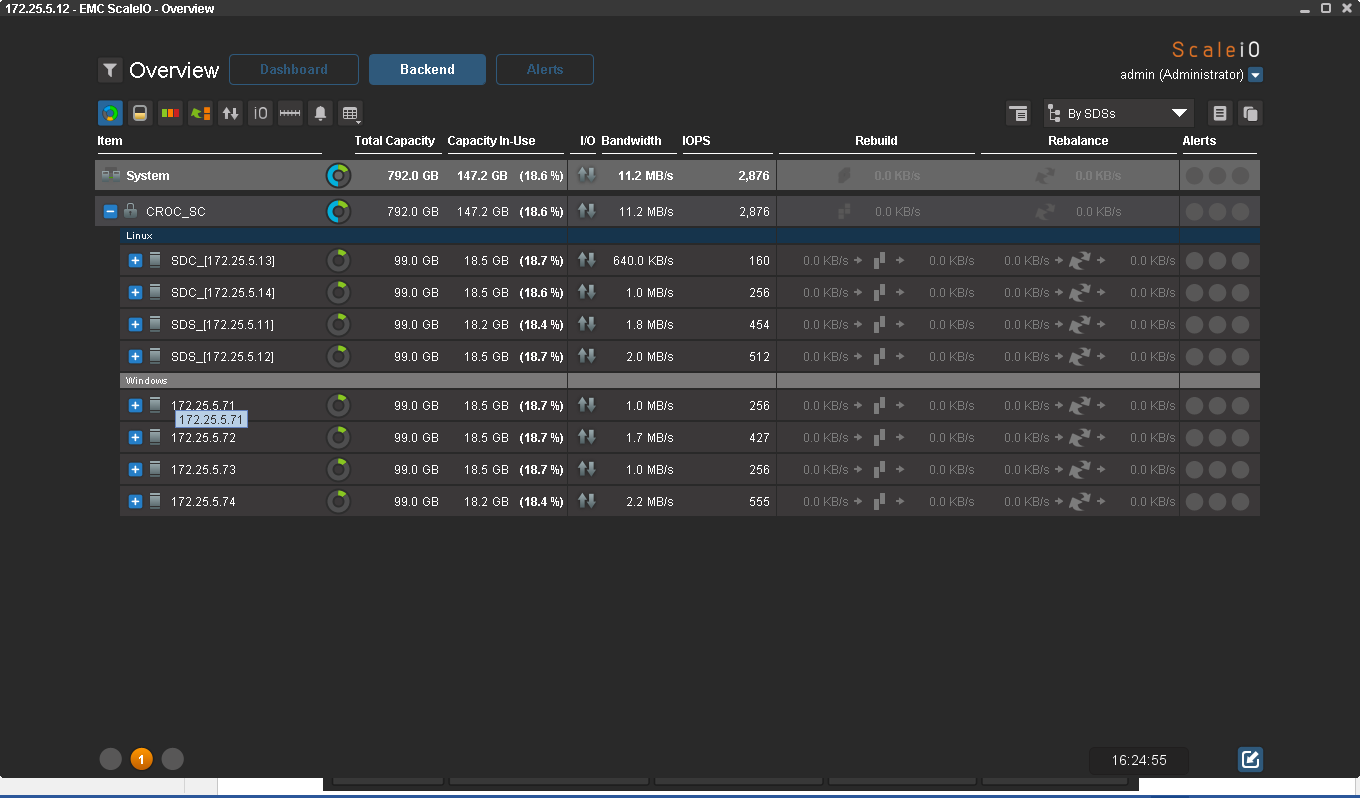

The easiest way to emulate load is IOmeter. If the disk drops out even for a second, I will definitely know about it. Unfortunately, performance cannot be measured in this test: the servers are virtual and the datastore is an EMC CX3. The decent equipment is busy in production. Here the first bytes start flowing:

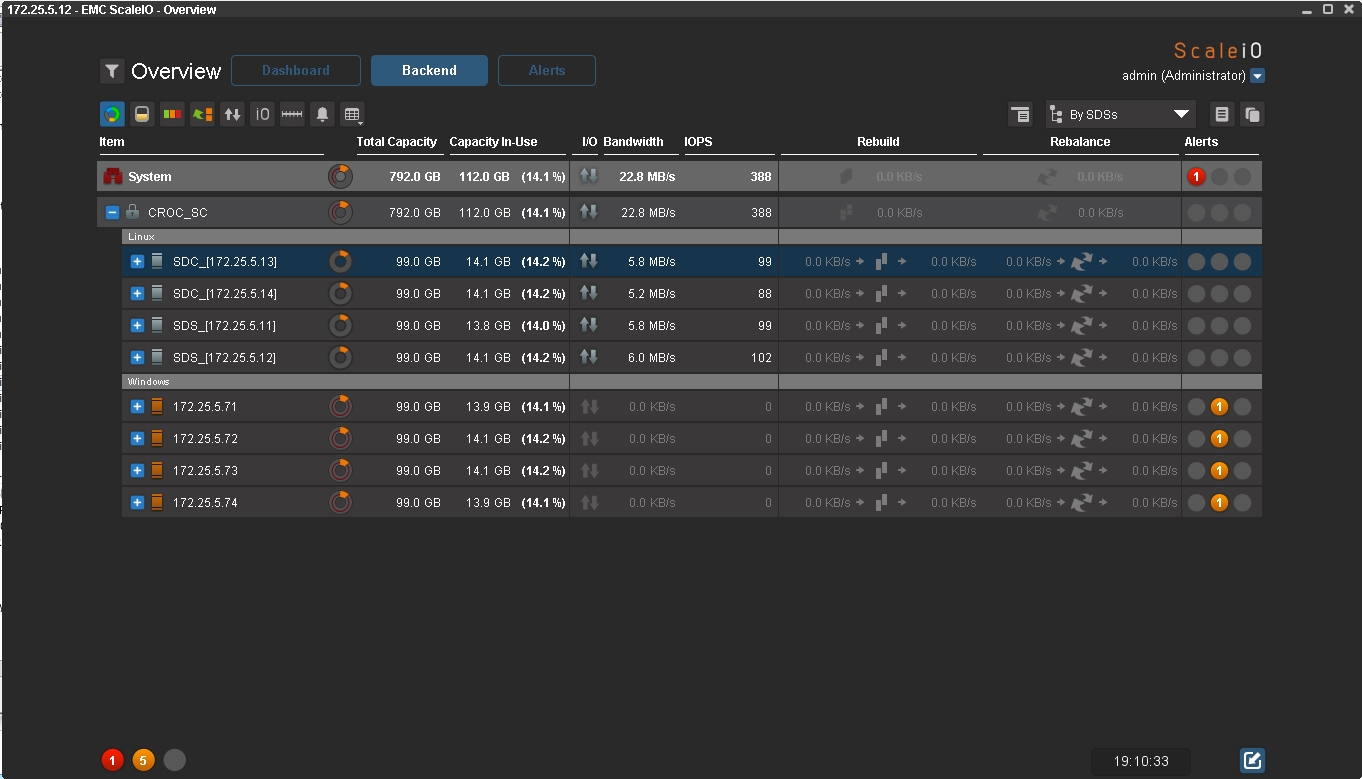

As I wrote earlier, one fault set can be switched off. This is where the fun begins. It's good to know that the customer's production will be fine in an emergency, so at CROC we create such emergencies in our lab. The best way to convince a customer that a high-availability solution is reliable is to switch off one of the two equipment racks. I do the same here:

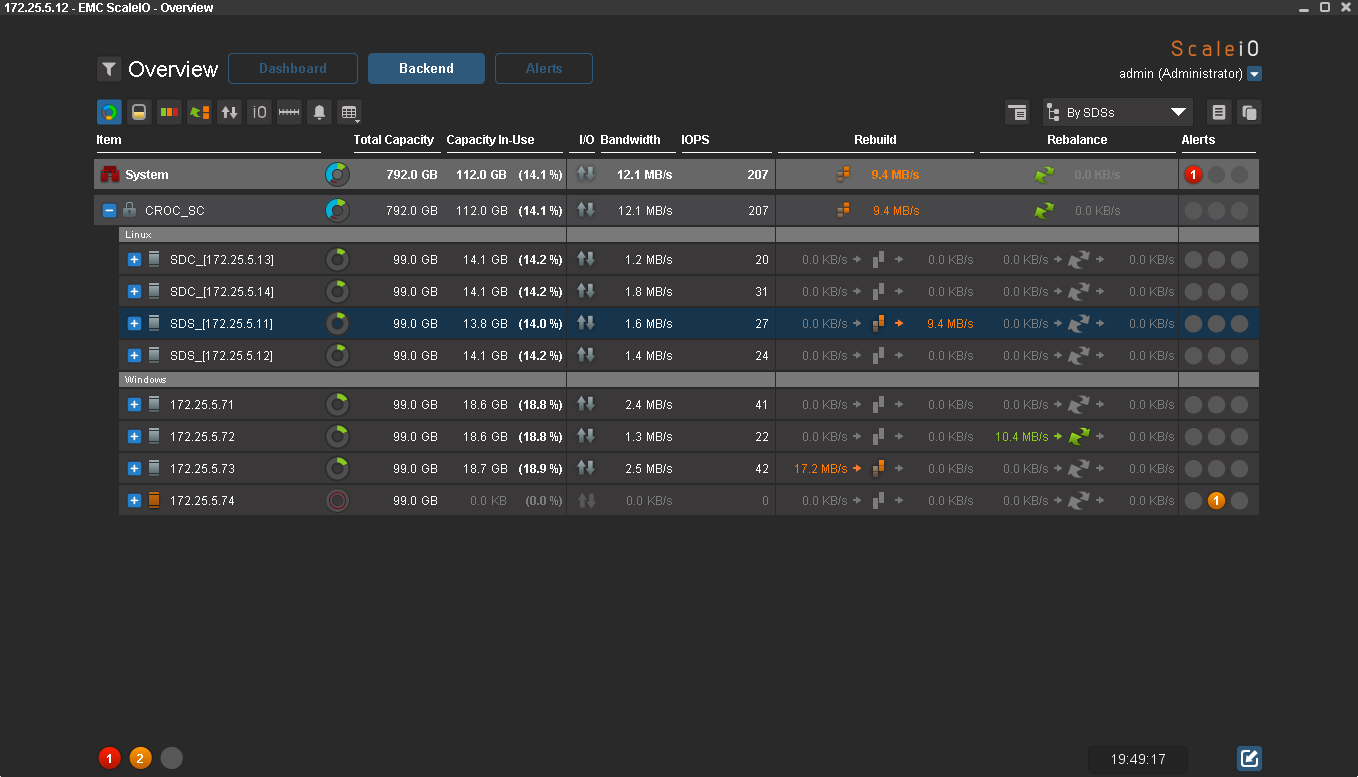

The GUI shows that all the Windows nodes are unavailable, which means the data is no longer protected by redundancy. The pool has moved to Degraded status (red instead of green), yet IOmeter keeps writing. The host did not notice the simultaneous failure of half of the nodes.

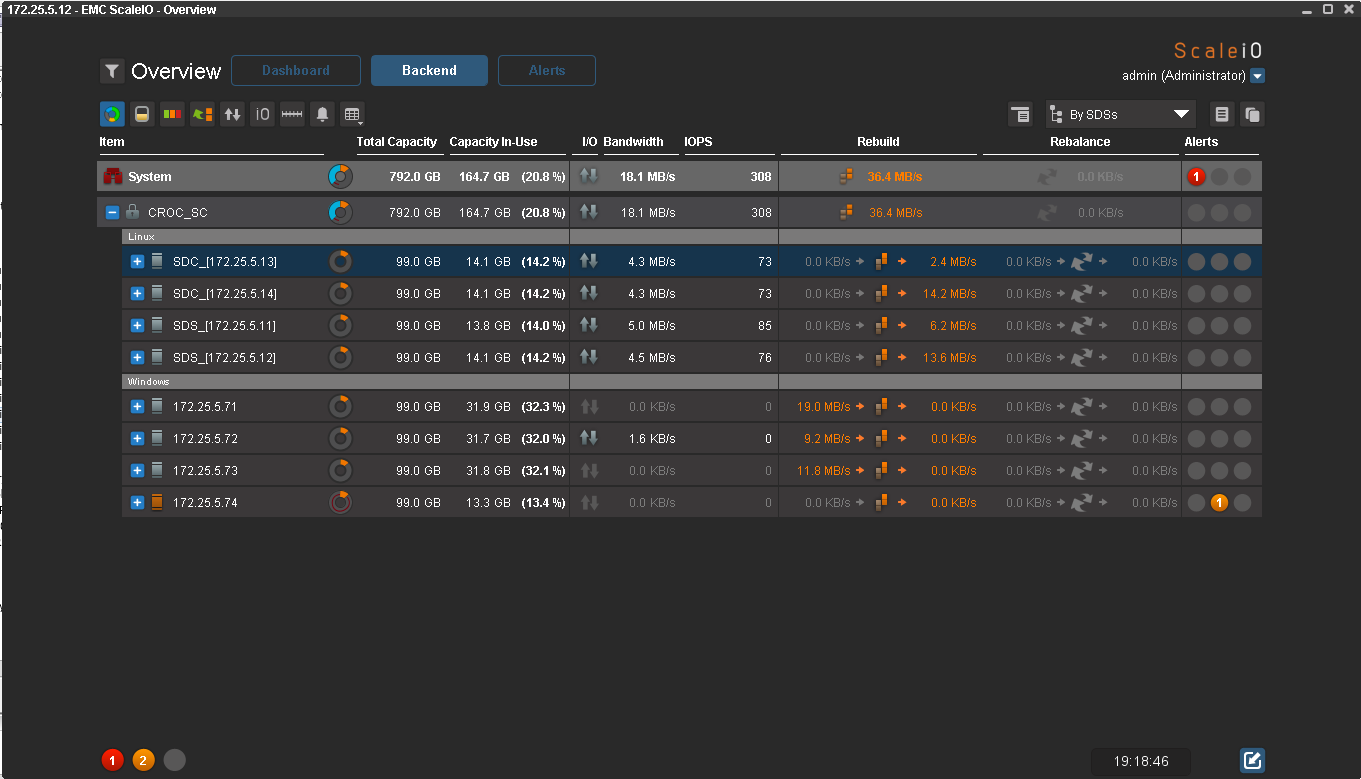

Let's try switching on 3 of the four nodes:

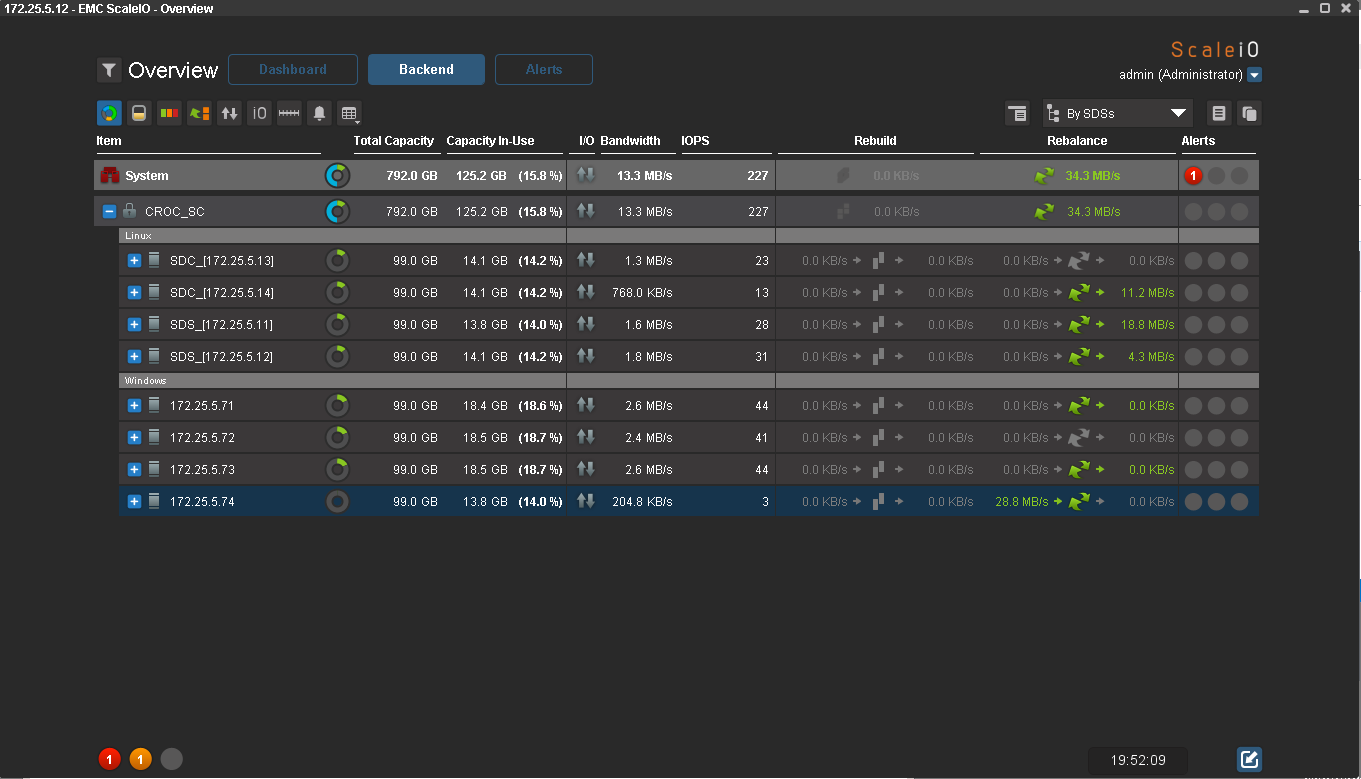

The rebuild started automatically, and everything is proceeding normally. Interestingly, data redundancy is restored automatically. But since there is now one fewer Windows node, the remaining ones will each hold more data. As redundancy recovers, the data turns green.

Halfway there:

And now it's done:

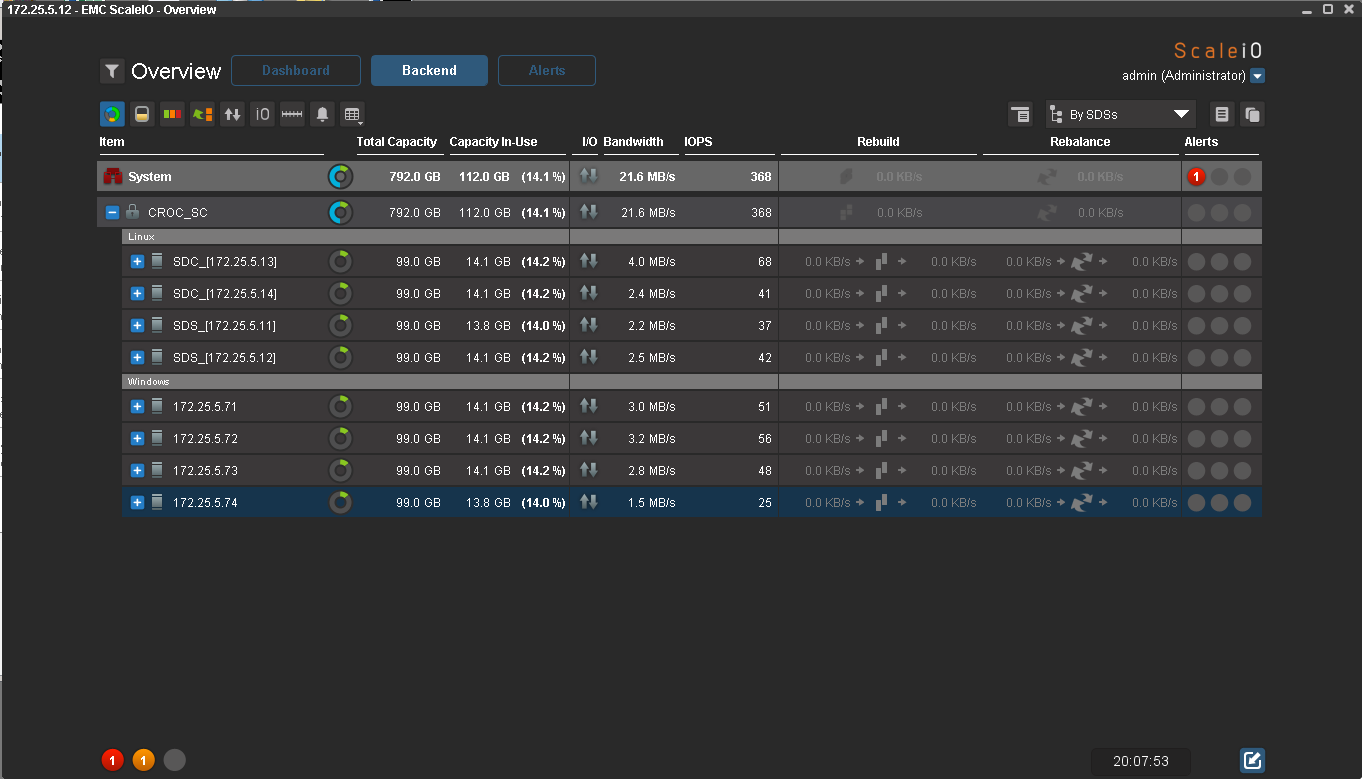

Data redundancy has been fully restored. The 4th node is now empty. When I switch it on, rebalancing should start within a single fault set. The same thing happens when new nodes are added.

The data rebalance has started. Interestingly, data is copied from all nodes, not just from the nodes in the same fault set. Once the rebalance finishes, every node is 14% full. IOmeter is still writing data.

The first round of tests is over; the second will run on different hardware. Performance needs to be tested.

Price

The ScaleIO licensing policy is simple: you pay only for raw capacity. The licenses are not tied to the hardware. This means you can put ScaleIO on the most ancient hardware and replace it with modern servers in a couple of years without buying additional licenses. This is a bit unusual after the standard licensing policy for arrays, where upgrade disks cost more than the same disks at initial purchase.

The ScaleIO list price is approximately $1,572 per terabyte of raw capacity. Discounts are negotiated separately (if you have read this far, I assume there is no need to explain what a list price is). ScaleIO has counterparts among open-source solutions and from other vendors, but it has one important advantage: round-the-clock support from EMC and extensive deployment experience.
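Because the license is counted per raw terabyte while the data is mirrored, it helps to work out the cost per usable terabyte. The sketch below is back-of-the-envelope arithmetic: it assumes two copies of the data and ignores discounts and any capacity kept in reserve for rebuilds. The function names and the 100 TB figure are hypothetical.

```python
PRICE_PER_RAW_TB_USD = 1572  # list price quoted in the article


def license_cost(raw_tb, price_per_tb=PRICE_PER_RAW_TB_USD):
    """License cost at list price for a given raw capacity."""
    return raw_tb * price_per_tb


def usable_tb(raw_tb, copies=2, spare_fraction=0.0):
    """Usable capacity, assuming `copies` mirrors and an optional spare reserve."""
    return raw_tb * (1 - spare_fraction) / copies


raw = 100  # hypothetical raw capacity in TB
cost = license_cost(raw)
usable = usable_tb(raw)
cost_per_usable_tb = cost / usable
print(cost, usable, cost_per_usable_tb)
```

Under these assumptions, 100 TB raw costs $157,200 at list price and yields 50 TB usable, or $3,144 per usable terabyte.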

Colleagues at EMC claim that you can save up to 60% of costs over five years compared with mid-range storage. The savings come from lower license costs and lower hardware requirements, as well as from power, cooling and, consequently, data center space. As a result, the solution turns out to be quite crisis-friendly. I think it will come in handy for many people this year.

What else it can do

- ScaleIO can be divided into isolated domains (this is for cloud providers).

- The software can create snapshots and limit client throughput.

- Data on ScaleIO can be encrypted and spread across pools with different performance.

- Linear scaling. Each new host adds bandwidth, performance, and capacity.

- Fault sets allow you to lose servers as long as there is free space to restore data redundancy.

- Flexibility. You can build a cheap file dump on SATA drives, or a fast storage system on SSDs.

- It completely replaces mid-range storage systems on some projects.

- You can use idle space on existing servers or give old hardware a second life.

The downsides: it requires a large number of ports on network switches and a large number of IP addresses.
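The point in the list above about losing servers "as long as there is free space" comes down to simple arithmetic. Here is a minimal sketch, assuming the data from failed nodes only needs to fit into the free space of the survivors; it ignores fault-set placement rules, and the 100 GB node capacity is a hypothetical figure, not one given in the article.

```python
def can_rebuild(capacity_gb, used_gb, failed):
    """Check whether data held on failed nodes fits into survivors' free space."""
    failed = set(failed)
    to_restore = sum(used_gb[n] for n in failed)
    free = sum(capacity_gb[n] - used_gb[n] for n in capacity_gb if n not in failed)
    return to_restore <= free


nodes = [f"node{i}" for i in range(1, 9)]
capacity = {n: 100 for n in nodes}     # hypothetical 100 GB per node
lightly_used = {n: 14 for n in nodes}  # 14% full, as in the test above
nearly_full = {n: 90 for n in nodes}

ok_when_light = can_rebuild(capacity, lightly_used, ["node4"])
ok_when_full = can_rebuild(capacity, nearly_full, ["node4"])
print(ok_when_light, ok_when_full)
```

With lightly loaded nodes, a lost server's data is easily absorbed; with nodes at 90%, the same failure leaves the pool unable to restore redundancy until capacity is added.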

Typical applications

- The most obvious one is building a company's public or private cloud. The solution is easy to expand, and nodes are easy to add and replace.

- Infrastructure virtualization. There are clients for ESX, so nothing prevents you from creating a ScaleIO pool (even on the local disks of the same servers that run ESX) and placing virtual machines on it. This gives good performance and additional fault tolerance at a relatively low cost.

- With SSDs and 10 Gb Ethernet, ScaleIO is a good fit for small and medium databases.

- With high-capacity SATA disks, you can build cheap storage or an archive for data that cannot be put on tape.

- ScaleIO on SAS disks is an excellent solution for developers and testers.

- It suits some video editing tasks where you need a combination of reliability, speed, and low price (you need to test it on the actual workload, of course). The system can be used to store large files, such as streams from HD cameras.

Summary

The failover tests were successful. It looks like the old servers will now go to performance testing and then into production use. If the customer from the story at the beginning goes ahead as planned, someone will get a bonus for saving a sizeable budget.

If you are interested in the solution, want to test it on specific tasks, or just want to discuss it, write to rpokruchin@croc.ru. On the test hardware you can try anything, just like in that joke about the new Finnish chainsaw and the Siberian men.

Source: https://habr.com/ru/post/248891/
