Testing with Microsoft tools - field experience

This article was written by our friends and partners at Kaspersky Lab. It describes real-world experience with Microsoft's testing tools and offers recommendations. The author is Igor Shcheglovitov, a testing engineer at Kaspersky Lab.

Hello, everyone. I work as a testing engineer at Kaspersky Lab, on a team that develops cloud-based server infrastructure on the Microsoft Azure platform.

The team consists of developers and testers (in a ratio of roughly 1 to 3). Developers write C# code and practice TDD and DDD, which keeps the code testable and loosely coupled. Tests written by developers are run either manually from Visual Studio or automatically during a TFS build. The build uses a Gated Check-In trigger, so it starts whenever a check-in is submitted to source control. The special feature of this trigger is that if the build fails for any reason (a compilation error or a failed test), the check-in that triggered the build is not committed to source control.

You have probably come across the claim that testing code is difficult. Some teams resort to pair programming. Other companies have dedicated testing departments. We have mandatory code review and automated integration testing. Unlike unit tests, integration tests are developed by dedicated testing engineers, of whom I am one.

Clients interact with us through remote SOAP and REST APIs. The services themselves are built on WCF and MVC, and data is stored in Azure Storage. Azure Service Bus and Azure Cloud Queue are used to run long business processes reliably and at scale.

A short digression: there is an opinion that being a tester is just a stage on the way to becoming a developer. This is not entirely true. The line between programmers and testing engineers blurs more every year. Testers need a strong technical background, but they also need to think a little differently from developers. Testers should aim first of all to break things, developers to build them. Together, they should work toward a quality product.

Integration tests, like the main code, are written in C#. Simulating the actions of an end user, they check business processes on configured, running services. We write these tests using Visual Studio and the MSTest framework, the same tools the developers use. Testers and developers review each other's code, so team members always speak the same language.

Test cases are stored and managed through MTM (Microsoft Test Manager). A test case here is the same kind of TFS entity as a bug or a task. Test cases can be manual or automated. Because of the specifics of our system, we use only automated ones.

Automated test cases are linked to test methods by full CLR name (one test case per test method). Before MTM appeared (with Visual Studio 2010), I ran integration tests the same way as unit tests, from within Visual Studio only. Building test reports and managing tests was difficult. Now this is done directly through MTM.

Test cases can now be combined into test plans, and within a plan they can be arranged into flat or hierarchical groups using test suites.

We use feature-branch development: major improvements are made in separate branches, as are stabilization and release. We created a test plan for each branch (to avoid chaos). Once stabilization in a feature branch is complete, the code is merged into the main branch and the tests are moved to the corresponding test plan. Test cases are very easy to add to test plans (Ctrl+C, Ctrl+V, or via a TFS query).

Some recommendations. Personally, I try to separate stable tests from new ones in each branch. This is easy to do with test suites. The point is that stable (regression) tests must pass 100% of the time. If they do not, that is a reason to stop and investigate. Once new functionality becomes stable, the corresponding tests are simply moved to the regression test suite.

For me personally, one of the most boring and routine tasks was creating test cases. Everyone develops automated tests differently. Some people first write detailed test scenarios and then create automated tests based on them. I do the opposite: I first write stub tests (with no logic) in the test class. Then I implement the logic, the architecture of the test components, the test data, and so on.

After the tests are created, they must be transferred to TFS. That is manageable with 10 tests, but with 50 or 100 you spend a lot of time on routine work: filling in the steps and associating each new test case with its test method.

To simplify this process, Microsoft created the tcm.exe utility, which can automatically create test cases in TFS and include them in a test plan. The utility has several drawbacks: for some reason you cannot add tests from different assemblies to a single test plan, and you cannot specify steps or a meaningful name for a test case. In addition, the utility appeared relatively recently. Before it existed, we wrote our own utility that automates the creation of test cases. We also developed custom attributes, TestCaseName and TestCaseStep, for specifying the name and steps of a test case.
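The article does not show the attributes or the utility, so the following is only a minimal sketch of how such attributes could be declared and read through reflection. The constructor parameters, the `TestCaseInfo` type, and the `TestCaseScanner` class are assumptions made for illustration; only the attribute names TestCaseName and TestCaseStep come from the text. The step that actually writes test cases to TFS is deliberately left out.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical shape of the two attributes named in the article.
[AttributeUsage(AttributeTargets.Method, AllowMultiple = false)]
public sealed class TestCaseNameAttribute : Attribute
{
    public string Name { get; }
    public TestCaseNameAttribute(string name) { Name = name; }
}

[AttributeUsage(AttributeTargets.Method, AllowMultiple = true)]
public sealed class TestCaseStepAttribute : Attribute
{
    public int Order { get; }
    public string Action { get; }
    public TestCaseStepAttribute(int order, string action) { Order = order; Action = action; }
}

// Hypothetical record of what a sync utility would need for one test method.
public sealed class TestCaseInfo
{
    public string FullClrName { get; set; }
    public string Title { get; set; }
    public List<string> Steps { get; set; }
}

public static class TestCaseScanner
{
    // Collects the title, the ordered steps, and the full CLR name
    // (type full name + method name) for every annotated method of a class.
    public static List<TestCaseInfo> Scan(Type testClass)
    {
        return testClass
            .GetMethods(BindingFlags.Public | BindingFlags.Instance | BindingFlags.DeclaredOnly)
            .Select(m => new { Method = m, Title = m.GetCustomAttribute<TestCaseNameAttribute>() })
            .Where(x => x.Title != null)
            .Select(x => new TestCaseInfo
            {
                FullClrName = testClass.FullName + "." + x.Method.Name,
                Title = x.Title.Name,
                Steps = x.Method.GetCustomAttributes<TestCaseStepAttribute>()
                    .OrderBy(s => s.Order)
                    .Select(s => s.Action)
                    .ToList()
            })
            .ToList();
    }
}

// Example test class; under MSTest it would also carry [TestClass] and [TestMethod].
public class AccountTests
{
    [TestCaseName("Registered user can sign in")]
    [TestCaseStep(1, "Create a user")]
    [TestCaseStep(2, "Sign in with valid credentials")]
    public void SignIn_ValidCredentials_Succeeds() { }
}
```

Calling `TestCaseScanner.Scan(typeof(AccountTests))` yields one entry whose steps are ordered by the `Order` value, which is the information a utility would need to create or update the matching test case.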

The utility can both create and update test cases and include them in the desired plan. It can run in silent mode, and its launch is included in the TFS build workflow. It therefore starts automatically and adds new test cases or updates existing ones. As a result, our test plan is always up to date.

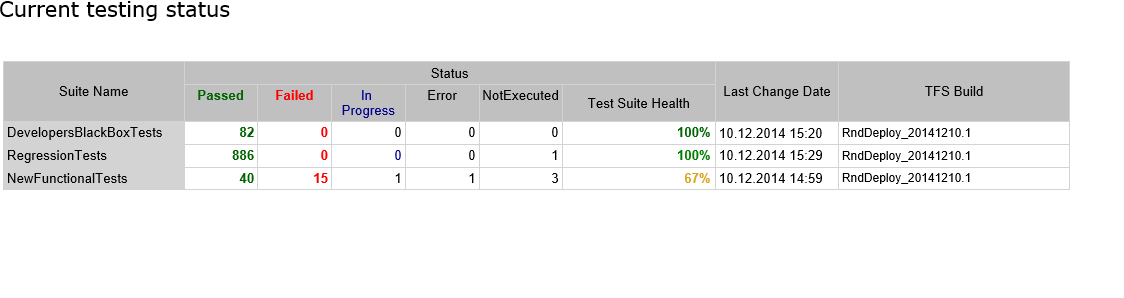

The status of the test run in the test plan (which is effectively a measure of code quality) is shown in special reports. TFS ships with a set of ready-made report templates that build charts from OLAP cube data on test case and test plan statuses. We, however, use our own simplified report. We designed it so that anyone who looks at it understands the situation immediately.

Here is an example:

A special feature of our report is that it is not built from the cubes, which are not rebuilt synchronously and may contain stale data. Instead, it uses data pulled directly through the MTM API. As a result, we get the current status of the test plan at any time.

The report is built in HTML format and sent to the whole team via SmtpClient. All of this is done by a simple utility whose launch is included directly in the build workflow.
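The reporting utility itself is not shown in the article, so here is a rough sketch of the two halves described above: turning a list of results into an HTML table and mailing it with `System.Net.Mail.SmtpClient`. The `TestOutcome` type, the `TestReport` class, and all parameter names are assumptions; how the results are fetched through the MTM API is intentionally omitted.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Net;
using System.Net.Mail;
using System.Text;

// Hypothetical result record; the real data would come from the MTM API.
public sealed class TestOutcome
{
    public string Name { get; set; }
    public bool Passed { get; set; }
}

public static class TestReport
{
    // Builds a small HTML page: a summary line plus one table row per test.
    public static string BuildHtml(string planName, IReadOnlyList<TestOutcome> outcomes)
    {
        int passed = outcomes.Count(o => o.Passed);
        var html = new StringBuilder();
        html.Append("<html><body>");
        html.AppendFormat("<h2>{0}: {1} of {2} passed</h2>",
            WebUtility.HtmlEncode(planName), passed, outcomes.Count);
        html.Append("<table border=\"1\"><tr><th>Test</th><th>Result</th></tr>");
        foreach (var outcome in outcomes)
        {
            html.AppendFormat("<tr><td>{0}</td><td>{1}</td></tr>",
                WebUtility.HtmlEncode(outcome.Name),
                outcome.Passed ? "Passed" : "Failed");
        }
        html.Append("</table></body></html>");
        return html.ToString();
    }

    // Sends the report as an HTML email. Host and addresses are placeholders
    // supplied by the caller; authentication settings are not covered here.
    public static void Send(string smtpHost, string from, string to, string subject, string html)
    {
        using (var message = new MailMessage(from, to, subject, html))
        using (var client = new SmtpClient(smtpHost))
        {
            message.IsBodyHtml = true;
            client.Send(message);
        }
    }
}
```

Keeping `BuildHtml` separate from `Send` means the report content can be checked without a mail server.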

In addition to managing test cases, MTM is also used to manage test environments and configure the agents that run tests. To speed up test execution (we are talking about tests that check long asynchronous business processes), we use 6 test agents: by scaling the agents horizontally, we get parallel test execution.

Integration tests can also be launched manually, from either MTM or Visual Studio, but we do that only when debugging (tests or code). Our team has a reproducing test for almost every bug. The bug is handed to development together with its test, which makes it easier for developers to reproduce the problem and fix it.

Now for the regression process.

Our project has a dedicated build that initiates a full test run. It uses the Rolling Build trigger: when a Gated Check-In build completes successfully, the rolling build is launched. The special feature of this trigger is that at most one such build runs at a time.

This build starts the same way as the previous one: it builds the latest versions of the test project and the main project, and then runs the unit tests.

If everything went well, specially written PowerShell scripts are launched that deploy the package of built services to Azure. The deployment uses the Azure REST API and goes to the staging (intermediate) deployment of the cloud service. If the deployment succeeds and the roles start without errors, the configuration check scripts are run. If those pass too, the deployments are swapped (the staging deployment becomes the main one) and the integration tests themselves are run.
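The scripts themselves are PowerShell and are not included in the article. The sketch below only illustrates the gating order described above, written in C# for consistency with the rest of the team's code: each step runs only if the previous one succeeded. `PipelineStep`, `RollingBuildPipeline`, and the delegate parameters are hypothetical placeholders, not the team's actual implementation.

```csharp
using System;
using System.Collections.Generic;

// A named step that reports success or failure (hypothetical type).
public sealed class PipelineStep
{
    public string Name { get; }
    public Func<bool> Run { get; }
    public PipelineStep(string name, Func<bool> run) { Name = name; Run = run; }
}

public static class RollingBuildPipeline
{
    // Lists the steps in the order the article describes them.
    // Each delegate stands in for a script the article does not show.
    public static List<PipelineStep> Create(
        Func<bool> deployToStaging,
        Func<bool> checkConfiguration,
        Func<bool> swapStagingWithMain,
        Func<bool> runIntegrationTests)
    {
        return new List<PipelineStep>
        {
            new PipelineStep("Deploy to staging", deployToStaging),
            new PipelineStep("Check configuration", checkConfiguration),
            new PipelineStep("Swap staging with main", swapStagingWithMain),
            new PipelineStep("Run integration tests", runIntegrationTests)
        };
    }

    // Runs the steps in order and stops at the first failure.
    // Returns the name of the failed step, or null if every step succeeded.
    public static string RunUntilFailure(IEnumerable<PipelineStep> steps)
    {
        foreach (var step in steps)
        {
            if (!step.Run())
            {
                return step.Name;
            }
        }
        return null;
    }
}
```

With this shape, a failed configuration check means the swap never happens, so the main deployment keeps serving the previous version.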

After the tests have run, an email containing a report on the test status is sent to the whole team.

Before writing tests, whether classic unit tests or integration tests, I recommend reading the books The Art of Unit Testing and xUnit Test Patterns: Refactoring Test Code.

These books (I personally love the first one) contain many tips and tricks on how to write, and how not to write, tests in any xUnit framework.

I would like to finish the article by listing the basic principles our team follows when writing automated test code:

- A “clear” CLR name for the test method. The basic idea is that a programmer can tell from the name alone what the test checks, with what data, and what result is expected. The test name should consist of 3 parts separated by underscores and look something like this: FunctionalUnderTest_Conditions_ExpectedResult

- Strict test structure: Arrange-Act-Assert. Data preparation, an action, and verification. The point is that tests should have the simplest and most compact structure possible, consisting essentially of these 3 steps. This makes tests readable and easy to maintain and debug.

- Tests should not contain conditional logic or loops. This needs no explanation: if we had such tests, we would have to write tests for the tests.

- One test, one check. People often put a bunch of Asserts into a test and then cannot figure out which one made the test fail. Try to make a single check per test; it makes debugging and troubleshooting much easier. I should add that in some cases, after one action, you need to check the state of several objects, and the state of each is described by many properties. In that case it is advisable to make two checks and to verify all the properties in separate helper methods with meaningful names, so that you call your own custom assertion instead of Assert.IsTrue. Also, when writing property checks, try to write readable error messages. This is just one example; in fact, there are many different patterns.

- Tests, even integration tests, should be fast. Try to write them with this in mind. I have seen many tests containing inserts like Thread.Sleep(60000). Avoid this. If you are waiting for some asynchronous action to complete, think about how you can track its completion (for example, the asynchronous process changes some state in the database). Or perhaps the test should be split into several?

- Keep your tests thread-safe. You may not need this yet, but in the future you will most likely want to speed up test execution, and multithreading is practically one of the main ways to achieve that.

- Independence. Tests should not depend on the order in which they run or on each other. Write tests with this principle in mind. Try to clean up test data, since leftover data often leads to unforeseen consequences and slows down test execution.
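Several of the principles above can be sketched together in one small example: a three-part test name, an Arrange-Act-Assert body with no branching, polling for a state change instead of a long Thread.Sleep, and a custom assertion with a readable error message. Everything here is an assumption made for illustration: `Wait`, `OrderAssert`, the in-memory store standing in for a database, and the order statuses are hypothetical. To stay self-contained, the sketch throws a plain exception where an MSTest project would use its own assertion failure, and the test method would carry [TestMethod] there.

```csharp
using System;
using System.Collections.Concurrent;
using System.Diagnostics;
using System.Threading;
using System.Threading.Tasks;

public static class Wait
{
    // Polls the condition until it becomes true or the timeout expires.
    // Returns true if the condition was met, false on timeout.
    public static bool Until(Func<bool> condition, TimeSpan timeout, TimeSpan pollInterval)
    {
        var clock = Stopwatch.StartNew();
        while (!condition())
        {
            if (clock.Elapsed >= timeout)
            {
                return false;
            }
            Thread.Sleep(pollInterval);
        }
        return true;
    }
}

public static class OrderAssert
{
    // Custom assertion: the failure message says which order and which values differ.
    public static void HasStatus(ConcurrentDictionary<int, string> store, int orderId, string expected)
    {
        string actual;
        if (!store.TryGetValue(orderId, out actual))
        {
            throw new InvalidOperationException("Order " + orderId + " was not found in the store.");
        }
        if (actual != expected)
        {
            throw new InvalidOperationException(
                "Order " + orderId + ": expected status '" + expected + "' but was '" + actual + "'.");
        }
    }
}

public class OrderProcessingTests
{
    // Name pattern: unit under test, conditions, expected result.
    public void ProcessOrder_ValidOrder_StatusBecomesCompleted()
    {
        // Arrange: a thread-safe in-memory store stands in for the database.
        var store = new ConcurrentDictionary<int, string>();
        store[1] = "New";

        // Act: a background task simulates the asynchronous business process,
        // and the test polls for the state change instead of sleeping for a fixed minute.
        Task.Run(() => { Thread.Sleep(50); store[1] = "Completed"; });
        Wait.Until(() => store[1] == "Completed", TimeSpan.FromSeconds(5), TimeSpan.FromMilliseconds(10));

        // Assert: one logical check through the custom assertion.
        OrderAssert.HasStatus(store, 1, "Completed");
    }
}
```

The timeout still bounds the worst case, but a passing run finishes as soon as the state changes rather than after a fixed delay.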

And finally, I want to add that good tests really do make development faster and the code better.

Source: https://habr.com/ru/post/248747/
