Handling custom gestures for Leap Motion. Part 1

Hello!

Over the holidays, I got my hands on a Leap Motion sensor. I had wanted to experiment with it for a long time, but my main job and the exam session (a rather useless pastime) never left room for it.

About 10 years ago, when I was a schoolboy with plenty of free time, I bought an issue of Igromania magazine. It came with a disc full of interesting games and shareware. The magazine also had a section on useful software, and one of the programs it featured was Symbol Commander. This utility lets you record mouse movements, recognize them later, and perform the actions assigned to each recognized movement.

Contactless sensors (Leap Motion, Microsoft Kinect, PrimeSense Carmine) have now matured, so I decided to reproduce this functionality for one of them. I chose Leap Motion.

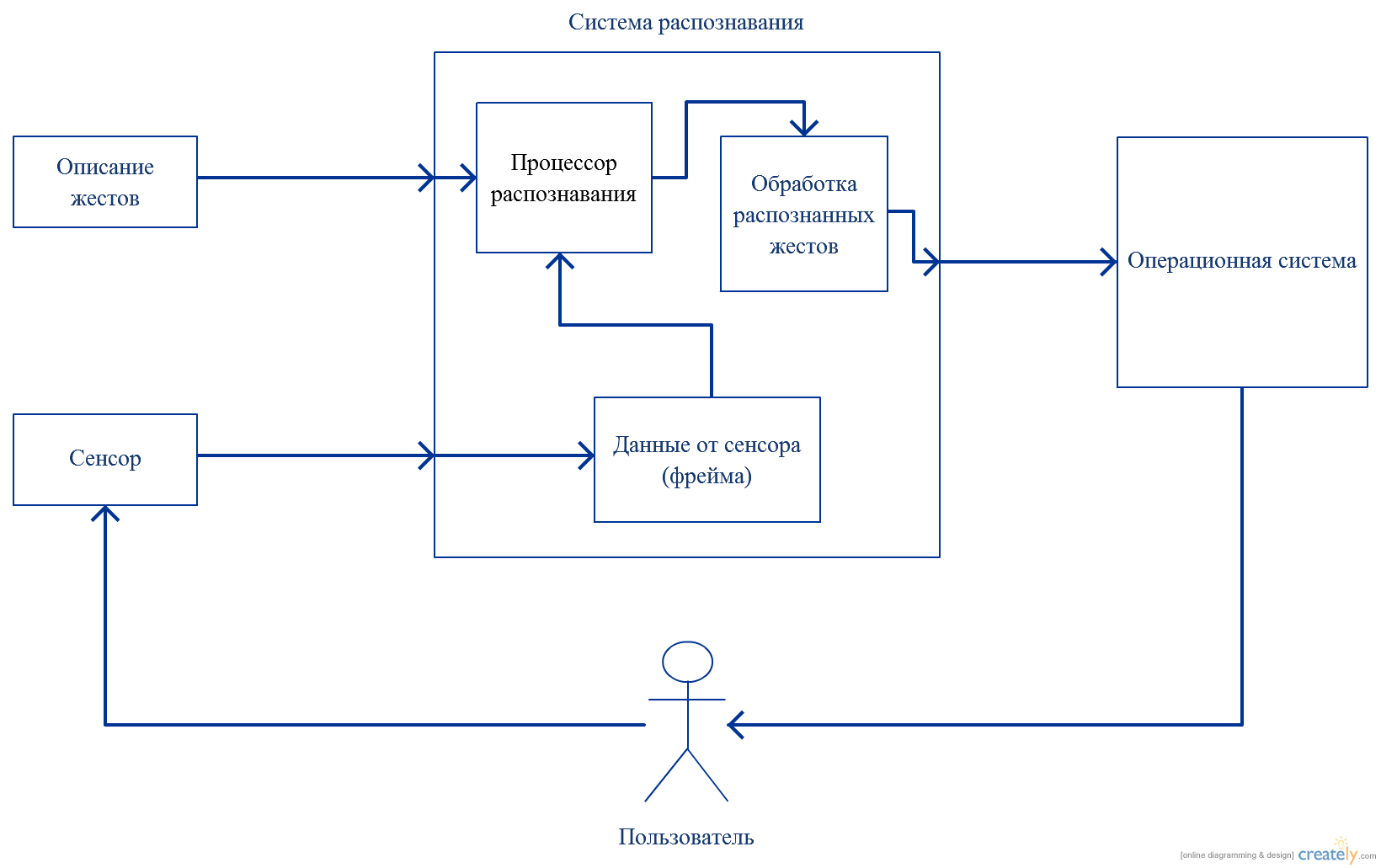

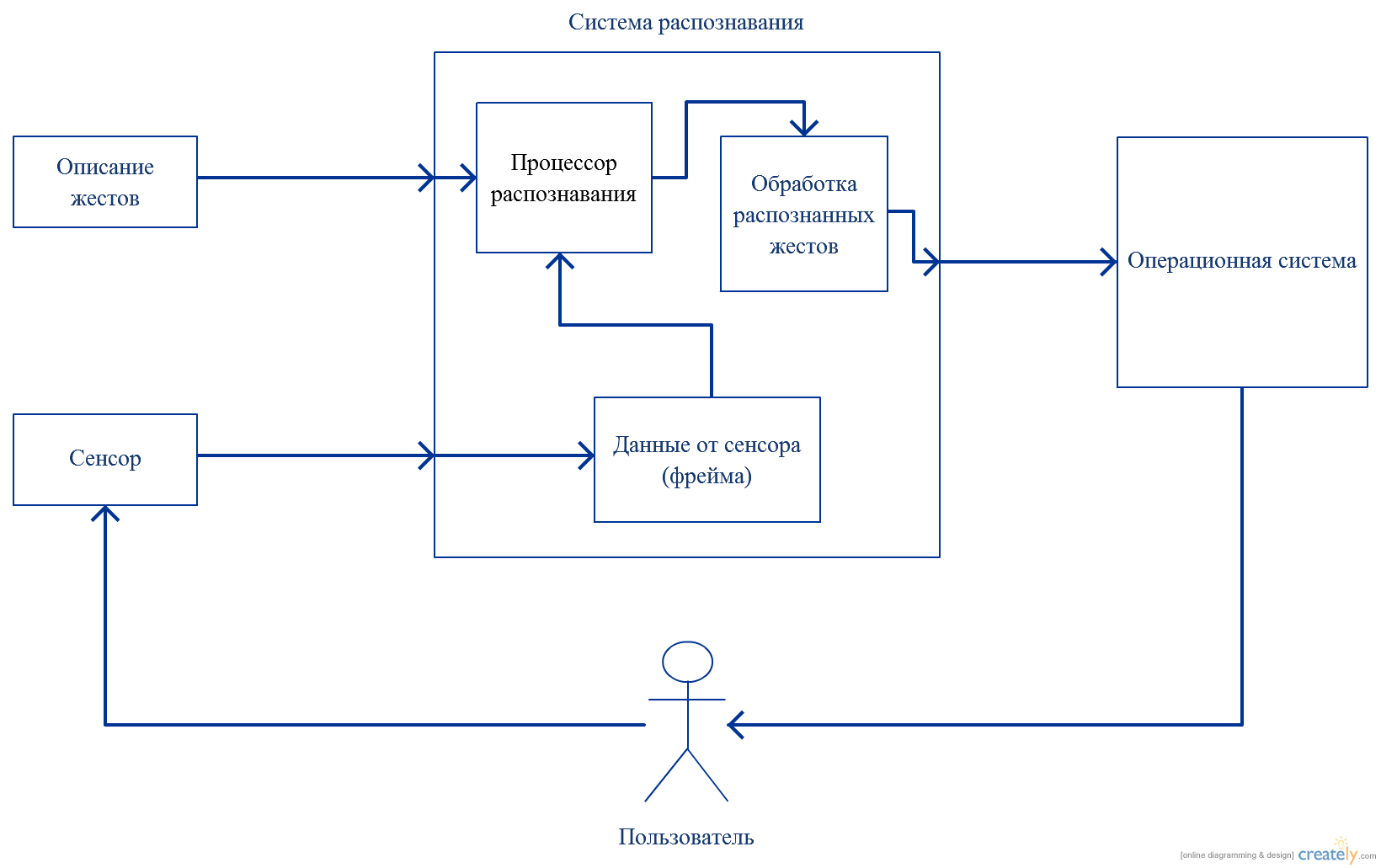

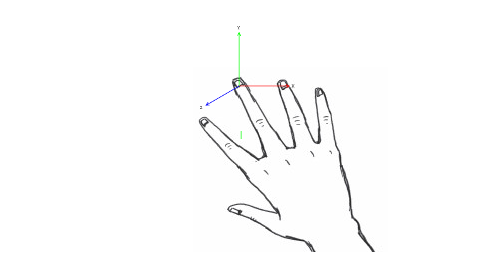

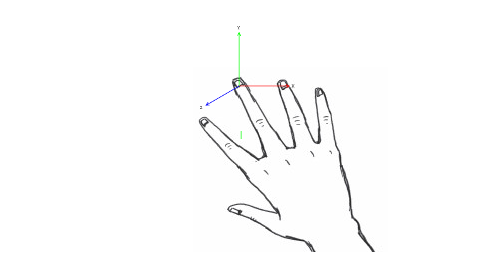

So what does it take to process custom gestures? You need a gesture representation model and a data processor. The overall scheme looks like this:

Accordingly, development can be split into the following stages:

1. Designing a model for describing gestures

2. Implementing a processor that recognizes the described gestures

3. Mapping gestures to OS commands

4. A UI for recording gestures and assigning commands.
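Stage 3 is not covered in this part. As a purely hypothetical sketch, the mapping could be a dictionary from gesture name to an action that runs when the recognizer reports a gesture. The names `commands` and `OnGestureRecognized` and the sample commands are illustrative. A real implementation would launch programs or emulate key presses instead of writing to a list.

```csharp
using System;
using System.Collections.Generic;

// Stand-in for real OS actions: records what would have been executed
var executed = new List<string>();

// Hypothetical gesture-to-command table; real entries would start processes,
// emulate key presses, and so on
var commands = new Dictionary<string, Action>
{
    ["Tap"] = () => executed.Add("open browser"),
    ["Round"] = () => executed.Add("volume up"),
};

// Hypothetical handler called when the recognizer reports a gesture by name
void OnGestureRecognized(string gestureName)
{
    // Gestures without an assigned command are ignored
    if (commands.TryGetValue(gestureName, out Action command))
        command();
}

OnGestureRecognized("Tap");
OnGestureRecognized("Swipe");                    // no command assigned
Console.WriteLine(string.Join(", ", executed)); // prints "open browser"
```

Keeping the table keyed by gesture name would let a future UI (stage 4) edit the assignments without touching the recognizer.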

Let's start with the model.

The official Leap Motion SDK provides a set of predefined gestures, listed in the GestureType enum:

```csharp
GestureType.TYPE_CIRCLE
GestureType.TYPE_KEY_TAP
GestureType.TYPE_SCREEN_TAP
GestureType.TYPE_SWIPE
```

Since I set myself the task of processing custom gestures, I will use my own gesture description model.

So what is a gesture for Leap Motion?

The SDK provides a Frame object, from which you can get a FingerList describing the current state of each finger. So, for a start, I decided to take the path of least resistance. A gesture is treated as a sequence of finger movements, each along one of the axes (X, Y or Z) in one direction: the corresponding coordinate of the finger either increases or decreases.
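Here is a minimal sketch of this idea. It assumes fingertip positions arrive as plain (x, y, z) tuples rather than the SDK's `Leap.Vector`. A primitive only cares about one coordinate, and the direction of movement between two frames is the sign of that coordinate's change. The helper names `Component` and `DirectionOf` are hypothetical.

```csharp
using System;

// Pick the coordinate that corresponds to the given axis ('X', 'Y' or 'Z')
float Component((float X, float Y, float Z) p, char axis) => axis switch
{
    'X' => p.X,
    'Y' => p.Y,
    'Z' => p.Z,
    _ => throw new ArgumentOutOfRangeException(nameof(axis)),
};

// +1 if the coordinate increased, -1 if it decreased, 0 if it did not change
int DirectionOf(float previous, float current) => Math.Sign(current - previous);

var before = (X: 10f, Y: 150f, Z: 40f);
var after  = (X: 11f, Y: 150f, Z: 32f);

int dz = DirectionOf(Component(before, 'Z'), Component(after, 'Z'));
int dy = DirectionOf(Component(before, 'Y'), Component(after, 'Y'));
Console.WriteLine($"Z: {dz}, Y: {dy}");   // prints "Z: -1, Y: 0"
```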

Each gesture is therefore described by a set of primitives, each consisting of:

1. The axis along which the finger moves

2. The direction of movement

3. The order of the primitive within the gesture

4. The number of frames during which the primitive must be performed.

For the gesture itself you also need to specify:

1. A name

2. The index of the finger that performs it.

In this version, gestures are described in XML. For example, here is the XML description of the well-known Tap gesture (a “click” with the finger):

```xml
<Gesture>
  <GestureName>Tap</GestureName>
  <FingerIndex>1</FingerIndex>
  <Primitives>
    <Primitive>
      <Axis>Z</Axis>
      <Direction>-1</Direction>
      <Order>0</Order>
      <FramesCount>10</FramesCount>
    </Primitive>
    <Primitive>
      <Axis>Z</Axis>
      <Direction>1</Direction>
      <Order>1</Order>
      <FramesCount>10</FramesCount>
    </Primitive>
  </Primitives>
</Gesture>
```

This fragment defines a Tap gesture consisting of two primitives. First, the finger moves along the Z axis in the negative direction for 10 frames (pressing). Then it moves in the positive direction for 10 frames (releasing).
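To make the structure concrete, here is a sketch that reads the Tap description with LINQ to XML (`System.Xml.Linq`). This is a swapped-in technique: the library's own model relies on `XmlSerializer` attributes instead. The sketch sorts the primitives by `Order`, which matters because recognition walks through them in sequence. The tuple shape is an illustration choice, not the library's model.

```csharp
using System;
using System.Linq;
using System.Xml.Linq;

const string tapXml = @"<Gesture>
  <GestureName>Tap</GestureName>
  <FingerIndex>1</FingerIndex>
  <Primitives>
    <Primitive><Axis>Z</Axis><Direction>-1</Direction><Order>0</Order><FramesCount>10</FramesCount></Primitive>
    <Primitive><Axis>Z</Axis><Direction>1</Direction><Order>1</Order><FramesCount>10</FramesCount></Primitive>
  </Primitives>
</Gesture>";

XElement root = XElement.Parse(tapXml);
string name = (string)root.Element("GestureName");
int fingerIndex = (int)root.Element("FingerIndex");

// Primitives sorted by their execution order within the gesture
var primitives = root.Element("Primitives")
    .Elements("Primitive")
    .Select(p => (
        Axis: (string)p.Element("Axis"),
        Direction: (int)p.Element("Direction"),
        Order: (int)p.Element("Order"),
        FramesCount: (int)p.Element("FramesCount")))
    .OrderBy(p => p.Order)
    .ToList();

Console.WriteLine($"{name}, finger {fingerIndex}, {primitives.Count} primitives");
foreach (var prim in primitives)
    Console.WriteLine($"  {prim.Axis} {prim.Direction:+0;-0} for {prim.FramesCount} frames");
```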

In the recognition library, this model is described as follows:

```csharp
using System.Xml.Serialization;

public class Primitive
{
    [XmlElement(ElementName = "Axis", Type = typeof(Axis))]   // axis along which the finger moves
    public Axis Axis { get; set; }

    [XmlElement(ElementName = "Direction")]   // direction: +1 -> the coordinate increases,
    public int Direction { get; set; }        //            -1 -> the coordinate decreases

    [XmlElement(ElementName = "Order", IsNullable = true)]   // position of the primitive within the gesture
    public int? Order { get; set; }

    [XmlElement(ElementName = "FramesCount")]   // number of frames the primitive must last
    public int FramesCount { get; set; }
}

public enum Axis
{
    [XmlEnum("X")] X,
    [XmlEnum("Y")] Y,
    [XmlEnum("Z")] Z
}

public class Gesture
{
    [XmlElement(ElementName = "GestureIndex")]   // index of the gesture in the registry
    public int GestureIndex { get; set; }

    [XmlElement(ElementName = "GestureName")]   // gesture name
    public string GestureName { get; set; }

    [XmlElement(ElementName = "FingerIndex")]   // index of the finger that performs the gesture
    public int FingerIndex { get; set; }

    [XmlElement(ElementName = "PrimitivesCount")]   // number of primitives in the gesture
    public int PrimitivesCount { get; set; }

    [XmlArray(ElementName = "Primitives")]   // the primitives themselves
    public Primitive[] Primitives { get; set; }
}
```

OK, the model is ready. Let's move on to the recognition processor.

What is recognition? Since we can get the current state of the finger on every frame, recognition means verifying that the finger states meet the specified criteria within a given period of time.

So we create a class that inherits from Leap.Listener and override its OnFrame method:

```csharp
public override void OnFrame(Leap.Controller ctrl)
{
    Leap.Frame frame = ctrl.Frame();
    currentFrameTime = frame.Timestamp;                      // microseconds
    frameTimeChange = currentFrameTime - previousFrameTime;

    // Only process a frame if more than FRAME_INTERVAL microseconds have passed
    if (frameTimeChange > FRAME_INTERVAL)
    {
        foreach (Gesture gesture in _registry.Gestures)
        {
            // Each registered gesture is checked in a separate task
            Task.Factory.StartNew(() =>
            {
                Leap.Finger finger = frame.Fingers[gesture.FingerIndex];
                // Skip the check if that finger is not currently tracked
                if (finger.IsValid)
                    CheckFinger(gesture, finger);
            });
        }
        previousFrameTime = currentFrameTime;
    }
}
```

Here we check the finger states once every FRAME_INTERVAL. For testing, FRAME_INTERVAL = 5000, the number of microseconds between processed frames (5 ms, i.e. at most 200 processed frames per second).
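The throttling rule can be checked without a device. The sketch below is not part of the SDK. It replays hypothetical frame timestamps (in microseconds, like `Frame.Timestamp`) through the same comparison and shows which frames would be processed with FRAME_INTERVAL = 5000. It assumes `previousFrameTime` starts at 0.

```csharp
using System;
using System.Collections.Generic;

const long FRAME_INTERVAL = 5000;   // microseconds between processed frames

// Hypothetical timestamps of incoming frames, 3 ms apart
long[] timestamps = { 0, 3000, 6000, 9000, 12000, 15000 };

long previousFrameTime = 0;
var processed = new List<long>();

foreach (long currentFrameTime in timestamps)
{
    long frameTimeChange = currentFrameTime - previousFrameTime;
    // Same rule as OnFrame: process only if more than FRAME_INTERVAL has elapsed
    if (frameTimeChange > FRAME_INTERVAL)
    {
        processed.Add(currentFrameTime);
        previousFrameTime = currentFrameTime;
    }
}

Console.WriteLine(string.Join(", ", processed));   // prints "6000, 12000"
```

With frames arriving every 3 ms, the processed frames end up 6 ms apart. FRAME_INTERVAL is therefore a lower bound on the spacing between processed frames, not an exact period.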

Recognition itself happens in the CheckFinger method. It takes two parameters: the gesture currently being checked, and a Leap.Finger object representing the current state of the finger.

How does recognition work?

I decided to use three containers, each indexed by gesture:

1. A container of coordinates, used to track the direction of movement.

2. A container of frame counters, counting the frames in which the finger position satisfies the current primitive's conditions.

3. A container of counters of recognized primitives, used to track the progress of the whole gesture.

Here is how this looks in code:

```csharp
public void CheckFinger(Gesture gesture, Leap.Finger finger)
{
    // The number of primitives already recognized is also the index of the
    // primitive to check now (assumes Primitives is sorted by Order)
    int recognitionValue = _recognized[gesture.GestureIndex];
    Primitive primitive = gesture.Primitives[recognitionValue];
    CheckDirection(gesture.GestureIndex, primitive, finger);
    CheckGesture(gesture);
}

public void CheckDirection(int gestureIndex, Primitive primitive, Leap.Finger finger)
{
    // Take the fingertip coordinate along the primitive's axis
    float pointCoordinates = float.NaN;
    switch (primitive.Axis)
    {
        case Axis.X: pointCoordinates = finger.TipPosition.x; break;
        case Axis.Y: pointCoordinates = finger.TipPosition.y; break;
        case Axis.Z: pointCoordinates = finger.TipPosition.z; break;
    }

    if (_coordinates[gestureIndex] == INIT_COORDINATES)
    {
        // No reference point yet: remember the current coordinate
        _coordinates[gestureIndex] = pointCoordinates;
    }
    else
    {
        switch (primitive.Direction)
        {
            case 1:   // the coordinate must increase
                if (_coordinates[gestureIndex] < pointCoordinates)
                {
                    _coordinates[gestureIndex] = pointCoordinates;
                    _number[gestureIndex]++;
                }
                else
                    _coordinates[gestureIndex] = INIT_COORDINATES;   // direction broken: reset the reference point
                break;
            case -1:  // the coordinate must decrease
                if (_coordinates[gestureIndex] > pointCoordinates)
                {
                    _coordinates[gestureIndex] = pointCoordinates;
                    _number[gestureIndex]++;
                }
                else
                    _coordinates[gestureIndex] = INIT_COORDINATES;   // direction broken: reset the reference point
                break;
        }
    }

    // The primitive is recognized once FramesCount matching frames have been seen
    if (_number[gestureIndex] == primitive.FramesCount)
    {
        _number[gestureIndex] = INIT_COUNTER;
        _recognized[gestureIndex]++;
    }
}

public void CheckGesture(Gesture gesture)
{
    // All primitives recognized: report the gesture and start over
    if (_recognized[gesture.GestureIndex] == gesture.PrimitivesCount)
    {
        FireEvent(gesture);
        _recognized[gesture.GestureIndex] = INIT_COUNTER;
    }
}
```

At the moment, two gestures are described: tap (pressing with a finger) and round (circular motion).
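To see the counting logic in isolation, here is a device-free sketch of the same three per-gesture containers, reduced to single variables for one gesture. It is fed a synthetic sequence of fingertip Z coordinates. Several choices are assumptions of this sketch rather than the library's code: NaN as the "no reference point" sentinel, the shortened 3-frame primitives, and the sample data. The gesture fires only after its last primitive is complete.

```csharp
using System;

// A Tap-like gesture: Z decreases for 3 frames, then Z increases for 3 frames
// (shortened from 10 frames to keep the sample data small)
var primitives = new[] { (Direction: -1, FramesCount: 3), (Direction: 1, FramesCount: 3) };

float lastCoordinate = float.NaN; // container 1: reference coordinate (NaN = none yet)
int matchingFrames = 0;           // container 2: frames matching the current primitive
int recognizedPrimitives = 0;     // container 3: primitives recognized so far
int gesturesFired = 0;

void OnSample(float z)
{
    var primitive = primitives[recognizedPrimitives];

    if (float.IsNaN(lastCoordinate))
        lastCoordinate = z;              // no reference yet: just remember the position
    else if (Math.Sign(z - lastCoordinate) == primitive.Direction)
    {
        lastCoordinate = z;              // moving the right way: count the frame
        matchingFrames++;
    }
    else
        lastCoordinate = float.NaN;      // direction broken: drop the reference point

    if (matchingFrames == primitive.FramesCount)
    {
        matchingFrames = 0;
        recognizedPrimitives++;
    }

    if (recognizedPrimitives == primitives.Length)
    {
        gesturesFired++;                 // the whole gesture has been performed
        recognizedPrimitives = 0;
    }
}

// The finger pushes forward (Z falls), then pulls back (Z rises)
float[] zSamples = { 50, 47, 44, 41, 38, 40, 43, 46, 49 };
foreach (float z in zSamples)
    OnSample(z);

Console.WriteLine($"Tap recognized {gesturesFired} time(s)");
```

Note how the sample 38 is spent. It still moves downward after the first primitive is complete, so it breaks the "increase" condition and only resets the reference point. As in the author's version, a broken direction resets the reference coordinate but keeps the frame counter.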

Next steps:

1. Stabilizing recognition (yes, at the moment it is not stable; I am considering options).

2. Implementing a UI application for regular users.

The source code is available on GitHub.

Source: https://habr.com/ru/post/248377/

All Articles