NetApp FAS and VMware ESXi: Swap

Continuing the topic of optimizing VMware ESXi hosts, let's look at how to handle swap in a VMware infrastructure running on NetApp FAS storage systems. That said, this article should be useful not only to owners of NetApp FAS systems.

One of the most important features of virtualization is the ability to use server hardware more efficiently, which implies overcommitting resources. For RAM, this means that the total memory configured for the virtual machines can exceed the physical memory actually installed in the server. We then rely on ESXi to resolve contention for resources: it takes unused memory away from one virtual machine (a process often called reclamation) and gives it to the one that really needs it. When there is not enough memory, swapping begins.
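To make "overcommit" concrete, it is often expressed as the ratio of memory configured across all virtual machines to the host's physical RAM. This is a minimal sketch: `memory_overcommit_ratio` is a hypothetical helper, not a VMware API, and the host and VM sizes are made up.

```python
from typing import Sequence


def memory_overcommit_ratio(configured_gb: Sequence[float], host_ram_gb: float) -> float:
    """Total memory configured for all VMs divided by the host's physical RAM.

    A ratio above 1.0 means memory is overcommitted: under contention ESXi
    has to reclaim memory from some VMs and, as a last resort, swap.
    """
    if host_ram_gb <= 0:
        raise ValueError("host_ram_gb must be positive")
    return sum(configured_gb) / host_ram_gb


# Hypothetical host with 64GB of RAM running twenty VMs configured with 4GB each.
ratio = memory_overcommit_ratio([4] * 20, 64)
print(f"overcommit ratio: {ratio:.2f}")  # 80GB / 64GB -> 1.25
```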

First of all, two types of swapping can occur on an ESXi host. They are very often confused, so let's simply call them Type 1 and Type 2.


VMware ESXi default data location

Type 1. VMX swapping (vSwap)

This is the easiest type of swapping to describe. Memory is allocated not only to virtual machines but also to various host components, for example, the virtual machine monitor (VMM) and virtual devices. This memory can be swapped out so that more physical memory is available to the virtual machines that need it more. According to VMware, this feature can reduce the memory reserved for each virtual machine from about 50MB to about 10MB without significant performance degradation.

This type of swapping is enabled by default. VMX swap is stored in the virtual machine's folder as a file with the .vswp extension (the location can be changed with the sched.swap.vmxSwapDir parameter). VMX swapping can be disabled by setting the virtual machine parameter sched.swap.vmxSwapEnabled = FALSE.
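As a sketch, the helper below writes the two parameters just mentioned in .vmx form. The helper name and the example directory are hypothetical; only the parameter names come from the text, and the key = "value" layout is assumed to follow the usual .vmx syntax.

```python
from typing import List, Optional


def vmx_swap_lines(enabled: bool = True, swap_dir: Optional[str] = None) -> List[str]:
    """Render the VMX-swap parameters as .vmx lines (assumed key = "value" syntax)."""
    value = "TRUE" if enabled else "FALSE"
    lines = [f'sched.swap.vmxSwapEnabled = "{value}"']
    if swap_dir is not None:
        # Hypothetical directory: point it at whichever datastore should hold VMX swap.
        lines.append(f'sched.swap.vmxSwapDir = "{swap_dir}"')
    return lines


for line in vmx_swap_lines(enabled=False):
    print(line)
# sched.swap.vmxSwapEnabled = "FALSE"
```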

Type 2. Guest OS Swapping

A virtual machine "sees" exactly as much "physical" memory as is specified in its settings. From the guest OS point of view, all of this allocated memory is physical. In reality, the hypervisor hides from the guest OS, behind the virtualization layer, what kind of memory is actually being used: physical RAM or vSwap. When the memory "inside" the guest OS runs out, the guest starts swapping on its own. Do not get confused here: this process has nothing to do with host-level (VMX) swapping. For example, for a virtual machine configured with 4GB of memory, the hypervisor can back that memory on the host with physical RAM as well as with host (VMX) swap. To the virtual machine this makes no difference: all 4GB are used as physical memory, simply because the guest OS has no idea that its memory is virtualized.

When the guest OS needs more than 4GB of memory, it uses its own swapping: Windows uses pagefile.sys, and Linux uses a dedicated swap partition. The guest swap is therefore stored inside a virtual disk, in one of the virtual machine's VMDK files. In other words, pagefile.sys or the swap partition is part of the virtual machine's virtual disk.
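A toy model can help keep the two types apart for a single virtual machine. This is only an illustration under simplifying assumptions (a fixed amount of host RAM backing the VM, a fixed guest demand); the class and function names are hypothetical. The point it shows: host-level swap changes how the configured memory is backed, while guest swap only appears once the guest wants more than its configured memory.

```python
from dataclasses import dataclass


@dataclass
class VmMemoryView:
    guest_sees_gb: float   # what the guest OS treats as "physical" RAM
    host_ram_gb: float     # portion the host backs with real RAM
    host_vswp_gb: float    # portion the host keeps in its .vswp file (host-level swap)
    guest_swap_gb: float   # what the guest pages to pagefile.sys / swap partition (Type 2)


def memory_view(configured_gb: float, host_backed_gb: float, guest_demand_gb: float) -> VmMemoryView:
    """Toy model separating host-level swap from guest swap for one VM."""
    host_ram = min(host_backed_gb, configured_gb)
    return VmMemoryView(
        guest_sees_gb=configured_gb,                              # unaffected by host swapping
        host_ram_gb=host_ram,
        host_vswp_gb=configured_gb - host_ram,                    # invisible to the guest
        guest_swap_gb=max(0.0, guest_demand_gb - configured_gb),  # ends up inside a VMDK
    )


# 4GB VM: the host backs 3GB with RAM, while the guest wants 5GB.
print(memory_view(configured_gb=4, host_backed_gb=3, guest_demand_gb=5))
```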

This is exactly what happens when the balloon driver is used: when the balloon "inflates", it occupies all the free memory (free from the guest OS point of view), and the balloon driver makes sure that this memory is actually physical host memory rather than swapped host memory. Once such a virtual machine has no free memory left, the guest OS is forced to start swapping. This is a good thing, because the guest OS knows better which data should be swapped to disk and which should not.

ESXi Host swapping

If we see that an ESXi host is swapping heavily, resources are severely overcommitted: none of the reclamation and memory-saving techniques (ballooning, page sharing and memory compression) help, and the host simply does not have enough RAM. The host is then considered to be in the "Hard" state (1-2% of memory free) or the "Low" state (1% or less). This significantly hurts performance and should be avoided. Another problem is that host-level swapping has no control over what gets swapped: unlike guest OS swapping (Type 2), the hypervisor does not know which data is more important to the guest OS. That is why it is important to install VMware Tools, so that the balloon driver works.
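The two thresholds above can be written down as a small classifier. This is a sketch covering only the states named in the text (Hard: 1-2% free, Low: 1% or less); everything above 2% is lumped together rather than guessing the remaining ESXi state boundaries. The function name is hypothetical.

```python
def host_memory_state(free_pct: float) -> str:
    """Map host free-memory percentage to the states named in the article."""
    if not 0 <= free_pct <= 100:
        raise ValueError("free_pct must be between 0 and 100")
    if free_pct <= 1:
        return "low"
    if free_pct <= 2:
        return "hard"
    return "above hard threshold"


# Hypothetical 256GB host with 4GB free: 4 / 256 * 100 = 1.5625% -> "hard"
print(host_memory_state(4 / 256 * 100))
```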

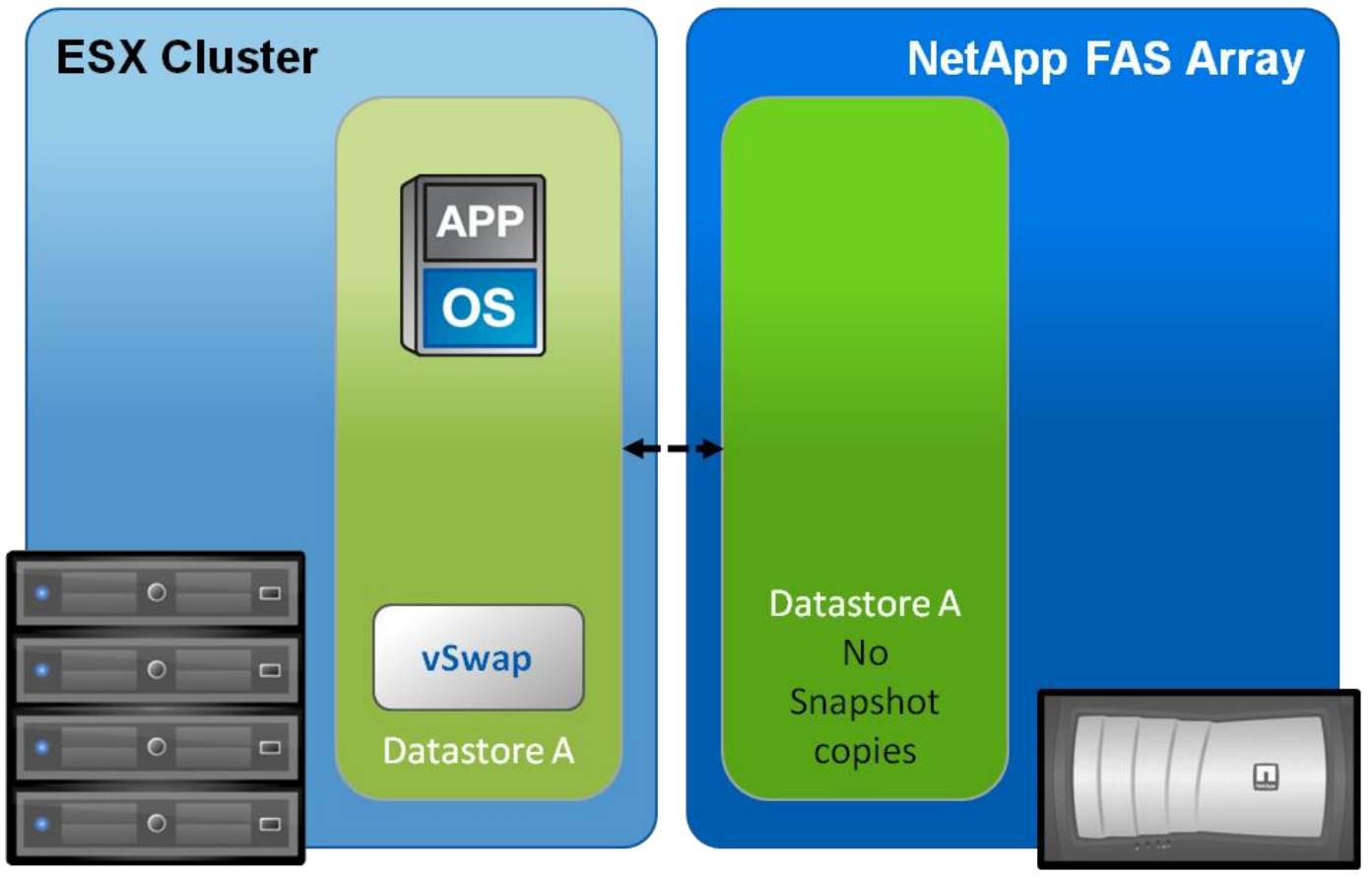

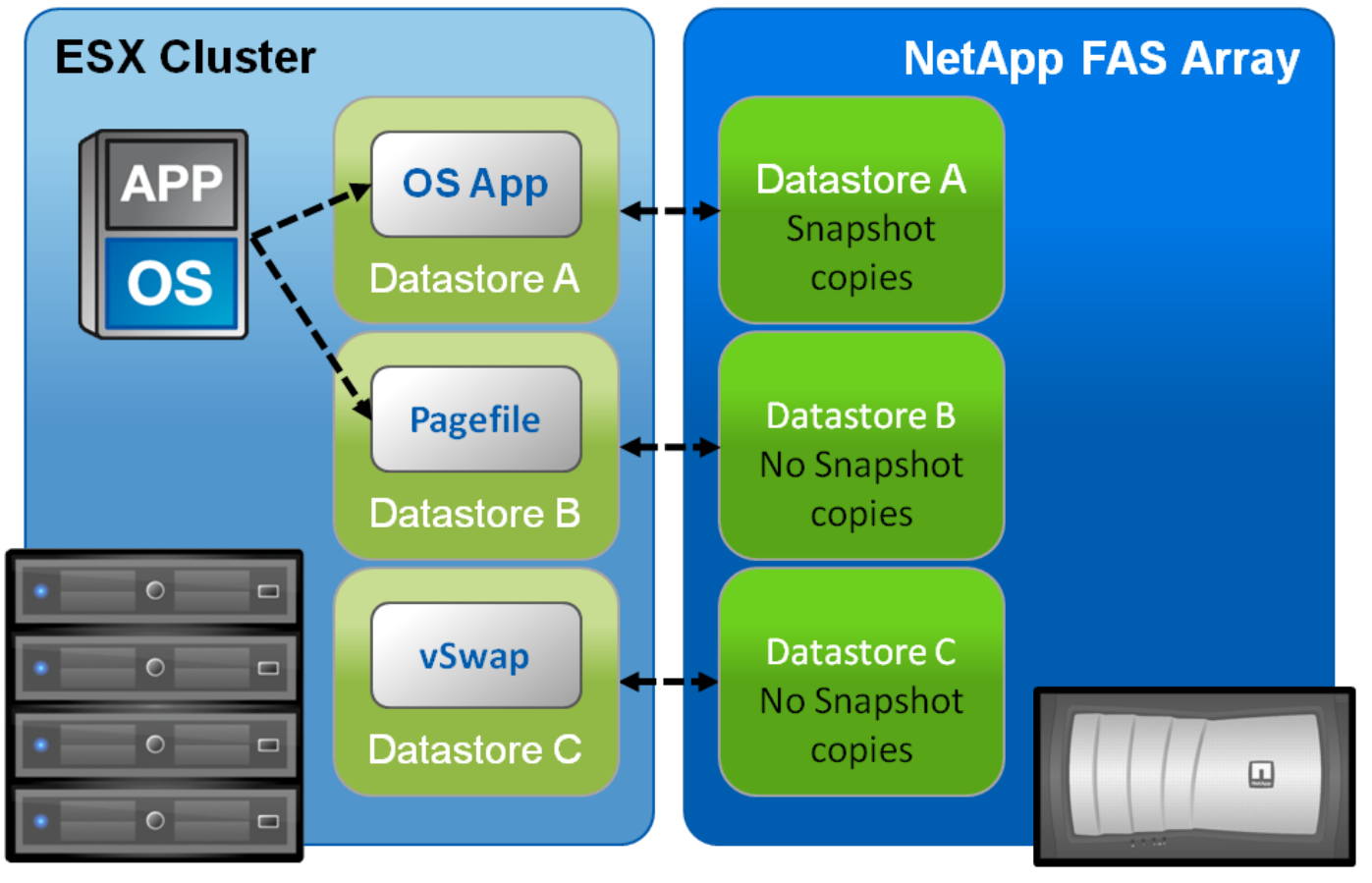

By default, vSwap (Type 1) is saved in a .vswp file in the virtual machine's folder. NetApp recommends storing vSwap (Type 1) on a dedicated datastore.

For example, this is the .vswp file in the folder of a running virtual machine:

    /vmfs/volumes/4b953b81-76b37f94-efef-0010185f132e/vp # ls -lah | grep swp
    -rw------- 1 root root 2.0G Jan 11 18:02 vp-c6783a5c.vswp

This file exists only for running virtual machines: the hypervisor creates it when the virtual machine starts and deletes it when the virtual machine is powered off. Note the size of the file: it equals the difference between the memory configured for the virtual machine and the physical memory reserved for it. This guarantees that the full configured amount of memory is available when the virtual machine starts, whether it is backed by real physical memory or by vSwap. The reservation guarantees that the virtual machine always gets the required space in the host's physical memory, and the difference between the configured memory and the reservation is backed by the .vswp file.
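The sizing rule is simple arithmetic; here it is as a minimal sketch (the function name is hypothetical). It only models the .vswp file described here, not any other files the virtual machine creates.

```python
def vswp_size_gb(configured_gb: float, reservation_gb: float) -> float:
    """Size of the .vswp file created at power-on: configured memory minus reservation."""
    if not 0 <= reservation_gb <= configured_gb:
        raise ValueError("reservation must be between 0 and the configured memory")
    return configured_gb - reservation_gb


print(vswp_size_gb(4, 2))  # 2
print(vswp_size_gb(4, 4))  # 0: a fully reserved VM needs no swap space
```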

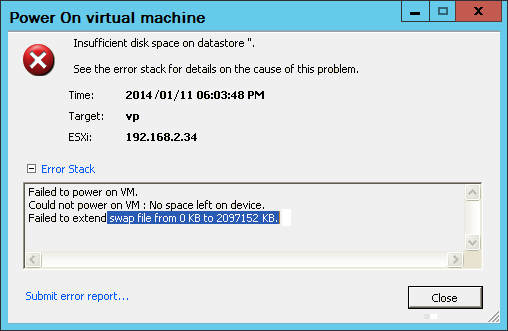

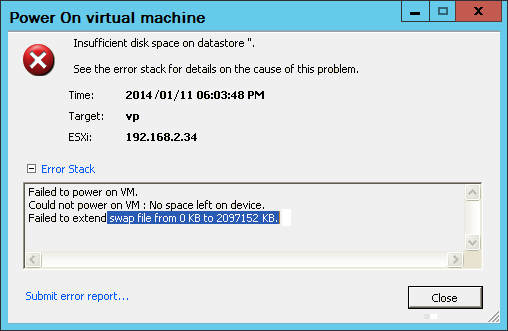

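Below is an example of powering on a virtual machine with 4GB of memory and a 2GB reservation. Remember that the .vswp file is deleted at shutdown? What happens if, by the next power-on, the datastore holding this virtual machine has filled up and only 1.8GB of free space remains? The virtual machine will not start, because the hypervisor cannot create the 2GB .vswp file (4GB configured minus 2GB reserved) that it needs.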
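The scenario above boils down to one comparison. This sketch (hypothetical function name) checks only the .vswp requirement and ignores the other files a virtual machine may create at power-on, so a real power-on can still fail with slightly more free space than it reports.

```python
def vswp_fits(configured_gb: float, reservation_gb: float, datastore_free_gb: float) -> bool:
    """True if the datastore has room for the .vswp file the VM needs at power-on."""
    needed_gb = configured_gb - reservation_gb
    return datastore_free_gb >= needed_gb


# The scenario from the text: 4GB VM, 2GB reservation, 1.8GB left on the datastore.
print(vswp_fits(4, 2, 1.8))  # False: 2GB is needed, only 1.8GB is free
```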

What can you do about it? You can, of course, free up space on the datastore or place vSwap (Type 1) on another datastore. By default, it is stored in the virtual machine's folder. For a standalone host, the location is set under Configuration > Software > Virtual Machine Swapfile Location. The location of the vSwap files can also be changed per virtual machine: open the virtual machine settings and select the Options tab:

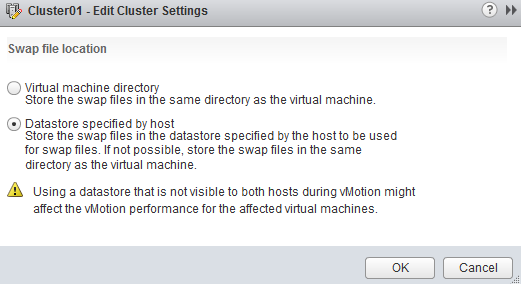

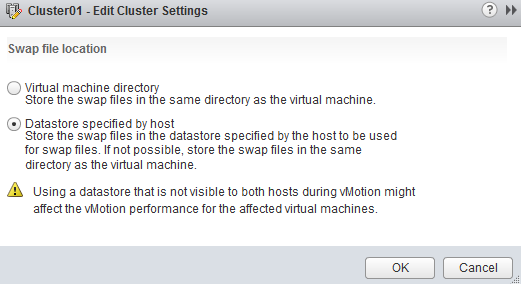

If the host is part of a cluster, check the cluster settings and select "Store the swapfile in the datastore specified by the host".

Then select the datastore where vSwap (Type 1) will be stored.
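The interplay between the per-VM, cluster and host settings can be summarized as a small resolver. This is a simplified model, not VMware code: the option names are paraphrases of the vSphere Client settings, and the fallback to the virtual machine's folder when the host has no swapfile datastore configured is our understanding of vSphere's behavior, stated here as an assumption. Datastore names are hypothetical.

```python
from typing import Optional


def resolve_vswp_location(vm_folder: str,
                          vm_setting: str = "default",
                          cluster_setting: str = "vm_folder",
                          host_swap_datastore: Optional[str] = None) -> str:
    """Simplified model of where a VM's .vswp file is placed.

    vm_setting:      "default" (inherit the cluster/host policy), "vm_folder" or "host_datastore"
    cluster_setting: "vm_folder" or "host_datastore"
    """
    policy = cluster_setting if vm_setting == "default" else vm_setting
    if policy == "host_datastore" and host_swap_datastore:
        return host_swap_datastore
    # Either the policy keeps swap with the VM, or the host has no swapfile
    # datastore configured: fall back to the virtual machine's own folder.
    return vm_folder


print(resolve_vswp_location("[ds_vms] vp", cluster_setting="host_datastore",
                            host_swap_datastore="[ds_swap]"))  # [ds_swap]
```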

Why place swap on a separate datastore?

Recommended swap layout for NetApp FAS

First, note that VMware's general rule is to keep vSwap (Type 1) in the virtual machine's folder whenever possible, because moving it to a separate datastore can slow down vMotion. For VMware with NetApp FAS, however, a different recommendation applies: NetApp recommends storing vSwap (Type 1) on a separate, dedicated datastore shared by all virtual machines, because in this setup the vMotion slowdown is almost eliminated. VMware recommends that the space available for vSwap (Type 1) be greater than or equal to the difference between the configured and reserved memory of the virtual machines; otherwise, virtual machines may fail to start. Example: we have 5 virtual machines, each with 4GB of memory and a 3GB reservation.

The minimum datastore size for vSwap (Type 1):

5 VMs × (4GB − 3GB) = 5GB
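The same calculation generalizes to virtual machines with different sizes: sum the configured-minus-reserved difference over every VM whose swap may land on the datastore. A minimal sketch with a hypothetical function name:

```python
from typing import Iterable, Tuple


def min_vswp_datastore_gb(vms: Iterable[Tuple[float, float]]) -> float:
    """Minimum space for a dedicated vSwap datastore.

    vms: (configured_gb, reserved_gb) pairs, one per VM that may run with swap there.
    """
    return sum(configured - reserved for configured, reserved in vms)


# The example from the text: 5 VMs, each 4GB configured with a 3GB reservation.
print(min_vswp_datastore_gb([(4, 3)] * 5))  # 5
```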

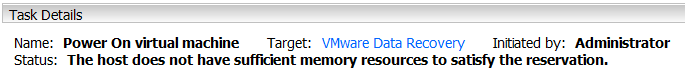

If the host does not have 5 VMs × 3GB = 15GB of free physical memory, the virtual machines may fail to start with the error:

The host does not have sufficient memory resources

An event is written to the log at the same time.
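The reservation side of the same example can be checked the same way. This is a simplified sketch (hypothetical function name): real admission control also accounts for per-VM overhead memory, which is ignored here.

```python
from typing import Sequence, Tuple


def reservations_fit(host_free_gb: float, reservations_gb: Sequence[float]) -> Tuple[bool, float]:
    """Check whether the host can honor every VM's memory reservation at once.

    Returns (fits, total_reserved_gb). Per-VM overhead memory is ignored.
    """
    total = sum(reservations_gb)
    return total <= host_free_gb, total


# 5 VMs with 3GB reserved each need 15GB; a host with only 12GB free cannot start them all.
print(reservations_fit(12, [3] * 5))  # (False, 15)
```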

Second, there is performance: place swap on a slower datastore if you are sure that swapping will almost never happen, or on a faster datastore if the virtual machines will request more memory than the host has and swapping will be constant.

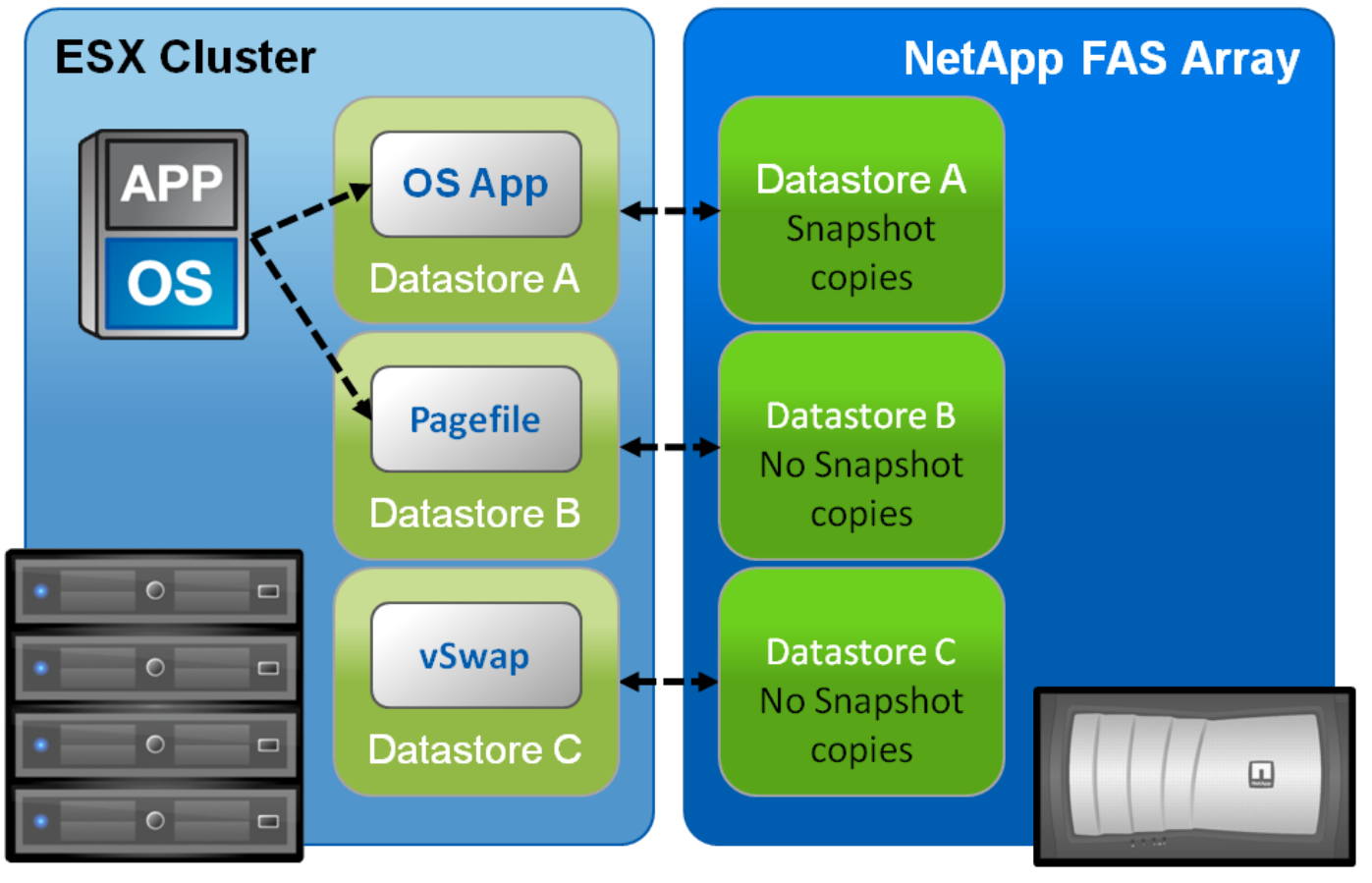

Third, there are storage-level snapshots and deduplication. Because NetApp snapshots do not affect performance, they are routine: they are taken continuously and are part of the NetApp backup paradigm for FAS systems. Swap is completely useless data for backup and restore. If swap is used heavily and snapshots are taken often, the snapshots will capture this useless data and keep it for as long as each snapshot lives. And since swap changes constantly, while a snapshot captures only new, changed data, every new snapshot stores the latest changed swap data, mercilessly consuming usable storage space with useless data. There is no reason to deduplicate swap either, because swap data is not needed during restore and will never be read. This is why it is convenient to move swap to a separate datastore. NetApp recommends placing swap on a separate datastore with snapshots and deduplication turned off, which further reduces the overhead on snapshots and replication.
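A rough worst-case estimate shows why this adds up. The sketch assumes each interval between snapshots rewrites different swap blocks, so the old versions of everything rewritten during the last N intervals stay pinned while N snapshots are retained; the function name and the numbers are hypothetical.

```python
from typing import Sequence


def swap_space_pinned_by_snapshots_gb(churn_per_interval_gb: Sequence[float],
                                      snapshots_retained: int) -> float:
    """Upper-bound estimate of snapshot space held by overwritten swap blocks.

    churn_per_interval_gb: swap data rewritten in each interval between snapshots,
    oldest first. With N snapshots retained, the data overwritten during the last
    N intervals is still referenced by some snapshot (worst case: no overlap).
    """
    if snapshots_retained <= 0:
        return 0.0
    return float(sum(churn_per_interval_gb[-snapshots_retained:]))


# Hypothetical: hourly snapshots, 2GB of swap rewritten per hour, 24 snapshots kept.
print(swap_space_pinned_by_snapshots_gb([2.0] * 48, 24))  # 48.0
```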

NetApp does not recommend storing vSwap (Type 1) on the host's local disks (pages 76-78, DEC11), because such a configuration slows down vMotion.

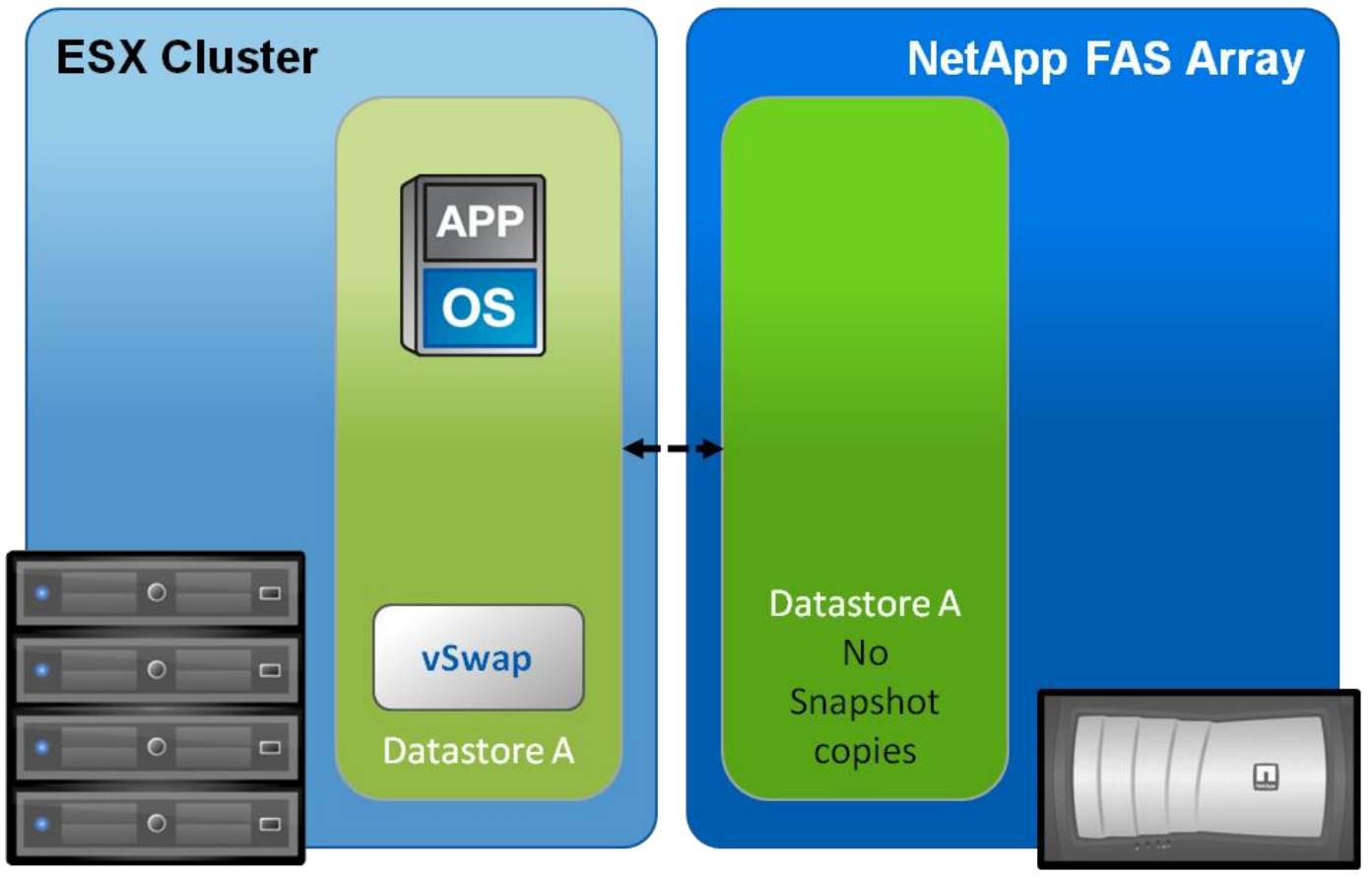

Should Type 2 swap be moved out as well?

Placing temporary data such as swap and pagefile.sys on a dedicated datastore (by creating a dedicated virtual disk for it) means that, just like vSwap (Type 1), this data does not have to be deduplicated or backed up. It is very important to mark this disk as Independent, so that the VSC agent does not make FAS take snapshots of the volume that stores the swap files (Type 2).
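For reference, here is a sketch of the .vmx entries such a dedicated swap disk would end up with. Normally the disk mode is set in the vSphere Client; the device slot and file name below are hypothetical. The `independent-persistent` mode excludes the disk from VMware snapshots, which is what keeps it out of snapshot-based backups as described above.

```python
from typing import List


def independent_disk_vmx_lines(device: str, vmdk_file: str) -> List[str]:
    """.vmx entries for a dedicated guest-swap disk in independent-persistent mode."""
    return [
        f'{device}.present = "TRUE"',
        f'{device}.fileName = "{vmdk_file}"',
        # Independent disks are skipped by VMware snapshots.
        f'{device}.mode = "independent-persistent"',
    ]


# Hypothetical second disk on the first SCSI controller, holding the guest swap.
for line in independent_disk_vmx_lines("scsi0:1", "vp_swap.vmdk"):
    print(line)
```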

NetApp has no recommendations for Type 2 swap; moving this data out is an optional design choice with its own pros and cons:

On top of moving out vSwap (Type 1), moving out Type 2 swap further reduces the snapshot overhead and, as a result, increases replication throughput. For DR solutions, swap of either type has no value, since it is not used at system start. If we stop keeping Type 2 swap in snapshots and backups, this should not cause problems during a local restore: the restored .vmx configuration file will contain a reference to the VMDK file holding the swap partition, and that file will still be in the same place. However, it adds extra steps when restoring to a remote site in configurations such as SRM, SnapProtect and other DR solutions.

Optional data layout on NetApp FAS

So, when restoring a virtual machine without the VMDK file that holds the guest OS swap, you need extra steps: create a virtual disk, create a file system on it and attach it to the virtual machine (or attach an existing VMDK with a pre-created file system). Read more in TR-3671.
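Those extra DR steps can be kept as a checklist in a runbook. The sketch below only builds the list (it executes nothing); the path, size and the step wording are hypothetical. The single command shown uses `vmkfstools -c <size>` to create a virtual disk and `-d thin` to make it thin-provisioned; attaching the disk and preparing the file system or swap area inside the guest are left as manual steps.

```python
from typing import List, Optional, Tuple

Step = Tuple[str, Optional[List[str]]]  # (description, argv or None for a manual step)


def swap_disk_recovery_steps(vmdk_path: str, size_gb: int) -> List[Step]:
    """Hypothetical checklist for recreating a non-replicated guest-swap disk at the DR site."""
    return [
        ("create an empty virtual disk on the recovery datastore",
         ["vmkfstools", "-c", f"{size_gb}G", "-d", "thin", vmdk_path]),
        ("attach the disk to the virtual machine", None),
        ("inside the guest, create the file system / swap area on the new disk", None),
    ]


for description, argv in swap_disk_recovery_steps("/vmfs/volumes/dr_swap/vp/vp_swap.vmdk", 4):
    print(description, "->", " ".join(argv) if argv else "(manual)")
```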

Please report errors in the text via private message.

Notes and additions, on the other hand, are welcome in the comments.

Source: https://habr.com/ru/post/247631/
